You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please see the contact information page.


Paul Rouget: New Out Of Process Window in Firefox Nightly |


In Firefox Nightly (and only in Firefox Nightly), there is a new menu item called "New OOP window". It opens a new Firefox window that loads tabs in separate processes (electrolysis).

Bill McCloskey explains on bugzilla:

This idea started when we were talking about how to turn electrolysis on in nightly. Doing it in the near future would really anger a lot of people because basic stuff like printing still doesn't work. However, we do want to make it available to more people. A good middle ground would be to add a new menu option "New window in separate process" (name could be improved). It would be sort of like private browsing mode.

|

|

Byron Jones: markup within bugzilla comments |

a response i received multiple times following yesterday’s deployment of comment previews on bugzilla.mozilla.org was “wait, bugzilla allows markup within comments now?”.

yes, and this has been the case for a very long time. here’s a list of all the recognised terms on bugzilla.mozilla.org:

- bug number
  - links to the specified bug, if it exists. the href’s tooltip will contain the information about the bug
  - eg. “bug 40896”

- attachment number
  - links to the specified attachment, if it exists. the href’s tooltip will contain the information about the attachment
  - adds action buttons for [diff][details][review]
  - eg. “attachment 8374664”

- comment number
  - links to a comment in the current bug. “comment 0” will link to the bug’s description
  - eg. “comment 12”

- bug number comment number
  - links to a comment in another bug.
  - eg. “bug 40896 comment 12”

- email addresses
  - linkified into a mailto: href
  - eg. “glob@mozilla.com”

- UUID crash-id or bp-crash-id
  - links to the crash-stats for the specified crash-id
  - eg. “UUID 56630b1a-0913-4b4e-b701-d96452140102” or “bp-56630b1a-0913-4b4e-b701-d96452140102”

- CVE-number or CAN-number
  - links to the cve/can security release information on cve.mitre.org
  - eg. “CVE-2013-1733”

- rnumber
  - links to a svn.mozilla.org revision number
  - eg. “r1234”

- bzr commit messages
  - links to the revision on bzr.mozilla.org
  - see example here

- hg changesets: repository changeset revision
  - links to the changeset on hg.mozilla.org
  - eg. “mozilla-inbound changeset c7802c9d6eec”
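To make the matching rules above concrete, here is a minimal Python sketch of how the "bug N", "bug N comment M" and "comment M" terms could be turned into links. It is not Bugzilla's actual implementation, which the post doesn't show: the regular expressions, the `linkify` function and its `current_bug` parameter are assumptions for illustration, and unlike the real feature it neither checks that the bug exists nor adds tooltips.

```python
import re

# Hypothetical sketch: approximates three of the recognised terms with regexes.
SHOW_BUG = "https://bugzilla.mozilla.org/show_bug.cgi?id={bug}"

TERMS = re.compile(
    r"\bbug\s+(?P<bug>\d+)(?:\s+comment\s+(?P<comment>\d+))?"  # "bug N" or "bug N comment M"
    r"|\bcomment\s+(?P<local>\d+)",                          # "comment M" in the current bug
    re.IGNORECASE,
)

def linkify(text: str, current_bug: int) -> str:
    """Wrap recognised terms in <a> tags (no check that the bug actually exists)."""
    def to_link(match: "re.Match[str]") -> str:
        if match.group("bug"):
            url = SHOW_BUG.format(bug=match.group("bug"))
            if match.group("comment"):
                url += "#c" + match.group("comment")
        else:
            # A bare "comment M" refers to the bug the comment is being posted on.
            url = SHOW_BUG.format(bug=current_bug) + "#c" + match.group("local")
        return f'<a href="{url}">{match.group(0)}</a>'
    return TERMS.sub(to_link, text)

print(linkify("see bug 40896 comment 12 and comment 0", current_bug=12345))
```

Because the optional comment group is greedy, "bug 40896 comment 12" becomes a single link instead of a bug link followed by a separate comment link.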

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/02/14/markup-within-bugzilla-comments/

|

|

Raniere Silva: 3rd mathml meeting |


This is a report about the 3rd Mozilla MathML IRC Meeting (see the announcement here). The topics of the meeting can be found in this PAD (local copy of the PAD) and the IRC log (local copy of the IRC log).

The next meeting will be on March 13th at 9PM UTC in the #mathml IRC channel. Please add topics to the PAD.

|

|

Planet Mozilla Interns: Willie Cheong: Release Readiness Dashboard: Groups of Queries |

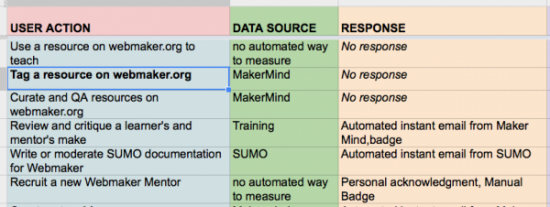

One of the key requirements for the Release Readiness Dashboard was to have multiple widgets of information. Each widget was to contain visuals for at least one data set. In addition, there was a need for a numeric view that displays only the current count, for cases where historical data is not needed. The above design was the result of those requirements.

In terms of what is stored in the database, each grid on the view represents a group that can have multiple queries, i.e. a one-to-many relationship. Groups can be specified as a plot, a number, or both, which determines how they are loaded on the front view.

Queries are stored in the database as JSON-formatted strings with Qb structure, and are used to dynamically request data from Elastic Search each time a page is loaded. No Bugzilla data is stored locally on the dashboard’s server. After the data is retrieved from Elastic Search, a plot (or number, depending on the group’s type) is generated on the fly and appended to the view.

Moving forward, another requirement was to have default groups that display for every version of a specific product. Because groups were linked to versions through group.version_id, a new group had to be created every time a new version was set up. The solution to this problem: polymorphic objects.

With each group as a polymorphic object, we are able to link rows in the same group table to different entities such as product and version. In terms of schema changes, we simply remove group.version_id and replace it with a generic group.entity and group.entity_id. Note that an actual FOREIGN KEY constraint cannot be enforced here, since group.entity_id may refer to rows in different tables.
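A minimal sketch of the polymorphic layout, using Python's built-in sqlite3 module. Only `group.entity`, `group.entity_id` and `query.query_qb` come from the post; the other tables and columns, the sample rows and the `groups_for_version` helper are hypothetical. It also shows the trade-off noted above: `entity_id` has no FOREIGN KEY, so it is the join condition, not the database, that keeps the link consistent.

```python
import sqlite3
from typing import List

SCHEMA = """
CREATE TABLE product (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE version (
    id INTEGER PRIMARY KEY,
    product_id INTEGER NOT NULL REFERENCES product(id),
    name TEXT NOT NULL
);
-- Polymorphic link: entity names the table that entity_id points into,
-- so entity_id cannot carry a FOREIGN KEY constraint.
CREATE TABLE "group" (
    id INTEGER PRIMARY KEY,
    title TEXT NOT NULL,
    display TEXT NOT NULL CHECK (display IN ('plot', 'number', 'both')),
    entity TEXT NOT NULL CHECK (entity IN ('product', 'version')),
    entity_id INTEGER NOT NULL
);
-- One group has many queries.
CREATE TABLE "query" (
    id INTEGER PRIMARY KEY,
    group_id INTEGER NOT NULL REFERENCES "group"(id),
    query_qb TEXT NOT NULL
);
"""

def groups_for_version(conn: sqlite3.Connection, version_id: int) -> List[str]:
    """Custom groups linked to this version plus default groups linked to its product."""
    rows = conn.execute(
        """
        SELECT g.title FROM "group" AS g
        JOIN version AS v ON v.id = ?
        WHERE (g.entity = 'version' AND g.entity_id = v.id)
           OR (g.entity = 'product' AND g.entity_id = v.product_id)
        ORDER BY g.id
        """,
        (version_id,),
    ).fetchall()
    return [title for (title,) in rows]

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO product VALUES (1, 'Firefox')")
conn.executemany("INSERT INTO version VALUES (?, 1, ?)", [(1, "28"), (2, "29")])
conn.executemany(
    'INSERT INTO "group" VALUES (?, ?, ?, ?, ?)',
    [(1, "Tracked bugs", "both", "product", 1),   # default group: every Firefox version
     (2, "Blockers", "number", "version", 2)],    # custom group: version 29 only
)
print(groups_for_version(conn, 1))  # ['Tracked bugs']
print(groups_for_version(conn, 2))  # ['Tracked bugs', 'Blockers']
```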

We are now able to define default groups by linking them to a product as a polymorphic object. However, because a single product group and its queries can now be used across different versions, some version-dependent text in fields like query.query_qb must be changed dynamically before it can be useful.

To solve this issue, soft tags like and are stored in place of the version dependent text and replaced by the corresponding version’s values when being requested on the server.
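The post's actual soft tag names did not survive in this copy, so the sketch below uses a made-up `{{VERSION}}` tag. It assumes the stored query_qb string is parsed as JSON first and the tags are then replaced inside every string, keys included, which avoids breaking the JSON quoting if a substituted value ever contains special characters. The Qb-like query shape and the `fill_soft_tags` name are illustrative, not the dashboard's code.

```python
import json
from typing import Any, Dict

def fill_soft_tags(node: Any, values: Dict[str, str]) -> Any:
    """Recursively replace soft tags in every string (keys included) of a parsed query."""
    if isinstance(node, str):
        for tag, value in values.items():
            node = node.replace(tag, value)
        return node
    if isinstance(node, list):
        return [fill_soft_tags(item, values) for item in node]
    if isinstance(node, dict):
        return {fill_soft_tags(k, values): fill_soft_tags(v, values) for k, v in node.items()}
    return node  # numbers, booleans and None pass through unchanged

# Hypothetical stored query_qb; "{{VERSION}}" stands in for the post's real soft tag.
stored_qb = '{"from": "bugs", "where": {"eq": {"cf_status_firefox{{VERSION}}": "affected"}}}'
query = fill_soft_tags(json.loads(stored_qb), {"{{VERSION}}": "29"})
print(query)  # {'from': 'bugs', 'where': {'eq': {'cf_status_firefox29': 'affected'}}}
```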

So here we have it. Multiple groups that each display multiple data sets:

- With the option to display the group as a plot or a current count.

- With the option to display the group for an individual version (custom group) or for all versions of a product (default group).

|

|

Mark Reid: Recent Telemetry Data Outages |

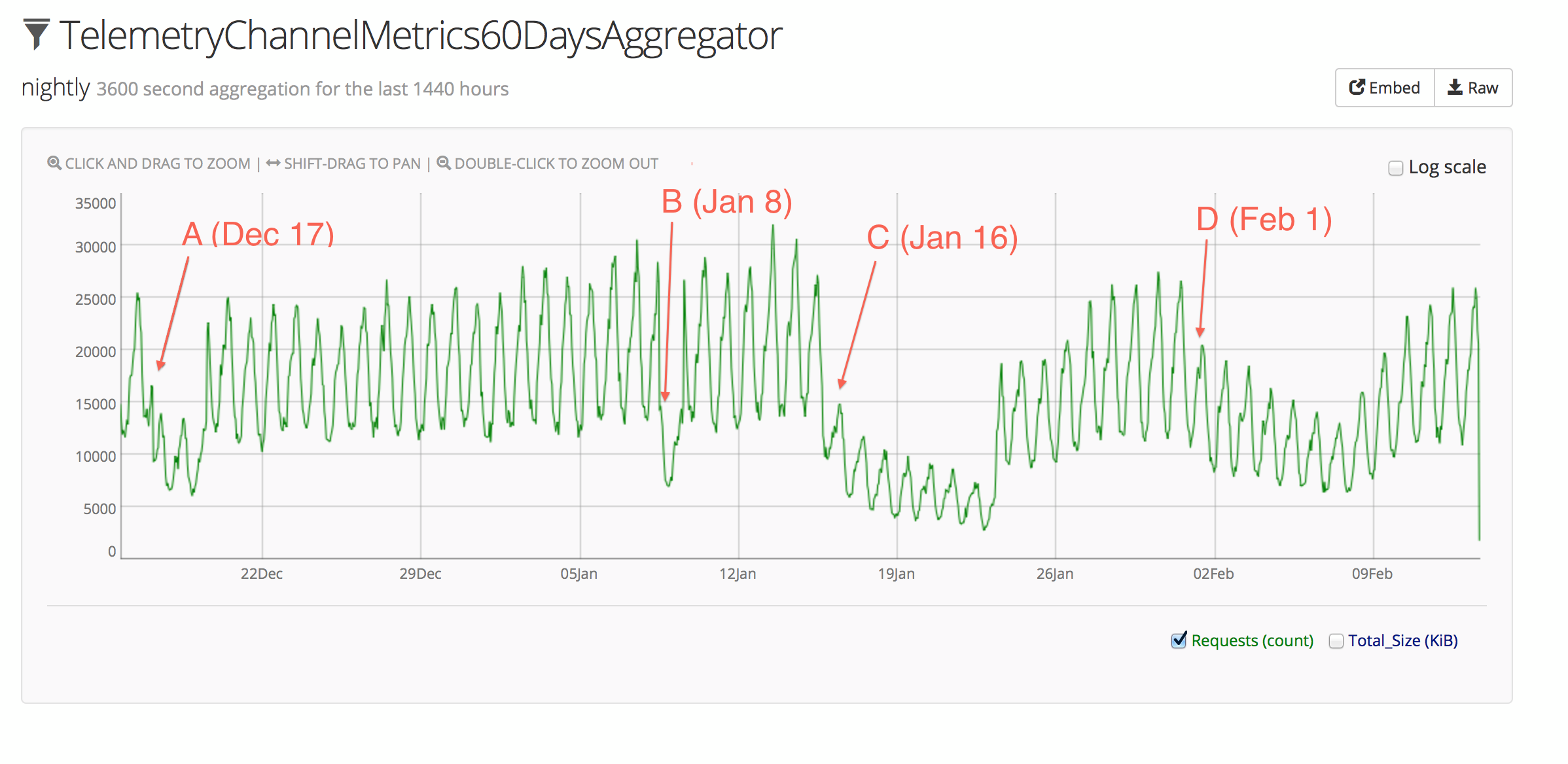

There have been a few data outages in Telemetry recently, so I figured some followup and explanation are in order.

Here’s a graph of the submission rate from the nightly channel (where most of the action has taken place) over the past 60 days:

The x-axis is time, and the y-axis is the number of submissions per hour.

Points A (December 17) and B (January 8) are false alarms, and were just cases where the stats logging itself got interrupted, and so don’t represent data outages.

Point C (January 16) is where it starts to get interesting. In that case, Firefox nightly builds stopped submitting Telemetry data due to a change in event handling when the Telemetry client-side code was moved from a .js file to a .jsm module. The resolution is described in Bug 962153. This outage resulted in missing data for nightly builds from January 16th through to January 22nd.

As shown on the above graph, the submission rate dropped noticeably, but not anywhere close to zero. This is because not everyone on the nightly channel updates to the latest nightly as soon as it’s available, so an interesting side-effect of this bug is that we can see a glimpse of the rate at which nightly users update to new builds. In short, it looks like a large portion of users update right away, with a long tail of users waiting several days to update. The effect is apparent again as the submission rate recovers starting on January 22nd.

The second problem with submissions came at point D (February 1) as a result of changing the client Telemetry code to use OS.File for saving submissions to disk on shutdown. This resulted in a more gradual decrease in submissions, since the “saved-session” submissions were missing, but “idle-daily” submissions were still being sent. This outage resulted in partial data loss for nightly builds from February 1st through to February 7th.

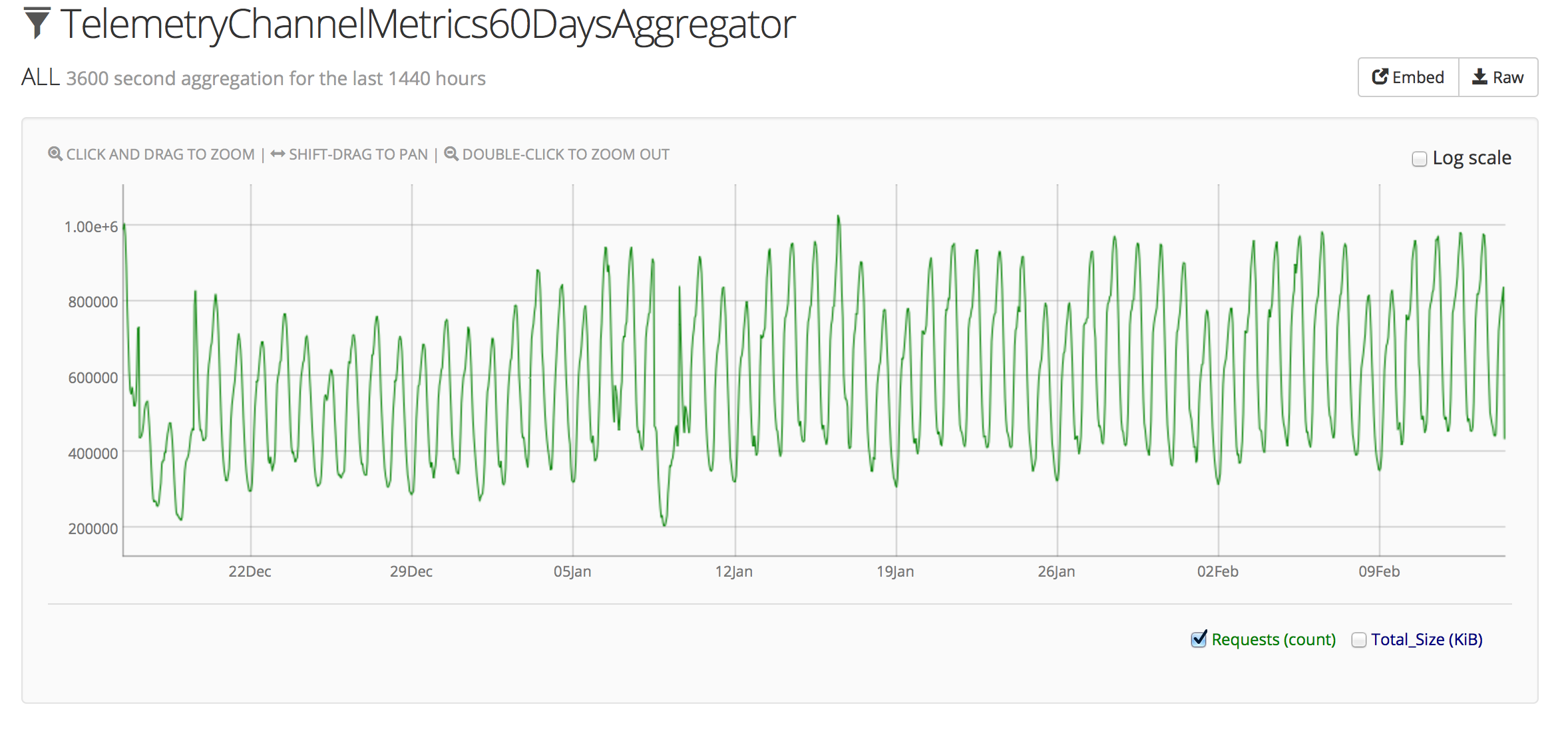

Both of these problems have been limited to the nightly channel, so the actual volume of submissions that were lost is relatively low. In fact, if you compare the above graph to the graph for all channels:

The anomalies on January 16th and February 1st are not even noticeable within the usual weekly pattern of overall Telemetry submissions (we normally observe a sort of “double peak” each day, with weekends showing about 15-20% fewer submissions). This makes sense given that the release channel comprises the vast majority of submissions.

The above graphs are all screenshots of our Heka aggregator instance. You can look at a live view of the submission stats and explore for yourself. Follow the links at the top of the iframes to dig further into what’s available.

There was a third data outage recently, but it cropped up in the server’s data validation / conversion processing so it doesn’t appear as an anomaly on the submission graphs. On February 4th, the revision field for many submissions started being rejected as invalid. The server code was expecting a revision URL of the form http://hg.mozilla.org/..., but was getting URLs starting with https://... and thus rejecting them. Since revision is required in order to determine the correct version of Histograms.json to use for validating the rest of the payload, these submissions were simply discarded. The change from http to https came from a mostly-unrelated change to the Firefox build infrastructure. This outage affected both nightly and aurora, and caused the loss of submissions from builds between February 4th and when the server-side fix landed on February 12th.

So with all these outages happening so close together, what are we doing to fix it?

Going forward, we would like to be able to completely avoid problems like the overly-eager rejection of payloads during validation, and in cases where we can’t avoid problems, we want to detect and fix them as early as possible.

In the specific case of the revision field, we are adding a test that will fail if the URL prefix changes.
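One way to avoid this class of outage, sketched in Python under the assumption that validation only needs to confirm the URL points at hg.mozilla.org: parse the revision URL instead of matching an exact `http://` prefix, and pin the accepted forms with a test. The function names and the sample revision path are hypothetical; the post does not show the real server code or its test.

```python
from urllib.parse import urlparse

def is_valid_revision(revision: str) -> bool:
    """Accept hg.mozilla.org revision URLs over either http or https."""
    parsed = urlparse(revision)
    return parsed.scheme in ("http", "https") and parsed.netloc == "hg.mozilla.org"

def strict_is_valid_revision(revision: str) -> bool:
    # The over-eager style of check described above: an exact prefix match.
    return revision.startswith("http://hg.mozilla.org/")

def test_revision_url_schemes() -> None:
    # Fails loudly if the accepted URL forms drift from what builds actually send.
    for url in ("http://hg.mozilla.org/mozilla-central/rev/0123456789ab",
                "https://hg.mozilla.org/mozilla-central/rev/0123456789ab"):
        assert is_valid_revision(url), url
    assert not is_valid_revision("https://example.com/mozilla-central/rev/0123456789ab")

test_revision_url_schemes()
```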

In the case where the submission rate drops, we are adding automatic email notifications which will allow us to act quickly to fix the problem. The basic functionality is already in place thanks to Mike Trinkala, though the anomaly detection algorithm needs some tweaking to trigger on things like a gradual decline in submissions over time.

Similarly, if the “invalid submission” rate goes up in the server’s validation process, we want to add automatic alerts there as well.
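A rough sketch of the kind of check described above, assuming hourly submission counts are available as a list. Comparing the last day against the same hours one week earlier respects the weekly pattern mentioned earlier and, unlike an hour-over-hour check, can also catch a slow decline that accumulates over several days. The function name, the 24-hour window and the 0.7 threshold are arbitrary assumptions, not the actual Heka anomaly detection settings.

```python
from statistics import mean
from typing import List

HOURS_PER_WEEK = 24 * 7

def should_alert(hourly_counts: List[int], window: int = 24, threshold: float = 0.7) -> bool:
    """True if the last `window` hours average below `threshold` x the same hours a week earlier."""
    if len(hourly_counts) < HOURS_PER_WEEK + window:
        return False  # not enough history for a week-over-week comparison
    recent = hourly_counts[-window:]
    week_ago = hourly_counts[-HOURS_PER_WEEK - window:-HOURS_PER_WEEK]
    return mean(recent) < threshold * mean(week_ago)

steady = [100] * (HOURS_PER_WEEK + 24)
dropped = [100] * HOURS_PER_WEEK + [50] * 24
print(should_alert(steady), should_alert(dropped))  # False True
```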

With these improvements in place, we should see far fewer data outages in Q2 and beyond.

Last minute update

While poking around at various graphs to document this post, I noticed that Bug 967203 was still affecting aurora, so the fix has been uplifted.

http://mreid-moz.github.io/blog/2014/02/13/recent-telemetry-data-outages/

|

|

Geoffrey MacDougall: Why do you need my donation when you have all that Google cash? |

This post is designed to help you answer the question:

“Why do you need my money when you have all that Google cash?”

We’ve provided 5 different ways to answer the question, listed in order of how well they usually satisfy the person posing it. In terms of soliciting support, (1), (2), and (3) all work, in decreasing order of efficacy. If you find yourself answering with (4) or (5), you’ve already lost.

(4) is the one most people inside of Mozilla will tell you. But it won’t inspire anyone to make a donation, won’t inspire anyone about the mission, and demeans the potential upside of what fundraising does for Mozilla both in terms of bringing new resources to the mission and growing our community. (5) is tricky and brings up all sorts of other questions, so use at your own risk.

None of the answers are prescriptive or written as scripts. Just suggestions. You can also make up your own answer: why you feel fundraising is important to Mozilla.

When answering the question, the most important thing is to be confident; you don’t need to excuse anything. More than 120,000 people gave to Mozilla in 2013. Our community understands that we’re different, that we’re tackling a huge challenge, that that challenge matters, and that we need all the expertise, time, and money we can get if we’re to win.

You can also point them to this post on why fundraising matters to Mozilla.

1.) Turn around, look up the hill.

The main thing that works is contextualizing $300M in terms of the scale of the challenge. It’s a lot of cash by itself. It’s nothing compared to what we need to do. Don’t look down the hill at the scale of other non-profits. Look up the hill at the scale of each of our competitors, much less their combined strength. Follow this up by talking about the outsized impact that Mozilla wields. How $10 in our hands goes much further because of our community, our leverage, and our reputation. Look at what Firefox did to Internet Explorer.

Strength: Fact-based and usually obvious, once pointed out.

Weakness: Risks making $10 feel inconsequential.

2.) Product vs. Program

The $300M Firefox makes is spent making Firefox. The Google revenue pays for the main pieces of software we build. There’s barely any left once that’s done. But there’s still a lot we need to do to pursue the mission. That’s where the donations and grants come in. They drive our education work. Our activism. Our protection of privacy. Our research and development. They help us teach kids to code. To get governments to respect our rights to privacy. To address the parts of our mission that Firefox can’t solve by itself.

Strength: Makes it clear where the donations go and why $300M isn’t enough.

Weakness: Most people know and like us because of Firefox. This argument underlines that their donations are not directly connected to keeping Firefox around.

3.) Money is more than money.

Money is a means of contributing. Of expressing support. Of making partnerships real. Of feeling you belong. Of getting us into rooms. It lets us build relationships with funders that help us direct and influence how billions of charitable and public dollars are spent each year. Money brings much needed resources to our organization, but it also grows our community, builds our momentum, helps us solidify key partnerships, and generally helps us gain the energy, access, and reach we need to win.

Strength: Lots of rhetoric and poetry. You can hear the music swelling.

Weakness: Nothing but rhetoric and poetry. Easily deflated.

4.) Being a non-profit matters. And non-profits need to raise money.

Being mission-driven isn’t enough. Mozilla’s founders decided they couldn’t pursue our mission as a software company that maintains a value set. They decided that we actually need to be a non-profit. That’s the anchor that does more than just guide our decision-making; it guarantees we make the right call. And non-profits in the United States have to fundraise. It’s part of what lets you stay a non-profit.

Strength: Fact-based and a one-or-zero problem set. If we don’t fundraise, we can’t stay a non-profit.

Weakness: Completely uninspiring. If you get here you’ve usually already lost, as it’s the least interesting and motivational.

5.) Fundraising is part of our efforts to diversify our revenue.

Saying this is risky, because the size of our fundraising program is nowhere near the scale of our Google deal. However, our fundraising has almost doubled year-over-year since 2009 to reach $12M in 2013. Wikimedia, our closest analog, makes $30M a year, which is real revenue. We’re working to match that. Large charities – hospitals, universities, big health – routinely combine large earned income programs with equally large (if not bigger) fundraising programs measured in 100s of millions a year. This is many years away for us, but it’s a perfectly viable form of long-term revenue.

Strength: Diversification is an obvious good to most people.

Weakness: Fundraising is not a meaningful replacement for the Google deal (yet). Also highlights the dependency on the Google deal.

Filed under: Mozilla, Pitch Geek

http://intangible.ca/2014/02/13/why-do-you-need-my-donation-when-you-have-all-that-google-cash/

|

|

Wladimir Palant: New blog |

For a while, I have been occasionally misusing the Adblock Plus project blog for articles that had no relation to Adblock Plus whatsoever. I posted various articles on security, Mozilla and XULRunner there, general extension development advice, occasionally some private stuff. With the project growing and more people joining, I am no longer the only person posting to the Adblock Plus blog, so treating it as my private blog isn’t appropriate. So a while ago I decided to set up a separate private blog for myself, and now I finally found the time to implement this. My off-topic blog posts have been migrated to the new location. Now you can read my blog if you are interested in the random stuff I post there, or you can keep reading the Adblock Plus blog if all you are interested in is Adblock Plus.

|

|

Nikos Roussos: A short story about mass surveillance |

So imagine you have a camera in your house. In your living room. A surveillance camera, like those you see in a metro station. In fact, not just in your house (because hey, after all you are not that important), but in everyone's house. Imagine there is a surveillance camera in every living room. Creepy, right? Would you just leave it there, staring at you, watching every movement you make in your own house? Well, this is what is going on right now. No, seriously. This is what is happening, but in the digital world. You see, a physical camera is easy to spot and it gets you frustrated. But in the digital world, which is as real as the physical one, the surveillance mechanisms are not so easy to spot.

So I have this camera in my living room watching every move I make, and the other day Maria, a friend of mine, comes over to my house for a cup of coffee. She comes from another country, with different surveillance methods, and she's surprised to see the camera. "You know you have a camera in your house? Over there in that corner of the ceiling." There are plenty of ways I could react to this remark. The obvious one would be to look at her finger and not where it's pointing, pretending there is nothing there. Or I could start yelling at her: "Oh, come on. I know you people, all you do is scare people off. There is no camera! Don't try to terrorize us." Another approach would be to actually get scared, censor myself and act as "normal" as I can. You know, just sit there quietly and turn on my TV. Because that's what normal people do, right? I don't want to look suspicious and draw any attention to myself from whoever is behind this camera. Until, of course, I really believe this and become that man who watches TV all day, doing nothing out of the ordinary. Or... I could just thank Maria for stating the obvious and start thinking about what we can do about it.

A couple of hours later George, another friend, joins us. He is more into technology than the average person. Actually, a lot more. You know, one of those people you can listen to for hours talking only in acronyms and unknown words. So the discussion comes back to this disturbing surveillance thing, and he points out that there is a way to protect myself: a technical way to hide and obfuscate my movements inside my house, so they can't see what I'm doing (or at least so their job gets a lot harder). Well, again, there are various ways I could react to this. I could refuse to take any measures to protect myself because that would make me look suspicious. I'm not that important. Let them focus on those who have something to hide. Or I could claim that this is a political problem and the only solution would be a political one. No technical measures can help us, so I refuse even to bother with them. Or... I could just thank George for having the patience to educate me about these things. And yes, I would acknowledge that this is a political problem and that in the long term the solution should be political. But in the meantime I'm going to make their job a hell of a lot more difficult. I'm going to help everyone look suspicious.

http://roussos.cc/2014/02/13/a-story-about-mass-surveillance/

|

|

Mike Hommey: Testing shared cache on try |

After some success with the shared cache experiment (Read about it, and some more), the next step was to get it to work on the Mozilla continuous integration infrastructure, and it turned out to reveal a couple of issues.

The first issue is that the DNS server for the AWS build slaves we use is not the AWS DNS, but our in-house DNS. Which has two consequences:

- whatever geolocation S3 does at the DNS level may end up giving an S3 endpoint IP that is not optimal for the AWS region we’re in, because it was correlated to the location of our in-house DNS

- the roundtrip to the in-house DNS server was around 80ms, and because every compilation is an independent process, each one does its own DNS request and takes that 80ms hit. Note that while suboptimal, doing a DNS request for each compilation also makes it possible to get different S3 endpoints, because both DNS round robin and the geolocation S3 uses give very different IPs every so often.

The consequence of this is that build times were very unstable, ranging from 11 minutes (as during my experiments) up to 45 minutes for a 99% cache hit build! After adding a DNS resolver to the shared cache script and making it use the AWS DNS, build times became much more stable, between 11 and 12 minutes. (We actually do need to use the in-house DNS for normal operations on the build slaves, so it’s not possible to switch /etc/resolv.conf.)

The second issue is that the US Standard region for S3 can have quite high latency depending on the region you’re connecting to it from. Our build slaves are located in Oregon and Northern Virginia, and while the slaves in Northern Virginia could reach S3 US Standard within 3ms, those in Oregon could only reach it within 90ms. Those numbers were unfortunately obtained with the in-house DNS, so geolocation may have affected them, but after switching DNS, build times on Oregon slaves were still way higher than on Northern Virginia slaves (~21 minutes vs. ~11 minutes). This led us to use one S3 bucket per region.
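A minimal sketch of picking a bucket per region, assuming the build machine knows its EC2 availability zone (for example from the instance metadata service). Oregon is the `us-west-2` region and Northern Virginia is `us-east-1`; the bucket names and both helper functions are hypothetical, since the post does not say how the shared cache script selects its bucket.

```python
import string

# Hypothetical bucket names; the post does not name the real buckets.
BUCKETS = {
    "us-east-1": "shared-cache-us-east-1",  # Northern Virginia
    "us-west-2": "shared-cache-us-west-2",  # Oregon
}

def region_from_az(availability_zone: str) -> str:
    """EC2 availability zones are a region name plus a letter, e.g. "us-west-2a"."""
    return availability_zone.rstrip(string.ascii_lowercase)

def bucket_for(availability_zone: str) -> str:
    """Return the shared-cache bucket in the same region as this build machine."""
    region = region_from_az(availability_zone)
    if region not in BUCKETS:
        raise ValueError(f"no shared-cache bucket configured for {region!r}")
    return BUCKETS[region]

print(bucket_for("us-west-2b"))  # shared-cache-us-west-2
```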

With those issues dealt with, we’re now ready for more widespread testing, and as such I’ve turned on the shared cache for Linux opt, Linux debug, Linux64 opt and Linux64 debug builds, on try only, and only if the push contains the relevant setup, which landed in changeset a62bde1d6efe.

See my post on dev-tree-management for a few more details, notably if you hit bugs.

Please note this is only the beginning. More platforms will use the cache soon, including some that aren’t currently using ccache. And I got some timing numbers during the initial tests on try that hint at the most immediate performance issues with the script that need addressing. So you can expect builds to get faster and faster as the cache populates, and as the script is improved with feedback from past experiments and the current deployment (I’ll be collecting data from your try pushes). Relatedly, I’m working on build system improvements that should make the ‘libs’ step much faster.

|

|

Mitchell Baker: Content, Ads, Caution |

I’m starting with content but please rest assured I’ll get to the topic of ads and revenue.

In the early days of Firefox we were very careful not to offer content to our users. Firefox came out of a world in which both Netscape/AOL (the alma mater of many early Mozillians) and Microsoft had valued their content and revenue sources over the user experience. Those of us from Netscape/AOL had seen features, bookmarks, tabs, and other irritants added to the product to generate revenue. We’d seen Mozilla code subsequently “enhanced” with these features.

And so we have a very strong, very negative reaction to any activities that even remotely remind us of this approach to product. That’s good.

This reaction somehow became synonymous with other approaches that are not necessarily so helpful. For a number of years we refused to have any relationship with our users beyond “we provide software and they use it.” We resisted offering content unless it came directly from an explicit user action. This made sense at first when the web was so young. But over the years many people have come to expect and want their software to do things on their behalf, to take note of what one has done before and do something useful with it.

In the last few years we’ve begun to respond to this. We’re careful about it because the DNA is based on products serving users. Every time we offer something to our users we question ourselves rigorously about the motivations for that offer. Are we sure it’s the most value we can provide to our users? Are we sure, doubly-sure, we’re not fooling ourselves? Sometimes my commercial colleagues laugh at me for the amount of real estate we leave unmonetized or the revenue opportunities we decline.

So we look at the Tiles and wonder if we can do more for people. We think we can. I’ve heard some people say they still don’t want any content offered. They want their experience to be new, to be the same as it was the day they installed the browser, the same as anyone else might experience. I understand this view, but I think it’s not the default most people are choosing. We think we can offer people useful content in the Tiles.

When we have ideas about how content might be useful to people, we look at whether there is a revenue possibility, and if that would annoy people or bring something potentially useful. Ads in search turn out to be useful. The gist of the Tiles idea is that we would include something like 9 Tiles on a page, and that 2 or 3 of them would be sponsored — aka “ads.” So to explicitly address the question of whether sponsored tiles (aka “ads”) could be included as part of a content offering, the answer is yes.

These sponsored results/ads would not have tracking features.

Why would we include any sponsored results? If the Tiles are useful to people then we’ll generate value. That generates revenue that supports the Mozilla project. So to explicitly address the question of whether we care about generating revenue and sustaining Mozilla’s work, the answer is yes. In fact, many of us feel responsible to do exactly this.

Pretty much anytime we talk about revenue at Mozilla people get suspicious. Mozillians get suspicious, and our supporters get suspicious. There’s some value in that, as it reinforces our commitment to user experience and providing value to our users. There are some drawbacks to this as well, however. I’ll be talking with Mozillians tomorrow and in the coming days on these topics in more detail.

https://blog.lizardwrangler.com/2014/02/13/content-ads-caution/

|

|

Jordan Lund: Adding --list-config-files to Mozharness |

In First Assignment, I touched on how Mozharness can have multiple configs. On any script, you can specify, say:

./scripts/some_script --cfg path/to/config1 --cfg path/to/config2 [etc..]

The way this works is pretty simple: we look for those configs and see whether each is a URL or a local path. We then validate that they exist. Finally, we build what ultimately becomes self.config by calling .update() on self.config with each config given (in order!).
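The ordered `.update()` behaviour can be sketched in a few lines of Python. The `merge_configs` name and the config keys and values are hypothetical. One consequence worth knowing: `dict.update()` is shallow, so a nested dict in a later config replaces the earlier one wholesale instead of being merged into it.

```python
from typing import Any, Dict, List

def merge_configs(configs: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Mimic the described behaviour: call .update() with each config, in order."""
    merged: Dict[str, Any] = {}
    for cfg in configs:
        merged.update(cfg)  # later configs override earlier ones, key by key
    return merged

base = {"platform": "linux", "env": {"MOZ_OBJDIR": "obj-firefox", "CCACHE_DIR": "/builds/ccache"}}
variant = {"platform": "linux-debug", "env": {"MOZ_OBJDIR": "obj-debug"}}
merged = merge_configs([base, variant])
print(merged["platform"])  # linux-debug
print(merged["env"])       # {'MOZ_OBJDIR': 'obj-debug'}  (CCACHE_DIR is gone: update() is shallow)
```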

This is nice and allows for some powerful configuration driven scripts. However, while porting desktop builds from Buildbot to Mozharness I have come across 2 features that would be nice to have:

- the ability to have transparency into which config file each of self.config's keys/values comes from.

- allow for a specific part of a config file to be added to self.config while dictating the config file hierarchy per script run.
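The first feature, transparency into where each value came from, amounts to recording the last file that set each key while merging. The sketch below is a hypothetical illustration of that idea, not the actual `--list-config-files` code, which this part of the post doesn't show; the function names and sample values are assumptions.

```python
from typing import Any, Dict, List, Tuple

ConfigFile = Tuple[str, Dict[str, Any]]  # (filename, dict loaded from that file)

def merge_with_provenance(configs: List[ConfigFile]) -> Tuple[Dict[str, Any], Dict[str, str]]:
    """Merge configs in order and remember which file supplied each final value."""
    merged: Dict[str, Any] = {}
    source: Dict[str, str] = {}
    for filename, cfg in configs:
        for key, value in cfg.items():
            merged[key] = value      # later files override earlier ones
            source[key] = filename   # so the last writer is the one we report
    return merged, source

def list_config_files(configs: List[ConfigFile]) -> None:
    """Print each config file and the keys it still contributes to the merged config."""
    _, source = merge_with_provenance(configs)
    for filename, _cfg in configs:
        keys = sorted(key for key, origin in source.items() if origin == filename)
        print(f"{filename}: {', '.join(keys) or '(every key overridden)'}")

list_config_files([
    ("builds/releng_base_linux_64_builds.py", {"platform": "linux64", "purge_minsize": 12}),
    ("builds/releng_sub_linux_configs/64_debug.py", {"platform": "linux64-debug"}),
])
```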

The motivation for these came from the realization that Firefox desktop builds cannot have just one config file per build type. We would need hundreds of config files! This is because, if you think about it, we have multiple platforms, repo branches, special variants (asan, debug, etc.), and build pools for each build. Every permutation of these has its own config keys/values. Take a peek at buildbot-configs to see how hard this is to manage.

I needed a solution that scales and is easy to understand. Here is what I've done:

First, before I dive into the two features I mentioned above, let's recap my last post. Rather than passing each config explicitly, I gave the script some 'intelligence' when it comes to figuring out which configs to use. There is no black magic: when it runs, the script will tell you which configs it is using and how it is using them.

For example, specifying:

--custom-build-variant debug

will tell the script to look for the correct debug config for your platform and arch, e.g.:

    configs/builds
    +-- releng_sub_linux_configs
        +-- 32_debug.py

You can also just explicitly give the path to the build variant config file. For a list of valid shortnames, see '--custom-build-variant --help'. Either way, this adds another config file:

    config = {
        'default_actions': [
            'clobber',
            'pull',
            'setup-mock',
            'build',
            'generate-build-props',
            # 'generate-build-stats', debug skips this action
            'symbols',
            'packages',
            'upload',
            'sendchanges',
            # 'pretty-names', debug skips this action
            # 'check-l10n', debug skips this action
            'check-test',
            'update',  # decided by query_is_nightly()
            'enable-ccache',
        ],
        'platform': 'linux-debug',
        'purge_minsize': 14,
        ...etc

Next up, the branch:

--branch mozilla-central

This does two things: 1) it sets the branch we are using (self.config['branch']), and 2) it adds another config file to the script:

configs/builds/branch_specifics.py

This is a config file of dicts:

    config = {
        "mozilla-central": {
            "update_channel": "nightly",
            "create_snippets": True,
            "create_partial": True,
            "graph_server_branch_name": "Firefox",
        },
        "cypress": {
            ...etc
        }
    }

The script will see if the branch name given matches any branch keys in this config. If it does, it will add those keys/values to self.config.
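The branch lookup described above boils down to overlaying one entry of the branch-specifics dict and ignoring the rest. A minimal sketch, with a hypothetical `apply_specifics` helper and an abbreviated copy of the `mozilla-central` entry shown above; the build-pool file could plausibly be handled the same way.

```python
from typing import Any, Dict

Config = Dict[str, Any]

# Abbreviated copy of the mozilla-central entry from branch_specifics.py above.
BRANCH_SPECIFICS: Dict[str, Config] = {
    "mozilla-central": {
        "update_channel": "nightly",
        "create_snippets": True,
        "create_partial": True,
        "graph_server_branch_name": "Firefox",
    },
}

def apply_specifics(config: Config, specifics: Dict[str, Config], name: str) -> Config:
    """Return a copy of `config` overlaid with only the `name` entry of `specifics`."""
    merged = dict(config)
    merged.update(specifics.get(name, {}))  # unknown names add nothing
    return merged

config = {"branch": "mozilla-central", "platform": "linux64"}
config = apply_specifics(config, BRANCH_SPECIFICS, config["branch"])
print(config["update_channel"])  # nightly
```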

Specifying the build pool behaves similarly:

--build-pool staging

adds the cfg file:

configs/builds/build_pool_specifics.py

which adds the dict under key 'staging' to self.config:

    config = {
        "staging": {
            'balrog_api_root': 'https://aus4-admin-dev.allizom.org',
            'aus2_ssh_key': 'ffxbld_dsa',
            'aus2_user': 'ffxbld',
            'aus2_host': 'dev-stage01.srv.releng.scl3.mozilla.com',
            'stage_server': 'dev-stage01.srv.releng.scl3.mozilla.com',
            # staging/preproduction we should use MozillaTest
            # but in production we let the self.branch decide via
            # self._query_graph_server_branch_name()
            "graph_server_branch_name": "MozillaTest",
        },
        "preproduction": {
            ...etc

Put together, we can do something like:

./scripts/fx_desktop_build.py --cfg configs/builds/releng_base_linux_64_builds.py --custom-build-variant asan --branch mozilla-central --build-pool staging

Which is kind of cool. Doing this, though, requires some user-friendly touches. For example, the order in which these arguments are given shouldn't matter: the hierarchy of configs should be consistent. For desktop builds, the hierarchy from highest precedence to lowest is: build-pool, branch, custom-build-variant, and finally the base --cfg [file] passed (the platform and arch).
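Making the argument order irrelevant can be done by merging in a fixed precedence order rather than in parse order. A sketch in Python, assuming each option's config has already been loaded into a dict keyed by option name; the `PRECEDENCE` list mirrors the order given above (lowest first, so later updates win), while `build_config` and the sample keys and values are hypothetical.

```python
from typing import Any, Dict

# Lowest precedence first, so that later .update() calls win.
PRECEDENCE = ["cfg", "custom-build-variant", "branch", "build-pool"]

def build_config(configs_by_option: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Merge configs in the fixed PRECEDENCE order, ignoring the order they were given in."""
    merged: Dict[str, Any] = {}
    for option in PRECEDENCE:
        merged.update(configs_by_option.get(option, {}))
    return merged

# Options "parsed" in an arbitrary order (values are hypothetical).
parsed = {
    "build-pool": {"graph_server_branch_name": "MozillaTest"},
    "cfg": {"platform": "linux64", "graph_server_branch_name": "Firefox"},
    "branch": {"update_channel": "nightly", "graph_server_branch_name": "Firefox"},
}
print(build_config(parsed))
```

Since the build pool sits highest in the list, its `graph_server_branch_name` wins no matter where `--build-pool` appeared on the command line.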

Another friendly touch needed was the ability to see how this hierarchy affects self.config: which config file do the keys/values come from?

Finally, you may have noticed that for branch and build-pool, I only use one config file for each: 'branch_specifics.py' and 'build_pool_specifics.py'. I don't care about any branch or pool in those files that was not specified, so those entries should not be added to self.config.

This ties in with the two features I wanted to add to Mozharness.

(recap):

- the ability to have transparency into which config file each of self.config's keys/values comes from.

- allow for a specific part of a config file to be added to self.config while dictating the config file hierarchy per script run.

So how did I achieve this? Let's look at some code snippets:

I will look at three sections of code.

- how the script allows users to specify options like '--branch' and get 'branch_specifics.py' added to my list of config files.

- how we can specify the options in any order while preserving the hierarchy. Also, how we can grab only part of a config file.

- how we can list out each config file used and which keys/values are used in making self.config

*Note: I made the first of these as a convenience for the devs/users of this script. You can always just explicitly specify '--cfg path/to/cfg' for each config if you wish.

1. In fx_desktop_build.py, I wrote an option parser helper class:

    class FxBuildOptionParser(object):
        platform = None
        bits = None
        build_variants = {
            'asan': 'builds/releng_sub_%s_configs/%s_asan.py',
            'debug': 'builds/releng_sub_%s_configs/%s_debug.py',
            'asan-and-debug': 'builds/releng_sub_%s_configs/%s_asan_and_debug.py',
            'stat-and-debug': 'builds/releng_sub_%s_configs/%s_stat_and_debug.py',
        }
        build_pools = {
            'staging': 'builds/build_pool_specifics.py',
            'preproduction': 'builds/build_pool_specifics.py',
            'production': 'builds/build_pool_specifics.py',
        }
        branch_cfg_file = 'builds/branch_specifics.py'

        @classmethod
        def set_build_branch(cls, option, opt, value, parser):
            # first let's add the branch_specific file where there may be branch
            # specific keys/values. Then let's store the branch name we are using
            parser.values.config_files.append(cls.branch_cfg_file)
            setattr(parser.values, option.dest, value)  # the branch name

The above only shows the method for resolving the branch config (build-pool and custom-build-variant live in this class as well). Here is how --branch knows where to look:

    class FxDesktopBuild(BuildingMixin, MercurialScript, object):
        config_options = [
            [['--branch'], {
                "action": "callback",
                "callback": FxBuildOptionParser.set_build_branch,
                "type": "string",
                "dest": "branch",
                "help": "This sets the branch we will be building this for. "
                        "If this branch is in branch_specifics.py, update our "
                        "config with specific keys/values from that. See "
                        "%s for possibilities" % (
                            FxBuildOptionParser.branch_cfg_file,
                        )}
            ],
            ...etc
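For readers unfamiliar with optparse callbacks, here is a stripped-down, standalone version of the same mechanism that uses only the standard library's optparse module. The '--cfg' option and the function names are stand-ins for illustration, not mozharness's real parser. The callback appends the branch file at the point where '--branch' appears, so `config_files` follows command-line order. That is why the hierarchy has to be re-established later on.

```python
from optparse import OptionParser

BRANCH_CFG_FILE = "builds/branch_specifics.py"


def set_build_branch(option, opt_str, value, parser):
    # optparse invokes callbacks as callback(option, opt_str, value, parser).
    # Queue the branch-specifics file, then record the branch name itself.
    parser.values.config_files.append(BRANCH_CFG_FILE)
    setattr(parser.values, option.dest, value)


def build_parser():
    parser = OptionParser()
    # A fresh list default per parser; "append" adds each --cfg to it.
    parser.add_option("--cfg", action="append", dest="config_files",
                      default=[], help="config file to load")
    parser.add_option("--branch", action="callback",
                      callback=set_build_branch, type="string",
                      dest="branch", help="branch to build for")
    return parser


options, _args = build_parser().parse_args(
    ["--branch", "mozilla-central", "--cfg", "builds/base.py"])
print(options.config_files)  # ['builds/branch_specifics.py', 'builds/base.py']
print(options.branch)        # mozilla-central
```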

2. In mozharness/base/config.py, I extracted the part of parse_args() that finds the config files and adds their dicts into a separate method, get_cfgs_from_files():

    # append opt_config to allow them to overwrite previous configs
    all_config_files = options.config_files + options.opt_config_files
    all_cfg_files_and_dicts = self.get_cfgs_from_files(
        all_config_files, parser=options
    )

get_cfgs_from_files():

    def get_cfgs_from_files(self, all_config_files, parser):
        """Return a list of (file name, config dict) tuples for the given
        config files. This method can be overridden in a subclass of
        BaseConfig.
        """
        # this is what we will return. It will represent each config
        # file name and its associated dict
        # eg ('builds/branch_specifics.py', {'foo': 'bar'})
        all_cfg_files_and_dicts = []
        for cf in all_config_files:
            try:
                if '://' in cf:  # config file is a URL
                    file_name = os.path.basename(cf)
                    file_path = os.path.join(os.getcwd(), file_name)
                    download_config_file(cf, file_path)
                    all_cfg_files_and_dicts.append(
                        (file_path, parse_config_file(file_path))
                    )
                else:
                    all_cfg_files_and_dicts.append((cf, parse_config_file(cf)))
            except Exception:
                if cf in parser.opt_config_files:
                    print(
                        "WARNING: optional config file not found %s" % cf
                    )
                else:
                    raise
        return all_cfg_files_and_dicts

This is largely the same as before, but you may notice I am now collecting file names and their associated dicts in a list of tuples. This is on purpose, so I can implement the ability to list configs and the keys/values they contribute (I'll get to that later).
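Keeping (file name, dict) pairs instead of a single merged dict is what makes provenance recoverable. The sketch below illustrates the idea: it merges the pairs in order and records which file supplied each winning value. The helper and the sample values are hypothetical. The file names are the ones used in this post.

```python
def merge_with_provenance(cfg_files_and_dicts):
    """Merge (file_name, dict) pairs given lowest precedence first.

    Returns (config, origin), where origin maps each key to the file
    whose value ended up in the merged config.
    """
    config, origin = {}, {}
    for file_name, cfg in cfg_files_and_dicts:
        for key, value in cfg.items():
            config[key] = value       # later files overwrite earlier ones
            origin[key] = file_name   # and take over ownership of the key
    return config, origin


pairs = [
    ("configs/builds/releng_base_linux_64_builds.py",
     {"use_mock": True, "stage_server": "base-stage"}),
    ("builds/build_pool_specifics.py",
     {"stage_server": "dev-stage01.srv.releng.scl3.mozilla.com"}),
]
config, origin = merge_with_provenance(pairs)
print(origin["stage_server"])  # builds/build_pool_specifics.py
print(origin["use_mock"])      # configs/builds/releng_base_linux_64_builds.py
```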

By extracting this out, I can now do something fun like subclass BaseConfig and override get_cfgs_from_files().

Back in fx_desktop_build.py (see the full method for more inline comments):

    class FxBuildConfig(BaseConfig):
        def get_cfgs_from_files(self, all_config_files, parser):
            # overridden from BaseConfig
            # none of these files are guaranteed to be present
            pool_cfg_file = branch_cfg_file = variant_cfg_file = None
            all_config_dicts = []

            # so, let's first assign the configs that hold a known position of
            # importance (1 through 3)
            for i, cf in enumerate(all_config_files):
                if parser.build_pool:
                    if cf == FxBuildOptionParser.build_pools[parser.build_pool]:
                        pool_cfg_file = all_config_files[i]
                if cf == FxBuildOptionParser.branch_cfg_file:
                    branch_cfg_file = all_config_files[i]
                if cf == parser.build_variant:
                    variant_cfg_file = all_config_files[i]

            # now remove these from the list if there were any
            # we couldn't pop() these in the above loop as mutating a list while
            # iterating through it causes spurious results :)
            for cf in [pool_cfg_file, branch_cfg_file, variant_cfg_file]:
                if cf:
                    all_config_files.remove(cf)

            # now let's update config with the remaining config files
            for cf in all_config_files:
                all_config_dicts.append((cf, parse_config_file(cf)))

            # stack variant, branch, and pool cfg files on top of that,
            # if they are present, in that order
            if variant_cfg_file:
                # take the whole config
                all_config_dicts.append(
                    (variant_cfg_file, parse_config_file(variant_cfg_file))
                )
            if branch_cfg_file:
                # take only the specific branch, if present
                branch_configs = parse_config_file(branch_cfg_file)
                if branch_configs.get(parser.branch or ""):
                    print(
                        'Branch found in file: "builds/branch_specifics.py". '
                        'Updating self.config with keys/values under '
                        'branch: "%s".' % (parser.branch,)
                    )
                    all_config_dicts.append(
                        (branch_cfg_file, branch_configs[parser.branch])
                    )
            if pool_cfg_file:
                # largely the same logic as adding branch_cfg
                pool_configs = parse_config_file(pool_cfg_file)
                if pool_configs.get(parser.build_pool or ""):
                    print(
                        'Build pool config found in file: '
                        '"builds/build_pool_specifics.py". Updating '
                        'self.config with keys/values under build pool: '
                        '"%s".' % (parser.build_pool,)
                    )
                    all_config_dicts.append(
                        (pool_cfg_file, pool_configs[parser.build_pool])
                    )
            return all_config_dicts
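The comment about not popping inside the loop is worth seeing concretely. Removing items from a list while iterating over it shifts the remaining items left, so the element right after each removal is silently skipped. The standalone sketch below uses illustrative file names to show the failure and one safe alternative, a single-pass partition. The post takes a different, equally safe approach: it records the matches first and removes them after the loop.

```python
files = ["base.py", "branch_specifics.py", "build_pool_specifics.py", "extra.py"]
special = {"branch_specifics.py", "build_pool_specifics.py"}

# Buggy: mutating the list that is being iterated over.
buggy = list(files)
for cf in buggy:
    if cf in special:
        buggy.remove(cf)  # shifts later items left, so the next one is skipped
print(buggy)  # ['base.py', 'build_pool_specifics.py', 'extra.py']


def partition(items, predicate):
    """Split items into (matching, rest) in one pass, preserving order."""
    matching, rest = [], []
    for item in items:
        (matching if predicate(item) else rest).append(item)
    return matching, rest


picked, remaining = partition(files, lambda cf: cf in special)
print(picked)     # ['branch_specifics.py', 'build_pool_specifics.py']
print(remaining)  # ['base.py', 'extra.py']
```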

3. Finally, I added an option, '--list-config-files', to Mozharness that any script can use:

    self.config_parser.add_option(
        "--list-config-files", action="store_true",
        dest="list_config_files",
        help="Displays what config files are used and how their "
             "hierarchy dictates self.config."
    )

    def list_config_files(self, cfgs=None):
        # go through each config_file. We will start with the lowest and print
        # its keys/values that are being used in self.config. If any
        # keys/values are present in a config file with a higher precedence,
        # ignore those.
        if not cfgs:
            cfgs = []
        print "Total config files: %d" % (len(cfgs))
        if len(cfgs):
            print "Config files being used from lowest precedence to highest:"
            print "====================================================="
        for i, (lower_file, lower_dict) in enumerate(cfgs):
            unique_keys = set(lower_dict.keys())
            unique_dict = {}
            # iterate through the lower_dict's remaining 'higher' cfgs
            remaining_cfgs = cfgs[slice(i + 1, len(cfgs))]
            for ii, (higher_file, higher_dict) in enumerate(remaining_cfgs):
                # now only keep keys/values that are not overwritten by a
                # higher config
                unique_keys = unique_keys.difference(set(higher_dict.keys()))
            # unique_dict we know now has only keys/values that are unique to
            # this config file.
            unique_dict = {k: lower_dict[k] for k in unique_keys}
            print "Config File %d: %s" % (i + 1, lower_file)
            # let's do some sorting and formatting so the dicts are readable
            # (skip this if every key was overridden by a higher config,
            # since max() of an empty sequence raises ValueError)
            if unique_dict:
                max_key_len = max(len(key) for key in unique_dict.keys())
                for key, value in sorted(unique_dict.iteritems()):
                    # pretty print format for dict
                    cfg_format = " %%s%%%ds %%s" % (max_key_len - len(key) + 2,)
                    print cfg_format % (key, '=', value)
            print "====================================================="
        # finally exit since we only wish to see how the configs are laid out
        raise SystemExit(0)

Again, the actual method has proper docstrings and more comments.

This can all be tried out. Simply clone from here: https://github.com/lundjordan/mozharness/tree/fx-desktop-builds

and try running the script with '--list-config-files'. This option causes the script to exit before actually running any actions (akin to 'list-actions'), so you can hammer away at my script with various options. Or try it against any of the other mozharness scripts.

WARNING: fx_desktop_build.py is under active development. Aside from breaking from time to time, it is only made for our continuous integration system; there is no config that is friendly for local development. So only run it if you also supply '--list-config-files'.

Notice that each run only outputs the keys/values that are actually used by self.config. For example, if releng_base_linux_64_builds.py has a key whose value is overridden by the same key in the build_pool config, it won't be shown under the base file (build_pool has precedence).

Examples you can try:

./scripts/fx_desktop_build.py --cfg configs/builds/releng_base_linux_64_builds.py --custom-build-variant asan --branch mozilla-central --build-pool staging --list-config-files

./scripts/fx_desktop_build.py --cfg configs/builds/releng_base_linux_32_builds.py --custom-build-variant debug --build-pool preproduction --list-config-files

./scripts/fx_desktop_build.py --cfg configs/builds/releng_base_linux_64_builds.py --custom-build-variant asan --list-config-files

./scripts/fx_desktop_build.py --list-config-files

Example output (large parts of it are omitted for brevity):

Branch found in file: "builds/branch_specifics.py". Updating self.config with keys/values under branch: "mozilla-central".

Build pool config found in file: "builds/build_pool_specifics.py". Updating self.config with keys/values under build pool: "staging".

Total config files: 4

Config files being used from lowest precedence to highest:

=====================================================

Config File 1: configs/builds/releng_base_linux_64_builds.py

buildbot_json_path = buildprops.json

clobberer_url = http://clobberer.pvt.build.mozilla.org/index.php

default_vcs = hgtool

do_pretty_name_l10n_check = True

enable_ccache = True

enable_count_ctors = True

enable_package_tests = True

exes = {'buildbot': '/tools/buildbot/bin/buildbot'}

graph_branch = MozillaTest

graph_selector = /server/collect.cgi

graph_server = graphs.allizom.org

hgtool_base_bundle_urls = ['http://ftp.mozilla.org/pub/mozilla.org/firefox/bundles']

hgtool_base_mirror_urls = ['http://hg-internal.dmz.scl3.mozilla.com']

latest_mar_dir = /pub/mozilla.org/firefox/nightly/latest-%(branch)s

mock_mozilla_dir = /builds/mock_mozilla

use_mock = True

vcs_share_base = /builds/hg-shared

etc...

=====================================================

Config File 2: /Users/jlund/devel/mozilla/dirtyRepos/mozharness/scripts/../configs/builds/releng_sub_linux_configs/64_asan.py

base_name = Linux x86-64 %(branch)s asan

default_actions = ['clobber', 'pull', 'setup-mock', 'build', 'generate-build-props', 'symbols', 'packages', 'upload', 'sendchanges', 'check-test', 'update', 'enable-ccache']

enable_signing = False

enable_talos_sendchange = False

platform = linux64-asan

etc ...

=====================================================

Config File 3: builds/branch_specifics.py

create_partial = True

create_snippets = True

update_channel = nightly

=====================================================

Config File 4: builds/build_pool_specifics.py

aus2_host = dev-stage01.srv.releng.scl3.mozilla.com

aus2_ssh_key = ffxbld_dsa

aus2_user = ffxbld

balrog_api_root = https://aus4-admin-dev.allizom.org

download_base_url = http://dev-stage01.srv.releng.scl3.mozilla.com/pub/mozilla.org/firefox/nightly

graph_server_branch_name = MozillaTest

sendchange_masters = ['dev-master01.build.scl1.mozilla.com:8038']

stage_server = dev-stage01.srv.releng.scl3.mozilla.com

=====================================================

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [917878] “patches submitted” and “patches reviewed” on the user profile don’t take github pull requests into consideration

- [926085] Forbid single quotes to delimit URLs (no )

- [961789] large dependency trees with lots of resolved bugs are very slow to load

- [970184] “possible duplicates” shouldn’t truncate words at the first non-word character

- [967910] “IO request failed : undefined” on My Dashboard

- [971872] Default secure group for “Audio/Visual Infrastructure” should not be “core-security”

- [40896] Bugzilla needs a “preview” mode for comments

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/02/13/happy-bmo-push-day-82/

|

|

Benjamin Kerensa: Will Firefox Really Have Ads? |

There has been a lot of sensational writing by a number of media outlets over the last 24 hours in reaction to a post by Darren Herman who is VP of Content Services. Lots of people have been asking me whether there will be ads in Firefox and pointing out these articles that have sprung up everywhere.

So first I want to look at the Merriam Webster definition of an Advertisement:

ad·ver·tise·ment

noun \

http://feedproxy.google.com/~r/BenjaminKerensaDotComMozilla/~3/8ad-WShNGAs/will-firefox-really-ads

|

|

Manish Goregaokar: Getting started with bug-squashing for Firefox |

First off, a big thanks to @Debloper (and @hardfire) for showing me the basics. The process is intimidating, though once you've done it with help, it becomes pretty natural. These Mozilla reps got me past that intimidation point, so I'm really grateful for that.

This post is basically a tutorial on how to get started: an in-depth version of this tutorial, which I feel misses a few things.

Note that I am still a beginner at this; comments on how to improve this post or my workflow are appreciated.

Ok, let's get started.

Step 1: Identifying a bug you want to fix

Firstly, there's What Can I Do For Mozilla?. This is an interactive questionnaire that helps you find out which portions of Mozilla or Firefox you may be able to comfortably contribute to. Note that this is not just Firefox, though if you select the HTML or JS categories you will be presented with the Firefox subcategory which contains various entries.

This doesn't help find bugs as much as it helps you find the areas of the codebase that you might want to look at.

However, there is a different tool built specifically for this purpose: finding easy bugs given one's preferences and capabilities. It's called Bugs Ahoy, and it lets you tick your preferences and programming languages to filter for bugs. It also has two insanely useful options: one that lets you filter out assigned bugs, and one that tells it to look for "good first bugs" ("simple bugs"). "Good first bugs" on Bugzilla are easy bugs that are kept aside for new users to try their hand at. Each of them has a mentor, who is a very active community member or employee. These mentors help you through the rest of the process, from where you need to look in the code to how to put up a patch. I've found that the mentors are very friendly and helpful, and the experience of being mentored on a bug is rather enjoyable.

Make sure the bug isn't assigned to anyone, and look through the comments and attachments for details on the status of the bug. Some bugs are still being discussed, and some bugs are half-written (it's not as easy to use these for your first bug). If you need help on choosing a bug, join #introduction on irc.mozilla.org. There are lots of helpful people out there who can give feedback on your chosen bug, and help you get started.

Step 2: Finding the relevant bits of code

saveTo.label as the string. (Remember, all displayed strings will be in a localization file.) Searching for saveTo.label turns up main.xul. Now that you've found this, you can dig deeper by looking at the event handling and figuring out where the relevant JavaScript is, or you can look around this same file and figure out how it works, depending on what you want to fix.

Step 3: Downloading and building the code

Not all bugs need a build. Some are quite easy to do without a full copy of the code or a build, and while you'll eventually want both, it is possible to hold off on them for a while, depending on the bug. While it's easier to create patch files when the system is all set up, I will address patching without the full code in the next section.

sudo apt-get install mercurial works). One way is to simply run hg clone https://hg.mozilla.org/mozilla-central. This will download the full repository. However, if you don't think your internet connection will be stable, download the mozilla-central bundle from here and follow the steps given there. Note that Mercurial is a bit different from Git, so you may wish to read up on the basics.

To build Firefox, first do the OS-specific build environment setup linked to here. Once done, go to the root directory of the Firefox code and run ./mach build. After your first build, you can run incremental builds (which rebuild only the files you ask for, plus any files that depend on them) by passing a path to ./mach build, e.g. ./mach build browser/components/preferences/. You can pass both folders and files to an incremental build.

Note that for some JavaScript files you have to build their containing directory, so if your changes aren't showing up after an incremental build, try building the directory they are in.

Step 4: Getting a patch

Creating patches with hg

~/.hgrc to enable the Mercurial Queues (mq) extension with the proper settings:

    [ui]
    username = Firstname Lastname

    [defaults]
    qnew = -Ue

    [extensions]
    mq =

    [diff]
    git = 1
    unified = 8
    showfunc = 1

Once done, navigate to the firefox source tree and run hg qqueue -c somequeuenamehere. This will create a named patch queue that you can work on.

Now, run hg qnew patchname.patch. This creates a new patch by that name in the .hg/patches-queuename folder and pushes it onto the stack of currently applied patches. You can update its contents with your changes to the code by running hg qrefresh (or simply hg qref). This patch is the one you will submit in step 5.

When you run hg qnew, it will ask you to enter a commit message. Write the bug number and a short description of the patch ("Bug 12345 - Frob the baz button when foo happens"), and add ";r=nameofreviewer". For mentored bugs, the username of the mentor will be your reviewer. If not, you'll have to find a reviewer (more details on this later; for now you may leave this blank and edit it in the patch file later). Note that the default editor for this is usually vim, so you have to press Ins before typing text, then Esc followed by :x and Enter to save.

Advanced usage

If you want to work on a different bug in parallel, you just have to pop all current patches (hg qpop -a) and create a new patch queue with hg qqueue -c. You can then switch between the queues with hg qqueue queuename.

Creating patches without hg

For preliminary patches, with just one file

If you're just editing one file, put the old version and the new version side by side, and run diff -u oldfile newfile > mypatch.patch in the same directory. Now, open the patch file and edit the paths to match the edited file's path relative to the root Firefox directory (e.g. if you edited main.xulold to main.xul, replace both names with browser/components/preferences/main.xul).

Proper patches

a/filename and b/filename lines to be a/path/to/filename and b/path/to/filename. The paths here are relative to the root directory.

Now, add the following to the top of the patch:

    # HG changeset patch
    # Parent parenthash
    # User Firstname Lastname <email@something.com>
    Bug 12345 - Frob the baz button when foo happens; r=jaws

Set the commit message as described in the above section for creating patches with hg.

As for the parent hash, you can get it by going to the mozilla-central hg repository and copying the hash of the tip commit.
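If you are assembling a proper patch by hand, you can also script the steps above: a unified diff with a/ and b/ paths relative to the source root, plus the changeset header and commit message. Below is a minimal sketch using Python's standard difflib module. `make_patch` and the commit-message pattern are hypothetical helpers that encode the conventions described in this post. They are not an official Mozilla tool. The output is a plain unified diff, without the `diff --git` lines that hg itself emits when git = 1 is set.

```python
import difflib
import re

# Approximates the "Bug 12345 - Short description; r=reviewer" convention
# described above; this regex is an assumption, not an official rule.
COMMIT_RE = re.compile(r"^Bug \d+ - .+; r=\S+$")


def make_patch(old_text, new_text, repo_path, user, parent_hash, message):
    """Return an hg-style patch for one edited file.

    repo_path is the file's path relative to the root of the source tree,
    e.g. "browser/components/preferences/main.xul".
    """
    if not COMMIT_RE.match(message):
        raise ValueError("commit message should look like "
                         "'Bug 12345 - Short description; r=reviewer'")
    header = "\n".join([
        "# HG changeset patch",
        "# Parent %s" % parent_hash,
        "# User %s" % user,
        message,
    ])
    diff = difflib.unified_diff(
        old_text.splitlines(keepends=True),
        new_text.splitlines(keepends=True),
        fromfile="a/" + repo_path,
        tofile="b/" + repo_path,
        n=8,  # same context size as "unified = 8" in the .hgrc settings
    )
    return header + "\n\n" + "".join(diff)


print(make_patch(
    "first line\nold line\n",
    "first line\nnew line\n",
    "browser/components/preferences/main.xul",
    "Firstname Lastname <email@something.com>",
    "parenthash",
    "Bug 12345 - Frob the baz button when foo happens; r=jaws",
))
```

The commit-message check runs first, so a malformed message fails before any diff is produced.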

Step 5: Submitting the patch, and the review process

- If the bug is mentored, your mentor will be able to review your code. Usually the mentor name will turn up in the "suggested reviewers" dropdown box in bold, too.

- If the bug isn't mentored, you still might be able to find reviewers in the suggested reviewers dropdown. The dropdown is available for bugs in most Firefox and B2G components.

- Otherwise, ask around in IRC or check out the hg logs of the file you modified (start here) to find out who would be an appropriate reviewer.

- A list of module owners and peers for each module can be found here (the Firefox and Toolkit ones are usually the ones you want). These users are allowed to review code in that module, so make sure you pick from those. If you mistakenly pick someone else, they'll usually be helpful enough to redirect the review to the right person.

http://inpursuitoflaziness.blogspot.com/2014/02/getting-started-with-bug-squashing.html

|

|

Rizky Ariestiyansyah: Hola! Planet Mozilla |

|

|

John O'Duinn: “We are all remoties” @ Twilio |

(My life has been hectic on several other fronts, so I only just noticed that I never actually published this blog post. Sorry!!)

On 07-nov-2013, I was invited to present “We are all remoties” in Twilio’s headquarters here in San Francisco as part of their in-house tech talk series.

For context, it’s worth noting that Twilio is doing great as a company, which means they are hiring and outgrowing their current space. One option they were investigating was to keep the current space and open up a second office elsewhere in the Bay Area. As they’d always been used to working in the one location, this “split into two offices” was top of everyone’s mind… hence the invitation from Thomas to give this company-wide talk about remoties.

Twilio’s entire office is a large, SOMA-style warehouse converted into an open-plan layout, packed with lots of people. The area I was to present in was their big “common area”, where they typically host company all-hands meetings, Friday socials and other big company-wide events. Quite, quite large. I’ve no idea how many people were there, but it felt huge and was wall-to-wall packed. The size gave an echo-y audio effect off the super-high concrete ceilings and far-distant bare concrete walls, with a weird couple of structural pillars right in the middle of the room. Despite my best intentions, during the session I found myself trying to “peer around” the pillars, aware of the people blocked from view.

It’s great to see the response from folks when slides in a presentation *exactly* hit on what is top of their minds. One section, about companies moving to multiple locations, clearly hit home with everyone… not too surprising, given the context.

Another section, about a trusted employee moving out from office to start being a 100% remote employee, hit a very personal note – there was someone in the 2nd row who was a long-trusted employee actually about to embark on this exact change. He got quite the attention from everyone around him, and we stopped everything for a few minutes to talk about his exact situation. As far as I can tell, he found the entire session very helpful, but only time will tell how things work out for him.

The very great interactions, the lively Q+A, and the crowd of questions afterwards were all lots of fun and quite informative.

Big thanks to Thomas Wilsher @ Twilio for putting it all together. I found it a great experience, and the lively discussions before, during and after led me to believe others did too.

John.

PS: For a PDF copy of the presentation, click on the smiley faces! For the sake of my poor blogsite, the much, much, larger keynote file is available on request.

http://oduinn.com/blog/2014/02/12/we-are-all-remoties-twilio/

|

|

K Lars Lohn: Today We Fight Back |

surveillance campaign.

I urge you to support the USA Freedom Act (H.R. 3361/S. 1599), an important first step in stopping mass spying, reforming the FISA court, and increasing transparency. But reform shouldn't stop there: please push for stronger privacy protections that stop dragnet surveillance of innocent users across the globe, and stop the NSA from sabotaging international encryption standards.

I'm also urging you to oppose S. 1631, the so-called FISA Improvements Act. This bill aims to entrench some of the worst forms of NSA surveillance and extend the NSA surveillance programs in unprecedented ways. It would allow the NSA to continue to collect the phone records of hundreds of millions of Americans not suspected of any crime—a program I absolutely oppose—and could expand into collecting records of Internet usage.

The NSA mass surveillance programs chill freedom of speech, undermine confidence in US Internet companies, and run afoul of the Constitution. We need reform now.

sort of government intrusion into our lives

the government should NOT be allowed to have.

What's the difference?

within his own country.

|

|

Pete Moore: Weekly review 2014-02-12 |

Achievements

This vcs2vcs sync process is now live on github-sync2.dmz.scl3.mozilla.com for all the build/* repos - currently sync’ing to https://github.com/petermoore.

The last remaining step is to disable the legacy system and point the new environment to the production target: https://github.com/mozilla.

This I hope to achieve later today, pending review approval from Aki and Hal.

After this, the priority is:

- getting the new mapper working locally, and tested

- deploying the new mapper into Elastic Beanstalk

- getting security review of new mapper

- looking at adding validation of keys (hash-based) - to ensure integrity of keys after iterative diff-pushes

Once new mapper is live, this unblocks:

- l10n vsync

- gecko-git vsync

These are the two next big vcs2vcs projects on the list.

|

|

Michelle Thorne: Webmaker Workweek: So Wow. |

Just coming home from a truly fantastic week in Toronto with the talented Webmaker team. This was the first time the Webmaker product + community teams, plus Mozilla Foundation’s engagement and operations teams, met face-to-face to hack on Webmaker.

There’s only one way to describe it (hat tip, Brett!):

Webmaker Meta

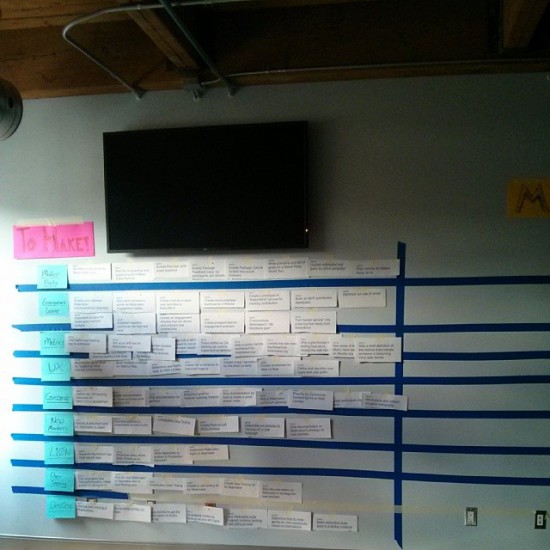

In addition to trampolining, ax-throwing and general revelry, we also shipped a lot of things. Guided by a beloved scrum board, we split into small groups to tackle big, interwoven topics.

Our new-and-improved wiki page shows how we’re building and improving the various aspects of Webmaker. (Thanks for meta-wrangling this, Matt!)

What’s also emerging is a helpful way to describe Webmaker’s offering. Lifted from Geoffrey’s notes, here’s a summary of the components that make up Webmaker:

We are clear on who Webmaker is for (people who want to teach the web), what we offer them (tools, a skills map, teaching kits, training, credentials), and the ways people take these offerings to their learners and the broader world (events, partners, a global community).

These are more interconnected and aligned than ever before. That is really exciting.

Towards More Contributors

The Mozilla Foundation’s collective goal this year is to collaborate with 10,000 contributors. A big portion of these contributors will engage with Mozilla through Webmaker.

A Webmaker contributor is anyone actively teaching, making or organizing around web literacy. Last week we got clearer on what those actions are and how we might be able to count them.

MOAR Teach the Web

In addition to shaping the engagement ladder and metrics, our small group (aka the Teach the Web team) focused on three major deliverables:

- i) teaching kits

- ii) the Web Literacy Map

- and iii) training.

Here’s a recap of what that means and what we shipped:

Teaching Kits

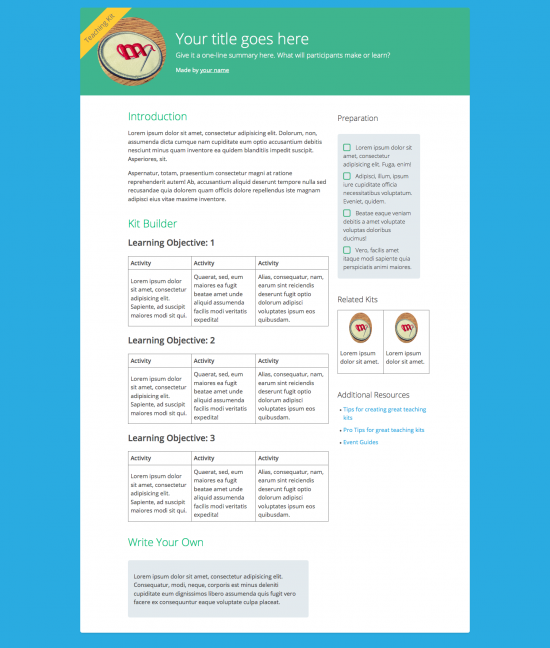

Teaching Kits are a modular collection of activities and resources about how to teach a web literacy competency or competencies.

The kits incorporate activities and resources that live on webmaker.org, as well as external resources that are tagged with the Web Literacy Map. Mentors learn how to use and make these kits in our training. Kits are also co-designed online and at live events with partners and community members.

What we shipped:

- With a new UX and simplified taxonomy, users will soon find it easier to 1) rip and read, 2) remix and 3) create new kits that align with the Web Literacy Map. The kits have always been modular (that’s their genius), but it’s been hard to use and remix them in practice. An improved layout and a simplified taxonomy mean that users better understand the structure and modularity of these offerings. There are also lots of important features that make editing easier, such as writing in Markdown.

- Thanks to Webmaker’s insightful localization team, David Humphrey and Aali, we’ll better prepare the kits for localization using Transifex, a powerful tool already helping Webmaker be translated into 50+ languages.

- Furthermore, we realized that teaching kits are best created in a co-design process. We made an example agenda for how to run an in-person co-design sprint with partners and community members, as well as how to user test the results afterward. We want to roadtest the co-design process with a few partners over the coming weeks.

Year goal:

Hundreds of new teaching kits. Tens of exemplary kits.

Web Literacy Map

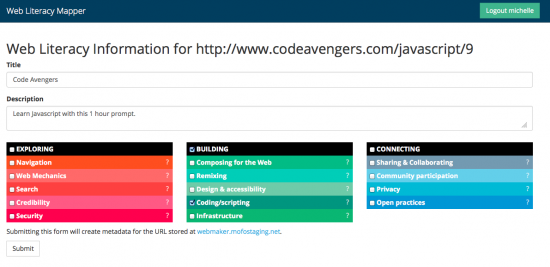

The Web Literacy Map is a flexible specification of the skills and competencies that Mozilla and our community of stakeholders believe are important to pay attention to when getting better at reading, writing and participating on the web.

The map guides mentors to find teaching kits and activities for the skills they care about. It provides a structured way to approach web literacy while also encouraging customization and expansion to fit the mentors’ and learners’ interests.

What we shipped:

- A bookmarklet that makes it easy for users to tag any resource on the web–such as a Webmaker “make” or lesson plans on an external URL–with the Web Literacy Map.

- These tagged resources will soon be discoverable and searchable on webmaker.org, as Web Literacy becomes the heart of the site’s UX. It will make it easier for users to find and use these resources, as well as create new kits that put the resources in context. Down the line, there will also be badges that align with the map.

- Furthermore, we’re drafting a whitepaper about the Web Literacy Map and how it’s part of Mozilla’s overall webmaking efforts and the general web literacy landscape.

Year end goal:

Thousands of tagged resources. An oft-cited whitepaper and influence in web literacy discourse.

Training

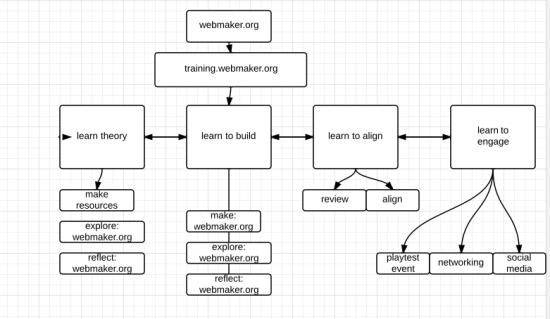

Training for Webmaker is a modular offering that mixes online and offline learning to teach mentors 1) our pedagogy and webmaking 2) how to use, remix and create new teaching kits and 3) how to align resources with the Web Literacy Map.

The trainings include additional “engagement” sections about how to run events, conduct local user-testing and even for mentors to lead a training themselves. Mentors will move seamlessly between the training content and webmaker.org, as key assignments include using and making things on Webmaker.

What we shipped:

- A course syllabus that merges the best-of content from our previous trainings while becoming more modular and interlinked to Webmaker.

- A brief for a staging platform, hosted on GitHub Pages, to be ready for an “alpha” online training in March. Importantly, this platform should let users copy an existing training, remix it and run it for their own community. We will also integrate Webmaker logins and design into the site, so that the training feels like a natural extension of webmaker.org.

- After a massive kickoff training in May, Maker Party will be ablaze with community-led trainings and targeted in-person ones around the world. We developed a timeline and an event-driven model that traces an arc from our initial training to a growing circle of Super Mentors who can facilitate their own trainings, as well as partnership pathways for running bespoke trainings for specific groups.

Year end goal:

Thousands of Webmaker mentors.

Thanks!

All of these incredible outcomes were shaped and shipped by the stellar Teach the Web team: Laura, Kat, Doug, and William. A big thank you! So wow.

Also, thanks so much to the people who joined our sessions and made this work come to life: Karen, Robert, Julia, Chris L., Paula, Atul, Cassie, Kate, and Gavin. We’re hugely indebted to your help and contributions!

We’d also love to hear your thoughts about the ideas above and how you’d like to plug in. Leave a comment here or post to our mailing list.

Rubén Martín: Apps market trends, Firefox OS and Telegram

In recent months there have been many heated discussions about Firefox OS and whether the lack of one particular app was a blocker for the whole platform. Yes, I’m talking about Whatsapp and its decision not to develop a web app/Firefox OS app at this time. Let’s see why this matters less and less over time.

Trends are just trends

In the past two weeks we have seen a surprising shift in IM app usage in some countries, especially in Spain. A new app came onto the scene and people went crazy installing it: the very same users we were told would never move away from Whatsapp, no matter what.

Telegram is an IM app with a UI almost identical to Whatsapp’s, an open API and protocol, official free and open source clients for Android and iOS, and third-party clients for Windows, Mac, Linux and the web. The point is that anyone can build their own client, the protocols are secure, and its “secret and temporary chats” let you avoid going through their servers.

Spanish-language tech blogs and press started talking about it a few weeks ago, and it went viral, really viral.

To be fair, most people installed it mainly because they don’t want to pay 1€/yr for Whatsapp, and also because their friends told them it’s faster, has secure chats where messages can self-destruct on both sides, and has desktop and web clients. It offers several features that people had been asking Whatsapp for over a long time and that were never implemented.

In fact, the success has been so big here that Telegram reported growing by 200k users per day in Spain alone, and last week it reached the #1 spot among free apps in the Android and iOS stores. I’ve been surprised to see some of my less tech-savvy contacts joining Telegram in recent weeks (60+ and counting right now).

What’s the point?

- Markets can change suddenly.

- No app should push a platform back.

- Trends are just that: trends.

- People trust their friends more than ads (and we know this very well at Mozilla).

Our goal at Mozilla with Firefox OS is to enable and empower the web as the platform, and to allow projects like Telegram to succeed if they have a vision. Projects that rely on outdated, closed and locked-in business models with no respect for user control and privacy are bound to fail over time, and users are increasingly aware of this.

I would like to encourage anyone interested in a Telegram app for Firefox OS to check out Webogram, a web app for Telegram that is close to being ready for mobile and needs more testers and web hackers.

http://www.nukeador.com/12/02/2014/app-market-trends-firefox-os-and-telegram/
