You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.


Chris Lord: Web Navigation Transitions

Wow, so it’s been over a year since I last blogged. Lots has happened in that time, but I suppose that’s a subject for another post. I’d like to write a bit about something I’ve been working on for the last week or so. You may have seen Google’s proposal for navigation transitions, and if not, I suggest reading the spec and watching the demonstration. This is something I’ve thought about for a while but never put into words. After reading Google’s proposal, I fear that it’s quite complex both to implement and to author, so this pushed me both to document my idea and to implement a proof-of-concept.

I think Google’s proposal is based on Android’s Activity Transitions, and due to Android UI’s very different display model, I don’t think this maps well to the web. Just my opinion though, and I’d be interested in hearing people’s thoughts. What follows is my alternative proposal. If you like, you can just jump straight to a demo, or view the source. Note that the demo currently only works in Gecko-based browsers – this is mostly because I suck, but also because other browsers have slightly inscrutable behaviour when it comes to adding stylesheets to a document. This is likely fixable, patches are most welcome.

Navigation Transitions specification proposal

Abstract

An API will be suggested that will allow transitions to be performed between page navigations, requiring only CSS. It is intended for the API to be flexible enough to allow animations on different pages to be performed in synchronisation, and to allow a particular transition state to be selected without it being necessary to interject with JavaScript.

Proposed API

Navigation transitions will be specified within a specialised stylesheet. These stylesheets will be included in the document as new link rel types. Transitions can be specified for entering and exiting the document. When the document is ready to transition, these stylesheets will be applied for the specified duration, after which they will stop applying.

Example syntax:

When navigating to a new page, the current page’s ‘transition-exit’ stylesheet will be referenced, and the new page’s ‘transition-enter’ stylesheet will be referenced.

When navigation is operating in a backwards direction, either because the user pressed the back button in the browser chrome or because it was initiated from JavaScript via manipulation of the location or history objects, animations will be run in reverse. That is, the current page’s ‘transition-enter’ stylesheet will be referenced and its animations will run in reverse, and the old page’s ‘transition-exit’ stylesheet will be referenced and its animations will also run in reverse.

[Update]

Anne van Kesteren suggests that forcing this to be a separate stylesheet and putting the duration information in the tag is not desirable, and that it would be nicer to expose this as a media query, with the duration information available in an @-rule. Something like this:

@viewport {
  navigate-away-duration: 500ms;
}

@media (navigate-away) {
  ...
}

I think this would indeed be nicer, though I think the exact naming might need some work.

Transitioning

When a navigation is initiated, the old page will stay at its current position and the new page will be overlaid over the old page, but hidden. Once the new page has finished loading, it will be unhidden, and the old page’s ‘transition-exit’ stylesheet and the new page’s ‘transition-enter’ stylesheet will be applied, each for its specified duration.

When navigating backwards, the CSS animations timeline will be reversed. This will have the effect of modifying the meaning of animation-direction like so:

Forwards           | Backwards
-------------------+-------------------
normal             | reverse
reverse            | normal
alternate          | alternate-reverse
alternate-reverse  | alternate

and this will also alter the start time of the animation, depending on the declared total duration of the transition. For example, if a navigation stylesheet is declared to last 0.5s and an animation has a duration of 0.25s, when navigating backwards, that animation will effectively have an animation-delay of 0.25s and run in reverse. Similarly, if it already had an animation-delay of 0.1s, the animation-delay going backwards would become 0.15s, to reflect the time when the animation would have ended.
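The backwards-navigation rules above can be sketched as a small function. This is an illustration under assumptions that are not in the proposal: times are in milliseconds (to avoid floating-point rounding), each animation runs a single iteration, and the names `backwardsTiming` and `BACKWARDS_DIRECTION` are hypothetical.

```typescript
// Sketch of the backwards-navigation timing rules; times are in milliseconds.
// Assumption: each animation runs a single iteration (animation-iteration-count: 1).

type AnimationDirection = "normal" | "reverse" | "alternate" | "alternate-reverse";

// How each animation-direction is reinterpreted when navigating backwards.
const BACKWARDS_DIRECTION: Record<AnimationDirection, AnimationDirection> = {
  normal: "reverse",
  reverse: "normal",
  alternate: "alternate-reverse",
  "alternate-reverse": "alternate",
};

interface AnimationTiming {
  direction: AnimationDirection;
  delayMs: number;    // animation-delay
  durationMs: number; // animation-duration
}

// Returns the effective timing of an animation when the navigation runs
// backwards, given the declared total duration of the transition stylesheet.
function backwardsTiming(anim: AnimationTiming, transitionMs: number): AnimationTiming {
  // Forwards, the animation ends at delay + duration; backwards, it must
  // start that long before the end of the transition.
  const forwardEndMs = anim.delayMs + anim.durationMs;
  return {
    direction: BACKWARDS_DIRECTION[anim.direction],
    delayMs: transitionMs - forwardEndMs,
    durationMs: anim.durationMs,
  };
}
```

With a 500 ms transition, a 250 ms animation with no delay gets a backwards delay of 250 ms, and one with a 100 ms delay gets 150 ms, matching the figures in the paragraph above.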

Layer ordering will also be reversed when navigating backwards, that is, the page being navigated from will appear on top of the page being navigated backwards to.

Signals

When a transition starts, a ‘navigation-transition-start’ NavigationTransitionEvent will be fired on the destination page. When this event is fired, the document will have had the applicable stylesheet applied and it will be visible, but will not yet have been painted on the screen since the stylesheet was applied. When the navigation transition duration is met, a ‘navigation-transition-end’ event will be fired on the destination page. These signals can be used, amongst other things, to tidy up and to initialise state. They can also be used to modify the DOM before the transition begins, allowing the transition to be customised based on request data.

JavaScript execution could potentially cause a navigation transition to run indefinitely; it is left to the user agent’s general-purpose JavaScript hang detection to mitigate this circumstance.

Considerations and limitations

Navigation transitions will not be applied if the new page does not finish loading within 1.5 seconds of its first paint. This can be mitigated by pre-loading documents, or by the use of service workers.

Stylesheet application duration will be timed from the first render after the stylesheets are applied. This should either synchronise exactly with CSS animation/transition timing, or it should be longer, but it should never be shorter.
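Since a transition stylesheet stops applying once its declared duration has elapsed, an animation that outlasts that duration would presumably be cut off. A hypothetical authoring-time check for this, assuming finite iteration counts and non-negative delays (and using made-up names), might look like:

```typescript
// Sketch of an authoring-time check: does a transition stylesheet's declared
// duration cover every animation it contains? Times are in milliseconds.

interface AnimationSpec {
  delayMs: number;    // animation-delay (assumed non-negative)
  durationMs: number; // animation-duration
  iterations: number; // animation-iteration-count (assumed finite)
}

// The time at which the last animation in the stylesheet finishes.
function lastAnimationEndMs(animations: AnimationSpec[]): number {
  return animations.reduce(
    (latest, a) => Math.max(latest, a.delayMs + a.durationMs * a.iterations),
    0,
  );
}

// True if the stylesheet stays applied at least as long as its animations run.
function durationCoversAnimations(declaredMs: number, animations: AnimationSpec[]): boolean {
  return lastAnimationEndMs(animations) <= declaredMs;
}
```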

Authors should be aware that using transitions will temporarily increase the memory footprint of their application during transitions. This can be mitigated by clear separation of UI and data, and/or by using JavaScript to manipulate the document and state when navigating to avoid keeping unused resources alive.

Navigation transitions will only be applied if both the navigating document has an exit transition and the target document has an enter transition. Similarly, when navigating backwards, the navigating document must have an enter transition and the target document must have an exit transition. Both documents must be on the same origin, or transitions will not apply. The exception to these rules is the first document load of the navigator. In this case, the enter transition will apply if all prior considerations are met.
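The applicability rules in this section (the 1.5 second load limit, matching enter and exit transitions in each direction, the same-origin requirement, and the first-load exception) can be summarised as a single predicate. This is a sketch only: the `TransitionDocument` shape and `transitionApplies` function are hypothetical, and the first-load exception is interpreted as requiring only the new document's enter transition.

```typescript
// Sketch of when a navigation transition applies. Names are hypothetical.

interface TransitionDocument {
  origin: string;    // e.g. "https://example.com"
  hasEnter: boolean; // declares a 'transition-enter' stylesheet
  hasExit: boolean;  // declares a 'transition-exit' stylesheet
}

type NavigationDirection = "forwards" | "backwards";

// The new page must finish loading within 1.5 s of its first paint.
const MAX_LOAD_AFTER_FIRST_PAINT_MS = 1500;

function transitionApplies(
  from: TransitionDocument | null, // null for the navigator's first document load
  to: TransitionDocument,
  direction: NavigationDirection,
  loadMsAfterFirstPaint: number,
): boolean {
  if (loadMsAfterFirstPaint > MAX_LOAD_AFTER_FIRST_PAINT_MS) return false;
  // First load: there is no old page, so only the enter transition matters.
  if (from === null) return to.hasEnter;
  if (from.origin !== to.origin) return false;
  return direction === "forwards"
    ? from.hasExit && to.hasEnter  // old page exits, new page enters
    : from.hasEnter && to.hasExit; // both stylesheets run in reverse
}
```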

Default transitions

It is possible for the user agent to specify default transitions, so that navigation within a particular origin will always include navigation transitions unless they are explicitly disabled by that origin. This can be done by specifying navigation transition stylesheets with no href attribute, or that have an empty href attribute.

Note that specifying default transitions in all situations may not be desirable due to the differing loading characteristics of pages on the web at large.

It is suggested that default transition stylesheets may be specified by extending the iframe element with custom ‘default-transition-enter’ and ‘default-transition-exit’ attributes.

Examples

Simple slide between two pages:

[page-1.html]

[page-1-exit.css]

#bg {
  animation-name: slide-left;
  animation-duration: 0.25s;
}

@keyframes slide-left {
  from {}
  to { transform: translateX(-100%); }
}

[page-2.html]

[page-2-enter.css]

#bg {
  animation-name: slide-from-left;
  animation-duration: 0.25s;
}

@keyframes slide-from-left {
  from { transform: translateX(100%); }
  to {}
}

I believe that this proposal is easier to understand and use for simpler transitions than Google’s, however it becomes harder to express animations where one element is transitioning to a new position/size in a new page, and it’s also impossible to interleave contents between the two pages (as the pages will always draw separately, in the predefined order). I don’t believe this last limitation is a big issue, however, and I don’t think the cognitive load required to craft such a transition is considerably higher. In fact, you can see it demonstrated by visiting this link in a Gecko-based browser (recommended viewing in responsive design mode Ctrl+Shift+m).

I would love to hear people’s thoughts on this. Am I actually just totally wrong, and Google’s proposal is superior? Are there huge limitations in this proposal that I’ve not considered? Are there security implications I’ve not considered? It’s highly likely that parts of all of these are true and I’d love to hear why. You can view the source for the examples in your browser’s developer tools, but if you’d like a way to check it out more easily and suggest changes, you can also view the git source repository.

http://chrislord.net/index.php/2015/04/24/web-navigation-transitions/

Cameron Kaiser: IonPower progress report

% /Applications/TenFourFoxG5.app/Contents/MacOS/js --no-ion -f run.js
Richards: 203
DeltaBlue: 582
Crypto: 358
RayTrace: 584
EarleyBoyer: 595
RegExp: 616
Splay: 969
NavierStokes: 432
----
Score (version 7): 498

% ../../../../mozilla-36t/obj-ff-dbg/dist/bin/js -f run.js
Richards: 337
DeltaBlue: 948
Crypto: 1083
RayTrace: 913
EarleyBoyer: 350
RegExp: 259
Splay: 584
NavierStokes: 3262
----
Score (version 7): 695

I've got one failing test case left to go (the other is not expected to pass because it assumes a little-endian memory alignment)! We're almost to the TenFourFox 38 port!
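The output above appears to come from the V8 Benchmark Suite (version 7), whose harness combines the individual benchmark scores into the overall score using a geometric mean. Recomputing that mean from the printed figures reproduces both totals, and shows how a single very large result (NavierStokes: 3262) can lift the second score even though EarleyBoyer, RegExp and Splay are lower. The function and variable names are illustrative.

```typescript
// Overall score as the geometric mean of the individual benchmark scores.
function geometricMean(scores: number[]): number {
  const logSum = scores.reduce((sum, s) => sum + Math.log(s), 0);
  return Math.exp(logSum / scores.length);
}

// Individual scores from the two runs above, in the order printed.
const noIonRun = [203, 582, 358, 584, 595, 616, 969, 432];
const secondRun = [337, 948, 1083, 913, 350, 259, 584, 3262];

console.log(Math.round(geometricMean(noIonRun)));  // 498
console.log(Math.round(geometricMean(secondRun))); // 695
```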

http://tenfourfox.blogspot.com/2015/04/ionpower-progress-report.html

Emma Irwin: My year on Reps Council

It’s been one year! An incredible year of learning, leading and helping evolve the Mozilla Reps program as a council member. As my term ends I want to share my experiences with those considering this same path, but also as a way to lend to the greater narrative of Reps as a leadership platform.

I could easily write 12 posts to cover the experience – but I thought this might be more helpful:

The 7 things I know for sure

(after 12 months on Reps Council)

1. Mozilla Reps Council Is a Journey of Learning and Inspiration

I assumed, when I first started council, that my workload would consist of mostly administrative tasks (although to be truthful there is a lot of that). I also assumed I would effortlessly lean on my existing leadership skills to ‘help out’ where needed. It turns out, I had a lot to learn and improve on – here are some of the new and sharpened skills I am emerging with:

- Problem solving

- Conflict Resolution/ Crisis Management

- Communication

- Strategy

- Transparency

- Project Planning

- Task Management

- Writing

- Respecting Work-Life Balance

- Debating Respectfully

- Public Speaking

- Facilitation

- The art of saying ‘no’/when to step back

- The art of ‘not dropping balls’ or knowing which balls will bounce back, and which will break

- Being brave (aka talking to leadership and with nagging imposter syndrome)

- Empathy

- Planning for Diversity

- Outreach

- Teaching

- Mentorship

2. 2015 is a (super) important year for Reps

Nurtured by the loving hands of 5 previous Reps councils, a strong mentorship structure, over 400 Reps and thousands of community members, the Mozilla Reps program has come to an important milestone as a recognized body of leadership across Mozilla. The clearly articulated vision of Reps as a ‘launch pad for leadership’ has pushed us to be more strategic in our goals. And we are. The next council, together with mentors, will be critical in executing these goals.

3. The voice of community is valued, and Mozilla is listening

In the past few months, we’ve worked with Mitchell Baker, Chris Beard, Mark Surman and David Slater, Mary-Ellen and others on everything from conflict resolution, to VP interview and on-boarding processes. Reps Council is on the Mozilla leadership page. The Mozilla Reps call has been attended by Firefox and Brand teams in need of feedback. It’s not a coincidence, and it’s not casual – your voice matters. Reps as leaders have the ear of the entire organization, because Reps are the voice of their extended community.

I encourage Rep Mentors with loud minds – to run for council this year.

4. Mozilla Reps is ever-evolving

When I joined Reps Council, I had a lot of ideas about what I would ‘fix’. And I laugh at myself now – ‘fixing’ is something we do to flaws, to errors and mistakes – but the Reps program is not an immovable force – it’s a living organism, alive with people, their ideas, inventions and actions. How we evolve, while aligning with the needs of project goals, is a bit like changing the tire on a moving car. If you are considering a run for council, it might help to envision ways you can evolve, improve and grow the program as it shifts, and in response to community vision for their own participation goals.

5. Changing minds is hard / Outreach matters

I can’t write a list like this without acknowledging my personal challenge of recognizing and trying to change ‘perception problems’. It was strange to move from what had been a fairly easy transition between community, Rep and mentor to Reps Council, where almost suddenly I was regarded as part of a bureaucratic structure. I didn’t see or feel that from my fellow council members, and it’s been important to me that we change that perception through outreach.

Perceptions of our extended community have also been challenging – the idea that Reps is somehow isolated or a special contributor group is contrary to the leadership platform we are really building.

Slowly we are changing minds, slowly outreach is making a difference – I am happy and optimistic about this.

6. Diversity Matters

Reps is an incredibly diverse community with diverse representation in many areas including age, geography and experience. Few other communities can compare. But, like much of the technology world, we struggle with the representation of women on our council and in our mentorship base. To be truly reflective of our community and our world, and to have the benefit of all perspectives, we need to encourage women leaders. As I leave council, my hope is that we will continue to prioritize women in leadership roles.

7. Our community rocks

Brilliant, creative, energetic, passionate, motivated, friends and second family. The heart of what we do, lies here.

To the Reps community, mentors, the Reps team, Mozilla leadership and community I thank you for this incredible opportunity to contribute and to grow. I plan to pay it forward.

Feature Image Credit: Fay Tandog

Air Mozilla: Privacy Lab and Cryptoparty with guest speaker Melanie Ensign - How Security/Crypto Experts Can Communicate with Non-Technical Audiences

Our April Privacy Lab will include a speaker and an optional and free Cryptoparty, hosted by Wildbee (https://wildbee.org/cryptoparty.html). Our speaker will be Melanie Ensign. Melanie's...

https://air.mozilla.org/privacy-lab-and-cryptoparty-with-guest-speaker-melanie-ensign/

L. David Baron: Thoughts on migrating to a secure Web

Brad Hill asked what I and other candidates in the TAG election think of Tim Berners-Lee's article Web Security - "HTTPS Everywhere" harmful. The question seems worth answering, and I don't think an answer fits within a tweet. So this is what I think, even though I feel the topic is a bit outside my area of expertise:

The current path of switching content on the Web to being accessed through secure connections generally involves making the content available via http URLs also available via https URLs, redirecting http URLs to https ones, and (hopefully, although not all that frequently in reality) using HSTS to ensure that the user's future attempts to access HTTP resources get converted to HTTPS without any insecure connection being made. This is a bit hacky, and hasn't solved the problem of the initial insecure connection, but it mostly works, and doesn't degrade the security of anything we have today (e.g., bookmarks or links to https URLs).
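The HSTS step mentioned above works by having the server send a `Strict-Transport-Security` header (RFC 6797); the browser remembers the host and rewrites later http URLs for it to https before connecting. A simplified sketch of such a store, assuming only the `max-age` and `includeSubDomains` directives and ignoring preload lists; the `HstsStore` class and its methods are hypothetical, not any browser's actual API.

```typescript
// Simplified HSTS store (after RFC 6797). Hypothetical API; real browsers also
// ship preload lists and handle many more parsing edge cases.

interface HstsEntry {
  expiresAtMs: number;
  includeSubDomains: boolean;
}

class HstsStore {
  private entries = new Map<string, HstsEntry>();

  // Record a Strict-Transport-Security header. Callers must only pass headers
  // received over a secure connection; the header is ignored over plain http.
  noteHeader(host: string, headerValue: string, nowMs: number): void {
    let maxAgeSeconds: number | undefined;
    let includeSubDomains = false;
    for (const raw of headerValue.split(";")) {
      const directive = raw.trim();
      const lower = directive.toLowerCase();
      if (lower.startsWith("max-age=")) {
        // The value may be quoted, e.g. max-age="31536000".
        const value = directive.slice("max-age=".length).trim().replace(/^"(.*)"$/, "$1");
        if (/^\d+$/.test(value)) maxAgeSeconds = Number(value);
      } else if (lower === "includesubdomains") {
        includeSubDomains = true;
      }
    }
    if (maxAgeSeconds === undefined) return; // max-age is required
    const key = host.toLowerCase();
    if (maxAgeSeconds === 0) {
      this.entries.delete(key); // max-age=0 tells the browser to forget the host
      return;
    }
    this.entries.set(key, { expiresAtMs: nowMs + maxAgeSeconds * 1000, includeSubDomains });
  }

  // A host is covered by its own entry, or by a parent domain's entry with includeSubDomains.
  private isKnownHstsHost(host: string, nowMs: number): boolean {
    const labels = host.toLowerCase().split(".");
    for (let i = 0; i < labels.length; i++) {
      const entry = this.entries.get(labels.slice(i).join("."));
      if (entry && entry.expiresAtMs > nowMs && (i === 0 || entry.includeSubDomains)) {
        return true;
      }
    }
    return false;
  }

  // Rewrite an http URL to https before any connection is made, if HSTS applies.
  upgrade(url: string, nowMs: number): string {
    const parsed = new URL(url);
    if (parsed.protocol === "http:" && this.isKnownHstsHost(parsed.hostname, nowMs)) {
      parsed.protocol = "https:"; // a default port 80 becomes the default port 443
      return parsed.toString();
    }
    return url;
  }
}
```

Note that this only helps after the first successful https visit, which is the remaining gap the paragraph above describes.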

It's not clear to me what the problem that Tim is trying to solve is. I think some of it is concern over the semantic Web (e.g., his concern over the “identity of the resource”), although there may be other concerns there that I don't understand. I'd tend to prioritize the interests of the browseable Web (with users counted in the billions) and other uses of the Web that are widespread, over those of the semantic Web.

There are good reasons for the partitioning that browsers do between http and https:

- Some of the partitioning prevents attacks directly (for example, sending a cookie that should be sent only to an https site to its http equivalent could allow an active attacker to steal the information in that cookie). Likewise for many other attacks involving the same-origin policy, where http and https are considered different origins.

- Some of it (e.g., identifying https pages that load resources over http as insecure) is intended to prevent large classes of mistakes that would otherwise be widespread and drastically reduce the security of the Web. Circa 2000, a common Web developer complaint about browser security UI was that a site couldn't be considered secure if an image was loaded over HTTP. This might have been fine if the image was the company logo (and the attack under consideration was avoiding theft of money or credentials rather than avoiding monitoring), but isn't fine if the image is a graph of a bank account balance or if the image's URL has authentication information in it. (On the other hand, if it were a script rather than an image, an active attacker could compromise the entire page if the script could be loaded without authentication.) I think a similar rationale applies for not having mechanisms to do authentication without encryption (even though there are many cases where that would be fine).
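The two kinds of partitioning above can be illustrated with a short sketch: origins compare scheme, host and port, so http and https versions of a site are different origins; and http subresources on https pages are split into passive and active mixed content, roughly along the lines of the W3C Mixed Content specification's optionally-blockable/blockable categories. The function names and the reduced list of resource kinds are assumptions for illustration.

```typescript
// An origin is the (scheme, host, port) triple; URL.origin serialises it, so
// http://example.com and https://example.com are different origins.
function sameOrigin(a: string, b: string): boolean {
  return new URL(a).origin === new URL(b).origin;
}

type ResourceKind = "image" | "audio" | "video" | "script" | "stylesheet" | "iframe";
type MixedContentVerdict = "not-mixed" | "optionally-blockable" | "blockable";

// A resource is mixed content when an https page loads it over http. Passive
// content (images, audio, video) may be shown with a warning; active content
// such as scripts, which could take over the whole page, is blocked.
function classifyMixedContent(
  pageUrl: string,
  resourceUrl: string,
  kind: ResourceKind,
): MixedContentVerdict {
  const page = new URL(pageUrl);
  const resource = new URL(resourceUrl);
  if (page.protocol !== "https:" || resource.protocol !== "http:") return "not-mixed";
  const passive = kind === "image" || kind === "audio" || kind === "video";
  return passive ? "optionally-blockable" : "blockable";
}
```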

It's not clear to me how Tim's proposal of making http secure would address these issues (and keep everything else working at the same time). For example, is a secure-http page same-origin with insecure-http on the same host, or with https, or neither? They may well be solvable, but I don't see how to solve them off the top of my head, and I think they'd need to be solved before actually pursuing this approach.

One problem that I think is worth solving is that HTTPS as a user-presentable prefix has largely failed. Banks tell their customers to go to links like "bofa.com/activate" or "wellsfargo.com/activate". (The first one doesn't even work if the user adds "https://". I guess there's a chance that the experience of existing users could be fixed with HSTS, but that's not the case today.) They do this for a good reason; each additional character (especially the strange characters) is going to reduce the chance the user succeeds at the task.

It's possible Tim's proposal might help solve this, although it's not clear to me how it could do so with an active man-in-the-middle attacker. (It could help against passive attackers, as could browsers trying https before trying http.) In the long term, maybe the Web will get to a point where typing such URLs tries https and doesn't try http, but I think we're a long way away from a browser being able to do that without losing a large percentage of its users.

I think I basically understand the current approach of migrating to secure connections by migrating to https, which seems to be working, although slowly. I'm hopeful that Let's Encrypt will help speed this up. It's possible that the approach Tim is suggesting could lead to a faster migration to secure connections on the Web, although I don't see enough in Tim's article to evaluate its security and feasibility.

Air Mozilla: German speaking community bi-weekly meeting

Bi-weekly meeting of the German-speaking community.

https://air.mozilla.org/german-speaking-community-bi-weekly-meeting-20150423/

Jeff Walden: Specialty plates in circuit courts, and the parties’ arguments at the Supreme Court

Yesterday I discussed the background to Walker v. Texas Division, Sons of Confederate Veterans. Stated briefly, Texas denied Texas SCV’s application for a specialty license plate with a Confederate flag on it, because the design might be “offensive”. The question is whether Texas is required by the First Amendment to grant the application.

Today I discuss how specialty plate programs have fared in lower courts, and the arguments Texas and Texas SCV bring to the case.

In the courts

Almost every circuit court has required that specialty plate programs be viewpoint-neutral, not restricting designs because of their views. (And the one exception judged a program without an open invitation for designs.) So it’s unsurprising that Texas SCV won its Fifth Circuit case.

Texas appealed to the Supreme Court, which agreed to answer two questions. Are specialty plate programs “government speech” that need not be viewpoint-neutral, such that the design can be rejected as “offensive” (or, indeed, for almost any reason)? And did Texas discriminate by viewpoint in rejecting Texas SCV’s design?

Texas’s argument

Texas says license plates are entirely the government speaking, and it can say or not say whatever it wants. Texas relies on two cases: Pleasant Grove City v. Summum, in which a city’s approval of a limited set of monuments in its city park (and denial of a particular monument) was deemed government speech; and Johanns v. Livestock Marketing Association, in which a government beef-promotion plan that exacted a fee from beef producers to support speech (including the Beef. It’s What’s For Dinner tagline) was deemed government speech that program participants couldn’t challenge on the grounds that it compelled them to speak.

According to Texas, its specialty plates are government speech because Texas “effectively control[s]” the whole program. What matters is whether Texas “exercises final approval authority over every word used” — and it does. Texas allows private citizens to participate, but it has “final approval authority” over every design. Texas also argues that it can’t be compelled to speak by displaying the Confederate flag. By making a license plate, the state’s authority backs (or doesn’t back) every design approved or rejected. Plate purchasers shouldn’t be able to force Texas to espouse the views of an unwanted specialty plate, which drivers would then ascribe to Texas.

And of course, Texas says ruling against them would lead to “untenable consequences”. For every “Stop Child Abuse” plate there’d have to be an opposing plate supporting child abuse, and so on for the whole parade of horribles. Texas particularly notes that the Eighth Circuit forced Missouri to let the Ku Klux Klan join the state’s Adopt-a-Highway program under this logic. (The person behind me in the oral argument line related that one of the highways entering Arkansas was adopted by the KKK under that rule, giving Arkansas visitors that delightful first impression of the state.)

Texas also asserted that assessing how members of the public view a specialty plate is “an objective inquiry”, so that deciding a specialty plate “might be offensive” doesn’t discriminate on the basis of the specialty plate’s viewpoint. As to the Fifth Circuit’s criticism of the “unbridled discretion” provided by the “might be offensive” bar, Texas instead describes it as “discriminating among levels of offensiveness”, such latitude permitted because the state is “assisting speech”.

Texas SCV’s argument

Texas SCV says Texas is being hypocritical. The Capitol gift shop sells Confederate flags. Texas recognizes a state Confederate Heroes Day. It maintains monuments to Confederate soldiers. Either Texas doesn’t really think the Confederate flag is offensive to the public, or its other “government speech” is flatly inconsistent with its specialty-plate stance.

Texas SCV also distinguishes the plates designed by the state legislature from plates designed by private entities. The former are the product of the government, but the only government involvement in the latter is in approval or disapproval. The driver has ultimate control, because only when he designs a plate and ultimately drives a vehicle with it does speech occur. And under Wooley v. Maynard — a case where a Jehovah’s Witness protested New Hampshire’s fining of people who covered up “Live Free or Die” on their license plates, and the Court said New Hampshire couldn’t force a person to espouse the state motto — it’s the individual’s speech (at least for non-legislatively-designed plates).

Texas SCV brushes off Summum and Johanns. Permanent monuments in parks have always been associated with the government, because parks physically can’t accommodate all monuments. Not so for license plates. (And Texas’s $8000 deposit covers startup costs that might justify treating rare plates differently.) And while the beef-promotion messages were part of a “coordinated program” by government to “advance the image and desirability of beef and beef products”, privately-designed specialty plates are not, especially as their fully contradictory messages are “consistent” only as a fundraiser.

Finally, given that privately-designed specialty plates are private speech, the First Amendment requires that restrictions be viewpoint-neutral. By restricting Texas SCV’s message based on its potential for offensiveness, Texas endorsed viewpoints that deem the Confederate flag racist and discriminated against viewpoints that do not.

Tomorrow, analysis of Texas’s government speech and compelled speech arguments.

Edward Lee: Whys and Hows of Suggested Tiles

As Darren discussed on Monday’s project call [wiki.mozilla.org], Suggested Tiles has been on track to go live to a larger audience next week for wider beta testing. I’ll provide some context around why we implemented this feature, details of how it works, and open questions of how to make it even better.

We’ve been looking for ways to improve the user experience within Firefox by combining data that Firefox knows about with data Mozilla can provide. We’re also in a good position to work with the advertising ecosystem so that we can change it to care more about the values Mozilla cares about. We want to create advertising products that respect users’ choice and control over their data, and get others interested by showing that money can be made.

Last November, we launched Tiles with a framework to show external content within Firefox’s new tab page. A relatively small portion of Firefox users saw these because they only showed up if there would have been empty tiles, i.e., new users with little history or existing users who cleared history. Suggested Tiles expands on this to be a little bit smarter by showing content based on the user’s top sites. For example, if a user tends to visit sites about mobile phones, Firefox can now decide to show a suggestion for Firefox for Android.

We intend to bring value to users by showing them content that they would be interested in and engage with. On the flip side, this means we purposefully hold back on showing content that users might get annoyed with and block. We do this in a way that requires using a minimal amount of the user’s data, and as usual, we provide controls to the user to turn things off if that’s desired. For the initial release of Suggested Tiles, we plan to show content from Mozilla such as mobile Firefox, MDN, and HTML5 gaming.

The Tiles framework has been built in ways that are different from traditional web advertising in both how it gets data into Firefox [blog.mozilla.org] and how it reports on Tiles performance. The two linked posts have quite a bit of detail, but to summarize, Firefox makes generic encrypted cookieless requests to get enough data to decide locally in Firefox whether content should be added to the new tab page. In order for us to have data on how to improve the experience, Firefox reports back when users see and click on these tiles and includes tile data such as IDs of added content but no URLs. We have aggressive data deletion policies and don’t keep any unique identifiers that can be associated with our users.

The technical changes to support Suggested Tiles are not overly complex as the server provides one additional value specifying when a suggestion should be shown. This value informs Firefox which sites need to be in the user’s top sites before showing the tile. The reporting mechanism is unchanged, so if a Suggested Tile is shown or clicked, Firefox reports back the tile’s ID and no URLs just as before.

Even though the technical changes are not too complicated, the effect of this can be significant. In particular, Firefox reports back if it shows a Suggested Tile, and if that tile is only shown when the user has been to one of various news sites, Firefox reveals to our servers that this user reads news. Our handling of the data is no different from before.

Because we care about user privacy, we have policies around how and what suggestions can be made. For example, to match on news sites, we make sure there are at least 5 popular news sites for Firefox to check against. This gives the user deniability about which of those sites they actually visited. We also focus on broad, uncontroversial topics, so we don’t make suggestions based on adult content or illegal gambling sites.

An additional layer of protection is built into Firefox by only allowing predefined sets of sites [hg.mozilla.org] for making suggestions. These include mobile phones, technology news, web development, and video games. This rigidness protects Firefox from accepting fake/malicious suggestions that could reveal data Mozilla doesn’t want to collect.
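The client-side rules described above (one extra server-provided list of sites that must appear among the user's top sites, a minimum of 5 such sites, and acceptance of predefined site sets only) can be sketched as follows. The field name `triggerSites`, the exact-set matching rule, and enforcing the 5-site minimum in the client are all assumptions for illustration, not Firefox's actual implementation; the real code is in the source linked below.

```typescript
// Sketch of client-side checks for a Suggested Tile. Field names, site sets
// and the exact matching rule are illustrative assumptions.

interface SuggestedTile {
  id: number;
  // Hypothetical server-provided value: sites, any of which must be among
  // the user's top sites before the tile is shown.
  triggerSites: string[];
}

// Minimum number of trigger sites, so a shown tile doesn't reveal which
// single site the user visited.
const MIN_TRIGGER_SITES = 5;

// Canonical form of a site list: lower-cased, de-duplicated, sorted.
function canonicalSites(sites: string[]): string[] {
  return Array.from(new Set(sites.map((s) => s.toLowerCase()))).sort();
}

function sameSites(a: string[], b: string[]): boolean {
  const ca = canonicalSites(a);
  const cb = canonicalSites(b);
  return ca.length === cb.length && ca.every((site, i) => site === cb[i]);
}

// Accept only suggestions whose trigger sites exactly match a built-in set.
function isAllowedSuggestion(tile: SuggestedTile, predefinedSets: string[][]): boolean {
  if (canonicalSites(tile.triggerSites).length < MIN_TRIGGER_SITES) return false;
  return predefinedSets.some((set) => sameSites(set, tile.triggerSites));
}

// topSites is assumed to contain lower-cased host names.
function shouldShowTile(tile: SuggestedTile, topSites: Set<string>, predefinedSets: string[][]): boolean {
  return (
    isAllowedSuggestion(tile, predefinedSets) &&
    tile.triggerSites.some((site) => topSites.has(site.toLowerCase()))
  );
}
```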

This last point is important to highlight because we want to have a discussion around how we can be more flexible in showing more relevant content and fixing mistakes. For example, people who care about video games might only care about a specific gaming platform, but because Firefox only allows for the predefined sites, we would end up suggesting content that many users didn’t actually want to see.

Feel free to respond with comments about Suggested Tiles or to join in on the discussion about various topics on dev.planning [groups.google.com]:

- Improving trust and transparency for Suggested Tiles

- Ideas for policies to protect Suggested Tiles users’ data

- Suggesting tiles by region with finer granularity than country

- Potential privacy issues of not showing suggestions in certain contexts

As usual, we have the source code available for Firefox [hg.mozilla.org] and our servers that send/receive tiles data [github.com], create tiles, and process tiles data. You can also find additional details in the Directory Links Architecture and Data Formats documentation [people.mozilla.org].

http://ed.agadak.net/2015/04/whys-and-hows-of-suggested-tiles

|

|

Air Mozilla: Community Education Call |

The Community Education Working Group exists to merge ideas, opportunities, efforts and impact across the entire project through Education & Training.

|

|

William Lachance: PyCon 2015 |

So I went to PyCon 2015. While I didn’t leave quite as inspired as I did in 2014 (when I discovered IPython), it was a great experience and I learned a ton. Once again, I was incredibly impressed with the organization of the conference and the diversity and quality of the speakers.

Since Mozilla was nice enough to sponsor my attendance, I figured I should do another round up of notable talks that I went to.

Technical stuff that was directly relevant to what I work on:

- To ORM or not to ORM (Christine Spang): Useful talk on when using a database ORM (object-relational mapper) can be helpful and even faster than using a database directly. I feel like there’s a lot of misinformation and FUD on this topic, so this was refreshing to see. video slides

- Debugging hard problems (Alex Gaynor): Exactly what it says — how to figure out what’s going on when things aren’t behaving as they should. Great advice and wisdom in this one (hint: take nothing for granted, dive into the source of everything you’re using!). video slides

- Python Performance Profiling: The Guts And The Glory (Jesse Jiryu Davis): Quite an entertaining talk on how to properly profile Python code. I really liked his systematic and realistic approach, which also covered the thought process behind how to do this (hint: again it comes down to understanding what’s really going on). Unfortunately the video is truncated, but even the first few minutes are useful. video

Non-technical stuff:

- The Ethical Consequences Of Our Collective Activities (Glyph): A talk on the ethical implications of how our software is used. I feel like this is an under-discussed topic: how can we know that the results of our activity (programming) serve others and do no harm? video

- How our engineering environments are killing diversity (and how we can fix it) (Kate Heddleston): This was a great talk on how to make the environments in which we develop more welcoming to under-represented groups (women, minorities, etc.). This is something I’ve been thinking a bunch about lately, especially in the context of expanding the community of people working on our projects in Automation & Tools. The talk had some particularly useful advice (to me, anyway) on giving feedback. video slides

I probably missed out on a bunch of interesting things. If you also went to PyCon, please feel free to add links to your favorite talks in the comments!

|

|

Mozilla Release Management Team: 38.0 & 38.0.5: An update on the upflits and branches |

During the 38 cycle, we are going to publish a release between 38 & 39 (called 38.0.5).

In order to continue the development of 38 & 38.0.5 in parallel, we merged mozilla-beta (m-b) into mozilla-release (m-r).

Before:

- m-b = 38.0.0 beta

- m-r = 37.0.2

Now:

- m-b = 38.0.5 beta (even if we won't build any for now)

- m-r = 38.0 beta (next one being beta7)

We will do regular m-r => m-b merges to make sure 38.0.5 is up to date.

This does not impact aurora (aka 39). In case we have to make a new 37 dot release, we would use a relbranch.

The m-b tree is closed to avoid any confusion.

Last but not least, uplift requests for 38 should be filed against mozilla-release, and uplift requests for 38.0.5 against mozilla-beta. However, release managers and sheriffs will translate the information if an uplift request targets the wrong branch.

The schedule has been updated.

http://release.mozilla.org/38/schedule/2015/04/23/update-branch.html

|

|

Ian Bicking: A Product Journal: As A Building Block |

I’m blogging about the development of a new product in Mozilla, look here for my other posts in this series

I teeter between thinking big about PageShot and thinking small. The benefit of thinking small is: how can this tool provide value to people who wouldn’t know if it would provide any value? And: how do we get it done?

Still, I can’t help but think big too. The web gave us this incredible way to talk about how we experience the web: the URL. An incredible amount of stuff has been built on that, search and sharing and archiving and ways to draw people into content and let people skim. Indexes, summaries, APIs, and everyone gets to mint their own URLs and accept anyone else’s URLs, pointing to anything.

But not everyone gets to mint URLs. Developers and site owners get to do that. If something doesn’t have a URL, you can’t point to it. And every URL is a pointer, a kind of promise that the site owner has to deliver on, and sometimes doesn’t choose to, or they lose interest.

I want PageShot to give a capability to users, the ability to address anything, because PageShot captures the state of any page at a moment, not an address so someone else can try to recreate that page. The frozen page that PageShot saves is handy for things like capturing or highlighting parts of the page, which I think is the feature people will find attractive, but that’s just a subset of what you might want to do with a snapshot of web content. So I also hope it will be a building block. When you put content into PageShot, you will know it is well formed, you will know it is static and available, you can point to exact locations and recover those locations later. And all via a tool that is accessible to anyone, not just developers. I think there are neat things to be built on that. (And if you do too, I’d be interested in hearing about your thoughts.)

http://www.ianbicking.org/blog/2015/04/product-journal-building-block.html

|

|

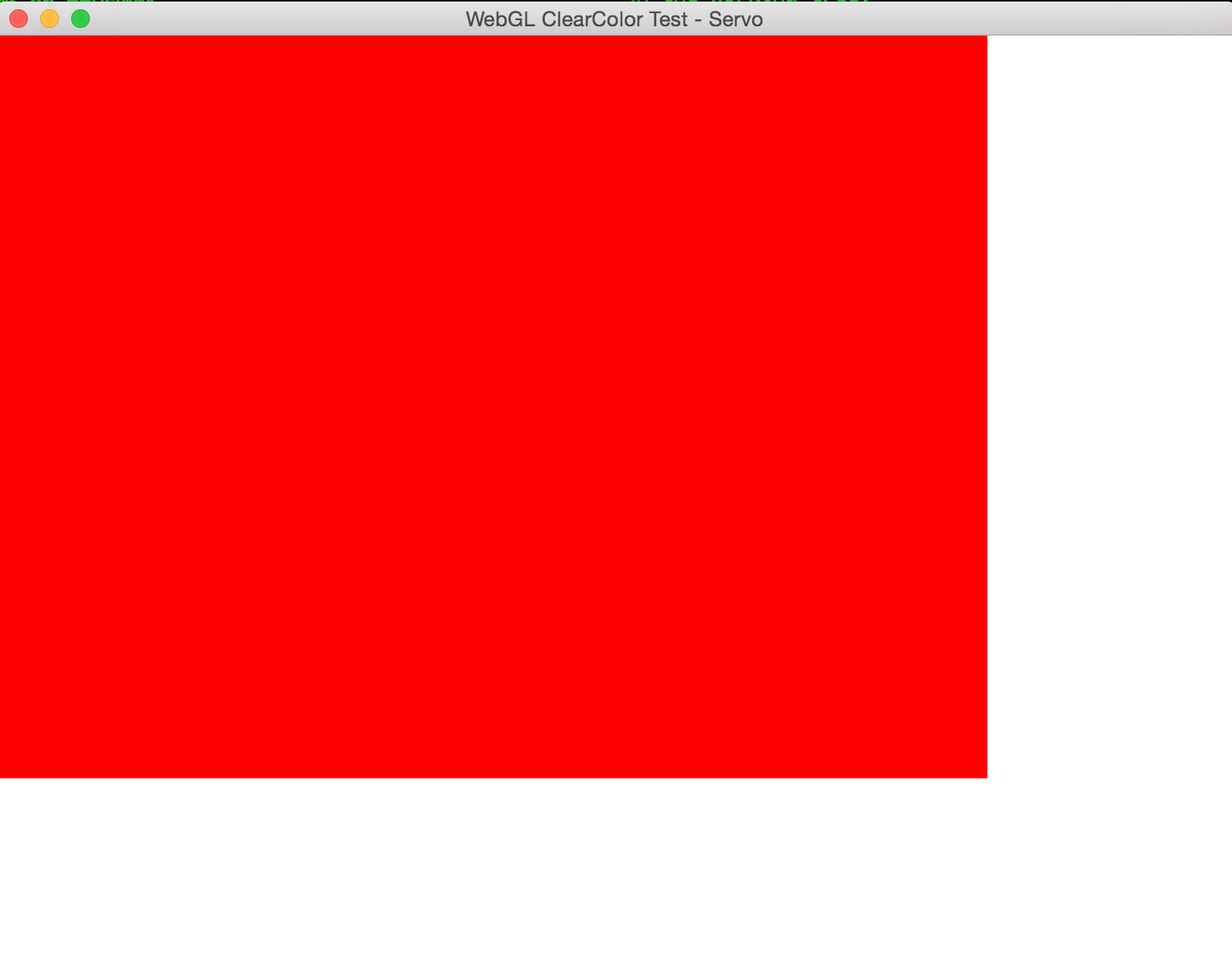

The Servo Blog: This Week In Servo 31 |

In the past two weeks, we merged 100 pull requests

Lars and Mike wrote a blog post on Servo on the Samsung OSG blog

Notable additions

- Diego started off our WebGL implementation

- Patrick improved scrolling performance

- Guro Bokum added timeline support to our Firefox devtools implementation

- Mátyás added support for rectangles, lineCap/lineJoin, global compositing/blending, clipping path and save/restore for canvas

- Ms2ger added support for Servo-specific wpt tests, the replacement for our contenttest suite, which was removed

- Josh implemented child reparenting and node removal

- Joe Seaton extended CSS animation support to most CSS properties

- Patrick tweaked block size computation for height:auto elements with padding and layout of nested elements with different vertical-align

- Ms2ger added a JS runtime struct

- Anthony Ramine made another set of DOM changes

- Adenilson implemented the CSS blur filter. He also implemented support for displaying the Firefox icon for broken image links, as a follow-up to loading a placeholder.

- Adenilson implemented dumping of optimized display lists. Together with dumping of regular display lists, the flow tree and reflow events, this makes debugging layout bugs in Servo easier.

New contributors

Screenshots

This is a simple demo of our new WebGL support.

Meetings

- We’re trying out Reviewable for code review instead of Critic. It’s pretty neat!

- Homu is working out very well for us

- We ought to have some new team members soon!

- Integration with the Firefox timeline devtool has landed

|

|

Jeff Walden: Texas specialty license plates |

Yesterday I discussed the second Supreme Court oral argument I attended in a recent trip to the Supreme Court. Today I describe the basic controversy in the first oral argument I attended, in a case potentially implicating the First Amendment. First Amendment law is complicated, so this is the first of several posts on the case.

Texas specialty license plates

State license plates, affixed to vehicles to permit legal use on public roads, typically come in one or very few standard designs. But in many states you can purchase a specialty plate with special imagery, designs, coloring, &c. (Specialty plates are distinct from “vanity” plates. A vanity plate has custom letters and numbers, e.g. a vegetarian might request LUVTOFU.) Some state legislatures direct that specialty designs delivering particular messages be offered. And some state legislatures enact laws that permit organizations or individuals to design specialty plates.

The state of Texas sells both legislatively-requested designs and designs ordered by organizations or individuals. (The latter kind require an $8000 bond, covering ramp-up costs until a thousand plates are sold.) The DMVB evaluates designs for compliance with legislated criteria: for example, reflectivity and legibility concerns. One criterion allows (but does not require) Texas to reject “offensive” plates.

The department may refuse to create a new specialty license plate if the design might be offensive to any member of the public.

An “offensive” specialty plate design

Texas rejected one particular design for just this reason. As they say, a picture is worth a thousand words:

For those unfamiliar with American imagery: the central feature of the Texas SCV insignia is the Confederate flag. Evoking many things, but in some minds chiefly representative of revanchist desire to resurrect Southern racism, Jim Crow, and the rest of that sordid time. Such minds naturally find the Confederate flag offensive.

Is the SCV actually racist? (Assuming you don’t construe mere use of the flag as prima facie evidence.) A spokesman denies the claim. Web searches find some who disagree and others who believe it is (or was) of divided view. I find no explicit denunciation of racism on the SCV’s website, but I searched only very briefly. Form your own conclusions.

Tomorrow, specialty plate programs in the courts, and the parties’ arguments.

http://whereswalden.com/2015/04/22/texas-specialty-license-plates/

|

|

Air Mozilla: Product Coordination Meeting |

Duration: 10 minutes This is a weekly status meeting, every Wednesday, that helps coordinate the shipping of our products (across 4 release channels) in order...

https://air.mozilla.org/product-coordination-meeting-20150422/

|

|

Air Mozilla: The Joy of Coding (mconley livehacks on Firefox) - Episode 11 |

Watch mconley livehack on Firefox Desktop bugs!

https://air.mozilla.org/the-joy-of-coding-mconley-livehacks-on-firefox-episode-11/

|

|

Mozilla Release Management Team: Firefox 38 beta5 to beta6 |

A smaller beta release.

In this release, we disabled screen sharing (it will arrive with 38.0.5); reading list and reading view are going to be disabled in beta 7. We also took some stability fixes (as usual) and some polishing patches.

- 32 changesets

- 71 files changed

- 857 insertions

- 313 deletions

| Extension | Occurrences |
|---|---|
| js | 25 |
| cpp | 11 |
| jsm | 7 |
| java | 6 |
| mn | 5 |
| ini | 4 |
| h | 4 |
| html | 2 |
| css | 2 |
| xul | 1 |
| list | 1 |
| json | 1 |
| idl | 1 |

| Module | Occurrences |
|---|---|
| browser | 29 |
| toolkit | 9 |
| mobile | 7 |
| dom | 7 |
| js | 6 |
| testing | 4 |
| layout | 3 |
| widget | 2 |
| modules | 1 |
| media | 1 |
| docshell | 1 |
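Tables like the two above are per-file tallies over the changed paths. A minimal Python sketch of one way to produce them, assuming the list of changed file paths is already available and that "module" means the top-level directory of each path; both the function and that definition are assumptions for illustration, not the release team's actual tooling.

```python
import posixpath
from collections import Counter


def tally(paths: list[str]) -> tuple[Counter, Counter]:
    """Count changed files by extension and by top-level directory ("module")."""
    extensions: Counter = Counter()
    modules: Counter = Counter()
    for path in paths:
        # splitext returns ".js" etc.; strip the dot to match the table labels.
        ext = posixpath.splitext(path)[1].lstrip(".")
        if ext:
            # Files without an extension are skipped in the extension tally.
            extensions[ext] += 1
        # Assumed definition of "module": the first path component.
        modules[path.split("/", 1)[0]] += 1
    return extensions, modules
```

For example, `tally(["browser/base/content/browser.js", "dom/base/nsGlobalWindow.cpp"])` counts one `js` and one `cpp` file, split across the `browser` and `dom` modules.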

List of changesets:

| Author | Changeset |
|---|---|
| Carsten Tomcat Book | Bug 1155679 - "mozharness update to ref 4f1cf3369955" on a CLOSED TREE . r=ryanvm, a=test-only - 6103268d785d |
| Terrence Cole | Bug 1152177 - Make jsid and Value pre barriers symetrical. r=jonco, a=abillings - d79194507f32 |
| Mats Palmgren | Bug 1153478 - Part 1: Add nsInlineFrame::StealFrame and make it deal with being called on the wrong parent for aChild (due to lazy reparenting). r=roc, a=sledru - 18b8b10f2fbd |
| Mats Palmgren | Bug 1153478 - Part 2: Remove useless assertions. r=roc, a=sledru - e1dd0d7756c5 |
| Mike Shal | Bug 1152031 - Bump mozharness.json to 23dee28169d6. a=test-only - 4411b07ee6bd |
| Gijs Kruitbosch | Bug 1153900 - Fix IE cookies migration. a=sylvestre - 55837b9aa111 |
| Jim Chen | Bug 1072529 - Only create GeckoEditable once. r=esawin, a=sledru - 69e54b268783 |
| Paul Adenot | Bug 1136360 - Take into account the output device latency in the clock, and be more robust about rounding error accumulation, in cubeb_wasapi.cpp. r=kinetik, a=sledru - fff936b47a9f |
| Mike de Boer | Bug 1155195 - Disable Loop screensharing for Fx38. r=Standard8, a=sledru - 6a5c3aa5b912 |
| Gijs Kruitbosch | Bug 1153900 - add fixes to tests for aurora, rs=me, a=RyanVM - b158e9bdd8a0 |
| Robert Strong | Bug 1154591 - getCanStageUpdates has incorrect checks for Windows. r=spohl, a=sledru - 86d3b1103197 |
| Ed Lee | Bug 1152145 - Filter for specific suggested tiles adgroups/buckets/frecent_sites lists with display name [r=adw, a=sylvestre] - e66ad17db13f |
| Gijs Kruitbosch | Bug 1147487 - Don't bother sending reader mode updates when isArticle is false. r=margaret, a=sledru - 125ec6c54576 |
| Ehsan Akhgari | Bug 1151873 - Stop forcing text/plain-only content being copied to the clipboard when an ancestor of the selected node has significant whitespace. r=roc, a=sledru - 7e31d76c4d7b |
| Margaret Leibovic | Bug 785549 - Use textContent instead of innerHTML to set domain and credits in reader view. r=Gijs, a=sledru - 38e095acde46 |
| Paul Kerr [:pkerr] | Bug 1154482 - about:webrtc intermittently throws a js type error. r=jib, a=sledru - 899ee022ed4c |
| Jared Wein | Bug 1134501 - UITour: Force page into Reader View automatically whenever the ReaderView/ReadingList tour page is loaded. r=gijs, a=dolske - e5d6dc48f6de |
| Gijs Kruitbosch | Bug 1152219 - Make reader mode node limit a pref, turn off entirely for desktop because of isProbablyReaderable. r=margaret, a=sledru - 4a98323f8e68 |
| Gijs Kruitbosch | Bug 1124217 - Don't gather telemetry for windows that have died. r=mconley, a=sledru - 849bf3c58408 |
| Blake Winton | Bug 1149068 - Use the correct font for the Sans Serif font button. ui-r=maritz, r=jaws, r=margaret, a=sledru - 44de10db57a6 |
| Gijs Kruitbosch | Bug 1155692 - Include latest Readability/JSDOMParser changes into m-c. a=sledru - eb5e2063637b |
| Bas Schouten | Bug 1150376 - Do not try to use D3D11 for popup windows. r=jrmuizel, a=sledru - 746934eab883 |
| Bas Schouten | Bug 1155228 - Only use basic OMTC for popups when using WARP. r=jrmuizel, a=sledru - 4dc8d874746b |
| Olli Pettay | Bug 1153688 - Treat JS Symbol as void on C++ side of Variant. r=bholley, a=abillings - 18af6cfb3b86 |
| Chenxia Liu | Bug 1154980 - Localize first run pager titles. r=ally, a=sledru - 65cf03fc2bc9 |
| Gijs Kruitbosch | Bug 1141031 - Fix in-content prefs dialogs overflowing. r=jaws, a=sledru - 9117f9af554e |
| Boris Zbarsky | Bug 1155788 - Make the Ion inner-window optimizations work again. r=efaust, a=sledru - e4192150f53a |
| Gijs Kruitbosch | Bug 1150520 - Disable EME for Windows XP. r=dolske, a=sledru - 704989f295eb |
| Luke Wagner | Bug 1152280 - OdinMonkey: tighten changeHeap mask validation. r=bbouvier, a=abillings - 5dc0d44c8dbd |
| Boris Zbarsky | Bug 1154366 - Pass in a JSContext to StructuredCloneContainer::InitFromJSVal so it will throw its exceptions somewhere where people might see them. r=bholley, ba=sledru - 72f1b4086067 |
| Ryan VanderMeulen | Bug 1150376 - Fix rebase typo. a=bustage - f3dd042acc18 |
| Ralph Giles | Bug 1144875 - Disable EME on ESR releases. r=dolske, a=sledru - 630336da65f2 |

http://release.mozilla.org/statistics/38/2015/04/22/fx-38-b5-to-b6.html

|

|

Mozilla Science Lab: Introducing Mozilla Science Study Groups |

For a long time now, I’ve been thinking about three big challenges in open science:

- Coding is hard enough by any measure – coding for sharing & reuse is even more demanding. Given that our traditional education system isn’t yet imparting these skills to scientists & researchers, and given that it takes sustained practice over a long time to integrate these skills into our research, how can we help build those skills at scale?

- Many students and early career researchers feel intensely isolated and unsupported in their efforts to learn to code, leading to fear of embarrassment before their colleagues, struggles with imposter syndrome, and uncertainty on how or even if to proceed with their research careers.

- The production of open source software in support of open science is not enough on its own; we also need to lower the barriers to discoverability and collaboration so that those projects actually get reused, as was done at the NCEAS Codefest last year – but we need to do it at scale and at home, without requiring expensive trips to conferences.

At some level, these are all the same problem: they are all endemic to a fragmented community. Taken all together, the scientific community has a huge amount of programming knowledge; but it’s split up across individuals that rarely have the opportunity to share that knowledge. Crippling self doubt often arises not from genuine inadequacy, but a loss of perspective that comes from working in isolation where it becomes possible to imagine that we are the worst of all our peers. And as we saw at the NCEAS event, the so-called discoverability problem evaporates very quickly with even a small group of people pooling their experience.

The skills & knowledge we need are there in pieces – we have to find a way to assemble them in a way that elevates us all. The Mozilla Science Lab thinks we can do this via a loose federation of Study Groups.

Our Powers Combined

I started thinking about Study Groups last Autumn, after a conversation with Rachel Sanders (PyLadies San Francisco); Sanders described regular small PyLadies meetups where learners would support each other as they explored a tutorial, project or idea, where emphasis was on communal, participatory learning, lecturing and leadership roles took a distant back seat, and learning occurred over the long term. By blending these ideas with something like a journal club familiar to many academics, I think we can build Study Groups that powerfully address the questions I started with. I’d like Study Groups to do a few things:

- Promote learning via a network effect of skill sharing. By highlighting the authentic, practice-driven use of code, tools and packages led by the researchers who actually use them in the wild, we create an exchange of skills that scales, grows richer and tracks real scientific practice the more people participate.

- Create and normalize a custom of discussing code as a research object. Scientists and researchers need forums where the focus is on code and the methodologies surrounding it, in order to create space for the conversations that lead to discovering new tools and improving personal practice.

- Acknowledge the ongoing process of learning to code by putting that learning process out in the open & making it shared among colleagues, in order to dispel the misconception that these skills are intuitive, obvious, or in any way inherent.

In practice, these things can be achieved by getting together in an open meetup anywhere from once a month to once a week, where individuals can lead follow-along demos, have a co-working space to explore and experiment together, and everyone feels comfortable asking the group for ideas and help.

Predecessors & Beta Tests

A number of powerful examples of similar groups predate this project, and I had the good fortune to learn from them over the past several months. Noam Ross leads the Davis R User’s Group, a tremendously successful R meetup that has generated a wealth of teaching content on R over the past few years; Ross also organized a recent Ask Us Anything panel on the Mozilla Science Forum, and invited the leads from a number of different similar programs to sit in and share their stories and experiences. I met Rob Johnson and others behind Data Science Hobart while I was in Australia recently; DaSH is doing an amazing job of pulling in speakers and demo leaders from an eclectic range of disciplines and interests, to great effect. And I’ve recently had the privilege of sitting in on lessons from the UBC Earth & Ocean Science coding workout group, which informed my thinking around community-led demos on tools as they are actually used, such as Kathi Unglert’s work on awk and Nancy Soontiens’s basemap demo.

Hacky Hour is one of the many awesome ideas from the Research Bazaar.

From these examples and others, I and a team of people at UBC began discussing what a Study Group could look like. For the first few weeks, we met over beers at a university pub, in the Hacky Hour tradition started by our colleagues in Melbourne at the Research Bazaar. Enthusiasm was high – people were very keen to have a place to come and learn about coding in the lab, and find out what that would look like. Soon, with the help of many but particularly with the energetic leadership and community organizing of Amy Lee, we had booked our first event; Andrew MacDonald led a packed (and about 2/3 female) room through introductory R, and within 24 hours attendees had stepped up to volunteer to lead half a dozen further sessions on more advanced topics in R from their research.

There was no shortage of enthusiasm at UBC for the opportunities a Study Group presented, and I see no reason why UBC should be a unique case; the Mozilla Science Lab is prepared to help support and iterate on similar efforts where you are. All that’s required to start a Study Group at your home institution is your leadership.

Woot hoo a great turnout for our first #rstats group. Cc: @billdoesphysics @MozillaScience @polesasunder pic.twitter.com/K9oNwiXEVm

— Amy Lee (@minisciencegirl) April 15, 2015

Your Turn

In order to support you as you start your own Study Group, the Mozilla Science Lab has a collection of tools for you:

- We’ve built a template website using GitHub Pages that you can fork and remix for your own use. Not only is the website served automagically from GitHub, but we took a page from Nodeschool.io, and set things up to direct conversation & event listings to your issue tracker, thus adding a free message board & mailing list. Check out the Vancouver R Study Group’s use of the page; setup instructions are in the README, as well as on YouTube – and as always, feel free to open an issue or contact us at sciencelab@mozillafoundation.org if something isn’t working for you.

- We’ve written a first draft of the Study Group Handbook, that pulls in lessons learned from other groups and guides newcomers through the process of setting up their own, including a step-by-step guide for your first few events, lesson resources, and more. This is a work in progress, and it’ll only get better as more people try it out and send us feedback!

- We have begun to collect lesson plans & resources delivered in similar meetups for reuse community-wide. If you’d like to maintain your own lessons, send us a link and we’ll point to your work from our Study Group Handbook; if you’d rather we do the maintenance for you, send a pull request to our collection and we’ll make sure your work helps elevate the entire community.

- Finally, get on the map! Whether you start a Study Group with our tools, or you’re in one running on its own, send us a link and a location and we’ll add you to the map of Study Groups Worldwide, so others in your community can find your meetup, and we can all see the global community that is emerging around working together.

We’re very much looking forward to working with you to help you spool up your own Study Group, and learn from your experiences on how to make this program what the research community needs it to be; we hope you’ll join us.

Study Groups, Hacky Hours & Open Science Meetups

http://mozillascience.org/introducing-mozilla-science-study-groups/

|

|

Adam Lofting: Measuring Quality |

At the end of last year, Cassie raised the question of ‘how to measure quality?’ on our metrics mailing list, which is an excellent question. And like the best questions, I come back to it often. So, I figured it needed a blog post.

There are a bunch of tactical opportunities to measure quality in various processes, like the QA data you might extract from a production line for example. And while those details interest me, this thought process always bubbles up to the aggregate concept: what’s a consistent measure of quality across any product or service?

I have a short answer, but while you’re here I’ll walk you through how I get there. Including some examples of things I think are of high quality.

One of the reasons this question is interesting is that it’s quite common to divide up data into quantitative and qualitative buckets, often separating the crisp metrics we use as our KPIs from the things we think indicate real quality. But if you care about quality, and you operate at ‘scale’, you need a quantitative measure of quality.

On that note, in a small business or on a small project, the quality feedback loop is often direct to the people making design decisions that affect quality. You can look at the customers in your bakery and get a feel for the quality of your business and products. This is why small initiatives are sometimes immensely high in quality but then deteriorate as they attempt to replicate and scale what they do.

What I’m thinking about here is how to measure quality at scale.

Some things of quality, IMHO:

This axe is wonderful. As my office is also my workshop, this axe is usually near to hand. It will soon be hung on the wall. Not because I am preparing for the zombie apocalypse, but because it is both useful as a tool, and as a visual reminder about what it means to build quality products. If this ramble of mine isn’t enough of a distraction, watch Why Values are Important to understand how this axe relates to measures of quality especially in product design.

This toaster is also wonderful. We’ve had this toaster more than 10 years now, and it works perfectly. If it were to break, I can get the parts locally and service it myself (it’s deliberately built to last and be repaired). It was an expensive initial purchase, but works out cheap in the long run. If it broke today, I would fix it. If I couldn’t fix it for some extreme reason, I would buy the same toaster in a blink. It is a high quality product.

This is the espresso coffee I drink every day. Not the tin, it’s another brand that comes in a bag. It has been consistently good for a couple of years until the last two weeks when the grind has been finer than usual and it keeps blocking the machine. It was a high-quality product in my mind, until recently. I’ll let another batch pass through the supermarket shelves and try it again. Otherwise I’ll switch.

This spatula looks like a novelty product and typically I don’t think very much of novelty products in place of useful tools, but it’s actually a high quality product. It was a gift, and we use it a lot and it just works really well. If it went missing today, I’d want to get another one the same. Saying that, it’s surprisingly expensive for a spatula. I’ve only just looked at the price, as a result of writing this. I think I’d pay that price though.

All of those examples are relatively expensive products within their respective categories, but price is not the measure of quality, even if price sometimes correlates with quality. I’ll get on to this.

How about things of quality that are not expensive in this way?

What is quality music, or art, or literature to you? Is it something new you enjoy today? Or something you enjoyed several years ago? I personally think it’s the combination of those two things. And I posit that you can’t know the real quality of something until enough time has passed. Though ‘enough time’ varies by product.

Ten years ago, I thought all the music I listened to was of high quality. Re-listening today, I think some of it was high-quality. As an exercise, listen to some music you haven’t for a while, and think about which tracks you enjoy for the nostalgia and which you enjoy for the music itself.

In the past, we had to rely on sales as a measure of the popularity of music. But like price, sales don’t always relate to quality. Initial popularity indicates potential quality, but not quality in itself (or it indicates manipulation of the audience via effective marketing). Though there are debates around streaming music services and artist payment, we do now have data points about the ongoing value of music beyond the initial parting of listener from cash. I think this can do interesting things for the quality of music overall. And in particular that the future is bleak for album filler tracks when you’re paid per stream.

Another question I enjoy thinking about is why over the centuries, some art has lasting value, and other art doesn’t. But I think I’ve taken enough tangents for now.

So, to join this up.

My view is that quality is reflected by loyalty. And for most products and services, end-user loyalty is something you can measure and optimize for.

Loyalty comes from building things that both last, and continue to be used.

Every other measurable detail about quality adds up to that.

Reducing the defect rate of component X by 10% doesn’t matter unless it impacts on the end-user loyalty.

It’s harder to measure, but this is true even for things which are specifically designed not to last. In particular, “experiences”; a once-in-a-lifetime trip, a festival, a learning experience, etc, etc. If these experiences are of high quality, the memory lasts and you re-live them and re-use them many times over. You tell stories of the experience and you refer your friends. You are loyal to the experience.

Bringing this back to work.

For MoFo colleagues reading this, our organization goals this year already point us towards Quality. We use the industry term ‘Retention’. We have targets for Retention Rates and Ongoing Teaching Activity (i.e. retained teachers). And while the word ‘retention’ sounds a bit cold and business like, it’s really the same thing as measuring ‘loyalty’. I like the word loyalty but people have different views about it (in particular whether it’s earned or expected).

This overarching theme also aligns nicely with the overall Mozilla goal of increasing the ‘number of long term relationships’ we hold with our users.

Language is interesting though. Thinking about a ‘20% user loyalty rate’ 7 days after sign-up focuses my mind slightly differently than a ‘20% retention rate’. ‘Retention’ can sound a bit too much like ‘detention’, which might explain why so many businesses strive for consumer ‘lock-in’ as part of their business model.

Talking to OpenMatt about this recently he put a better MoFo frame on it than loyalty; Retention is a measure of how much people love what we’re doing. When we set goals for increasing retention rate, we are committing to building things people love so much that they keep coming back for more.
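To make a figure like a ‘20% retention rate 7 days after sign-up’ concrete, here is a minimal Python sketch. It assumes a user counts as retained if they are active again on or after day 7 following sign-up; definitions of N-day retention vary (exactly day N, within a window, on or after), so this choice and the data shapes are assumptions for illustration, not MoFo’s actual metric definition.

```python
from datetime import date, timedelta


def retention_rate(
    signups: dict[str, date],
    activity: dict[str, list[date]],
    days: int = 7,
) -> float:
    """Share of signed-up users seen again on or after `days` days post sign-up."""
    if not signups:
        return 0.0
    retained = 0
    for user, signed_up in signups.items():
        cutoff = signed_up + timedelta(days=days)
        # Assumed definition: any activity on or after the cutoff counts.
        if any(seen >= cutoff for seen in activity.get(user, [])):
            retained += 1
    return retained / len(signups)
```

With five users who all signed up on 1 April 2015, where only one returns on 9 April and another is last seen on 3 April, the function returns 0.2, i.e. a 20% seven-day retention rate.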

In summary:

- You can measure quality by measuring loyalty

- I’m happy retention rates are one of our KPIs this year

My next post will look more specifically at the numbers and how retention rates factor into product growth.

And I’ll try not to make it another essay.

http://feedproxy.google.com/~r/adamlofting/blog/~3/dpL3mxey0k4/

|

|

Weekly Mozilla Reps call