Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Robert O'Callahan: rr 3.1 Released

I released rr 3.1 just now. It contains reverse execution support, but I'm not yet convinced that feature is stable enough to release rr 4.0. (Some people are already using it with joy, but it's much more fun to use when you can really trust it to not blow up your debugging session, and I don't yet.) We needed to do a minor release since we've added a lot of bug fixes and system-call support since 3.0, and for Firefox development rr 3.0 is almost unusable now. In particular various kinds of sandboxing support are being added to desktop Linux Firefox (using unshare and seccomp syscalls) and supporting those in rr was nontrivial.

In the future we plan to do more frequent minor releases to ensure low-risk bug fixes and expanded system-call support are quickly made available in a release.

An increasing number of Mozilla developers are trying rr, which is great. However, I need to figure out a way to measure how much rr is being used successfully, and the impact it's having.

Laura de Reynal: Understanding Web Literacy within the Web Journey

Since 2012, pioneering educators and web activists have been reflecting and developing answers to the question, “What is web literacy?”

These conversations have shaped our Web Literacy Map, a guiding document that outlines the skills and competencies that are essential to reading, writing, and participating on the Web.

Just the other week, we wrapped up improvements to the Web Literacy Map, proudly unveiling version 1.5. Thank you to all who contributed to that discussion, and to Doug Belshaw for facilitating it.

We believe being web literate is not just knowing how to code in HTML, CSS, and JavaScript. These are great tools, but they’re only one aspect of being a Web creator and citizen. Therefore, the updated Web Literacy Map includes competencies like privacy, remixing, and collaboration.

As we design and test offerings to foster web literacy, we are also determining how these skills fit into a larger web journey. Prompted by user research in Bangladesh, India, Kenya, and beyond, we’re asking: What skill levels and attitudes encourage people to learn more about web literacy? And how can one wield the Web after learning its fundamentals?

Mozilla believes this is an important question to reflect on in the open. With this blog post, we’d like to start a series of discussions, and warmly invite you to think this through with us.

What is the Web Journey?

As we talked to 356 people in four different countries (India, Bangladesh, Kenya, and Brazil) over the past six months, we learned how people perceive and use the Web in their daily lives. Our research teams identified common patterns, and we gathered them into one framework called “The Web Journey.”

This framework outlines five stages of engagement with the Web:

- Unaware: Have never heard of the Web, and have no idea what it is (for example, these smartphone owners in Bangladesh)

- No use: Are aware of the existence of the Web, but do not use it, either by rejection (“the Web is not for me, women don’t go online”), inability (“I can’t afford data”), or perceived inability (“The Web is only for businessmen”)

- Basic use: Are online, and are stuck in the “social media bubble,” unaware of what else is possible (Internet = Facebook). These users have little understanding of the Web, and don’t leverage its full range of possibilities

- Leverage: Are able to seize the opportunities the Web has to offer to improve their quality of life (to find jobs, to learn, or to grow their business)

- Creation: From the tinkerer to the web developer, creators understand how to build the Web and are able to make it their own

You can read the full details of the Web Journey, with constraints and triggers, in the Webmaker Field Research Report from India.

Why do the Web Literacy Map and the Web Journey fit together?

While the Web Literacy Map explores the skills needed, the Web Journey describes various stages of engagement with the Web. It appears certain skills may be more necessary for some stages of the Web Journey. For example: Is there a list of skills that people need to acquire to move from “Basic use” to “Leverage”?

As we continue to research digital literacy in Chicago and London (April – August 2015), we’ll seek to understand how to couple skills listed in the Web Literacy Map with steps of engagement outlined in the Web Journey. Bridging the two can help us empower Mozilla Clubs all around the world.

What are the discussion questions?

To kick off the conversation, consider the following:

- Literacy isn’t an on/off state. It’s more a continuum, and there are many learning pathways. How can this nuance be illustrated and made more intuitive?

- How can we leverage the personal motivators highlighted along the Web Journey to propose interest-driven learning pathways?

- Millions of people think Facebook is the Internet. How can the Web Literacy Map be a guide for these learners to know more and do more with the Web?

- As web literacy skills and competencies increase throughout a learner’s journey, and as people participate in web cultures, particular attitudes emerge and evolve. What are those nuances of web culture? How might we determine a “fluency” in the Web?

- How does the journey continue after someone has learned the fundamentals of the Web? How can they begin to participate in their community and share that knowledge forward? How can mentorship, and eventually leadership, be a more explicit part of a web journey? How do confidence and ability to teach others become part of the web journey?

http://lauradereynal.com/2015/04/22/understanding-web-literacy-within-the-web-journey/

Michael Kaply: Firefox 38 ESR is Almost Here

Just a reminder that the next Firefox ESR is only three weeks away. In my next post I'll give you some details on what to expect.

Also, if there are any Firefox enterprise topics you'd like to see me cover on my blog, please let me know.

https://mike.kaply.com/2015/04/21/firefox-38-esr-is-almost-here/

John O'Duinn: “We are ALL Remoties” (Apr2015 edition)

Last week, I had the great privilege of talking with people at Wikimedia Foundation about “we are all remoties”!

This was also the first presentation by a non-Wikimedia person in their brand new space, and it was further complicated by having local *and* remote attendees! Chip, Greg and Rachel did a great job of making sure everything went smoothly: quickly setting up a complex multi-display, remote-and-local video configuration, debugging some initial audio issues, moderating questions from remote attendees, and more. We even had extra time to cover topics like “Disaster Recovery”, “interviewing tips for remoties” and “business remotie trends”. Overall, it was a long, very engaged session, but it felt helpful, informative and great fun, and seemed to be well received by everyone.

As usual, you can get the latest version of these slides, in handout PDF format, by clicking on the thumbnail image. I’ve changed the PDF format slightly as requested, so let me know if you think this format is better/worse.

As always, if you have any questions, suggestions or good/bad stories about working in a remote or geo-distributed teams, please let me know – I’d love to hear them.

Thanks

John.

=====

ps: Oh, and by the way, Wikimedia are hiring – see here for current job openings. They are smart, nice people, literally changing the world – and yes, remoties ARE welcome.

http://oduinn.com/blog/2015/04/21/we-are-all-remoties-apr2015-edition/

Jeff Walden: Police, force, and armed and violent disabled people: San Francisco v. Sheehan

Yesterday I began a series of posts discussing the Supreme Court cases I saw in my latest visit for oral arguments. Today I discuss San Francisco v. Sheehan.

San Francisco v. Sheehan concerned a messy use of force by police in San Francisco in responding to a violent, mentally-ill person making threats with a knife — an unhappy situation for all. Very imprecisely, the question is whether the officers used excessive force to subdue an armed and violent, disabled suspect, knowing that suspect might require special treatment under the Americans with Disabilities Act or the Fourth Amendment while being arrested. (Of course, whatever baseline those laws require, police often should and will be held to a higher standard.)

The obvious prediction

Mildly interested readers need know but two things to predict this case’s outcome. First, this case arose in the Ninth Circuit, a court that regularly holds outlier views. And not solely along the tired left-right axis: when the Court often summarily reverses the Ninth Circuit without even hearing argument, partisanship can play no role. Second, Sheehan must overcome qualified immunity, which for better and worse protects “all but the plainly incompetent” police against lawsuits. These facts typically guarantee San Francisco will win and Sheehan will lose.

That aside, one observation struck me. Stereotyping heavily, it’s surprising that San Francisco in particular would argue, to use overly-reductive descriptions, “for” police and “against” the disabled. Usually we’d assume San Francisco would stand by, not against, underprivileged minorities.

“Bait and switch”

That expectation makes this letter from advocacy groups, requesting that San Francisco abandon its appeal, very interesting. At oral argument, Justice Scalia interrupted San Francisco’s argument before it had even started, bluntly charging the city with changing its argument between asking the Supreme Court to hear the case and presenting its case for why it should win, even calling it a “bait and switch”. Minutes later, Justice Sotomayor echoed his views (in more restrained terms).

When requesting Supreme Court review, San Francisco argued that the ADA “does not require accommodations for armed and violent suspects who are disabled” — during an arrest, all such suspects may be treated identically regardless of ability. In response the Court agreed to decide “whether Title II of the Americans with Disabilities Act requires law enforcement officers to provide accommodations to an armed, violent, and mentally ill suspect” while bringing him into custody.

But San Francisco’s written argument instead argued, “Sheehan was not entitled to receive accommodations in her arrest under Title II of the [ADA]” because her armed violence “posed a direct threat in the reasonable judgment of the officers”. In other words, San Francisco had changed from arguing no armed and violent, disabled suspect deserved an ADA accommodation, to arguing Sheehan particularly deserved no ADA accommodation because she appeared to be a direct threat.

The followup

Thus San Francisco’s argument derailed, on this and other points. Several minutes in, Justice Kagan even prefaced a question with, “And while we are talking about questions that are not strictly speaking in the case,” to audience laughter. A Ninth-Circuit, plaintiff-friendly, appeal-by-the-government case is usually a strong bet for reversal, but San Francisco seems to have complicated its own case.

The Court could well dismiss this case as “improvidently granted”, preserving the lower court’s decision without creating precedent. Oral argument raised the possibility, but a month later it seems unlikely. San Francisco’s still likely to win, but the justices’ frustration with San Francisco’s alleged argument change might not bode well when San Francisco next wants the Court to hear a case.

Back to the letter

Again, consider the letter urging San Francisco to abandon its appeal. Suppose the letter’s authors first privately asked San Francisco to drop the case, resorting to an open letter once those overtures failed.

But what if the letter wasn’t a complete failure? Could San Francisco have changed its argument to “split the baby”, protecting its officers and attempting to placate interest groups? The shift couldn’t have responded to just the letter, sent one day before San Francisco made its final argument. But it might have been triggered by prior behind-the-scenes negotiation.

This fanciful possibility requires that the open letter not be San Francisco’s first chance to hear its arguments. It further grants the letter’s authors extraordinary political power…yet too little to change San Francisco’s position. Occam’s Razor absolutely rejects this explanation. But if some involved interest group promptly tried to dissuade San Francisco, the letter might have been partially effective.

Final analysis

Are Justice Scalia’s and Sotomayor’s criticisms reasonable? I didn’t fully read the briefs, and I don’t know when it’s acceptable for a party to change its argument (except by settling the case). It appears to me that San Francisco changed its argument; my sense is doing so but claiming you didn’t is the wrong way to change one’s position. But I don’t know enough to be sure of either conclusion.

As I said yesterday, I didn’t fully prepare for this argument, so I hesitate to say too much. And frankly the messy facts make me glad I don’t have to choose a position. So I’ll leave my discussion at that.

Tomorrow I continue to the primary case I came to see, a First Amendment case.

Mozilla Release Management Team: Firefox 37.0.1 to 37.0.2

This stability release for Desktop focuses on graphics issues. We took patches to fix graphics rendering issues and crashes.

- 7 changesets

- 15 files changed

- 164 insertions

- 100 deletions

| Extension | Occurrences |
|---|---|
| cpp | 7 |
| txt | 2 |
| h | 2 |
| sh | 1 |
| json | 1 |
| in | 1 |
| idl | 1 |

| Module | Occurrences |
|---|---|
| widget | 4 |
| gfx | 4 |
| mobile | 2 |
| dom | 2 |
| testing | 1 |
| config | 1 |
| browser | 1 |
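Both tables above are plain tallies over the 15 files the changesets touched, so each one sums to 15. The sketch below shows one way such a tally could be computed. It is a minimal example that assumes each file's module is its top-level directory. The file paths in it are hypothetical and are not the files actually changed in this release.

```python
from collections import Counter
import posixpath


def extension_of(path):
    """File extension without the leading dot, or '' if there is none."""
    return posixpath.splitext(path)[1].lstrip(".")


def module_of(path):
    """Assumption: the module is the top-level directory of the path."""
    return path.split("/", 1)[0]


def tally(paths):
    """Count changed files per extension and per module, most common first."""
    extensions = Counter(extension_of(p) for p in paths)
    modules = Counter(module_of(p) for p in paths)
    return extensions.most_common(), modules.most_common()


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    changed = [
        "gfx/example/Foo.cpp",
        "gfx/example/Foo.h",
        "widget/example/Bar.cpp",
        "browser/example/manifest.in",
    ]
    by_extension, by_module = tally(changed)
    print("| Extension | Occurrences |")
    for name, count in by_extension:
        print("| %s | %d |" % (name, count))
```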

List of changesets:

| Author | Description | Changeset |
|---|---|---|
| Mark Finkle | Bug 1151469 - Tweak the package manifest to avoid packaging the wrong file. r=rnewman, a=lmandel | c8866e34cbf3 |
| Jeff Muizelaar | Bug 1137716 - Increase the list of devices that are blocked. a=sledru, a=lmandel | 1931c4e48e39 |
| Matt Woodrow | Bug 1151721 - Disable hardware accelerated video decoding for older intel drivers since it gives black frames on youtube. r=ajones, a=lmandel | 29e130e0b166 |
| Aaron Klotz | Bug 1141081 - Add weak reference support to HTMLObjectElement and use it in nsPluginInstanceOwner. r=jimm, a=lmandel | fa7d8b9db216 |
| Jeff Muizelaar | Bug 1153381 - Add a D3D11 ANGLE blacklist. r=mstange, a=lmandel, ba=const-only-change | 56fada8104a6 |
| Ryan VanderMeulen | Bug 1154434 - Bump mozharness.json to revision 4567c42063b7. a=test-only | a550f8bc2f26 |
| Bas Schouten | Bug 1151361 - Wrap WARP D3D11 creation in a try catch block like done with regular D3D11. r=jrmuizel, a=sledru | 5aa012e8ba58 |

http://release.mozilla.org/statistics/37/2015/04/21/fx-37.0.1-to-37.0.2.html

Byron Jones: happy bmo push day!

the following changes have been pushed to bugzilla.mozilla.org:

- [1113375] Make changes to MDN’s feature request form

- [579089] Change default Hardware / OS values to be “Unspecified/Unspecified”

- [1154730] rewrite product/component searching to use jquery-ui instead of yui

- [1155528] stop linking bzr commit messages to bzr.mozilla.org’s loggerhead

- [1155869] Ctrl+e is consumed by Edit button and cursor doesn’t move to the end of the line.

- [880227] Install of Bugzilla DBI module fails due to mirror.hiwaay.net not being available

discuss these changes on mozilla.tools.bmo.

https://globau.wordpress.com/2015/04/21/happy-bmo-push-day-137/

Ian Bicking: A Product Journal: What Are We Making?

I’m blogging about the development of a new product in Mozilla; look here for my other posts in this series.

I’ve managed to mostly avoid talking about what we’re making here. Perhaps shyness.

We are making a tool for sharing on the web. This tool creates a new kind of thing to share; it’s not a communication medium of any kind. We’re calling it PageShot, similar to a screenshot but with all the power we can add to it since web pages are much more understandable than pixels. (The things it makes we call a Shot.)

The tool emphasizes sharing clips or highlights from pages. These can be screenshots (full or part of the screen) or text clippings. Along with those clips we keep an archival copy of the entire web page, preserving the full context of the page you were looking at and the origin of each clip. Generally we try to save as much information and context about the page as we can. We are trying to avoid choices, the burdensome effort of deciding what you might want in the future. The more effort you put into using this tool, the more information or specificity you can add to your Shot, but we do what we can to save everything so you can sort it out later if you want.

I mentioned earlier that I started on this idea by thinking about how to make use of frozen copies of the DOM. What we’re working on now looks much more like a screenshotting tool that happens to keep this copy of the page. This change happened in part because of user research done at Mozilla around saving and sharing, which made me aware of just how prevalent screenshots had become for many people.

The current (rough) state of the tool

It’s not hard to understand the popularity of screenshots, especially on mobile devices. iPhone users at least have mostly figured out screenshotting, functionality that remains somewhat obscure on desktop devices (and for the life of me I can’t get my Android device to make a screenshot). Also, screenshots are the one thing that works across applications: even with an application that supports sharing, you don’t really know what’s going to be shared, but you know what the screenshot will contain. You can also share screenshots with confidence: the recipient won’t have to log in or sign up, they can read it on any device they want, and once it has arrived they don’t need a network connection. Screenshots are a reliable tool. A lesson I try to regularly remind myself of: availability beats fidelity.

In a similar vein we’ve seen the rise of the animated gif over the video (though video is resurging now that it’s just a file again), and the smuggling of long texts into Twitter via images.

A lot of this material moves through communication mediums via links and metadata, but those links and metadata are generally under the control of site owners. It’s up to the site owner what someone sees when they click a link, and it’s up to them what the metadata will suggest for the image preview and description. PageShot gives that control to the person sharing, since each Shot is your link, your copy and your perspective.

As of this moment (April 2015) our designs are still ahead of our implementation, so there’s not a lot to try out yet, but this is what we’re putting together.

If you want to follow along, check out the repository.

http://www.ianbicking.org/blog/2015/04/product-journal-what-are-we-making.html

Nick Thomas: Changes coming to ftp.mozilla.org

ftp.mozilla.org has been around for a long time in the world of Mozilla, dating back to original source release in 1998. Originally it was a single server, but it’s grown into a cluster storing more than 60TB of data, and serving more than a gigabit/s in traffic. Many projects store their files there, and there must be a wide range of ways that people use the cluster.

This quarter there is a project in the Cloud Services team to move ftp.mozilla.org (and related systems) to the cloud, which Release Engineering is helping with. It would be very helpful to know what functionality people are relying on, so please complete this survey to let us know. Thanks!

https://ftangftang.wordpress.com/2015/04/21/changes-coming-to-ftp-mozilla-org/

Mozilla Science Lab: Effective Code Review for Journals

Nature Biotechnology recently announced that it would be requiring authors to ‘check the accessibility of code used in computational studies’, in an effort to mitigate retractions and errors resulting from bugs & under-validated code. The article quoted the Science Lab’s director, Kaitlin Thaney, in observing the Science Lab’s position that openness in research is not only a matter of releasing information, but making sure it is effectively reusable, too, in order to reproduce and confirm results and carry that work forward.

But technical challenges remain. As the Science Lab and Marian Petre of the Open University discovered in a series of code review pilot studies in 2013 and 2014, having third parties review code they weren’t involved in writing leads to superficial reviews without much value; see reflections on these studies from Thaney as well as Greg Wilson, in addition to recent comments to the same effect from Wilson here.

However, journals like Nature Biotech can still compel some very valuable change by marshaling a system of code review for their submissions. As we discuss in our teaching kit on code review (and as was originally investigated in this study), much value can be derived from setting expectations for code clarity and integrity. By demanding that authors submit a high-coverage test suite for any original code used, journals can encourage researchers to use this fundamental technique for ensuring code quality. Also, as discussed in depth in the study linked above, requiring authors to describe and justify the changes made in each pull request results in measurably fewer bugs being committed, before code review has even begun. Specifically, journals could require:

- a passing test suite with a minimum standard of coverage (>90%)

- a commit log consisting of small pull requests (<500 lines each), each with an accompanying description & justification of the changes made and strategies taken.

Neither of these requires reviewers to read code in depth, but both push authors to seriously reflect on their code, and thus improve its quality.
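The two requirements are concrete enough for a journal to check mechanically. Below is a minimal sketch of such a check. It assumes the journal already has the test result, a coverage percentage, and each pull request's line count and description, taken from whatever tools the authors use. `check_submission` and `PullRequest` are hypothetical names. The thresholds are read literally from the list: coverage strictly above 90%, and each pull request strictly under 500 lines.

```python
from dataclasses import dataclass
from typing import List

# Thresholds taken from the two requirements listed above.
MIN_COVERAGE_PERCENT = 90.0  # coverage must be strictly greater
MAX_PR_LINES = 500           # each pull request must be strictly smaller


@dataclass
class PullRequest:
    lines_changed: int
    description: str  # description & justification of the change


def check_submission(tests_pass: bool, coverage_percent: float,
                     pull_requests: List[PullRequest]) -> List[str]:
    """Return a list of problems; an empty list means the submission passes."""
    problems = []
    if not tests_pass:
        problems.append("test suite does not pass")
    if coverage_percent <= MIN_COVERAGE_PERCENT:
        problems.append("coverage %.1f%% is not above %.0f%%"
                        % (coverage_percent, MIN_COVERAGE_PERCENT))
    for i, pr in enumerate(pull_requests):
        if pr.lines_changed >= MAX_PR_LINES:
            problems.append("pull request %d has %d lines (limit <%d)"
                            % (i, pr.lines_changed, MAX_PR_LINES))
        if not pr.description.strip():
            problems.append("pull request %d has no description" % i)
    return problems
```

Neither check reads the code itself, which matches the point above: the checker asks only whether the authors did the reflective work.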

For more strategies on how to implement a system of code review for scientific software, check out our curriculum on code review. The ideas and strategies presented there are crafted with busy scientists in mind, and explore how to get the most out of short, low-time-commitment reviews; feedback and contributions always welcome over at the project repo.

http://mozillascience.org/effective-code-review-for-journals/

Jeff Walden: Another D.C. trip

A month ago, I visited Washington, D.C. to see (unfortunately only a subset of) friends in the area, to get another Supreme Court bobblehead (Chief Justice Rehnquist) — and, naturally, to watch interesting Supreme Court oral arguments. I attended two arguments on March 23: the first for a First Amendment case, the second for (roughly) a police use-of-force case.

I did relatively little preparation for the police use-of-force case, limiting myself to the facts, questions presented, and cursory summaries of the parties’ arguments. My discussion of that case will be brief.

But the other case (for which I amply prepared) will receive different treatment. First Amendment law is extraordinarily complicated. A proper treatment of the case, its background, legal analysis, and oral argument discussion well exceeds a single post.

So a post series it is. Tomorrow: the police use-of-force case.

Benjamin Smedberg: Using crash-stats-api-magic

A while back, I wrote the tool crash-stats-api-magic which allows custom processing of results from the crash-stats API. This tool is not user-friendly, but it can be used to answer some pretty complicated questions.

As an example and demonstration, see a bug that Matthew Gregan filed this morning asking for a custom report from crash-stats:

In trying to debug bug 1135562, it’s hard to guess the severity of the problem or look for any type of version/etc. correlation because there are many types of hangs caught under the same mozilla::MediaShutdownManager::Shutdown stack. I’d like a report that contains only those with mozilla::MediaShutdownManager::Shutdown in the hung (main thread) stack *and* has wasapi_stream_init on one of the other threads, please.

To build this report, start with a basic query and then refine it in the tool:

- Construct a supersearch query to select the crashes we’re interested in. The only criterion for this query was “signature contains MediaShutdownManager::Shutdown”. When possible, filter on channel, OS, and version to reduce noise.

- After the supersearch query is constructed, choose “More Options” from the results page and copy the “Public API URL” link.

- Load crash-stats-api-magic and paste the query URL. Choose “Fetch” to fetch the results. Look through the raw data to get a sense of its structure.

- The meat of this function is to filter out the crashes that don’t have “wasapi_stream_init” on a thread. Choose “New Rule” and create a filter rule:

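function(d) {
  // Keep a crash only if some frame on some thread is in wasapi_stream_init.
  var ok = false;
  d.json_dump.threads.forEach(function(thread) {
    thread.frames.forEach(function(frame) {
      // Some frames have no function name, so check it exists first.
      if (frame.function && frame.function.indexOf("wasapi_stream_init") != -1) {
        ok = true;
      }
    });
  });
  return ok;
}

Choose “Execute” to run the filter.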

- To get the final report we output only the signature and the crash ID for each result. Choose “New Rule” again and create a mapping rule:

function(d) { return [d.uuid, d.signature]; }
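You can also express the same filter-then-map logic outside the web tool, for example when working from a saved copy of the API results. Below is a minimal Python sketch. It assumes each crash record has the fields the two JavaScript rules above rely on: `uuid`, `signature`, and `json_dump.threads[].frames[].function`. It is not part of crash-stats-api-magic, and it does not query the crash-stats API.

```python
def has_frame(crash, function_name):
    """True if any frame on any thread has function_name in its "function".

    Like the JavaScript filter rule, frames with no "function" value are
    skipped. Unlike it, a thread with no "frames" list is treated as empty
    instead of raising an error.
    """
    for thread in crash["json_dump"]["threads"]:
        for frame in thread.get("frames", []):
            name = frame.get("function") or ""
            if function_name in name:
                return True
    return False


def report(crashes, function_name="wasapi_stream_init"):
    """Filter rule, then mapping rule: keep matches as [uuid, signature]."""
    return [[crash["uuid"], crash["signature"]]
            for crash in crashes
            if has_frame(crash, function_name)]
```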

One of the advantages of this tool is that it is possible to iterate quickly on the data without constantly re-querying, but at the end it should be possible to permalink to the results in bugzilla or email exchanges.

If you need to do complex crash-stats analysis, please try it out! Email me if you have questions, and pull requests are welcome.

http://benjamin.smedbergs.us/blog/2015-04-20/using-crash-stats-api-magic/

Armen Zambrano: How to install pywin32 on Windows

You need this dependency if you're going to run older scripts that are needed for the release process.

Unfortunately, at the moment, we can't get rid of this dependency and need to install it.

If you're not using Mozilla-build, you can easily install it with these steps:

- If you don't already have Python installed for Windows, use Python 2.7.9's 32-bit installer

- Use the Pywin32 for Python 2.7 32-bit (Build 219) installer

Since the process was a bit painful for me, I will take note of it for future reference.

I tried a few approaches until I figured out that we need to use easy_install instead of pip, and that we need to point it at an .exe file rather than a normal Python package.

Use easy_install

easy_install http://hivelocity.dl.sourceforge.net/project/pywin32/pywin32/Build%20219/pywin32-219.win32-py2.7.exe

python -c "import win32api"
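The installer above is specifically the 32-bit build for Python 2.7, so a quick pre-flight check of the interpreter can save you a failed install. Below is a minimal sketch written to run under both Python 2.7 and 3. The function names are hypothetical. The final import test is the same one that `python -c "import win32api"` performs.

```python
import platform
import struct
import sys


def python_bits():
    """Pointer size of the running interpreter in bits: 32 or 64."""
    return struct.calcsize("P") * 8


def pywin32_available():
    """True if win32api (part of pywin32) can be imported."""
    try:
        import win32api  # noqa: F401
    except ImportError:
        return False
    return True


def check_environment():
    """List the ways this interpreter differs from the setup described above."""
    notes = []
    if sys.version_info[:2] != (2, 7):
        notes.append("expected Python 2.7, found %d.%d" % sys.version_info[:2])
    if python_bits() != 32:
        notes.append("expected a 32-bit Python, found %d-bit" % python_bits())
    if platform.system() != "Windows":
        notes.append("pywin32 only installs on Windows")
    elif not pywin32_available():
        notes.append("win32api is not importable; pywin32 is missing")
    return notes


if __name__ == "__main__":
    for note in check_environment() or ["environment looks OK"]:
        print(note)
```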

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/-sKZxGbc7wM/how-to-install-pywin32-on-windows.html

Mozilla Science Lab: Mozilla Science Week in Review, April 13-19

The Week in Review is our weekly roundup of what’s new in open science from the past week. If you have news or announcements you’d like passed on to the community, be sure to share on Twitter with @mozillascience and @billdoesphysics, or join our mailing list and get in touch there.

Government & Policy

- The World Health Organization has released a statement demanding the results of all medical clinical trials be published, in order to combat the effects of dissemination bias.

Tools & Projects

- Apple has released ResearchKit, a framework supporting the creation of medical apps using biosensors in current Apple devices, as an open source project on GitHub.

Events & Conferences

- NASA held its annual NASA Space Apps Challenge, a worldwide hackathon encouraging people to build and learn with a collection of open data sets released by the space agency.

Blogs & Papers

- Jon Udell blogged at PLOS with an update to his 2000 work, Internet Groupware for Scientific Collaboration. The update is entitled When Open Access is the norm, how do scientists work together online?, and reflects on how far science on the web has come since the original report was published.

- April Wright wrote a compelling blog post describing her methods in pursuit of reproducibility on one of her recent papers; Wright eloquently makes the point that openness is not an all-or-nothing effort, and that ‘perfection’ in openness is neither possible nor required for efforts at reproducibility to be very valuable.

- Eva Amsen reviewed Ontspoorde Wetenschap (‘Derailed Science’), a book by Frank van Kolfschooten describing examples of research fraud and misconduct in the Netherlands. Van Kolfschooten concludes the book with the observation that the free dissemination of data would have prevented many of these failures of process from progressing as far as they did.

- Don’t miss F1000’s open science roundup from last week, written by our colleague Eva Amsen.

http://mozillascience.org/mozilla-science-week-in-review-april-13-19/

Chris AtLee: RelEng Retrospective - Q1 2015

RelEng had a great start to 2015. We hit some major milestones on projects like Balrog and were able to turn off some old legacy systems, which is always an extremely satisfying thing to do!

We also made some exciting new changes to the underlying infrastructure, got some projects off the drawing board and into production, and drastically reduced our test load!

Firefox updates

Balrog

All Firefox update queries are now being served by Balrog! Earlier this year, we switched all Firefox update queries off of the old update server, aus3.mozilla.org, to the new update server, codenamed Balrog.

Already, Balrog has enabled us to be much more flexible in handling updates than the previous system. As an example, in bug 1150021, the About Firefox dialog was broken in the Beta version of Firefox 38 for users with RTL locales. Once the problem was discovered, we were able to quickly disable updates just for those users until a fix was ready. With the previous system it would have taken many hours of specialized manual work to disable the updates for just these locales, and to make sure they didn't get updates for subsequent Betas.
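The deliberately simplified model below illustrates why a rules-based update server makes this kind of targeted change cheap. In this model, the highest-priority rule that matches a query decides which release, if any, is served. The `Rule` fields, the release name, and the locale list are hypothetical. They are not Balrog's actual schema, and they are not the rule used for bug 1150021.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    priority: int
    channel: str
    locales: Optional[List[str]]  # None matches any locale
    release: Optional[str]        # None means "serve no update"


def pick_update(rules: List[Rule], channel: str, locale: str) -> Optional[str]:
    """Return the release named by the highest-priority matching rule.

    Returns None when no rule matches or when the winning rule serves
    no update.
    """
    matching = [r for r in rules
                if r.channel == channel
                and (r.locales is None or locale in r.locales)]
    if not matching:
        return None
    return max(matching, key=lambda r: r.priority).release


# Hypothetical rules: a general beta rule plus a higher-priority rule that
# withholds updates from a few RTL locales until a fix ships.
rules = [
    Rule(priority=100, channel="beta", locales=None, release="Firefox-38.0b5"),
    Rule(priority=200, channel="beta", locales=["ar", "fa", "he"], release=None),
]
```

In this model, lifting the block later means deleting the second rule or pointing its `release` at a fixed build. No per-locale manual work is needed.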

Once we were confident that Balrog was able to handle all previous traffic, we shut down the old update server (aus3). aus3 was also one of the last systems relying on CVS (!! I know, rite?). It's a great feeling to be one step closer to axing one more old system!

Funsize

When we started the quarter, we had an exciting new plan for generating partial updates for Firefox in a scalable way.

Then we threw out that plan and came up with an EVEN MOAR BETTER plan!

The new architecture for funsize relies on Pulse for notifications about new nightly builds that need partial updates, and uses TaskCluster for doing the generation of the partials and publishing to Balrog.
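Stripped of messaging and scheduling, this architecture comes down to a fan-out: one notification about a new nightly turns into many independent partial-generation jobs. The minimal sketch below covers only that planning step and makes no Pulse, TaskCluster, or Balrog calls. The `PartialTask` shape and the `keep` cap are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PartialTask:
    platform: str
    locale: str
    from_build: str  # build ID the user is updating from
    to_build: str    # build ID of the new nightly


def partial_tasks(new_build: str, previous_builds: List[str], platform: str,
                  locales: List[str], keep: int = 3) -> List[PartialTask]:
    """Plan one partial-update task per (locale, recent older build) pair.

    previous_builds is assumed to be ordered oldest to newest. keep is a
    hypothetical cap on how many recent nightlies get a partial; the post
    does not say what funsize actually uses.
    """
    sources = previous_builds[-keep:] if keep > 0 else []
    return [PartialTask(platform, locale, old, new_build)
            for locale in locales
            for old in sources]
```

Because each task depends only on its own pair of builds, the tasks can run in parallel. That independence is what makes this step a good fit for a task-based system.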

The current status of funsize is that we're using it to generate partial updates for nightly builds, but these are not yet published to the regular nightly update channel.

There's lots more to say here...stay tuned!

FTP & S3

Brace yourselves... ftp.mozilla.org is going away...

...in its current incarnation at least.

Expect to hear MUCH more about this in the coming months.

The tl;dr is that we're migrating as much of the Firefox build/test/release automation to S3 as possible.

The existing machinery behind ftp.mozilla.org will be going away near the end of Q3. We have some ideas of how we're going to handle migrating existing content, as well as handling new content. You should expect that you'll still be able to access nightly and CI Firefox builds, but you may need to adjust your scripts or links to do so.

Currently we have most builds and tests doing their transfers to/from S3 via the TaskCluster index, in addition to doing parallel uploads to ftp.mozilla.org. We're aiming to shut off most uploads to ftp this quarter.

Please let us know if you have particular systems or use cases that rely on the current host or directory structure!

Release build promotion

Our new Firefox release pipeline got off the drawing board, and the initial proof-of-concept work is done.

The main idea here is to take an existing build based on a push to mozilla-beta and "promote" it to a release build. To do that, we need to generate all the l10n repacks and partner repacks, generate partial updates, publish files to CDNs, etc.

The big win here is that it cuts our time-to-release nearly in half, and also simplifies our codebase quite a bit!
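One way to picture promotion is as a small task graph whose root is the already-built beta candidate, so none of the downstream work involves compiling Firefox again. The sketch below groups such a graph into stages that could run in parallel. The task names and the dependencies between them (for instance, partner repacks waiting on l10n repacks) are assumptions for illustration, not the actual pipeline definition.

```javascript
// Hypothetical promotion tasks, each mapped to the tasks it depends on.
// "candidate-build" is the existing beta build; nothing below rebuilds it.
const promotionTasks = {
  "candidate-build": [],
  "l10n-repacks": ["candidate-build"],
  "partner-repacks": ["l10n-repacks"],
  "partial-updates": ["l10n-repacks"],
  "publish-to-cdn": ["partner-repacks", "partial-updates"],
};

// Groups tasks into stages (a level-by-level topological sort):
// every task in a stage depends only on earlier stages, so a stage can run in parallel.
function stages(tasks) {
  const pending = new Map(Object.entries(tasks).map(([name, deps]) => [name, new Set(deps)]));
  const result = [];
  while (pending.size > 0) {
    const ready = [...pending.keys()].filter((name) => pending.get(name).size === 0);
    if (ready.length === 0) throw new Error("dependency cycle or unknown dependency");
    for (const name of ready) pending.delete(name);
    for (const deps of pending.values()) {
      for (const name of ready) deps.delete(name);
    }
    result.push(ready);
  }
  return result;
}

console.log(stages(promotionTasks));
```

With these assumed dependencies, partner repacks and partial updates land in the same stage, which is the kind of parallelism that can shorten time-to-release.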

Again, expect to hear more about this in the coming months.

Infrastructure

In addition to all those projects in development, we also tackled quite a few important infrastructure projects.

OS X test platform

10.10 is now the most widely used Mac platform for Firefox, and it's important to test what our users are running. We performed a rolling upgrade of our OS X testing environment, migrating from 10.8 to 10.10 while spending nearly zero capital, and with no downtime. We worked jointly with the Sheriffs and A-Team to green up all the tests, and shut coverage off on the old platform as we brought it up on the new one. We have a few 10.8 machines left riding the trains that will join our 10.10 pool with the release of ESR 38.1.

Got Windows builds in AWS

We saw the first successful builds of Firefox for Windows in AWS this quarter as well! This paves the way for greater flexibility, on-demand burst capacity, faster developer prototyping, and disaster recovery and resiliency for Windows Firefox builds. We'll be working on making these virtualized instances more performant and on making large-scale automation possible before we roll them out into production.

Puppet on Windows

RelEng uses Puppet to manage our Linux and OS X infrastructure. Presently, we use a very different tool chain, Active Directory and Group Policy Objects, to manage our Windows infrastructure. This quarter we deployed a prototype Windows build machine which is managed with Puppet instead. Our goal here is to increase visibility and hackability of our Windows infrastructure. A common deployment tool will also make it easier for RelEng and the community to deploy new tools to our Windows machines.

New Tooltool Features

We've redesigned and deployed a new version of tooltool, the content-addressable store for large binary files used in build and test jobs. Tooltool is now integrated with RelengAPI and uses S3 as a backing store. This gives us scalability and a more flexible permissioning model that, in addition to serving public files, will allow the same access outside the releng network as inside. That means that developers as well as external automation like TaskCluster can use the service just like Buildbot jobs. The new implementation also boasts a much simpler HTTP-based upload mechanism that will enable easier use of the service.
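"Content-addressable" means a file is stored and fetched by a hash of its bytes rather than by a name, so identical uploads collapse into one blob and downloads can be verified. The minimal sketch below illustrates that idea with SHA-512 from Node's `crypto` module; the in-memory `Map` stands in for S3, and the `ContentStore` class and its entry fields are hypothetical, not tooltool's actual API or manifest format.

```javascript
const { createHash } = require("crypto");

// Hypothetical minimal content-addressable store: blobs are keyed by the
// SHA-512 of their bytes. The in-memory Map stands in for S3.
class ContentStore {
  constructor() {
    this.blobs = new Map(); // hex digest -> Buffer
  }

  static digestOf(buffer) {
    return createHash("sha512").update(buffer).digest("hex");
  }

  // Stores the content (once) and returns an illustrative entry describing it.
  put(data) {
    const buffer = Buffer.from(data);
    const digest = ContentStore.digestOf(buffer);
    if (!this.blobs.has(digest)) this.blobs.set(digest, buffer);
    return { algorithm: "sha512", digest, size: buffer.length };
  }

  // Fetches by digest and re-hashes, so corrupted content is caught.
  get(digest) {
    const buffer = this.blobs.get(digest);
    if (buffer === undefined) throw new Error(`no blob with digest ${digest}`);
    if (ContentStore.digestOf(buffer) !== digest) throw new Error("digest mismatch");
    return buffer;
  }
}

const store = new ContentStore();
const entry = store.put("pretend this is a toolchain tarball");
console.log(entry.size, store.get(entry.digest).toString());
```

Because the key is derived from the content, a job that only knows the digest can fetch the file from anywhere and check it, which fits the goal of serving the same files inside and outside the releng network.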

Centralized POSIX System Logging

Using syslogd/rsyslogd and Papertrail, we've set up centralized system logging for all our POSIX infrastructure. Now that all our system logs are going to one location and we can see trends across multiple machines, we've been able to quickly identify and fix a number of previously hard-to-discover bugs. We're planning on adding additional logs (like Windows system logs) so we can do even greater correlation. We're also in the process of adding more automated detection and notification of some easily recognizable problems.
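The benefit of centralizing logs is that a message appearing on one machine looks like noise, while the same message on many machines looks like a trend. The sketch below shows that kind of correlation on simplified log lines. The `<host> <program>: <message>` line format, the host names, and the `widespreadMessages` function are assumptions for illustration; this is not Papertrail's API or our actual detection code.

```javascript
// Hypothetical sketch: groups simplified syslog-style lines
// ("<host> <program>: <message>", an assumed format) by message,
// then reports messages that show up on at least minHosts distinct hosts.
function widespreadMessages(lines, minHosts) {
  const hostsByMessage = new Map(); // normalized message -> Set of hosts
  for (const line of lines) {
    const match = /^(\S+) ([^:]+): (.*)$/.exec(line);
    if (!match) continue; // skip lines that don't fit the assumed format
    const [, host, program, message] = match;
    // Mask numbers so "process 1234" and "process 5678" count as one message.
    const key = `${program}: ${message.replace(/\d+/g, "N")}`;
    if (!hostsByMessage.has(key)) hostsByMessage.set(key, new Set());
    hostsByMessage.get(key).add(host);
  }
  const result = [];
  for (const [message, hosts] of hostsByMessage) {
    if (hosts.size >= minHosts) result.push({ message, hostCount: hosts.size });
  }
  return result;
}

const sample = [
  "host-a kernel: Out of memory: Kill process 1234",
  "host-b kernel: Out of memory: Kill process 5678",
  "host-c puppet-agent: Finished catalog run",
];
console.log(widespreadMessages(sample, 2));
```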

Security work

Q1 included significant effort to protect against serious security vulnerabilities such as GHOST and privilege-escalation bugs in the Linux kernel. We manage 14 different operating systems, some of which are fairly esoteric and/or no longer supported by the vendor, and we worked to backport some code and patches to some platforms while upgrading others entirely. Because of the way our infrastructure is architected, we were able to do this with minimal downtime or impact to developers.

API to manage AWS workers

As part of our ongoing effort to automate the loaning of releng machines when required, we created an API layer to facilitate the creation and loan of AWS resources. Loaning AWS machines had previously been, perhaps ironically, one of the bigger time-sinks for buildduty.

Cross-platform worker for TaskCluster

Release engineering is in the process of migrating from our stalwart, Buildbot-driven infrastructure to a newer, more purpose-built solution in TaskCluster. Many Firefox OS jobs have already migrated, but those all conveniently run on Linux. In order to support the entire range of release engineering jobs, we need support for Mac and Windows as well. In Q1, we created what we call a "generic worker": essentially a base class that allows us to extend TaskCluster job support to non-Linux operating systems.
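The "base class" description suggests a familiar shape: the platform-independent flow of running a task is written once, and each operating system only supplies a few hooks. The sketch below illustrates that shape in JavaScript. Everything in it is hypothetical (the `plan` method, the step names, the task directories and command lines); it is not the generic worker's real interface or TaskCluster's protocol.

```javascript
// Hypothetical sketch of the "generic worker" idea: the platform-independent
// flow lives in a base class, and each OS only fills in a few hooks.
class GenericWorker {
  // Steps shared by every platform (illustrative, not TaskCluster's real protocol).
  plan(task) {
    const dir = this.taskDirectory(task);
    return [
      `claim ${task.taskId}`,
      `mkdir ${dir}`,
      `run ${this.commandLine(task.command)}`,
      `upload artifacts from ${dir}`,
      `resolve ${task.taskId}`,
    ];
  }

  taskDirectory(task) {
    throw new Error(`${this.constructor.name} must implement taskDirectory()`);
  }

  commandLine(command) {
    throw new Error(`${this.constructor.name} must implement commandLine()`);
  }
}

class WindowsWorker extends GenericWorker {
  taskDirectory(task) {
    return `C:\\tasks\\${task.taskId}`;
  }
  commandLine(command) {
    return `cmd.exe /c ${command}`;
  }
}

class MacWorker extends GenericWorker {
  taskDirectory(task) {
    return `/Users/task/${task.taskId}`;
  }
  commandLine(command) {
    // Naive quoting, fine for this sketch; real code must escape properly.
    return `/bin/bash -c '${command}'`;
  }
}

const task = { taskId: "abc123", command: "./mach build" };
console.log(new WindowsWorker().plan(task));
console.log(new MacWorker().plan(task));
```

Adding another platform in this model means writing one more small subclass rather than another copy of the whole task-running flow.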

Testing

Last, but not least, we deployed initial support for SETA, the search for extraneous test automation!

This means we've stopped running all tests on all builds. Instead, we use historical data to determine which tests have been catching the most regressions, and run those on every build. Other tests are run less frequently.
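One way to turn "historical data" into a test schedule is to pick the smallest set of jobs that would still have caught every known regression. The sketch below does that with a greedy set cover. This is a swapped-in technique for illustration, not necessarily SETA's actual algorithm; the job names, the history data shape, and the "run everything else on every Nth push" policy are all assumptions.

```javascript
// history: regression id -> jobs that failed on it (hypothetical data shape).
// Greedy set cover: repeatedly pick the job that catches the most
// regressions not yet caught by an already-selected job.
function selectHighValueJobs(history) {
  const uncaught = new Set(Object.keys(history));
  const selected = [];
  while (uncaught.size > 0) {
    const counts = new Map(); // job -> number of uncaught regressions it caught
    for (const regression of uncaught) {
      for (const job of history[regression]) {
        counts.set(job, (counts.get(job) || 0) + 1);
      }
    }
    if (counts.size === 0) break; // the rest were caught by no job at all
    let best = null;
    let bestCount = 0;
    for (const [job, count] of counts) {
      if (count > bestCount) {
        best = job;
        bestCount = count;
      }
    }
    selected.push(best);
    for (const regression of [...uncaught]) {
      if (history[regression].includes(best)) uncaught.delete(regression);
    }
  }
  return selected;
}

// High-value jobs run on every push; the rest only on every Nth push (assumed policy).
function jobsForPush(allJobs, highValueJobs, pushNumber, everyNth) {
  return allJobs.filter((job) => highValueJobs.includes(job) || pushNumber % everyNth === 0);
}

const history = {
  "regression-1": ["linux64-mochitest-1", "win7-mochitest-1"],
  "regression-2": ["linux64-mochitest-1"],
  "regression-3": ["osx-reftest", "win7-reftest"],
  "regression-4": ["win7-reftest"],
};
const highValue = selectHighValueJobs(history);
console.log(highValue); // [ 'linux64-mochitest-1', 'win7-reftest' ]
```

Greedy set cover is not guaranteed to find the smallest possible set, but it is simple and tends to be close, which is usually good enough for deciding what to run on every push.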

http://atlee.ca/blog/posts/releng-retrospective-q1-2015.html

|

|

Robert O'Callahan: Another VMWare Hypervisor Bug |

Single-stepping through instructions in VMWare (6.0.4 build-2249910 in my case) with a 32-bit x86 guest doesn't trigger hardware watchpoints.

Steps to reproduce:

- Configure a VMWare virtual machine (6.0.4 build-2249910 in my case) booting 32-bit Linux (Ubuntu 14.04 in my case).

- Compile this program with gcc -g -O0 and run it in gdb:

    int main(int argc, char** argv) {
      char buf[100];
      buf[0] = 99;
      return buf[0];
    }

- In gdb, do:

  - break main
  - run
  - watch -l buf[0]
  - stepi until main returns

This should trigger the watchpoint. It doesn't :-(.

Doing the same thing in a KVM virtual machine works as expected.

Sigh.

http://robert.ocallahan.org/2015/04/another-vmware-hypervisor-bug.html

|

|

Mike Conley: Things I’ve Learned This Week (April 13 – April 17, 2015) |

When you send a sync message from a frame script to the parent, the return value is always an array

Example:

    // Some contrived code in the browser
    let browser = gBrowser.selectedBrowser;
    browser.messageManager.addMessageListener("GIMMEFUE,GIMMEFAI", function onMessage(message) {
      return "GIMMEDABAJABAZA";
    });

    // Frame script that runs in the browser
    let result = sendSyncMessage("GIMMEFUE,GIMMEFAI");
    console.log(result[0]);
    // Writes to the console: GIMMEDABAJABAZA

From the documentation:

Because a single message can be received by more than one listener, the return value of sendSyncMessage() is an array of all the values returned from every listener, even if it only contains a single value.

I don’t use sync messages from frame scripts a lot, so this was news to me.
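To see why the array is unavoidable, it helps to model the fan-out directly. The toy below is not Firefox's message manager: it collapses the parent and the frame script into one process, accepts only plain functions as listeners, and the class name `ToyMessageManager` is made up. It just shows that once several listeners can answer the same message, the only sensible return value is one answer per listener.

```javascript
// A toy stand-in for a message manager (not Firefox's implementation), showing
// why a synchronous send returns an array: each listener contributes one value.
class ToyMessageManager {
  constructor() {
    this.listeners = new Map(); // message name -> array of listener functions
  }

  addMessageListener(name, listener) {
    if (!this.listeners.has(name)) this.listeners.set(name, []);
    this.listeners.get(name).push(listener);
  }

  sendSyncMessage(name, data) {
    const listeners = this.listeners.get(name) || [];
    // One entry per listener, in registration order.
    return listeners.map((listener) => listener({ name, data }));
  }
}

const mm = new ToyMessageManager();
mm.addMessageListener("GIMMEFUE,GIMMEFAI", () => "GIMMEDABAJABAZA");
console.log(mm.sendSyncMessage("GIMMEFUE,GIMMEFAI")[0]); // GIMMEDABAJABAZA

mm.addMessageListener("GIMMEFUE,GIMMEFAI", () => "a second answer");
console.log(mm.sendSyncMessage("GIMMEFUE,GIMMEFAI").length); // 2
```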

You can use [cocoaEvent hasPreciseScrollingDeltas] to differentiate between scrollWheel events from a mouse and a trackpad

scrollWheel events can come from a standard mouse or a trackpad. According to this Stack Overflow post, one potential way of differentiating between the scrollWheel events coming from a mouse and the scrollWheel events coming from a trackpad is by calling:

bool isTrackpad = [theEvent hasPreciseScrollingDeltas];

since mouse scroll wheels usually scroll by lines, whereas trackpads (and the Magic Mouse) scroll by pixels.

The srcdoc attribute for iframes lets you easily load content into an iframe via a string

It’s been a while since I’ve done web development, so I hadn’t heard of srcdoc before. It was introduced as part of the HTML5 standard, and is defined as:

The content of the page that the embedded context is to contain. This attribute is expected to be used together with the sandbox and seamless attributes. If a browser supports the srcdoc attribute, it will override the content specified in the src attribute (if present). If a browser does NOT support the srcdoc attribute, it will show the file specified in the src attribute instead (if present).

So that’s an easy way to inject some string-ified HTML content into an iframe.
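From script, setting `iframe.srcdoc = html` on an iframe element needs no escaping, because the DOM stores the string as-is. If you generate the iframe as markup instead, the HTML spec asks authors to wrap the srcdoc value in double quotes and escape the `&` and `"` characters inside it. A minimal sketch of that markup case follows; the helper names `escapeAttribute` and `iframeMarkup` are hypothetical.

```javascript
// Escapes text for use inside a double-quoted HTML attribute value.
// Ampersands go first so the entities added for quotes aren't escaped twice.
function escapeAttribute(text) {
  return text.replace(/&/g, "&amp;").replace(/"/g, "&quot;");
}

// Builds iframe markup whose content comes from a string via srcdoc.
// fallbackSrc (optional) is what browsers without srcdoc support will load.
function iframeMarkup(html, fallbackSrc) {
  const attributes = [`srcdoc="${escapeAttribute(html)}"`];
  if (fallbackSrc !== undefined) attributes.push(`src="${escapeAttribute(fallbackSrc)}"`);
  // Paired with sandbox, as the quoted definition suggests; an empty sandbox blocks scripts.
  attributes.push("sandbox");
  return `<iframe ${attributes.join(" ")}></iframe>`;
}

console.log(iframeMarkup('<p class="note">Fish & chips</p>', "fallback.html"));
// <iframe srcdoc="<p class=&quot;note&quot;>Fish &amp; chips</p>" src="fallback.html" sandbox></iframe>
```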

Primitives on IPDL structs are not initialized automatically

I believe this is true for structs in C and C++ (and probably some other languages) in general, but primitives on IPDL structs do not get initialized automatically when the struct is instantiated. That means that things like booleans carry random memory values in them until they’re set. Having spent most of my time in JavaScript, I found that a bit surprising, but I’ve gotten used to it. I’m slowly getting more comfortable working lower-level.

This was the ultimate cause of this crasher bug that dbaron was running into while exercising the e10s printing code on a debug Nightly build on Linux.

This bug was opened to investigate initializing the primitives on IPDL structs automatically.

Networking is ultimately done in the parent process in multi-process Firefox

All network requests are proxied to the parent, which serializes the results back down to the child. Here’s the IPDL protocol for the proxy.

On bi-directional text and RTL

gw280 and I noticed that in single-process Firefox, a

If the value was “A) Something else”, the string would come out unchanged.

We were curious to know why this flipping around was happening. It turned out that this is called “BiDi”, and some documentation for it is here.

If you want to see an interesting demonstration of BiDi, click this link, and then resize the browser window to reflow the text. Interesting to see where the period on that last line goes, no?

It might look strange to someone coming from an LTR language, but apparently it makes sense if you’re used to RTL.

I had not known that.

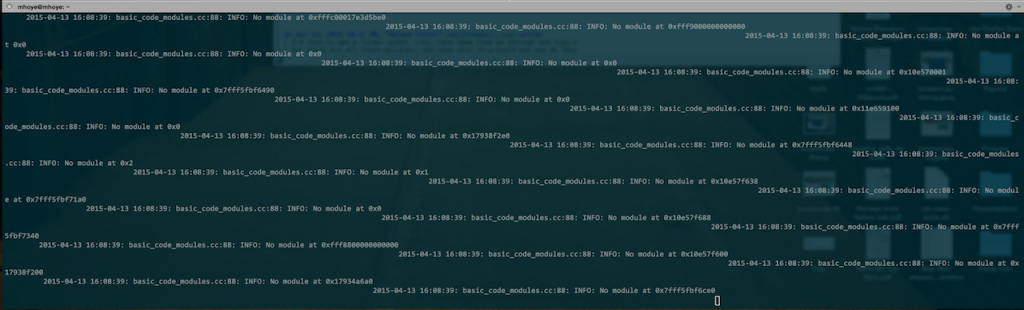

Some terminal spew

My friend and colleague Mike Hoye showed me the above screenshot upon coming into work earlier this week. He had apparently launched Nightly from the terminal, and at some point, all that stuff just showed up.

“What is all of that?”, he had asked me.

I hadn’t the foggiest idea, but a quick DXR search turned up basic_code_modules.cc inside Breakpad, the tool used to generate crash reports when things go wrong.

I referred him to bsmedberg, since that fellow knows tons about crash reporting.

Later that day, mhoye got back to me, and told me that apparently this was output spew from Firefox’s plugin hang detection code. Mystery solved!

So if you’re running Firefox from the terminal, and suddenly see some basic_code_modules.cc stuff show up… a plugin you’re running probably locked up, and Firefox shanked it.

http://mikeconley.ca/blog/2015/04/18/things-ive-learned-this-week-april-13-april-17-2015/

|

|

Mike Conley: The Joy of Coding (Ep. 10): The Mystery of the Cache Key |

In this episode, I kept my camera off, since I was having some audio-sync issues.

I was also under some time pressure, because I had a meeting scheduled for 2:30 ET, giving me exactly 1.5 hours to do what I needed to do.

And what did I need to do?

I needed to figure out why an nsISHEntry, when passed to nsIWebPageDescriptor’s loadPage, was not enough to get the document out of the HTTP cache in some cases. 1.5 hours to figure it out: the pressure was on!

I don’t recall writing a single line of code. Instead, I spent most of my time inside XCode, walking through various scenarios in the debugger, trying to figure out what was going on. And I eventually figured it out! Read this footnote for the TL;DR:3

References

Bug 1025146 – [e10s] Never load the source off of the network when viewing source – Notes

http://mikeconley.ca/blog/2015/04/18/the-joy-of-coding-ep-9-more-view-source-hacking-2/

|

|

Alex Gibson: My second year working at Mozilla |

This week marked my second year Mozillaversary. I had planned to write this blog post on the 15th of April, the anniversary of the day I started, but this week flew by so quickly that I almost missed it completely!

Carrying on from last year’s blog post, much of my second year at Mozilla has been spent working on various parts of mozilla.org, to which I made a total of 196 commits this year.

Much of my time has been spent working on Firefox on-boarding. Following the success of the on-boarding flow we built for the Firefox 29 Australis redesign last year, I went on to work on several more on-boarding flows to help introduce new features in Firefox. These included introducing the Firefox 33.1 privacy features, Developer Edition firstrun experience, 34.1 search engine changes, and 36.0 for Firefox Hello. I also got to work on the first time user experience for when a user makes their first Hello video call, which initially launched in 35.0. It was all a crazy amount of work from a lot of different people, but something I really enjoyed getting to work on alongside various other teams at Mozilla.

In between all that I also got to work on some other cool things, including the 2015 mozilla.org homepage redesign. Something I consider quite a privilege!

On the travel front, I got to visit both San Francisco and Santa Clara a bunch more times (I’m kind of losing count now). I also got to visit Portland for the first time when Mozilla held its all-hands week there last December. It was such a great city!

I’m looking forward to whatever year three has in store!

http://alxgbsn.co.uk/2015/04/18/my-second-year-working-at-mozilla

|

|

Air Mozilla: Webdev Beer and Tell: April 2015 |

Once a month web developers across the Mozilla community get together (in person and virtually) to share what cool stuff we've been working on in...

|

|