
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Justin Crawford: Report: Web Compatibility Summit

Last week I spoke at Mozilla’s Compatibility Summit. I spoke about compatibility data. Here are my slides.

Compatibility data…

- is information curated by web developers about the cross-browser compatibility of the web’s features.

- documents browser implementation of standards, browser anomalies (both bugs and features), and workarounds for common cross-browser bugs. It theoretically covers hundreds of browser/version combinations, but naturally tends to focus on browsers/versions with the most use.

- is partly structured (numbers and booleans can answer the question, “does Internet Explorer 10 support input type=email?”) and partly unstructured (numbers and booleans cannot say, “Safari Mobile for iOS applies a default style of opacity: 0.4 to disabled textual elements.”).
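To make the structured/unstructured split concrete, here is a minimal sketch in Python. The record layout, the field names, and the support values are all hypothetical and invented for illustration; this is not the MDN or caniuse schema.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureSupport:
    """One feature's compatibility record (hypothetical layout)."""
    feature: str
    # Structured part: (browser, version) -> supported?
    support: dict = field(default_factory=dict)
    # Unstructured part: free-text notes that a boolean cannot express.
    notes: list = field(default_factory=list)

    def is_supported(self, browser, version):
        """Return True/False, or None when nobody has recorded an answer."""
        return self.support.get((browser, version))


# Placeholder values for illustration only, not verified compatibility facts.
email_input = FeatureSupport(
    feature="input type=email",
    support={("Internet Explorer", 10): True, ("Internet Explorer", 8): False},
    notes=["Default styling of disabled fields differs between browsers."],
)

print(email_input.is_supported("Internet Explorer", 10))
print(email_input.is_supported("Safari Mobile", 8))
```

The lookup answers yes/no questions directly, while the notes list holds the kind of observation that only prose can carry. A missing entry returns None rather than False, so "unknown" stays distinct from "unsupported".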

Compatibility data changes all the time. As a colleague pointed out last week, “Every six weeks we have all new browsers” — each with an imperfect implementation of web standards, each introducing new incompatibilities into the web.

Web developers are often the first to discover and document cross-browser incompatibilities since their work product is immediately impacted. And they do a pretty good job of sharing compatibility data. But as I said in my talk: Compatibility data is an oral tradition. Web developers gather at the town pump and share secrets for making boxes with borders float in Browser X. Web developers sit at the feet of venerated elders and hear how to make cross-browser compatible CSS transitions. We post our discoveries and solutions in blogs; in answers on StackOverflow; in GitHub repositories. We find answers on old blogs; in countless abandoned PHP forums; on the third page of search results.

There are a small number of truly canonical sources for compatibility data. We surveyed MDN visitors in January and learned that they refer to two sources far more than any others: MDN and caniuse.com. MDN has more comprehensive information — more detailed, for more browser versions, accompanied by encyclopedic reference materials. caniuse has a much better interface and integrates with browser market share data. Both have good communities of contributors. Together, they represent the canon of compatibility data.

Respondents to our recent survey said they use the two sites differently: They use caniuse for planning new sites and debugging existing issues, and use MDN for exploring new features and when answering questions that come up when writing code.

On MDN, we’re working on a new database of compatibility data with a read/write API. This project solves some maintenance issues for us, and promises to create more opportunities for web developers to build automation around web compatibility data (for an example of this, see doiuse.com, which scans your CSS for features covered by caniuse and returns a browser coverage report).
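As a rough idea of the kind of automation this enables, the sketch below scans a stylesheet for properties and reports which browsers are assumed to lack them. It is not how doiuse works internally; the lookup table, the browser names, and the naive regular expression are all assumptions made for illustration.

```python
import re

# Hypothetical lookup table: CSS property -> browsers assumed to lack support.
# These entries are invented placeholders, not real compatibility data.
UNSUPPORTED = {
    "transition": {"Browser X 9"},
    "flex": {"Browser X 9", "Browser Y 3"},
}

# Naive match for a property name at the start of a declaration,
# e.g. "flex" in "{ flex: 1; }". A real tool would use a CSS parser.
PROPERTY_RE = re.compile(r"(?:^|[{;])\s*([a-z-]+)\s*:", re.MULTILINE)


def coverage_report(css):
    """Map each tracked property used in `css` to the browsers lacking it."""
    used = set(PROPERTY_RE.findall(css))
    return {
        prop: sorted(UNSUPPORTED[prop])
        for prop in sorted(used)
        if prop in UNSUPPORTED
    }


css = """
.box { flex: 1; color: red; }
.fade { transition: opacity 0.3s; }
"""
print(coverage_report(css))
```

With a real compatibility API behind it, the hard-coded table would be replaced by queries against live data.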

Of course the information in MDN and caniuse is only one tool for improving web compatibility, which is why the different perspectives at the Compat Summit were so valuable.

If we think of web compatibility as a set of concentric circles, MDN and caniuse (and the entire, sprawling web compatibility data corpus) occupy a middle ring. In the illustration above, the rings get more diffuse as they get larger, representing the increasing challenge of finding and solving incompatibility as it moves from vendor to web developer to site.

- By the time most developers encounter cross-browser compatibility issues, those issues have been deployed in browser versions. So browser vendors have a lot of responsibility to make web standards possible; to deploy as few standards-breaking features and bugs as possible. Jacob Rossi from Microsoft invited Compat Summit participants to collaborate on a framework that would allow browser vendors to innovate and push the web forward without creating durable incompatibility issues in deployed websites.

- When incompatibilities land in browser releases, web developers find them, blog about them, and build their websites around them. At the Compat Summit, Alex McPherson from Quickleft presented his clever work quantifying some of these issues, and I invited all present to start treating compatibility data like an important public resource (as described above).

- Once cross-browser incompatibilities are discussed in blog posts and deployed on the web, the only way to address incompatibilities is to politely ask web developers to fix them. Mike Taylor and Colleen Williams talked about Mozilla’s and Microsoft’s developer outreach activities — efforts like Webcompat.com, “bug reporting for the internet”.

At the end of the Compat Summit, Mike Taylor asked the participants whether we should have another. My answer takes the form of two questions:

- Should someone work to keep the importance of cross-browser compatibility visible among browser vendors and web developers?

- If we do not, who will?

I think the answer is clear.

Special thanks to Jérémie Patonnier for helping me get up to speed on web compatibility data.

http://hoosteeno.com/2015/02/24/report-web-compatibility-summit/


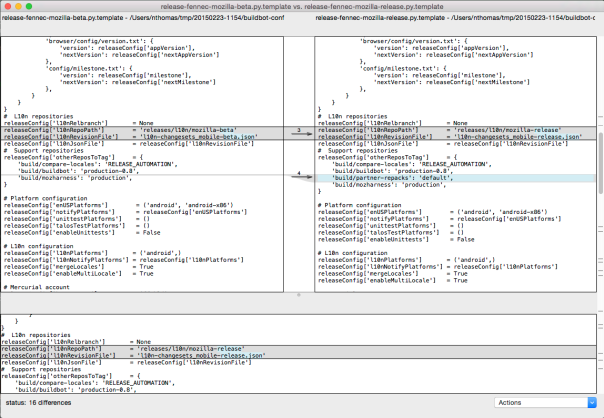

Armen Zambrano: Listing builder differences for a buildbot-configs patch improved

However, there is a problem with this: buildbot-configs must always be on the same branch as buildbotcustom. Otherwise, changes can land in one repository that require matching changes in the other.

The fix was to simply make sure that both repositories are either on default or their associated production branches.

Besides this fix, I have landed two more changes:

- Use the production branches instead of 'default'
  - Use -p

- Clobber our whole setup (e.g. ~/.mozilla/releng)
  - Use -c

Here are the two changes:

https://hg.mozilla.org/build/braindump/rev/7b93c7b7c46a

https://hg.mozilla.org/build/braindump/rev/bbb5c54a7d42

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/xty-_oMH5Hc/listing-builder-differences-for.html

Nathan Froyd: measuring power usage with power gadget and joulemeter

In the continuing evaluation of how Firefox’s energy usage might be measured and improved, I looked at two programs, Microsoft Research’s Joulemeter and Intel’s Power Gadget.

As you might expect, Joulemeter only works on Windows. Joulemeter is advertised as “a software tool that estimates the power consumption of your computer.” Estimates for the power usage of individual components (CPU/monitor/disk/”base”) are provided while you’re running the tool. (No, I’m not sure what “base” is, either. Perhaps things like wifi?) A calibration step is required for trying to measure anything. I’m not entirely sure what the calibration step does, but since you’re required to be running on battery, I presume that it somehow obtains statistics about how your battery drains, and then apportions power drain between the individual components. Desktop computers can use a WattsUp power meter in lieu of running off battery. Statistics about individual apps are also obtainable, though only power draw based on CPU usage is measured (estimated). CSV logfiles can be captured for later analysis, taking samples every second or so.

Power Gadget is cross-platform, and despite some dire comments on the download page above, I’ve had no trouble running it on Windows 7 and OS X Yosemite (though I do have older CPUs in both of those machines). It works by sampling energy counters maintained by the processor itself to estimate energy usage. As a side benefit, it also keeps track of the frequency and temperature of your CPU. While the default mode of operation is to draw pretty graphs detailing this information in a window, Power Gadget can also log detailed statistics to a CSV file of your choice, taking samples every ~100ms. The CSV file also logs the power consumption of all “packages” (i.e. CPU sockets) on your system.
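A CSV log of timestamped power samples can be turned into a total energy figure by integrating power over time. The sketch below does this with the trapezoid rule. The column names `elapsed_s` and `power_w` and the sample values are assumptions for illustration; check the header row of a real log before adapting it.

```python
import csv
import io


def total_energy_joules(csv_text, time_col="elapsed_s", power_col="power_w"):
    """Integrate sampled power (watts) over time (seconds), trapezoid rule."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    energy = 0.0
    # Walk over consecutive pairs of samples.
    for prev, cur in zip(rows, rows[1:]):
        dt = float(cur[time_col]) - float(prev[time_col])
        avg_power = (float(cur[power_col]) + float(prev[power_col])) / 2.0
        energy += avg_power * dt
    return energy


# Made-up samples at 100 ms intervals; a real log would have many more rows.
sample_log = """elapsed_s,power_w
0.0,10.0
0.1,12.0
0.2,14.0
0.3,12.0
"""
print(total_energy_joules(sample_log))
```

For the made-up samples this comes to roughly 3.7 joules over 0.3 seconds. Comparing such totals between two runs is a simple way to see whether a change used more or less energy, though it says nothing about why.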

I like Power Gadget more than Joulemeter: Power Gadget is cross-platform, captures more detailed statistics, and seems a little more straightforward in explaining how power usage is measured.

Roberto Vitillo and Joel Maher wrote a tool called energia that compares energy usage between different browsers on pre-selected sets of pages; Power Gadget is one of the tools that can be used for gathering energy statistics. I think this sort of tool is the primary use case of Power Gadget in diagnosing power problems: it helps you see whether you might be using too much power, but it doesn’t provide insight into why you’re using that power. Taking logs along with running a sampling-based stack profiler and then attempting to correlate the two might assist in providing insight, but it’s not obvious to me that stacks of where you’re spending CPU time are necessarily correlated with power usage. One might have turned on discrete graphics in a laptop, or high-resolution timers on Windows, for instance, but that wouldn’t necessarily be reflected in a CPU profile. Perhaps sampling something different (if that’s possible) would correlate better.

https://blog.mozilla.org/nfroyd/2015/02/24/measuring-power-usage-with-power-gadget-and-joulemeter/

The Servo Blog: This Week In Servo 25

This week, we merged 60 pull requests.

Selector matching has been extracted into an independent library! You can see it here.

We now have automation set up for our Gonk (Firefox OS) port, and gate on it.

Notable additions

- Chris Paris landed fragment parsing in html5ever and implemented the innerHTML setter in Servo.

- Jack Moffitt added a visualization of parallelization in effect when rendering pages.

- Manish and Lars added ./mach build-gonk to automate building for Firefox OS

- Edit Balint implemented canvas pixel manipulation.

- Nick Nethercote improved the state of memory profiling on Linux.

- Simon Sapin extracted CSS selector matching into an independent library.

- Patrick Walton implemented a bunch of missing canvas operations

- Andreas improved rust-azure’s stroke-options and landed it in Servo

- Ms2ger updated web-platform-tests and enabled the DOMEvents tests

- Nathan Froyd added support for invoking rr from mach

- Shing Lyu added activation behavior to anchors, fixing a papercut

Screenshots

Parallel painting, visualized:

New contributors

Meeting

- Rust in Gecko: We’re slowly figuring out what we need to do (and have a tracking bug). Some more candidates for a Rust component in Gecko are an MP4 demultiplexer, and a replacement of safe browsing code.

- Selector matching is now in a new library

- ./mach build-gonk works. At the moment it needs a build of B2G, which is huge (and requires a device), but we’re working on packaging up the necessary bits which are relatively small.

Cameron Kaiser: Two victories

Daniel Stenberg: curl, smiley-URLs and libc

Some interesting Unicode URLs have recently been seen used in the wild – like in this billboard ad campaign from Coca Cola, and a friend of mine asked me about curl in reference to these and how it deals with such URLs.

(Picture by stevencoleuk)

I ran some tests and decided to blog my observations since they are a bit curious. The exact URL I tried was ‘www.

http://daniel.haxx.se/blog/2015/02/24/curl-smiley-urls-and-libc/

Michael Kaply: What I Do

One of the trickier things about being self-employed, especially around an open source project like Firefox, is knowing where to draw the line between giving out free advice and asking people to pay for my services. I'm always hopeful that answering a question here and there will one day lead to folks hiring me or purchasing CCK2 support. That's why I try to be as helpful as I can.

That being said, I'm still surprised at the number of times a month I get an email from someone requesting that I completely customize Firefox to their requirements and deliver it to them for free. Or write an add-on for them. Or diagnose the problem they are having with Firefox.

While I appreciate their faith in me, somehow I think it's gotten lost somewhere that this is what I do for a living.

So I just wanted to take a moment and let people know that you can hire me. If you need help with customizing and deploying Firefox, or building Firefox add-ons or building a site-specific browser, that's what I do. And I'd be more than happy to help you do that.

But if all you have is a question, that's great too. The best place to ask is on my support site at cck2.freshdesk.com.

Planet Mozilla Interns: Michael Sullivan: Forcing memory barriers on other CPUs with mprotect(2)

I have something of an unfortunate fondness for indefensible hacks.

As I discussed in my last post, RCU is a synchronization mechanism that excels at protecting read-mostly data. It is a particularly useful technique in operating system kernels because full control of the scheduler permits many fairly simple and very efficient implementations of RCU.

In userspace, the situation is trickier, but still manageable. Mathieu Desnoyers and Paul E. McKenney have built a Userspace RCU library that contains a number of different implementations of userspace RCU. For reasons I won’t get into, efficient read side performance in userspace seems to depend on having a way for a writer to force all of the reader threads to issue a memory barrier. The URCU library has one version that does this using standard primitives: it sends signals to all other threads; in their signal handlers the other threads issue barriers and indicate so; the caller waits until every thread has done so. This is very heavyweight and inefficient because it requires running all of the threads in the process, even those that aren’t currently executing! Any thread that isn’t scheduled now has no reason to execute a barrier: it will execute one as part of getting rescheduled. Mathieu Desnoyers attempted to address this by adding a membarrier() system call to Linux that would force barriers in all other running threads in the process; after more than a dozen posted patches to LKML and a lot of back and forth, it got silently dropped.

While pondering this dilemma I thought of another way to force other threads to issue a barrier: by modifying the page table in a way that would force an invalidation of the Translation Lookaside Buffer (TLB) that caches page table entries! This can be done pretty easily with mprotect or munmap.

Full details in the patch commit message.

http://www.msully.net/blog/2015/02/24/forcing-memory-barriers-on-other-cpus-with-mprotect2/

Nicholas Nethercote: Fix your damned data races

Nathan Froyd recently wrote about how he has been using ThreadSanitizer to find data races in Firefox, and how a number of Firefox developers, particularly in the networking and JS GC teams, have been fixing these.

This is great news. I want to re-emphasise and re-state one of the points from Nathan’s post, which is that data races are a class of bug that almost everybody underestimates. Unless you have, say, authored a specification of the memory model for a systems programming language, your intuition about the potential impact of many data races is probably wrong. And I’m going to give you three links to explain why.

Hans Boehm’s paper How to miscompile programs with “benign” data races explains very clearly that it’s possible to correctly conclude that a data race is benign at the level of machine code, but it’s almost impossible at the level of C or C++ code. And if you try to do the latter by inspecting the code generated by a C or C++ compiler, you are not allowing for the fact that other compilers (including future versions of the compiler you used) can and will generate different code, and so your conclusion is both incomplete and temporary.

Dmitri Vyukov’s blog post Benign data races: what could possibly go wrong? covers similar ground, giving more examples of how compilers can legitimately compile things in surprising ways. For example, at any point the storage used by a local variable can be temporarily used to hold a different variable’s value (think register spilling). If another thread reads this storage in a racy fashion, it could read the value of an unrelated variable.

Finally, John Regehr’s blog has many posts that show how C and C++ compilers take advantage of undefined behaviour to do surprising (even shocking) program transformations, and how the extent of these transformations has steadily increased over time. Compilers genuinely are getting smarter, and are increasingly pushing the envelope of what a language will let them get away with. And the behaviour of a C or C++ program is undefined in the presence of data races. (This is sometimes called “catch-fire” semantics, for reasons you should be able to guess.)

So, in summary: if you write C or C++ code that deals with threads in Firefox — and that probably includes everybody who writes C or C++ code in Firefox — you should have read at least the first two links I’ve given above. If you haven’t, please do so now. If you have read them and they haven’t made you at least slightly terrified, you should read them again. And if TSan identifies a data race in code that you are familiar with, please take it seriously, and fix it. Thank you.

https://blog.mozilla.org/nnethercote/2015/02/24/fix-your-damned-data-races/

Planet Mozilla Interns: Michael Sullivan: Why We Fight

Why We Fight, or

Why Your Language Needs A (Good) Memory Model, or

The Tragedy Of memory_order_consume’s Unimplementability

This, one of the most terrifying technical documents I’ve ever read, is why we fight: https://www.kernel.org/doc/Documentation/RCU/rcu_dereference.txt.

Background

For background, RCU is a mechanism used heavily in the Linux kernel for locking around read-mostly data structures; that is, data structures that are read frequently but fairly infrequently modified. It is a scheme that allows for blazingly fast read-side critical sections (no atomic operations, no memory barriers, not even any writing to cache lines that other CPUs may write to) at the expense of write-side critical sections being quite expensive.

The catch is that writers might be modifying the data structure as readers access it: writers are allowed to modify the data structure (often a linked list) as long as they do not free any memory removed until it is “safe”. Since writers can be modifying data structures as readers are reading from it, without any synchronization between them, we are now in danger of running afoul of memory reordering. In particular, if a writer initializes some structure (say, a routing table entry) and adds it to an RCU protected linked list, it is important that any reader that sees that the entry has been added to the list also sees the writes that initialized the entry! While this will always be the case on the well-behaved x86 processor, architectures like ARM and POWER don’t provide this guarantee.

The simple solution to make the memory order work out is to add barriers on both sides on platforms where it is needed: after initializing the object but before adding it to the list and after reading a pointer from the list but before accessing its members (including the next pointer). This cost is totally acceptable on the write-side, but is probably more than we are willing to pay on the read-side. Fortunately, we have an out: essentially all architectures (except for the notoriously poorly behaved Alpha) will not reorder instructions that have a data dependency between them. This means that we can get away with only issuing a barrier on the write-side and taking advantage of the data dependency on the read-side (between loading a pointer to an entry and reading fields out of that entry). In Linux this is implemented with macros “rcu_assign_pointer” (that issues a barrier if necessary, and then writes the pointer) on the write-side and “rcu_dereference” (that reads the value and then issues a barrier on Alpha) on the read-side.

There is a catch, though: the compiler. There is no guarantee that something that looks like a data dependency in your C source code will be compiled as a data dependency. The most obvious way to me that this could happen is by optimizing “r[i ^ i]” or the like into “r[0]”, but there are many other ways, some quite subtle. This document, linked above, is the Linux kernel team’s effort to list all of the ways a compiler might screw you when you are using rcu_dereference, so that you can avoid them.

This is no way to run a railway.

Language Memory Models

Programming by attempting to quantify over all possible optimizations a compiler might perform and avoiding them is a dangerous way to live. It’s easy to mess up, hard to educate people about, and fragile: compiler writers are feverishly working to invent new optimizations that will violate the blithe assumptions of kernel writers! The solution to this sort of problem is that the language needs to provide the set of concurrency primitives that are used as building blocks (so that the compiler can constrain its code transformations as needed) and a memory model describing how they work and how they interact with regular memory accesses (so that programmers can reason about their code). Hans Boehm makes this argument in the well-known paper Threads Cannot be Implemented as a Library.

One of the big new features of C++11 and C11 is a memory model which attempts to make precise what values can be read by threads in concurrent programs and to provide useful tools to programmers at various levels of abstraction and simplicity. It is complicated, and has a lot of moving parts, but overall it is definitely a step forward.

One place it falls short, however, is in its handling of “rcu_dereference” style code, as described above. One of the possible memory orders in C11 is “memory_order_consume”, which establishes an ordering relationship with all operations after it that are data dependent on it. There are two problems here: first, these operations deeply complicate the semantics; the C11 memory model relies heavily on a relation called “happens before” to determine what writes are visible to reads; with consume, this relation is no longer transitive. Yuck! Second, it seems to be nearly unimplementable; tracking down all the dependencies and maintaining them is difficult, and no compiler yet does it; clang and gcc both just emit barriers. So now we have a nasty semantics for our memory model and we’re still stuck trying to reason about all possible optimizations. (There is work being done to try to repair this situation; we will see how it turns out.)

Shameless Plug

My advisor, Karl Crary, and I are working on designing an alternate memory model (called RMC) for C and C++ based on explicitly specifying the execution and visibility constraints that you depend on. We have a paper on it and I gave a talk about it at POPL this year. The paper is mostly about the theory, but the talk tried to be more practical, and I’ll be posting more about RMC shortly. RMC is quite flexible. All of the C++11 model apart from consume can be implemented in terms of RMC (although that’s probably not the best way to use it) and consume style operations are done in a more explicit and more implementable (and implemented!) way.

Mozilla WebDev Community: Beer and Tell – February 2015

Once a month, web developers from across the Mozilla Project get together to speedrun classic video games. Between runs, we find time to talk about our side projects and drink, an occurrence we like to call “Beer and Tell”.

There’s a wiki page available with a list of the presenters, as well as links to their presentation materials. There’s also a recording available courtesy of Air Mozilla.

Michael Kelly: Refract

Osmose (that’s me!) started off with Refract, a website that can turn any website into an installable application. It does this by generating an Open Web App on the fly that does nothing but redirect to the specified site as soon as it is opened. The name and icon of the generated app are auto-detected from the site, or they can be customized by the user.

Michael Kelly: Sphere Online Judge Utility

Next, Osmose shared spoj, a Python-based command line tool for working on problems from the Sphere Online Judge. The tool lets you list and read problems, as well as create solutions and test them against the expected input and output.

Adrian Gaudebert: Spectateur

Next up was adrian, who shared Spectateur, a tool to run reports against the Crash-Stats API. The webapp lets you set up a data model using attributes available from the API, and then process that data via JavaScript that the user provides. The JavaScript is executed in a sandbox, and the resulting view is displayed at the bottom of the page. Reports can also be saved and shared with others.

Peter Bengtsson: Autocompeter

Peterbe stopped by to share Autocompeter, which is a service for very fast auto-completion. Autocompeter builds upon peterbe’s previous work with fast autocomplete backed by Redis. The site is still not production-ready, but soon users will be able to request an API key to send data to the service for indexing, and Air Mozilla will be one of the first sites using it.

Pomax: inkdb

The ever-productive Pomax returns with inkdb.org, a combination of the many color- and ink-related tools he’s been sharing recently. Among other things, inkdb lets you browse fountain pen inks, map them on a graph based on similarity, and find inks that match the colors in an image. The website is also a useful example of the Mozilla Foundation Client-side Prototype in action.

Matthew Claypotch: rockbot

Lastly, potch shared a web interface for suggesting songs to a Rockbot station. Rockbot currently only has Android and iOS apps, and potch decided to create a web interface to allow people without Rockbot accounts or phones to suggest songs.

No one could’ve anticipated willkg’s incredible speedrun of Mario Paint. When interviewed after his blistering 15 hour and 24 minute run, he refused to answer any questions and instead handed out fliers for the grand opening of his cousin’s Inkjet Cartridge and Unlicensed Toilet Tissue Outlet opening next Tuesday at Shopper’s World on Worcester Road.

If you’re interested in attending the next Beer and Tell, sign up for the dev-webdev@lists.mozilla.org mailing list. An email is sent out a week beforehand with connection details. You could even add yourself to the wiki and show off your side-project!

See you next month!

https://blog.mozilla.org/webdev/2015/02/23/beer-and-tell-february-2015/

Nick Cameron: Creating a drop-in replacement for the Rust compiler

The kind of things I have in mind are tools like rustdoc or a future rustfmt. These want to operate as closely as possible to real compilation, but have totally different outputs (documentation and formatted source code, respectively). Another use case is a customised compiler. Say you want to add a custom code generation phase after macro expansion, then creating a new tool should be easier than forking the compiler (and keeping it up to date as the compiler evolves).

I have gradually been trying to improve the API of librustc to make creating a drop-in tool easier to produce (many others have also helped improve these interfaces over the same time frame). It is now pretty simple to make a tool which is as close to rustc as you want it to be. In this tutorial I'll show how.

Note/warning: everything I talk about in this tutorial is internal API for rustc. It is all extremely unstable and likely to change often and in unpredictable ways. Maintaining a tool which uses these APIs will be non-trivial, although hopefully easier than maintaining one that does similar things without using them.

This tutorial starts with a very high level view of the rustc compilation process and of some of the code that drives compilation. Then I'll describe how that process can be customised. In the final section of the tutorial, I'll go through an example - stupid-stats - which shows how to build a drop-in tool.

Continue reading on GitHub...

http://featherweightmusings.blogspot.com/2015/02/creating-drop-in-replacement-for-rust.html

Air Mozilla: Mozilla Weekly Project Meeting

The Monday Project Meeting


https://air.mozilla.org/mozilla-weekly-project-meeting-20150223/

Andreas Gal: Search works differently than you may think

Search is the main way we all navigate the Web, but it works very differently than you may think. In this blog post I will try to explain how it worked in the past, why it works differently today and what role you play in the process.

The services you use for searching, like Google, Yahoo and Bing, are called search engines. The very name suggests that they go through a huge index of Web pages to find every one that contains the words you are searching for. 20 years ago search engines indeed worked this way. They would “crawl” the Web and index it, making the content available for text searches.

As the Web grew larger, searches would often find the same word or phrase on more and more pages. This was starting to make search results less and less useful because humans don’t like to read through huge lists to manually find the page that best matches their search. A search for the word “door” on Google, for example, gives you more than 1.9 billion results. It’s impractical, even impossible, for anyone to look through all of them to find the most relevant page.

To help navigate the ever growing Web, search engines introduced algorithms to rank results by their relevance. In 1996, two Stanford graduate students, Larry Page and Sergey Brin, discovered a way to use the information available on the Web itself to rank results. They called it PageRank.

Pages on the Web are connected by links. Each link contains anchor text that explains to readers why they should follow the link. The link itself points to another page that the author of the source page felt was relevant to the anchor text. Page and Brin discovered that they could rank results by analyzing the incoming links to a page and treating each one as a vote for its quality. A result is more likely to be relevant if many links point to it using anchor text that is similar to the search terms. Page and Brin founded a search engine company in 1998 to commercialize the idea: Google.
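The "links as votes" idea can be sketched as a small power iteration. This is a simplified textbook version, not Google's implementation: it ignores anchor text entirely, and the damping factor of 0.85, the iteration count, and the four-page toy web are assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration.

    `links` maps each page to the list of pages it links to.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline score.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Each outgoing link passes on an equal share of this page's rank.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical four-page web: three pages link to "doors".
toy_web = {
    "home": ["doors", "windows"],
    "windows": ["doors"],
    "blog": ["doors"],
    "doors": ["home"],
}
ranks = pagerank(toy_web)
print(max(ranks, key=ranks.get))
```

In this toy web the page with the most incoming links ends up with the highest score, and the page it links to inherits much of that score in turn. That inheritance is what makes incoming links worth manufacturing.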

PageRank worked so well that it completely changed the way people interact with search results. Because PageRank correctly offered the most relevant results at the top of the page, users started to pay less attention to anything below that. This also meant that pages that didn’t appear on top of the results page essentially started to become “invisible”: users stopped finding and visiting them.

To experience the “invisible Web” for yourself, head over to Google and try to look through more than just the first page of results. So few users ever wander beyond the first page that Google doesn’t even bother displaying all the 1.9 billion search results it claims to have found for “door.” Instead, the list just stops at page 63, about 100 million pages short of what you would have expected.

Despite reporting over 1.9 billion results, in reality Google’s search results for “door” are quite finite and end at page 63.

With publishers and online commerce sites competing for that small number of top search results, a new business was born: search engine optimization (or SEO). There are many different methods of SEO, but the principal goal is to game the PageRank algorithm in your favor by increasing the number of incoming links to your own page and tuning the anchor text. With sites competing for visitors — and billions in online revenue at stake — PageRank eventually lost this arms race. Today, links and anchor text are no longer useful to determine the most relevant results and, as a result, the importance of PageRank has dramatically decreased.

Search engines have since evolved to use machine learning to rank results. People perform 1.2 trillion searches a year on Google alone — that’s about 3 billion a day and 40,000 a second. Each search becomes part of this massive query stream as the search engine simultaneously “sees” what billions of people are searching for all over the world. For each search, it offers a range of results and remembers which one you considered most relevant. It then uses these past searches to learn what’s most relevant to the average user to provide the most relevant results for future searches.

Machine learning has made text search all but obsolete. Search engines can answer 90% or so of searches by looking at previous search terms and results. They no longer search the Web in most cases — they instead search past searches and respond based on the preferred result of previous users.
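As a rough illustration of "searching past searches," the sketch below records which result users picked for a query and uses those counts to order results for the next person who asks the same thing. The class name, the query normalization, and the example URLs are all hypothetical. Real search engines use far richer signals and models than a click counter.

```python
from collections import Counter, defaultdict


class ClickLog:
    """Remembers which result users chose for each query."""

    def __init__(self):
        # normalized query -> Counter of clicked URLs
        self._clicks = defaultdict(Counter)

    @staticmethod
    def _normalize(query):
        # Treat "Door", " door " and "DOOR" as the same query.
        return " ".join(query.lower().split())

    def record(self, query, clicked_url):
        """Store one user's choice for a query."""
        self._clicks[self._normalize(query)][clicked_url] += 1

    def rank(self, query, candidates):
        """Order candidates by how often past users chose them.

        sorted() is stable, so candidates nobody has clicked yet
        keep their original relative order.
        """
        counts = self._clicks.get(self._normalize(query), Counter())
        return sorted(candidates, key=lambda url: -counts[url])


log = ClickLog()
for url in ["doors.example/buy", "doors.example/buy", "band.example/the-doors"]:
    log.record("Door", url)

ranked = log.rank(
    "door",
    ["wiki.example/door", "band.example/the-doors", "doors.example/buy"],
)
unseen = log.rank("window", ["a.example", "b.example"])
```

Here `ranked` puts the most-clicked page first, and a query with no history (`unseen`) falls back to the order it was given, which is where a conventional text search would still be needed.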

This shift from PageRank to machine learning also changed your role in the process. Without your searches — and your choice of results — a search engine couldn’t learn and provide future answers to others. Every time you use a search engine, the search engine uses you to rank its results on a massive scale. That makes you its most important asset.

Filed under: Mozilla

http://andreasgal.com/2015/02/23/search-works-differently-than-you-may-think/

|

|

David Tenser: User Success – We’re hiring! |

Just a quick +1 to Roland’s plug for the Senior Firefox Community Support Lead:

- Ever loved a piece of software so much that you learned everything you could about it and helped others with it?

- Ever coordinated an online community? Especially one around supporting users?

- Ever measured and tweaked a website’s content so that more folks could find it and learn from it?

Got 2 out of 3 of the above?

Then work with me (since Firefox works closely with my area: Firefox for Android and in the future iOS via cloud services like Sync) and the rest of my colleagues on the fab Mozilla User Success team (especially my fantastic Firefox savvy colleagues over at User Advocacy).

And super extra bonus: you’ll also work with our fantastic community like all Mozilla employees AND Firefox product management, marketing and engineering.

Take a brief detour and head over to Roland’s blog to get a sense of one of the awesome people you’d get to work closely with in this exciting role (trust me, you’ll want to work with Roland!). After that, I hope you know what to do! :)

|

|

Ben Kelly: That Event Is So Fetch |

The Service Workers builds have been updated as of yesterday, February 22:

Notable contributions this week were:

- Josh Matthews landed Fetch Event support in Nightly. This is important, of course, because without the Fetch Event you cannot actually intercept any network requests with your Service Worker. | bug 1065216

- Catalin Badea landed more of the Service Worker API in Nightly, including the ability to communicate with the Service Worker using postMessage(). | bug 982726

- Nikhil Marathe landed some more of his spec implementations to handle unloading documents correctly and to treat activations atomically. | bug 1041340 | bug 1130065

- Andrea Marchesini landed fixes for FirefoxOS discovered by the team in Paris. | bug 1133242

- Jose Antonio Olivera Ortega contributed a work-in-progress patch to force Service Worker scripts to update when dom.serviceWorkers.test.enabled is set. | bug 1134329

- I landed my implementation of the Fetch Request and Response clone() methods. | bug 1073231

As always, please let us know if you run into any problems. Thank you for testing!

https://blog.wanderview.com/blog/2015/02/23/that-event-is-so-fetch/

|

|

Mozilla Release Management Team: Firefox 36 beta10 to rc |

For the RC build, we landed a few last-minute changes. We disabled a feature because of a last-minute issue (bug 1134606 in the changeset list), landed a compatibility fix for add-ons and, last but not least, landed some graphics crash fixes.

Note that an RC2 has been built from the same source code in order to tackle the AMD CPU bug (see comment #22).

- 11 changesets

- 32 files changed

- 220 insertions

- 48 deletions

| Extension | Occurrences |
|---|---|
| cpp | 6 |
| js | 5 |
| jsm | 2 |
| ini | 2 |
| xml | 1 |
| sh | 1 |
| json | 1 |
| hgtags | 1 |
| h | 1 |

| Module | Occurrences |
|---|---|
| mobile | 12 |
| gfx | 5 |
| browser | 5 |
| toolkit | 3 |
| dom | 2 |
| testing | 1 |
| parser | 1 |

List of changesets:

| Robert Strong | Bug 945192 - Followup to support Older SDKs in loaddlls.cpp. r=bbondy a=Sylvestre - cce919848572 |

| Armen Zambrano Gasparnian | Bug 1110286 - Pin mozharness to 2264bffd89ca instead of production. r=rail a=testing - 948a2c2e31d4 |

| Jared Wein | Bug 1115227 - Loop: Add part of the UITour PageID to the Hello tour URLs as a query parameter. r=MattN, a=sledru - 1a2baaf50371 |

| Boris Zbarsky | Bug 1134606 - Disable in Firefox 36 pending some loose ends being sorted out. r=sstamm, a=sledru - 521cf86d194b |

| Milan Sreckovic | Bug 1126918 - NewShSurfaceHandle can return null. Guard against it. r=jgilbert, a=sledru - 89cfa8ff9fc5 |

| Ryan VanderMeulen | Merge beta to m-r. a=merge - 2f2abd6ffebb |

| Matt Woodrow | Bug 1127925 - Lazily open shared handles in DXGITextureHostD3D11 to avoid holding references to textures that might not be used. r=jrmuizel, a=sledru - 47ec64cc562f |

| Rob Wu | Bug 1128478 - sdk/panel's show/hide events not emitted if contentScriptWhen != 'ready'. r=erikvold, a=sledru - c2a6bab25617 |

| Matt Woodrow | Bug 1128170 - Use UniquePtr for TextureClient KeepAlive objects to make sure we don't leak them. r=jrmuizel, a=sledru - 67d9db36737e |

| Hector Zhao | Bug 1129287 - Fix not rejecting partial name matches for plugin blocklist entries. r=gfritzsche, a=sledru - 7d4016a05dd3 |

| Ryan VanderMeulen | Merge beta to m-r. a=merge - a2ffa9047bf4 |

http://release.mozilla.org/statistics/36/2015/02/23/fx-36-b10-to-rc.html

|

|

Adam Lofting: The week ahead 23 Feb 2015 |

First, I’ll note that even taking the time to write these short ‘note to self’ type blog posts each week takes time and is harder to do than I expected. Like so many priorities, the long term important things often battle with the short term urgent things. And that’s in a culture where working open is more than just acceptable, it’s encouraged.

Anyway, I have some time this morning sitting in an airport to write this, and I have some time on a plane to catch up on some other reading and writing that hasn’t made it to the top of the todo list for a few weeks. I may even get to post a blog post or two in the near future.

This week, I have face-to-face time with lots of colleagues in Toronto. Which means a combination of planning, meetings, running some training sessions, and working on tasks where timezone parity is helpful. It’s also the design team work week, and though I’m too far gone from design work to contribute anything pretty, I’m looking forward to seeing their work and getting glimpses of the future Webmaker product. Most importantly maybe, for a week like this, I expect unexpected opportunities to arise.

One of my objectives this week is working with Ops to decide where my time is best spent this year to have the most impact, and to set my goals for the year. That will get us closer to a metrics strategy this year and improve on last year’s ‘reactive’ style of work.

If you’re following along for the exciting stories of my shed>to>office upgrades, I don’t have much to show today, but I’m building a new desk next and insulation is my new favourite thing. This photo shows the visible difference in heat loss after fitting my first square of insulation material to the roof.

http://feedproxy.google.com/~r/adamlofting/blog/~3/5JJYODjCysw/

|

|

The Mozilla Blog: MWC 2015: Experience the Latest Firefox OS Devices, Discover what Mozilla is Working on Next |

Preview TVs and phones powered by Firefox OS and demos such as an NFC payment prototype at the Mozilla booth. Hear Mozilla speakers discuss privacy, innovation for inclusion and the future of the internet.

Panasonic unveiled their new line of 4K Ultra HD TVs powered by Firefox OS at their convention in Frankfurt today. The Panasonic 2015 4K UHD (Ultra HD) LED VIERA TV, which will be shipping this spring, will also be showcased at Mozilla’s stand at Mobile World Congress 2015 in Barcelona. Like last year, Firefox OS will take its place in Hall 3, Stand 3C30, alongside major operators and device manufacturers.

In addition to the Panasonic TV and the latest Firefox OS smartphones announced, visitors have the opportunity to learn more about Mozilla’s innovation projects during talks at the “Fox Den” at Mozilla’s stand, Hall 3, Stand 3C30. Just one example from the demo program:

Mozilla, in collaboration with its partners at Deutsche Telekom Innovation Labs (centers in Silicon Valley and Berlin) and T-Mobile Poland, developed the design and implementation of Firefox OS’s NFC infrastructure to enable several applications including mobile payments, transportation services, door access and media sharing. The mobile wallet demo covering ‘MasterCard® Contactless’ technology, together with a few non-payment functionalities, will be showcased in “Fox Den” talks.

Visit www.firefoxos.com/mwc for the full list of topics and schedule of “Fox Den” talks.

Schedule of Events and Speaking Appearances

Hear from Mozilla executives on trending topics in mobile at the following sessions:

‘Digital Inclusion: Connecting an additional one billion people to the mobile internet’ Seminar

Executive Director of the Mozilla Foundation Mark Surman will join a seminar that will explore the barriers and opportunities relating to the growth of mobile connectivity in developing markets, particularly in rural areas.

Date: Monday, 2 March 12:00 – 13:30 CET

Location: GSMA Seminar Theatre CC1.1

‘Ensuring User-Centred Privacy in a Connected World’ Panel

Denelle Dixon-Thayer, SVP of business and legal affairs at Mozilla, will take part in a session that explores user-centric privacy in a connected world.

Date: Monday, 2 March 16:00 – 17:30 CET

Location: Hall 4, Auditorium 3

‘Innovation for Inclusion’ Keynote Panel

Mozilla Executive Chairwoman and Co-Founder Mitchell Baker will discuss how mobile will continue to empower individuals and societies.

Date: Tuesday, 3 March 11:15 – 12:45 CET

Location: Hall 4, Auditorium 1 (Main Conference Hall)

‘Connected Citizens, Managing Crisis’ Panel

Mark Surman, Executive Director of the Mozilla Foundation, will contribute to a panel on how mobile technology is playing an increasingly central role in shaping responses to some of the most critical humanitarian problems facing the global community today.

Date: Tuesday, 3 March 14:00 – 15:30 CET

Location: Hall 4, Auditorium 2

‘Defining the Future of the Internet’ Panel

Andreas Gal, CTO at Mozilla, will take part in a session that explores the future of the Internet, bringing together industry leaders at the forefront of the net neutrality debate.

Date: Wednesday, 4 March 15:15 – 16:15 CET

Location: Hall 4, Auditorium 5

More information:

- Please visit Mozilla and experience Firefox OS in Hall 3, Stand 3C30, at the Fira Gran Via, Barcelona from March 2-5, 2015

- To learn more about Mozilla at MWC, please visit: www.firefoxos.com/mwc

- For further details or to schedule a meeting at the show please contact press@mozilla.com

- For additional resources, such as high-resolution images and b-roll video, visit: https://blog.mozilla.org/press

|

|

Nick Thomas: FileMerge bug |

FileMerge is a nice diff and merge tool for OS X, and I use it a lot for larger code reviews where lots of context is helpful. It also supports intra-line diff, which comes in pretty handy.

However, in recent releases, at least in v2.8, which comes as part of Xcode 6.1, it assumes you want to be merging and shows the bottom merge pane. Adjusting it away doesn’t persist to the next time you use it, *gnash gnash gnash*.

The solution is to open a terminal and offer this incantation:

defaults write com.apple.FileMerge MergeHeight 0

Unfortunately, if you use the merge pane then you’ll have to do that again. Dear Apple, pls fix!

|

|