You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.


Daniel Stenberg: Bug finding is slow in spite of many eyeballs

“given enough eyeballs, all bugs are shallow”

The saying (also known as Linus’ law) doesn’t say that the bugs are found fast, and neither does it say who finds them. My version of the law would be much more cynical, something like: “eventually, bugs are found”, emphasizing the ‘eventually’ part.

(Jim Zemlin apparently said the other day that it can work the Linus way, if we just fund the eyeballs to watch. I don’t think that’s how the saying was originally intended.)

In reality, many, many bugs are never found by all those “eyeballs” in the first place. They are found when someone trips over a problem and is annoyed enough to go searching for the culprit, the reason for the malfunction. Even if the code is open and has been around for years, that doesn’t necessarily mean that any of the people who casually read the code or single-stepped over it will ever discover the flaws in its logic. In the last few years, several world-shaking bugs turned out to have existed for decades before they were discovered, in code that had been read by lots of people, over and over.

So sure, in the end the bugs were found and fixed. I would argue though that it wasn’t because the projects or problems were given enough eyeballs. Some of those problems were found in extremely popular and widely used projects. They were found because eventually someone accidentally ran into a problem and started digging for the reason.

Time until discovery in the curl project

I decided to see how it looks in the curl project, a project near and dear to me. To take it up a notch, we’ll look only at security flaws, not only because they are probably the most important bugs we’ve had, but also because they are the ones with the most carefully recorded metadata: when they were reported, when they were introduced and when they were fixed.

We have no fewer than 30 logged vulnerabilities for curl and libcurl so far throughout our history, spread over the past 16 years. I’ve spent some time going through them to see if there’s a pattern, or something that sticks out, that we should pay extra attention to in order to improve our processes and code. While doing this, I gathered some random info about what we’ve found so far.

On average, each security problem had been present in the code for 2100 days when fixed; that’s more than five and a half years. On average! That means they survived about 30 releases each. If bugs truly are shallow, it is still certainly not a fast process.
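The "present in the code" figure is the time between the date a flaw was introduced and the date it was fixed, averaged over all flaws. A small JavaScript sketch of that arithmetic; the dates below are placeholders for illustration, not curl's actual vulnerability records.

```javascript
// Placeholder data: [date introduced, date fixed] for each flaw.
// These are NOT curl's real records; substitute the real metadata.
const flaws = [
  ['2004-01-01', '2009-10-01'],
  ['2008-06-15', '2014-03-26'],
  ['2011-02-01', '2015-01-08'],
];

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Whole days between two ISO dates (date-only strings parse as UTC midnight).
function daysBetween(from, to) {
  return Math.round((Date.parse(to) - Date.parse(from)) / MS_PER_DAY);
}

// Mean number of days each flaw stayed in the code.
function averageDaysPresent(list) {
  const total = list.reduce(
    (sum, [introduced, fixed]) => sum + daysBetween(introduced, fixed),
    0
  );
  return total / list.length;
}

const avg = averageDaysPresent(flaws);
console.log(`average: ${avg.toFixed(0)} days (${(avg / 365.25).toFixed(2)} years)`);

// The figure quoted in the text: 2100 days expressed in years.
console.log((2100 / 365.25).toFixed(2)); // 5.75
```

The same `daysBetween` helper also works for the report-to-publication delay discussed further down.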

Perhaps you think these 30 bugs are really tricky, deeply hidden and complicated logic monsters that would explain the time they took to get found? Nope. I would say that every single one of them is pretty obvious once you spot it, and none of them takes a reviewer very long to understand.

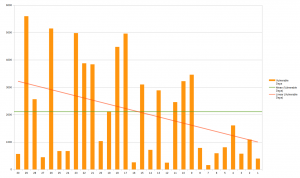

This first graph shows how long, in days, each of the 30 problems remained in the code. The leftmost bar is the most recent flaw and the rightmost bar the oldest vulnerability. The red line shows the trend and the green line the average.

The trend is clearly that bugs stay around longer before they are found, but since the project is also growing older all the time, that comes somewhat naturally and isn’t necessarily a sign that we are getting worse at finding them. The average age of flaws is growing more slowly than the project itself.

Reports per year

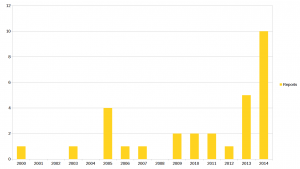

How have the reports been distributed over the years? The number of lines of code has grown fairly linearly, yet the reports were submitted like this (this time the oldest year is on the left and the most recent on the right):

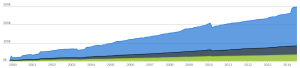

Compare that to the chart below of lines of code added to the project (from Open Hub, showing blank lines in green, comments in grey and code in blue):

We received twice as many security reports in 2014 as in 2013, and we got half of all our reports during the last two years. Either we have gotten more eyes on the code, or perhaps users pay more attention to problems or are generally more likely to see the security angle of a problem. It is hard to say, but the frequency of security reports has clearly increased a lot lately. (Note that I count the year of the report here, not the year we announced the particular problem, as announcements sometimes happened the following year if the report came late in the year.)

On average, we publish information about a flaw 19 days after it was reported to us. We seem to have become slightly worse at this over time: during the last two years, the average has been 25 days.

Did people find the problems by reading code?

In general, no. Sure, people read code, but the typical pattern seems to be that people first run into some sort of problem, then dive in to investigate its root cause, and eventually spot or learn about the security problem.

(This conclusion is based on my understanding of how people have reported the problems; I have not explicitly asked them about these details.)

Common patterns among the problems?

I went over the bugs and tagged each flaw with a bunch of descriptive keywords, and then I wrote a script to see how frequently the keywords are used. This turned out to describe the flaws more than how they ended up in the code. Out of the 30 flaws, the 10 most used keywords ended up like this, showing the number of flaws and the keyword:

9 TLS

9 HTTP

8 cert-check

8 buffer-overflow

6 info-leak

3 URL-parsing

3 openssl

3 NTLM

3 http-headers

3 cookie

I don’t think it is surprising that TLS, HTTP or certificate checking are common areas of security problems. TLS and certs are complicated, and HTTP is huge and not easy to get right. curl is mostly C, so buffer overflows are a mistake that sneaks in, and I don’t think the fact that they account for 27% of the problems (8 of 30) tells us that this is a problem we need to handle better. Also, only 2 of the last 15 flaws (13%) were buffer overflows.
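A keyword tally like the one above takes only a few lines. This is a sketch, not the script used for the post: the tagged flaws are placeholders, and each keyword is counted at most once per flaw. The `share` helper reproduces the rounded percentages quoted in the text.

```javascript
// Placeholder tags; the real input would be one keyword list per flaw.
const taggedFlaws = [
  ['TLS', 'cert-check', 'openssl'],
  ['HTTP', 'buffer-overflow'],
  ['HTTP', 'cookie', 'info-leak'],
  ['TLS', 'cert-check'],
];

// Count how many flaws carry each keyword; most frequent first,
// ties broken by keyword so the output order is deterministic.
function topKeywords(flaws, n) {
  const counts = new Map();
  for (const tags of flaws) {
    for (const tag of new Set(tags)) {
      counts.set(tag, (counts.get(tag) || 0) + 1);
    }
  }
  return [...counts.entries()]
    .sort((a, b) => b[1] - a[1] || (a[0] < b[0] ? -1 : a[0] > b[0] ? 1 : 0))
    .slice(0, n);
}

for (const [tag, count] of topKeywords(taggedFlaws, 10)) {
  console.log(count, tag);
}

// Rounded percentages: 8 of 30 flaws, and 2 of the last 15.
const share = (part, total) => Math.round((100 * part) / total);
console.log(share(8, 30) + '%', share(2, 15) + '%'); // 27% 13%
```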

The discussion following this blog post is on Hacker News.

http://daniel.haxx.se/blog/2015/02/23/bug-finding-is-slow-in-spite-of-many-eyeballs/


Nick Desaulniers: Hidden in Plain Sight - Public Key Crypto

How is it possible for us to communicate securely when there’s the possibility of a third party eavesdropping on us? How can we communicate private secrets through public channels? How do such techniques enable us to bank online and carry out other sensitive transactions on the Internet while trusting numerous relays? In this post, I hope to explain public key cryptography, with actual code examples, so that the concepts are a little more concrete.

First, please check out this excellent video on public key crypto:

Hopefully that explains the gist of the technique, but what might it actually look like in code? Let’s take a look at example code in JavaScript using the Node.js crypto module. We’ll later compare the upcoming WebCrypto API and look at a TLS handshake.

Meet Alice. Meet Bob. Meet Eve. Alice would like to send Bob a secret message. Alice would not like Eve to view the message. Assume Eve can intercept, but not tamper with, everything Alice and Bob try to share with each other.

Alice chooses a modular exponential key group, such as modp4, then creates a public and private key.


A modular exponential key group is simply a “sufficiently large” prime number paired with a generator (a specific number), such as those defined in RFC 2412 and RFC 3526.

The public key is meant to be shared; it is ok for Eve to know the public key. The private key must never be shared, not even with the person you are communicating with.
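A minimal sketch of Alice's first step, assuming Node.js's built-in `crypto` module. `crypto.getDiffieHellman()` only accepts named groups: `modp14` through `modp18` from RFC 3526, plus the older, smaller `modp1`, `modp2` and `modp5`. This sketch assumes `modp14`, a 2048-bit prime; the variable names are illustrative.

```javascript
// Sketch: Alice picks a named MODP group and generates her key pair.
const crypto = require('crypto');

// Assumed group: 'modp14', a 2048-bit prime with generator 2 (RFC 3526).
const alice = crypto.getDiffieHellman('modp14');

// generateKeys() creates the private key and returns the public key.
const alicePublicKey = alice.generateKeys();   // Buffer, fine to publish
const alicePrivateKey = alice.getPrivateKey(); // Buffer, never shared

// The group itself is nothing more than a large prime and a generator.
const prime = alice.getPrime();
const generator = alice.getGenerator();

console.log('prime size (bits):', prime.length * 8);
console.log('generator:', generator.toString('hex'));
console.log('public key (first bytes):', alicePublicKey.toString('hex').slice(0, 16));
```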

Alice then shares her public key and group with Bob.


Bob now creates a public and private key pair with the same group as Alice.


Bob shares his public key with Alice.


Alice and Bob now compute a shared secret.


Alice and Bob have now derived a shared secret from each other’s public keys.
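The whole exchange fits in a few lines of Node.js. This is a sketch under the same assumptions (Node's built-in `crypto` module, group `modp14`); "sending" a value is simply passing it between variables, standing in for the public channel.

```javascript
const crypto = require('crypto');

// Alice picks a named group and makes her key pair (group name assumed).
const group = 'modp14';
const alice = crypto.getDiffieHellman(group);
const alicePublicKey = alice.generateKeys();

// Alice sends { group, alicePublicKey } to Bob over the public channel.
// Bob builds his own key pair in the same group.
const bob = crypto.getDiffieHellman(group);
const bobPublicKey = bob.generateKeys();

// Bob sends bobPublicKey back. Each side combines its own private key
// with the other side's public key.
const aliceSecret = alice.computeSecret(bobPublicKey);
const bobSecret = bob.computeSecret(alicePublicKey);

console.log('secrets match:', aliceSecret.equals(bobSecret));
```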


Meanwhile, Eve has intercepted Alice and Bob’s public keys and group. Eve tries to compute the same secret.


Eve’s result does not match Alice and Bob’s secret, because that secret depends on both Alice’s and Bob’s private keys, neither of which Eve has. Eve may not realize her secret is not the same as Alice and Bob’s until later.
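Eve's attempt can be sketched the same way (same assumptions: Node's `crypto` module, group `modp14`). With only the group and the two public keys, the best she can do is generate her own key pair and combine it with an intercepted public key, which yields a value unrelated to Alice and Bob's secret.

```javascript
const crypto = require('crypto');

const group = 'modp14'; // assumed group name
const alice = crypto.getDiffieHellman(group);
const alicePublicKey = alice.generateKeys();
const bob = crypto.getDiffieHellman(group);
const bobPublicKey = bob.generateKeys();
const sharedSecret = alice.computeSecret(bobPublicKey);

// Eve intercepted the group, alicePublicKey and bobPublicKey; no private keys.
const eve = crypto.getDiffieHellman(group);
eve.generateKeys(); // Eve's own, unrelated key pair

// Her private key combined with either public key gives g^(ae) or g^(be)
// mod p, not the g^(ab) mod p that Alice and Bob share.
const eveWithAlice = eve.computeSecret(alicePublicKey);
const eveWithBob = eve.computeSecret(bobPublicKey);

console.log('Eve matches (via Alice):', eveWithAlice.equals(sharedSecret));
console.log('Eve matches (via Bob):', eveWithBob.equals(sharedSecret));
```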

That was asymmetric (public key) cryptography, where each party uses a different key pair. The shared secret may now be used for symmetric encryption, where both sides use the same key.

Alice creates a symmetric block cypher using her favorite algorithm, a hash of their secret as a key, and random bytes as an initialization vector.


Alice then uses her cypher to encrypt her message to Bob.
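A sketch of Alice's symmetric step with Node's `crypto` module. The algorithms are left to Alice's taste; this sketch assumes AES-256 in CBC mode (`aes-256-cbc`) and SHA-256 as the hash that turns the shared secret into a 32-byte key, and the message text is a placeholder. A production design would use a proper key derivation function (such as HKDF) and an authenticated mode (such as AES-GCM); plain SHA-256 and CBC keep the sketch close to the description.

```javascript
const crypto = require('crypto');

// Re-create a Diffie-Hellman shared secret (assumed group 'modp14').
const alice = crypto.getDiffieHellman('modp14');
const bob = crypto.getDiffieHellman('modp14');
alice.generateKeys();
const bobPublicKey = bob.generateKeys();
const sharedSecret = alice.computeSecret(bobPublicKey);

// Assumed algorithm choices.
const cypher = 'aes-256-cbc';
const hash = 'sha256';

// Key: SHA-256 of the secret, exactly the 32 bytes AES-256 needs.
const key = crypto.createHash(hash).update(sharedSecret).digest();
// IV: 16 random bytes (one AES block), fresh for every message.
const iv = crypto.randomBytes(16);

// Encrypt the placeholder message; output is hex-encoded cypher text.
const message = 'Meet me at the usual place at noon.';
const cipher = crypto.createCipheriv(cypher, key, iv);
const cypherText = cipher.update(message, 'utf8', 'hex') + cipher.final('hex');

console.log('cypher text:', cypherText);
```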


Alice then sends the cypher text, cypher, and hash to Bob.


Bob now constructs a symmetric block cypher using the algorithm from Alice, and a hash of their shared secret.


Bob now decyphers the encrypted message (cypher text) from Alice.
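Putting both ends together gives a runnable round trip. This sketch assumes that "cypher" and "hash" in what Alice sends are algorithm names, and that the random IV travels with them (Bob needs it, and it need not be secret); the key itself is never sent. Group `modp14` and the message text are assumptions.

```javascript
const crypto = require('crypto');

// Key exchange (assumed group 'modp14').
const alice = crypto.getDiffieHellman('modp14');
const bob = crypto.getDiffieHellman('modp14');
const alicePublicKey = alice.generateKeys();
const bobPublicKey = bob.generateKeys();

// Alice's side: derive a key from her copy of the secret and encrypt.
function aliceSend(message) {
  const cypher = 'aes-256-cbc';
  const hash = 'sha256';
  const key = crypto.createHash(hash).update(alice.computeSecret(bobPublicKey)).digest();
  const iv = crypto.randomBytes(16);
  const c = crypto.createCipheriv(cypher, key, iv);
  const cypherText = c.update(message, 'utf8', 'hex') + c.final('hex');
  // Everything in this packet may be seen by Eve.
  return { cypherText, cypher, hash, iv: iv.toString('hex') };
}

// Bob's side: derive the same key from his own copy of the secret.
function bobReceive(packet) {
  const key = crypto.createHash(packet.hash).update(bob.computeSecret(alicePublicKey)).digest();
  const d = crypto.createDecipheriv(packet.cypher, key, Buffer.from(packet.iv, 'hex'));
  return d.update(packet.cypherText, 'hex', 'utf8') + d.final('utf8');
}

const packet = aliceSend('Meet me at the usual place at noon.'); // placeholder text
console.log('Bob reads:', bobReceive(packet));
```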


Eve has intercepted the cypher text, cypher, hash, and tries to decrypt it.
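Eve's decryption attempt, sketched under the same assumptions (Node's `crypto` module, `modp14`, `aes-256-cbc`, `sha256`, a placeholder message). Her only candidate key comes from a secret computed with her own private key, so decryption either fails outright (with CBC padding this usually surfaces as a "bad decrypt" error) or produces garbage.

```javascript
const crypto = require('crypto');

const group = 'modp14'; // assumed group name
const alice = crypto.getDiffieHellman(group);
const bob = crypto.getDiffieHellman(group);
const alicePublicKey = alice.generateKeys();
const bobPublicKey = bob.generateKeys();

// Alice encrypts with a key derived from the real shared secret.
const message = 'Meet me at the usual place at noon.'; // placeholder
const key = crypto.createHash('sha256').update(alice.computeSecret(bobPublicKey)).digest();
const iv = crypto.randomBytes(16);
const enc = crypto.createCipheriv('aes-256-cbc', key, iv);
const packet = {
  cypherText: enc.update(message, 'utf8', 'hex') + enc.final('hex'),
  cypher: 'aes-256-cbc',
  hash: 'sha256',
  iv: iv.toString('hex'),
};

// Eve has the packet and both public keys, but only her own private key.
const eve = crypto.getDiffieHellman(group);
eve.generateKeys();
const eveKey = crypto.createHash(packet.hash).update(eve.computeSecret(alicePublicKey)).digest();

// Returns the decrypted text, or null if decryption throws.
function eveTry() {
  try {
    const d = crypto.createDecipheriv(packet.cypher, eveKey, Buffer.from(packet.iv, 'hex'));
    return d.update(packet.cypherText, 'hex', 'utf8') + d.final('utf8');
  } catch (err) {
    return null; // typically "bad decrypt": the padding check failed
  }
}

const result = eveTry();
console.log(result === null ? 'Eve: decryption failed' : 'Eve reads garbage: ' + JSON.stringify(result));
```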


http://nickdesaulniers.github.io/blog/2015/02/22/public-key-crypto-code-example/


Rizky Ariestiyansyah: Github Pages Auto Publication Based on Master Git Commits

A simple configuration to automatically publish the git commits on master to gh-pages: just add a few lines of git configuration to your .git/config file. Here is the code to mirror the master branch to gh-pages.

http://oonlab.com/github-pages-auto-publication-based-on-master-git-commits


Nick Fitzgerald: Memory Management In Oxischeme

I've recently been playing with the Rust programming language, and what better way to learn a language than to implement a second language in the language one wishes to learn?! It almost goes without saying that this second language being implemented should be Scheme. Thus, Oxischeme was born.

Why implement Scheme instead of some other language? Scheme is a dialect of LISP and inherits the simple parenthesized list syntax of its LISP-y origins, called s-expressions. Thus, writing a parser for Scheme syntax is rather easy compared to doing the same for most other languages' syntax. Furthermore, Scheme's semantics are also minimal. It is a small language designed for teaching, and writing a metacircular interpreter (i.e., a Scheme interpreter written in Scheme itself) takes only a few handfuls of lines of code. Finally, Scheme is a beautiful language: its design is rooted in the elegant
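To illustrate why parsing s-expressions is comparatively easy, here is a minimal reader sketched in JavaScript rather than Rust; it is not Oxischeme's parser. It handles only parentheses, numbers and symbols, and ignores strings, quoting and comments.

```javascript
// Split source text into '(' and ')' tokens plus atoms.
function tokenize(source) {
  return source
    .replace(/\(/g, ' ( ')
    .replace(/\)/g, ' ) ')
    .trim()
    .split(/\s+/)
    .filter(Boolean);
}

// Consume tokens recursively: a list becomes a nested JS array,
// a numeric atom becomes a number, anything else stays a symbol string.
function readFrom(tokens) {
  if (tokens.length === 0) throw new SyntaxError('unexpected end of input');
  const token = tokens.shift();
  if (token === '(') {
    const list = [];
    while (tokens[0] !== ')') {
      if (tokens.length === 0) throw new SyntaxError('missing )');
      list.push(readFrom(tokens));
    }
    tokens.shift(); // drop the closing ')'
    return list;
  }
  if (token === ')') throw new SyntaxError('unexpected )');
  const n = Number(token);
  return Number.isNaN(n) ? token : n;
}

function read(source) {
  return readFrom(tokenize(source));
}

console.log(JSON.stringify(read('(define (square x) (* x x))')));
// ["define",["square","x"],["*","x","x"]]
```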


Tantek Celik: November Project Book Survey Answers #NP_Book

The November Project recently wrapped up a survey for a book project. I had the tab open and finally submitted my answers, but figured why not post them on my own site as well. Some of this I've blogged about before, some of it is new.

The basics

- Tribe Location

- San Francisco

- Member Name

- Tantek Celik

- Date of Birth

- March 11th

- Profession

- Internet

- Date and Location of First NP Workout

- 2013-10-30 Alamo Square, San Francisco, CA, USA

- Contact Info

- tantek.com

Pre-NP fitness

Describe your pre-NP fitness background and routine.

- 2011 started mixed running/jogging/walking every week, short distances 0.5-3 miles.

- 2008 started bicycling regularly around SF

- 2007 started rock climbing, eventually 3x a week

- 1998 started regular yoga and pilates as part of recovering from a back injury

First hear about NP

How did you first hear about the group?

I saw chalkmarks in Golden Gate Park for "NovemberProject 6:30am Kezar!" and thought what the heck is that? 6:30am? Sounds crazy. More: Learning About NP

First NP workout

Recount your first workout, along with the vibe, and how they may have differed from your expectations.

My first NovemberProject workout was a 2013 NPSF PR Wednesday workout, and it was the hardest physical workout I'd ever done. However before it destroyed me, I held my hand up as a newbie, and was warmly welcomed and hugged. My first NP made a strong positive impression. More: My First Year at NP: Newbie

Meeting BG and Bojan

For those who've crossed paths, what was your first impression of BG? Of Bojan?

I first met BG and Bojan at a traverbal Boston destination deck workout. BG and Bojan were (are!) larger than life, with voices to match. Yet their booming matched with self-deprecating humor got everyone laughing and feeling like they belonged.

First Harvard Stadium workout

Boston Only: If you had a particularly memorable newbie meeting and virgin workout at Harvard Stadium, I'd like to know about it for a possible separate section. If so, please describe.

My first Boston Harvard Stadium workout was one to remember. Two days after my traverbal Boston destination deck workout, I joined the newbie orientation since I hadn't done the stadium before. I couldn't believe how many newbies there were. By the time we got to the starting steps I was ready to bolt. I completed 26 sections, far more than I thought I would.

Elevated my fitness

How has NP elevated your fitness level? How have you measured this?

NP has made me a lot faster. After a little over 6 months of NPSF, I cut over 16 minutes in my Bay To Breakers 12km personal record.

Affected personal life

Give an example of how NP has affected your personal life and/or helped you overcome a challenge.

NP turned me from a night person to a morning person, with different activities, and different people. NP inspired me to push myself to overcome my inability to run hills, one house at a time until I could run half a block uphill, then I started running NPSF hills. More: My First Year at NP: Scared of Hills

Impacted relationship with my city

How has NP impacted your relationship with your city?

I would often run into NPSF regulars on my runs to and from the workout, so I teamed up with a couple of them and started an unofficial "rungang". We posted times and corners of our running routes, including to hills. NPSF founder Laura challenged our rungang to run ~4 miles (more than halfway across San Francisco) south to a destination hills workout at Bernal Heights and a few of us did. After similar pre-workout runs North to the Marina, and East to the Embarcadero, I feel like I can confidently run to anywhere in the city, which is an amazing feeling.

Why rapid traction?

Why do you think NP has gained such traction so rapidly?

Two words: community positivity. Yes there's a workout too, but there are lots of workout groups. What makes NP different (beyond that it's free), are the values of community and barrier-breaking positivity that the leaders instill into every single workout. More: My First Year at NP: Positive Community — Just Show Up

Most memorable moment

Describe your most memorable workout or a quintessential NP moment.

Catching the positivity award when it was thrown at me across half of NPSF.

Weirdest thing

Weirdest thing about NP?

That so many people get up before sunrise, never mind in sub-freezing temperatures in many cities, to go to a workout. Describe that to anyone who isn't in NP, and it sounds beyond weird.

NP and regular life

How has NP bled into your "regular" life? (Do you inadvertently go in for a hug when meeting a new client? Do you drop F-bombs at inopportune times? Have you gone from a cubicle brooder to the meeting goofball? Are you kinder to strangers?)

I was already a bit of a hugger, but NP has taught me to better recognize when people might quietly want (or be ok with) a hug, even outside of NP. #huglife

The Positivity Award

If you've ever won the Positivity Award, please describe that moment and what it meant to you.

It's hard to describe. I certainly was not expecting it. I couldn't believe how excited people were that I was getting it. There was a brief moment of fear when Braden tossed it at me over dozens of my friends, all the sound suddenly muted while I watched it flying, hands outstretched. Caught it solidly with both hands, and I could hear again. It was a beautiful day, the sun had just risen, and I could see everyone's smiling faces. More than the award itself, it meant a lot to me to see the joy in people's faces.

Non-NP friends and family

What do your non-NP friends and family think of your involvement?

My family is incredibly supportive and ecstatic with my increased fitness. My non-NP friends are anywhere from curious (at best), to wary or downright worried that it's a cult, which they only half-jokingly admit.

NP in one word

Describe NP in one word.

Community

Additional Thoughts

Additional thoughts? Include them here.

You can follow my additional thoughts on NP, fitness, and other things on my site & blog: tantek.com.


Cameron Kaiser: Biggus diskus (plus: 31.5.0 and how to superphish in your copious spare time)

One of the many great advances that Mac OS 9 had over the later operating system was the extremely flexible (and persistent!) RAM disk feature, which I use on almost all of my OS 9 systems to this day as a cache store for Classilla and temporary work area. It's not just for laptops!

While OS X can configure and use RAM disks, of course, it's not as nicely integrated as the RAM Disk in Classic is and it isn't natively persistent, though the very nice Esperance DV prefpane comes pretty close to duplicating the earlier functionality. Esperance will let you create a RAM disk up to 2GB in size, which for most typical uses of a transient RAM disk (cache, scratch volume) would seem to be more than enough, and can back it up to disk when you exit. But there are some heavy duty tasks that 2GB just isn't enough for -- what if you, say, wanted to compile a PowerPC fork of Firefox in one, he asked nonchalantly, picking a purpose at random not at all intended to further this blog post?

The 2GB cap actually originates from two specific technical limitations. The first applies to G3 and G4 systems: they can't have more than 2GB total physical RAM anyway. Although OS X RAM disks are "sparse" and only actually occupy the amount of RAM needed to store their contents, if you filled up a RAM disk with 2GB of data even on a 2GB-equipped MDD G4 you'd start spilling memory pages to the real hard disk and thrashing so badly you'd be worse off than if you had just used the hard disk in the first place. The second limit applies to G5 systems too, even in Leopard -- the RAM disk is served by /System/Library/PrivateFrameworks/DiskImages.framework/Resources/diskimages-helper, a 32-bit process limited to a 4GB address space minus executable code and mapped-in libraries (it didn't become 64-bit until Snow Leopard). In practice this leaves exactly 4629672 512-byte disk blocks, or approximately 2.26GB, as the largest possible standalone RAM disk image on PowerPC. A full single-architecture build of TenFourFox takes about 6.5GB. Poop.
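The block count converts to sizes as follows. A quick JavaScript check using only numbers from the text: `ram://4629672` asks for 4629672 blocks of 512 bytes, and the array uses four such images.

```javascript
// Numbers from the text: 4629672 blocks of 512 bytes per RAM disk image.
const BLOCK_SIZE = 512;
const BLOCKS_PER_IMAGE = 4629672;
const IMAGES = 4;

const bytesPerImage = BLOCK_SIZE * BLOCKS_PER_IMAGE;

// Decimal and binary units for the same byte count.
const GB = 1e9;
const GiB = 2 ** 30;
const MiB = 2 ** 20;

console.log('bytes per image:', bytesPerImage);
console.log('per image:', (bytesPerImage / GB).toFixed(2), 'GB =',
  (bytesPerImage / GiB).toFixed(2), 'GiB =', Math.round(bytesPerImage / MiB), 'MiB');
console.log('four images:', ((IMAGES * bytesPerImage) / GiB).toFixed(2), 'GiB');
// For comparison, the whole 32-bit address space:
console.log('32-bit address space:', 2 ** 32 / GiB, 'GiB');
```

Depending on the unit, one image is about 2.37 GB (decimal) or 2.21 GiB (about 2,261 MiB), and four of them come to about 8.83 GiB.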

It dawned on me during one of my careful toilet thinking sessions that the way awound, er, around this pwobproblem was a speech pathology wefewwal to RAID volumes together. I am chagrined that others had independently come up with this idea before, but let's press on anyway. At this point I'm assuming you're going to do this on a G5, because doing this on a G4 (or, egad, G3) would be absolutely nuts, and that your G5 has at least 8GB of RAM. The performance improvement we can expect depends on how the RAM disk is constructed (10.4 gives me the choices of concatenated, i.e., you move from component volume process to component volume process as they fill up, or striped, i.e., the component volume processes are interleaved [RAID 0]), and how much the tasks being performed on it are limited by disk access time. Building TenFourFox is admittedly a rather CPU-bound task, but there is a non-trivial amount of disk access, so let's see how we go.

Since I need at least 6.5GB, I decided the easiest way to handle this was 4 2+GB images (roughly 8.3GB all told). Obviously, the 8GB of RAM I had in my Quad G5 wasn't going to be enough, so (an order to MemoryX and) a couple days later I had a 16GB memory kit (8 x 2GB) at my doorstep for installation. (As an aside, this means my quad is now pretty much maxed out: between the 16GB of RAM and the Quadro FX 4500, it's now the most powerful configuration of the most powerful Power Mac Apple ever made. That's the same kind of sheer bloodymindedness that puts 256MB of RAM into a Quadra 950.)

Now to configure the RAM disk array. I ripped off a script from someone on Mac OS X Hints and modified it to be somewhat more performant. Here it is (it's a shell script you run in the Terminal, or you could use Platypus or something to make it an app; works on 10.4 and 10.5):

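```sh
% cat ~/bin/ramdisk
#!/bin/sh
# Do nothing if the array is already mounted.
/bin/test -e /Volumes/BigRAM && exit

# Create four RAM disks (4629672 512-byte blocks each) in parallel
# and erase each one as an HFS+ volume named r1 to r4.
diskutil erasevolume HFS+ r1 \
    `hdiutil attach -nomount ram://4629672` &
diskutil erasevolume HFS+ r2 \
    `hdiutil attach -nomount ram://4629672` &
diskutil erasevolume HFS+ r3 \
    `hdiutil attach -nomount ram://4629672` &
diskutil erasevolume HFS+ r4 \
    `hdiutil attach -nomount ram://4629672` &
wait

# Combine the four volumes into one striped (RAID 0) array named BigRAM.
diskutil createRAID stripe BigRAM HFS+ \
    /Volumes/r1 /Volumes/r2 /Volumes/r3 /Volumes/r4
```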

Notice that I'm using stripe here -- you would substitute concat for stripe above if you wanted that mode, but read on first before you do that. Open Disk Utility prior to starting the script and watch the side pane as it runs if you want to understand what it's doing. You'll see the component volume processes start, reconfigure themselves, get aggregated, and then the main array come up. It's sort of a nerdily beautiful disk image ballet.

One complication, however, is you can't simply unmount the array and expect the component RAM volumes to go away by themselves; instead, you have to go seek and kill the component volumes first and then the array will go away by itself. If you fail to do that, you'll run out of memory verrrrry quickly because the RAM will not be reclaimed! Here's a script for that too. I haven't tested it on 10.5, but I don't see why it wouldn't work there either.

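```sh
% cat ~/bin/noramdisk
#!/bin/sh
# Do nothing if the array isn't mounted.
/bin/test -e /Volumes/BigRAM || exit

# Unmount the array so its members can be removed.
diskutil unmountDisk /Volumes/BigRAM

# Extract the backing diskN device of each member from the RAID status
# listing and eject it; once all members are gone, the array goes away.
diskutil checkRAID BigRAM | tail -5 | head -4 | \
    cut -c 3-10 | grep -v 'Unknown' | \
    sed 's/s3//' | xargs -n 1 diskutil eject
```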

This script needs a little explanation. What it does is unmount the RAM disk array so it can be modified, then goes through the list of its component processes, isolates the diskn that backs them and ejects those. When all the disk array's components are gone, OS X removes the array, and that's it. Naturally shutting down or restarting will also wipe the array away too.

(If you want to use these scripts for a different sized array, adjust the number of diskutil erasevolume lines in the mounter script, and make sure the last line has the right number of images [like /Volumes/r1 /Volumes/r2 by themselves for a 2-image array]. In the unmounter script, change the tail and head parameters to 1+images and images respectively [e.g., tail -3 | head -2 for a 2-image array].)

Since downloading the source code from Mozilla is network-bound (especially on my network), I just dumped it to the hard disk, and patched it on the disk as well so a problem with the array would not require downloading and patching everything again. Once that was done, I made a copy on the RAM disk with hg clone esr31g /Volumes/BigRAM/esr31g and started the build. My hard disk, for comparison, is a 7200rpm 64MB buffer Western Digital SATA drive; remember that all PowerPC OS X-compatible controllers only support SATA I. Here are the timings, with the Quad G5 in Highest performance mode:

hard disk: 2 hours 46 minutes

concatenated: 2 hours 15 minutes (18.7% improvement)

striped: 2 hours 8 minutes (22.9% improvement)
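The improvement figures are reductions relative to the hard-disk build time. A quick JavaScript check with the timings above (converted to minutes) reproduces them; the striping-versus-concatenation delta comes out at 5.2% when measured against the concatenated time.

```javascript
// Build times from the text, converted to minutes.
const minutes = (h, m) => h * 60 + m;
const hardDisk = minutes(2, 46);
const concatenated = minutes(2, 15);
const striped = minutes(2, 8);

// Percentage reduction in build time relative to a baseline.
const improvement = (baseline, time) => (100 * (baseline - time)) / baseline;

console.log('concatenated:', improvement(hardDisk, concatenated).toFixed(1) + '%'); // 18.7%
console.log('striped:', improvement(hardDisk, striped).toFixed(1) + '%'); // 22.9%
console.log('striped vs concatenated:', improvement(concatenated, striped).toFixed(1) + '%'); // 5.2%
```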

Considering how much of this is limited by the speed of the processors, this is a rather nice boost, and I bet it will be even faster with unified builds in 38ESR (these are somewhat more disk-bound, particularly during linking). Since I've just saved almost two hours of build time over all four CPU builds, this is the way I intend to build TenFourFox in the future.

The 5.2% delta observed here between striping and concatenation doesn't look very large, but it is statistically significant, and actually the difference is larger than this test would indicate -- if our task were primarily disk-bound, the gulf would be quite wide. The reason striping is faster here is because each 2GB slice of the RAM disk array is an independent instance of diskimages-helper, and since we have four slices, each slice can run on one of the Quad's cores. By spreading disk access equally among all the processes, we share it equally over all the processors and achieve lower latency and higher efficiencies. This would probably not be true if we had fewer cores, and indeed for dual G5s two slices (or concatenating four) may be better; the earliest single processor G5s should almost certainly use concatenation only.
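A toy model makes the difference between the two layouts concrete: it maps a logical block number to a (volume, offset) pair for a concatenated set and for a striped set, then counts how many of the four component volumes a contiguous 1 MiB run touches. The 64-block (32 KiB) stripe width is an assumption for illustration, and this models only the addressing idea, not how AppleRAID is implemented internally.

```javascript
// Toy addressing model; sizes follow the text, stripe width is assumed.
const VOLUMES = 4;
const BLOCKS_PER_VOLUME = 4629672; // 512-byte blocks per RAM disk image
const STRIPE_BLOCKS = 64;          // assumed stripe width (32 KiB)

// Concatenation: fill volume 0, then volume 1, and so on.
function concatLocation(block) {
  return {
    volume: Math.floor(block / BLOCKS_PER_VOLUME),
    offset: block % BLOCKS_PER_VOLUME,
  };
}

// Striping (RAID 0): consecutive stripes rotate across the volumes.
function stripeLocation(block) {
  const stripe = Math.floor(block / STRIPE_BLOCKS);
  return {
    volume: stripe % VOLUMES,
    offset: Math.floor(stripe / VOLUMES) * STRIPE_BLOCKS + (block % STRIPE_BLOCKS),
  };
}

// Number of distinct volumes a contiguous run of blocks lands on.
function volumesTouched(locate, start, count) {
  const seen = new Set();
  for (let b = start; b < start + count; b++) seen.add(locate(b).volume);
  return seen.size;
}

const run = 2048; // 1 MiB of 512-byte blocks
console.log('concatenated:', volumesTouched(concatLocation, 0, run)); // 1
console.log('striped:', volumesTouched(stripeLocation, 0, run));      // 4
```

With concatenation, a burst of activity usually stays on one diskimages-helper process; with striping, it is spread over all four, which is what lets each process run on its own core.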

Some of you will ask how this compares to an SSD, and frankly I don't know. Although I've done some test builds on an SSD, I've been using a Patriot Blaze SATA III drive connected to my FW800 drive toaster to avoid problems with interfacing, so I doubt any numbers I'd get off that setup would be particularly generalizable, and I'd rather use the RAM disk anyhow because I don't have to worry about TRIM, write cycles or cleaning up. However, I would be very surprised if an SSD in a G5 achieved speeds faster than RAM, especially given the (comparatively, mind you) lower SATA bandwidth.

And, with that, 31.5.0 is released for testing (release notes, hashes, downloads). This only contains ESR security/stability fixes; you'll notice the changesets hash the same as 31.4.0 because they are, in fact, the same. The build finalizes Monday PM Pacific as usual.

31.5.0 would have been out earlier (experiments with RAM disks notwithstanding) except that I was waiting to see what Mozilla would do about the Superfish/Komodia debacle: the fact that Lenovo was loading adware that MITM-ed HTTPS connections on their PCs ("Superfish") was bad enough, but the secret root certificate it possessed had an easily crackable private key password allowing a bad actor to create phony certificates, and now it looks like the company that developed the technology behind Superfish, Komodia, has by their willful bad faith actions caused the same problem to exist hidden in other kinds of adware they power.

Assuming you were not tricked into accepting their root certificate in some other fashion (their nastyware doesn't run on OS X and near as I can tell never has), your Power Mac is not at risk, but these kinds of malicious, malfeasant and incredibly ill-constructed root certificates need to be nuked from orbit (as well as the companies that try to sneak them onto users' machines; I suggest napalm, castration and feathers), and they will be marked as untrusted in future versions of TenFourFox and Classilla so that false certificates signed with them will not be honoured under any circumstances, even by mistake. Unfortunately, it's also yet another example of how the roots are the most vulnerable part of secure connections (previously, previously).

Development on IonPower continues. Right now I'm trying to work out a serious bug with Baseline stubs and not having a lot of luck; if I can't get this working by 38.0, we'll ship 38 with PPCBC (targeting a general release by 38.0.2 in that case). But I'm trying as hard as I can!

http://tenfourfox.blogspot.com/2015/02/biggus-diskus-plus-3150-and-how-to.html


Marco Zehe: Social networks and accessibility: A not so sad picture

This post originally was written in December 2011 and had a slightly different title. Fortunately, the landscape has changed dramatically since then, so it is finally time to update it with more up to date information.

Social networks are part of many people’s lives nowadays. In fact, if you’re reading this, chances are pretty high that you came from Twitter, Facebook or some other social network. The majority of referrers to my blog posts come from social networks nowadays; those who read me via an RSS feed seem to be getting fewer and fewer.

So let’s look at some of the well-known social networks and see what their state of accessibility is nowadays, both when considering the web interface as well as the native apps for mobile devices most of them have.

In recent years, several popular social networks moved from a fixed relaunch schedule for their services to a more agile, incremental development cycle. Also, most, if not all, of the social network providers we’ll look at below have added personnel dedicated to either implementing accessibility or training other engineers in accessibility skills. Those efforts show great results. There is less breakage of accessibility features overall, and if something breaks, the teams are usually very quick to react to reports, and the broken feature is fixed in an update in the near future. So let’s have a look!

Twitter has come a long way since I wrote the initial version of this post. New Twitter was here to stay, but ever since a very skilled engineer boarded the ship, a huge improvement has taken place. One can nowadays use Twitter with keyboard shortcuts to navigate tweets, reply, favorite, retweet and do all sorts of other actions. Screen reader users might want to try turning off their virtual buffers and really use the web site like a desktop app. It works really quite well! I also recommend taking a look at the keyboard shortcut list, and memorizing them when you use Twitter more regularly. You’ll be much much more productive! I wrote something more about the Twitter accessibility team in 2013.

Clients

Fortunately, there are a lot of accessible clients out there that allow access to Twitter. The Twitter app for iOS is very accessible now for both iPhone and iPad. The Android client is very accessible, too. Yes, there is the occasional breakage of a feature, but as stated above, the team is very good at reacting to bug reports and fixing them. Twitter releases updates very frequently now, so one doesn’t have to wait long for a fix.

There’s also a web client called Easy Chirp (formerly Accessible Twitter) by Mr. Web Axe Dennis Lembree. It’s now in incarnation 2. This one is marvellous: it offers all the features one would expect from a Twitter client, in your browser, and it’s all accessible to people with varying disabilities! It uses all the good modern web standard stuff like WAI-ARIA to make sure even advanced interaction is done accessibly. I even know many non-disabled people using it for its straightforward interface and simplicity. One cool feature it has is that you can post images and provide an alternative description for visually impaired readers, without having to spoil the tweet where the picture might be the punch line. You just provide the alternative description in an extra field, and when the link to the picture is opened, the description is provided right there. How fantastic is that!

For iOS, there are two more Apps I usually recommend to people. For the iPhone, my Twitter client of choice was, for a long time, TweetList Pro, an advanced Twitter client that has full VoiceOver support, and they’re not even too shy to say it in their app description! They have such things as muting users, hash tags or clients, making it THE Twitter client of choice for many for all intents and purposes. The reason why I no longer use it as my main Twitter client is the steep decline of updates. It’s now February 2015, and as far as I know, it hasn’t even been updated to iOS 8 yet. The last update was some time in October 2013, so it lags behind terribly in recent Twitter API support changes, doesn’t support the iPhone 6 and 6 Plus screens natively, etc.

Another one, which I use on the iPhone and iPad, is Twitterrific by The Icon Factory. Their iPhone and iPad app is fully accessible; the Mac version, on the other hand, is totally inaccessible and outdated. On the Mac, I use the client Yorufukurou (night owl).

Oh yes, and if you’re blind and on Windows, there are two main clients available: TheQube and Chicken Nugget. TheQube is designed specifically for the blind, with hardly any visual UI, and it requires a screen reader or at least an installed speech synthesizer to talk. Chicken Nugget can be run in UI or non-UI mode; in non-UI mode, it definitely also requires a screen reader. Both are updated frequently, so it’s a matter of taste which one you choose.

In short, for Twitter there is a range of clients: one of them, the Easy Chirp web application, is truly cross-platform and usable anywhere, while others are for specific platforms. Either way, you have accessible means of getting to Twitter services without having to use their web site.

Facebook has come a long way since my original post as well. When I originally wrote about the web site, it had just relaunched with completely broken accessibility. I’m happy to report that nowadays the FB desktop and mobile sites are both largely accessible, and Facebook also has a dedicated team that responds to bug reports quickly. They also have a training program in place where they teach other Facebook engineers the skills to make new features accessible, and to keep existing ones that way when they get updated. I wrote more about the Facebook accessibility changes here, and things have kept getting better since then.

Clients

Like the web interfaces, the iOS and Android clients for Facebook and Messenger have come a long way and frequently receive updates that fix remaining accessibility problems. Yes, here too there’s the occasional breakage, but the team is very responsive to bug reports in this area as well, and since FB updates its apps on a two-week cycle, sometimes even more often if critical issues are discovered, waiting for fixes usually doesn’t take long. If you’re messaging on the desktop, you can also integrate Facebook Messenger/Chat with Skype, which is very accessible on both Mac and Windows. Some features, like group chats, are however reserved for the Messenger clients and web interface.

Google Plus

Google Plus anyone? It was THE most hyped thing of the summer of 2011, and as fast as summer went, so did people lose interest in it. Even Google seems to be slowly but surely abandoning it, dropping the requirement to have a Google+ account for certain activities bit by bit. But in terms of accessibility, it is actually quite OK nowadays. As with many of Google’s widgets, Google+ profits from reusing components found in Gmail and elsewhere, which provide both keyboard accessibility and screen reader information exposure. Their Android app was also quite accessible the last time I tried it, in the summer of 2014. Their iOS app still seems to be in pretty bad shape, which is surprising considering how well Gmail, Hangouts, and even the Google Docs apps work nowadays. I don’t use it much, even though I recreated an account some time in 2013. But whenever I happen to stumble in, I’m not as dismayed as I was when I wrote the original version of this post.

Yammer

Yammer is an enterprise social network we at Mozilla and in a lot of other companies use for some internal communication. It was bought by Microsoft some time in 2012, and since then, a lot of its accessibility issues have been fixed. When you tweet them, you usually get a response pointing to a bug entry form, and issues are dealt with satisfactorily.

iOS client

The iOS client is updated quite frequently. It has problems on and off, but the experience has become more stable in recent versions, so one can actually use it.

identi.ca

identi.ca from Status.net is a microblogging service similar to Twitter. And unlike Twitter, it’s accessible out of the box! This is good since it does not have a wealth of clients supporting it like Twitter does, so with its own interface being accessible right away, this is a big help! It is, btw, the only open-source social network in these tests. Mean anything? Probably!

Conclusion

All social networks I tested either made significant improvements over the last three years, or they remained accessible (in the case of the last candidate).

In looking for reasons why this is, two come to mind immediately. For one, the introduction of skilled and dedicated personnel versed in accessibility matters, or willing to dive in deep and really get the hang of it. These big companies finally understood the social responsibility they have when providing a social network, and leveraged the fact that there is a wealth of information out there on accessible web design. And there’s a community that is willing to help if pinged!

Another reason is that these companies realized that putting in accessibility up front, making inclusive design decisions, and increasing test coverage to include accessibility right away not only costs less than bolting it on later, but also helps make a better product for everybody.

A suggestion remains: Look at what others are doing! Learn from them! Don’t be shy to ask questions! If you look at what others have been doing, you can draw from it! They’ll do the same with the stuff you put out there, too! And don’t be shy to talk about the good things you do! The Facebook accessibility team does this in monthly updates where they highlight what they fixed in the various product lines. I’ve seen signs of that from Twitter engineers, but not as consistently as from Facebook. Talking about successes in accessibility also encourages others to put inclusive design patterns into their workflows.

https://www.marcozehe.de/2015/02/21/social-networks-and-accessibility-a-rather-sad-picture/

|

|

Jet Villegas: An imposter among you |

I’m blessed to live and work with the smartest people I know. I’ve been at it for many years. In fact, I’m quite certain some of them are among the smartest people on the planet. I cherish the knowledge and support they freely share yet I frequently wonder why they tolerate me in their midst. I’ve experienced Imposter Syndrome from an early age, and I’m still driven to tears when I think about it. Why do I deserve to be in the midst of the greatness I’ve surrounded myself with? It’s worse when I get credit, as I feel like I’ll surely be figured out as the fraud now that everyone’s looking.

I’ll just leave this here as I suspect there are many others around me who have this affliction. If nothing else, I’m right there with you—for real.

|

|

Rizky Ariestiyansyah: Naringu - A Hacker like jekyll theme |

Hey there, last night I created a Jekyll theme based on Poole, which I call naringu. The theme has built-in features such as 404 pages, an RSS feed, a mobile-friendly layout, and syntax highlighting support. naringu also comes with a reversed sidebar option, so you can put the sidebar on the left or the right.

|

|

Manish Goregaokar: Thoughts of a Rustacean learning Go |

Recently, for a course I had to use Go. This was an interesting opportunity; Rust and Go have been compared a lot as the "hot new languages", and finally I'd get to see the other side of the argument.

Before I get into the experience, let me preface this by mentioning that Rust and Go don't exactly target the same audiences. Go is garbage collected and is okay with losing out on some performance for ergonomics, whereas Rust tries to turn as much as possible into compile-time checks. This makes Rust much more useful for lower-level applications.

In my specific situation, however, I was playing around with distributed systems via threads (or goroutines), so this fit perfectly into the area of applicability of both languages.

This post isn't exactly intended to be a comparison between the two. I understand that as a newbie at Go, I'll be trying to do things the wrong way and drawing bad conclusions from that. My way of coding may not be the "Go way" (I'm mostly carrying over my Rust style to my Go code since I don't know better), so everything may seem like a hack to me. Please keep this in mind whilst reading the post, and feel free to let me know the "Go way" of doing the things I was stumbling with.

This is more of a sketch of my experiences with the language, specifically from the point of view of someone coming from Rust, used to the Rusty way of doing things. It might be useful as an advisory for Rustaceans thinking about trying the language out, and what to expect. Please don't take this as an attack on the language.

What I liked

Despite the performance costs, having a GC at your disposal after using Rust for very long is quite liberating. For a while my internalized borrow checker would throw red flags at me tossing data around indiscriminately, but I learned to ignore it as far as Go code goes. I was able to quickly share state via pointers without worrying about safety, which was quite useful.

Having channels as part of the language itself was also quite ergonomic. The ch <- data (send) and data := <-ch (receive) syntax is fun to use, and whilst it's not very different from .send() and .recv() in Rust, I found it surprisingly easy to read. Initially I often got confused about which side the channel was on, but after a while I got used to it. Go also has a built-in select block for selecting over channels (Rust has a macro).
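For comparison, here is roughly what the Rust side of that looks like. This is a minimal sketch using the standard library's std::sync::mpsc channels, not code from the course; the helper name sum_from_worker is hypothetical, and the comments note the Go equivalent of each call.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical helper: a worker thread sends each number over a channel
/// with `.send()` (Go: `ch <- n`), and the caller collects them with
/// `.recv()` (Go: `n := <-ch`).
fn sum_from_worker(numbers: Vec<u32>) -> u32 {
    let (tx, rx) = mpsc::channel();

    let worker = thread::spawn(move || {
        for n in numbers {
            // send() only fails if the receiving end has been dropped.
            tx.send(n).expect("receiver dropped");
        }
        // `tx` is dropped when this closure returns, closing the channel.
    });

    let mut total = 0;
    // recv() blocks until a value arrives, and returns Err once the
    // sender is gone and the channel is empty.
    while let Ok(n) = rx.recv() {
        total += n;
    }

    worker.join().expect("worker panicked");
    total
}
```

The shape is the same as in Go; the main difference is that Rust makes the sending and receiving halves separate values, so the borrow checker can track who owns each end.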

gofmt. The Go style of coding is different from the Rust one (tabs vs. spaces, how declarations look), but I continued to use the Rust style out of muscle memory (I was also too lazy to change the settings in my editor). gofmt made life easy, since I could just run it in a directory and it would fix everything. Eventually I learned the proper style by watching my code get corrected. I'd love to see a rustfmt; in fact, it is one of the proposed Summer of Code projects under Rust!

Go is great for debugging programs with multiple threads, too. It can detect deadlocks and post traces for the threads (with metadata including what code the thread was spawned from, as well as its current state). It also posts such traces when the program crashes. These are great and saved me tons of time whilst debugging my code (which at times had all sorts of cross interactions between more than ten goroutines in the tests). Without a green threading framework, I'm not sure how easy it will be to integrate this into Rust (for debug builds, obviously), but I'd certainly like it to be.

Edit: Andrew Gallant reminded me about Go's testing support, which I'd intended to write about but forgot.

Go has really good built-in support and tooling for tests (Rust does too). I enjoyed writing tests in Go quite a bit because of this.
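On the Rust side, the built-in support looks like the following minimal sketch, assuming a hypothetical add function: unit tests sit in a module next to the code, and cargo test compiles that module and runs every function marked #[test].

```rust
// Hypothetical function under test.
fn add(a: i32, b: i32) -> i32 {
    a + b
}

// Unit tests live next to the code. `cargo test` builds this module with
// cfg(test) enabled and runs each #[test] function; a normal build skips it.
#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn adds_positive_numbers() {
        assert_eq!(add(2, 3), 5);
    }

    #[test]
    fn adds_negative_numbers() {
        assert_eq!(add(-4, 4), 0);
    }
}
```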

What I didn't like

Sadly, there are a lot of things here, but bear in mind what I mentioned above about me being new to Go and not yet familiar with the "Go way" of doing things.

No enums

In Rust, an enum can carry data in each of its variants, for example:

    enum Shape {
        Rectangle(Point, Point),
        Circle(Point, u8),
        Triangle(Point, Point, Point),
    }

When matching or destructuring such a value, you get type-safe access to the contents of the variant.

This is extremely useful for sending typed messages across channels. For example, in Servo we use such an enum for sending details about the progress of a fetch to the corresponding XHR object. Another such enum is used for communication between the constellation and the compositor/script.

This gives us a great degree of type safety; I can send messages with different data within them, however I can only send messages that the other end will know how to handle since they must all be of the type of the message enum.
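A minimal sketch of that pattern, assuming a hypothetical Point struct (the post does not show its definition) and hypothetical describe/describe_all helpers; it illustrates the idea and is not Servo's actual message type. Because the channel is typed Sender<Shape>, only Shape values can be sent on it, and the receiver's match must handle every variant or the code will not compile.

```rust
use std::sync::mpsc;
use std::thread;

// Hypothetical Point type: the post does not show its definition.
struct Point {
    x: i32,
    y: i32,
}

// The enum from the post: each variant carries its own data.
enum Shape {
    Rectangle(Point, Point),
    Circle(Point, u8),
    Triangle(Point, Point, Point),
}

// Destructure a message; the compiler rejects the match if a variant is missing.
fn describe(shape: &Shape) -> String {
    match shape {
        Shape::Rectangle(a, b) => format!("rectangle ({},{})-({},{})", a.x, a.y, b.x, b.y),
        Shape::Circle(c, r) => format!("circle at ({},{}) r={}", c.x, c.y, r),
        Shape::Triangle(a, b, c) => {
            format!("triangle with x-coords {}, {}, {}", a.x, b.x, c.x)
        }
    }
}

// Hypothetical helper: a producer thread sends Shape messages over a
// channel typed Sender<Shape>, so nothing but a Shape can be sent on it.
fn describe_all(shapes: Vec<Shape>) -> Vec<String> {
    let (tx, rx) = mpsc::channel::<Shape>();
    let producer = thread::spawn(move || {
        for s in shapes {
            tx.send(s).expect("receiver dropped");
        }
        // `tx` is dropped when the closure returns, closing the channel.
    });
    // Iterating a Receiver yields messages until every sender is dropped.
    let out: Vec<String> = rx.iter().map(|s| describe(&s)).collect();
    producer.join().expect("producer panicked");
    out
}
```

Adding a new variant to Shape makes every non-exhaustive match on it a compile error, which is exactly the guarantee described above: the other end always knows how to handle what it receives.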

In Go there's no obvious way to get this. On the other hand, Go has the type called interface {} which is similar to Box

Of course, I could implement a custom interface MyMessage on the various types, but this will behave exactly like interface{} (implemented on all types) unless I add a dummy method to it, which seems hackish. This brings me to my next point:

Smart interfaces

Packages and imports

Documentation

Generics

Visibility

Conclusion

I highly suggest you at least try it once, though!

http://inpursuitoflaziness.blogspot.com/2015/02/thoughts-of-rustacean-learning-go.html

|

|

Soledad Penades: webpack vs browserify |

I saw a project yesterday that was built using webpack, and I started to wonder what makes webpack better than Browserify. The website didn’t really explain it to me, so I asked on Twitter, because I was sure there would be a bunch of webpack experts ready to tell me, and I wasn’t wrong!

TL;DR

Webpack comes with “batteries included” and preconfigured, so you get more “out of the box”, it’s easier to get started, and the default outputs are really decent. Whereas with browserify you need to choose the modules you want to use, and essentially assemble the whole thing together: it’s slower to learn, but way more configurable.

Some of the most insightful replies:

@supersole it has way more out of the box, gives better control over optimizing how modules are bundled together. http://t.co/Xb81znvDn4

— James “Jimmy” Long (@jlongster) February 19, 2015

@supersole webpack is more opinionated, has more features in core (bullet point engineering). great if you dont like using npm search

— =^._.^= (@maxogden) February 19, 2015

@supersole It's much faster. Generates slightly smaller output by default. Custom loaders are easier to write.

— Kornel

|

|

David Tenser: User Success in 2015 – Part 1: More than SUMO |

Once again, happy belated 2015 – this time wearing a slightly different but equally awesome hat! In this series of blog posts, I’m going to set the stage for User Success in 2015 and beyond. Let’s start with a couple of quick clarifications:

- SUMO = support.mozilla.org

- SUMO != User Success

SUMO has come to mean a number of things over the years: a team, a support website, an underlying web platform, and/or a vibrant community. Within the team, we think of SUMO as the online support website itself, support.mozilla.org, including its contents. And then the amazing community of both volunteers and paid staff helping our users is simply called the SUMO community. But we don’t refer to SUMO as the name of a team, because what we do together goes beyond SUMO.

Crucially, SUMO is a subset of User Success, which consists of a number of teams and initiatives with a shared mission to make our users more successful with our products. User Success is not just our fabulous website which millions of users and many, many volunteers help out with. User Success is both proactive and reactive, as illustrated in this hilariously exciting collection of circles in circles:

Our job starts as soon as somebody starts using one of our products (Firefox, Firefox for Android, Firefox OS and more). Sometimes a user has an issue and goes to our website to look for a solution. Our job is then to make sure that user leaves our website with an answer to their question. But our job doesn’t stop there – we also need to make sure that engineers and product leads are aware of the top issues so they can solve the root cause of the issue in the product itself, leading to many more satisfied users.

Other times a user might just want to leave some feedback about their experience on input.mozilla.org. Our job is then to make sure that this feedback is delivered to our product and engineering teams in an aggregated and actionable way that enables them to set the right priorities for what goes into future versions of the product.

The proactive side of User Success consists of:

- User Advocacy – A team looking at all our user interaction points, from support, social media, telemetry and other data to better understand what our users need so we can then help engineering with getting bugs fixed, eliminating the need for reactive support for these issues in the future.

- Education – This includes things like our in-product information, tutorials, how-tos, and other Engagement content we create in collaboration with other teams.

- Self Service – This includes our vast knowledge base of solutions to common problems users experience when using our products.

The reactive side of User Success consists of:

- Community Support – Our forums, the Army of Awesome on Twitter.

- Helpdesk – Paid staff looking at issues that others aren’t able to answer.

When you add up our reactive and proactive initiatives, you get the complete equation for User Success, and that’s what I’ve been calling my team at Mozilla since 2014.

Next up: User Success in 2015 – Part 2: What are we doing this year? (Will update this post with a link once that post is published.)

http://djst.org/2015/02/20/user-success-in-2015-part-1-more-than-sumo/

|

|

Air Mozilla: Bay Area Rust Meetup February 2015 |

This meetup will be focused on the blocking IO system part of the standard library, and asynchronous IO systems being built upon mio.

|

|

Armen Zambrano: Pinning of mozharness completed |

If you land mozharness code, this affects you, as you will have to:

- land on the default branch

- land a change on an integration branch to bump the revision of mozharness

- only at that point will your code be in use

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/OzL4p3rA_h0/pinning-of-mozharness-completed.html

|

|

Manish Goregaokar: Superfish, and why you should be worried |

For non-techies, skip to the last paragraph of the post for instructions on how to get rid of this adware.

Today a rather severe vulnerability on certain Lenovo laptops was discovered.

Software (well, adware) called "Superfish" came preinstalled on some of their machines (it seems like it's the Yoga series).

The software ostensibly scans images of products on the web to provide the user with alternative (perhaps cheaper) offers. Sounds like a mix of annoying and slightly useful, right?

Except to achieve this, they do something extremely unsafe. They install a new root CA certificate into the Windows certificate store. The software works via a local proxy server which does a man-in-the-middle attack on your web requests. Of course, most websites of importance these days use HTTPS, so to be able to successfully decrypt and inject content on these, the proxy needs to have the ability to issue arbitrary certificates. It's a single certificate as far as we can tell (you can find it here in plaintext form).

It also leaves the certificate in the system even if the user does not accept the terms of use.

This means that a nontrivial portion of the population has an untrusted certificate on their machines.

It's actually worse than that, because to execute the MITM attack, the proxy server should have the private key for that certificate.

So a nontrivial portion of the population has the private key to said untrusted certificate. Anyone owning one of these laptops who has some reverse engineering skills has the ability to intercept, modify, and duplicate the connections of anyone else owning one of these laptops. Bank logins, email, credit card numbers, everything. One usually needs physical proximity or control of a network to do this right, but it's quite feasible that this key could be sold to someone who has this level of access (e.g. a secretly evil ISP). Update: The key is now publicly known.

This is really bad.

Installing random crapware on laptops is pretty much the norm now, and that's not the issue. Installing crapware which causes a huge security vulnerability? No thanks. What's especially annoying is their attitude towards it; they haven't even acknowledged the security hole they've caused.

The EFF has a rather nice article on this here.

Superfish does NOT infect Firefox.

Chrome uses the OS's root CA store, so it is affected. So is IE.

It turns out that internally they're using something called Komodia to generate the MITM; and Komodia uses a similar (broken) framework everywhere -- the private key is bundled with it; and the password is "komodia".

Update: Microsoft has pulled the program and certificates via Windows Defender! Yay! Firefox is probably going to follow suit -- now that the program itself should be gone, blacklisting the certificate won't make infected users have unusable browsers.

If you own a Lenovo laptop that came preinstalled with Windows (especially one of these models), please check in your task manager for an app called "VisualDiscovery" or "Superfish". Here's a small guide on how to do this. It's slightly outdated, but the section on uninstalling the program itself should work. Then, follow the steps here to remove all certificates with the name "Superfish" from the root store. Then go and change all your passwords, and check your bank history, email, PayPal, etc. for any suspicious transactions. Chances are that you haven't been targeted, but it's good to be sure.

Alternatively, open Windows Defender, update it, and run a scan. There are more methods here.

http://inpursuitoflaziness.blogspot.com/2015/02/superfish-and-why-you-should-be-worried.html

|

|

Tess John: February 7:Sharing experience as an OPW intern at Mozilla Quality Assurance |

February 7, 2015 was a really special day in my life. I shared my experience as an OPW intern at the Mozilla Webmaker Party organised at Rajagiri School of Engineering and Technology, Kochi. Thank you to Rebecca Billings for connecting me with the Mozilla Reps in my region, and thanks to Vigneshwar Dhinakaran, who trusted me to take the session.

It was the first conference at which I ever handled a session, and it was awesome. The Maker Party gave me an opportunity to network with other Mozilla people in my region. In the rest of this post I will share my experience of the event.

I reached the venue at 9:00 a.m. and met Vigneshwar Dhinakaran. Vigneshwar is the Mozilla Rep who was the main organiser of the event, and he proved to be a friendly host and a smart organiser. RSETians were waiting enthusiastically for the event. A few hours later I met another team of Mozilla Reps who co-organised the event with Vigneshwar, including Nidhya, Praveen, and Abid. Praveen was a GSoC intern in one of the previous rounds, and his blog is quite informative. Click here to read his blog

The session started with transforming ideas into prototypes, followed by an introduction to web technologies and Mozilla Webmaker. After that, Praveen and I shared our experiences as interns at Mozilla and SMC (Swathanthra Malayalam Computing). We spoke about opportunities like Google Summer of Code, OPW, and Rails Girls Summer of Code.

We both explained our respective projects; mine was Oneanddone. It was interesting to learn about his project, which developed and added support for Indic language layouts for Firefox OS.

I spoke about the upcoming OPW round and gave some tips for getting started. We talked about IRC, and encouraged them to subscribe to mailing lists, use IRC, and learn GitHub. We were also able to help them find learning resources like OpenHatch, Try Git, etc.

We found that students really don’t get a chance to contribute to open source projects. There is a popular misconception that open source projects have a place only for highly skilled developers. We presented open source as a platform for developing skills, and I gave a brief description of how they can take part in documentation, quality assurance, testing, etc.

To conclude, open source is an important part of technical education, but students are not aware of how they can contribute to it. Providing them with proper mentorship could help bring them to the forefront. Opportunities like OPW and GSoC can be a good way to dive into open source. To achieve this, the Mozilla Reps have decided to organise a boot camp in the upcoming week. Stay tuned!

|

|

Mike Conley: The Joy of Coding (Episode 2) |

The second episode is up! We seem to have solved the resolution problem this time around – big thanks to Richard Milewski for his work there. This time, however, my microphone levels were just a bit low for the first half-hour. That’s my bad – I’ll make sure my gain is at the right level next time before I air.

Here are the notes for the bug I was working on.

And let me know if there’s anything else I can do to make these episodes more useful or interesting.

http://mikeconley.ca/blog/2015/02/18/the-joy-of-coding-episode-2/

|

|

Soledad Penades: How to keep contributors when they are not even contributors yet |

Today I wrote this post describing my difficulties in getting started with Ember.js, a quite well known open source project.

Normally, when I find small issues in an existing project, I try to help by sending a PR–some things are just faster to describe by coding them than by writing a bug report. But the case here was something that requires way more involvement than me filing a trivial bug. I tried to offer constructive feedback, and do that in as much detail as possible so that the authors could understand what happens in somebody else’s mind when they approach their project. From a usability and information design perspective, this is great information to have!

Shortly after, Tom Dale contacted me and thanked me for the feedback, and said they were working on improving all these aspects. That was great.

What is not great is when other people insta-ask you to send PRs or file bugs:

@supersole as the core would say, those are considered bug reports so feel free to file them on github! it really helps the writers as well

— Ricardo Mendes (@locks) February 18, 2015

Look, I already gave you A FULL LIST OF BUGS in excruciating detail. At this stage my involvement with the project is timid and almost insignificant, but I cared enough to give you good actionable feedback instead of just declaring that your project sucks in a ranty tweet.

The way you handle these initial interactions is crucial as to whether someone decides to stay and get more engaged in your community, or run away from you as fast as they can.

A response like Tom’s is good. He recognises I have more things to do, but offers to listen to me if I have feedback after they fix these issues. I can’t be sure I will work more with Ember in the future, but now I might check it out from time to time. The potential is still there! Everyone’s happy.

An entitled response that doesn’t acknowledge the time I’ve already invested and demands even more time from me is definitely bad. Don’t do this. Ever. What you should do instead is act on my feedback. Go through it and file bugs for the issues I mentioned. Give me the bug numbers and I might even subscribe to them to stay in the loop. I might even offer more feedback! You might even need more input! Everyone wins!

This is the same approach I use when someone who might not necessarily be acquainted with Bugzilla or Firefox tells me they have an issue with Firefox. I don’t just straight tell them to “FILE A BUG… OR ELSE” (unless they’re my friends in which case, yes, go file a bug!).

What I do is try to lead by example: I gather as much information from them as I can, then file the bug, and send them the bug URL. Sometimes this also involves building a test case, or extracting a reduced test case from their own failing code. This is work, but it is work that pays off.

These reporters have gone off to build more tests and even file bugs by themselves later on, not only because they saw which steps to follow, but also because they felt respected and valued from the beginning.

So next time someone gives you feedback… think twice before answering with a “PATCHES WELCOME” sort of answer: you might be scaring contributors away!

|

|

William Lachance: Measuring e10s vs. non-e10s performance with Perfherder |

For the past few months I’ve been working on a sub-project of Treeherder called Perfherder, which aims to provide a workflow that will let us more easily detect and manage performance regressions in our products (initially just those detected in Talos, but there’s room to expand on that later). This is a long term project and we’re still sorting out the details of exactly how it will work, but I thought I’d quickly announce a milestone.

As a first step, I’ve been hacking on a graphical user interface to visualize the performance data we’re now storing inside Treeherder. It’s pretty bare bones so far, but already it has two features which graphserver doesn’t: the ability to view sub-test results (i.e. the page load time for a specific page in the tp5 suite, as opposed to the geometric mean of all of them) and the ability to see results for e10s builds.
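For context, the suite-level number referred to here is a geometric mean of the per-page results, GM = (x1 · x2 · … · xn)^(1/n). A minimal sketch of that calculation, computed in log space so that multiplying many page-load times cannot overflow; the function name is hypothetical, and Talos's own summarization may drop or weight replicates differently.

```rust
/// Geometric mean of positive values, computed in log space:
///   GM = (x1 * x2 * ... * xn)^(1/n) = exp((ln x1 + ... + ln xn) / n)
/// Returns None for an empty slice or if any value is zero or negative.
fn geometric_mean(values: &[f64]) -> Option<f64> {
    if values.is_empty() || values.iter().any(|&v| v <= 0.0) {
        return None;
    }
    // Sum of natural logs instead of a raw product.
    let log_sum: f64 = values.iter().map(|v| v.ln()).sum();
    Some((log_sum / values.len() as f64).exp())
}
```

One consequence is that a single slow page moves the summary less than it would move an arithmetic mean: for 1 ms and 100 ms the geometric mean is 10 ms, versus 50.5 ms, which is part of why per-page sub-test results are worth viewing on their own.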

Here’s an example comparing the tp5o 163.com page load times on Windows 7 with e10s enabled (and not):

Green is e10s, red is non-e10s (the legend picture doesn’t reflect this because we have yet to deploy a fix to bug 1130554, but I promise I’m not lying). As you can see, the gap has been closing (in particular, something landed in mid-January that improved the e10s numbers quite a bit), but page load times are still measurably slower with this feature enabled.

http://wrla.ch/blog/2015/02/measuring-e10s-vs-non-e10s-performance-with-perfherder/

|

|