
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Stormy Peters: Can or Can’t? |

What I love about open source is that it’s a “can” world by default. You can do anything you think needs doing and nobody will tell you that you can’t. (They may not take your patch but they won’t tell you that you can’t create it!)

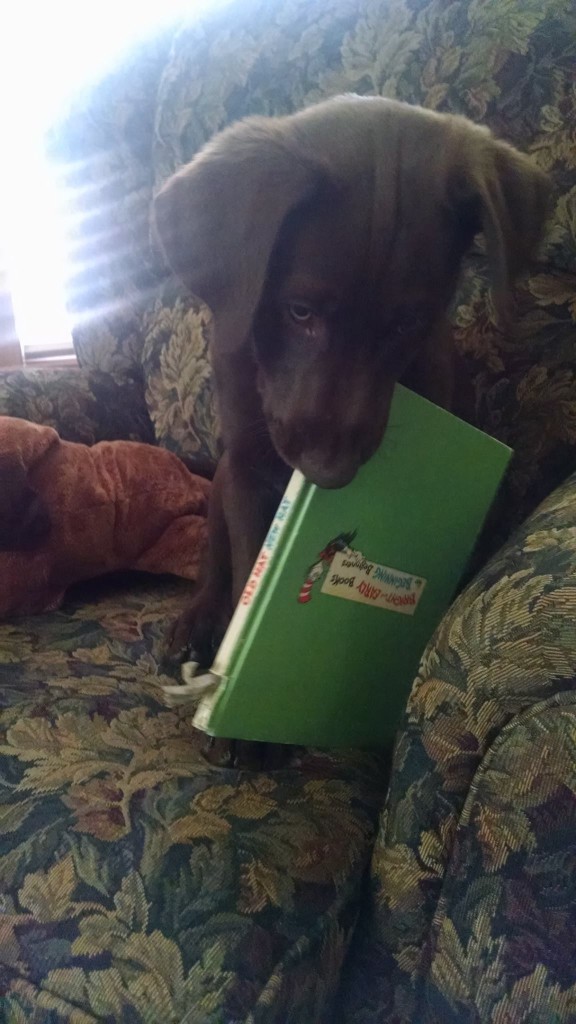

It’s often easier to define things by what they are not or what we can’t do. And the danger of that is you create a culture of “can’t”. Anyone who has raised kids or animals knows this. “No, don’t jump.” You can’t jump on people. “No, off the sofa.” You can’t be on the furniture. “No, don’t lick!” You can’t slobber on me. And hopefully when you realize it, you can fix it. “You can have this stuffed animal (instead of my favorite shoe). Good dog!”

Often when we aren’t sure how to do something, we fill the world with can’ts. “I don’t know how we should do this, but I know you can’t do that on a proprietary mailing list.” “I don’t know how I should lose weight, but I know you can’t have dessert.” I don’t know. Can’t. Don’t know. Can’t. Unsure. Can’t.

Watch the world around you. Is your world full of can’ts or full of “can do”s? Can you change it for the better?


http://feedproxy.google.com/~r/StormysCornerMozilla/~3/iHwO-W3PUgo/can-or-cant.html

|

|

Nathan Froyd: examples of poor API design, 1/N – pldhash functions |

The other day in the #content IRC channel:

I have learned so many things about how to not define APIs in my work with Mozilla code ;) (probably lots more to learn, though)

I, too, am still learning a lot about what makes a good API. Like a lot of other things, it’s easier to point out poor API design than to describe examples of good API design, and that’s what this blog post is about. In particular, the venerable XPCOM datastructure PLDHashTable has been undergoing a number of changes lately, all aimed at bringing it up to date. (The question of why we have our own implementations of things that exist in the C++ standard library is for a separate blog post.)

The whole effort started with noticing that PL_DHashTableOperate is not a well-structured API. It’s necessary to quote some more of the API surface to fully understand what’s going on here:

    typedef enum PLDHashOperator {
        PL_DHASH_LOOKUP = 0,        /* lookup entry */
        PL_DHASH_ADD = 1,           /* add entry */
        PL_DHASH_REMOVE = 2,        /* remove entry, or enumerator says remove */
        PL_DHASH_NEXT = 0,          /* enumerator says continue */
        PL_DHASH_STOP = 1           /* enumerator says stop */
    } PLDHashOperator;

    typedef PLDHashOperator
    (* PLDHashEnumerator)(PLDHashTable *table, PLDHashEntryHdr *hdr, uint32_t number,
                          void *arg);

    uint32_t
    PL_DHashTableEnumerate(PLDHashTable *table, PLDHashEnumerator etor, void *arg);

    PLDHashEntryHdr*
    PL_DHashTableOperate(PLDHashTable* table, const void* key, PLDHashOperator op);

(PL_DHashTableOperate no longer exists in the tree due to other cleanup bugs; the above is approximately what it looked like at the end of 2014.)

There are several problems with the above slice of the API:

- PL_DHashTableOperate(table, key, PL_DHASH_ADD) is a long way to spell what should have been named PL_DHashTableAdd(table, key).

- There’s another problem with the above: it’s making a runtime decision (based on the value of op) about what should have been a compile-time decision: this particular call will always and forever be an add operation. We shouldn’t have the (admittedly small) runtime overhead of dispatching on op. It’s worth noting that compiling with LTO and a quality inliner will remove that runtime overhead, but we might as well structure the code so non-LTO compiles benefit and the code at callsites reads better.

- Given the above definitions, you can say PL_DHashTableOperate(table, key, PL_DHASH_STOP) and nothing will complain. The PL_DHASH_NEXT and PL_DHASH_STOP values are really only for a function of type PLDHashEnumerator to return, but nothing about the above definition enforces that in any way. Similarly, you can return PL_DHASH_LOOKUP from a PLDHashEnumerator function, which is nonsensical.

- The requirement to always return a PLDHashEntryHdr* from PL_DHashTableOperate means doing a PL_DHASH_REMOVE has to return something; it happens to return nullptr always, but it really should return void. In a similar fashion, PL_DHASH_LOOKUP always returns a non-nullptr pointer (!); one has to check PL_DHASH_ENTRY_IS_{FREE,BUSY} on the returned value. The typical style for an API like this would be to return nullptr if an entry for the given key didn’t exist, and a non-nullptr pointer if such an entry did. The free-ness or busy-ness of a given entry should be a property entirely internal to the hashtable implementation (it’s possible that some scenarios could be slightly more efficient with direct access to the busy-ness of an entry).

We might infer corresponding properties of a good API from each of the above issues:

- Entry points for the API produce readable code.

- The API doesn’t enforce unnecessary overhead.

- The API makes it impossible to talk about nonsensical things.

- It is always reasonably clear what return values from API functions describe.
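To make those four properties concrete, here is a minimal C++ sketch of a table interface shaped along those lines. It is not the PLDHashTable API and not what the bugs mentioned in this post landed: SketchTable, EnumVerdict and every method name are hypothetical, and std::unordered_map stands in for the real storage. Each operation has its own entry point, enumerator verdicts have their own type so they cannot be passed as an operation, removal returns void, and lookup returns nullptr when the key is absent.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <unordered_map>

// Hypothetical sketch only; none of these names exist in Mozilla's tree.

// Verdicts an enumeration callback may return. Being a separate enum class,
// a verdict cannot be mistaken for (or passed as) a table operation.
enum class EnumVerdict { Next, Stop, Remove };

class SketchTable {
public:
  // Returns a pointer to the stored value, or nullptr if the key is absent.
  const int* Lookup(const std::string& key) const {
    auto it = mEntries.find(key);
    return it == mEntries.end() ? nullptr : &it->second;
  }

  // Adds the key (or overwrites its value) and returns the stored value.
  int* Add(const std::string& key, int value) {
    int& slot = mEntries[key];
    slot = value;
    return &slot;
  }

  // Removal has nothing meaningful to report, so it returns void.
  void Remove(const std::string& key) { mEntries.erase(key); }

  // Calls callback(key, value) on entries until it returns Stop.
  // Returns how many entries were visited.
  template <typename Callback>
  std::uint32_t Enumerate(Callback callback) {
    std::uint32_t visited = 0;
    for (auto it = mEntries.begin(); it != mEntries.end();) {
      ++visited;
      EnumVerdict verdict = callback(it->first, it->second);
      if (verdict == EnumVerdict::Remove) {
        it = mEntries.erase(it);  // erase returns the next valid iterator
      } else {
        ++it;
      }
      if (verdict == EnumVerdict::Stop) {
        break;
      }
    }
    return visited;
  }

  std::size_t Size() const { return mEntries.size(); }

private:
  std::unordered_map<std::string, int> mEntries;
};
```

With this shape, the equivalent of passing a "stop" value to an add operation simply does not compile, and a caller checks a lookup result against nullptr instead of inspecting entry internals. The sketch simplifies the verdicts to one value per callback; the original API let an enumerator combine "remove" with "next" or "stop".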

Fixing the first two bulleted issues, above, was the subject of bug 1118024, done by Michael Pruett. Once that was done, we really didn’t need PL_DHashTableOperate, and removing PL_DHashTableOperate and related code was done in bug 1121202 and bug 1124920 by Michael Pruett and Nicholas Nethercote, respectively. Fixing the unusual return convention of PL_DHashTableLookup is being done in bug 1124973 by Nicholas Nethercote. Maybe once all this gets done, we can move away from C-style PL_DHashTable* functions to C++ methods on PLDHashTable itself!

Next time we’ll talk about the actual contents of a PL_DHashTable and how improvements have been made there, too.

https://blog.mozilla.org/nfroyd/2015/01/27/examples-of-poor-api-design-1n-pldhash-functions/

|

|

Gregory Szorc: Commit Part Numbers and MozReview |

It is common for commit messages in Firefox to contain strings like Part 1, Part 2, etc. See this push for bug 784841 for an extreme multi-part example.

When code review is conducted in Bugzilla, these identifiers are necessary because Bugzilla orders attachments/patches in the order they were updated or their patch title (I'm not actually sure!). If part numbers were omitted, it could be very confusing trying to figure out which order patches should be applied in.

However, when code review is conducted in MozReview, there is no need for explicit part numbers to convey ordering because the ordering of commits is implicitly defined by the repository history that you pushed to MozReview!

I argue that if you are using MozReview, you should stop writing Part N in your commit messages, as it provides little to no benefit.

I, for one, welcome this new world order: I've previously wasted a lot of time rewriting commit messages to reflect new part ordering after doing history rewriting. With MozReview, that overhead is gone and I barely pay a penalty for rewriting history, something that often produces a more reviewable series of commits and makes reviewing and landing a complex patch series significantly easier.

http://gregoryszorc.com/blog/2015/01/27/commit-part-numbers-and-mozreview

|

|

Cameron Kaiser: And now for something completely different: the Pono Player review and Power Macs (plus: who's really to blame for Dropbox?) |

IonPower crossed phase 2 (compilation) yesterday -- it builds and links, and nearly immediately asserts after some brief codegen, but at this phase that's entirely expected. Next, phase 3 is to get it to build a trivial script in Baseline mode ("var i=0") and run to completion without crashing or assertions, and phase 4 is to get it to pass the test suite in Baseline-only mode, which will make it as functional as PPCBC. Phases 5 and 6 are the same, but this time for Ion. IonPower really repays most of our technical debt -- no more fragile glue code trying to keep the JaegerMonkey code generator working, substantially fewer compiler warnings, and far fewer hacks to the JIT to work around oddities of branching and branch optimization. Plus, many of the optimizations I wrote for PPCBC will transfer to IonPower, so it should still be nearly as fast in Baseline-only mode. We'll talk more about the changes required in a future blog post.

Now to the Power Mac scene. I haven't commented on Dropbox dropping PowerPC support (and 10.4/10.5) because that's been repeatedly reported by others in the blogscene and personally I rarely use Dropbox at all, having my own server infrastructure for file exchange. That said, there are many people who rely on it heavily, even a petition (which you can sign) to bring support back. But let's be clear here: do you really want to blame someone? Do you really want to blame the right someone? Then blame Apple. Apple dropped PowerPC compilation from Xcode 4; Apple dropped Rosetta. Unless you keep a 10.6 machine around running Xcode 3, you can't build (true) Universal binaries anymore -- let alone one that compiles against the 10.4 SDK -- and it's doubtful Apple would let such an app (even if you did build it) into the App Store because it's predicated on deprecated technology. Except for wackos like me who spend time building PowerPC-specific applications and/or don't give a flying cancerous pancreas whether Apple finds such work acceptable, this approach already isn't viable for a commercial business and it's becoming even less viable as Apple actively retires 10.6-capable models. So, sure, make your voices heard. But don't forget who screwed us first, and keep your vintage hardware running.

That said, I am personally aware of someone™ who is working on getting the supported Python interconnect running on OS X Power Macs, and it might be possible to rebuild Finder integration on top of that. (It's not me. Don't ask.) I'll let this individual comment if he or she wants to.

Onto the main article. As many of you may or may not know, my undergraduate degree was actually in general linguistics, and all linguists must have (obviously) some working knowledge of acoustics. I've also been a bit of a poseur audiophile too, and while I enjoy good music I especially enjoy good music that's well engineered (Alan Parsons is a demi-god).

The Pono Player, thus, gives me pause. In acoustics I lived and died by the Nyquist-Shannon sampling theorem, and my day job today is so heavily science and research-oriented that I really need to deal with claims in a scientific, reproducible manner. That doesn't mean I don't have an open mind or won't make unusual decisions on a music format for non-auditory reasons. For example, I prefer to keep my tracks uncompressed, even though I freely admit that I'm hard pressed to find any difference in a 256kbit/s MP3 (let alone 320), because I'd like to keep a bitwise exact copy for archival purposes and playback; in fact, I use AIFF as my preferred format simply because OS X rips directly to it, everything plays it, and everything plays it with minimum CPU overhead despite FLAC being lossless and smaller. And hard disks are cheap, and I can convert it to FLAC for my Sansa Fuze if I need to.

So thus it is with the Pono Player. For $400, you can get a player that directly pumps uncompressed, high-quality remastered 24-bit audio at up to 192kHz into your ears with no downsampling and allegedly no funny business. Immediately my acoustics professor cries foul. "Cameron," she says as she writes a big fat F on this blog post, "you know perfectly well that a CD using 44.1kHz as its sampling rate will accurately reproduce sounds up to 22.05kHz without aliasing, and 16-bit audio has indistinguishable quantization error in multiple blinded studies." Yes, I know, I say sheepishly, having tried to create high-bit rate digital playback algorithms on the Commodore 64 and failed because the 6510's clock speed isn't fast enough to pump samples through the SID chip at anything much above telephone call frequencies. But I figured that if there was a chance, if there was anything that could demonstrate a difference in audio quality, I could uncover it with a Pono Player and a set of good headphones (I own a set of Grado SR125e cans, which are outstanding for the price). So I preordered one and yesterday it arrived, in a fun wooden box:

It includes a MicroUSB charger (and cable), an SDXC MicroSD card (64GB, plus the 64GB internal storage), a fawning missive from Neil Young, the instigator of the original Kickstarter, the yellow triangular unit itself (available now in other colours), and no headphones (it's BYO headset):

My original plan was to do an A-B comparison with Pink Floyd's Dark Side of the Moon because it was originally mastered by the godlike Alan Parsons, I have the SACD 30th Anniversary master, and the album is generally considered high quality in all its forms. When I tried to do that, though, several problems rapidly became apparent:

First, the included card is SDXC, and SDXC support (and exFAT) wasn't added to OS X until 10.6.4. Although you can get exFAT support on 10.5 with OSXFUSE, I don't know how good their support is on PowerPC and it definitely doesn't work on Tiger (and I'm not aware of a module for the older MacFUSE that does run on Tiger). That limits you to SDHC cards up to 32GB at least on 10.4, which really hurts on FLAC or ALAC and especially on AIFF.

Second, the internal storage is not accessible directly to the OS. I plugged in the Pono Player to my iMac G4 and it showed up in System Profiler, but I couldn't do anything with it. The 64GB of internal storage is only accessible to the music store app, which brings us to the third problem:

Third, the Pono Music World app (a skinned version of JRiver Media Center) is Intel-only, 10.6+. You can't download tracks any other way right now, which also means you're currently screwed if you use Linux, even on an Intel Mac. And all they had was Dark Side in 44.1kHz/16 bit ... exactly the same as CD!

So I looked around for other options. HDTracks didn't have Dark Side, though they did have The (weaksauce) Endless River and The Division Bell in 96kHz/24 bit. I own both of these, but 96kHz wasn't really what I had in mind, and when I signed up to try a track it turns out they need a downloader also which is also a reskinned JRiver! And their reasoning for this in the FAQ is total crap.

Eventually I was able to find two sites that offer sample tracks I could download in TenFourFox (I had to downsample one for comparison). The first offers multiple formats in WAV, which your Power Mac actually can play, even in 24-bit (but it may be downsampled for your audio chip; if you go to /Applications/Utilities/Audio MIDI Setup.app you can see the sample rate and quantization for your audio output -- my quad G5 offers up to 24/96kHz but my iMac only has 16/44.1). The second was in FLAC, which Audacity crashed trying to convert, MacAmp Lite X wouldn't even recognize, and XiphQT (via QuickTime) played like it was being held underwater by a chainsaw (sample size mismatch, no doubt); I had to convert this by hand. I then put them onto a SDHC card and installed it in the Pono.

Yuck. I was very disappointed in the interface and LCD. I know that display quality wasn't a major concern, but it looks clunky and ugly and has terrible angles (see for yourself!) and on a $400 device that's not acceptable. The UI is very slow sometimes, even with the hardware buttons (just volume and power, no track controls), and the touch screen is very low quality. But I duly tried the built-in Neil Young track, which being an official Pono track turns on a special blue light to tell you it's special, and on my Grados it sounded pretty good, actually. That was encouraging. So I turned off the display and went through a few cycles of A-B testing with a random playlist between the two sets of tracks.

And ... well ... my identification abilities were almost completely statistical chance. In fact, I was slightly worse than chance would predict on the second set of tracks. I can only conclude that Harry Nyquist triumphs. With high quality headphones, presumably high quality DSPs and presumably high quality recordings, it's absolutely bupkis difference for me between CD-quality and Pono-quality.
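For anyone who wants to put a number on "statistical chance" in their own A-B runs, here is a minimal C++ sketch. It assumes every trial is an independent 50/50 guess and computes the probability of scoring at least a given number of correct identifications by luck alone (a one-sided binomial tail). The function name is hypothetical, and no trial counts from this review are implied.

```cpp
#include <cassert>
#include <cmath>

// Probability of getting at least `correct` answers right out of `trials`
// when every answer is an independent 50/50 guess.
// Assumes 0 <= correct <= trials.
double ChanceOfAtLeast(int correct, int trials) {
  assert(correct >= 0 && correct <= trials);
  double total = 0.0;
  for (int k = correct; k <= trials; ++k) {
    // Log of the binomial coefficient C(trials, k), computed with log-gamma
    // so that large trial counts do not overflow.
    double logChoose = std::lgamma(trials + 1.0) - std::lgamma(k + 1.0) -
                       std::lgamma(trials - k + 1.0);
    // Each specific outcome sequence has probability (1/2)^trials.
    total += std::exp(logChoose - trials * std::log(2.0));
  }
  return total;
}
```

As an illustration, ChanceOfAtLeast(5, 10) is about 0.62, so getting half of ten trials right is exactly what guessing looks like. A result only starts to suggest a real audible difference when this probability is small.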

Don't get me wrong: I am happy to hear that other people are concerned about the deficiencies in modern audio engineering -- and making it a marketable feature. We've all heard the "loudness war," for example, which dramatically compresses the dynamic range of previously luxurious tracks into a bafflingly small amplitude range which the uncultured ear, used only to quantity over quality, apparently prefers. Furthermore, early CD masters used RIAA equalization, which overdrove the treble and was completely unnecessary with digital audio, though that grave error hasn't been repeated since at least 1990 or earlier. Fortunately, assuming you get audio engineers who know what they're doing, a modern CD is every bit as good to the human ear as a DVD-Audio disc or an SACD. And if modern music makes a return to quality engineering with high quality intermediates (where 24-bit really does make a difference) and appropriate dynamic range, we'll all be better off.

But the Pono Player doesn't live up to the hype in pretty much any respect. It has line out (which does double as a headphone port to share) and it's high quality for what it does play, so it'll be nice for my hi-fi system if I can get anything on it, but the Sansa Fuze is smaller and more convenient as a portable player and the Pono's going back in the wooden box. Frankly, it feels like it was pushed out half-baked, it's problematic if you don't own a modern Mac, and the imperceptible improvements in audio mean it's definitely not worth the money over what you already own. But that's why you read this blog: I just spent $400 so you don't have to.

http://tenfourfox.blogspot.com/2015/01/and-now-for-something-completely.html

|

|

Tarek Ziadé: Charity Python Code Review |

Raising 2500 euros for a charity is hard. That's what I am trying to do for the Berlin Marathon on Alvarum.

Mind you, this is not to get a bib - I was lucky enough to get one from the lottery. It's just that it feels right to take the opportunity of this marathon to raise money for Doctors without Borders. Whatever my marathon result will be. I am not getting any money out of this, I am paying for all my Marathon fees. Every penny donated goes to MSF (Doctors without Borders).

It's the first time I am doing a fundraiser for a foundation and I guess that I've exhausted all the potential donors among my family, friends and colleagues.

I guess I've reached the point where I have to give back something to the people that are willing to donate.

So here's a proposal: I have been doing Python coding for quite some time, wrote some books in both English and French on the topic, and worked on large scale projects using Python. I have also given a lot of talks at Python conferences around the world.

I am not an expert in any specific field like scientific Python, but I am good at "general Python" and at designing stuff that scales.

I am offering one of the following services:

- Python code review

- Slides review

- Documentation review or translation from English to French

The contract (gosh this is probably very incomplete):

- Your project has to be under an open source license, and available online.

- I am looking for small reviews, between 30 minutes and 4 hours of work I guess.

- You are responsible for the initial guidance, e.g. explaining what specific review you want me to do.

- I am allowed to say no (mostly if by any chance I have tons of proposals, or if I don't feel like I am the right person to review your code.)

- This is on my free time so I can't really give deadlines - however depending on the project and amount of work I will be able to roughly estimate how long it is going to take and when I should be able to do it.

- If I do the work you can't back off if you don't like the result of my reviews. If you do without a good reason, this is mean and I might cry a little.

- I won't be responsible for any damage or liability done to your project because of my review.

- I am not issuing any invoice or anything like that. The fundraising site however will issue a classical invoice when you do the donation. I am not part of that transaction nor responsible for it.

- Once the work is done, I will tell you how long it took, and you are free to give whatever you think is fair and I will happily accept whatever you give to my fundraising. If you give 1 euro for 4 hours of work I might make a sad face, but I will just accept it.

Interested? Mail me! tarek@ziade.org

And if you just want to give to the fundraising it's here: http://www.alvarum.com/tarekziade

http://blog.ziade.org/2015/01/27/charity-python-code-review/

|

|

Air Mozilla: Engineering Meeting |

The weekly Mozilla engineering meeting.


|

|

Michael Kaply: What About Firefox Deployment? |

You might have noticed that I spend most of my resources around configuring Firefox and not around deploying Firefox. There are a couple reasons for that:

- There really isn’t a "one size fits all" solution for Firefox deployment because there are so many products that can be used to deploy software within different organizations.

- Most discussions around deployment devolve into a "I wish Mozilla would do a Firefox MSI" discussion.

That being said, there are some things I can recommend around deploying Firefox on Windows.

If you want to modify the Firefox installer, I’ve done a few posts on this in the past:

If you need to integrate add-ons into that install, I've posted about that as well:

You could also consider asking on the Enterprise Working Group mailing list. There's probably someone that's already figured it out for your software deployment solution.

If you really need an MSI, check out FrontMotion. They've been doing MSI work for quite a while.

And if you really want Firefox to have an official MSI, consider working on bug 598647. That's where an MSI implementation got started but never finished.

http://mike.kaply.com/2015/01/27/what-about-firefox-deployment/

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1122269] no longer have access to https://bugzilla.mozilla.org/cvs-update.log

- [1119184] Securemail incorrectly displays ” You will have to contact bugzilla-admin@foo to reset your password.” for whines

- [1122565] editversions.cgi should focus the version field on page load to cut down on need for mouse

- [1124254] form.dev-engagement-event: More changes to default NEEDINFO

- [1119988] form.dev-engagement-event: disabled accounts causes invalid/incomplete bugs to be created

- [616197] Wrap long bug summaries in dependency graphs, to avoid horizontal scrolling

- [1117345] Can’t choose a resolution when trying to resolve a bug (with canconfirm rights)

- [1125320] form.dev-engagement-event: Two new questions

- [1121594] Mozilla Recruiting Requisition Opening Process Template

- [1124437] Backport upstream bug 1090275 to bmo/4.2 to whitelist webservice api methods

- [1124432] Backport upstream bug 1079065 to bmo/4.2 to fix improper use of open() calls

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

https://globau.wordpress.com/2015/01/28/happy-bmo-push-day-124/

|

|

Jess Klein: Quality Assurance reviews for Design, Functionality and Communications |

This week a few of the features that I have been writing about will be shipping on webmaker.org - the work for Privacy Day and the new on-boarding experience. You might be wondering what we've been up to during that period of time after the project gets coded until the time it goes live. Two magical words: quality assurance (QA). We are still refining the process, and I am very open to suggestions as to how to improve it and streamline it. For the time being, let me walk you through this round of QA on the Privacy Day content.

It all starts out with a github issue

... and a kickoff meeting

The same team who worked on the prototyping phase of the Privacy Day campaign work are responsible for the quality assurance. We met to kick off and map out our plan for going live. This project required three kinds of reviews - that more or less had to happen simultaneously. We broke down the responsibilities like this:

Paul - (communication/marketing) - responsible for preparing the docs and leading a marketing review

Jess - (lead designer) - responsible for preparing docs and leading design review

Bobby - (product manager) - responsible for recruiting participants to do the reviews and to wrangle bug triage.

Cassie - (quality) - responsible for final look and thumbs up to say if the feature is acceptable to ship

Each of us who were responsible for docs wrote up instructions for QA reviewers to follow:

We recruited staff and community to user test on a variety of different devices:

The most effective issues have a screenshot with the problem and a recommended solution. Additionally, it's important to note if this problem is blocking the feature from shipping or not.

We acknowledged when user testers found something useful:

and identified when a problem was out of scope to fix before shipping:

We quickly iterated on fixing bugs and closing issues as a team:

and gave each other some indication when we thought that the problem was fixed sufficiently:

When we were all happy and had the final thumbs-up regarding quality, we then...

Close the github issue and celebrate:

Then we start to make preparations to push the feature live (and snoopy dance a little):

http://jessicaklein.blogspot.com/2015/01/quality-assurance-reviews-for-design_27.html

|

|

Ben Hearsum: Testing syndication with Nikola |

Just a test...

|

|

Doug Belshaw: Considerations when creating a Privacy badge pathway |

Between June and October 2014 I chaired the Badge Alliance working group for Digital and Web Literacies. This was an obvious fit for me, having previously been on the Open Badges team at Mozilla, and currently being Web Literacy Lead.

We used a Google Group to organise our meetings. Our Badge Alliance liaison was my former colleague Carla Casilli. The group contained 208 people, although only around 10% of that number were active at any given time.

The deliverable we decided upon was a document detailing considerations individuals/organisations should take into account when creating a Privacy badge pathway.

We used Mozilla’s Web Literacy Map as a starting point for this work, mainly because many of us had been part of the conversations that led to the creation of it. Our discussions moved from monthly, to fortnightly, to weekly. They were wide-ranging and included many options. However, the guidance we ended up providing is as simple and as straightforward as possible.

For example, we advocated the creation of five badges:

- Identifying rights retained and removed through user agreements

- Taking steps to secure non-encrypted connections

- Explaining ways in which computer criminals are able to gain access to user information

- Managing the digital footprint of an online persona

- Identifying and taking steps to keep important elements of identity private

We presented options for how learners would level-up using these badges:

- Trivial Pursuit approach

- Majority approach

- Cluster approach

More details on the badges and approaches can be found in the document. We also included more speculative material around federation. This involved exploring the difference between pathways, systems and ecosystems.

The deliverable from this working group is currently still on Google Docs, but if there’s enough interest we’ll port it to GitHub pages so it looks a bit like the existing Webmaker whitepaper. This work is helping inform an upcoming whitepaper around Learning Pathways which should be ready by end of Q1 2015.

Karen Smith, co-author of the new whitepaper and part of the Badge Alliance working group, is also heading up a project (that I’m involved with in a small way) for the Office of the Privacy Commissioner of Canada. This is also informed in many ways by this work.

Comments? Questions? Comment directly on the document, tweet me (@dajbelshaw) or email me: doug@mozillafoundation.org

|

|

Stormy Peters: Your app is not a lottery ticket |

Many app developers are secretly hoping to win the lottery. You know all those horrible free apps full of ads? I bet most of them were hoping to be the next Flappy Bird app. (The Flappy Bird author was making $50K/day from ads for a while.)

The problem is that when you are that focused on making millions, you are not focused on making a good app that people actually want. When you add ads before you add value, you’ll end up with no users no matter how strategically placed your ads are.

So, the secret to making millions with your app?

- Find a need or problem that people have that you can solve.

- Solve the problem.

- Make your users awesome. Luke first sent me a pointer to Kathy Sierra’s idea of making your users awesome. Instagram let people create awesome pictures. Then their friends asked them how they did it …

- Then monetize. (You can think about this earlier but don’t focus on it until you are doing well.)

If you are a good app developer or web developer, you’ll probably find it easier to do well financially helping small businesses around you create the apps and web pages they need than you will trying to randomly guess what game people might like. (If you have a good idea for a game, that you are sure you and your friends and then perhaps others would like to play, go for it!)


|

|

Alistair Laing: Right tool for the Job |

I’m still keen as ever and enjoying the experience of developing a browser extension. Last week was the first time I hung out in Google Hangouts with Jan and Florent. On first impressions Google Hangouts is pretty sweet. It was smooth and clear (I’m not sure how much of that was down to broadband speeds and connection quality). I learnt so much in that first one-hour session and enjoyed chatting to them face-to-face (in digital terms).

TOO COOL to Minify & TOO SASS’y for tools

One of the things I learnt was how to approach JS/CSS. My front-end developer head tells me to always minify and concatenate files to reduce HTTP requests and, from a maintenance side, to look at using a CSS pre-processor for variables etc. When it comes to developing browser extensions, you do not have the same issues, for the following reasons:

- No HTTP requests are made, because the files are packaged with the extension and therefore installed on the client machine anyway. There’s also NO network latency because of this.

- File sizes aren’t that important for browser extensions (for Firefox at least). The extension is packaged by basically zipping all the contents together, which “reduces” the file sizes anyway.

- Whilst attempting to fix an issue I came across Mozilla’s implementation of CSS variables, which sort of solves the issue around CSS variables and modularising the code.

Later today, I’m scheduled to hang out with Jan again, and I’m thinking about writing another post about XUL.

https://alistairlaing.wordpress.com/2015/01/27/right-tool-for-the-job/

|

|

Mozilla Release Management Team: Firefox 36 beta3 to beta4 |

In this beta release, for both Desktop & Mobile, we fixed some issues in JavaScript and landed some stability fixes. We also increased the memory size of some components to decrease the number of crashes (examples: bugs 869208 & 1124892).

- 40 changesets

- 121 files changed

- 1528 insertions

- 1107 deletions

| Extension | Occurrences |
| --- | --- |
| cpp | 28 |
| h | 21 |
| c | 16 |
| 1 | 1 |
| java | 9 |
| html | 7 |
| py | 4 |
| js | 4 |
| mn | 3 |
| ini | 3 |
| mk | 2 |
| cc | 2 |
| xml | 1 |
| xhtml | 1 |
| svg | 1 |
| sh | 1 |
| in | 1 |
| idl | 1 |
| dep | 1 |
| css | 1 |
| build | 1 |

| Module | Occurrences |
| --- | --- |
| security | 46 |
| js | 15 |
| dom | 15 |
| mobile | 10 |
| browser | 10 |
| editor | 6 |
| gfx | 5 |
| testing | 4 |
| toolkit | 3 |
| ipc | 2 |
| xpcom | 1 |
| services | 1 |
| layout | 1 |
| image | 1 |

List of changesets:

| Author | Changeset |
| --- | --- |
| Cameron McCormack | Bug 1092363 - Disable Bug 931668 optimizations for the time being. r=dbaron a=abillings - 126d92ac00e9 |
| Tim Taubert | Bug 1085369 - Move key wrapping/unwrapping tests to their own test file. r=rbarnes, a=test-only - afab84ec4e34 |
| Tim Taubert | Bug 1085369 - Move other long-running tests to separate test files. r=keeler, a=test-only - d0660bbc79a1 |
| Tim Taubert | Bug 1093655 - Fix intermittent browser_crashedTabs.js failures. a=test-only - 957b4a673416 |
| Benjamin Smedberg | Bug 869208 - Increase the buffer size we're using to deliver network streams to OOPP plugins. r=aklotz, a=sledru - cb0fd5d9a263 |
| Nicholas Nethercote | Bug 1122322 (follow-up) - Fix busted paths in worker memory reporter. r=bent, a=sledru - a99eabe5e8ea |
| Bobby Holley | Bug 1123983 - Don't reset request status in MediaDecoderStateMachine::FlushDecoding. r=cpearce, a=sledru - e17127e00300 |
| Jean-Yves Avenard | Bug 1124172 - Abort read if there's nothing to read. r=bholley, a=sledru - cb103a939041 |
| Jean-Yves Avenard | Bug 1123198 - Run reset parser state algorithm when aborting. r=cajbir, a=sledru - 17830430e6be |
| Martyn Haigh | Bug 1122074 - Normal Tabs tray has an empty state. r=mcomella, a=sledru - c1e9f11144a5 |
| Michael Comella | Bug 1096958 - Move TilesRecorder instance into TopSitesPanel. r=bnicholson, a=sledru - d6baa06d52b4 |
| Michael Comella | Bug 1110555 - Use real device dimensions when calculating LWT bitmap sizes. r=mhaigh, a=sledru - 2745f66dac6f |
| Michael Comella | Bug 1107386 - Set internal container height as height of MenuPopup. r=mhaigh, a=sledru - e4e2855e992c |
| Ehsan Akhgari | Bug 1120233 - Ensure that the delete command will stay enabled for password fields. r=roc, ba=sledru - 34330baf2af6 |
| Philipp Kewisch | Bug 1084066 - plugins and extensions moved to wrong directory by mozharness. r=ted,a=sledru - 64fb35ee1af6 |
| Bob Owen | Bug 1123245 Part 1: Enable an open sandbox on Windows NPAPI processes. r=josh, r=tabraldes, a=sledru - 2ab5add95717 |
| Bob Owen | Bug 1123245 Part 2: Use the USER_NON_ADMIN access token level for Windows NPAPI processes. r=tabraldes, a=sledru - f7b5148c84a1 |
| Bob Owen | Bug 1123245 Part 3: Add prefs for the Windows NPAPI process sandbox. r=bsmedberg, a=sledru - 9bfc57be3f2c |
| Makoto Kato | Bug 1121829 - Support redirection of kernel32.dll for hooking function. r=dmajor, a=sylvestre - d340f3d3439d |
| Ting-Yu Chou | Bug 989048 - Clean up emulator temporary files and do not overwrite userdata image. r=ahal, a=test-only - 89ea80802586 |
| Richard Newman | Bug 951480 - Disable test_tokenserverclient on Android. a=test-only - 775b46e5b648 |
| Jean-Yves Avenard | Bug 1116007 - Disable inconsistent test. a=test-only - 5d7d74f94d6a |
| Kai Engert | Bug 1107731 - Upgrade Mozilla 36 to use NSS 3.17.4. a=sledru - f4e1d64f9ab9 |
| Gijs Kruitbosch | Bug 1098371 - Create localized version of sslv3 error page. r=mconley, a=sledru - e6cefc687439 |
| Masatoshi Kimura | Bug 1113780 - Use SSL_ERROR_UNSUPPORTED_VERSION for SSLv3 error page. r=gijs, a=sylvestre (see Bug 1098371) - ea3b10634381 |
| Jon Coppeard | Bug 1108007 - Don't allow GC to observe uninitialized elements in cloned array. r=nbp, a=sledru - a160dd7b5dda |
| Byron Campen [:bwc] | Bug 1123882 - Fix case where offset != 0. r=derf, a=abillings - 228ee06444b5 |
| Mats Palmgren | Bug 1099110 - Add a runtime check before the downcast in BreakSink::SetCapitalization. r=jfkthame, a=sledru - 12972395700a |
| Mats Palmgren | Bug 1110557. r=mak, r=gavin, a=abillings - 3f71dcaa9396 |
| Glenn Randers-Pehrson | Bug 1117406 - Fix handling of out-of-range PNG tRNS values. r=jmuizelaar, a=abillings - a532a2852b2f |
| Tom Schuster | Bug 1111248. r=Waldo, a=sledru - 7f44816c0449 |
| Tom Schuster | Bug 1111243 - Implement ES6 proxy behavior for IsArray. r=efaust, a=sledru - bf8644a5c52a |
| Ben Turner | Bug 1122750 - Remove unnecessary destroy calls. r=khuey, a=sledru - 508190797a80 |
| Mark Capella | Bug 851861 - Intermittent testFlingCorrectness, etc al. dragSync() consumers. r=mfinkle, a=sledru - 3aca4622bfd5 |
| Jan de Mooij | Bug 1115776 - Fix LApplyArgsGeneric to always emit the has-script check. r=shu, a=sledru - 9ac8ce8d36ef |
| Nicolas B. Pierron | Bug 1105187 - Uplift the harness changes to fix jit-test failures. a=test-only - b17339648b55 |
| Nicolas Silva | Bug 1119019 - Avoid destroying a SharedSurface before its TextureClient/Host pair. r=sotaro, a=abillings - 6601b8da1750 |
| Markus Stange | Bug 1117304 - Also do the checks at the start of CopyRect in release builds. r=Bas, a=sledru - 4417d345698a |
| Markus Stange | Bug 1117304 - Make sure the tile filter doesn't call CopyRect on surfaces with different formats. r=Bas, a=sledru - bc7489448a98 |
| David Major | Bug 1124892 - Adjust Breakpad reservation for xul.dll inflation. r=bsmedberg, a=sledru - 59aa16cfd49f |

http://release.mozilla.org/statistics/36/2015/01/27/fx-36-b3-to-b4.html

|

|

Ian Bicking: A Product Journal: To MVP Or Not To MVP |

I’m going to try to journal the process of a new product that I’m developing in Mozilla Cloud Services. My previous post was The Tech Demo, and the first in the series is Conception.

The Minimal Viable Product

The Minimal Viable Product is a popular product development approach at Mozilla, and judging from Hacker News it is popular everywhere (but that is a wildly inaccurate way to judge common practice).

The idea is that you build the smallest thing that could be useful, and you ship it. The idea isn’t to make a great product, but to make something so you can learn in the field. A couple definitions:

The Minimum Viable Product (MVP) is a key lean startup concept popularized by Eric Ries. The basic idea is to maximize validated learning for the least amount of effort. After all, why waste effort building out a product without first testing if it’s worth it.

– from How I built my Minimum Viable Product (emphasis in original)

I like this phrase “validated learning.” Another definition:

A core component of Lean Startup methodology is the build-measure-learn feedback loop. The first step is figuring out the problem that needs to be solved and then developing a minimum viable product (MVP) to begin the process of learning as quickly as possible. Once the MVP is established, a startup can work on tuning the engine. This will involve measurement and learning and must include actionable metrics that can demonstrate cause and effect question.

– Lean Startup Methodology (emphasis added)

I don’t like this model at all: “once the MVP is established, a startup can work on tuning the engine.” You tune something that works the way you want it to, but isn’t powerful or efficient or fast enough. You’ve established almost nothing when you’ve created an MVP; no aspect of the product is validated, so it would be premature to tune. But I see this antipattern happen frequently: get an MVP out quickly, often shutting down critically engaged deliberation in order to Just Get It Shipped, then use that product as the model for further incremental improvements. Just Get It Shipped is okay, incrementally improving products is okay, but together they are boring and uncreative.

There’s another broad discussion to be had another time about how to enable positive and constructive critical engagement around a project. It’s not easy, but that’s where learning happens, and the purpose of the MVP is to learn, not to produce. In contrast I find myself impressed by the sheer willfulness of the Half-Life development process, which apparently involved months of six-hour design meetings, four days a week, producing large and detailed design documents. Maybe I’m impressed because it sounds so exhausting, a feat of endurance. And perhaps it implies that waterfall can work if you invest in it properly.

Plan plan plan

I have a certain respect for this development pattern that Dijkstra describes:

Q: In practice it often appears that pressures of production reward clever programming over good programming: how are we progressing in making the case that good programming is also cost effective?

A: Well, it has been said over and over again that the tremendous cost of programming is caused by the fact that it is done by cheap labor, which makes it very expensive, and secondly that people rush into coding. One of the things people learn in colleges nowadays is to think first; that makes the development more cost effective. I know of at least one software house in France, and there may be more because this story is already a number of years old, where it is a firm rule of the house, that for whatever software they are committed to deliver, coding is not allowed to start before seventy percent of the scheduled time has elapsed. So if after nine months a project team reports to their boss that they want to start coding, he will ask: “Are you sure there is nothing else to do?” If they say yes, they will be told that the product will ship in three months. That company is highly successful.

– from Interview Prof. Dr. Edsger W. Dijkstra, Austin, 04–03–1985

Or, a warning from a page full of this kind of quote: “Weeks of programming can save you hours of planning.” The planning process Dijkstra describes is intriguing; it says something like: if you spend two weeks making a plan for how you’ll complete a project in two weeks then it is an appropriate investment to spend another week of planning to save half a week of programming. Or, if you spend a month planning for a month of programming, then you haven’t invested enough in planning to justify that programming work – to ensure the quality, to plan the order of approach, to understand the pieces that fit together, to ensure the foundation is correct, ensure the staffing is appropriate, and so on.
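To make the arithmetic concrete, here is a small sketch of the "seventy percent" rule applied to the schedules mentioned above. The function names and the `THRESHOLD` constant are my own labels for illustration; the interview only gives the rule and the nine-months-plus-three example.

```javascript
// Fraction of the total schedule that has elapsed before coding starts.
function planningShare(planningTime, codingTime) {
  return planningTime / (planningTime + codingTime);
}

// The "seventy percent" rule quoted in the interview (assumed to be inclusive).
var THRESHOLD = 0.7;

function mayStartCoding(planningTime, codingTime) {
  return planningShare(planningTime, codingTime) >= THRESHOLD;
}

// Dijkstra's example: nine months of planning, three months of coding.
console.log(planningShare(9, 3), mayStartCoding(9, 3));

// Two weeks of planning for two weeks of programming.
console.log(planningShare(2, 2), mayStartCoding(2, 2));

// One extra week of planning that saves half a week of programming.
console.log(planningShare(3, 1.5), mayStartCoding(3, 1.5));
```

Read this way, the nine-plus-three schedule sits at 75% planning, an even split sits at 50%, and trading one more week of planning for half a week less programming moves the split to about 67%, closer to the rule but still short of it.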

I believe “Waterfall Design” gets much of its negative connotation from a lack of good design. A Waterfall process requires the design to be very very good. With Waterfall the design is too important to leave it to the experts, to let the architect arrange technical components, the program manager to arrange schedules, the database architect to design the storage, and so on. It’s anti-collaborative, disengaged. It relies on intuition and common sense, and those are not powerful enough. I’ll quote Dijkstra again:

The usual way in which we plan today for tomorrow is in yesterday’s vocabulary. We do so, because we try to get away with the concepts we are familiar with and that have acquired their meanings in our past experience. Of course, the words and the concepts don’t quite fit because our future differs from our past, but then we stretch them a little bit. Linguists are quite familiar with the phenomenon that the meanings of words evolve over time, but also know that this is a slow and gradual process.

It is the most common way of trying to cope with novelty: by means of metaphors and analogies we try to link the new to the old, the novel to the familiar. Under sufficiently slow and gradual change, it works reasonably well; in the case of a sharp discontinuity, however, the method breaks down: though we may glorify it with the name “common sense”, our past experience is no longer relevant, the analogies become too shallow, and the metaphors become more misleading than illuminating. This is the situation that is characteristic for the “radical” novelty.

Coping with radical novelty requires an orthogonal method. One must consider one’s own past, the experiences collected, and the habits formed in it as an unfortunate accident of history, and one has to approach the radical novelty with a blank mind, consciously refusing to try to link it with what is already familiar, because the familiar is hopelessly inadequate. One has, with initially a kind of split personality, to come to grips with a radical novelty as a dissociated topic in its own right. Coming to grips with a radical novelty amounts to creating and learning a new foreign language that can not be translated into one’s mother tongue. (Any one who has learned quantum mechanics knows what I am talking about.) Needless to say, adjusting to radical novelties is not a very popular activity, for it requires hard work. For the same reason, the radical novelties themselves are unwelcome.

– from EWD 1036, On the cruelty of really teaching computing science

Research

All this praise of planning implies you know what you are trying to make. Unlikely!

Coding can be a form of planning. You can’t research how interactions feel without having an actual interaction to look at. You can’t figure out how feasible some techniques are without trying them. Planning without collaborative creativity is dull, planning without research is just documenting someone’s intuition.

The danger is that when you are planning with code, it feels like execution. You can plan to throw one away to put yourself in the right state of mind, but I think it is better to simply be clear and transparent about why you are writing the code you are writing. Transparent because the danger isn’t just that you confuse your coding with execution, but that anyone else is likely to confuse the two as well.

So code up a storm to learn, code up something usable so people will use it and then you can learn from that too.

My own conclusion…

I’m not making an MVP. I’m not going to make a maximum viable product either – rather, the next step in the project is not to make a viable product. The next stage is research and learning. Code is going to be part of that. Dogfooding will be part of it too, because I believe that’s important for learning. I fear thinking in terms of “MVP” would let us lose sight of the why behind this iteration – it is a dangerous abstraction during a period of product definition.

Also, if you’ve gotten this far, you’ll see I’m not creating minimal viable blog posts. Sorry about that.

http://www.ianbicking.org/blog/2015/01/product-journal-mvp.html

|

|

Stormy Peters: 7 reasons asynchronous communication is better than synchronous communication in open source |

Traditionally, open source software has relied primarily on asynchronous communication. While there are probably quite a few synchronous conversations on IRC, most project discussions and decisions will happen on asynchronous channels like mailing lists, bug tracking tools and blogs.

I think there’s another reason for this. Synchronous communication is difficult for an open source project. For any project where people are distributed. Synchronous conversations are:

- Inconvenient. It’s hard to schedule synchronous meetings across time zones. Just try to pick a good time for Australia, Europe and California.

- Logistically difficult. It’s hard to schedule a meeting for people that are working on a project at odd hours that might vary every day depending on when they can fit in their hobby or volunteer job.

- Slower. If you have more than 2-3 people you need to get together every time you make a decision, things will move slower. I have a project right now that we are kicking off, and the team wants to do everything in meetings. We had several meetings last week and one this week. Asynchronously we could have had several rounds of discussion by now.

- Expensive for many people. When I first started at GNOME, it was hard to get some of our board members on a phone call. They couldn’t call international numbers, or couldn’t afford an international call and they didn’t have enough bandwidth for an internet voice call. We ended up using a conference call line from one of our sponsor companies. Now it’s video.

- Logistically difficult. Mozilla does most of our meetings as video meetings. Video is still really hard for many people. Even with my pretty expensive, supposedly high end internet in a developed country, I often have bandwidth problems when participating in video calls. Now imagine I’m a volunteer from Nigeria. My electricity might not work all the time, much less my high speed internet.

- Language. Open source software projects work primarily in English and most of the world does not speak English as their first language. Asynchronous communication gives them a chance to compose their messages, look up words and communicate more effectively.

- Confusing. Discussions and decisions are often made by a subset of the project and unless the team members are very diligent the decisions and rationale are often not communicated out broadly or effectively. You lose the history behind decisions that way too.

There are some major benefits to synchronous conversation:

- Relationships. You build relationships faster. It’s much easier to get to know the person.

- Understanding. Questions and answers happen much faster, especially if the question is hard to formulate or understand. You can quickly go back and forth and get clarity on both sides. They are also really good for difficult topics that might be easily misinterpreted or misunderstood over email where you don’t have tone and body language to help convey the message.

- Quicker. If you only have 2-3 people, it’s faster to talk to them than to type it all out. Once you have more than 2-3, you lose that advantage.

I think as new technologies, both synchronous and asynchronous, become mainstream, open source software projects will have to figure out how to incorporate them. For example, at Mozilla, we’ve been working on how video can be a part of our projects. Unfortunately, video meetings usually just add more synchronous conversations that are hard to share widely, but we work on taking notes, sending notes to mailing lists and recording meetings to try to get the relationship and communication benefits of video meetings while maintaining good open source software project practices. I personally would like to see us use more asynchronous tools, as I think video and synchronous tools benefit full-time employees at the expense of volunteer involvement.

How does your open source software project use asynchronous and synchronous communication tools? How’s the balance working for you?


|

|

Darrin Henein: Rapid Prototyping with Gulp, Framer.js and Sketch: Part One |

When I save my Sketch file, my Framer.js prototype updates and reloads instantly.

Rationale

The process of design is often thought of as being entirely generative–people who design things study a particular problem, pull out their sketchbooks, markers and laptops, and produce artifacts which slowly but surely progress towards some end result which then becomes “The Design” of “The Thing”. It is seen as an additive process, whereby each step builds upon the previous, sometimes with changes or modifications which solve issues brought to light by the earlier work.

Early in my career, I would sit at my desk and look with disdain at all the crumpled-paper that filled my trash bin and cherish that one special solution that made the cut. The bin was filled with all of my “bad ideas”. It was overflowing with “failed” attempts before I finally “got it right”. It took me some time, but I’ve slowly learned that the core of my design work is defined not by that shiny mockup or design spec I deliver, but more truly by the myriad of sketches and ideas that got me there. If your waste bin isn’t full by the end of a project, you may want to ask yourself if you’ve spent enough time exploring the solution space.

I really love how Facebook’s Product Design Director Julie Zhuo put it in her essay “Junior Designers vs. Senior Designers”, where she illustrates (in a very non-scientific, but effective way) the difference in process that experience begets. The key delta to me is the singularity of the Junior Designer’s process, compared to the exploratory, branching, subtractive process of the more seasoned designer. Note all the dead ends and occasions where the senior designer just abandons an idea or concept. They clearly have a full trash bin by the end of this journey. Through the process of evaluation and subtraction, a final result is reached. The breadth of ideas explored and abandoned is what defines the process, rather than the evolution of a single idea. It is important to achieve this breadth of ideation to ensure that the solution you commit to was not just a lucky one, but a solution that was vetted against a variety of alternatives.

The unfortunate part of this realization is that often it is just that – an idealized process which faces little conceptual opposition but (in my experience) is often sacrificed in the name of speed or deadlines. Generating multiple sketches is not a huge cost, and is one of the primary reasons so much exploration should take place at that fidelity. Interactions, behavioural design and animations, however, are much more costly to generate, and so the temptation there is to iterate on an idea until it feels right. While this is not inherently a bad thing, wouldn’t it be nice if we could iterate and explore things like animations with the same efficiency we experience with sketching?

As a designer with the ability to write some code, my first goal with any project is to eliminate any inefficiencies – let me focus on the design and not waste time elsewhere. I’m going to walk through a framework I’ve developed during a recent project, but the principle is universal – eliminate or automate the things you can, and maximize the time you spend actually problem-solving and designing.

Designing an Animation Using Framer.js and Sketch

Get the Boilerplate Project on GitHub

User experience design has become a much more complex field as hardware and software have evolved to allow increasingly fluid, animated, and dynamic interfaces. When designing native applications (especially on mobile platforms such as Android or iOS) there is both an expectation and great value to leverage animation in our UI. Whether to bring attention to an element, educate the user about the hierarchy of the screens in an app, or just to add a moment of delight, animation can be a powerful tool when used correctly. As designers, we must now look beyond Photoshop and static PNG files to define our products, and leverage tools like Keynote or HTML to articulate how these interfaces should behave.

My current tool of choice is a library called framer.js, which is an open-source framework for prototyping UI. For visual design I use Sketch. I’m going to show you how I combine these two tools to provide me with a fast, automated, and iterative process for designing animations.

I am also aware that Framer Studio exists, as well as Framer Generator. These are both amazing tools. However, I am looking for something as automated and low-friction as possible; both of these tools require some steps between modifying the design and seeing the results. Let’s look at how I achieved a fully automated solution to this problem.

Automating Everything With Gulp

Here is the goal: let me work in my Sketch and/or CoffeeScript file, and just by saving, update my animated prototype with the new code and images without me having to do anything. Lofty, I know, but let’s see how it’s done.

Gulp is a JavaScript-based build tool, the latest in a series of incredible node-powered command line build tools.

The gulpfile is just a list of tasks, or commands, that we can run in different orders or timings. Let’s break down my gulpfile.js:

    // External libraries used by the tasks below.
    var gulp = require('gulp');
    var coffee = require('gulp-coffee');
    var gutil = require('gulp-util');
    var watch = require('gulp-watch');
    var sketch = require('gulp-sketch');
    var browserSync = require('browser-sync');

This section at the top just requires (imports) the external libraries I’m going to use. These include Gulp itself, CoffeeScript support (which for me is faster than writing JavaScript), a watch utility to run code whenever a file changes, and a plugin which lets me parse and export from Sketch files.

    gulp.task('build', ['copy', 'coffee', 'sketch']);
    gulp.task('default', ['build', 'watch']);

Next, I set up the tasks I’d like to be able to run. Notice that the build and default tasks are just sets of other tasks. This lets me maintain a separation of concerns and have tasks that do only one thing.
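To see what "sets of other tasks" means in practice, here is a small standalone sketch that expands a composite task into everything it pulls in. It does not use Gulp at all: the `tasks` table and the `expand` helper are hypothetical, written only to mirror the task names from the gulpfile.

```javascript
// Hypothetical task table mirroring the names in the gulpfile:
// each task maps to the list of tasks it depends on.
var tasks = {
  copy: [],
  coffee: [],
  sketch: [],
  watch: [],
  build: ['copy', 'coffee', 'sketch'],
  default: ['build', 'watch']
};

// Expand a task into the flat list of tasks it triggers, dependencies first.
// This sketch assumes the table has no circular dependencies.
function expand(name, seen) {
  seen = seen || [];
  if (seen.indexOf(name) !== -1) return seen;
  tasks[name].forEach(function (dep) { expand(dep, seen); });
  seen.push(name);
  return seen;
}

console.log(expand('default'));
// [ 'copy', 'coffee', 'sketch', 'build', 'watch', 'default' ]
```

The list only shows which tasks are pulled in; as far as I know, Gulp may run independent tasks concurrently rather than strictly in this order.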

    // Re-run the matching task when a source file changes,
    // and serve the build directory with automatic reloads.
    gulp.task('watch', function(){
      gulp.watch('./src/*.coffee', ['coffee']);
      gulp.watch('./src/*.sketch', ['sketch']);

      browserSync({
        server: {
          baseDir: 'build'
        },
        browser: 'google chrome',
        injectChanges: false,
        files: ['build/**/*.*'],
        notify: false
      });
    });

This is the watch task. I tell Gulp to watch my src folder for CoffeeScript files and Sketch files; these are the only source files that define my prototype and will be the ones I change often. When a CoffeeScript or Sketch file changes, the coffee or sketch tasks are run, respectively.

Next, I set up browserSync to push any changed files within the build directory to my browser, which in this case is Chrome. This keeps my prototype in the browser up-to-date without having to hit refresh. Notice I’m also specifying a server: key, which essentially spins up a web server with the files in my build directory.
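The routing that the two watch rules perform can be sketched without Gulp as a plain function from a changed file to a task name. The `rules` table and the `taskFor` helper below are hypothetical and only approximate how the `./src/*.coffee` and `./src/*.sketch` patterns behave.

```javascript
var path = require('path');

// Hypothetical routing table: file extension -> task name, as in the watch task.
var rules = { '.coffee': 'coffee', '.sketch': 'sketch' };

// Return the task to run for a changed file, or null if the file is not watched.
function taskFor(file) {
  // Only files directly inside src/ match patterns of the form './src/*.ext'.
  if (path.dirname(path.normalize(file)) !== 'src') return null;
  return rules[path.extname(file)] || null;
}

console.log(taskFor('./src/app.coffee'));
console.log(taskFor('./src/app.sketch'));
console.log(taskFor('./src/index.html'));
```

Files that match neither rule, such as `index.html`, trigger nothing here, which is consistent with the watch task naming only the CoffeeScript and Sketch sources.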

    // Compile every CoffeeScript file in src/ to JavaScript in build/.
    gulp.task('coffee', function(){
      gulp.src('src/*.coffee')
        .pipe(coffee({bare: true}).on('error', gutil.log))
        .pipe(gulp.dest('build/'));
    });

The second major task is coffee. This, as you may have guessed, simply compiles any *.coffee files in my src folder to JavaScript, and places the resulting JS file in my build folder. Because we are keeping our prototype in one app.coffee file, there is no need for concatenation or minification.

    // Export the slices defined in each Sketch file as PNGs into build/images.
    gulp.task('sketch', function(){
      gulp.src('src/*.sketch')
        .pipe(sketch({
          export: 'slices',
          format: 'png',
          saveForWeb: true,
          scales: 1.0,
          trimmed: false
        }))
        .pipe(gulp.dest('build/images'));
    });

The sketch task is also aptly named, as it is responsible for exporting the slices I have defined in my Sketch file to PNGs, which can then be used in the prototype. In Sketch, you can mark a layer or group as “exportable”, and this task only looks for those assets.

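    // Copy static files (HTML, libraries, images) from src/ to build/.
    gulp.task('copy', function(){
      gulp.src('src/index.html')
        .pipe(gulp.dest('build'));
      gulp.src('src/lib/**/*.*')
        .pipe(gulp.dest('build/lib'));
      // No spaces inside the braces: a space would become part of the extension.
      gulp.src('src/images/**/*.{png,jpg,svg}')
        .pipe(gulp.dest('build/images'));
    });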

The last task is simply housekeeping. It is only run once, when you first start the Gulp process on the command line. It copies any HTML files, JS libraries, or other images I want available to my prototype. This lets me keep everything in my src folder, which is a best practice. As a general rule of thumb for build systems, avoid placing anything in your output directory (in this case, build), as you jeopardize your ability to have repeatable builds.

Recall my default task was defined above, as:

    gulp.task('default', ['build', 'watch']);

This means that by running $ gulp in this directory from the command line, my default task is kicked off. It won’t exit without ctrl-C, as watch will run indefinitely. This lets me run this command only once, and get to work.

    $ gulp

So where are we now? If everything worked, you should see your prototype available at http://localhost:3000. Saving either app.coffee or app.sketch should trigger the watch we set up, and compile the appropriate assets to our build directory. This change of files in the build directory should trigger BrowserSync, which will then update our prototype in the browser. Voila! We can now work in either of 2 files (app.coffee or app.sketch), and just by saving them have our shareable, web-based prototype updated in place. And the best part is, I only had to set this up once! I can now use this framework with my next project and immediately begin designing, with a hyper-fast iteration loop to facilitate that work.

The next step is to actually design the animation using Sketch and framer.js, which deserves its own post altogether and will be covered in Part Two of this series.

Follow me on twitter @darrinhenein to be notified when part two is available.

|

|

Mark Surman: Mozilla Participation Plan (draft) |

Mozilla needs a more creative and radical approach to participation in order to succeed. That is clear. And, I think, pretty widely agreed upon across Mozilla at this stage. What’s less clear: what practical steps do we take to supercharge participation at Mozilla? And what does this more creative and radical approach to participation look like in the everyday work and lives of people involved in Mozilla?

This post outlines what we’ve done to begin answering these questions and, importantly, it’s a call to action for your involvement. So read on.

Over the past two months, we’ve written a first draft Mozilla Participation Plan. This plan is focused on increasing the impact of participation efforts already underway across Mozilla and on building new methods for involving people in Mozilla’s mission. It also calls for the creation of new infrastructure and ways of working that will help Mozilla scale its participation efforts. Importantly, this plan is meant to amplify, accelerate and complement the many great community-driven initiatives that already exist at Mozilla (e.g. SuMo, MDN, Webmaker, community marketing, etc.) — it’s not a replacement for any of these efforts.

At the core of the plan is the assumption that we need to build a virtuous circle between 1) participation that helps our products and programs succeed and 2) people getting value from participating in Mozilla. Something like this:

This is a key point for me: we have to simultaneously pay attention to the value participation brings to our core work and to the value that participating provides to our community. Over the last couple of years, many of our efforts have looked at just one side or the other of this circle. We can only succeed if we’re constantly looking in both directions.

With this in mind, the first steps we will take in 2015 include: 1) investing in the ReMo platform and the success of our regional communities and 2) better connecting our volunteer communities to the goals and needs of product teams. At the same time, we will: 3) start a Task Force, with broad involvement from the community, to identify and test new approaches to participation for Mozilla.

The belief is that these activities will inject the energy needed to strengthen the virtuous circle reasonably quickly. We’ll know we’re succeeding if a) participation activities are helping teams across Mozilla measurably advance product and program goals and b) volunteers are getting more value out of their participation in Mozilla. These are key metrics we’re looking at for 2015.

Over the longer run, there are bigger ambitions: an approach to participation that is at once massive and diverse, local and global. There will be many more people working effectively and creatively on Mozilla activities than we can imagine today, without the need for centralized control. This will result in a different and better, more diverse and resilient Mozilla — an organization that can consistently have massive positive impact on the web and on people’s lives over the long haul.

Making this happen means involvement and creativity from people across Mozilla and our community. However, a core team is needed to drive this work. In order to get things rolling, we are creating a small set of dedicated Participation Teams:

- A newly formed Community Development Team that will focus on strengthening ReMo and tying regional communities into the work of product and program groups.

- A participation ‘task force’ that will drive a broad conversation and set of experiments on what new approaches could look like.

- And, eventually, a Participation Systems Team will build out new infrastructure and business processes that support these new approaches across the organization.

For the time being, these teams will report to Mitchell and me. We will likely create an executive level position later in the year to lead these teams.

As you’ll see in the plan itself, we’re taking very practical and action-oriented steps, while also focusing on and experimenting with longer-term questions. The Community Development Team is working on initiatives that are concrete and can have impact soon. But overall we’re just at the beginning of figuring out ‘radical participation’.

This means there is still a great deal of scope for you to get involved — the plans are still evolving and your insights will improve our process and the plan. We’ll come out with information soon on more structured ways to engage with what we’re calling the ‘task force’. In the meantime, we strongly encourage your ideas right away on ways the participation teams could be working with products and programs. Just comment here on this post or reach out to Mitchell or me.

PS. I promised a follow-up on my “What is radical participation?” post, drawing on comments people made. This is not that. A follow-up post on that topic is still coming.

https://commonspace.wordpress.com/2015/01/26/participationplan/

|

|

Mozilla Reps Community: Rep of the month: January 2015 |

Irvin Chen was an inspiring contributor last month, and we want to recognize his great work as a Rep.

Irvin has been organizing the weekly MozTW Lab, along with other events that spread Mozilla in local community spaces in Taiwan, such as the Spark meetup, the d3.js meetup, and the Wikimedia mozcafe.

He also helped run an l10n sprint covering video subtitles, Mozilla links, SUMO, and Webmaker on Transifex.

Congratulations, Irvin, on your awesome work!

Don’t forget to congratulate him on Discourse!

https://blog.mozilla.org/mozillareps/2015/01/26/rep-of-the-month-january-2015/

|

|

Ben Kero: Attempts to source large E-Ink screens for a laptop-like device |

One idea that’s been bouncing around in my head for the last few years has been a laptop with an E-Ink display. I would have thought this would be a niche that had been carved out already, but it doesn’t seem that any companies are interested in exploring it.

I use my laptop in some non-traditional environments, such as outdoors in direct sunlight. Almost all laptops are abysmal in a scenario like this. E-Ink screens are a natural response to this requirement. Unlike traditional TFT-LCD screens, E-Ink panels are meant to be viewed with an abundance of natural light. As a human, I too enjoy natural light.

Besides my fantasies of hacking on the beach, these would be very useful to combat the raster burn that seems to be so common among regular computer users. Since TFT-LCDs act as artificial sunlight, they can have very negative side effects on the eyes, and indirectly on the brain. Since E-Ink screens work without a backlight, they are not susceptible to these problems. This has the potential to help me reclaim some of the time that I spend without a device before bedtime for health reasons.

The limitations of E-Ink panels are well known to anybody who has used one. The refresh rate is not nearly as good, the color saturation ranges from abysmal to non-existent, and the available sizes are much more limited (smaller) than those of LCD panels. Despite these limitations, the panels do have advantages. They do not give the user raster burn the way backlit panels do. They are cheap, standardized, and easy to replace. They are also usable in direct sunlight. Until recently they offered competitive DPI compared to laptop panels as well.

As a computer professional, many of these downsides of LCD panels concern me. I spend a large part of my work day staring at displays. I fear this will have a lasting effect on me and on many others who do the same.

The E-Ink manufacturer offerings are surprisingly sparse, with no devices that I can find targeted towards consumers or hobbyists. Traditional LCDs are available over a USB interface, able to be used as external displays on any embedded or workstation system. Interfaces for E-Ink displays are decidedly less advanced. The panels that Amazon sources use an undocumented DTO protocol/connector. The panels that everybody else seems to use also have a specific protocol/connector, but some controllers are available.

The one panel I’ve been able to source to try to integrate into a laptop-like object is PervasiveDisplays’ 9.7" panel with an SPI controller. This would allow a computer to speak SPI to the controller board, which would then translate the calls into operations to manage drawing to the panel. Although this is useful, availability is limited to a few component wholesale sites and Digikey. It’s also not exactly cheap: although the SPI controller board is only $28, the set of controller and 9.7" panel is $310. Similar replacement Kindle DX panels cost around $85 elsewhere on the internet.
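As a rough illustration of what "speaking SPI" to a controller board could involve on the host side, here is a minimal sketch that packs a 1-bit-per-pixel frame into the kind of byte payload a host might push over a serial link. The function name `pack_rows`, the MSB-first bit order, and the tiny 16x2 test frame are all assumptions made for illustration; the actual command set and pixel format of the PervasiveDisplays controller are not described in this post.

```python
def pack_rows(frame):
    """Pack rows of 0/1 pixels into bytes, most significant bit first.

    The MSB-first order is an assumption for this sketch, not a
    documented property of any particular E-Ink controller.
    """
    packed = bytearray()
    for row in frame:
        if len(row) % 8 != 0:
            raise ValueError("row width must be a multiple of 8")
        # Walk the row eight pixels at a time and fold them into one byte.
        for start in range(0, len(row), 8):
            byte = 0
            for bit in row[start:start + 8]:
                byte = (byte << 1) | (1 if bit else 0)
            packed.append(byte)
    return bytes(packed)


# Hypothetical 16x2 test frame: 1 = black pixel, 0 = white pixel.
frame = [
    [1, 0, 0, 0, 0, 0, 0, 1] + [0] * 8,
    [1] * 8 + [0, 0, 0, 0, 1, 1, 1, 1],
]
payload = pack_rows(frame)
```

On a real system the resulting `payload` would be handed to whatever SPI interface the host exposes, wrapped in the commands the controller expects.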

It would be cheaper to buy an entire Kindle DX, scrap the computer and salvage the panel than to buy the PervasiveDisplays evaluation kit on Digikey. To be fair this is comparing a used consumer device to a niche evaluation kit, so of course the former device is going to be cheaper.

To PervasiveDisplays’ credit, they’re also trying to be active in the Open Hardware community. They’ve launched RePaper.org, a site that advocates moving ePaper technology out of the hands of a few companies and into the hands of open hardware enthusiasts and low-run product manufacturers.

From their site:

We recognize ePaper is a new technology and we’re asking your help in making it better known. Up till now, all industry players have kept the core technologies closed. We want to change this. If the history of the Internet has proven anything, it is that open technologies lead to unbounded innovation and unprecedented value added to the entire economy.

There are some panels listed on SparkFun and Adafruit, although those are limited to 1.44-inch to 2.0-inch displays, which are useless for my use case. Likewise, these are geared towards Arduino compatibility, while I need something that is performant over a (relatively) fast, high-bandwidth interface like the ones on my laptop mainboard.

Bunnie/Xobs of the Kosagi Novena open laptop project clued me in to the fact that the iMX6 SoC present in the aforementioned device contains an EPD (Electronic Paper Display) controller. Although the pins on the chip likely aren’t broken out to the board, it gives me hope. My hope is that in the future devices such as the Raspberry Pi, CubieBoard, or other single-board computers will break out the controller to a header on the main board.

I think that by making this literal stockpile of panels available to open hardware enthusiasts, we can empower them to create anything from innovations in the eBook reader market to an entirely new class of device.

http://bke.ro/attempts-source-large-e-ink-screens-for-a-laptop-like-device/

|

|