[From the sandbox] Simple web scraping in R via the hh.ru API


Greetings, dear readers.

Not long ago my instructor gave us an assignment: download data from a website of our choice. I don't know why, but the first site that came to mind was hh.ru.

The next question was: "So what exactly are we going to download?" After all, the site hosts roughly 5 million résumés and 100,000 vacancies.

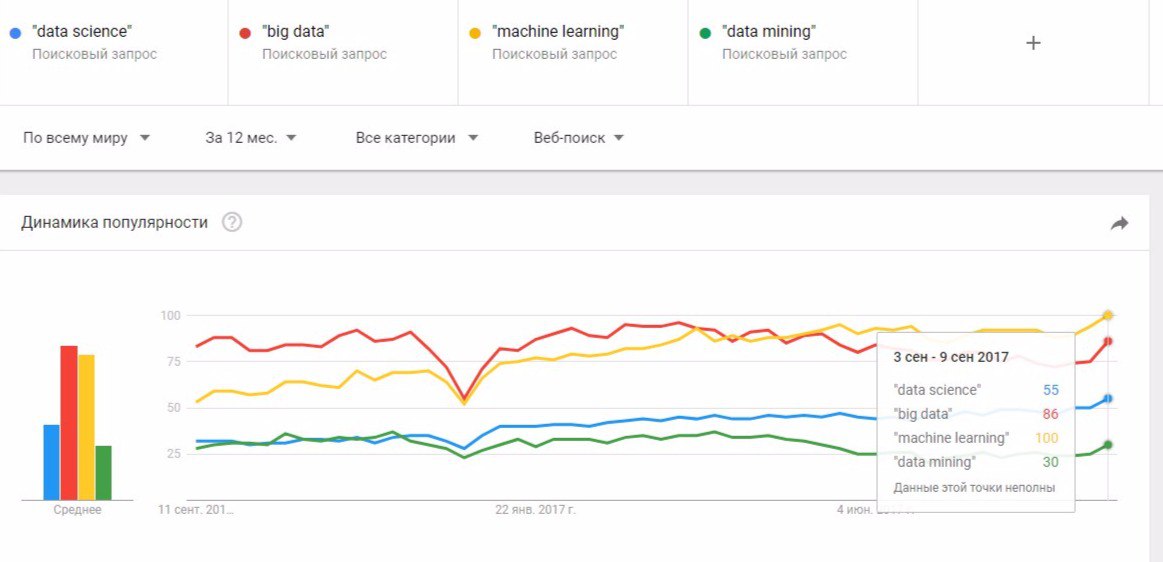

Wanting to see which skills I will have to master in the future, I typed "data science" into the search box and then stopped to think. Maybe a synonymous query would find more vacancies and résumés? I needed to find out which wordings are popular right now, and Google Trends is a convenient service for that.

The comparison shows that "machine learning" is the most productive query to search for. And, as it turned out, that really is the case.

Let's collect the vacancies first. There are not many of them, only 411. The hh.ru API supports vacancy search, so the task becomes trivial. The only catch is that we have to work with JSON. For that I used the jsonlite package and its fromJSON() function, which takes a URL and returns the parsed data structure.
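To make the shape of that parsed structure concrete, here is a minimal offline sketch. The JSON string is a made-up, heavily trimmed stand-in for an API response (its field names simply mirror the ones used in this article's code), and nothing is fetched from hh.ru.

```r
library(jsonlite)

# Hypothetical sample: a real response contains many more fields.
sample_json <- '{
  "items": [
    {"area": {"name": "Moscow"},
     "salary": {"from": 100000, "currency": "RUR"},
     "employer": {"name": "Company A"}},
    {"area": {"name": "Kyiv"},
     "salary": null,
     "employer": {"name": "Company B"}}
  ]
}'

# fromJSON() accepts a JSON string as well as a URL.
parsed <- fromJSON(sample_json)

# Nested objects become nested data frames, so fields are reached with `$`.
cities  <- parsed$items$area$name
min_pay <- parsed$items$salary$from  # NA where the salary object is null

print(data.frame(City = cities, From = min_pay))
```

A vacancy whose salary is null shows up as NA, which is why the cleaning step further down has to deal with missing salaries.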

library(jsonlite)

data <- fromJSON(paste0("https://api.hh.ru/vacancies?text=\"machine+learning\"&page=", pageNum)) # pageNum is the page number; each page shows 20 vacancies

# Scrape vacancies

vacanciesdf <- data.frame(
  Name = character(),        # Company name
  Currency = character(),    # Currency
  From = character(),        # Minimum pay
  Area = character(),        # City
  Requirement = character(), # Required skills
  stringsAsFactors = TRUE)

for (pageNum in 0:20) { # Pages 0 to 20: 411 vacancies at 20 per page
  data <- fromJSON(paste0("https://api.hh.ru/vacancies?text=\"machine+learning\"&page=", pageNum))
  vacanciesdf <- rbind(vacanciesdf, data.frame(
    data$items$area$name,            # City
    data$items$salary$currency,      # Currency
    data$items$salary$from,          # Minimum pay
    data$items$employer$name,        # Company name
    data$items$snippet$requirement)) # Required skills
  print(paste0("Pages downloaded: ", pageNum + 1))
  Sys.sleep(3)
}

With all the data in a data frame, let's clean it up a little. We will convert all salaries to rubles, get rid of the Currency column, and replace the NA values in Salary with zeros.

# Give the columns sensible names
names(vacanciesdf) <- c("Area", "Currency", "Salary", "Name", "Skills")

# Replace NA salaries with zero
vacanciesdf$Salary[is.na(vacanciesdf$Salary)] <- 0

# Convert salaries to rubles
isUSD <- !is.na(vacanciesdf$Currency) & vacanciesdf$Currency == 'USD'
isUAH <- !is.na(vacanciesdf$Currency) & vacanciesdf$Currency == 'UAH'
vacanciesdf$Salary[isUSD] <- vacanciesdf$Salary[isUSD] * 57
vacanciesdf$Salary[isUAH] <- vacanciesdf$Salary[isUAH] * 2.2

vacanciesdf <- vacanciesdf[, -2] # Currency is no longer needed
vacanciesdf$Area <- as.character(vacanciesdf$Area)

After this, the data frame has four columns: Area, Salary, Name and Skills.
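As a small illustration of the same cleaning steps, the sketch below runs them on a made-up data frame. The rows are invented, not taken from hh.ru, and the named `rates` vector is my own shorthand for the two fixed rates hard-coded above (57 for USD, 2.2 for UAH).

```r
# Made-up rows for illustration only; not data from hh.ru.
toy <- data.frame(
  Area     = c("Moscow", "Kyiv", "Minsk", "Moscow"),
  Currency = c("RUR", "UAH", "USD", NA),
  Salary   = c(120000, 30000, 2000, NA),
  stringsAsFactors = FALSE
)

# Fixed conversion rates copied from the article's code.
rates <- c(RUR = 1, USD = 57, UAH = 2.2)

# Missing salaries become 0, as in the article.
toy$Salary[is.na(toy$Salary)] <- 0

# Look up the rate by currency code; rows without a currency keep a rate of 1.
rate <- unname(rates[toy$Currency])
rate[is.na(rate)] <- 1

toy$Salary <- toy$Salary * rate
toy$Currency <- NULL  # no longer needed once everything is in rubles

print(toy)
```

A lookup vector keeps the conversion in one place, so adding another currency would mean adding one entry rather than another pair of subsetting lines.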

While we are at it, let's look at how many vacancies each city has and what the average salary is there.

library(dplyr)

vacanciesdf %>% group_by(Area) %>% filter(Salary != 0) %>%
  summarise(Count = n(), Median = median(Salary), Mean = mean(Salary)) %>%
  arrange(desc(Count))

For scraping in R, the rvest package is the usual choice. It has two key functions, read_html() and html_nodes(). The first downloads pages from the internet, and the second addresses page elements using XPath or CSS selectors. The API does not support searching résumés, but it does return the information for a résumé given its id. So we will scrape all the ids from the search pages and then fetch the résumé data through the API. In total the site has 1049 résumés for this query.
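Before pointing these functions at the live site, it can help to try them on a literal HTML string. The sketch below is offline and rests on assumptions: the markup and the two 38-character ids are invented, and instead of converting the nodes to strings it reads the href attribute with html_attr(), which is a slightly different technique from the one used in the article's code.

```r
library(rvest)

# Hypothetical page fragment; the real search page markup may differ.
page <- read_html('<html><body>
  <a href="/search/resume?page=1">next</a>
  <a href="/resume/0123456789abcdef0123456789abcdef012345?query=ml">CV 1</a>
  <a href="/resume/abcdef0123456789abcdef0123456789abcdef">CV 2</a>
</body></html>')

# html_nodes() selects elements by CSS selector; html_attr() reads one attribute.
links <- html_nodes(page, css = "a")
hrefs <- html_attr(links, "href")

# Keep only resume links and capture the 38 characters after "/resume/".
resume_hrefs <- grep("^/resume/", hrefs, value = TRUE)
ids <- sub("^/resume/(.{38}).*$", "\\1", resume_hrefs)

print(ids)
```

Anchoring the pattern at the start of the href filters out unrelated links, such as the pagination link in this sample.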

library(rvest)
library(stringr)

hhResumeSearchURL <- 'https://hh.ru/search/resume?exp_period=all_time&order_by=relevance&text=machine+learning&pos=full_text&logic=phrase&clusters=true&page='

# Load the page with number pageNum
hDoc <- read_html(paste0(hhResumeSearchURL, as.character(pageNum)))

# Select all links on the page as HTML strings
ids <- html_nodes(hDoc, css = 'a') %>% as.character()

# Extract the ids we need from the links (38-character sequences of letters and digits)
ids <- as.vector(ids) %>% `[`(str_detect(ids, fixed('/resume/'))) %>%
  str_extract(pattern = '/resume/.{38}') %>% str_sub(str_length('/resume/') + 1)

ids <- ids[4:length(ids)] # The first 3 are junk

After that, the already familiar fromJSON() retrieves the information contained in a résumé.

resumes <- fromJSON(paste0("https://api.hh.ru/resumes/", id))

# Full script: collect the ids page by page, then fetch each resume
hhResumeSearchURL <- 'https://hh.ru/search/resume?exp_period=all_time&order_by=relevance&text=machine+learning&pos=full_text&logic=phrase&clusters=true&page='

for (pageNum in 0:51) { # Page indices 0 through 51
  # Pull out the resume ids: select all links on the page
  hDoc <- read_html(paste0(hhResumeSearchURL, as.character(pageNum)))
  ids <- html_nodes(hDoc, css = 'a') %>% as.character()
  # Extract the 38-character ids from the resume links
  ids <- as.vector(ids) %>% `[`(str_detect(ids, fixed('/resume/'))) %>%
    str_extract(pattern = '/resume/.{38}') %>% str_sub(str_length('/resume/') + 1)
  ids <- ids[4:length(ids)] # The first 3 are junk
  Sys.sleep(1) # Wait a little, just in case

  for (id in ids) {
    resumes <- fromJSON(paste0("https://api.hh.ru/resumes/", id))
    skills <- if (is.null(resumes$skill_set)) "" else resumes$skill_set
    buffer <- data.frame(
      Age = if (is.null(resumes$age)) 0 else resumes$age, # Age
      if (is.null(resumes$area$name)) "NoCity" else resumes$area$name, # City
      if (is.null(resumes$gender$id)) "NoGender" else resumes$gender$id, # Gender
      if (is.null(resumes$salary$amount)) 0 else resumes$salary$amount, # Salary
      if (is.null(resumes$salary$currency)) "NA" else resumes$salary$currency, # Currency
      # Skill list as a single comma-separated string
      str_c(if (!length(skills)) "" else skills, collapse = ","))
    write.table(buffer, 'resumes.csv', append = TRUE, fileEncoding = "UTF-8", col.names = FALSE)
    Sys.sleep(1) # Wait a little, just in case
  }
  print(paste("Pages downloaded:", pageNum + 1))
}

We clean the resulting data frame in the same way, converting the currencies to rubles and removing NA values from the columns. The snippets below use this cleaned data frame under the name resumes, with the columns Age, Gender, Salary and Skills.
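The repeated `if (is.null(...)) default else value` checks in the loop above could be factored into a small helper. The sketch below assumes a hypothetical function `or_default()` (not part of the article's code or of any package used here) and a made-up parsed résumé with some fields missing.

```r
# Hypothetical helper: return `default` when a field is missing (NULL),
# otherwise return the field itself.
or_default <- function(x, default) if (is.null(x)) default else x

# Made-up parsed resume with the gender and salary fields absent.
resume <- list(age = 27, area = list(name = "Moscow"), salary = NULL)

record <- data.frame(
  Age      = or_default(resume$age, 0),
  City     = or_default(resume$area$name, "NoCity"),
  Gender   = or_default(resume$gender$id, "NoGender"),
  Salary   = or_default(resume$salary$amount, 0),
  Currency = or_default(resume$salary$currency, "NA"),
  stringsAsFactors = FALSE
)

print(record)
```

Indexing into a missing field with `$` yields NULL rather than an error, which is what lets the helper be applied directly to nested fields such as `resume$salary$amount`.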

Let's find the top 15 skills that appear in résumés more often than any others.

SkillNameDF <- data.frame(SkillName = str_split(str_c(
  resumes$Skills, collapse = ','), ','), stringsAsFactors = F)
names(SkillNameDF) <- 'SkillName'

mostSkills <- head(SkillNameDF %>% group_by(SkillName) %>%
  summarise(Count = n()) %>% arrange(desc(Count)), 15)
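The same split-and-count idea can be sketched in base R on a few made-up skill strings. The input vector below is invented for illustration, and strsplit() with table() is swapped in for the stringr and dplyr calls used above.

```r
# Made-up skill strings, one per resume, comma-separated as in the Skills column.
skills_col <- c("Python,SQL,R", "Python,R", "Python,Machine Learning,R", "SQL,Python")

# Split every string on commas and flatten into one vector of skill names.
all_skills <- unlist(strsplit(skills_col, ",", fixed = TRUE))

# Count occurrences and keep the most frequent ones.
skill_counts <- sort(table(all_skills), decreasing = TRUE)
top_skills <- head(skill_counts, 3)

print(top_skills)
```

On real data, résumés without any listed skills contribute empty strings, so it may be worth dropping empty names before counting.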

Let's see how many women and men know machine learning, and what salaries they expect.

resumes %>% group_by(Gender) %>% filter(Salary != 0) %>%
  summarise(Count = n(), Median = median(Salary), Mean = mean(Salary))

And finally, the top 10 most common ages among machine learning specialists.

resumes %>% filter(Age != 0) %>% group_by(Age) %>%
  summarise(Count = n()) %>% arrange(desc(Count)) %>% head(10)
