
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Patrick Cloke: Matrix Live Interview

I was interviewed for Matrix Live as part of last week’s This Week in Matrix. I talked a bit about my background and my experiences contributing to Mozilla (as part of Instantbird and Thunderbird projects) as well as what I will be working on for Synapse, the reference implementation …

https://patrick.cloke.us/posts/2020/03/12/matrix-live-interview/

Mozilla Addons Blog: Friend of Add-ons: Zhengping

Please meet our newest Friend of Add-ons, Zhengping! A little more than two years ago, Zhengping decided to switch careers and become a software developer. After teaching himself the basics of web development, he started looking for real-world projects where he could hone his skills. After fixing a few frontend bugs on addons.mozilla.org (AMO), Zhengping began contributing code to the add-ons code manager, a new tool to help keep add-on users safe.

In the following months, he tackled increasingly harder issues, like using TypeScript with React to create complex UI with precision and efficiency. His contributions helped the add-ons team complete the first iteration of the code manager, and he continued to provide important patches based on feedback from add-on reviewers.

“The comments from staff members in code review helped me deepen my understanding of what is good code,” Zhengping notes. “People on the add-ons team, staff and contributors, are very friendly and willing to help,” he says. “It is a wonderful experience to work with them.”

When he isn’t coding, Zhengping enjoys skiing.

Thank you so much for all of your wonderful contributions to the Firefox add-ons community, Zhengping!

If you are interested in getting involved with the add-ons community, please take a look at our current contribution opportunities.

The post Friend of Add-ons: Zhengping appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2020/03/12/friend-of-add-ons-zhengping/

Botond Ballo: Trip Report: C++ Standards Meeting in Prague, February 2020

Summary / TL;DR

| Project | What’s in it? | Status |

| C++20 | See Reddit report | Technically complete |

| Library Fundamentals TS v3 | Library utilities incubating for standardization | Under development |

| Concepts | Constrained templates | Shipping as part of C++20 |

| Parallelism TS v2 | Task blocks, library vector types and algorithms, and more | Published! |

| Executors | Abstraction for where/how code runs in a concurrent context | Targeting C++23 |

| Concurrency TS v2 | Concurrency-related infrastructure (e.g. fibers) and data structures | Under active development |

| Networking TS | Sockets library based on Boost.ASIO | Published! Not in C++20. |

| Ranges | Range-based algorithms and views | Shipping as part of C++20 |

| Coroutines | Resumable functions (generators, tasks, etc.) | Shipping as part of C++20 |

| Modules | A component system to supersede the textual header file inclusion model | Shipping as part of C++20 |

| Numbers TS | Various numerical facilities | Under active development |

| C++ Ecosystem TR | Guidance for build systems and other tools for dealing with Modules | Under active development |

| Contracts | Preconditions, postconditions, and assertions | Under active development |

| Pattern matching | A match-like facility for C++ | Under active development |

| Reflection TS | Static code reflection mechanisms | Publication imminent |

| Reflection v2 | A value-based constexpr formulation of the Reflection TS facilities, along with more advanced features such as code injection | Under active development |

A few links in this blog post may not resolve until the committee’s post-meeting mailing is published (expected any day). If you encounter such a link, please check back in a few days.

Introduction

A few weeks ago I attended a meeting of the ISO C++ Standards Committee (also known as WG21) in Prague, Czech Republic. This was the first committee meeting in 2020; you can find my reports on 2019’s meetings here (November 2019, Belfast), here (July 2019, Cologne), and here (February 2019, Kona), and previous ones linked from those. These reports, particularly the Belfast one, provide useful context for this post.

This meeting once again broke attendance records, with about 250 people present. It also broke the record for the number of national standards bodies physically represented at a meeting, with reps from Austria and Israel joining us for the first time.

The Prague meeting wrapped up the C++20 standardization cycle as far as technical work is concerned. The highest-priority work item for all relevant subgroups was to continue addressing any remaining comments on the C++20 Committee Draft, a feature-complete C++20 draft that was circulated for feedback in July 2019 and received several hundred comments from national standards bodies (“NB comments”). Many comments had been addressed already at the previous meeting in Belfast, and the committee dealt with the remaining ones at this meeting.

The next step procedurally is for the committee to put out a revised draft called the Draft International Standard (DIS) which includes the resolutions of any NB comments. This draft, which was approved at the end of the meeting, is a technically complete draft of C++20. It will undergo a further ballot by the national bodies, which is widely expected to pass, and the official standard revision will be published by the end of the year. That will make C++20 the third standard revision to ship on time as per the committee’s 3-year release schedule.

I’m happy to report that once again, no major features were pulled from C++20 as part of the comment resolution process, so C++20 will go ahead and ship with all the major features (including modules, concepts, coroutines, and library goodies like ranges, date handling and text formatting) that were present in the Committee Draft. Thanks to this complement of important and long-anticipated features, C++20 is widely viewed by the community as the language’s most significant release since C++11.

Subgroups which had completed processing of NB comments for the week (which was most study groups and the Evolution groups for most of the week) proceeded to process post-C++20 proposals, of which there are plenty in front of the committee.

As with my blog post about the previous meeting, this one will also focus on proceedings in the Evolution Working Group Incubator (EWG-I) which I co-chaired at this meeting (shout-out to my co-chair Erich Keane who was super helpful and helped keep things running smoothly), as well as drawing attention to a few highlights from the Evolution Working Group and the Reflection Study Group. For a more comprehensive list of what features are in C++20, what NB comment resolutions resulted in notable changes to C++20 at this meeting, and which papers each subgroup looked at, I will refer you to the excellent collaborative Reddit trip report that fellow committee members have prepared.

As a reminder, for the past few meetings the committee has been tracking its proposals in GitHub. For convenience, I will also be linking to proposals’ GitHub issues (rather than the papers directly) from this post. I hope as readers you find this useful, as the issues contain useful information about a proposal’s current status; the actual papers are just one further click away. (And shout-out to @m_ou_se for maintaining wg21.link which makes it really easy for me to do this.)

Evolution Working Group Incubator (EWG-I)

EWG-I is a relatively new subgroup whose purpose is to give feedback on and polish proposals that include core language changes — particularly ones that are not in the purview of any of the domain-specific subgroups, such as SG2 (Modules), SG7 (Reflection), etc. — before they proceed to the Evolution Working Group (EWG) for design review.

EWG-I met for three days at this meeting, and reviewed around 22 proposals (all post-C++20 material).

In this section, I’ll go through the proposals that were reviewed, categorized by the review’s outcome.

Forwarded to EWG

The following proposals were considered ready to progress to EWG in their current state:

- A type trait to detect narrowing conversions. This is mainly a library proposal, but core language review was requested to make sure the specification doesn’t paint us into a corner in terms of future changes we might make to the definition of narrowing conversion.

- Guaranteed copy elision for named return objects. This codifies a set of scenarios where all implementations were already eliding a copy, thereby making such code well-formed even for types that are not copyable or movable.

- Freestanding language: optional ::operator new. This is one piece of a larger effort to make some language and library facilities optional in environments that may not be able to support them (e.g. embedded environments or kernel drivers). The paper was favourably reviewed by both EWG-I and, later in the week, EWG itself.
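To make the first item above more concrete, here is a minimal sketch of how narrowing can be detected in today's C++ by relying on the fact that list-initialization rejects narrowing conversions. The trait name is_non_narrowing is hypothetical and chosen only for this illustration; the proposal's actual name and specification may differ, and this sketch does not try to handle every corner case.

```cpp
#include <type_traits>
#include <utility>

// Hypothetical trait name, used only for illustration.
// Primary template: the conversion narrows, or is not possible at all.
template <class From, class To, class = void>
struct is_non_narrowing : std::false_type {};

// List-initialization (To{from}) is ill-formed for narrowing conversions,
// so this specialization is only selected when no narrowing takes place.
template <class From, class To>
struct is_non_narrowing<From, To,
                        std::void_t<decltype(To{std::declval<From>()})>>
    : std::true_type {};

static_assert(is_non_narrowing<int, long long>::value, "int fits in long long");
static_assert(is_non_narrowing<float, double>::value, "float fits in double");
static_assert(!is_non_narrowing<double, float>::value, "double to float narrows");
static_assert(!is_non_narrowing<double, int>::value, "double to int narrows");
```

Because the result depends directly on the language's definition of narrowing, a trait like this changes meaning whenever that definition changes, which is presumably why core language review was requested.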

Forwarded to EWG with modifications

For the following proposals, EWG-I suggested specific revisions, or adding discussion of certain topics, but felt that an additional round of EWG-I review would not be helpful, and the revised paper should go directly to EWG. The revisions requested were typically minor, sometimes as small as adding a feature test macro:

- Language support for class layout control. This introduces a mechanism to control the order in which class data members are laid out in memory. This was previously reviewed by the Reflection Study Group which recommended allowing the order to be specified via a library function implemented using reflection facilities. However, as such reflection facilities are still a number of years away, EWG-I felt there was room for a small number of ordering strategies specified in core wording, and forwarded the paper to EWG with one initial strategy, to yield the smallest structure size.

- Object relocation in terms of move and destroy. This aims to address a long-standing performance problem in the language caused by the fact that a move must leave an object in a valid state, and a moved-from object still needs to be destroyed. There is another proposal in this space but EWG-I felt they are different enough that they should advance independently.

- Generalized pack declaration and usage. This proposal significantly enhances the language’s ability to work with variadic parameter packs and tuple-like types. It was reviewed previously by EWG-I, and in this update was reworked to address the feedback from that review. The group felt the proposal was thorough and mature and largely ready to progress to EWG, although there was one outstanding issue of ambiguity that remained to be resolved.

- Types with array-like object representations. This provides a mechanism for enforcing that a structure containing several fields of the same type is laid out in memory exactly the same as a corresponding array type, and the two types can be freely punned.

- C++ identifier syntax using Unicode standard Annex 31. While identifiers in C++ source code can now contain Unicode characters, we do want to maintain some sanity, and so this proposal restricts the set of characters that can appear in identifiers to certain categories (excluding, for example, “invisible” characters).

- Member templates for local classes. Since C++14 introduced generic lambdas (which are syntactic sugar for objects of a local class type defined on the fly, with a templated member call operator), the restriction against explicitly-defined local classes having member templates has been an artificial one, and this proposal lifts it.

- Enable variable template template parameters. Another fairly gratuitous restriction; EWG-I forwarded it, with a suggestion to add additional motivating examples to the paper.
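As background for the class layout control item above, the following sketch shows why member order matters for structure size in today's C++. The struct names are made up for this example, and the exact sizes depend on the platform's ABI, so the only thing checked is that the hand-reordered version is no larger.

```cpp
#include <cstddef>

// Members in an order that forces padding on typical ABIs:
// padding is inserted after `tag` and again after `flag`.
struct AsDeclared {
    char   tag;
    double value;
    char   flag;
};

// The same members, reordered by hand from largest to smallest alignment.
// A "smallest size" layout strategy would aim to do this automatically.
struct Reordered {
    double value;
    char   tag;
    char   flag;
};

// How many bytes the manual reordering saves on the current platform.
constexpr std::size_t bytes_saved() {
    return sizeof(AsDeclared) - sizeof(Reordered);
}

static_assert(sizeof(Reordered) <= sizeof(AsDeclared),
              "reordering by alignment should never grow the struct");
```

On a common 64-bit ABI one would expect the first struct to be larger than the second, but the standard does not guarantee specific sizes.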

Forwarded to another subgroup

The following proposals were forwarded to a domain-specific subgroup:

- In-source mechanism to identify importable headers. Headers which are sufficiently modular can be imported into a module as if they were modules themselves (this feature is called header units, and is a mechanism for incrementally transitioning large codebases to modules). Such headers are currently identified using some out-of-band mechanism (such as build system metadata). This proposal aims to allow annotating the headers as such in their source itself. EWG-I liked the idea but felt it was in the purview of the Tooling Study Group (SG15).

- Stackable, thread local, signal guards. This aims to bring safer and more modern signal handling facilities to C++. EWG-I reviewed the proposal favourably, and sent it onward to the Library Evolution Incubator and the Concurrency Study Group (the latter saw the proposal later in the week and provided additional technical feedback related to concurrency).

Feedback given

For the following proposals, EWG-I gave the author feedback, but did not consider it ready to forward to another subgroup. A revised proposal would come back to EWG-I.

- move = bitcopies. This is the other paper in the object relocation space, aiming for a more limited solution which can hopefully gain consensus sooner. The paper was reviewed favourably and will return after revisions.

- Just-in-time compilation. Many attendees indicated this is something they’d find useful in their application domains, and several aspects of the design were discussed. The paper was also seen by the Reflection Study Group earlier in the week.

- Universal template parameters. This allows parameterizing a template over the kind of its template parameters (or, put another way, having template parameters of “wildcard” kind which can match non-type, type, or template template arguments). EWG-I felt the idea was useful but some of the details need to be refined. The proposed syntax is typename auto Param.

- A pipeline-rewrite operator. This proposes to automatically rewrite a |> f(b) as f(a, b), thereby allowing a sequence of compositions of operations to be expressed in a more “linear” way in code (e.g. x |> f(y) |> g(z) instead of g(f(x, y), z)). It partly brings to mind previous attempts at a unified function call syntax, but avoids many of the issues with that by using a new syntax rather than trying to make the existing member-call (dot) syntax work this way. Like “spaceship” (<=>), this new operator ought to have a fun name, so it’s dubbed the “pizza” operator (too bad calling it the “slice” operator would be misleading).

- Partially mutable lambda captures. This proposal seeks to provide finer-grained control over which of a lambda’s captured data members are mutable (currently, they’re all const by default, or you can make them all mutable by adding a trailing mutable to the lambda declarator). EWG-I suggested expanding the paper’s approach to allow either mutable or const on any individual capture (the latter useful if combined with a trailing mutable), as well as to explore other integrations such as a mutable capture-default.
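To illustrate the pipeline-rewrite item above, here is a small sketch of the nested call form that exists today, with the proposed spelling shown only in a comment (it is not valid C++ at the time of writing). The functions add and scale are hypothetical helpers invented for this example.

```cpp
// Hypothetical helpers, used only to illustrate the rewrite.
constexpr int add(int a, int b) { return a + b; }
constexpr int scale(int a, int factor) { return a * factor; }

// Today: the data flows "inside out", so the first step is the innermost call.
constexpr int nested(int x) {
    return scale(add(x, 2), 10);
}

// Under the proposal, the same computation could be written left to right as
//     x |> add(2) |> scale(10)
// which would be rewritten to scale(add(x, 2), 10), i.e. the body of nested().

static_assert(nested(1) == 30, "(1 + 2) * 10");
```

The point of the rewrite is purely notational: both spellings would call the same functions with the same arguments.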

No consensus in current form

The following proposals had no consensus to continue to progress in their current form. However, a future revision may still be seen by EWG-I if additional motivation is provided or new information comes to light. In some cases, such as with Epochs, there was a strong desire to solve the problems the proposal aims to solve, and proposals taking new approaches to tackling these problems would certainly be welcome.

- Narrowing and widening conversions. This proposal aims to extend the notion of narrowing vs. widening conversions to user-defined conversions, and tweak the overload resolution rules to avoid ambiguity in more cases by preferring widening conversions to narrowing ones (and among widening conversions, prefer the “least widening” one). EWG-I felt that a change as scary as touching the overload resolution rules needed more motivation.

- Improve rules of standard layout. There wasn’t really encouragement of any specific direction, but there was a recognition that “standard layout” serves multiple purposes some of which (e.g. which types are usable in offsetof) could potentially be split out.

- Epochs: a backward-compatible language evolution mechanism. As at the last meeting, this proposal, inspired heavily by Rust’s editions, attracted the largest crowds and garnered quite a lot of discussion. Overall, the room felt that technical concerns about the handling of templates and the complexity of having to define how features interact across different epochs made the proposal as-is not viable. However, as mentioned, there was strong interest in solving the underlying problems, so I wouldn’t be terribly surprised to see a different formulation of a feature along these lines come back at some point.

- Namespace templates. EWG-I felt the motivation was not sufficiently compelling to justify the technical complexity this proposal would entail.

- Using ? : to reduce the scope of constexpr if. This proposes to allow ? : in type expressions, as in e.g. using X = cond ? Foo : Bar;. EWG-I didn’t really find the motivation compelling enough to encourage further work on the proposal.
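For comparison with the last item above, this is how a compile-time choice between two types is usually written today, using std::conditional_t. The Counter template is a made-up example; the proposed ? : spelling appears only in a comment, since it is not valid C++.

```cpp
#include <cstdint>
#include <type_traits>

// Hypothetical example type: picks its storage width at compile time.
template <bool Wide>
struct Counter {
    // Proposed spelling (not valid C++ today):
    //     using value_type = Wide ? std::uint64_t : std::uint32_t;
    using value_type = std::conditional_t<Wide, std::uint64_t, std::uint32_t>;

    value_type value = 0;
};

static_assert(std::is_same_v<Counter<true>::value_type, std::uint64_t>);
static_assert(std::is_same_v<Counter<false>::value_type, std::uint32_t>);
```

Since the library facility already covers the simple case, it is perhaps unsurprising that the group wanted stronger motivation for new syntax.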

Thoughts on the role of EWG-I

I wrote in my previous post about EWG-I being a fairly permissive group that lets a lot of proposals sail through it. I feel like at this meeting the group was a more effective gatekeeper. However, we did have low attendance at times, which impacted the quantity and quality of feedback that some proposals received. If you’re interested in core language evolution and attend meetings, consider sitting in EWG-I while it’s running — it’s a chance to provide input to proposals at an earlier stage than most other groups!

Other Highlights

Here are some highlights of what happened in some of the other subgroups, with a focus on Evolution and Reflection (the rooms I sat in when I wasn’t in EWG-I):

Planning and Organization

As we complete C++20 and look ahead to C++23, the committee has been taking the opportunity to refine its processes, and tackle the next standards cycle with a greater level of planning and organization than ever before. A few papers touched on these topics:

- To boldly suggest an overall plan for C++23. This is a proposal for what major topics the committee should focus on for C++23. A previous version of this paper contained a similar plan for C++20, but one thing that’s new is that the latest version also contains guidance for subgroups for how to prioritize proposals procedurally to achieve the policy objectives laid out in the paper.

- C++ IS Schedule. This formalizes the committee’s 3-year release schedule in paper form, including what milestones we aim for at various parts of the cycle (e.g. a deadline to merge TS’es, a deadline to release a Committee Draft, things like that).

- Direction for ISO C++. Authored by the committee’s Direction Group, this sets out high-level goals and direction for the language, looking forward not just to the next standard release but the language’s longer-term evolution.

- Process proposal: double-check Evolutionary material via a Tentatively Ready status. This is a procedural tweak where proposals approved by Evolution do not proceed to Core for wording review immediately, but rather after a delay of one meeting. The intention is to give committee members who have an interest in the proposal’s topic, but who were perhaps unable to attend its discussions in Evolution (or were unaware of the proposal altogether), a chance to raise objections or chime in with other design-level feedback before the proposal graduates to Core. Keep in mind that with committee meetings having a growing number of parallel tracks (nine at this meeting), it’s hard to stay on top of everything.

ABI Stability

In one of the week’s most notable (and talked-about) sessions, Evolution and Library Evolution met jointly to discuss a paper about C++’s approach to ABI stability going forward.

The main issue that has precipitated this discussion is the fact that the Library Evolution group has had to reject multiple proposals for improvements to existing library facilities over the past several years, because they would be ABI-breaking, and implementers have been very reluctant to implement ABI-breaking changes (and when they did, like with std::string in C++11, the C++ ecosystem’s experience with the break hasn’t been great). The paper has a list of such rejected improvements, but one example is not being able to change unordered_map to take advantage of more efficient hashing algorithms.

The paper argues that rejections of such library improvements demonstrate that the C++ community faces a tradeoff between ABI stability and performance: if we continue to enforce a requirement that C++ standard library facilities remain ABI-compatible with their older versions, as time goes by the performance of these facilities will lag more and more behind the state of the art.

There was a lengthy discussion of this issue, with some polls at the end, which were far from unanimous and in some cases not very conclusive, but the main sentiments were:

- We would like to break the ABI “at some point”, but not “now” (not for C++23, which would be our earliest opportunity). It is unclear what the path is to getting to a place where we would be willing to break the ABI.

- We are more willing to undertake a partial ABI break than a complete one. (In this context, a partial break means some facilities may undergo ABI changes on an as-needed basis, but if you don’t use such facilities at ABI boundaries, you can continue to interlink translation units compiled with the old and new versions. The downside is, if you do use such facilities at ABI boundaries, the consequence is usually runtime misbehaviour. A complete break would mean all attempts to interlink TUs from different versions are prevented at link time.)

- Being ABI-breaking should not cause a library proposal to be automatically rejected. We should consider ABI-breaking changes on a case-by-case basis. Some implementers mentioned there may be opportunities to apply implementation tricks to avoid or mitigate the effects of some breaks.

There was also a suggestion that we could add new language facilities to make it easier to manage the evolution of library facilities — for example, to make it easier to work with two different versions of a class (possibly with different mangled names under the hood) in the same codebase. We may see some proposals along these lines being brought forward in the future.
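As a point of reference, the closest existing tool for keeping two versions of a class apart is the inline namespace, which gives each version a distinct mangled name. The sketch below shows that existing technique only; it is not one of the suggested new facilities, and the lib, v1, v2 and widget names are invented for this example.

```cpp
#include <type_traits>

namespace lib {

// The old version stays available under an explicit name.
namespace v1 {
struct widget { int id; };
}  // namespace v1

// The current version lives in an inline namespace, so plain lib::widget
// refers to it while its mangled name still contains "v2".
inline namespace v2 {
struct widget { int id; long long generation; };
}  // namespace v2

}  // namespace lib

// New code names the current version without mentioning v2.
constexpr lib::widget make_widget(int id) { return {id, 0}; }

// Old code can keep naming the previous version explicitly.
constexpr lib::v1::widget make_legacy_widget(int id) { return {id}; }

static_assert(std::is_same_v<lib::widget, lib::v2::widget>);
static_assert(!std::is_same_v<lib::widget, lib::v1::widget>);
```

This keeps mismatched versions from silently linking together, but it does not by itself make it convenient to convert between the two types, which is roughly the gap such new facilities would need to fill.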

Reflection and Metaprogramming

The Reflection Study Group (SG7) met for one day. The most contentious item on the agenda concerned exploration of a potential new metaprogramming model inspired by the Circle programming language, but I’ll first mention some of the other papers that were reviewed:

- Just-in-time compilation. This is not really a reflection proposal, but it needs a mechanism to create a descriptor for a template instantiation to JIT-compile at runtime, and there is potential to reuse reflection facilities there. SG7 was in favour of reusing reflection APIs for this rather than creating something new.

- Reflection-based lazy evaluation. This was a discussion paper that demonstrated how reflection facilities could be leveraged in a lazy evaluation feature. While this is not a proposal yet, SG7 did affirm that the group should not rule out the possibility of reflecting on expressions (which is not yet a part of any current reflection proposal), since that can enable interesting use cases like this one.

- Constraint refinement for special-cased functions. This paper aims to fix some issues with parameter constraints, a feature proposed as part of a recent reflection proposal, but the authors withdrew it because they’ve since found a better approach.

- Tweaks to the design of source code fragments. This is another enhancement to the reflection proposal mentioned above, related to source code injection. SG7 encouraged further work in this direction.

- Using ? : to reduce the scope of constexpr if. Like EWG-I, SG7 did not find this proposal to be sufficiently motivating.

- Function parameter constraints are fragile. This paper was mooted by the withdrawal of this one (and of parameter constraints more generally) and was not discussed.

Now onto the Circle discussion. Circle is a set of extensions to C++ that allow for arbitrary code to run at compile time by actually invoking (as opposed to interpreting or emulating) that code at compile time, including having the compiler call into arbitrary third-party libraries.

Circle has come up in the context of considering C++’s approach to metaprogramming going forward. For the past few years, the committee has been trying to make metaprogramming be more accessible by making it more like regular programming, hence the shift from template metaprogramming to constexpr-based metaprogramming, and the continuing increase of the set of language constructs allowed in constexpr code (“constexpr all the things”).
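The shift described above can be seen in a small, self-contained comparison: the same compile-time computation written first as template metaprogramming and then as a constexpr function. The names factorial_tmp and factorial are chosen for this illustration only.

```cpp
// Template metaprogramming: recursion through class template specializations.
template <unsigned N>
struct factorial_tmp {
    static constexpr unsigned long long value = N * factorial_tmp<N - 1>::value;
};

// Base case of the recursion.
template <>
struct factorial_tmp<0> {
    static constexpr unsigned long long value = 1;
};

// constexpr metaprogramming: an ordinary loop that works both at compile
// time and at run time.
constexpr unsigned long long factorial(unsigned n) {
    unsigned long long result = 1;
    for (unsigned i = 2; i <= n; ++i) {
        result *= i;
    }
    return result;
}

static_assert(factorial_tmp<10>::value == 3628800ULL, "10! via templates");
static_assert(factorial(10) == factorial_tmp<10>::value, "both forms agree");
```

The constexpr version reads like regular code and can also be called with run-time arguments, which is the accessibility gain the committee has been aiming for.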

However, this road has not been without bumps. A recent paper argues that constexpr programming is still quite a bit further from regular programming than we’d like, due to the variety of restrictions on constexpr code, and the gotchas / limitations of facilities like std::is_constant_evaluated and promotion of dynamically allocated storage to runtime. The paper argues that if C++ were to adopt Circle’s metaprogramming model, then compile-time code could look exactly the same as runtime code, thereby making it more accessible and facilitating more code reuse.

A response paper analyzes the Circle metaprogramming model and argues that it is not a good fit for C++.

SG7 had an extensive discussion of these two papers. The main concerns that were brought up were the security implications of allowing the compiler to execute arbitrary C++ code at compile time, and the fact that running (as opposed to interpreting) C++ code at compile time presents a challenge for cross-compilation scenarios (where e.g. sizeof(int) may be different on the host than the target; existing compile-time programming facilities interpret code as if it were running on the target, using the target’s sizes for types and such).

Ultimately, SG7 voted against allowing arbitrary C++ code to run at compile-time, and thus against a wholesale adoption of Circle’s metaprogramming model. It was observed that there may be some aspects of Circle’s model that would still be useful to adopt into C++, such as its model for handling state and side effects, and its syntactic convenience.

Evolution Highlights

Some proposals which went through EWG-I earlier in the week were also reviewed by EWG — in this case, all favourably:

- A type trait to detect narrowing conversions

- Freestanding language: optional ::operator new.

- Guaranteed copy elision for named return objects

Here are some other highlights from EWG this week:

- Pattern matching continues to be under active development and is a priority item for Evolution as per the overall plan. Notable topics of discussion this week included whether pattern matching should be allowed in both statement and expression contexts and, if allowed in expression contexts, whether non-exhaustiveness should be a compile-time error or undefined / other runtime behaviour (and if it’s a compile-time error, in what cases we can expect the compiler to prove exhaustiveness).

- Deducing this has progressed to a stage where the design is pretty mature and EWG asked for an implementation prior to approving it.

- auto(x): decay-copy in the language. EWG liked auto(x), and asked the Library Working Group to give an opinion on how useful it would be inside library implementations. EWG was not convinced of the usefulness of decltype(auto)(x) as a shorthand for forwarding.

- fiber_context – fibers without scheduler. EWG was in favour of having this proposal target a Technical Specification (TS), but wanted the proposal to contain a list of specific questions that they’d like answered through implementation and use experience with the TS. Several candidate items for that list came up during the discussion.

- if consteval, a language facility that aims to address some of the gotchas with std::is_constant_evaluated, will not be pursued in its current form for C++23, but other ideas in this space may be.

- Top-level is constant evaluated is one such other idea, aiming as it does to replace std::is_constant_evaluated with a way to provide two function bodies for a function: a constexpr one and a runtime one. EWG felt this was a less general solution than if constexpr and not worth pursuing.

- A proposal for a new study group for safety-critical applications. EWG encouraged collaboration among people interested in this topic in the wider community. A decision on actually launching a Study Group has been deferred until we see that there is a critical mass of such interest.

- =delete‘ing variable templates. EWG encouraged further work on a more general solution that could also apply to other things besides variable templates.
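For context on the auto(x) item above, library implementations today typically express "decay-copy" with a small helper function along the following lines. This is a sketch of that common pattern rather than any particular standard library's code, and decay_copy is used here as an ordinary user-level name.

```cpp
#include <type_traits>
#include <utility>

// Returns a prvalue copy of the argument with references and cv-qualifiers
// dropped, and with arrays and functions decayed to pointers.
template <class T>
constexpr std::decay_t<T> decay_copy(T&& value) {
    return std::forward<T>(value);
}

// A const reference decays to a plain value type.
static_assert(std::is_same_v<
    decltype(decay_copy(std::declval<const int&>())), int>);

// An array decays to a pointer to its first element.
static_assert(std::is_same_v<
    decltype(decay_copy(std::declval<int (&)[3]>())), int*>);

static_assert(decay_copy(42) == 42, "the value itself is preserved");
```

With auto(x) in the language, the same effect would not need a helper template at all, which is why feedback from library implementers was requested.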

Study Group Highlights

While I wasn’t able to attend other Study Groups besides Reflection, a lot of interesting topics were discussed in other Study Groups. Here is a very brief mention of some highlights:

- In the Concurrency Study Group, Executors have design approval on a long-in-the-works consensus design, and will proceed to wording review at the next meeting, with a view to merging them into the C++23 working draft in the early 2021 timeframe, and thereby unblocking important dependencies like Networking.

- In the Networking Study Group, a proposal to introduce lower-level I/O abstractions than what is currently in the Networking TS was reviewed. Further exploration was encouraged, without blocking the existing Networking TS facilities.

- In the Transactional Memory Study Group, a “Transactional Memory Lite” proposal is being reviewed with an aim to produce a TS based on it.

- The Numerics Study Group is collaborating with the Low Latency and Machine Learning Study Groups on library proposals related to linear algebra and unit systems.

- The Undefined and Unspecified Behaviour Study Group is continuing its collaboration with MISRA and the Programming Language Vulnerabilities Working Group. A revised proposal for a mechanism to mitigate Spectre v1 in C++ code was also reviewed.

- The I/O Study Group reviewed feedback papers concerning audio and graphics. One outcome of the graphics discussion is that the group felt the existing graphics proposal should be more explicit about what use cases are in and out of scope. One interesting observation that was made is that learning use cases that just require a simple canvas-like API could be met by using the actual web Canvas API via WebAssembly.

- The Low Latency Study Group reviewed a research paper about low-cost deterministic exceptions in C++, among many other things.

- The Machine Learning Study Group reviewed a proposal for language or library support for automatic differentiation.

- The Unicode Study Group decided that std::regex needs to be deprecated due to severe performance problems that are unfixable due to ABI constraints.

- The Tooling Study Group reviewed two papers concerning the debuggability of C++20 coroutines, as well as several Modules-related papers. There were also suggestions that the topic of profiling may come up, e.g. in the form of extensions to std::thread motivated by profiling.

- The Contracts Study Group reviewed a paper summarizing the issues that were controversial during past discussions (which led to Contracts slipping from C++20), and a paper attempting to clarify the distinction between assertions and assumptions.

Next Meeting

The next meeting of the Committee will (probably) be in Varna, Bulgaria, the week of June 1st, 2020.

Conclusion

As always, this was a busy and productive meeting. The headline accomplishment is completing outstanding bugfixes for C++20 and approving the C++20 Draft International Standard, which means C++20 is technically complete and is expected to be officially published by the end of the year. There was also good progress made on post-C++20 material such as pattern matching and reflection, and important discussions about larger directional topics in the community such as ABI stability.

There is a lot I didn’t cover in this post; if you’re curious about something I didn’t mention, please feel free to ask in a comment.

Other Trip Reports

In addition to the collaborative Reddit report which I linked to earlier, here are some other trip reports of the Prague meeting that you could check out:

- Hana Dusikova’s CppCast report

- Inbal Levi’s trip report

- Herb Sutter’s trip report

- Ben Craig’s update on the state of “Freestanding”

|

|

Spidermonkey Development Blog: Newsletter 3 (Firefox 74-75) |

|

|

The Talospace Project: Firefox 74 on POWER |

|

|

The Rust Programming Language Blog: Announcing Rust 1.42.0 |

The Rust team is happy to announce a new version of Rust, 1.42.0. Rust is a programming language that is empowering everyone to build reliable and efficient software.

If you have a previous version of Rust installed via rustup, getting Rust 1.42.0 is as easy as:

rustup update stable

If you don't have it already, you can get rustup from the appropriate page on our website, and check out the detailed release notes for 1.42.0 on GitHub.

What's in 1.42.0 stable

The highlights of Rust 1.42.0 include: more useful panic messages when unwrapping, subslice patterns, the deprecation of Error::description, and more. See the detailed release notes to learn about other changes not covered by this post.

Useful line numbers in Option and Result panic messages

In Rust 1.41.1, calling unwrap() on an Option::None value would produce an error message looking something like this:

thread 'main' panicked at 'called `Option::unwrap()` on a `None` value', /.../src/libcore/macros/mod.rs:15:40

Similarly, the line numbers in the panic messages generated by unwrap_err, expect, and expect_err, and the corresponding methods on the Result type, also refer to core internals.

In Rust 1.42.0, all eight of these functions produce panic messages that provide the line number where they were invoked. The new error messages look something like this:

thread 'main' panicked at 'called `Option::unwrap()` on a `None` value', src/main.rs:2:5

This means that the invalid call to unwrap was on line 2 of src/main.rs.

This behavior is made possible by an annotation, #[track_caller]. This annotation is not yet available to use in stable Rust; if you are interested in using it in your own code, you can follow its progress by watching this tracking issue.
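If you want to see the reported location for yourself, here is a small sketch that is not part of the release notes. It installs a temporary panic hook, triggers an unwrap on a None value, and records the file and line the panic reports. The helper name unwrap_panic_location is made up for illustration; the sketch only relies on the standard panic::take_hook, panic::set_hook, and panic::catch_unwind functions.

```rust
use std::panic;
use std::sync::{Arc, Mutex};

/// Hypothetical helper: returns the (file, line) reported by a panicking `unwrap()`.
fn unwrap_panic_location() -> Option<(String, u32)> {
    let captured = Arc::new(Mutex::new(None));
    let sink = Arc::clone(&captured);

    // Temporarily replace the global panic hook so the location can be recorded.
    let previous_hook = panic::take_hook();
    panic::set_hook(Box::new(move |info| {
        if let Some(location) = info.location() {
            *sink.lock().unwrap() = Some((location.file().to_string(), location.line()));
        }
    }));

    // The panic is caught here, so the program keeps running.
    let _ = panic::catch_unwind(|| {
        let missing: Option<u32> = None;
        missing.unwrap();
    });

    // Restore whatever hook was installed before.
    panic::set_hook(previous_hook);

    let location = captured.lock().unwrap().clone();
    location
}
```

On Rust 1.42.0 or later, the file returned should be the one containing the call to unwrap rather than a path inside the core library.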

Subslice patterns

In Rust 1.26, we stabilized "slice patterns," which let you match on slices. They looked like this:

    fn foo(words: &[&str]) {
        match words {
            [] => println!("empty slice!"),
            [one] => println!("one element: {:?}", one),
            [one, two] => println!("two elements: {:?} {:?}", one, two),
            _ => println!("I'm not sure how many elements!"),
        }
    }

This allowed you to match on slices, but was fairly limited. You had to choose the exact sizes you wished to support, and had to have a catch-all arm for size you didn't want to support.

In Rust 1.42, we have expanded support for matching on parts of a slice:

    fn foo(words: &[&str]) {
        match words {
            ["Hello", "World", "!", ..] => println!("Hello World!"),
            ["Foo", "Bar", ..] => println!("Baz"),
            rest => println!("{:?}", rest),
        }
    }

The .. is called a "rest pattern," because it matches the rest of the slice. The above example uses the rest pattern at the end of a slice, but you can also use it in other ways:

    fn foo(words: &[&str]) {
        match words {
            // Ignore everything but the last element, which must be "!".
            [.., "!"] => println!("!!!"),

            // `start` is a slice of everything except the last element, which must be "z".
            [start @ .., "z"] => println!("starts with: {:?}", start),

            // `end` is a slice of everything but the first element, which must be "a".
            ["a", end @ ..] => println!("ends with: {:?}", end),

            rest => println!("{:?}", rest),
        }
    }

If you're interested in learning more, we published a post on the Inside Rust blog discussing these changes as well as more improvements to pattern matching that we may bring to stable in the future! You can also read more about slice patterns in Thomas Hartmann's post.
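As a further illustration (our own sketch, not taken from the posts linked above), rest patterns also make it easy to write functions that return values instead of printing. The function names sum and first_and_last are hypothetical; the first peels off the head of a slice recursively, the second picks out both ends while ignoring the middle.

```rust
/// Sums a slice recursively by peeling off the first element.
fn sum(values: &[i32]) -> i32 {
    match values {
        [] => 0,
        // `tail` binds to the subslice of everything after `head`.
        [head, tail @ ..] => *head + sum(tail),
    }
}

/// Returns the first and last elements, if the slice is non-empty.
fn first_and_last<'a>(words: &[&'a str]) -> Option<(&'a str, &'a str)> {
    match words {
        [] => None,
        [only] => Some((*only, *only)),
        // `..` skips any number of elements in the middle (including zero).
        [first, .., last] => Some((*first, *last)),
    }
}
```

Note that neither match needs a catch-all arm: the compiler can see that the empty, single-element, and longer cases together cover every slice length.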

matches!

This release of Rust stabilizes a new macro, matches!. This macro accepts an expression and a pattern, and returns true if the pattern matches the expression. In other words:

    // Using a match expression:
    match self.partial_cmp(other) {
        Some(Less) => true,
        _ => false,
    }

    // Using the `matches!` macro:
    matches!(self.partial_cmp(other), Some(Less))

You can also use features like | patterns and if guards:

    let foo = 'f';
    assert!(matches!(foo, 'A'..='Z' | 'a'..='z'));

    let bar = Some(4);
    assert!(matches!(bar, Some(x) if x > 2));
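The macro is also handy with your own enums, for example in small predicate functions or iterator filters. The following is a sketch with a made-up Event type and made-up function names, just to show the shape:

```rust
#[derive(Debug)]
enum Event {
    Key(char),
    Click { x: i32, y: i32 },
    Quit,
}

/// True only for key events whose character is an ASCII digit.
fn is_digit_key(event: &Event) -> bool {
    matches!(event, Event::Key(c) if c.is_ascii_digit())
}

/// True for any click (regardless of position) and for `Quit`.
fn is_click_or_quit(event: &Event) -> bool {
    matches!(event, Event::Click { .. } | Event::Quit)
}

/// Counts the digit key presses in a sequence of events.
fn count_digit_keys(events: &[Event]) -> usize {
    events.iter().filter(|e| is_digit_key(e)).count()
}
```

Each of these would otherwise need a full match expression with a `_ => false` arm.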

use proc_macro::TokenStream; now works

In Rust 2018, we removed the need for extern crate. But procedural macros were a bit special, and so when you were writing a procedural macro, you still needed to say extern crate proc_macro;.

In this release, if you are using Cargo, you no longer need this line when working with the 2018 edition; you can use use like any other crate. Given that most projects will already have a line similar to use proc_macro::TokenStream;, this change will mean that you can delete the extern crate proc_macro; line and your code will still work. This change is small, but brings procedural macros closer to regular code.

Libraries

- iter::Empty now implements Send and Sync for any T.

- Pin::{map_unchecked, map_unchecked_mut} no longer require the return type to implement Sized.

- io::Cursor now implements PartialEq and Eq.

- Layout::new is now const.

Stabilized APIs

- Condvar::wait_while & Condvar::wait_timeout_while

- DebugMap::key & DebugMap::value

- ManuallyDrop::take

- ptr::slice_from_raw_parts_mut & ptr::slice_from_raw_parts
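As one example from this list, Condvar::wait_while replaces the usual hand-written loop around Condvar::wait. Below is a minimal sketch; the function name wait_for_flag and the single-flag setup are assumptions made for illustration, not something from the release notes.

```rust
use std::sync::{Arc, Condvar, Mutex};
use std::thread;

/// Spawns a worker that sets a shared flag, then blocks until the flag is set.
fn wait_for_flag() -> bool {
    let pair = Arc::new((Mutex::new(false), Condvar::new()));
    let worker_pair = Arc::clone(&pair);

    let worker = thread::spawn(move || {
        let (lock, cvar) = &*worker_pair;
        *lock.lock().unwrap() = true;
        cvar.notify_one();
    });

    let (lock, cvar) = &*pair;
    // Blocks for as long as the closure returns true, i.e. while the flag is unset.
    // The condition is checked before sleeping, so a notification that already
    // happened is not missed.
    let ready = cvar
        .wait_while(lock.lock().unwrap(), |ready| !*ready)
        .unwrap();
    let value = *ready;
    drop(ready);

    worker.join().unwrap();
    value
}
```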

Other changes

There are other changes in the Rust 1.42.0 release: check out what changed in Rust, Cargo, and Clippy.

Compatibility Notes

We have two notable compatibility notes this release: a deprecation in the standard library, and a demotion of 32-bit Apple targets to Tier 3.

Error::description is deprecated

Sometimes, mistakes are made. The Error::description method is now considered to be one of those mistakes. The problem is with its type signature:

fn description(&self) -> &str

Because description returns a &str, it is not nearly as useful as we wished it would be. This means that you basically need to return the contents of an Error verbatim; if you wanted to say, use formatting to produce a nicer description, that is impossible: you'd need to return a String. Instead, error types should implement the Display/Debug traits to provide the description of the error.

This API has existed since Rust 1.0. We've been working towards this goal for a long time: back in Rust 1.27, we "soft deprecated" this method. What that meant in practice was, we gave the function a default implementation. This means that users were no longer forced to implement this method when implementing the Error trait. In this release, we mark it as actually deprecated, and have taken some steps to de-emphasize the method in Error's documentation. Due to our stability policy, description will never be removed, and so this is as far as we can go.
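To make the recommended approach concrete, here is a sketch of an error type that builds a formatted message through Display and leaves description alone. The ConfigError type, its fields, and the describe helper are hypothetical names chosen for this example.

```rust
use std::error::Error;
use std::fmt;

#[derive(Debug)]
struct ConfigError {
    key: String,
    line: usize,
}

impl fmt::Display for ConfigError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        // Formatting is possible here, which `description(&self) -> &str` could not offer.
        write!(f, "unknown key `{}` on line {}", self.key, self.line)
    }
}

// No methods need to be implemented: every method of `Error` has a default.
impl Error for ConfigError {}

/// Works with any error type, using only its `Display` implementation.
fn describe(err: &dyn Error) -> String {
    err.to_string()
}
```

Callers then get the message through to_string() or the `{}` format specifier, for any type that implements Error.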

Downgrading 32-bit Apple targets

Apple is no longer supporting 32-bit targets, and so, neither are we. They have been downgraded to Tier 3 support by the project. For more details on this, check out this post from back in January, which covers everything in detail.

Contributors to 1.42.0

Many people came together to create Rust 1.42.0. We couldn't have done it without all of you. Thanks!

|

|

Julien Vehent: Video-conferencing the right way |

I work from home. I have been doing so for the last four years, ever since I joined Mozilla. Some people dislike it, but it suits me well: I get the calm, focused, relaxing environment needed to work on complex problems all in the comfort of my home.

Even given the opportunity, I probably wouldn't go back to working in an office. For the kind of work that I do, quiet time is more important than high bandwidth human interaction.

Yet, being able to talk to my colleagues and exchange ideas or solve problems is critical to being productive. That's where the video-conferencing bit comes in. At Mozilla, we use Zoom primarily, sometimes Hangout and more rarely Skype. We spend hours every week talking to each other via webcams and microphones, so it's important to do it well.

Having a good video setup is probably the most important and yet least regarded aspect of working remotely. When you start at Mozilla, you're given a laptop and a Zoom account. No one teaches you how to use it. Should you have an external webcam or use the one on your laptop? Do you need headphones, earbuds, a headset with a microphone? What kind of bandwidth does it use? Those things are important to good telepresence, yet most of us only learn them after months of remote work.

When your video setup is the main interface between you and the rest of your team, spending a bit of time doing it right is far from wasted. The difference between a good microphone and a shitty little one, or a quiet room and taking calls from the local coffee shop, influences how much your colleagues will enjoy working with you. I'm a lot more eager to jump on a call with someone I know has good audio and video, than with someone who will drag me into 45 minutes of ambient noise and coughing into their microphone.

This is a list of tips and things that you should care about, for yourself, and for your coworkers. They will help you build a decent setup with no to minimal investment.

The place

It may seem obvious, but you shouldn't take calls from a noisy place. Airports, coffee shops, public libraries, etc. are all horribly noisy environments. You may enjoy working from those places, but your interlocutors will suffer from all the noise. Nowadays, I refuse to take calls and cut meetings short when people try to force me into listening to their surroundings. Be respectful of others and take meetings from a quiet space.

Bandwidth

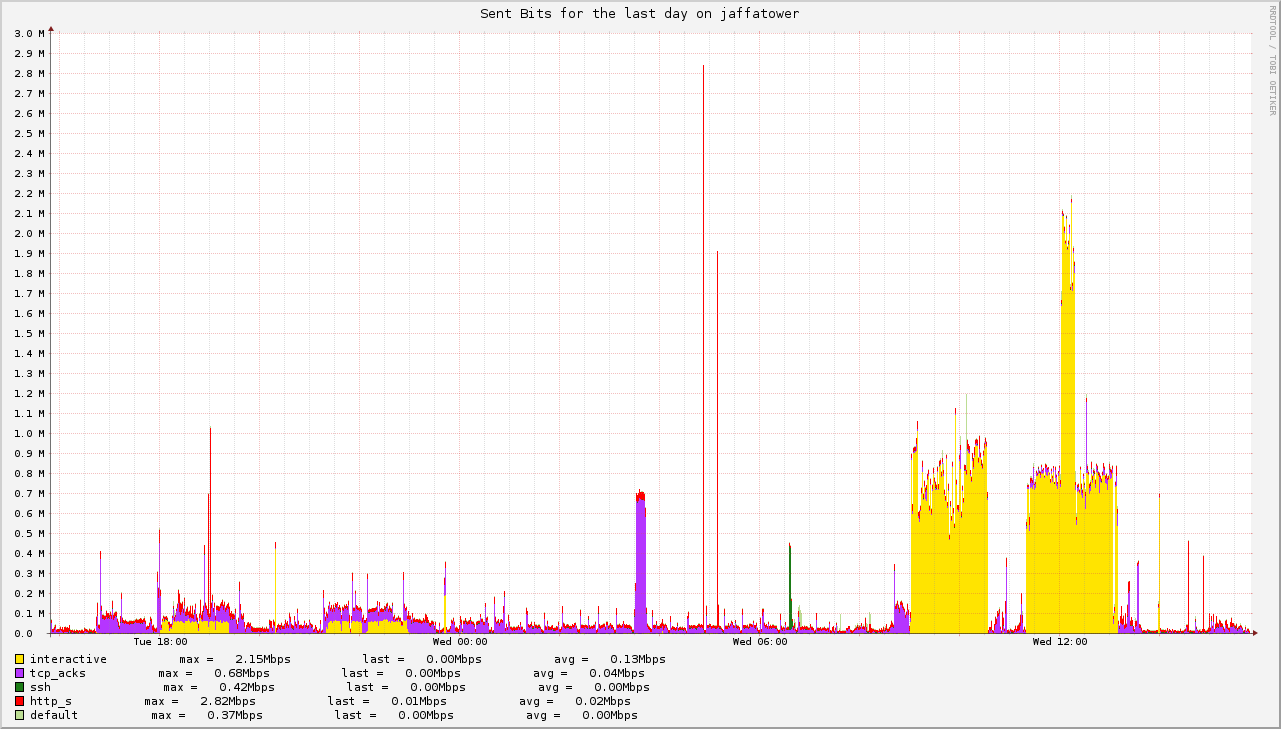

Despite what ISPs are telling you, no one needs 300Mbps of upstream bandwidth. Take a look at the graph above. It measures the egress point of my gateway. The two yellow spikes are video meetings. They don't even reach 1Mbps! In the middle of the second one, there's a short spike at 2Mbps when I set Vidyo to send my stream at 1080p, but I quickly reverted because that software is broken and the faces of my coworkers disappeared. Still, you get the point: 2Mbps is the very maximum you'll need for others to see you, and about the same amount is needed to download their streams.

You do want to be careful about ping: latency can increase up to 200ms without issue, but even 5% packet drop is enough to make your whole experience miserable. Ask Tarek what bad connectivity does to your productivity: he works from a remote part of France where bandwidth is scarce and latency is high. I dubbed him the inventor of the Tarek protocol, where you have to repeat each word twice for others to understand what you're saying. I'm joking, but the truth is that it's exhausting for everyone. Bad connectivity is tough on remote workers.

(Tarek thought it'd be worth mentioning that he tried to improve his connectivity by subscribing to a satellite connection, but ran into issues in the routing of his traffic: 700ms latency was actually worse than his broken DSL.)

Microphone

Perhaps the single most important aspect of video-conferencing is the quality of your microphone and how you use it. When everyone is wearing headphones, voice quality matters a lot. It is the difference between a pleasant 1h conversation, or a frustrating one that leaves you with a headache.

Rule #1: MUTE!

Let me say that again: FREAKING MUTE ALREADY!

Video software is terrible at routing the audio of several people at the same time. This isn't the same as a meeting room, where your brain will gladly separate the voice of someone you're speaking to from the keyboard of the dude next to you. On video, everything is at the same volume, so when you start answering that email while your colleagues are speaking, you're pretty much taking over their entire conversation with keyboard noises. It's terrible, and there's nothing more annoying than having to remind people to mute every five god damn minutes. So, be a good fellow, and mute!

Rule #2: no coughing, eating, breathing, etc... It's easy enough to mute or move your microphone away from your mouth that your colleagues shouldn't have to hear you breathing like a marathoner who just finished the Olympics. We're going back to rule #1 here.

Now, let's talk about equipment. A lot of people neglect the value of a good microphone, but it really helps in conversations. Don't use your laptop microphone, it's crap. And so is the mic on your earbuds (yes, even the Apple ones). Instead, use a headset with a microphone.

If you have a good webcam, it's somewhat ok to use the microphone that comes with it. The Logitech C920 is a popular choice. The downside of those mics is they will pick up a lot of ambient noise and make you sound distant. I don't like them, but it's an acceptable trade-off.

If you want to go all out, try one of those fancy podcast microphones, like the Blue Yeti.

You most definitely don't need that for good mic quality, but they sound really nice. Here's a recording comparing my camera's embedded mic, Plantronics headsets, a Blue Yeti and a Deity S-Mic 2 shotgun microphone.

Webcam

This part is easy because most laptops already come with a 720p webcam that provides decent video quality. I do find the Logitech renders colors and depth better than the webcam embedded on my Lenovo Carbon X1, but the difference isn't huge.

The most important part of your webcam setup should be its location. It's a bit strange to have someone talk to you without looking straight at you, but this is often what happens when people place their webcam to the side of their screen.

I've experimented a bit with this, and my favorite setup is to put the webcam right in the middle of my screen. That way, I'm always staring right at it.

It does consume a little space in the middle of my display, but with a large enough screen (I use an old 720p 35" TV) it doesn't really bother me.

Lighting and background are important parameters too. Don't bring light from behind, or your face will look dark, and don't use a messy background so people can focus on what you're saying. These factors contribute to helping others read your facial expressions, which are an important part of good communication. If you don't believe me, ask Cal Lightman ;).

Spread the word!

In many ways, we're the first generation of remote workers, and people are learning how to do it right. I believe video-conferencing is an important part of that process, and I think everyone should take a bit of time and improve their setup. Ultimately, we're all a lot more productive when communication flows easily, so spread the word, and do tell your coworkers when their setup is getting in the way of good conferencing.

https://j.vehent.org/blog/index.php?post/2017/01/18/Video-conferencing-the-right-way

|

|

Daniel Stenberg: curl 7.69.1 better patch than sorry |

This release comes but 7 days since the previous and is a patch release only, hence called 7.69.1.

Numbers

the 190th release

0 changes

7 days (total: 8,027)

27 bug fixes (total: 5,938)

48 commits (total: 25,405)

0 new public libcurl function (total: 82)

0 new curl_easy_setopt() option (total: 270)

0 new curl command line option (total: 230)

19 contributors, 6 new (total: 2,133)

7 authors, 1 new (total: 772)

0 security fixes (total: 93)

0 USD paid in Bug Bounties

Unplanned patch release

Quite obviously this release was not shipped aligned with our standard 8-week cycle. The reason is that we had too many semi-serious or at least annoying bugs that were reported early on after the 7.69.0 release last week. They made me think our users will appreciate a quick follow-up that addresses them. See below for more details on some of those flaws.

How can this happen in a project that soon is 22 years old, that has thousands of tests, dozens of developers and 70+ CI jobs for every single commit?

The short answer is that we don’t have enough tests that cover enough use cases and transfer scenarios, or put another way: curl and libcurl are very capable tools that can deal with a nearly infinite number of different combinations of protocols, transfers and bytes over the wire. It is really hard to cover all cases.

Also, an old wisdom that we learned already many years ago is that our code is always only properly widely used and tested the moment we do a release and not before. Everything can look good in pre-releases among all the involved developers, but only once the entire world gets its hands on the new release it really gets to show what it can or cannot do.

This time, a few of the changes we had landed for 7.69.0 were not good enough. We then go back, fix issues, land updates and we try again. So here comes 7.69.1 – better patch than sorry!

Bug-fixes

As the numbers above show, we managed to land an amazing number of bug-fixes in this very short time. Here are seven of the more important ones, from my point of view! Not all of them were regressions or even reported in 7.69.0, some of them were just ripe enough to get landed in this release.

unpausing HTTP/2 transfers

When I fixed the pausing and unpausing of HTTP/2 streams for 7.69.0, the fix was inadequate for several of the more advanced use cases and unfortunately we don’t have good enough tests to detect those. At least two browsers built to use libcurl for their HTTP engines reported stalled HTTP/2 transfers due to this.

I reverted the previous change and I’ve landed a different take that seems to be a more appropriate one, based on early reports.

pause: cleanups

After I had modified the curl_easy_pause function for 7.69.0, we also got reports about crashes with uses of this function.

It made me do some additional cleanups to make it more resilient to bad uses from applications, both when it is called without a correct handle and when it is called to just set the same pause state it is already in.

socks: connection regressions

I was so happy with my overhauled SOCKS connection code in 7.69.0 where it was made entirely non-blocking. But again it turned out that our test cases for this weren’t entirely mimicking the real world so both SOCKS4 and SOCKS5 connections where curl does the name resolving could easily break. The test cases probably worked fine there because they always resolve the host name really quick and locally.

SOCKS4 connections are now also forced to be done over IPv4 only, as that was also something that could trigger a funny error – the protocol doesn’t support IPv6, you need to go to SOCKS5 for that!

Both version 4 and 5 of the SOCKS proxy protocol have options to allow the proxy to resolve the server name or you can have the client (curl) do it. (Somewhat described in the CURLOPT_PROXY man page.) These problems were found for the cases when curl resolves the server name.

libssh: MD5 hex comparison

For application users of the libcurl CURLOPT_SSH_HOST_PUBLIC_KEY_MD5 option, which is used to verify that curl connects to the right server, this change makes sure that the libssh backend does the right thing and acts exactly like the libssh2 backend does and how the documentation says it works…

libssh2: known hosts crash

In a recent change, libcurl will try to set a preferred method for the knownhost matching libssh2 provides when connecting to an SSH server, but the code unfortunately contained an easily triggered NULL pointer dereference that no review caught and obviously no test either!

c-ares: duphandle copies DNS servers too

curl_easy_duphandle() duplicates a libcurl easy handle and is frequently used by applications. It turns out we broke a little piece of the function back in 7.63.0 as a few DNS server options haven’t been duplicated properly since then. Fixed now!

curl_version: thread-safer

The curl_version and curl_version_info functions are now both thread-safe without the use of any global context. One issue less left for having a completely thread-safe future curl_global_init.

Schedule for next release

This was an out-of-schedule release but the plan is to stick to the established release schedule, which will have the effect that the coming release window will be one week shorter than usual and the full cycle will complete in 7 weeks instead of 8.

https://daniel.haxx.se/blog/2020/03/11/curl-7-69-1-better-patch-than-sorry/

|

|

Robert O'Callahan: Debugging Gdb Using rr: Ptrace Emulation |

Someone tried using rr to debug gdb and reported an rr issue because it didn't work. With some effort I was able to fix a couple of bugs and get it working for simple cases. Using improved debuggers to improve debuggers feels good!

The main problem when running gdb under rr is the need to emulate ptrace. We had the same problem when we wanted to debug rr replay under rr. In Linux a process can only have a single ptracer. rr needs to ptrace all the processes it's recording — in this case gdb and the process(es) it's debugging. Gdb needs to ptrace the process(es) it's debugging, but they can't be ptraced by both gdb and rr. rr circumvents the problem by emulating ptrace: gdb doesn't really ptrace its debuggees, as far as the kernel is concerned, but instead rr emulates gdb's ptrace calls. (I think in principle any ptrace user, e.g. gdb or strace, could support nested ptracing in this way, although it's a lot of work so I'm not surprised they don't.)

Most of the ptrace machinery that gdb needs already worked in rr, and we have quite a few ptrace tests to prove it. All I had to do to get gdb working for simple cases was to fix a couple of corner-case bugs. rr has to synthesize SIGCHLD signals sent to the emulated ptracer; these signals weren't interacting properly with sigsuspend. For some reason gdb spawns a ptraced process, then kills it with SIGKILL and waits for it to exit; that wait has to be emulated by rr because in Linux regular "wait" syscalls can only wait for a non-child process if the waiter is ptracing the target process, and under rr gdb is not really the ptracer, so the native wait doesn't work. We already had logic for that, but it wasn't working for process exits triggered by signals, so I had to rework that, which was actually pretty hard (see the rr issue for horrible details).

After I got gdb working I discovered it loads symbols very slowly under rr. Every time gdb demangles a symbol it installs (and later removes) a SIGSEGV handler to catch crashes in the demangler. This is very sad and does not inspire trust in the quality of the demangling code, especially if some of those crashes involve potentially corrupting memory writes. It is slow under rr because rr's default syscall handling path makes cheap syscalls like rt_sigaction a lot more expensive. We have the "syscall buffering" fast path for the most frequent syscalls, but supporting rt_sigaction along that path would be rather complicated, and I don't think it's worth doing at the moment, given you can work around the problem using maint set catch-demangler-crashes off. I suspect that (with KPTI especially) doing 2-3 syscalls per symbol demangle (sigprocmask is also called) hurts gdb performance even without rr, so ideally someone would fix that. Either fix the demangling code (possibly writing it in a safe language like Rust), or batch symbol demangling to avoid installing and removing a signal handler thousands of times, or move it to a child process and talk to it asynchronously over IPC — safer too!

http://robert.ocallahan.org/2020/03/debugging-gdb-using-rr-ptrace-emulation.html

|

|

Hacks.Mozilla.Org: Security means more with Firefox 74 |

Today sees the release of Firefox number 74. The most significant new features we’ve got for you this time are security enhancements: Feature Policy, the Cross-Origin-Resource-Policy header, and removal of TLS 1.0/1.1 support. We’ve also got some new CSS text property features, the JS optional chaining operator, and additional 2D canvas text metric features, along with the usual wealth of DevTools enhancements and bug fixes.

As always, read on for the highlights, or find the full list of additions in the following articles:

Security enhancements

Let’s look at the security enhancement we’ve got in 74.

Feature Policy

We’ve finally enabled Feature Policy by default. You can now use the allow attribute and the Feature-Policy HTTP header to set feature permissions for your top level documents and IFrames. Syntax examples follow:

Feature-Policy: microphone 'none'; geolocation 'none'

CORP

We’ve also enabled support for the Cross-Origin-Resource-Policy (CORP) header, which allows web sites and applications to opt in to protection against certain cross-origin requests (such as those coming from

https://hacks.mozilla.org/2020/03/security-means-more-with-firefox-74-2/

|

|

Mozilla Addons Blog: Support for extension sideloading has ended |

Today marks the release of Firefox 74 and as we announced last fall, developers will no longer be able to install extensions without the user taking an action. This installation method was typically done through application installers, and is commonly referred to as “sideloading.”

If you are the developer of an extension that installs itself via sideloading, please make sure that your users can install the extension from your own website or from addons.mozilla.org (AMO).

We heard several questions about how the end of sideloading support affects users and developers, so we wanted to clarify what to expect from this change:

- Starting with Firefox 74, users will need to take explicit action to install the extensions they want, and will be able to remove previously sideloaded extensions when they want to.

- Previously installed sideloaded extensions will not be uninstalled for users when they update to Firefox 74. If a user no longer wants an extension that was sideloaded, they must uninstall the extension themselves.

- Firefox will prevent new extensions from being sideloaded.

- Developers will be able to push updates to extensions that had previously been sideloaded. (If you are the developer of a sideloaded extension and you are now distributing your extension through your website or AMO, please note that you will need to update both the sideloaded .xpi and the distributed .xpi; updating one will not update the other.)

Enterprise administrators and people who distribute their own builds of Firefox (such as some Linux and Selenium distributions) will be able to continue to deploy extensions to users. Enterprise administrators can do this via policies. Additionally, Firefox Extended Support Release (ESR) will continue to support sideloading as an extension installation method.

We will continue to support self-distributed extensions. This means that developers aren’t required to list their extensions on AMO and users can install extensions from sites other than AMO. Developers just won’t be able to install extensions without the user taking an action. Users will also continue being able to manually install extensions.

We hope this helps clear up any confusion from our last post. If you’re a user who has had difficulty uninstalling sideloaded extensions in the past, we hope that you will find it much easier to remove unwanted extensions with this update.

The post Support for extension sideloading has ended appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2020/03/10/support-for-extension-sideloading-has-ended/

|

|

The Rust Programming Language Blog: The 2020 RustConf CFP is Now Open! |

Greetings fellow Rustaceans!

The 2020 RustConf Call for Proposals is now open!

Got something to share about Rust? Want to talk about the experience of learning and using Rust? Want to dive deep into an aspect of the language? Got something different in mind? We want to hear from you! The RustConf 2020 CFP site is now up and accepting proposals.

If you may be interested in speaking but aren't quite ready to submit a proposal yet, we are here to help you. We will be holding speaker office hours regularly throughout the proposal process, after the proposal process, and up to RustConf itself on August 20 and 21, 2020. We are available to brainstorm ideas for proposals, talk through proposals, and provide support throughout the entire speaking journey. We need a variety of perspectives, interests, and experience levels for RustConf to be the best that it can be - if you have questions or want to talk through things please don't hesitate to reach out to us! Watch this blog for more details on speaker office hours - they will be posted very soon.

The RustConf CFP will be open through Monday, April 5th, 2020. We hope to see your proposal soon!

|

|

Daniel Stenberg: curl ootw: --quote |

Previous command line options of the week.

This option is called -Q in its short form, --quote in its long form. It has existed for as long as curl has existed.

Quote?

The name for this option originates from the traditional unix command ‘ftp’, as it typically has a command called exactly this: quote. The quote command for the ftp client is a way to send an exact command, as written, to the server. Very similar to what --quote does.

FTP, FTPS and SFTP

This option was originally supported only for FTP transfers, but when we added support for FTPS, it worked there too automatically.

When we subsequently added SFTP support, it turned out that SFTP users occasionally need this style of extra commands too, so we made curl support it there as well. For SFTP we had to do it slightly differently, though, as SFTP as a protocol can’t actually send commands verbatim to the server as we can with FTP(S). I’ll elaborate a bit more below.

Sending FTP commands

The FTP protocol is a command/response protocol for which curl needs to send a series of commands to the server in order to get the transfer done: commands that log in, change working directories, set the correct transfer mode etc.

Asking curl to access a specific ftp:// URL more or less converts into a command sequence.

The --quote option provides several different ways to insert custom FTP commands into the series of commands curl will issue. If you just specify a command to the option, it will be sent to the server before the transfer takes place, even before it changes working directory.

If you prefix the command with a minus (-), the command will instead be sent after a successful transfer.

If you prefix the command with a plus (+), the command will run immediately before the transfer after curl changed working directory.

As a second (!) prefix you can also opt to insert an asterisk (*) which then tells curl that it should continue even if this command would cause an error to get returned from the server.

The command itself is a string the user specifies, and it needs to be a correct FTP command because curl won’t even try to interpret it but will just send it as-is to the server.
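To summarize the prefix rules, here is a small sketch in Rust that splits a --quote argument into its parts. This is an illustration of the rules as described above, not curl's actual code (curl is written in C); the Stage, QuoteCommand, and parse_quote names are all made up for this example.

```rust
/// When a quoted command is sent, relative to the transfer.
#[derive(Debug, PartialEq)]
enum Stage {
    BeforeTransfer, // no prefix
    AfterChdir,     // '+' prefix: right before the transfer, after changing directory
    AfterTransfer,  // '-' prefix: after a successful transfer
}

#[derive(Debug, PartialEq)]
struct QuoteCommand<'a> {
    stage: Stage,
    ignore_failure: bool, // '*' as a second prefix
    command: &'a str,     // passed along as-is, never interpreted
}

/// Hypothetical parser for the argument given to --quote.
fn parse_quote(arg: &str) -> QuoteCommand<'_> {
    let (stage, rest) = if let Some(rest) = arg.strip_prefix('-') {
        (Stage::AfterTransfer, rest)
    } else if let Some(rest) = arg.strip_prefix('+') {
        (Stage::AfterChdir, rest)
    } else {
        (Stage::BeforeTransfer, arg)
    };

    // The asterisk comes after the optional '-' or '+'.
    let (ignore_failure, command) = match rest.strip_prefix('*') {
        Some(command) => (true, command),
        None => (false, rest),
    };

    QuoteCommand { stage, ignore_failure, command }
}
```

With this sketch, "-DELE file" yields the command "DELE file" to be sent after the transfer, and "+*NOOP" yields "NOOP" to be sent after the directory change with failures ignored.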

FTP examples

For example, remove a file from the server after it has been successfully downloaded:

curl -O ftp://ftp.example/file -Q '-DELE file'

Issue a NOOP command after having logged in:

curl -O ftp://user:password@ftp.example/file -Q 'NOOP'

Rename a file remotely after a successful upload:

curl -T infile ftp://upload.example/dir/ -Q "-RNFR infile" -Q "-RNTO newname"

Sending SFTP commands

Despite sounding similar, SFTP is a very different protocol than FTP(S). With SFTP the access is much more low level than FTP and there’s not really a concept of command and response. Still, we’ve created a set of commands for the --quote option for SFTP that lets users sort of pretend that it works the same way.

Since there is no sending of the quote commands verbatim in the SFTP case, like curl does for FTP, the commands must instead be supported by curl and get translated into their underlying SFTP binary protocol bits.

In order to support most of the basic use cases people have reportedly used with curl and FTP over the years, curl supports the following commands for SFTP: chgrp, chmod, chown, ln, mkdir, pwd, rename, rm, rmdir and symlink.

The minus and asterisk prefixes as described above work for SFTP too (but not the plus prefix).
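As a rough illustration of the SFTP rules above, the following Python sketch checks a --quote argument against the command list from this post. The function name check_sftp_quote is hypothetical, the exact (case-sensitive) matching is an assumption of the sketch, and curl’s real implementation additionally translates each command into SFTP protocol operations, which is not modeled here.

```python
# Commands listed in the post as supported by --quote over SFTP.
SFTP_QUOTE_COMMANDS = {
    "chgrp", "chmod", "chown", "ln", "mkdir",
    "pwd", "rename", "rm", "rmdir", "symlink",
}


def check_sftp_quote(arg: str) -> str:
    """Return the command name if the argument looks usable over SFTP.

    Raises ValueError for the plus prefix or an unknown command.
    """
    if arg.startswith("+"):
        raise ValueError("the plus prefix is not supported for SFTP")
    # Strip the optional minus prefix, then the optional asterisk prefix.
    rest = arg[1:] if arg.startswith("-") else arg
    if rest.startswith("*"):
        rest = rest[1:]
    parts = rest.split()
    name = parts[0] if parts else ""
    if name not in SFTP_QUOTE_COMMANDS:
        raise ValueError("not an SFTP quote command: %r" % name)
    return name


if __name__ == "__main__":
    print(check_sftp_quote("-rm file"))
    print(check_sftp_quote("-rename infile newname"))
```

An FTP command such as DELE is rejected by this check, which mirrors the point that SFTP quote commands are a fixed set understood by curl rather than text passed through to the server.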

Example, delete a file after a successful download over SFTP:

curl -O sftp://example/file -Q '-rm file'

Rename a file on the target server after a successful upload:

curl -T infile sftp://example/dir/ -Q "-rename infile newname"

SSH backends

The SSH support in curl is powered by a third-party SSH library. When you build curl, there are three different libraries to select from and they have slightly varying degrees of support. The libssh2 and libssh backends are pretty much feature complete and have been around for a while, whereas the wolfSSH backend is more bare bones, with fewer features supported but a much smaller footprint.

Related options

--request changes the actual command used to invoke the transfer when listing directories with FTP.

|

|

Wladimir Palant: Yahoo! and AOL: Where two-factor authentication makes your account less secure |

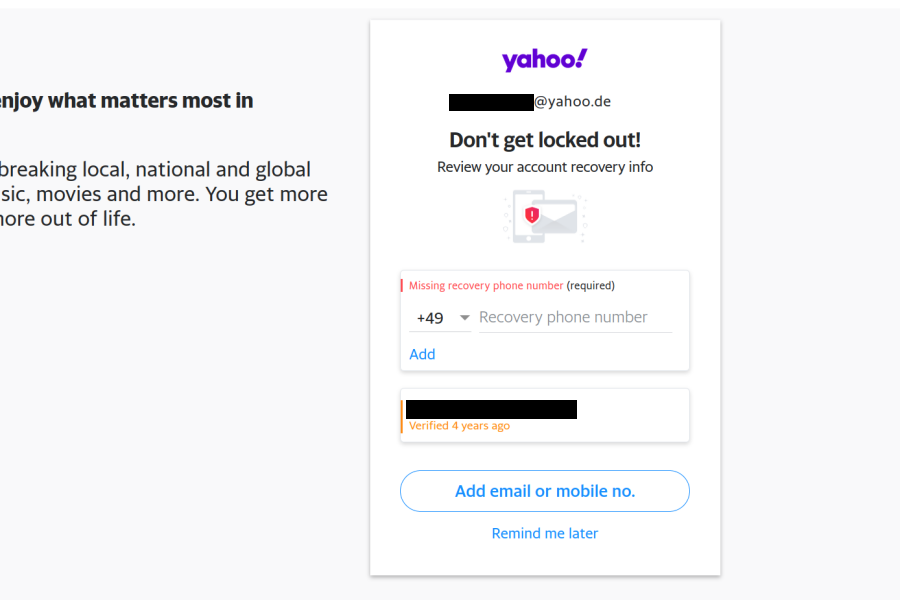

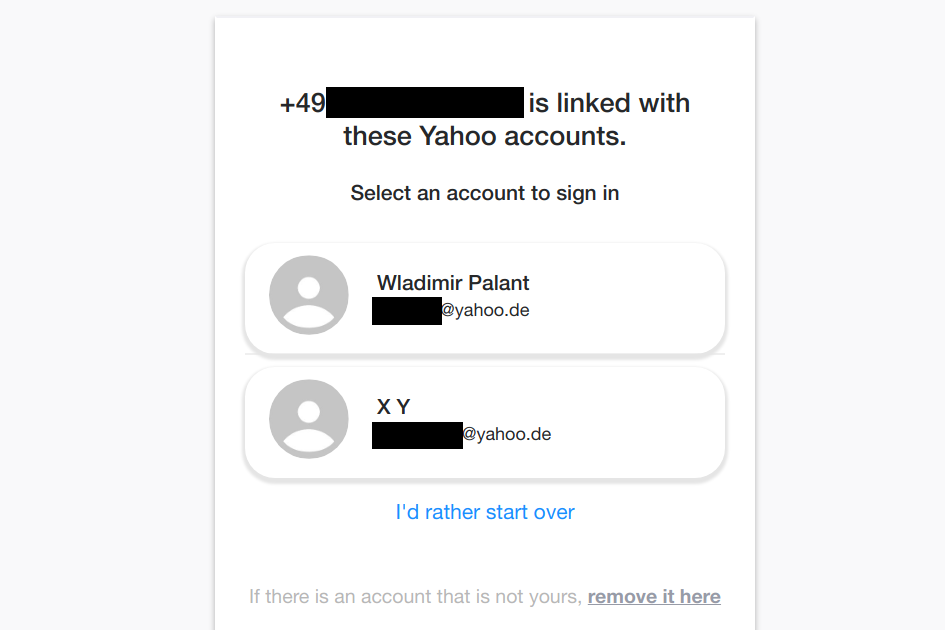

If you are reading this, you probably know already that you are supposed to use two-factor authentication for your most important accounts. This way you make sure that nobody can take over your account merely by guessing or stealing your password, which makes an account takeover far less likely. And what could be more important than your email account that everything else ties into? So you probably know, when Yahoo! greets you like this on login – it’s only for your own safety:

Yahoo! makes sure that the “Remind me later” link is small and doesn’t look like an action, so it would seem that adding a phone number is the only way out here. And why would anybody oppose adding it anyway? But here is the thing: complying reduces the security of your account considerably. This is due to the way Verizon Media (the company which acquired Yahoo! and AOL a while ago) implements account recovery. And: yes, everything I say about Yahoo! also applies to AOL accounts.

Summary of the findings

I’m not the one who discovered the issue. A Yahoo! user wrote me:

I entered my phone number to the Yahoo! login, and it asked me if I wanted to receive a verification key/access key (2fa authentication). So I did that, and typed in the access key… Surprise, I logged in ACCIDENTALLY to the Yahoo! mail of the previous owner of my current phone number!!!

I’m not even the first one to write about this issue. For example, Brian Krebs mentioned this a year ago. Yet here we still are: anybody can take over a Yahoo! or AOL account as long as they control the recovery phone number associated with it.

So if you’ve got a new phone number recently, you could check whether its previous owner has a Yahoo! or AOL account. Nothing will stop you from taking over that account. And not just that: adding a recovery phone number doesn’t necessarily require verification! So when I tested it out, I was offered access to a Yahoo! account which was associated with my phone number even though the account owner almost certainly never proved owning this number. No, I did not log into their account…

How two-factor authentication is supposed to work

The idea behind two-factor authentication is making account takeover more complicated. Instead of logging in with merely a password (something you know), you also have to demonstrate access to a device like your phone (something you have). There are a number of ways malicious actors could learn your password. For example, if you are in the habit of reusing passwords, chances are that your password has been compromised in one of the numerous data breaches. So it’s a good idea to set the bar for account access higher.

The already mentioned article by Brian Krebs explains why phone numbers aren’t considered a good second factor. Not only do phone numbers change hands quite often, criminals have been hijacking them en masse via SIM swapping attacks. Still, despite sending SMS messages to a phone number being considered a weak authentication scheme, it provides some value when used in addition to querying the password.
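To make the difference concrete, here is a minimal Python sketch contrasting a check that requires both factors with one that accepts the SMS code alone. All names are hypothetical and this is not any provider’s actual implementation. A real system would compare password hashes rather than plain text, which the sketch skips for brevity.

```python
import hmac
from dataclasses import dataclass


@dataclass
class Attempt:
    password: str  # what the person typed as password
    sms_code: str  # the code the person read from the phone


def same(a: str, b: str) -> bool:
    """Constant-time string comparison."""
    return hmac.compare_digest(a.encode(), b.encode())


def two_factor_ok(attempt: Attempt, stored_password: str, sent_code: str) -> bool:
    """SMS used in addition to the password: both must match."""
    return same(attempt.password, stored_password) and same(attempt.sms_code, sent_code)


def sms_only_ok(attempt: Attempt, sent_code: str) -> bool:
    """SMS used alone: controlling the phone number is sufficient."""
    return same(attempt.sms_code, sent_code)


if __name__ == "__main__":
    # Somebody who controls the phone number but does not know the password.
    phone_holder = Attempt(password="guess", sms_code="123456")
    print(two_factor_ok(phone_holder, "correct horse", "123456"))
    print(sms_only_ok(phone_holder, "123456"))
```

With the first check, a weak second factor still adds something on top of the password. With the second check, the weak factor is the only thing protecting the account.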

The Yahoo! and AOL account recovery process

But that’s not how it works with Yahoo! and AOL accounts. I added a recovery phone to my Yahoo! account and enabled two-factor authentication with the same phone number (yes, you have to do it separately). So my account should have been as secure as possible.

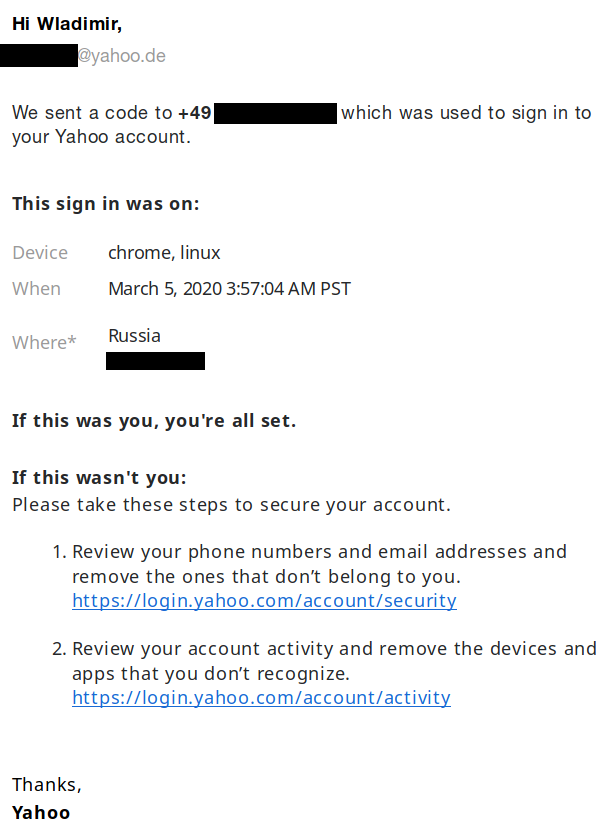

And then I tried “recovering” my account. From a different browser. Via a Russian proxy. While still being logged into this account in my regular browser. That should have been enough for Yahoo! to notice that something was odd, right?

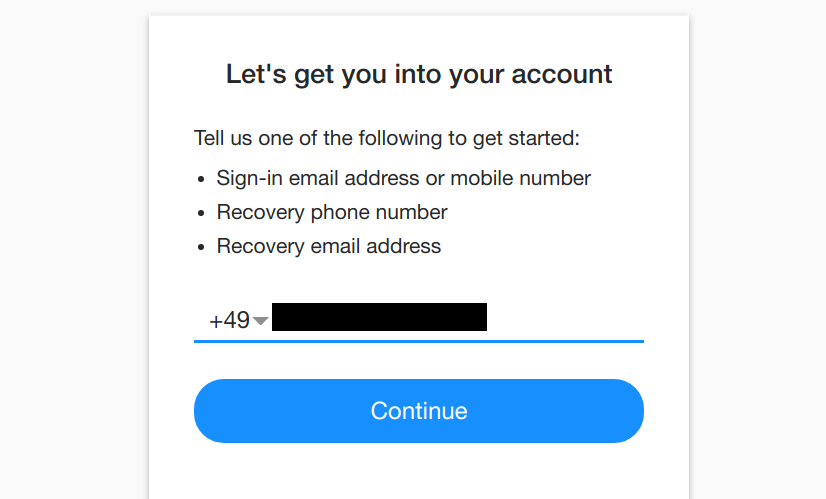

Clicking “Forgot username” brought me to a form asking me for a recovery phone number or email address. I entered the phone number and received a verification code via SMS. Entered it into the web page and voilà!

Now it’s all very straightforward: I click on my account, set a new password and disable two-factor authentication. The session still open in my regular browser is logged out. As far as Yahoo! is concerned, somebody from Russia just took over my account using only the weak SMS-based authentication, not knowing my password or even my name. Yet Yahoo! didn’t notice anything suspicious about this and didn’t feel any need for additional checks. But wait, there is a notification sent to the recovery email address!

Hey, big thanks Yahoo! for carefully documenting the issue. But you could have shortened the bottom part as “If this wasn’t you then we are terribly sorry but the horse has already left the barn.” If somebody took over my account and changed my password, I’ll most likely not get a chance to review my email addresses and phone numbers any more.

Aren’t phone numbers verified?

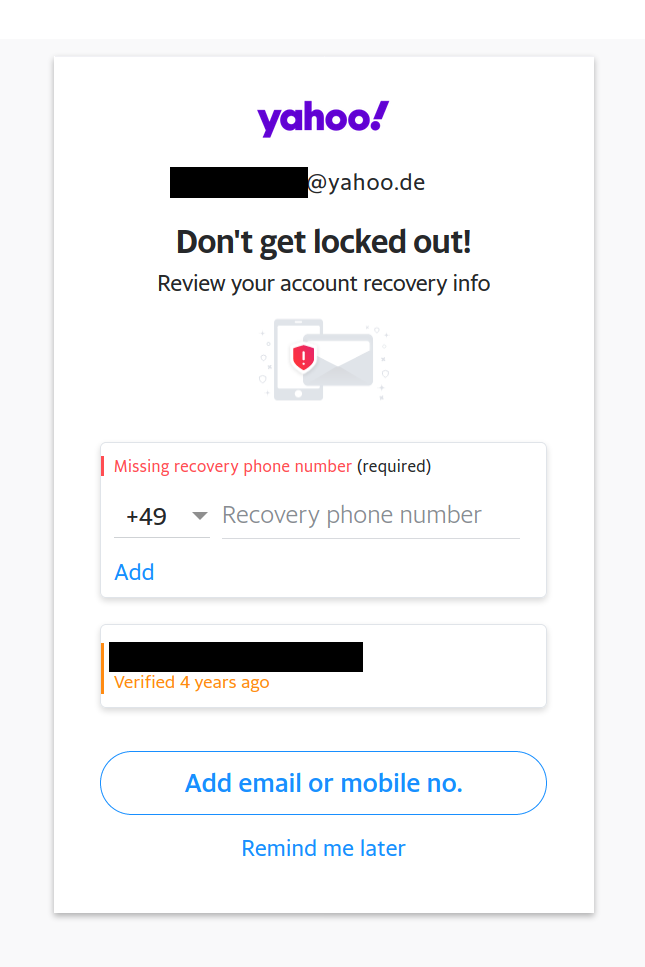

Now you are probably wondering: who is that other “X Y” account? Is that my test account? No, it’s not. It’s some poor soul who somehow managed to enter my phone number as their recovery phone. Given that this phone number has many repeating digits, it’s not too surprising that somebody typed it in merely to avoid Yahoo! nagging them. The other detail is surprising however: didn’t they have to verify that they actually own this number?

Now I had to go back to Yahoo!’s nag screen:

If I enter a phone number into that text field and click the small “Add” link below it, the next step will require entering a verification code that I receive via SMS. However, if I click the much bigger and more obvious “Add email or mobile no.” button, it will bring me to another page where I can enter my phone number. And there the phone number will be added immediately, with the remark “Not verified” and a suggestion to verify it later. Yet the missing verification won’t prevent the phone number from being used in account recovery.

With the second flow being the more obvious one, I suspect that a large portion of Yahoo! and AOL users never verified that they actually own the phone number they set as their recovery phone. They might have made a typo, or they might have simply invented a number. These accounts can be compromised by the rightful owner of that number at any time, and Verizon Media will just let them.

What does Verizon Media think about that?

Do the developers at Verizon Media realize that their trade-off is tilted way too much towards convenience and sacrifices security as a result? One would think so, at the very least after a big name like Brian Krebs wrote about this issue. Then again, having dealt with the bureaucratic monstrosity that is Yahoo! even before they got acquired, I was willing to give them the benefit of the doubt.

Of course, there is no easy way of reaching the right people at Yahoo!. Their own documentation suggests reporting issues via their HackerOne bug bounty program, and I gave it a try. Despite my explicitly stating that the point was making the right team at Verizon aware of the issue, my report was immediately closed as a duplicate by HackerOne staff. The other report (filed last summer) was also closed by HackerOne staff, stating that exploitation potential wasn’t proven. There is no indication that either report ever made it to the people responsible.

So it seems that the only way of getting a reaction from Verizon Media is by asking publicly and having as many people as possible chime in. Google and Microsoft make account recovery complicated for a reason: the weakest factor is not enough there. So Verizon Media, why don’t you? Do you care so little about security?

|

|

Allen Wirfs-Brock: Teaser—JavaScript: The First 20 Years |

Our HOPL paper is done—all 190 pages of it. The preprint will be posted this week. In the meantime, here’s a little teaser.

JavaScript: The First 20 Years

By Allen Wirfs-Brock and Brendan Eich

Introduction

In 2020, the World Wide Web is ubiquitous with over a billion websites accessible from billions of Web-connected devices. Each of those devices runs a Web browser or similar program which is able to process and display pages from those sites. The majority of those pages embed or load source code written in the JavaScript programming language. In 2020, JavaScript is arguably the world’s most broadly deployed programming language. According to a Stack Overflow [2018] survey it is used by 71.5% of professional developers making it the world’s most widely used programming language.

This paper primarily tells the story of the creation, design, and evolution of the JavaScript language over the period of 1995–2015. But the story is not only about the technical details of the language. It is also the story of how people and organizations competed and collaborated to shape the JavaScript language which dominates the Web of 2020.

This is a long and complicated story. To make it more approachable, this paper is divided into four major parts—each of which covers a major phase of JavaScript’s development and evolution. Between each of the parts there is a short interlude that provides context on how software developers were reacting to and using JavaScript.

In 1995, the Web and Web browsers were new technologies bursting onto the world, and Netscape Communications Corporation was leading Web browser development. JavaScript was initially designed and implemented in May 1995 at Netscape by Brendan Eich, one of the authors of this paper. It was intended to be a simple, easy to use, dynamic language that enabled snippets of code to be included in the definitions of Web pages. The code snippets were interpreted by a browser as it rendered the page, enabling the page to dynamically customize its presentation and respond to user interactions.

Part 1, The Origins of JavaScript, is about the creation and early evolution of JavaScript. It examines the motivations and trade-offs that went into the development of the first version of the JavaScript language at Netscape. Because of its name, JavaScript is often confused with the Java programming language. Part 1 explains the process of naming the language, the envisioned relationship between the two languages, and what happened instead. It includes an overview of the original features of the language and the design decisions that motivated them. Part 1 also traces the early evolution of the language through its first few years at Netscape and other companies.

A cornerstone of the Web is that it is based upon non-proprietary open technologies. Anybody should be able to create a Web page that can be hosted by a variety of Web servers from different vendors and accessed by a variety of browsers. A common specification facilitates interoperability among independent implementations. From its earliest days it was understood that JavaScript would need some form of standard specification. Within its first year Web developers were encountering interoperability issues between Netscape’s JavaScript and Microsoft’s reverse-engineered implementation. In 1996, the standardization process for JavaScript was begun under the auspices of the Ecma International standards organization. The first official standard specification for the language was issued in 1997 under the name “ECMAScript”.

Two additional revised and enhanced editions, largely based upon Netscape’s evolution of the language, were issued by the end of 1999.

Part 2, Creating a Standard, examines how the JavaScript standardization effort was initiated, how the specifications were created, who contributed to the effort, and how decisions were made.