Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Patrick Cloke: Boston Python: Twisted async networking framework |

Yesterday, Stephen DiCato and I gave a talk for Boston Python titled: Twisted async networking framework. It was an introductory-to-intermediate-level talk about using the Twisted networking framework, based on our experiences at Percipient Networks.

The talk, available on our GitHub (PDF), covered a few basic topics:

- What is asynchronous programming?

- What is Twisted?

- When/why to use Twisted?

- What is the event loop (reactor)?

- What are Deferreds and how do you use them?

- What are protocols (and related objects) and how do you use them?

Additionally there was a ‘bonus’ section: Using Twisted to build systems & services.

We used an example of a very simple chat server (NetCatChat: where the official client is netcat) to demonstrate these principles. All of our (working!) demo code is included in the repository.

There was a great turn out (almost 100 people showed up) and I greatly enjoyed the experience. Thanks to everyone who came, the sponsors for the night, Boston Python for setting this up, and Stephen for co-presenting! Please let us know if you have any questions or comments.

http://patrick.cloke.us/posts/2015/08/28/boston-python-twisted-talk/

|

|

Rubén Martín: Bringing better support to regional communities |

During this third quarter, one of the main goals for the Participation team at Mozilla is to better support Reps and Regional communities.

We want to focus our efforts this quarter on 10 countries, to be more efficient with the resources we have and to be able to:

- Tailor country profiles and a community health dashboard.

- Develop a mid-term plan with at least three communities.

- Systematize a coaching framework with volunteers.

As part of the Reps/Regional group I’m currently involved in these efforts, focusing on three European countries: Germany, France and the UK.

During the past and following weeks I’ll be meeting volunteers from these communities to learn more about them and to figure out where to get information that would help develop the country profiles and the community dashboard, an important initiative for getting a clear overview of our community status.

Also, I’m working with the awesome German community to meet and work together on a plan to align and improve the community over the next 6 months.

On top of all the previous things, we are starting a set of 1:1 meetings with key volunteers inside these communities to bring coaching and support in a more personal way, understanding everyone’s views and learning the best ways to help people’s skills and motivation.

Finally, I’m working to improve the Reps/Regional team’s accountability and workflow productivity, exploring better ways to manage our work as a team, and working with the Reps Council to put together a Rep program profile doc to better understand the current status and what should be changed or improved.

You can learn more about the Participation team’s Q3 goals and key results, as well as individual team members’ goals, in this public document, and follow our daily work on our GitHub page.

http://www.nukeador.com/28/08/2015/bringing-better-support-to-regional-communities/

|

|

Emily Dunham: Apache Licenses |

Apache Licenses

At the bottom of the Apache 2.0 License file, there’s an appendix:

APPENDIX: How to apply the Apache License to your work. ... Copyright [yyyy] [name of copyright owner] ...

Does that look like an invitation to fill in the blanks to you? It sure does to me, and has for others in the Rust community as well.

Today I was doing some licensing housekeeping and made the same embarrassing mistake.

This is a PSA to double check whether inviting blanks are part of the appendix before filling them out in Apache license texts.

|

|

Christian Heilmann: ES6 for now: Template strings |

ES6 is the future of JavaScript and it is already here. It is a finished specification, and it brings a lot of features a language requires to stay competitive with the needs of the web of now. Not everything in ES6 is for you and in this little series of posts I will show features that are very handy and already usable.

If you look at JavaScript code I’ve written you will find that I always use single quotes to define strings instead of double quotes. JavaScript is OK with either, the following two examples do exactly the same thing:

var animal = "cow";
var animal = 'cow';

The reason why I prefer single quotes is that, first of all, it makes it easier to assemble HTML strings with properly quoted attributes that way:

// with single quotes, there's no need to
// escape the quotes around the class value
var but = '<button class="big">Save</button>';

// this is a syntax error:
var but = "<button class="big">Save</button>";

// this works:
var but = "<button class=\"big\">Save</button>";

The only time you need to escape now is when you use a single quote in your HTML, which should be a very rare occasion. The only thing I can think of is inline JavaScript or CSS, which means you are very likely to do something shady or desperate to your markup. Even in your texts, you are probably better off to not use a single quote but the typographically more pleasing ‘.

Aside: Of course, HTML is forgiving enough to omit the quotes or to use single quotes around an attribute, but I prefer to create readable markup for humans rather than relying on the forgiveness of a parser. We made the HTML5 parser forgiving because people wrote terrible markup in the past, not as an excuse to keep doing so.

I’ve suffered enough in the DHTML days of document.write to create a document inside a frameset in a new popup window and other abominations to not want to use the escape character ever again. At times, we needed triple ones, and that was even before we had colour coding in our editors. It was a mess.

Expression substitution in strings?

Another reason why I prefer single quotes is that I wrote a lot of PHP in my time for very large web sites where performance mattered a lot. In PHP, there is a difference between single and double quotes. Single quoted strings don’t have any substitution in them, double quoted ones have. That meant back in the days of PHP 3 and 4 that using single quotes was much faster as the parser doesn’t have to go through the string to substitute values. Here is an example what that means:

JavaScript didn’t have this substitution, which is why we had to concatenate strings to achieve the same result. This is pretty unwieldy, as you need to jump in and out of quotes all the time.

var animal = 'cow';
var sound = 'moo';

alert('The animal is ' + animal + ' and its sound is ' + sound);
// => "The animal is cow and its sound is moo"

Multi line mess

This gets really messy with longer and more complex strings and especially when we assemble a lot of HTML. And, most likely you will sooner or later end up with your linting tool complaining about trailing whitespace after a + at the end of a line. This is based on the issue that JavaScript has no multi-line strings:

// this doesn't work var list = ' |

Client side templating solutions

In order to work around the mess that is string handling and concatenation in JavaScript, we did what we always do – we wrote a library. There are many HTML templating libraries, with Mustache.js probably having been the seminal one. All of these follow their own – non-standardised – syntax and work in that frame of mind. It’s a bit like saying that you write your content in markdown and then realising that there are many different ideas of what “markdown” means.

Enter template strings

With the advent of ES6 and its standardisation we now can rejoice as JavaScript has now a new kid on the block when it comes to handling strings: Template Strings. The support of template strings in current browsers is encouraging: Chrome 44+, Firefox 38+, Microsoft Edge and Webkit are all on board. Safari, sadly enough, is not, but it’ll get there.

The genius of template strings is that they use a new string delimiter, which is in use neither in HTML nor in normal text: the backtick (`).

Using this one we now have string expression substitution in JavaScript:

var animal = 'cow';
var sound = 'moo';

alert(`The animal is ${animal} and its sound is ${sound}`);
// => "The animal is cow and its sound is moo"

The ${} construct can take any JavaScript expression that returns a value. You can, for example, do calculations or access properties of an object:

var out = `ten times two totally is ${ 10 * 2 }`;
// => "ten times two totally is 20"

var animal = {
  name: 'cow',
  ilk: 'bovine',
  front: 'moo',
  back: 'milk',
}
alert(`
  The ${animal.name} is of the
  ${animal.ilk} ilk,
  one end is for the ${animal.front},
  the other for the ${animal.back}
`);
// =>
/*
  The cow is of the
  bovine ilk,
  one end is for the moo,
  the other for the milk
*/

That last example also shows you that multi line strings are not an issue at all any longer.
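Multi-line strings combined with expression substitution also cover the HTML assembly case that was so awkward with concatenation. The following is only a minimal sketch: the `animals` array and the list markup are made up for illustration.

```javascript
// Hypothetical data, used only for this illustration.
var animals = ['cow', 'sheep', 'goat'];

// The outer template string spans several lines; the ${} expression
// builds one <li> per entry with a nested template string.
var list = `<ul>
${animals.map(function (name) {
  return `  <li>${name}</li>`;
}).join('\n')}
</ul>`;

console.log(list);
```

The line breaks inside the backticks end up in the resulting string exactly as typed, so there is no need for a trailing + or an escaped newline.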

Tagged templates

Another thing you can do with template strings is prepend them with a tag, which is the name of a function that is called and gets the string as a parameter. For example, you could encode the resulting string for URLs without having to resort to the horridly named encodeURIComponent all the time.

function urlify (str) {
  return encodeURIComponent(str);
}

urlify `http://beedogs.com`;
// => "http%3A%2F%2Fbeedogs.com"
urlify `woah$£$%£^$"`;
// => "woah%24%C2%A3%24%25%C2%A3%5E%24%22"

// nesting also works:
var str = `foo ${urlify `&&`} bar`;
// => "foo %26%26 bar"

This works, but relies on implicit array-to-string coercion. The parameter sent to the function is not a string, but an array of strings and values. If used the way I show here, it gets converted to a string for convenience, but the correct way is to access the array members directly.
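Accessing the array members directly could look roughly like the sketch below. The function name `encodeValues` and the example URL are invented for illustration; the sketch also assumes ES6 rest parameters are available, so that every substituted value is collected rather than only the first.

```javascript
// Hypothetical tag function: URL-encodes only the substituted values
// and leaves the literal parts of the template untouched.
function encodeValues(strings, ...values) {
  // strings always holds exactly one more entry than values
  return strings.reduce(function (result, literal, i) {
    var value = i < values.length ? encodeURIComponent(values[i]) : '';
    return result + literal + value;
  }, '');
}

var query = 'bee dogs & cats';
var url = encodeValues`http://example.com/search?q=${query}`;
console.log(url);
// => "http://example.com/search?q=bee%20dogs%20%26%20cats"
```

Because the literal parts and the values arrive separately, the tag can treat them differently, which is not possible once everything has been coerced into one string.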

Retrieving strings and values from a template string

Inside the tag function you can not only get the full string but also its parts.

function tag (strings, values) {
  console.log(strings);
  console.log(values);
  console.log(strings[1]);
}
tag `you ${3+4} it`;
/* =>
Array [ "you ", " it" ]
7
it
*/

There is also an array of the raw strings provided to you, which means that you get all the characters in the string, including control characters. Say for example you add a linebreak with \n you will get the double whitespace in the string, but the \n characters in the raw strings:

function tag (strings, values) {
  console.log(strings);
  console.log(values);
  console.log(strings[1]);
  // the raw strings live on the strings array itself
  console.log(strings.raw[1]);
}
tag `you ${3+4} \nit`;
/* =>
Array [ "you ", " it" ]
7
it
\nit
*/
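The difference between the processed and the raw strings is easiest to see by comparing lengths. This is a small sketch with a made-up tag name, `showRaw`, and made-up sample text:

```javascript
// Hypothetical tag that returns both views of the first literal part.
function showRaw(strings) {
  return { cooked: strings[0], raw: strings.raw[0] };
}

var parts = showRaw`line one\nline two`;

// In the processed string, \n has become one newline character.
console.log(parts.cooked.length); // => 17
// In the raw string, the backslash and the "n" are two separate characters.
console.log(parts.raw.length); // => 18
```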

Conclusion

Template strings are one of those nifty little wins in ES6 that can be used right now. If you have to support older browsers, you can of course transpile your ES6 to ES5, or you can do a feature test for template string support using a library like featuretests.io or with the following code:

var templatestrings = false;
try {
  new Function( "`{2+2}`" );
  templatestrings = true;
} catch (err) {
  templatestrings = false;
}

if (templatestrings) {
  // …
}

More articles on template strings:

- Understanding ECMAScript 6: Template Strings

- Getting Literal With ES6 Template Strings

- ES6 In Depth: Template strings

- New string features in ECMAScript 6

- Understanding ES6: Template Strings

- HTML templating with ES6 template strings

http://christianheilmann.com/2015/08/28/es6-for-now-template-strings/

|

|

Cameron Kaiser: 38.2.1 delayed due to hardware failure |

In the meantime, a fair bit of the wiki has been updated and rewritten for Github. I am also exploring an idea from bug 1160228 by disabling incremental garbage collection entirely. This was a bad idea on 31 where incremental GC was better than nothing, but now that we have generational garbage collection and the nursery is regularly swept, the residual tenured heap seems small enough to make periodic full GCs more efficient. On a tier 1 platform the overhead of lots of incremental cycles may well be below the noise floor, but on the pathological profile in the bug even a relatively modern system had a noticeable difference disabling incremental GC. On this G5 occasionally I get a pause in the browser for 20 seconds or so, but that happens very infrequently, and otherwise now that the browser doesn't have to schedule partial passes it seems much sprightlier and stays so longer. The iBook G4 saw an even greater benefit. Please note that this has not been tested well with multiple compartments or windows, so your mileage may vary, but with that said please see what you think: in about:config set javascript.options.mem.gc_incremental to false and restart the browser to flush everything out. If people generally find this superior, it may become the default in 38.3.

http://tenfourfox.blogspot.com/2015/08/3821-delayed-due-to-hardware-failure.html

|

|

Gervase Markham: Top 50 DOS Problems Solved: Sorting Directory Listings |

Q: Could you tell me if it’s possible to make the DIR command list files in alphabetical order?

A: Earlier versions of DOS didn’t allow this but there’s a way round it. MS-DOS 5 gives you an /ON switch to use with DIR, for instance:

DIR *.TXT /ON /P

would list all the files with names ending in .TXT, pause the listing every screenful (/P) and sort the names into alphabetical order (/ON).

…

Users of earlier DOS programs can shove the output from DIR through a utility program that sorts the listing before printing it on the screen. That utility is SORT.EXE, supplied with DOS. … [So:]

DIR | SORT

diverts the output from DIR into SORT, which sorts the directory listing and sends it to the screen. Put this in a batch file called SDIR.BAT and you will have a sorted directory command called SDIR.

I guess earlier versions of DIR followed the Unix philosophy of “do one thing”…

http://feedproxy.google.com/~r/HackingForChrist/~3/3h3YSF51Kc0/

|

|

Daniel Stenberg: Content over HTTP/2 |

Roughly a week ago, on August 19, cdn77.com announced that they are the “first CDN to publicly offer HTTP/2 support for all customers, without ‘beta’ limitations”. They followed up just hours later with a demo site showing off how HTTP/2 might perform side by side with a HTTP/1.1 example. And yes, the big competitor CDNs are not yet offering HTTP/2 support it seems.

Their demo site initially got criticized for not being realistic and for showing HTTP/2 to be way better in comparison than what a real-life scenario would be more likely to look like, and it was subsequently updated fairly quickly. It is useful to compare with the similarly designed, previously existing demo sites hosted by Akamai and the Go project.

cdn77’s offering is built on nginx’s alpha patch for HTTP/2 that was announced just two weeks ago. I believe nginx’s full release is still planned to happen by the end of this year.

I’ve talked with cdn77’s Jakub Straka and their lead developer Honza about their HTTP/2 efforts, and since I suspect there are a few others in my audience who’re also similarly curious I’m offering this interview-style posting here, intertwined with my own comments and thoughts. It is not just a big ad for this company, but since they’re early players on this field I figure their view and comments on this are worth reading!

I’ve been in touch with more than one person who’ve expressed their surprise and awe over the fact that they’re using this early patch for nginx to run in production. So I had to ask them about that. Below, their comments are all prefixed with CDN77 and shown using italics.

nginx

CDN77: “Yes, we are running the alpha patch, which is basically a slightly modified SPDY. In the past we have been in touch with the Nginx team and exchanged tips and ideas, the same way we plan to work on the alpha patch in the future.

We’re actually pretty careful when deploying new and potentially unstable packages into production. We have separate servers for http2 and we are monitoring them 24/7 for any exceptions. We also have dedicated developers who debug any issues we are facing with these machines. We would never jeopardize the stability of our current network.”

I’m not an expert on either server-side HTTP/2 or nginx in particular, but I think I read somewhere that the nginx HTTP/2 patch removes the SPDY support in favor of the new protocol.

CDN77: “You are right. HTTP/2 patch rewrites

|

|

Air Mozilla: Intern Presentations |

Bernardo Rittmeyer Jatin Chhikara Steven Englehardt Gabriel Luong Karim Benhmida Eduoard Oger Jonathan Almeida Huon Wilson Mariusz Kierski Koki Yoshida

|

|

Mozilla WebDev Community: Beer and Tell – August 2015 |

Once a month, web developers from across the Mozilla Project get together to spend an hour of overtime to honor our white-collar brethren at Amazon. As we watch our productivity fall, we find time to talk about our side projects and drink, an occurrence we like to call “Beer and Tell”.

There’s a wiki page available with a list of the presenters, as well as links to their presentation materials. There’s also a recording available courtesy of Air Mozilla.

openjck: Discord

openjck was up first and shared Discord, a Github webhook that scans pull requests for CSS compatibility issues. When it finds an issue, it leaves a comment on the offending line with a short description and which browsers are affected. The check is powered by doiuse, and projects can add a .doiuse file (using browserslist syntax) that specifies which browser versions they want to be tested against. Discord currently checks CSS and Stylus files.

The MDN team is looking for sites to test Discord out. Work on the site is currently suspended (which is why it’s a side project, openjck and friends won’t stop working on it) so that feedback can be gathered to determine where the site should go next. If you’re interested in trying out Discord, let groovecoder know!

peterbe: Activity and Fanout.io

Next up was peterbe, with an update to Activity. The site now uses Fanout.io and a message queue to improve how activity items are fetched from GitHub and other sources. The site queues up jobs to fetch data from the Github API, and as the jobs complete, they send their results to Fanout. Fanout’s JavaScript library maintains an open WebSocket with their service, and when Fanout receives the data from the completed jobs, it notifies the client of the new data, which gets written to localStorage and updates the React state. This allows Activity to remain structured as an offline-ready application while still receiving seamless updates if the user has an internet connection.

There’s a donation jar near the exit; for just $25 you can pay for an hour of time for an Amazon engineer to spend with their family. Checks may be made payable to No Questions Asked Laundry, LLC.

If you’re interested in attending the next Beer and Tell, sign up for the dev-webdev@lists.mozilla.org mailing list. An email is sent out a week beforehand with connection details. You could even add yourself to the wiki and show off your side-project!

See you next month!

https://blog.mozilla.org/webdev/2015/08/27/beer-and-tell-august-2015/

|

|

Matt Thompson: Impact |

Video recording of the Aug 26 Mozilla Learning community call

For the Mozilla Learning plan right now, we’re focused on impact. What impact will our advocacy and leadership work have in the world over the next three years? How do we state that in a way that’s memorable, manageable, measurable and motivational?

How do other orgs do it? As a way to think big and step back, we asked participants in Tuesday’s community call to give examples of organizations or projects that inspire them right now. Here’s our list.

Who inspires you?

- Free Code Camp — learn to code by helping non-profit organizations (Amira)

- 18F — kicking ass when it comes to bringing open source to government (Kaitlin)

- The Inter-Agency Network for Education in Emergencies — cool network and community of practice for 15,000 people teaching in refugee camps and other emergency settings around the world (Surman)

- The Engine Room — small and scrappy, but doing amazing work with teaching open tools for social change (Michelle)

- GDS — because they somehow manage to work like MoFo, even though they are part of Government (Adam)

- Keyboardio — open source mechanical keyboard with a wonderful backlight, shipped with a screwdriver so that you can tinker around and reprogram. (Shreyas)

- Born Accessible — thinking about web content as “born accessible.” (Emma)

- WikiSpeed — a non-profit that’s building open source, energy-efficient cars in 17 countries, with no org chart or management structure (@OpenMatt)

- NESTA — engaged in some interesting thought leadership that relates well to our work (Sam)

- Ocean Cleanup — addressing “The Great Pacific Garbage Patch” with business / philanthropy / sponsorship / science / data / youth vision all coming together to stem it (Rebecca)

- Conservation International — I’m digging their current campaign: “Nature doesn’t need people, people need nature” (Paul)

- Mercy for Animals — they take a big, often controversial topic and make it approachable — and they have a massive, engaged volunteer force (Lindsey)

- Truth and Reconciliation Commission Canada (Simona)

- Generation Squeeze — taking on the impossible task of advocating for worklife balance, childcare and affordable housing on a living wage (ErikaD)

- NYT documentary of bieber + skrillex + diplo – Love the focus on storytelling and combo of graphics / animation. (Cassie)

- model view culture — cranky and continuous analytic deconstructions of intersections between technology, inclusion, diversity with anger and no apologies and a paper journal that arrives on a regular basis. (@leahatplay)

- Colors magazine — open contribution (Jordan)

- the Unilever rapper campaign — because it was a long-stale pollution problem that was revitalized with creativity (Andrea)

- Hollaback — uses online tools to work with young people and confront street harassment (Sara)

- Craigslist — because their success is based on the assumption that most people are good. (David)

- Dark Mountain — thinking through how WebLit does / does not survive in the anthropocene. (Chad)

- NPR – They strike a successful balance between mass appeal and education. (Simon)

Takeaways?

The above examples are…

- Crisp. Our group was able to communicate the story for each of these projects — in their own words, off the top of their head, in a single sentence. That means the mission is telegraphic, simple and sticky.

- Viral. Each of these organizations has succeeded in creating an influential, mini-evangelist to spread their story for them: you!

- Edgy. Many of these examples have a bit of punk rock or social justice grit. They’re not wearing a bow tie.

- Diverse. There’s a broad range of stuff here, not just the usual tech / ed tech suspects. This is a party you’d want to be at.

- Real. There’s no jargon or planning language in any of the descriptions people provided — the language is authentic and human, because no one’s trying too hard. It’s just natural and unscripted.

Can we get to this same level of natural, edgy crispness for MoFo and our core strategies? Would others put us on a list like this? Food for thought.

|

|

Air Mozilla: German speaking community bi-weekly meeting |

https://wiki.mozilla.org/De/Meetings

https://air.mozilla.org/german-speaking-community-bi-weekly-meeting-20150827/

|

|

Andrew Halberstadt: Looking beyond Try Syntax |

Today marks the 5 year anniversary of try syntax. For the uninitiated, try syntax is a string that you put into your commit message which a parser then uses to determine the set of builds and tests to run on your try push. A common try syntax might look like this:

try: -b o -p linux -u mochitest -t none
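To make the idea of "a string that a parser reads" concrete, here is a rough sketch of how such a commit message could be split into flag and value pairs. It is not the real buildbot or taskcluster parser: the function name `parseTrySyntax`, the returned object shape and the assumption that values may be comma-separated are all invented for this illustration.

```javascript
// Hypothetical helper, NOT the actual try parser used by buildbot
// or taskcluster. It only splits "-flag value" pairs after "try:".
function parseTrySyntax(commitMessage) {
  var marker = 'try:';
  var start = commitMessage.indexOf(marker);
  if (start === -1) {
    return null; // no try syntax in this commit message
  }
  var tokens = commitMessage.slice(start + marker.length).trim().split(/\s+/);
  var options = {};
  for (var i = 0; i < tokens.length; i += 2) {
    var flag = tokens[i];
    var value = tokens[i + 1];
    if (flag.charAt(0) !== '-' || value === undefined) {
      break; // stop at anything that is not a "-flag value" pair
    }
    // Assumption: a value may list several items separated by commas.
    options[flag.slice(1)] = value.split(',');
  }
  return options;
}

console.log(parseTrySyntax('try: -b o -p linux -u mochitest -t none'));
// => { b: [ 'o' ], p: [ 'linux' ], u: [ 'mochitest' ], t: [ 'none' ] }
```

Even this toy version hints at how many decisions the string format leaves to each consumer, such as where the syntax ends and how malformed pairs are treated.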

Since inception, it has been a core part of the Mozilla development workflow. For many years it has served us well, and even today it serves us passably. But it is almost time for try syntax to don the wooden overcoat, and this post will explain why.

A brief history on try syntax

In the old days, pushing to try involved a web interface called sendchange.cgi. Pushing is probably the wrong word to use, as at no point did the process involve version control. Instead, patches were uploaded to the web service, which in turn invoked a buildbot sendchange with all the required arguments. Like today, the try server was often overloaded, sometimes taking over 4 hours for results to come back. Unlike today, there was no way to pick and choose which builds and tests you wanted; every try push ran the full set.

The obvious solution was to create a mechanism for people to do that. It was while brainstorming this problem that ted, bhearsum and jorendorff came up with the idea of encoding this information in the commit message. Try syntax was first implemented by lsblakk in bug 473184 and landed on August 27th, 2010. It was a simple time; the list of valid builders could fit into a single 30 line config file; Fennec still hadn't picked up full steam; and B2G wasn't even a figment of anyone's wildest imagination.

It's probably not a surprise to anyone that as time went on, things got more complicated. As more build types, platforms and test jobs were added, the try syntax got harder to memorize. To help deal with this, lsblakk created the trychooser syntax builder just a few months later. In 2011, pbiggar created the trychooser mercurial extension (which was later forked and improved by sfink). These tools were (and still are) the canonical way to build a try syntax string. Little has changed since then, with the exception of the mach try command that chmanchester implemented around June 2015.

One step forward, two steps back

Since around 2013, the number of platforms and test configurations has grown at an unprecedented rate. So much so that the various trychooser tools have been perpetually out of date. Any time someone got around to adding a new job to the tools, two other jobs had already sprung up in its place. Another problem caused by this rapid growth was that try syntax became finicky. There were a lot of edge cases, exceptions to the rule and arbitrary aliases. Often jobs would mysteriously not show up when they should, or mysteriously show up when they shouldn't.

Both of those problems were exacerbated by the fact that the actual try parser code has never had a definite owner. Since it was first created, there have never been more than 11 commits in a year. There have been only two commits to date in 2015.

Two key insights

At this point, there are two things that are worth calling out:

- Generating try strings from memory is getting harder and harder, and for many cases is nigh impossible. We rely more and more on tools like trychooser.

- Try syntax is sort of like an API on top of which these tools are built.

What this means is that primary generators of try syntax have shifted from humans to tools. A command line encoded in a commit message is convenient if you're a human generating the syntax manually. But as far as tooling goes, try syntax is one god awful API. Not only do the tools need to figure out the magic strings, they need to interact with version control, create an empty commit and push it to a remote repository.

There is also tooling on the other side of the see saw, things that process the try syntax post push. We've already seen buildbot's try parser but taskcluster has a separate try parser as well. This means that your try push has different behaviour, depending on whether the jobs are scheduled in buildbot or taskcluster. There are other one off tools that do some try syntax parsing as well, including but not limited to try tools in mozharness, the try re-trigger bot and the AWSY dashboard. These tools are all forced to share and parse the same try syntax string, so they have to be careful not to step on each other's toes.

The takeaway here is that for tools, a string encoded as a commit message is quite limiting and a lot less convenient than say, calling a function in a library.

Despair not, young Padawan

So far we've seen how try syntax is finicky, how the tools that use it are often outdated and how it fails as an API. But what is the alternative? Fortunately, over the course of 2015 a lot of progress has been made on projects that for the first time, give us a viable alternative to try syntax.

First and foremost, is mozci. Mozci, created by armenzg and adusca, is a tool that hooks into the build api (with early support for taskcluster as well). It can do things like schedule builds and tests against any arbitrary pushes, and is being used on the backend for tools like adusca's try-extender with integration directly into treeherder planned.

Another project that improves the situation is taskcluster itself. With taskcluster, job configuration and scheduling all lives in tree. Thanks to bhearsum's buildbot bridge, we can even use taskcluster to schedule jobs that still live in buildbot. There's an opportunity here to leverage these new tools in conjunction with mozci to gain complete and total control over how jobs are scheduled on try.

Finally I'd like to call out mach try once more. It is more than a thin wrapper around try syntax that handles your push for you. It actually lets you control how the harness gets run within a job. For now this is limited to test paths and tags, but there is a lot of potential to do some cool things here. One of the current limiting factors is the unexpressiveness of the try syntax API. Hopefully this won't be a problem too much longer. Oh yeah, and mach try also works with git.

A glimpse into the crystal ball

So we have several different projects all coming together at once. The hard part is figuring out how they all tie in together. What do we want to tackle first? How might the future look? I want to be clear that none of this is imminent. This is a look into what might be, not what will be.

There are two places we mainly care about scheduling jobs on try.

First imagine you push your change to try. You open up treeherder, except no jobs are scheduled. Instead you see every possible job in a distinct greyed out colour. Scheduling what you want is as simple as clicking the desired job icons. Hold on a sec, you don't have to imagine it. Adusca already has a prototype of what this might look like. Being able to schedule your try jobs this way has a huge benefit: you don't need to mentally correlate job symbols to job names. It's as easy as point and click.

Second, is pushing a predefined set of jobs to try from the command line, similar to how things work now. It's often handy to have the try command for a specific job set in your shell history and it's a pain to open up treeherder for a simple push that you've memorized and run dozens of times. There are a few improvements we can do here:

- We can move the curses ui feature of the hg trychooser extension into mach try.

- We can use mozci to automatically keep the known list of jobs up to date. This is useful for things like generating the curses ui on the fly, validation and tab completion.

- We can use mozci + taskcluster + buildbot bridge to provide a much more expressive API for scheduling jobs. For example, you could easily push a T-style try run.

- We can expand some of the functionality in mach try for controlling how the harnesses are run. For example, we could use it to enable some of the debugging features of the harness while investigating test failures.

Finally for those who are stuck in their ways, it should still be possible to have a "classic try syntax" front-end to the new mozci backend. As large as this change sounds, it could be mostly transparent to the user. While I'm certainly not a fan of the current try syntax, there's no reason to begrudge the people who are.

Closing words

Try syntax has served us well for 5 long years. But it's almost time to move on to something better. Soon a lot of new avenues will be open and tools will be created that none of us have thought of yet. I'd like to thank all of the people mentioned in this post for their contributions in this area and I'm very excited for what the future holds.

The future is bright, and change is for the better.

|

|

Air Mozilla: Web QA Weekly Meeting |

This is our weekly gathering of Mozilla's Web QA team filled with discussion on our current and future projects, ideas, demos, and fun facts.


|

|

Mike Taylor: Dynamically updating <meta viewport> in the year 2015. |

18 months after writing the net-award-winning article Dynamically updating <meta viewport> in the year 2014, I wrote some patches for Firefox for Android to make it possible to update a page's existing meta[name=viewport] element's content attribute and have the viewport be updated accordingly.

So when version 43 ships (at some point in 2015), code like this will work in more places than it did in 2014:

if(screen.width < 760) {
  viewport = document.querySelector("meta[name=viewport]");
  viewport.setAttribute('content', 'width=768');
}

if(screen.width > 760) {
  viewport = document.querySelector("meta[name=viewport]");
  viewport.setAttribute('content', 'width=1024');
}

I'll just go ahead and accept the 2015 netaward now, thanks for the votes everyone, wowowow.

https://miketaylr.com/posts/2015/08/dynamically-updating-meta-viewport-in-2015.html

|

|

Ian Bicking: Conway’s Corollary |

Conway’s Law states:

organizations which design systems are constrained to produce designs which are copies of the communication structures of these organizations

I’ve always read this as an accusation: we are doomed to recreate the structure of our organizations in the structure of software projects. And further: projects cannot become their True Selves, cannot realize the most superior design, unless the organization is itself practically structureless. That only without the constraints of structure can the engineer make the truly correct choices. Michelangelo sculpted from marble, a smooth and uniform stone, not from an aggregate, where any hit with the chisel might reveal only the chaotic structure and fault lines of the rock and not his vision.

But most software is built, not revealed. I’m starting to believe that Conway’s observation is a corollary, not so clearly cause-and-effect. Maybe we should work with it, not struggle against it. (With age I’ve lost the passion for pointless struggle.) It’s not that developers can’t imagine a design that goes contrary to the organizational structure, it’s that they can’t ship those designs. What we’re seeing is natural selection. And when through force of will such a design is shipped, that it survives and is maintained depends on whether the organization changed in the process, whether a structure was created to support that design.

A second skepticism: must a particular construction and modularity of code be paramount? Code is malleable, and its modularity is for the purpose of humans. Most of what we do disappears anyway when the machine takes over – functions are inlined, types erased, the pieces become linked, and the machine doesn’t care one whit about everything we’ve done to make the software comprehensible. Modularity is to serve our purposes. And sometimes organization structure serves a purpose; we change it to meet goals, and we shouldn’t assume the people who change it are just busybodies. But those changes are often aspirational, and so those changes are setting ourselves up for conflict as the new structure probably does not mirror the software design.

If the parts of an organization (e.g. teams, departments, or subdivisions) do not closely reflect the essential parts of the product, or if the relationship between organizations do not reflect the relationships between product parts, then the project will be in trouble… Therefore: Make sure the organization is compatible with the product architecture – Coplien and Harrison

So change the architecture! There’s more than one way to resolve these tensions.

A last speculation: as described in the Second System Effect we see teams rearchitect systems with excessive modularity and abstraction. Maybe because they remember all these conflicts, they remember all the times organizational structure and product motivations didn’t match architecture. The team makes an incorrect response by creating an architecture that can simultaneously embody all imagined organizational structures, a granularity that embodies not just current organizational tensions but also organizational boundaries that have come and gone. But the value is only in predicting future changes in structure, and only then if you are lucky.

Maybe we should look at Conway’s Law as a prescription: projects should only have hard boundaries where there are organizational boundaries. Soft boundaries and definitions still exist everywhere: just like we give local variables meaningful names (even though outside the function no one can tell the difference), we might also create abstractions and modularity that serve immediate and concrete purposes. But they should only be built for the moment and the task at hand. Extra effort should be applied to being ready to refactor in the future, not predicting and embodying those predictions in present modularity. Perhaps this is another rephrasing of Agile and YAGNI. Code is a liability, agency over that code is an asset.

http://www.ianbicking.org/blog/2015/08/conways-corollary.html

|

|

Air Mozilla: Bay Area Rust Meetup August 2015 |

The SF Rust Meetup for August.


|

|

Air Mozilla: Bugzilla Development Meeting |

Help define, plan, design, and implement Bugzilla's future!


https://air.mozilla.org/bugzilla-development-meeting-20150826/

|

|

Tantek Celik: Vacation Mode @Yahoo? How About Evening Mode, Original Content Mode, and Walkie Talkies With Texting? |

Called it. I recently asked “When did you last eat without using digital devices at all?” and proposed a “dumb camera mode” where you could only take/review photos/videos and perhaps also write/edit text notes on your otherwise “smart” phone that usually made you dumber through notification distractions.

Five days later @Yahoo published a news article titled: “The One Feature Every Smartphone Needs: Vacation Mode” — something I’m quite familiar with, having recently completed a one week Alaska cruise during which I was nearly completely off the grid.

Evening Mode rather than Vacation Mode

Despite the proposals in the Yahoo article, I still think a “dumb” capture/view mode would still be better on a vacation, where all you could do with your device was capture photos/text/GPS and subsequently view/edit what you captured. Even limited notifications distract and detract from a vacation.

However, the idea of “social media updates only from people you’re close to, either geographically or emotionally” would be useful when not on vacation. I'd use that as an Evening Mode most nights.

Original content rather than “shares”

In addition, the ability to filter and only see “original content — no shared news stories on Facebook, no retweets on Twitter” would be great as reading prioritization — I only have a minute, show me only original content, or show me original content from the past 24h before any (re)shares/bookmarks etc.

This strong preference to prioritize viewing original content is I think what has moved me to read my Instagram feed, and in contrast nearly ignore my Twitter feed / home page, as well as actively avoid Facebook’s News Feed.

Ideally I’d use an IndieWeb reader, but they too have yet to find a way to distinguish original content posts in contrast to bookmarks or brief quotes / commentary / shares of “news” articles.

Tame your inbox? No, vacation should mean no inbox

The Yahoo article suggests: “tame your inbox in the same fashion, showing messages from your important contacts as they arrive but hiding everything else” and completely misses the point of disconnecting from all inbox stress while on vacation.

SMS smart phone texting frustrations vs stress-free iPod

While I was on the Alaska cruise, other members of my family did txt/SMS each other a bit, but due to the unreliability of the shipboard cell tower, it was more frustrating to them than not.

With my iPod, I completely opted out of all such electronic text comms, and thus never stressed about checking my device to coordinate.

IRL coordination FTW

Instead I coordinated as I remember doing as a kid (and even teenager): we made plans when we were together, about the next time and place we would meet up, and our general plans for the day. Then we’d adjust our plans by having *in-person* conversations whenever we next saw each other.

Or if we needed to find each other, we would wander around the ship, to our staterooms, the pool decks, the buffet, the gym, knowing that it was a small enough world that we’d likely run into each other, which we did several times.

During the entire trip there was only one time that I lost touch with everyone and actually got frustrated. But even that just took a bit longer of a ship search. Of course even for that situation there are solutions.

Walkie Talkies!

My nephews and niece used walkie-talkies that their father brought on board, and that actually worked in many ways better than anyone’s fancy smart phones.

Except walkie-talkies can be a bit intrusive.

Walkie Texting?

My question is:

If walkie-talkies can send high quality audio back and forth in broadcast mode, why can’t they broadcast short text messages to everyone else on that same “channel” as well?

Then I found this on Amazon:

TriSquare eXRS TSX300-2VP 900MHz FHSS Digital Two-Way Radio

- Digital Two-Way Radio

- spread spectrum and encrypted

- text messaging between radios

(Discontinued by Manufacturer)

Anybody have one or a similar two-way radio that also supports texting?

Or would it be possible to do peer-to-peer audio/texting purely in software on smart “phones” peer-to-peer over bluetooth or wifi without having to go through a central router/tower?

That would seem ideal for a weekend road trip, say to Tahoe, or to the desert, or perhaps even for camping, again, maybe in the desert, like when you choose to escape from the rest of civilization for a week or more.

http://tantek.com/2015/238/b1/smartphone-vacation-mode-called-it

|

|

Nicholas Nethercote: What does the OS X Activity Monitor’s “Energy Impact” actually measure? |

[Update: this post has been updated with significant new information. Look to the end.]

Activity Monitor is a tool in Mac OS X that shows a variety of real-time process measurements. It is well-known and its “Energy Impact” measure (which was added in Mac OS X 10.9) is often consulted by users to compare the power consumption of different programs. Apple support documentation specifically recommends it for troubleshooting battery life problems, as do countless articles on the web.

However, despite its prominence, the exact meaning of the “Energy Impact” measure is unclear. In this blog post I use a combination of code inspection, measurements, and educated guesses to hypothesize how it is computed in Mac OS X 10.9 and 10.10.

What is known about “Energy Impact”?

The following screenshot shows the Activity Monitor’s “Energy” tab.

There are no units given for “Energy Impact” or “Avg Energy Impact”.


The Activity Monitor documentation says the following.

Energy Impact: A relative measure of the current energy consumption of the app. Lower numbers are better.

Avg Energy Impact: The average energy impact for the past 8 hours or since the Mac started up, whichever is shorter.

That is vague. Other Apple documentation says the following.

The Energy tab of Activity Monitor displays the Energy Impact of each open app based on a number of factors including CPU usage, network traffic, disk activity and more. The higher the number, the more impact an app has on battery power.

More detail, but still vague. Enough so that various other people have wondered what it means. The most precise description I have found says the following.

If my recollection of the developer presentation slide on App Nap is correct, they are an abstract unit Apple created to represent several factors related to energy usage meant to compare programs relatively.

I don’t believe you can directly relate them to one simple unit, because they are from an arbitrary formula of multiple factors.

[…] To get the units they look at CPU usage, interrupts, and wakeups… track those using counters and apply that to the energy column as a relative measure of an app.

This sounds plausible, and we will soon see that it appears to be close to the truth.

A detour: top

First, a necessary detour. top is a program that is similar to Activity Monitor, but it runs from the command-line. Like Activity Monitor, top performs periodic measurements of many different things, including several that are relevant to power consumption: CPU usage, wakeups, and a “power” measure. To see all these together, invoke it as follows.

top -stats pid,command,cpu,idlew,power -o power -d

(A non-default invocation is necessary because the wakeups and power columns aren’t shown by default unless you have an extremely wide screen.)

It will show real-time data, updated once per second, like the following.

  PID  COMMAND            %CPU  IDLEW  POWER
50300  firefox            12.9    278   26.6
76256  plugin-container    3.4    159   11.3
  151  coreaudiod          0.9     68    4.3
76505  top                 1.5      1    1.6
76354  Activity Monitor    1.0      0    1.0

The PID, COMMAND and %CPU columns are self-explanatory.

The IDLEW column is the number of package idle exit wakeups. These occur when the processor package (containing the cores, GPU, caches, etc.) transitions from a low-power idle state to the active state. This happens when the OS schedules a process to run due to some kind of event. Common causes of wakeups include scheduled timers going off and blocked I/O system calls receiving data.

What about the POWER column? top is open source, so its meaning can be determined conclusively by reading the powerscore_insert_cell function in the source code. (The POWER measure was added to top in OS X 10.9.0 and the code has remained unchanged all the way through to OS X 10.10.2, which is the most recent version for which the code is available.)

The following is a summary of what the code does, and it’s easier to understand if the %CPU and POWER computations are shown side-by-side.

|elapsed_us| is the length of the sample period
|used_us| is the time this process was running during the sample period

%CPU = (used_us * 100.0) / elapsed_us

POWER = if is_a_kernel_process()
            0
        else
            ((used_us + IDLEW * 500) * 100.0) / elapsed_us

The %CPU computation is as expected.

The POWER computation is a function of CPU and IDLEW. It’s basically the same as %CPU but with a “tax” of 500 microseconds for each wakeup and an exception for kernel processes. The value of this function can easily exceed 100 — e.g. a program with zero CPU usage and 3,000 wakeups per second will have a POWER score of 150 — so it is not a percentage. In fact, POWER is a unitless measure because it is a semi-arbitrary combination of two measures with incompatible units.
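The summary above translates almost line for line into a few lines of Python. This is only a sketch of the summarized formula, not a copy of top's source; the function and parameter names are my own, and the 500 microsecond tax is exposed as a parameter purely for experimentation.

```python
ONE_SECOND_US = 1_000_000


def cpu_percent(used_us, elapsed_us):
    """%CPU: share of the sample period this process spent running."""
    return (used_us * 100.0) / elapsed_us


def power_score(used_us, idle_wakeups, elapsed_us,
                is_kernel_process=False, wakeup_tax_us=500.0):
    """POWER as summarized above: %CPU plus a fixed tax per idle wakeup."""
    if is_kernel_process:
        # Kernel processes always score zero.
        return 0.0
    return ((used_us + idle_wakeups * wakeup_tax_us) * 100.0) / elapsed_us


# Zero CPU time but 3,000 wakeups in a one-second sample.
print(power_score(0, 3000, ONE_SECOND_US))  # 150.0
```

The last line reproduces the example in the text: a process that does nothing except wake up 3,000 times per second still scores 150.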

Back to Activity Monitor and “Energy Impact”

MacBook Pro running Mac OS X 10.9.5

First, I did some measurements with a MacBook Pro with an i7-4960HQ processor running Mac OS X 10.9.5.

I did extensive testing with a range of programs: ones that trigger 100% CPU usage; ones that trigger controllable numbers of idle wakeups; ones that stress the memory system heavily; ones that perform frequent disk operations; and ones that perform frequent network operations.

In every case, Activity Monitor’s “Energy Impact” was the same as top’s POWER measure. Every indication is that the two are computed identically on this machine.

For example, consider the data in the following table. The data was gathered with a small test program that fires a timer N times per second; other than extreme cases (see below) each timer firing causes an idle platform wakeup.

---------------------------------------------------------------
    Hz  CPU ms/s      Intr  Pkg Idle  Pkg Power  Act.Mon.   top
---------------------------------------------------------------
     2      0.14      2.00      1.80      2.30W       0.1   0.1
   100      4.52    100.13     95.14      3.29W         5     5
   500      9.26    499.66    483.87      3.50W        25    25
  1000     19.89   1000.15    978.77      5.23W        50    50
  5000     17.87   4993.10   4907.54     14.50W       240   240
 10000     32.63   9976.38   9194.70     17.61W       485   480
 20000     66.66  19970.95  17849.55     21.81W       910   910
 30000     99.62  28332.79  25899.13     23.89W      1300  1300
 40000    132.08  37255.47  33070.19     24.43W      1610  1650
 50000    160.79  46170.83  42665.61     27.31W      2100  2100
 60000    281.19  58871.47  32062.39     29.92W      1600  1650
 70000    276.43  67023.00  14782.03     31.86W       780   750
 80000    304.16  81624.60    258.22     35.72W        43    45
 90000    333.20  90100.26    153.13     37.93W        40    42
100000    363.94  98789.49     44.18     39.31W        38    38

The table shows a variety of measurements for this program for different values of N. Columns 2–5 are from powermetrics, and show CPU usage, interrupt frequency, package idle wakeup frequency, and package power, respectively. Column 6 is Activity Monitor’s “Energy Impact”, and column 7 is top’s POWER measurement. Columns 6 and 7 (which are approximate measurements) are identical, modulo small variations due to the noisiness of these measurements.

MacBook Air running Mac OS X 10.10.4

I also tested a MacBook Air with an i5-4250U processor running Mac OS X 10.10.4. The results were substantially different.

---------------------------------------------------------------
    Hz  CPU ms/s      Intr  Pkg Idle  Pkg Power  Act.Mon.   top
---------------------------------------------------------------
     2      0.21      2.00      2.00      0.63W       0.0   0.1
   100      6.75     99.29     96.69      0.81W       2.4   5.2
   500     22.52    499.40    475.04      1.15W        10    25
  1000     44.07    998.93    960.59      1.67W        21    48
  3000    109.71   3001.05   2917.54      3.80W        60   145
  5000     65.02   4996.13   4781.43      3.79W        90   230
  7500    107.53   7483.57   7083.90      4.31W       140   350
 10000    144.00   9981.25   9381.06      4.37W       190   460

The results from top are very similar to those from the other machine. But Activity Monitor’s “Energy Impact” no longer matches top’s POWER measure. As a result it is much harder to say with confidence what “Energy Impact” represents on this machine. I tried tweaking the previous formula so that the idle wakeup “tax” drops from 500 microseconds to 180 or 200 microseconds and that gives results that appear to be in the ballpark but don’t match exactly. I’m unsure whether Activity Monitor is doing all its measurements at the same time. But it’s also quite possible that other inputs have been added to the function that computes “Energy Impact”.
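The kind of tweak described above can be replayed against a few rows of the MacBook Air table. The sketch below assumes that the powermetrics "CPU ms/s" column can stand in for the process's running time and "Pkg Idle" for its wakeup count; both are simplifications, and the helper name is hypothetical.

```python
# (timer Hz, CPU ms/s, package idle wakeups/s, Activity Monitor, top)
# copied from three rows of the MacBook Air table.
AIR_ROWS = [
    (100, 6.75, 96.69, 2.4, 5.2),
    (1000, 44.07, 960.59, 21, 48),
    (10000, 144.00, 9381.06, 190, 460),
]


def estimate(cpu_ms_per_s, wakeups_per_s, wakeup_tax_us):
    """Apply the POWER-style formula to one second of activity."""
    used_us = cpu_ms_per_s * 1000.0  # assumption: CPU ms/s is running time
    return (used_us + wakeups_per_s * wakeup_tax_us) * 100.0 / 1_000_000


for hz, cpu, idle, act_mon, top_power in AIR_ROWS:
    print(f"{hz:>6} Hz  "
          f"tax=500us: {estimate(cpu, idle, 500):6.1f} (top {top_power})  "
          f"tax=200us: {estimate(cpu, idle, 200):6.1f} (Act.Mon. {act_mon})")
```

With a 500 microsecond tax the estimates land near top's column, and with 200 microseconds they land near Activity Monitor's, without matching either exactly.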

What about “Avg Energy Impact”?

What about the “Avg Energy Impact”? It seems reasonable to assume it is computed in the same way as “Energy Impact”, but averaged over a longer period. In fact, we already know that period from the Apple documentation that says it is the “average energy impact for the past 8 hours or since the Mac started up, whichever is shorter.”

Indeed, when the Energy tab of Activity Monitor is first opened, the “Avg Energy Impact” column is empty and the title bar says “Activity Monitor (Processing…)”. After a few seconds the “Avg Energy Impact” column is populated with values and the title bar changes to “Activity Monitor (Applications in last 8 hours)”. If you have top open during those 5–10 seconds you can see that systemstats is running and using a lot of CPU, and so presumably the measurements are obtained from it.

systemstats is a program that runs all the time and periodically measures, among other things, CPU usage and idle wakeups for each running process (visible in the “Processes” section of its output). I’ve done further tests that indicate that the “Avg Energy Impact” is almost certainly computed using the same formula as “Energy Impact”. The difference is that the measurements are from the past 8 hours of wake time (i.e. if a laptop is closed for several hours and then reopened, those hours are not included in the calculation), as opposed to the 1, 2 or 5 seconds of wake time used for “Energy Impact”.
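To make the wake-time window concrete, here is a minimal sketch of how such an average could be computed. It is not how systemstats or Activity Monitor are implemented; the sample layout and every name in it are assumptions, and it reuses the 10.9-style formula with its 500 microsecond tax.

```python
EIGHT_HOURS_S = 8 * 60 * 60


def average_energy_impact(samples, window_s=EIGHT_HOURS_S,
                          wakeup_tax_us=500.0):
    """Average the POWER-style score over the most recent wake time.

    `samples` is a list of (awake_seconds, used_us, idle_wakeups) tuples,
    oldest first. Only awake intervals are recorded, so time spent asleep
    never counts towards the window.
    """
    awake_s = used_us = wakeups = 0.0
    for sample_s, sample_used_us, sample_wakeups in reversed(samples):
        if awake_s >= window_s:
            break  # the window is already full of more recent wake time
        awake_s += sample_s
        used_us += sample_used_us
        wakeups += sample_wakeups
    if awake_s == 0:
        return 0.0
    return (used_us + wakeups * wakeup_tax_us) * 100.0 / (awake_s * 1_000_000)


# Two ten-minute samples, each with 10% CPU usage and no wakeups.
print(average_energy_impact([(600, 60_000_000, 0), (600, 60_000_000, 0)]))
```

Because only awake intervals are fed in, closing the laptop for several hours simply leaves a gap between samples instead of diluting the average.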

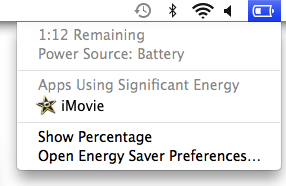

Battery status menu

Even more prominent than Activity Monitor is OS X’s battery status menu. When you click on the battery icon in the OS X menu bar you get a drop-down menu which includes a list of “Apps Using Significant Energy”.

How is this determined? When you open this menu for the first time in a while it says “Collecting Power Usage Information” for a few seconds, and if you have top open during that time you see that, once again, systemstats is running and using a lot of CPU. Furthermore, if you click on an application name in the menu Activity Monitor will be opened and that application’s entry will be highlighted. Based on these facts it seems reasonable to assume that “Energy Impact” is again being used to determine which applications show up in the battery status menu.

I did some more tests (on my MacBook Pro running 10.9.5) and it appears that once an energy-intensive application is started it takes about 20 or 30 seconds for it to show up in the battery status menu. And once the application stops using high amounts of energy I’ve seen it take between 4 and 10 minutes to disappear. The exception is if the application is closed, in which case it disappears immediately.

Finally, I tried to determine the significance threshold. It appears that a program with an “Energy Impact” of roughly 20 or more will eventually show up as significant, and programs that have much higher “Energy Impact” values tend to show up more quickly.

All of these battery status menu observations are difficult to make reliably and so should be treated with caution. They may also be different in OS X 10.10. It is clear, however, that the window used by the battery status menu is measured in seconds or minutes, which is much less than the 8 hour window used for “Avg Energy Impact”.

An aside: systemstats is always running on OS X. The particular invocation used for the long-running instance (the one used by both Activity Monitor and the battery status menu) takes the undocumented --xpc flag. When I tried running it with that flag I got an error message saying “This mode should only be invoked by launchd”. So it’s hard to know how often it’s making measurements. The output from vanilla command-line invocations indicates it’s about every 10 minutes.

But it’s worth noting that systemstats has a -J option which causes the CPU usage and wakeups for child processes to be attributed to their parents. It seems likely that the --xpc option triggers the same behaviour because the Activity Monitor does not show “Avg Energy Impact” for child processes (as can be seen in the screenshot above for the login, bash and vim processes that are children of the Terminal process). This hypothesis also matches up with the battery status menu, which never shows child processes. One consequence of this is that if you ssh into a Mac and run a power-intensive program from the command line it will not show up in Activity Monitor’s energy tab or the battery status menu, because it’s not attributable to a top-level process such as Terminal! Such processes will show up in top and in Activity Monitor’s CPU tab, however.
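The attribution of child processes to their parents can be pictured as a roll-up over the process tree. The sketch below only illustrates that idea; it is not how systemstats works internally, the pids and scores are made up, and treating "parent not in the table" as the definition of a top-level process is my own simplification.

```python
def roll_up_to_top_level(processes):
    """Sum each process's score into its top-level ancestor.

    `processes` maps pid -> (parent_pid, score). A process counts as
    top-level here when its parent is not in the table (an assumption).
    """
    def top_level_ancestor(pid):
        seen = {pid}
        while True:
            parent = processes[pid][0]
            # Stop at the root, or if the table contains a parent cycle.
            if parent not in processes or parent in seen:
                return pid
            seen.add(parent)
            pid = parent

    totals = {}
    for pid, (_parent, score) in processes.items():
        root = top_level_ancestor(pid)
        totals[root] = totals.get(root, 0.0) + score
    return totals


# Hypothetical pids and scores, mirroring Terminal -> login -> bash -> vim.
processes = {
    100: (1, 1.0),    # Terminal (its parent, pid 1, is not in the table)
    101: (100, 0.1),  # login
    102: (101, 0.2),  # bash
    103: (102, 3.0),  # vim
}
print(roll_up_to_top_level(processes))
```

In this toy table everything ends up under pid 100, so only the Terminal row would carry a value, which is consistent with the children showing no “Avg Energy Impact” of their own.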

How good a measure is “Energy Impact”?

We’ve now seen that “Energy Impact” is used widely throughout OS X. How good a measure is it?

The best way to measure power consumption is to actually measure power consumption. One way to do this is to use an ammeter, but this is difficult. Another way is to measure how long it takes for the battery to drain, which is easier but slow and requires steady workloads. Alternatively, recent Intel hardware provides high-quality estimates of processor and memory power consumption that are relatively easy to obtain.

These approaches all have the virtue of measuring or estimating actual power consumption (i.e. Watts). But the big problem is that they are machine-wide measures that cannot be used on a per-process basis. This is why Activity Monitor uses several proxy measures — ones that correlate with power consumption — which can be measured on a per-process basis. “Energy Impact” is a hybrid of at least two different proxy measures: CPU usage and wakeup frequency.

The main problem with this is that “Energy Impact” is an exaggerated measure. Look at the first table above, with data from the 10.9.5 machine. The variation in the “Pkg Power” column — which shows the package power from the above-mentioned Intel hardware estimates — is vastly smaller than the variation in the “Energy Impact” measurements. For example, going from 1,000 to 10,000 wakeups per second increases the package power by 3.4x, but the “Energy Impact” increases by 9.7x, and the skew gets even worse at higher wakeup frequencies. “Energy Impact” clearly weights wakeups too heavily. (In the second table, with data from the 10.10.4 machine, the weight given to wakeups is less, but still too high.)

Also, in the first table “Energy Impact” actually decreases when the timer frequency gets high enough. Presumably this is because the timer interval is so short that the OS has trouble putting the package into an idle power state. This leads to the absurd result that firing a timer at 1,000 Hz has about the same “Energy Impact” value as firing one at 100,000 Hz, when the package power of the latter is about 7.5x higher.
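The ratios quoted in the last two paragraphs come straight from three rows of the 10.9.5 table. A short script makes the arithmetic explicit; the dictionary layout and function name are just for illustration.

```python
# (package power in watts, Activity Monitor "Energy Impact") for three
# rows of the 10.9.5 table, keyed by timer frequency in Hz.
ROWS = {
    1_000: (5.23, 50),
    10_000: (17.61, 485),
    100_000: (39.31, 38),
}


def growth(from_hz, to_hz):
    """Return (package power ratio, Energy Impact ratio) between two rows."""
    from_watts, from_impact = ROWS[from_hz]
    to_watts, to_impact = ROWS[to_hz]
    return to_watts / from_watts, to_impact / from_impact


for from_hz, to_hz in [(1_000, 10_000), (1_000, 100_000)]:
    watts_ratio, impact_ratio = growth(from_hz, to_hz)
    print(f"{from_hz} -> {to_hz} Hz: "
          f"power x{watts_ratio:.1f}, Energy Impact x{impact_ratio:.2f}")
```

Going from 1,000 Hz to 10,000 Hz multiplies package power by about 3.4 and “Energy Impact” by 9.7. Going from 1,000 Hz to 100,000 Hz multiplies package power by about 7.5 while “Energy Impact” drops to roughly three quarters of its value.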

Having said all that, it’s understandable why Apple uses formulations of this kind for “Energy Impact”.

- CPU usage and wakeup frequency are probably the two most important factors affecting a process’s power consumption, and they are factors that can be measured on a per-process basis.

- Having a single measure makes things easy for users; evaluating the relative importance of multiple measures is more difficult.

- The exception for kernel processes (which always have an “Energy Impact” of 0) avoids OS X itself being blamed for high power consumption. This makes a certain amount of sense — it’s not like users can close the kernel — while also being somewhat misleading.

If I were in charge of Apple’s Activity Monitor product, I’d do two things.

- I would compute a new formula for “Energy Impact”. I would measure the CPU usage, wakeup frequency (and any other inputs) and actual power consumption for a range of real-world programs, on a range of different Apple machines. From this data, hopefully a reasonably accurate model could be constructed. It wouldn’t be perfect, and it wouldn’t need to be perfect, but it should be possible to come up with something that reflects actual power consumption better than the existing formulations. Once formulated, I would then test the new version against synthetic microbenchmarks, like the ones I used above, to see how it holds up. Given the choice between accurately modelling real-world applications and accurately modelling synthetic microbenchmarks, I would definitely favour the former.

- I would publicly document the formula that is used so that developers can actually tell how their applications are being evaluated, and can optimize for that measure. You may think “but then developers will be optimizing for a synthetic measure rather than a real one” and you’d be right. That’s an inevitable consequence of giving a synthetic measure such prominence, and all the more reason for improving it.
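As a rough illustration of the first suggestion, the sketch below fits a linear model of power against CPU usage and wakeup frequency using ordinary least squares. The linear form, the function names and the choice of inputs are all assumptions on my part. The sample rows are the microbenchmark measurements from the 10.9.5 table, used here only to exercise the code; a real model would be fitted to real-world programs on a range of machines.

```python
def solve(a, b):
    """Solve the square system a*x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting: move the largest remaining entry into place.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("measurements do not determine a unique model")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_linear_model(rows):
    """Least-squares fit of watts ~ base + a * cpu_ms + b * wakeups.

    `rows` holds (cpu_ms_per_s, wakeups_per_s, watts) measurements.
    Returns [base, a, b] from the normal equations (X^T X) beta = X^T y.
    """
    n = 3
    xtx = [[0.0] * n for _ in range(n)]
    xty = [0.0] * n
    for cpu, wakeups, watts in rows:
        features = (1.0, cpu, wakeups)
        for i in range(n):
            xty[i] += features[i] * watts
            for j in range(n):
                xtx[i][j] += features[i] * features[j]
    return solve(xtx, xty)


# (CPU ms/s, Pkg Idle, Pkg Power) from the first six rows of the table.
MEASURED_ROWS = [
    (0.14, 1.80, 2.30), (4.52, 95.14, 3.29), (9.26, 483.87, 3.50),
    (19.89, 978.77, 5.23), (17.87, 4907.54, 14.50), (32.63, 9194.70, 17.61),
]
base, per_cpu_ms, per_wakeup = fit_linear_model(MEASURED_ROWS)
print(base, per_cpu_ms, per_wakeup)
```

Package power is machine-wide while the inputs are per-process, so a real calibration would also have to account for everything else running at the time.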

Conclusion

“Energy Impact” is a flawed measure of an application’s power consumption. Nonetheless, it’s what many people use at this moment to evaluate the power consumption of OS X applications, so it’s worth understanding. And if you are an OS X application developer who wants to reduce the “Energy Impact” of your application, it’s clear that it’s best to focus first on reducing wakeup frequency, and then on reducing CPU usage.

Because Activity Monitor is closed source code I don’t know if I’ve characterized “Energy Impact” exactly correctly. The evidence given above indicates that I am close on 10.9.5, but not as close on 10.10.4. I’d love to hear if anybody has evidence that either corroborates or contradicts the conclusions I’ve made here. Thank you.

Update

A commenter named comex has done some great detective work and found that on 10.10 and 10.11 Activity Monitor consults a Mac model-specific file in the /usr/share/pmenergy/ directory. (Thank you, comex.)

For example, my MacBook Air has a model number 7DF21CB3ED6977E5 and the file Mac-7DF21CB3ED6977E5.plist has the following list of key/value pairs under the heading “energy_constants”.

kcpu_time     1.0
kcpu_wakeups  2.0e-4

This matches the previously seen formula, but with the wakeups “tax” being 200 microseconds, which matches what I hypothesized above.

kqos_default           1.0e+00
kqos_background        5.2e-01
kqos_utility           1.0e+00
kqos_legacy            1.0e+00
kqos_user_initiated    1.0e+00
kqos_user_interactive  1.0e+00

“QoS” refers to quality of service classes which allow an application to mark some of its own work as lower priority. I’m not sure exactly how this is factored in, but from the numbers above it appears that operations done in the lowest-priority “background” class are considered to have an energy impact of about half that of operations done in all the other classes.

kdiskio_bytesread     0.0
kdiskio_byteswritten  5.3e-10

These ones are straightforward. Note that the “tax” for disk reads is zero, and for disk writes it’s a very small number. I wrote a small program that wrote endlessly to disk and saw that the “Energy Impact” was slightly higher than the CPU percentage alone, which matches expectations.

kgpu_time 3.0e+00

It makes sense that GPU usage is included in the formula. It’s not clear if this refers to the integrated GPU or the separate (higher performance, higher power) GPU. It’s also interesting that the weighting is 3x.

knetwork_recv_bytes    0.0
knetwork_recv_packets  4.0e-6
knetwork_sent_bytes    0.0
knetwork_sent_packets  4.0e-6

These are also straightforward. In this case, the number of bytes sent or received is ignored and only the number of packets matters, and the cost of receiving and sending packets is considered equal.

So, in conclusion, on 10.10 and 10.11, the formula used to compute “Energy Impact” is machine model-specific, and includes the following factors: CPU usage, wakeup frequency, quality of service class usage, and disk, GPU, and network activity.

This is definitely an improvement over the formula used in 10.9, which is great to see. The parameters are also visible, if you know where to look! It would be wonderful if all these inputs, along with their relative weightings, could be seen at once in Activity Monitor. That way developers would have a much better sense of exactly how their application’s “Energy Impact” is determined.
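Putting the constants together, one plausible reading is a simple weighted sum. The sketch below is a guess at the shape of the formula, not Activity Monitor's actual code. It assumes the inputs are per-second amounts (CPU and GPU time in seconds, the rest as counts), that the result is scaled by 100 like the 10.9 formula, and it leaves out the QoS weights because it isn't clear how they are applied. The input names are simply the plist keys without the leading "k".

```python
# Constants from the MacBook Air's plist, as listed above.
ENERGY_CONSTANTS = {
    "kcpu_time": 1.0,
    "kcpu_wakeups": 2.0e-4,
    "kdiskio_bytesread": 0.0,
    "kdiskio_byteswritten": 5.3e-10,
    "kgpu_time": 3.0e+00,
    "knetwork_recv_bytes": 0.0,
    "knetwork_recv_packets": 4.0e-6,
    "knetwork_sent_bytes": 0.0,
    "knetwork_sent_packets": 4.0e-6,
}


def energy_impact_sketch(per_second_usage, constants=ENERGY_CONSTANTS):
    """Weighted sum of per-second usage, scaled by 100 (an assumed form).

    `per_second_usage` maps hypothetical input names such as "cpu_time"
    or "cpu_wakeups" to amounts per second; missing inputs count as zero.
    """
    weighted = sum(weight * per_second_usage.get(key[1:], 0.0)
                   for key, weight in constants.items())
    return weighted * 100.0


# 10% CPU usage plus 1,000 wakeups per second.
print(energy_impact_sketch({"cpu_time": 0.1, "cpu_wakeups": 1000}))
```

With only CPU time and wakeups supplied this reduces to the earlier formula with a 200 microsecond tax, which is the part of the guess that the measurements above support.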

|

|

Weekly Mozilla Reps call
