How to tell that your predictive model is useless

When you build products on top of machine learning, you run into situations you would rather avoid. In this text I go through eight problems that I have encountered in my own work.

My experience is with credit scoring models and predictive systems for industrial companies. The text should help developers and data scientists build useful models, and help managers avoid gross mistakes in a project.

This text is not meant to advertise any company. It is based on data analysis practice at OOO "Romashka", a company that has never existed and never will. By "we" I mean a team consisting of myself and my imaginary friends. All the services we built were made for a specific client and cannot be sold or transferred to anyone else.

Which models, and what for?

Let a predictive model be an algorithm that makes forecasts and, based on historical data, allows a decision useful to the business to be made automatically.

I do not consider:

- one-off data studies that never reach production;

- systems that monitor something (for example, ad click-through rates) but do not make decisions themselves;

- algorithms for their own sake, i.e. ones that bring no direct benefit to the business (for example, an artificial intelligence that teaches virtual figures to walk).

I will talk about supervised learning algorithms, i.e. ones that have "seen" many example pairs (X, Y) and can now estimate Y for any X. X is the information known at the moment of the forecast (for example, a loan application filled in by the client). Y is the information that is unknown in advance but needed to make the decision (for example, whether the client will make loan payments on time). The decision itself can be made by some simple algorithm outside the model (for example, approve the loan if the predicted probability of non-payment is no higher than 15%).

The analyst who builds the model usually relies on forecast quality metrics, such as mean absolute error (MAE) or the share of correct answers (accuracy). Such metrics make it quick to see which of two models predicts more accurately (and whether it is worth, say, replacing logistic regression with a neural network). But they often fail to answer the main question: how useful is the model? For example, a credit scoring model can very easily reach 98% accuracy on data in which 98% of clients are "good", simply by calling everyone "good".
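The 98% example can be reproduced in a few lines. This is only an illustrative sketch on invented labels; the helper names `accuracy` and `recall_of_bad` are mine, not part of any library.

```python
# Toy data: 98 "good" clients (label 0) and 2 "bad" ones (label 1).
y_true = [0] * 98 + [1] * 2

# A "model" that calls every client good, whatever the application says.
y_pred = [0] * len(y_true)


def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall_of_bad(y_true, y_pred):
    """Share of truly bad clients that the model flags as bad."""
    flags = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(flags) / len(flags)


print(accuracy(y_true, y_pred))       # 0.98
print(recall_of_bad(y_true, y_pred))  # 0.0
```

The accuracy looks excellent, yet the model never catches a single bad client, so it cannot change any decision.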

From the point of view of an entrepreneur or a manager, it is obvious which model is useful: the one that makes money. If, thanks to a new scoring model, over the next year you manage to reject 3000 clients who would have brought a total loss of 50 million rubles and to approve 5000 new clients who will bring a total profit of 10 million rubles, the model is clearly good. At the development stage you are unlikely to know these sums precisely (and you may well not know them later either). But the sooner, the more accurately and the more honestly you estimate the economic benefit of the project, the better.

Problems

Quite often, building a seemingly good predictive model does not lead to the expected success: it is not deployed, or is deployed with a long delay, or starts working properly only after dozens of releases, or stops working properly a few months after deployment, or does not work at all... And yet everyone seems to have done a great job: the analyst squeezed the maximum predictive power out of the data, the developer built an environment in which the model runs at lightning speed and never crashes, and the manager did everything to let the first two finish on time. So why did they end up in trouble?

1. The model is not needed at all

We once built a model that predicted the strength of beer after secondary fermentation (in reality it was not beer and not alcohol at all, but the essence is similar). The task was stated as follows: understand how the parameters set at the start of fermentation affect the strength of the final beer, and learn to control it better. The task seemed fun and promising, and we spent well over a hundred person-hours on it before finding out that the final strength was not really that important to the customer. For example, when the beer comes out at 7.6% instead of the required 8%, he simply blends it with a stronger one to reach the required strength. In other words, even if we had built a perfect model, it would have brought roughly zero profit.

This situation sounds rather silly, but it actually happens all the time. Executives start machine learning projects "because it is interesting" or simply to follow the trend. The realization that the project is not really needed may come late, and then be denied for a long time. Few people enjoy admitting that time has been wasted. Fortunately, there is a relatively simple way to avoid such failures: before starting any project, estimate the effect of a perfect predictive model. If you were offered an oracle that knows the future exactly, how much would you be willing to pay for it? If losses from defects are already small, there may be no need to build a complex system to minimize the defect rate.

Once, the credit scoring team was offered a new data source: receipts from a large grocery chain. It looked very tempting: "tell me what you buy, and I will tell you who you are". But it soon turned out that a buyer could be identified only if he used a loyalty card. The share of such buyers was small, and in the intersection with the bank's clients they made up less than 5% of incoming loan applications. Moreover, these were the best 5%: almost all of their applications were approved, and the share of defaults among them was close to zero. Even if we had managed to reject every "bad" application among them, it would have reduced credit losses by a very small amount. That amount would hardly have covered the cost of building the model, deploying it, and integrating with the stores' database in real time. So we played with the receipts for a week and handed them over to the cross-selling department, where such data would be of more use.
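The "oracle" estimate for a case like the receipts one fits in a short calculation. The sketch below is only an illustration: the function name and every number in it are invented, and only the "less than 5% of applications" order of magnitude comes from the story above.

```python
def oracle_upper_bound(n_applications, covered_share, approval_rate,
                       default_rate, loss_per_default):
    """Losses a perfect model could avoid on the covered segment.

    Even an oracle can only reject the approved applications that would
    have defaulted, so this is a ceiling on the yearly savings.
    """
    approved = n_applications * covered_share * approval_rate
    return approved * default_rate * loss_per_default


# All numbers below are invented for illustration.
savings_ceiling = oracle_upper_bound(
    n_applications=100_000,   # yearly incoming applications
    covered_share=0.05,       # applicants identifiable via loyalty cards
    approval_rate=0.9,        # almost all of them are approved anyway
    default_rate=0.01,        # share of defaults is close to zero
    loss_per_default=50_000,  # average loss per default, in rubles
)
project_cost = 3_000_000      # modelling, deployment, real-time integration

print(round(savings_ceiling))           # 2250000
print(savings_ceiling >= project_cost)  # False
```

If even the ceiling does not cover the cost, there is no point in checking how close a real model would get to it.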

The beer model, on the other hand, we did finish and deploy. It turned out that it gives no savings from reducing defects, but it does cut labor costs by automating part of the process. We understood this only after long discussions with the customer. And, to be honest, we were simply lucky.

2. The model is frankly weak

Even if a perfect model could bring a large benefit, there is no guarantee that you will get anywhere near it. X may simply contain no information relevant to predicting Y. Of course, you can rarely be fully sure that you have extracted every useful feature from X. The largest improvement in the forecast usually comes from feature engineering, which can last for months. But you can work iteratively: brainstorming, feature creation, training a prototype model, testing the prototype.

At the very start of the project you can gather a large group and hold a brainstorming session to come up with a variety of features. In my experience, the single strongest feature often gave half of the accuracy eventually achieved by the full model. For scoring it turned out to be the client's current debt load; for beer, the strength of the previous batch of the same kind. If after a couple of cycles you have found no good features and the model quality is close to zero, it may be better to wind the project down or to go urgently looking for additional data.

It is important to test not only the accuracy of the forecast but also the quality of the decisions made on its basis. It is not always possible to measure the benefit of a model "offline" (in a test environment). But you can come up with metrics that bring you at least somewhat closer to estimating the monetary effect. For example, if the credit risk manager needs at least 50% of applications to be approved (otherwise the sales plan will fail), you can measure the share of "bad" clients among the best 50% according to the model. It will be roughly proportional to the losses the bank bears from unpaid loans. Such a metric can be computed right after the first prototype of the model is built. If a rough estimate of the gain from deploying it does not even cover your own labor costs, it is worth asking: can we get a good forecast at all?
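The "bad clients among the best 50%" metric can be sketched as follows. The function name and the ten-row sample are invented for illustration; the score is assumed to be a predicted probability of default, so lower means better.

```python
def bad_rate_at_approval(scores, is_bad, approval_rate=0.5):
    """Share of bad clients among the best `approval_rate` of applications.

    scores: predicted probability of default (lower is better).
    is_bad: 1 if the client actually defaulted, else 0.
    """
    ranked = sorted(zip(scores, is_bad))              # best clients first
    n_approved = max(1, int(len(ranked) * approval_rate))
    approved = ranked[:n_approved]
    return sum(bad for _, bad in approved) / n_approved


# Invented toy sample of ten applications.
scores = [0.02, 0.05, 0.07, 0.10, 0.12, 0.20, 0.30, 0.45, 0.60, 0.80]
is_bad = [0, 0, 1, 0, 0, 0, 1, 0, 1, 1]

print(bad_rate_at_approval(scores, is_bad))  # 0.2
print(sum(is_bad) / len(is_bad))             # 0.4, the bad rate with no model
```

Comparing the two numbers shows how much the ranking reduces the bad rate at the approval level the business requires.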

3. The model cannot be deployed

Sometimes a model built by analysts shows good measures of both forecast accuracy and economic effect. But when its deployment to production begins, it turns out that the data needed for the forecast is not available in real time. Sometimes it is genuinely data "from the future". For example, when predicting the strength of beer, an important factor is the measurement of its density after the first stage of fermentation, but we want to apply the forecast at the start of that stage. Had we not discussed the exact nature of this feature with the customer, we would have built a useless model.

It can be even more unpleasant when the data is available at the moment of the forecast, but for technical reasons cannot be loaded into the model. Last year we worked on a model that recommends the optimal channel for contacting a client who has missed a scheduled loan payment. The debtor can be called by a live operator (which is not cheap) or by a robot (cheap, but less effective, and it infuriates clients), or you can skip the call altogether and hope the client pays today or tomorrow anyway. One of the strong factors turned out to be the results of the previous day's calls. But it turned out that they could not be used: call logs are transferred to the database once a day, at night, while the call plan for tomorrow is built today, when only yesterday's data is known. That is, the call data is available with a lag of two days before the forecast is applied.
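A lag like this can be caught early by an explicit availability check on every feature. The sketch below is a simplified illustration: the 03:00 load time, the dates and the function names are assumptions of mine, not details of the real system.

```python
from datetime import datetime, timedelta


def available_at(event_time):
    """When a call log row becomes queryable: the nightly load after the event.

    Assumes (hypothetically) that the load finishes at 03:00 the next day.
    """
    next_day = (event_time + timedelta(days=1)).date()
    return datetime.combine(next_day, datetime.min.time()) + timedelta(hours=3)


def usable_for(event_time, decision_time):
    """A feature is usable only if its data is loaded before the decision."""
    return available_at(event_time) <= decision_time


# The plan for Wednesday's calls is built on Tuesday at noon.
plan_built = datetime(2024, 1, 9, 12, 0)    # Tuesday
monday_call = datetime(2024, 1, 8, 15, 0)
tuesday_call = datetime(2024, 1, 9, 10, 0)

print(usable_for(monday_call, plan_built))   # True
print(usable_for(tuesday_call, plan_built))  # False
```

For Wednesday's calls, "yesterday" is Tuesday, and Tuesday's logs are not yet loaded when the plan is built, so only Monday's results can feed the model.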

Several times in my practice, models with good accuracy were shelved or thrown away outright because the data was not available in real time. Sometimes we had to redo them from scratch, trying to replace the features "from the future" with something else. To prevent this, the very first non-useless prototype of the model should be tested on a data stream as close to the real one as possible. It may seem that this adds the cost of developing a test environment. But most likely you will have to build one anyway before launching the model "in battle". Start building the infrastructure for testing the model as early as possible, and you may learn many interesting details in time.

4. The model is very hard to deploy

If the model relies on data "from the future", there is little you can do about it. But it often happens that deployment is difficult even when the data is available. So difficult that it drags on indefinitely because there are not enough people to do it. What are the developers doing for so long if the model has already been built?

Most likely, they are rewriting all the code from scratch. The reasons can vary widely. Perhaps their entire system is written in Java and they are not ready to use a Python model, because integrating two different environments would give them even more headaches than rewriting the code. Or the performance requirements are so high that all production code has to be written in C++, otherwise the model would be too slow. Or you did the feature preprocessing for training in SQL, pulling features from a database, but in production there is no database and the data will arrive as a JSON request.

If the model was built in one language (most likely Python) and will be applied in another, the painful problem of porting it arises. There are ready-made solutions, such as the PMML format, but their applicability leaves much to be desired. If it is a linear model, it is enough to save the vector of coefficients in a text file. A neural network or decision trees need more coefficients, and it is easier to make a mistake when writing and reading them. Fully nonparametric models, complex Bayesian ones in particular, are even harder to serialize. But even that can be simple compared to feature construction, whose code can be completely arbitrary. Even the innocent log() function can mean different things in different programming languages, not to mention code that handles images or text!
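For the linear case, the "vector of coefficients in a text file" approach might look like the sketch below. The model, its feature names and its coefficients are invented; JSON is used as one possible text format, not as the format from the original project.

```python
import json
import math

# Hypothetical fitted logistic regression: names and numbers are invented.
model = {
    "intercept": -2.0,
    "coefficients": {"log_income": -0.5, "debt_load": 3.0},
}

# "Training side": dump the parameters to a plain-text format.
serialized = json.dumps(model, sort_keys=True)


def predict_default_probability(serialized_model, features):
    """Scoring logic simple enough to mirror line by line in another language."""
    params = json.loads(serialized_model)
    z = params["intercept"] + sum(
        coef * features[name] for name, coef in params["coefficients"].items()
    )
    return 1.0 / (1.0 + math.exp(-z))


# Feature code must pin down details such as the base of the logarithm:
# math.log is the natural log, while log(x) is base 10 in some tools
# (PostgreSQL, for example).
features = {"log_income": math.log(100_000), "debt_load": 0.4}
p = predict_default_probability(serialized, features)
print(p)
```

A handful of reference inputs with their expected outputs, shipped together with the file, lets the production team verify their port.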

Even if the programming language is not an issue, questions of performance and of differences in data format between training and production remain. One more possible source of problems: when building the model, the analyst relied heavily on tools for working with large data tables, but in production a forecast has to be made for each observation separately. Many operations performed on an n*m matrix are inefficient or outright meaningless on a 1*m matrix. So from the very beginning of the project the analyst should prepare to accept data in the required format and to handle observations one at a time. The moral is the same as in the previous section: start testing the whole pipeline as early as possible!
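Standardization is a typical example: computed over a whole table it is routine, but a single row has no variance of its own, so the statistics have to be learned once and stored. The class below is a hand-written sketch with invented data, not a replacement for a library scaler.

```python
from statistics import mean, pstdev


class Standardizer:
    """Learns column means and deviations once, then applies them per row."""

    def fit(self, rows):
        columns = list(zip(*rows))
        self.means = [mean(col) for col in columns]
        # Fall back to 1.0 for constant columns to avoid division by zero.
        self.stds = [pstdev(col) or 1.0 for col in columns]
        return self

    def transform_one(self, row):
        return [(x - m) / s for x, m, s in zip(row, self.means, self.stds)]


# Invented training table: (income in thousands, number of active loans).
train_rows = [(50, 0), (100, 1), (150, 2)]
scaler = Standardizer().fit(train_rows)

# In production a single application arrives, e.g. parsed from a JSON request.
print(scaler.transform_one((100, 1)))  # [0.0, 0.0]
```

Writing `transform_one` from day one forces the preprocessing to depend only on stored parameters, which is exactly what the production service will need.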

Developers and administrators of the production system should think from the start of the project about the environment in which the model will run. It is in their power to make sure the data scientist's code can run there with minimal changes. If you deploy predictive models regularly, it is worth building once (or finding among external providers) a platform that takes care of load management, fault tolerance and data transfer. Any new model can then be launched on it as a service. If that is impossible or not cost-effective, it helps to discuss the existing constraints with the model developer in advance. You may save him and yourself long hours of unnecessary work.

5. Silly mistakes happen during deployment

A couple of weeks before I joined the bank, a credit risk model from an external vendor was launched there. On the retrospective sample sent by the vendor, the model performed well, singling out very bad clients among the approved ones. So when forecasts started arriving in real time, the bank began applying them immediately. A few days later someone noticed that more applications were being rejected than expected. Then, that the distribution of incoming forecasts did not resemble the one in the test sample. An investigation showed that the forecasts were arriving with the opposite sign. We were receiving not the probability that the borrower is bad, but the probability that he is good. For several days we had been refusing credit not to the worst clients, but to the best ones!
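A cheap guard against an inverted score is to push a labelled historical sample through the production feed and check that bad clients really receive higher scores. The sketch below assumes such a sample exists; the function names and data are invented.

```python
def auc(scores, is_bad):
    """Probability that a random bad client outscores a random good one."""
    bad = [s for s, b in zip(scores, is_bad) if b == 1]
    good = [s for s, b in zip(scores, is_bad) if b == 0]
    wins = sum((sb > sg) + 0.5 * (sb == sg) for sb in bad for sg in good)
    return wins / (len(bad) * len(good))


def check_orientation(scores, is_bad, min_auc=0.5):
    """Fail loudly if the scores rank bad clients below good ones."""
    value = auc(scores, is_bad)
    if value < min_auc:
        raise ValueError(f"AUC={value:.2f}: scores look inverted")
    return value


# Invented labelled sample: the feed sends P(good) instead of P(bad).
is_bad = [0, 0, 0, 1, 1]
p_good = [0.9, 0.8, 0.7, 0.3, 0.2]
p_bad = [1 - p for p in p_good]

print(check_orientation(p_bad, is_bad))  # 1.0
# check_orientation(p_good, is_bad) raises ValueError
```

In practice the threshold would be set close to the AUC reported on the vendor's retrospective sample rather than at 0.5.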

Such violations of business logic most often occur not in the model itself but either in feature preparation or, most often of all, in applying the forecast. If they are less obvious than a sign error, they can go unnoticed for a very long time. All that time the model will work worse than expected for no apparent reason. The standard way to prevent this is to write unit tests for every piece of the decision-making strategy. On top of that, you need to test the whole decision-making system (the model plus its environment) end to end (yes, I have been repeating this for three sections in a row). But this will not save you from every trouble: the problem may lie not in the code but in the data. To mitigate such risks, serious changes can be launched not on the whole data stream (in our case, loan applications) but on a small yet representative share of it (for example, a randomly chosen 10% of applications). A/B tests are useful in general. And if your models are responsible for truly important decisions, such tests may be numerous and long-running.
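One common way to pick a stable random 10% is to hash an application identifier. This is a sketch of that general technique, not the bank's actual mechanism; the salt and the function name are invented.

```python
import hashlib


def in_pilot(application_id, share=0.10, salt="new-model-pilot"):
    """Deterministically route about `share` of applications to the new model."""
    digest = hashlib.sha256(f"{salt}:{application_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 16**8   # roughly uniform in [0, 1)
    return bucket < share


pilot = [app_id for app_id in range(10_000) if in_pilot(app_id)]
print(len(pilot) / 10_000)   # close to 0.10; the exact value depends on the salt
```

Because the assignment depends only on the identifier and the salt, a repeated request for the same application lands in the same group, and changing the salt draws a fresh sample for the next experiment.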

6. The model is unstable

Sometimes a model passes all tests and is deployed without a single error. You look at the first decisions it made, and they seem sensible. They are not perfect (batch 17 came out a bit weak, and batches 14 and 23 very strong), but overall things are not bad. A week or two passes, you keep watching the A/B test results, and you realize that there are far too many overly strong batches. You discuss it with the customer, and he explains that he recently replaced the wort boiling tanks, which may have raised the level of hop dissolution. Your inner mathematician is outraged: "How can that be! You gave me a training set that is not representative of the population! This is cheating!" But you pull yourself together and notice that in the training set (the last three years) the average concentration of dissolved hops was not stable. Yes, it is now higher than ever, but there were sharp jumps and drops before. They just taught your model nothing.

Another example: the financial community's trust in statistical methods was badly undermined after the 2007 crisis. The American mortgage market collapsed then, dragging the entire world economy down with it. The models used at the time to assess credit risk did not assume that all borrowers could stop paying simultaneously, because there were no such events in their training sets. But a person who understands the subject could have mentally extended the existing trends and foreseen such an outcome.

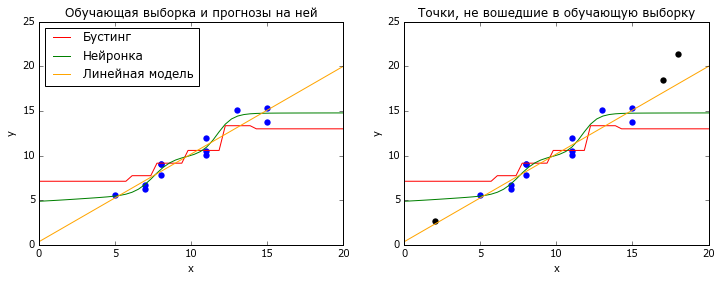

Sometimes the data stream to which you apply the model is stationary, i.e. its statistical properties do not change over time. In that case the most popular machine learning methods, neural networks and gradient boosting over decision trees, work well. Both methods are based on interpolating the training data: neural networks with logistic curves, boosting with piecewise constant functions. Both cope very poorly with extrapolation, that is, with prediction for X lying outside the training set (more precisely, outside its convex hull).

```python
# set up everything we need
import numpy as np
import matplotlib.pyplot as plt
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.neural_network import MLPRegressor
from sklearn.linear_model import LinearRegression

# generate data: a noisy linear dependence of y on x
np.random.seed(2)
n = 15
x_all = np.random.randint(20, size=(n, 1))
y_all = x_all.ravel() + 10 * 0.1 + np.random.normal(size=n)

# train only on the middle of the range; everything else is "new" data
fltr = ((x_all <= 15) & (x_all >= 5)).ravel()
x_train = x_all[fltr, :]
y_train = y_all[fltr]
x_new = x_all[~fltr, :]
y_new = y_all[~fltr]
x_plot = np.linspace(0, 20)

# train the models
m1 = GradientBoostingRegressor(
    n_estimators=10,
    max_depth=3,
    random_state=42,
).fit(x_train, y_train)
m2 = MLPRegressor(
    hidden_layer_sizes=(10,),  # one hidden layer with 10 units
    activation='logistic',
    random_state=42,
    learning_rate_init=1e-1,
    solver='lbfgs',
    alpha=0.1,
).fit(x_train, y_train)
m3 = LinearRegression().fit(x_train, y_train)

# draw the plots
plt.figure(figsize=(12, 4))
title = {1: 'Training set and predictions on it',
         2: 'Points not included in the training set'}
for i in [1, 2]:
    plt.subplot(1, 2, i)
    plt.scatter(x_train.ravel(), y_train, lw=0, s=40)
    plt.xlim([0, 20])
    plt.ylim([0, 25])
    x_col = x_plot[:, np.newaxis]  # models expect a 2D array
    plt.plot(x_plot, m1.predict(x_col), color='red', label='Boosting')
    plt.plot(x_plot, m2.predict(x_col), color='green', label='Neural net')
    plt.plot(x_plot, m3.predict(x_col), color='orange', label='Linear model')
    plt.xlabel('x')
    plt.ylabel('y')
    plt.title(title[i])
    if i == 1:
        plt.legend(loc='upper left')
    if i == 2:
        # points outside the training range, shown in black
        plt.scatter(x_new.ravel(), y_new, lw=0, s=40, color='black')
plt.show()
```

Some simpler models (linear ones among them) extrapolate better. But how do you know that you need them? Cross-validation comes to the rescue, though not the classical kind in which all the data is shuffled randomly, but something like TimeSeriesSplit from sklearn. There the model is trained on all the data up to a moment t and tested on the data after that moment, and this is repeated for several different t. Good quality on such tests gives hope that the model can predict the future even if it differs somewhat from the past.
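The splitting scheme itself is simple enough to write out by hand. The generator below is a standard-library sketch of the same idea as TimeSeriesSplit (in real code the sklearn class is the natural choice); it assumes the rows are already sorted by time.

```python
def expanding_window_splits(n_samples, n_splits):
    """Yield (train_indices, test_indices); each test fold follows its train fold.

    Hand-written analogue of the idea behind sklearn's TimeSeriesSplit.
    Rows are assumed to be sorted by time.
    """
    fold = n_samples // (n_splits + 1)
    for k in range(1, n_splits + 1):
        train_end = n_samples - (n_splits - k + 1) * fold
        yield list(range(train_end)), list(range(train_end, train_end + fold))


for train_idx, test_idx in expanding_window_splits(n_samples=10, n_splits=3):
    print(train_idx, test_idx)
# [0, 1, 2, 3] [4, 5]
# [0, 1, 2, 3, 4, 5] [6, 7]
# [0, 1, 2, 3, 4, 5, 6, 7] [8, 9]
```

The model never sees observations that come later than the ones it is tested on, which is what separates this scheme from shuffled cross-validation.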

Sometimes building strong dependencies, such as linear ones, into the model is enough for it to adapt well to changes in the process. If that is undesirable or insufficient, you can think about more universal ways of making the model adaptive. The simplest is to calibrate the model by a constant: subtract from the forecast its mean error over the previous n observations. If the problem is more than an additive constant, you can reweight the observations when training the model (for example, following the principle of exponential smoothing). This helps the model focus on the most recent past.
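Both tricks take only a few lines. The sketch below uses invented beer-strength numbers and function names of my own; the decay rate is an arbitrary illustrative choice.

```python
def calibrated_forecast(raw_forecast, past_forecasts, past_actuals, n=5):
    """Subtract the mean error of the last n forecasts from a new forecast."""
    errors = [f - a for f, a in zip(past_forecasts[-n:], past_actuals[-n:])]
    bias = sum(errors) / len(errors) if errors else 0.0
    return raw_forecast - bias


def exponential_weights(n_samples, decay=0.9):
    """Sample weights that shrink by a factor of `decay` per step into the past."""
    return [decay ** (n_samples - 1 - i) for i in range(n_samples)]


# Invented history: the model has lately under-predicted strength by 0.5.
past_forecasts = [7.5, 7.5, 7.5, 7.5]
past_actuals = [8.0, 8.0, 8.0, 8.0]

print(calibrated_forecast(7.5, past_forecasts, past_actuals))  # 8.0
print(exponential_weights(3, decay=0.5))                       # [0.25, 0.5, 1.0]
```

Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`, with the observations ordered from oldest to newest.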

Even if it seems to you that the model simply has to be stable, it is worth setting up automatic monitoring. It could track the dynamics of the predicted value, of the forecast itself, of the model's main factors, and of all sorts of quality metrics. If the model is really good, it will stay with you for a long time. So it is better to put effort into a template once than to check the model's performance by hand every month.

7. The training set really is unrepresentative

Sometimes the source of unrepresentativeness is not change over time but the peculiarities of the process that generated the data. The bank where I worked used to have a policy: no loans to people whose payments on current debts exceed 40% of their income. On the one hand, this is reasonable, since a high debt load often leads to bankruptcy, especially in times of crisis. On the other hand, we can estimate both income and loan payments only approximately. Perhaps some of our would-be clients were in fact doing much better. And in any case, a professional who earns 200 thousand a month and spends 100 of it on a mortgage can be a promising client. Refusing such a person a credit card is lost profit. One could hope that the model would rank clients well even with a very high debt load... But that is not certain, because the training set contains not a single one of them!

I was lucky that three years before I joined, my colleagues had introduced a simple, if somewhat scary, rule: approve roughly 1% of randomly selected credit card applications, bypassing almost all policies. This 1% brought the bank losses, but it made it possible to obtain representative data on which any model could be trained and tested. That is how I was able to prove that good clients can be found even among seemingly heavily indebted people. As a result, we started issuing credit cards to people with an estimated debt load between 40% and 90%, but with a stricter cutoff on the predicted probability of default.

Without such a stream of clean data, it would have been hard to convince management that the model ranks people with a load above 40% properly. I would probably have trained it on a sample with a load of 0-20% and shown that on test data with a load of 20-40% the model makes adequate decisions. Still, a thin trickle of unfiltered data is very useful, and if the cost of an error is not too high, it is better to have one. Martin Zinkevich, an ML developer at Google, gives similar advice in his guide to machine learning. For example, in email filtering, 0.1% of the messages marked as spam by the algorithm can still be shown to the user. This makes it possible to track and correct the algorithm's errors.
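The "thin trickle" rule can be sketched as a wrapper around the usual policy check. Everything here is illustrative: the policy, the field name `debt_load` and the 1% share mirror the story above, but the code is not the bank's implementation.

```python
import random


def policy_ok(application):
    """Hypothetical policy: reject when debt payments exceed 40% of income."""
    return application["debt_load"] <= 0.40


def decide(application, rng, explore_share=0.01):
    """Return (approved, is_exploration) for one application.

    A small random share is approved regardless of policy and flagged,
    so that it can later serve as an unbiased training and test sample.
    """
    if rng.random() < explore_share:
        return True, True
    return policy_ok(application), False


rng = random.Random(0)
applications = [{"debt_load": 0.6} for _ in range(10_000)]
decisions = [decide(app, rng) for app in applications]
explored = sum(flag for _, flag in decisions)
print(explored / len(applications))   # close to 0.01
```

Storing the `is_exploration` flag with each decision matters as much as the random approval itself, because only the flagged rows form the unfiltered sample.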

8. The forecast is used inefficiently

As a rule, a decision made on the basis of the model's forecast is only a small part of some business process, and it can interact with that process in peculiar ways. For example, most credit card applications approved by the automatic algorithm must also be approved by a live underwriter before the card is issued. When we started approving applications with a high debt load, the underwriters kept rejecting them. Perhaps they did not learn about the changes right away, or simply decided not to take responsibility for unfamiliar clients. We had to pass the credit specialists labels such as "do not reject this client because of high debt load" before they began approving such applications. But by the time we had identified the problem, devised a solution and rolled it out, a lot of time had passed, during which the bank was missing out on profit. The moral: agree with the other participants in the business process in advance.

Sometimes it does not even take another department to kill the benefit of deploying or updating a model. It is enough to negotiate the boundaries of the acceptable poorly with your own manager. Perhaps he is ready to start approving the clients chosen by the model, but only if they have no more than one active loan, have never been overdue, have several successfully closed loans, and have double confirmation of income. If we already approve almost the whole of this segment anyway, the model will change little.

However, when the model is used wisely, the human factor can be helpful. Suppose we have developed a model that tells a clothing store employee what else can be offered to a customer, based on the current order. Such a model can make very effective use of big data (especially if there is a whole chain of stores). But the model lacks part of the relevant information, for example about the customer's appearance. So the accuracy of guessing exactly-the-outfit-the-customer-wants remains low. Yet it is very easy to combine artificial intelligence with the human kind: the model suggests three items to the salesperson, and he picks the most suitable one. If the task is explained properly to all the salespeople, this can succeed.
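The gain from showing three suggestions instead of one can be measured offline as a top-k hit rate. The sketch below uses invented rankings; the function name is mine.

```python
def hit_rate_at_k(ranked_suggestions, wanted_items, k):
    """Share of customers whose wanted item is among the top-k suggestions."""
    hits = sum(
        wanted in ranked[:k]
        for ranked, wanted in zip(ranked_suggestions, wanted_items)
    )
    return hits / len(wanted_items)


# Invented example: model rankings for four customers and what each one wanted.
ranked_suggestions = [
    ["scarf", "belt", "hat"],
    ["belt", "hat", "scarf"],
    ["hat", "gloves", "belt"],
    ["gloves", "scarf", "hat"],
]
wanted_items = ["belt", "belt", "belt", "tie"]

print(hit_rate_at_k(ranked_suggestions, wanted_items, k=1))  # 0.25
print(hit_rate_at_k(ranked_suggestions, wanted_items, k=3))  # 0.75
```

The top-3 rate is an upper bound on what the combined system can achieve: it is reached only if the salesperson always picks the right item from the three.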

Conclusion

I have walked through some of the main failures I have encountered while building predictive models and embedding them into a business. Most often these problems are organizational rather than mathematical: the model is not needed at all, or it is built on a skewed sample, or it is hard to embed into existing processes and systems. You can reduce the risk of such failures by following a few simple principles:

- Use sensible and measurable measures of forecast accuracy and of economic effect

- Starting with the first prototype, test on a data stream ordered by time

- Test the whole process, from data preparation to decision making, not just the forecast

I hope this text helps someone take a closer look at their project and avoid silly mistakes.

May your ROC-AUCs and R-squareds be high!
