Integrating Oculus Rift into a desktop Direct3D application, using Renga as an example

Hi everyone! In this article I want to walk through connecting a virtual reality headset to a desktop Windows application. The headset in question is the Oculus Rift.

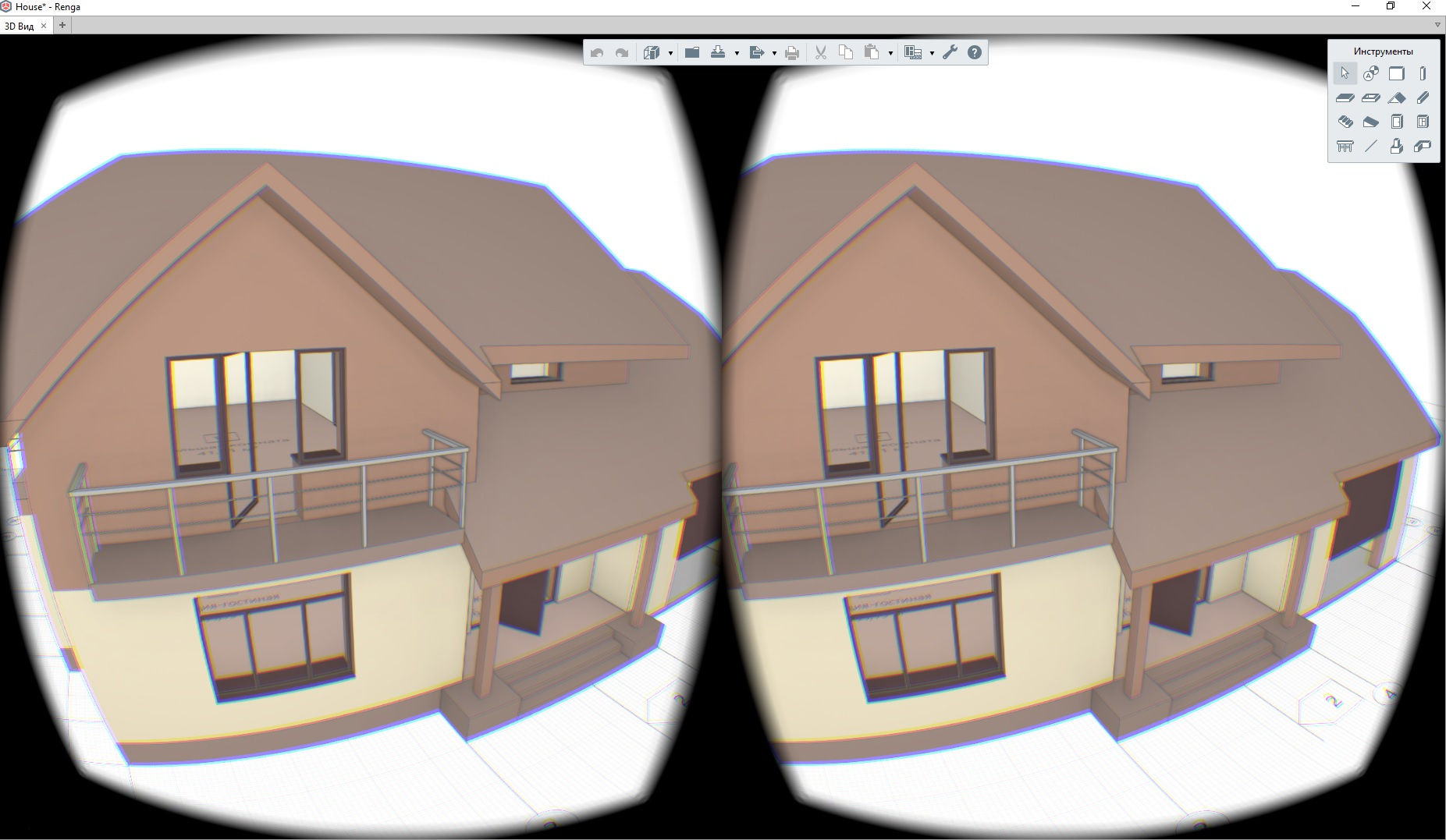

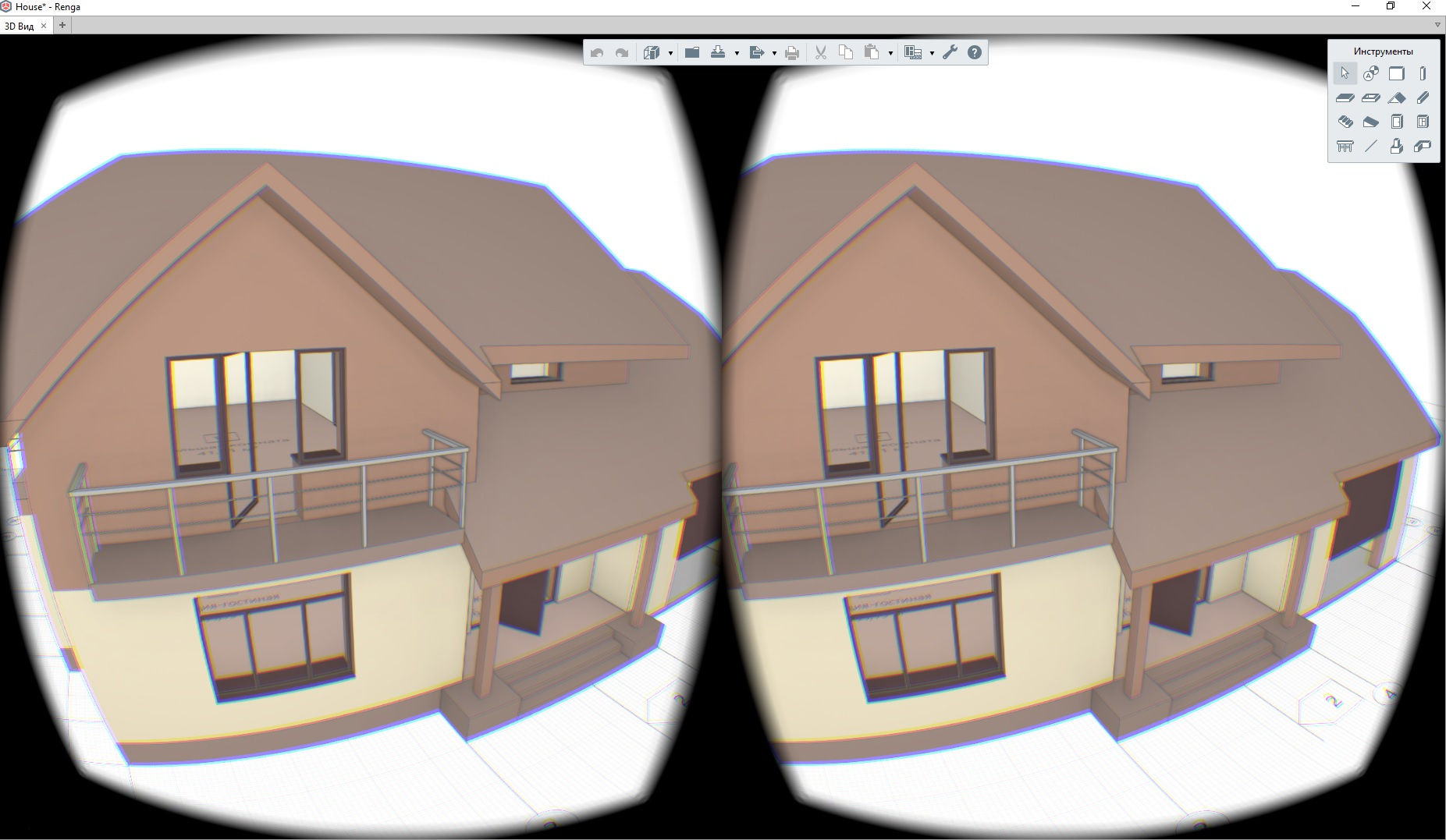

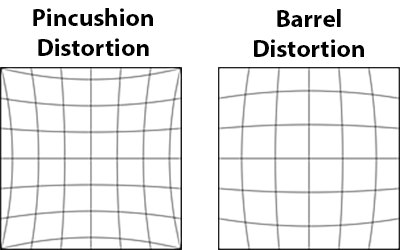

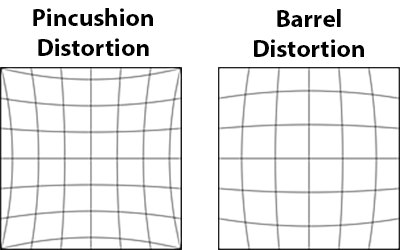

Architectural visualization is fertile ground for all kinds of experiments, and we decided to keep up with the trend. In one of the upcoming versions of our BIM systems, Renga Architecture (architectural and construction design) and Renga Structure (structural design of buildings and facilities), you will be able to walk through the building being designed while wearing a virtual reality headset. (As a reminder, I work at Renga Software, a joint venture of ASCON and 1C.) This is very convenient for presenting a project to a customer and for evaluating design decisions from an ergonomics standpoint.

The SDK can be downloaded from the headset developer's website. At the time of writing, the latest available version is 1.16. There is also OpenVR from Valve. I have not tried it myself, but I suspect that it works worse than Oculus's native SDK.

Let's go through the main steps of connecting the headset to an application. First, the device has to be initialized:

    #define OVR_D3D_VERSION 11 // in case direct3d 11
    #include "OVR_CAPI_D3D.h"

    // Kept outside the function so the rest of the application can use them
    // after initialization.
    ovrSession session = nullptr;
    ovrGraphicsLuid luid = {};

    bool InitOculus()
    {
        // Initializes LibOVR, and the Rift
        ovrInitParams initParams = { ovrInit_RequestVersion, OVR_MINOR_VERSION, NULL, 0, 0 };
        if (!OVR_SUCCESS(ovr_Initialize(&initParams)))
            return false;

        if (!OVR_SUCCESS(ovr_Create(&session, &luid)))
        {
            ovr_Shutdown(); // undo ovr_Initialize if no session could be created
            return false;
        }

        // EyeLevel reports tracking poses relative to the initial eye position.
        // Use ovrTrackingOrigin_FloorLevel instead to get poses where the floor height is 0.
        if (!OVR_SUCCESS(ovr_SetTrackingOriginType(session, ovrTrackingOrigin_EyeLevel)))
            return false;

        return true;
    }

Initialization is complete. We now have session, a pointer to the internal ovrHmdStruct structure, which we will use for all requests to the Oculus runtime. luid is the identifier of the graphics adapter the headset is connected to. It matters on systems with several video cards and on laptops: the application must render on that same adapter.
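Picking the matching adapter comes down to comparing the LUID returned by ovr_Create with the LUID of each adapter in the system. The sketch below shows only that comparison. GraphicsLuid, AdapterInfo and FindHeadsetAdapter are hypothetical stand-ins invented for illustration so that the snippet compiles without the SDK or DXGI.

```cpp
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical stand-in for ovrGraphicsLuid: an opaque 8-byte adapter identifier.
struct GraphicsLuid { char Reserved[8]; };

// Hypothetical stand-in for the part of an adapter description that matters here.
struct AdapterInfo
{
    GraphicsLuid Luid;  // in a real application: DXGI_ADAPTER_DESC::AdapterLuid
    const char*  Name;  // label used for logging only
};

// Returns the index of the adapter whose LUID matches the one reported for
// the headset, or -1 if no adapter matches.
int FindHeadsetAdapter(const std::vector<AdapterInfo>& adapters,
                       const GraphicsLuid& headsetLuid)
{
    for (std::size_t i = 0; i < adapters.size(); ++i)
    {
        // LUIDs are opaque, so the raw bytes are compared.
        if (std::memcmp(&adapters[i].Luid, &headsetLuid, sizeof(GraphicsLuid)) == 0)
            return static_cast<int>(i);
    }
    return -1;
}
```

In a real application the adapter list would come from IDXGIFactory::EnumAdapters, and the device would then be created on the matching adapter by passing it to D3D11CreateDevice with D3D_DRIVER_TYPE_UNKNOWN.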

Building a frame for the Oculus Rift is not very different from building one in the normal mode.

For each eye we need to create a texture together with its SwapChain and RenderTarget.

The Oculus SDK provides a set of functions for creating these textures.

An example of a wrapper that creates and stores the SwapChain and RenderTarget for each eye:

    #include <vector>
    #include <d3d11.h>

    // Owns the swap chain for one eye and a render target view for each of its buffers.
    struct EyeTexture
    {
        ovrSession Session;
        ovrTextureSwapChain TextureChain;
        std::vector<ID3D11RenderTargetView*> TexRtv;

        EyeTexture() :
            Session(nullptr),
            TextureChain(nullptr)
        {
        }

        // pDevice is the application's D3D11 device, created on the adapter identified by luid.
        bool Create(ovrSession session, ID3D11Device* pDevice, int sizeW, int sizeH)
        {
            Session = session;

            ovrTextureSwapChainDesc desc = {};
            desc.Type = ovrTexture_2D;
            desc.ArraySize = 1;
            desc.Format = OVR_FORMAT_R8G8B8A8_UNORM_SRGB;
            desc.Width = sizeW;
            desc.Height = sizeH;
            desc.MipLevels = 1;
            desc.SampleCount = 1;
            desc.MiscFlags = ovrTextureMisc_DX_Typeless;
            desc.BindFlags = ovrTextureBind_DX_RenderTarget;
            desc.StaticImage = ovrFalse;

            ovrResult result = ovr_CreateTextureSwapChainDX(Session, pDevice, &desc, &TextureChain);
            if (!OVR_SUCCESS(result))
                return false;

            // Create one render target view per buffer in the swap chain.
            int textureCount = 0;
            ovr_GetTextureSwapChainLength(Session, TextureChain, &textureCount);
            for (int i = 0; i < textureCount; ++i)
            {
                ID3D11Texture2D* tex = nullptr;
                ovr_GetTextureSwapChainBufferDX(Session, TextureChain, i, IID_PPV_ARGS(&tex));

                D3D11_RENDER_TARGET_VIEW_DESC rtvd = {};
                rtvd.Format = DXGI_FORMAT_R8G8B8A8_UNORM;
                rtvd.ViewDimension = D3D11_RTV_DIMENSION_TEXTURE2D;

                ID3D11RenderTargetView* rtv = nullptr;
                HRESULT hr = pDevice->CreateRenderTargetView(tex, &rtvd, &rtv);
                tex->Release(); // the view holds its own reference to the texture
                if (FAILED(hr))
                    return false;
                TexRtv.push_back(rtv);
            }
            return true;
        }

        ~EyeTexture()
        {
            for (int i = 0; i < (int)TexRtv.size(); ++i)
            {
                if (TexRtv[i])
                    TexRtv[i]->Release();
            }
            if (TextureChain)
            {
                ovr_DestroyTextureSwapChain(Session, TextureChain);
            }
        }

        // Returns the view of the buffer that should be rendered into this frame.
        ID3D11RenderTargetView* GetRTV()
        {
            int index = 0;
            ovr_GetTextureSwapChainCurrentIndex(Session, TextureChain, &index);
            return TexRtv[index];
        }

        // Call after rendering into the current buffer is finished.
        void Commit()
        {
            ovr_CommitTextureSwapChain(Session, TextureChain);
        }
    };
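The wrapper asks the runtime for the current index on every GetRTV call because the swap chain is a ring of several buffers rather than a single texture. The toy model below illustrates that pattern. It is not SDK code: SwapChainModel is a hypothetical class, and it assumes that each commit advances the current index by one and wraps around at the end of the chain.

```cpp
#include <vector>

// Toy model of a texture swap chain: a fixed ring of buffers plus a current index.
// Each "view" is represented by its buffer number.
class SwapChainModel
{
public:
    explicit SwapChainModel(int length) : views_(length), current_(0)
    {
        for (int i = 0; i < length; ++i)
            views_[i] = i;
    }

    // Plays the role of EyeTexture::GetRTV: the view for the buffer to render into now.
    int CurrentView() const { return views_[current_]; }

    // Plays the role of EyeTexture::Commit: assumed to move on to the next buffer.
    void Commit() { current_ = (current_ + 1) % static_cast<int>(views_.size()); }

private:
    std::vector<int> views_;
    int current_;
};
```

With a chain of three buffers, successive frames would render into buffers 0, 1, 2, 0 and so on, which is why a view cached from a previous frame must not be reused.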

Using this wrapper, we create a texture for each eye, into which the 3D scene will be rendered. The texture size has to be requested from the Oculus runtime: get the device description, then call ovr_GetFovTextureSize to obtain the required texture size for each eye:

    ovrHmdDesc hmdDesc = ovr_GetHmdDesc(session);
    // eye is 0 (ovrEye_Left) or 1 (ovrEye_Right); the last argument is the pixel density.
    ovrSizei idealSize = ovr_GetFovTextureSize(session, (ovrEyeType)eye, hmdDesc.DefaultEyeFov[eye], 1.0f);

It is also convenient to create a so-called mirror texture, which can be shown in the application window. The Oculus runtime copies the combined image for both eyes into this texture after post-processing.

Without post-processing, the person wearing the headset would see an image produced by an optical system with positive distortion (the left-hand image of the pair shown earlier). To compensate, Oculus applies a negative distortion effect (the right-hand image).

The code that creates the mirror texture:

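    // Create a mirror to see on the monitor.
    ovrMirrorTexture mirrorTexture = nullptr;
    ovrMirrorTextureDesc mirrorDesc = {};
    mirrorDesc.Format = OVR_FORMAT_R8G8B8A8_UNORM_SRGB;
    // Must match the back buffer size, because CopyResource requires identical dimensions.
    mirrorDesc.Width = width;
    mirrorDesc.Height = height;
    ovrResult result = ovr_CreateMirrorTextureDX(session, pDXDevice, &mirrorDesc, &mirrorTexture);
    // Check result with OVR_SUCCESS before using mirrorTexture.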

There is one important point about creating the textures. When a SwapChain is created with ovr_CreateTextureSwapChainDX, we pass the desired texture format. The Oculus runtime later uses this format for post-processing.

For everything to work correctly, the application should create the swap chain in the sRGB color space, for example OVR_FORMAT_R8G8B8A8_UNORM_SRGB. If your application does not perform gamma correction itself, three things are needed: create the swap chain in an sRGB format, set the ovrTextureMisc_DX_Typeless flag in ovrTextureSwapChainDesc, and create the render target in the DXGI_FORMAT_R8G8B8A8_UNORM format. Otherwise the image on the screen will be too bright.
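The "too bright" symptom can be shown with a few lines of arithmetic. A render target view in an sRGB format treats the shader output as linear light and encodes it on write. If the application's colors are already gamma-encoded, they get encoded a second time. The sketch below uses the standard sRGB transfer functions. WriteThroughSrgbView and WriteThroughUnormView are hypothetical helpers that model only the write step and ignore 8-bit quantization.

```cpp
#include <cmath>

// Standard sRGB encoding: linear light in [0, 1] -> sRGB value in [0, 1].
double LinearToSrgb(double c)
{
    return (c <= 0.0031308) ? 12.92 * c
                            : 1.055 * std::pow(c, 1.0 / 2.4) - 0.055;
}

// Standard sRGB decoding: sRGB value in [0, 1] -> linear light in [0, 1].
double SrgbToLinear(double s)
{
    return (s <= 0.04045) ? s / 12.92
                          : std::pow((s + 0.055) / 1.055, 2.4);
}

// Models a write through an *_SRGB view: the value is encoded before it is stored.
double WriteThroughSrgbView(double shaderOutput)
{
    return LinearToSrgb(shaderOutput);
}

// Models a write through a UNORM view: the value is stored as-is.
double WriteThroughUnormView(double shaderOutput)
{
    return shaderOutput;
}
```

For a mid-gray value of 0.5 that is already gamma-encoded, the sRGB view would store about 0.735 instead of 0.5, which is the brightening described above. The UNORM view on a typeless texture stores 0.5 unchanged, and the runtime then interprets it as sRGB.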

Once the headset is connected and the textures for each eye are created, the scene has to be rendered into the corresponding texture. This is done in the usual Direct3D way, so there is nothing interesting here. The only extra step is to get the headset's position, which is done like this:

    ovrHmdDesc hmdDesc = ovr_GetHmdDesc(session);
    ovrEyeRenderDesc eyeRenderDesc[2];
    eyeRenderDesc[0] = ovr_GetRenderDesc(session, ovrEye_Left, hmdDesc.DefaultEyeFov[0]);
    eyeRenderDesc[1] = ovr_GetRenderDesc(session, ovrEye_Right, hmdDesc.DefaultEyeFov[1]);

    // Get both eye poses simultaneously, with IPD offset already included.
    // ovr_GetEyePoses is declared in the SDK utility header OVR_CAPI_Util.h.
    ovrPosef EyeRenderPose[2];
    ovrVector3f HmdToEyeOffset[2] = { eyeRenderDesc[0].HmdToEyeOffset,
                                      eyeRenderDesc[1].HmdToEyeOffset };
    double sensorSampleTime; // sensorSampleTime is fed into the layer later
    // frameIndex is the application's frame counter; the same value is passed to ovr_SubmitFrame.
    ovr_GetEyePoses(session, frameIndex, ovrTrue, HmdToEyeOffset, EyeRenderPose, &sensorSampleTime);

Now, when building the frame, remember to set the correct view matrix for each eye, taking the headset's position into account.
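One way to derive the view matrix inputs from an eye pose is sketched below, assuming the right-handed convention used by the Oculus SDK (Y up, looking down the negative Z axis). Vec3, Quat, Pose, ViewBasis and MakeViewBasis are hypothetical stand-ins for ovrVector3f, ovrQuatf and ovrPosef, so the snippet is self-contained. The application's own camera position and orientation are left out.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; };                // stand-in for ovrQuatf
struct Pose { Quat Orientation; Vec3 Position; }; // stand-in for ovrPosef

static Vec3 Cross(const Vec3& a, const Vec3& b)
{
    return { a.y * b.z - a.z * b.y,
             a.z * b.x - a.x * b.z,
             a.x * b.y - a.y * b.x };
}

// Rotates v by the unit quaternion q using
// v' = v + 2w(u x v) + 2 u x (u x v), where u = (q.x, q.y, q.z).
Vec3 Rotate(const Quat& q, const Vec3& v)
{
    const Vec3 u  = { q.x, q.y, q.z };
    const Vec3 t  = Cross(u, v);
    const Vec3 tt = Cross(u, t);
    return { v.x + 2.0f * (q.w * t.x + tt.x),
             v.y + 2.0f * (q.w * t.y + tt.y),
             v.z + 2.0f * (q.w * t.z + tt.z) };
}

// The three vectors a right-handed look-at function expects.
struct ViewBasis { Vec3 Eye, Target, Up; };

ViewBasis MakeViewBasis(const Pose& eyePose)
{
    // Rotate the default forward (-Z) and up (+Y) directions by the eye orientation.
    const Vec3 forward = Rotate(eyePose.Orientation, { 0.0f, 0.0f, -1.0f });
    const Vec3 up      = Rotate(eyePose.Orientation, { 0.0f, 1.0f, 0.0f });
    const Vec3 eye     = eyePose.Position;
    return { eye, { eye.x + forward.x, eye.y + forward.y, eye.z + forward.z }, up };
}
```

The resulting Eye, Target and Up values can be passed to a right-handed look-at helper such as XMMatrixLookAtRH from DirectXMath, once per eye with EyeRenderPose[eye] as the input pose.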

After the scene has been rendered for each eye, the resulting textures have to be handed to the Oculus runtime for post-processing.

This is done as follows:

    EyeTexture* pEyeTexture[2] = { nullptr, nullptr };
    // ...
    // Draw into eye textures, then call Commit() on each of them
    // ...

    // Initialize our single full screen Fov layer.
    ovrLayerEyeFov ld = {};
    ld.Header.Type = ovrLayerType_EyeFov;
    ld.Header.Flags = 0;
    ld.SensorSampleTime = sensorSampleTime; // one value for the whole layer
    for (int eye = 0; eye < 2; ++eye)
    {
        ld.ColorTexture[eye] = pEyeTexture[eye]->TextureChain;
        ld.Viewport[eye] = eyeRenderViewport[eye]; // region of the eye texture that was rendered into
        ld.Fov[eye] = hmdDesc.DefaultEyeFov[eye];
        ld.RenderPose[eye] = EyeRenderPose[eye];
    }

    ovrLayerHeader* layers = &ld.Header;
    ovrResult result = ovr_SubmitFrame(session, frameIndex, nullptr, &layers, 1);
    // Check result with OVR_SUCCESS before continuing with the next frame.

After that, the finished image appears in the headset. To show in the application what the person in the headset sees, you can copy the contents of the mirror texture created earlier into the back buffer of the application window:

    ID3D11Texture2D* tex = nullptr;
    ovr_GetMirrorTextureBufferDX(session, mirrorTexture, IID_PPV_ARGS(&tex));
    pDXContext->CopyResource(backBufferTexture, tex);
    tex->Release(); // the call above added a reference to the texture

That's all. Many good examples can be found in the Oculus SDK.
