Autoencoders in Keras, Part 2: Manifold learning and latent variables

Contents

- Part 1: Introduction
- Part 2: Manifold learning and latent variables
- Part 3: Variational autoencoders (VAE)
- Part 4: Conditional VAE
- Part 5: GAN (Generative Adversarial Networks) and tensorflow
- Part 6: VAE + GAN

To better understand how autoencoders work, and to later generate something new from codes, it is worth figuring out what codes are and how they can be interpreted.

Manifold learning

Images of MNIST digits (the examples used in the previous part) are elements of a 784-dimensional space (28×28 pixels).

However, among all possible images, images of digits occupy only a negligible part; the overwhelming majority of images are just noise.

On the other hand, if we take an arbitrary image of a digit, then all images in some neighborhood of it can also be considered digits.

And if we take two arbitrary images of digits, then in the original 784-dimensional space we can most likely find a continuous curve such that every point along it can also be considered a digit (at least for images of digits with the same label). Combined with the previous remark, the same holds for every point in some region along that curve.

Thus, in the space of all images there is a subspace of lower dimension around which the images of digits are concentrated. That is, if our population is all images of digits that could in principle be drawn, then the probability density of encountering such a digit inside this region is much higher than outside it.

Autoencoders with code dimension k look for a k-dimensional manifold in object space that most fully captures all the variation in the sample. The code itself defines a parametrization of this manifold. The encoder maps an object to its parameter on the manifold, and the decoder maps a parameter to a point in object space.
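As a minimal sketch of this idea (not a learned model), take a curve whose parametrization is known in advance. The hand-written `decoder` and `encoder` below are illustrative assumptions: the decoder maps a one-dimensional code `t` to the point `(t, sin t)`, and the encoder returns the code of the nearest point on a dense grid of the curve.

```python
import numpy as np

# Hypothetical, hand-written stand-ins for a trained decoder/encoder.
def decoder(t):
    """Map a 1-D code t to a point on the manifold (t, sin t)."""
    t = np.asarray(t, dtype=float)
    return np.stack([t, np.sin(t)], axis=-1)

GRID = np.linspace(-3.0, 3.0, 6001)   # candidate codes
CURVE = decoder(GRID)                 # matching manifold points, shape (6001, 2)

def encoder(points):
    """Map each 2-D point to the code of its nearest point on the curve grid."""
    points = np.atleast_2d(points)
    # squared distances between every point and every grid point
    d2 = ((points[:, None, :] - CURVE[None, :, :]) ** 2).sum(axis=-1)
    return GRID[d2.argmin(axis=1)]

rng = np.random.default_rng(0)
t_true = np.linspace(-2.2, 2.2, 50)
noisy = decoder(t_true) + 0.06 * rng.standard_normal((50, 2))

codes = encoder(noisy)        # object -> parameter on the manifold
restored = decoder(codes)     # parameter -> point in object space

# Restored points lie on the curve: this gap is zero up to rounding
print(np.abs(restored[:, 1] - np.sin(restored[:, 0])).max())
```

The round trip `decoder(encoder(x))` snaps each noisy point back onto the curve, which is the behaviour one hopes a trained autoencoder approximates.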

The higher the code dimension, the more variation in the data the autoencoder can convey. If the code dimension is too small, the autoencoder will memorize something like an average over the missing variations in the given metric (this is one of the reasons MNIST digits become increasingly blurry as the code dimension of the autoencoder decreases).
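One way to see why missing variation turns into blur: under mean squared error, the best single reconstruction for a group of objects that the code cannot tell apart is their pixel-wise mean. A small sketch with synthetic one-dimensional "images" (the data is made up for illustration):

```python
import numpy as np

# Synthetic "images": a sharp bar of width 3 at varying positions in a strip of 20 pixels.
width, length = 3, 20
images = np.zeros((10, length))
for i, start in enumerate(range(4, 14)):
    images[i, start:start + width] = 1.0

def mse_to_all(candidate):
    """MSE of using one candidate as the reconstruction of every image."""
    return ((images - candidate) ** 2).mean()

mean_image = images.mean(axis=0)     # blurry: values strictly between 0 and 1
best_sharp = min(mse_to_all(img) for img in images)

print("MSE with the mean image:", mse_to_all(mean_image))
print("best MSE with any single sharp image:", best_sharp)
```

The mean image wins on MSE even though it looks like none of the sharp inputs, which is the blur seen in reconstructions from very small codes.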

To better understand what manifold learning is, let's create a simple two-dimensional dataset in the form of a curve plus noise and train an autoencoder on it.

Code and visualization

# Import the required libraries
import numpy as np
import matplotlib.pyplot as plt
%matplotlib inline
import seaborn as sns

# Create the dataset
x1 = np.linspace(-2.2, 2.2, 1000)
fx = np.sin(x1)
dots = np.vstack([x1, fx]).T
noise = 0.06 * np.random.randn(*dots.shape)
dots += noise

# Colored points for a separate visualization later
from itertools import cycle
size = 25
colors = ["r", "g", "c", "y", "m"]
idxs = range(0, x1.shape[0], x1.shape[0]//size)
vx1 = x1[idxs]
vdots = dots[idxs]

# Visualization
plt.figure(figsize=(12, 10))
plt.xlim([-2.5, 2.5])
plt.scatter(dots[:, 0], dots[:, 1])
plt.plot(x1, fx, color="red", linewidth=4)
plt.grid(False)

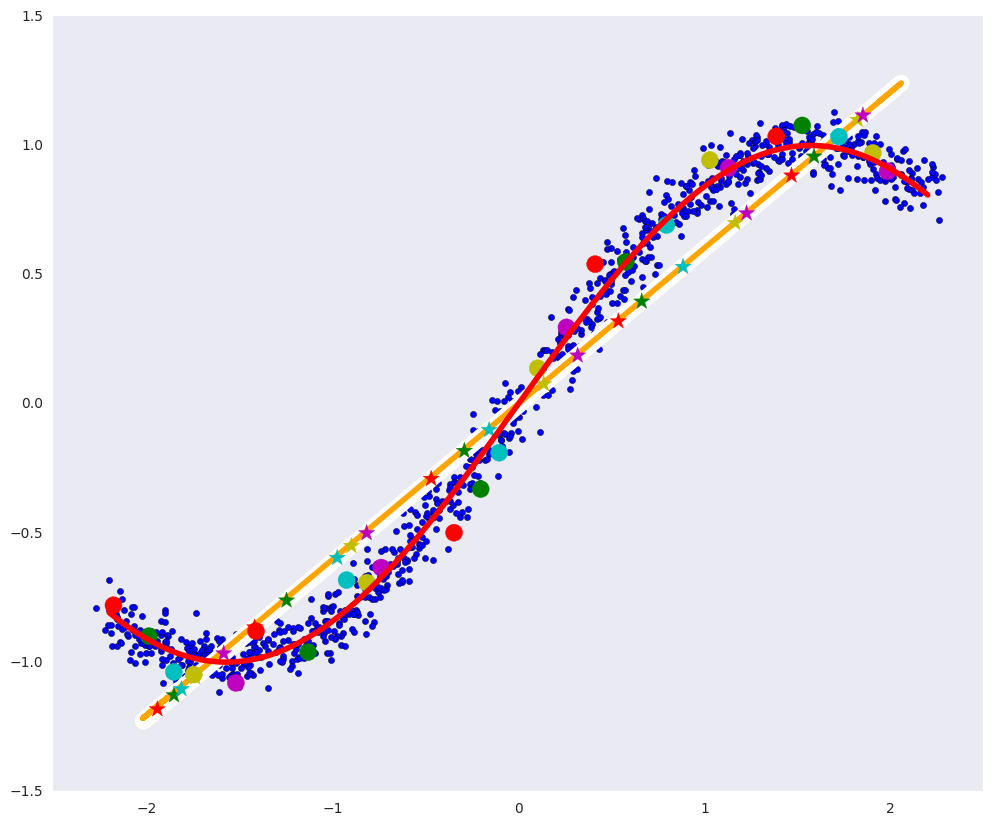

In the figure above, the blue points are the data and the red curve is the manifold that defines our data.

Linear compressing autoencoder

The simplest autoencoder is a two-layer compressing autoencoder with linear activation functions (with linear activations, more layers add nothing).

Such an autoencoder looks for an affine (linear with a shift) subspace in object space that describes the greatest variation in the objects. PCA (principal component analysis) does the same, and both find the same subspace.
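The claim can be checked numerically without training anything. The sketch below uses NumPy only and regenerates a similar dataset with a fixed seed (an assumption of this example). It computes the first principal direction with an SVD and checks that projecting onto it gives a reconstruction error no worse than projecting onto any other direction in a sweep. That minimum is what a linear autoencoder with MSE loss is trying to reach.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.linspace(-2.2, 2.2, 1000)
data = np.stack([x, np.sin(x)], axis=1) + 0.06 * rng.standard_normal((1000, 2))

mean = data.mean(axis=0)
centered = data - mean

# First principal direction = first right singular vector of the centered data
_, _, vt = np.linalg.svd(centered, full_matrices=False)
pc1 = vt[0]

def reconstruction_mse(direction):
    """Encode to a 1-D code along `direction`, decode back, return the MSE."""
    direction = direction / np.linalg.norm(direction)
    code = centered @ direction                    # linear "encoder"
    restored = mean + np.outer(code, direction)    # linear "decoder"
    return ((data - restored) ** 2).mean()

pca_mse = reconstruction_mse(pc1)
angles = np.linspace(0.0, np.pi, 181)
other_mse = [reconstruction_mse(np.array([np.cos(a), np.sin(a)])) for a in angles]

print("PCA direction MSE:", pca_mse)
print("best MSE over a sweep of directions:", min(other_mse))
```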

from keras.layers import Input, Dense
from keras.models import Model
from keras.optimizers import Adam

def linear_ae():
    input_dots = Input((2,))
    code = Dense(1, activation='linear')(input_dots)
    out = Dense(2, activation='linear')(code)

    ae = Model(input_dots, out)
    return ae

ae = linear_ae()
ae.compile(Adam(0.01), 'mse')
ae.fit(dots, dots, epochs=15, batch_size=30, verbose=0)

# Apply the linear autoencoder
pdots = ae.predict(dots, batch_size=30)
vpdots = pdots[idxs]

# Apply PCA
from sklearn.decomposition import PCA
pca = PCA(1)
pdots_pca = pca.inverse_transform(pca.fit_transform(dots))

Visualization

# Visualization
plt.figure(figsize=(12, 10))
plt.xlim([-2.5, 2.5])
plt.scatter(dots[:, 0], dots[:, 1], zorder=1)
plt.plot(x1, fx, color="red", linewidth=4, zorder=10)
plt.plot(pdots[:,0], pdots[:,1], color='white', linewidth=12, zorder=3)
plt.plot(pdots_pca[:,0], pdots_pca[:,1], color='orange', linewidth=4, zorder=4)
plt.scatter(vpdots[:,0], vpdots[:,1], color=colors*5, marker='*', s=150, zorder=5)
plt.scatter(vdots[:,0], vdots[:,1], color=colors*5, s=150, zorder=6)
plt.grid(False)

In the resulting figure:

- the white line is the manifold onto which the autoencoder maps the blue data points, that is, the autoencoder's attempt to build the manifold that captures the most variation in the data,
- the orange line is the manifold onto which PCA maps the blue data points,
- the colored circles are points that the autoencoder maps to the stars of the corresponding color,
- the colored stars are, accordingly, the images of the circles after the autoencoder.

An autoencoder that looks for linear dependencies may be less useful than one that can find arbitrary dependencies in the data. It would be useful if both the encoder and the decoder could approximate arbitrary functions. If we add at least one more layer of sufficient size to both the encoder and the decoder, with a nonlinear activation function between the layers, they will be able to find arbitrary dependencies.
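Why the nonlinearity matters can be seen directly: any stack of linear layers collapses into a single affine map, so extra linear layers add no expressive power. A small NumPy check with randomly chosen (illustrative) weights:

```python
import numpy as np

rng = np.random.default_rng(1)
W1, b1 = rng.standard_normal((2, 64)), rng.standard_normal(64)
W2, b2 = rng.standard_normal((64, 1)), rng.standard_normal(1)

def elu(v):
    """Exponential Linear Unit with alpha = 1."""
    return np.where(v > 0, v, np.exp(np.minimum(v, 0.0)) - 1.0)

x = rng.standard_normal((5, 2))

# Two linear layers ...
two_linear = (x @ W1 + b1) @ W2 + b2
# ... equal one affine layer with merged weights
W, b = W1 @ W2, b1 @ W2 + b2
one_linear = x @ W + b

# With ELU in between, the merged layer no longer reproduces the output
with_elu = elu(x @ W1 + b1) @ W2 + b2

print("two linear layers == one affine layer:", np.allclose(two_linear, one_linear))
print("ELU network == that affine layer:", np.allclose(with_elu, one_linear))
```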

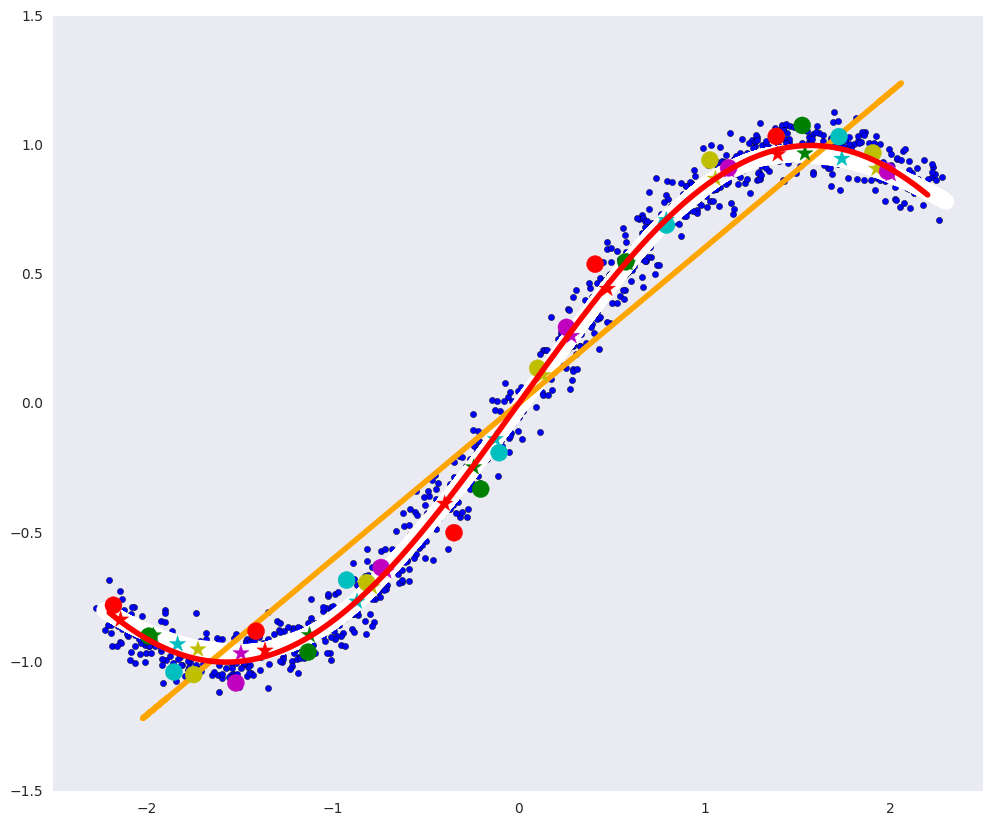

Deep autoencoder

A deep autoencoder has more layers and, most importantly, a nonlinear activation function between them (in our case ELU, the Exponential Linear Unit).

def deep_ae():
    input_dots = Input((2,))
    x = Dense(64, activation='elu')(input_dots)
    x = Dense(64, activation='elu')(x)
    code = Dense(1, activation='linear')(x)
    x = Dense(64, activation='elu')(code)
    x = Dense(64, activation='elu')(x)
    out = Dense(2, activation='linear')(x)

    ae = Model(input_dots, out)
    return ae

dae = deep_ae()
dae.compile(Adam(0.003), 'mse')
dae.fit(dots, dots, epochs=200, batch_size=30, verbose=0)

pdots_d = dae.predict(dots, batch_size=30)
vpdots_d = pdots_d[idxs]

Visualization

# Visualization
plt.figure(figsize=(12, 10))
plt.xlim([-2.5, 2.5])
plt.scatter(dots[:, 0], dots[:, 1], zorder=1)
plt.plot(x1, fx, color="red", linewidth=4, zorder=10)
plt.plot(pdots_d[:,0], pdots_d[:,1], color='white', linewidth=12, zorder=3)
plt.plot(pdots_pca[:,0], pdots_pca[:,1], color='orange', linewidth=4, zorder=4)
plt.scatter(vpdots_d[:,0], vpdots_d[:,1], color=colors*5, marker='*', s=150, zorder=5)
plt.scatter(vdots[:,0], vdots[:,1], color=colors*5, s=150, zorder=6)
plt.grid(False)

This autoencoder managed to build the defining manifold almost perfectly: the white curve nearly coincides with the red one.

In theory, a deep autoencoder can find a manifold of arbitrary complexity, for example the one near which the digits lie in the 784-dimensional space.

If we take two objects and look at the objects lying on an arbitrary curve between them, the intermediate objects will most likely not belong to the population, because the manifold on which the population lies may be highly curved and low-dimensional.

Let's return to the handwritten digits dataset from the previous part.

First, we move along a straight line in the space of digits from one 8 to another:

Code

from keras.layers import Conv2D, MaxPooling2D, UpSampling2D
from keras.datasets import mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.
x_train = np.reshape(x_train, (len(x_train), 28, 28, 1))
x_test = np.reshape(x_test, (len(x_test), 28, 28, 1))

# Convolutional autoencoder
def create_deep_conv_ae():
    input_img = Input(shape=(28, 28, 1))

    x = Conv2D(128, (7, 7), activation='relu', padding='same')(input_img)
    x = MaxPooling2D((2, 2), padding='same')(x)
    x = Conv2D(32, (2, 2), activation='relu', padding='same')(x)
    x = MaxPooling2D((2, 2), padding='same')(x)
    encoded = Conv2D(1, (7, 7), activation='relu', padding='same')(x)

    # At this point the representation is (7, 7, 1), i.e. 49-dimensional

    input_encoded = Input(shape=(7, 7, 1))
    x = Conv2D(32, (7, 7), activation='relu', padding='same')(input_encoded)
    x = UpSampling2D((2, 2))(x)
    x = Conv2D(128, (2, 2), activation='relu', padding='same')(x)
    x = UpSampling2D((2, 2))(x)
    decoded = Conv2D(1, (7, 7), activation='sigmoid', padding='same')(x)

    # Models
    encoder = Model(input_img, encoded, name="encoder")
    decoder = Model(input_encoded, decoded, name="decoder")
    autoencoder = Model(input_img, decoder(encoder(input_img)), name="autoencoder")
    return encoder, decoder, autoencoder

c_encoder, c_decoder, c_autoencoder = create_deep_conv_ae()
c_autoencoder.compile(optimizer='adam', loss='binary_crossentropy')

c_autoencoder.fit(x_train, x_train,
                  epochs=50,
                  batch_size=256,
                  shuffle=True,
                  validation_data=(x_test, x_test))

def plot_digits(*args):
    # Each argument is a batch of images; each batch is drawn as one row
    args = [x.squeeze() for x in args]
    n = min([x.shape[0] for x in args])

    plt.figure(figsize=(2*n, 2*len(args)))
    for j in range(n):
        for i in range(len(args)):
            ax = plt.subplot(len(args), n, i*n + j + 1)
            plt.imshow(args[i][j])
            plt.gray()
            ax.get_xaxis().set_visible(False)
            ax.get_yaxis().set_visible(False)

    plt.show()

# Homotopy along a straight line between objects or between codes
def plot_homotopy(frm, to, n=10, decoder=None):
    z = np.zeros(([n] + list(frm.shape)))
    for i, t in enumerate(np.linspace(0., 1., n)):
        z[i] = frm * (1-t) + to * t
    if decoder is not None:
        plot_digits(decoder.predict(z, batch_size=n))
    else:
        plot_digits(z)

# Homotopy between two eights from the test set
frm, to = x_test[y_test == 8][1:3]
plot_homotopy(frm, to)

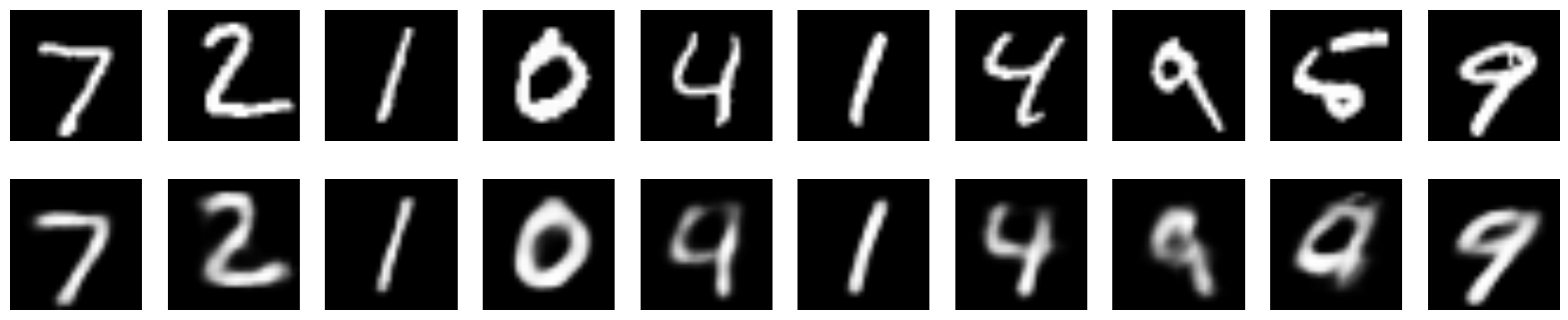

If instead we move along a curve between the codes (and if the code manifold is well parametrized), the decoder will map this curve from code space to a curve in object space that does not leave the defining manifold. That is, the intermediate objects on the curve will belong to the population.
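The difference between the two kinds of interpolation is easy to reproduce on a toy manifold. In this sketch the manifold is the unit circle and the "decoder" is a hand-written map from an angle to a point. Both are assumptions chosen for illustration, not the convolutional model from this article.

```python
import numpy as np

def decoder(theta):
    """Toy decoder: 1-D code (angle) -> point on the unit circle."""
    theta = np.asarray(theta, dtype=float)
    return np.stack([np.cos(theta), np.sin(theta)], axis=-1)

code_a, code_b = 0.0, 0.9 * np.pi
obj_a, obj_b = decoder(code_a), decoder(code_b)

ts = np.linspace(0.0, 1.0, 10)[:, None]

# Straight line between the objects themselves
line_in_object_space = obj_a * (1 - ts) + obj_b * ts
# Straight line between the codes, then decode
line_in_code_space = decoder(code_a * (1 - ts[:, 0]) + code_b * ts[:, 0])

# Distance from the origin: 1 means "on the manifold"
radius_object = np.linalg.norm(line_in_object_space, axis=1)
radius_code = np.linalg.norm(line_in_code_space, axis=1)

print("radii along the object-space line:", radius_object.round(2))
print("radii along the decoded code-space line:", radius_code.round(2))
```

The object-space line cuts through the inside of the circle, while every decoded point from the code-space line stays on it.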

codes = c_encoder.predict(x_test[y_test == 8][1:3])
plot_homotopy(codes[0], codes[1], n=10, decoder=c_decoder)

The intermediate digits are perfectly good eights.

Thus we can say that the autoencoder has learned the shape of the defining manifold, at least locally.

Overfitting an autoencoder

For an autoencoder to learn to extract complex patterns, the capacity of the encoder and decoder must be limited. Otherwise even an autoencoder with a one-dimensional code can simply draw a one-dimensional curve through every point in the training set, i.e. just memorize each object. But this complicated manifold built by the autoencoder will have little in common with the manifold that defines the population.
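Here is a toy version of this failure mode. It assumes piecewise-linear interpolation as a stand-in for a decoder with unlimited capacity (it is not the autoencoder used in this article). The curve passes through every noisy training point exactly, yet it deviates from the true curve that generated the data.

```python
import numpy as np

rng = np.random.default_rng(0)

# 25 noisy training points around y = sin(x)
x_train = np.linspace(-2.2, 2.2, 25)
y_train = np.sin(x_train) + 0.06 * rng.standard_normal(25)

def memorizing_curve(x):
    """Passes exactly through every training point (piecewise linear)."""
    return np.interp(x, x_train, y_train)

# Error on the training points: everything is memorized
train_error = np.abs(memorizing_curve(x_train) - y_train).max()

# Deviation from the true manifold on a dense grid
x_dense = np.linspace(-2.2, 2.2, 1000)
manifold_error = np.abs(memorizing_curve(x_dense) - np.sin(x_dense)).max()

print("max error on training points:", train_error)
print("max deviation from the true curve:", manifold_error)
```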

Let's take the same problem with artificial data, train the same deep autoencoder on a very small subset of points, and look at the resulting manifold:

Code

dae = deep_ae()
dae.compile(Adam(0.0003), 'mse')
x_train_oft = np.vstack([dots[idxs]]*4000)
dae.fit(x_train_oft, x_train_oft, epochs=200, batch_size=15, verbose=1)

pdots_d = dae.predict(dots, batch_size=30)
vpdots_d = pdots_d[idxs]

plt.figure(figsize=(12, 10))
plt.xlim([-2.5, 2.5])
plt.scatter(dots[:, 0], dots[:, 1], zorder=1)
plt.plot(x1, fx, color="red", linewidth=4, zorder=10)
plt.plot(pdots_d[:,0], pdots_d[:,1], color='white', linewidth=6, zorder=3)
plt.plot(pdots_pca[:,0], pdots_pca[:,1], color='orange', linewidth=4, zorder=4)
plt.scatter(vpdots_d[:,0], vpdots_d[:,1], color=colors*5, marker='*', s=150, zorder=5)
plt.scatter(vdots[:,0], vdots[:,1], color=colors*5, s=150, zorder=6)
plt.grid(False)

We can see that the white curve passes through every data point it was trained on and bears little resemblance to the red curve that defines the data: this is typical overfitting.

Latent variables

We can view the population as a data-generating process in which the appearance of each digit depends on a number of hidden factors, such as:

- the intended digit,
- the stroke thickness,
- the slant of the digit,
- neatness,
- and so on.

Each of these factors has its own prior distribution. For example, whether an eight will be drawn follows a Bernoulli distribution with probability 1/10. The stroke thickness also has some distribution of its own and may depend both on neatness and on its own latent variables, such as the thickness of the pen or the temperament of the writer (again, each with its own distribution).
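This generative story can be written down as ancestral sampling. Every distribution and constant in this sketch is a made-up assumption for illustration (only the 1/10 probability of a given digit comes from the text). The point is the structure: latent factors are sampled first, some of them depending on others, and an image would then be drawn given these factors.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

# Latent factors (all distributions below are illustrative assumptions)
digit = rng.integers(0, 10, size=n)                # each digit with probability 1/10
neatness = rng.beta(2.0, 2.0, size=n)              # 0 = sloppy, 1 = neat
pen_width = rng.choice([1.0, 1.5, 2.0], size=n)    # a hidden factor behind thickness
# Stroke thickness depends on neatness and pen width, plus its own randomness
thickness = pen_width * (1.5 - 0.5 * neatness) + 0.1 * rng.standard_normal(n)
slant = rng.normal(0.0, 10.0, size=n)              # degrees

is_eight = digit == 8                              # Bernoulli(1/10) indicator
print("share of eights:", is_eight.mean())
print("corr(thickness, neatness):", np.corrcoef(thickness, neatness)[0, 1])
```

Because thickness is sampled from neatness and pen width, the factors are not independent of each other, which is exactly the situation described above.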

During training the autoencoder has to arrive at latent factors on its own: for example, ones like those listed above, some complex combinations of them, or entirely different ones. However, the joint distribution it learns does not have to be simple; it can be some complicated curved region. (The decoder can be given values from outside this region, but the results will then come not from the defining manifold but from an arbitrary continuous extension of it.)

This is exactly why we cannot simply generate new objects from arbitrary random codes.

For definiteness, let's introduce some notation, using digits as the example:

- $X$: the random variable of a 28×28 image,
- $Z$: the random variable of the latent factors that determine the digit in the image,
- $P(X)$: the probability distribution of digit images, i.e. the probability that a particular image of a digit would be drawn at all (if an image does not look like a digit, this probability is extremely small),
- $P(Z)$: the probability distribution of the latent factors, for example the distribution of stroke thickness,
- $P(Z|X)$: the distribution of the latent factors given an image (different combinations of latent variables and noise can lead to the same image),
- $P(X|Z)$: the distribution of images given the latent factors; the same factors can lead to different images (the same person under the same conditions does not draw exactly identical digits),
- $P(X, Z)$: the joint distribution of $X$ and $Z$, the most complete description of the data, which is what is needed to generate new objects.
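How these distributions relate can be illustrated with a tiny discrete example. The numbers in the table are invented. The relations themselves are the standard sum and product rules: $P(X) = \sum_Z P(X, Z)$, $P(X, Z) = P(X|Z)P(Z)$ and $P(Z|X) = P(X, Z)/P(X)$.

```python
import numpy as np

# Invented joint distribution P(X, Z): rows are 3 values of X, columns are 2 values of Z
p_xz = np.array([[0.30, 0.05],
                 [0.10, 0.15],
                 [0.10, 0.30]])

p_x = p_xz.sum(axis=1)                # P(X): marginalize over Z
p_z = p_xz.sum(axis=0)                # P(Z): marginalize over X
p_x_given_z = p_xz / p_z              # P(X|Z): each column sums to 1
p_z_given_x = p_xz / p_x[:, None]     # P(Z|X): each row sums to 1

# Generating a new object: first Z ~ P(Z), then X ~ P(X|Z)
rng = np.random.default_rng(0)
z = rng.choice(2, p=p_z)
x = rng.choice(3, p=p_x_given_z[:, z])
print("sampled z, x:", z, x)
```

Knowing the joint table is enough to recover every other distribution in the list, which is why it is the most complete description of the data.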

Let's see how the latent variables are distributed in an ordinary autoencoder:

Code

from keras.layers import Flatten, Reshape
from keras.regularizers import L1L2

def create_deep_sparse_ae(lambda_l1):
    # Dimension of the encoded representation
    encoding_dim = 16

    # Encoder
    input_img = Input(shape=(28, 28, 1))
    flat_img = Flatten()(input_img)
    x = Dense(encoding_dim*4, activation='relu')(flat_img)
    x = Dense(encoding_dim*3, activation='relu')(x)
    x = Dense(encoding_dim*2, activation='relu')(x)
    encoded = Dense(encoding_dim, activation='linear', activity_regularizer=L1L2(lambda_l1, 0))(x)

    # Decoder
    input_encoded = Input(shape=(encoding_dim,))
    x = Dense(encoding_dim*2, activation='relu')(input_encoded)
    x = Dense(encoding_dim*3, activation='relu')(x)
    x = Dense(encoding_dim*4, activation='relu')(x)
    flat_decoded = Dense(28*28, activation='sigmoid')(x)
    decoded = Reshape((28, 28, 1))(flat_decoded)

    # Models
    encoder = Model(input_img, encoded, name="encoder")
    decoder = Model(input_encoded, decoded, name="decoder")
    autoencoder = Model(input_img, decoder(encoder(input_img)), name="autoencoder")
    return encoder, decoder, autoencoder

encoder, decoder, autoencoder = create_deep_sparse_ae(0.)
autoencoder.compile(optimizer=Adam(0.0003), loss='binary_crossentropy')

autoencoder.fit(x_train, x_train,
                epochs=100,
                batch_size=64,
                shuffle=True,
                validation_data=(x_test, x_test))

n = 10
imgs = x_test[:n]
decoded_imgs = autoencoder.predict(imgs, batch_size=n)

plot_digits(imgs, decoded_imgs)

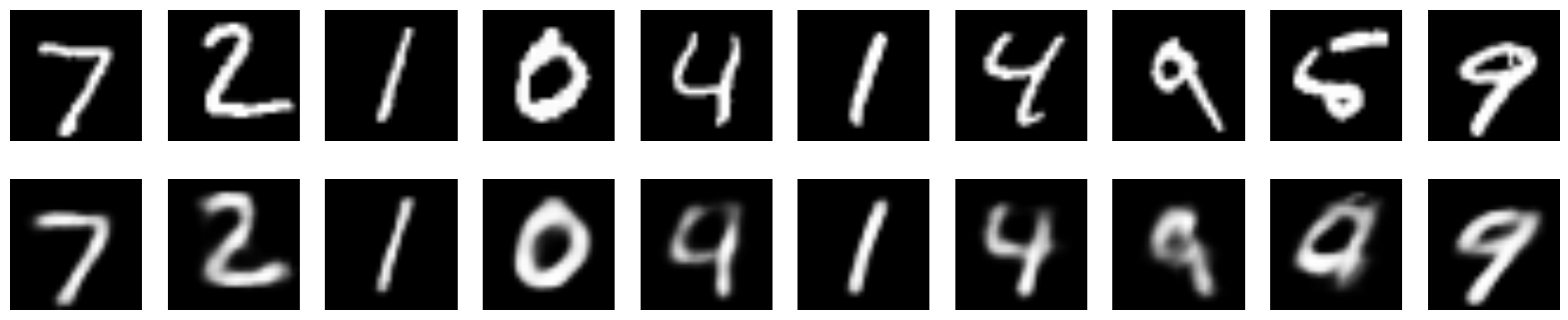

This is what the images reconstructed by this autoencoder look like (originals in the top row, reconstructions in the bottom row).

Joint distribution of latent variables

codes = encoder.predict(x_test)
# Keyword arguments: recent seaborn versions no longer accept x and y positionally
sns.jointplot(x=codes[:, 1], y=codes[:, 3])

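The plot shows the joint distribution $P(Z_1, Z_3)$ of two components of the code (columns 1 and 3 of `codes`). As noted above, nothing obliges it to have a simple shape.

Is there a way to control the distribution of the latent variables $P(Z)$?

The simplest way is to add a regularizer on the values of $Z$, such as the L1 activity penalty on the code layer in `create_deep_sparse_ae` above.

The regularizer pushes the autoencoder to look for latent variables distributed according to the desired law; whether it succeeds is another question. However, it does nothing to make them independent, i.e. $P(Z_i)$ may still differ from $P(Z_i | Z_j)$.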
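The link between a regularizer and a desired distribution can be made concrete. Assuming the usual maximum a posteriori reading of a penalty, an L1 term on the code equals, up to a constant, the negative log-density of Laplace-distributed components (an L2 term plays the same role for a normal distribution). The sketch below checks the L1 case numerically; the scale `b` and the sample codes are arbitrary illustrative values.

```python
import numpy as np

def laplace_neg_log_density(z, b):
    """-log p(z) for zero-mean Laplace components with scale b, summed over components."""
    return (np.abs(z) / b + np.log(2.0 * b)).sum(axis=-1)

def l1_penalty(z, lam):
    """L1 activity penalty with coefficient lam, summed over components."""
    return lam * np.abs(z).sum(axis=-1)

rng = np.random.default_rng(0)
codes = rng.standard_normal((4, 16))    # four made-up 16-dimensional codes
b = 2.0
lam = 1.0 / b                           # penalty strength implied by the prior scale

# The two quantities differ only by a constant that does not depend on the code
difference = laplace_neg_log_density(codes, b) - l1_penalty(codes, lam)
print(difference)
```

The penalty treats each component separately, and, as the text says, that alone does not make the learned components independent.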

Let's look at the joint distribution of the latent parameters in a sparse autoencoder.

Code and visualization

s_encoder, s_decoder, s_autoencoder = create_deep_sparse_ae(0.00001)
s_autoencoder.compile(optimizer=Adam(0.0003), loss='binary_crossentropy')

s_autoencoder.fit(x_train, x_train, epochs=200, batch_size=256, shuffle=True,
                  validation_data=(x_test, x_test))

imgs = x_test[:n]
decoded_imgs = s_autoencoder.predict(imgs, batch_size=n)
plot_digits(imgs, decoded_imgs)

codes = s_encoder.predict(x_test)
# Keyword arguments: recent seaborn versions no longer accept x and y positionally
sns.jointplot(x=codes[:, 1], y=codes[:, 3])

How to control the latent space so that meaningful images can be generated from it is the subject of the next part, on variational autoencoders (VAE).

Useful links and literature

This post is based on the chapter on autoencoders (in particular the subsection Learning Manifolds with Autoencoders) in the Deep Learning Book.
