Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

По всем вопросам о работе данного сервиса обращаться со страницы контактной информации.

[Обновить трансляцию]

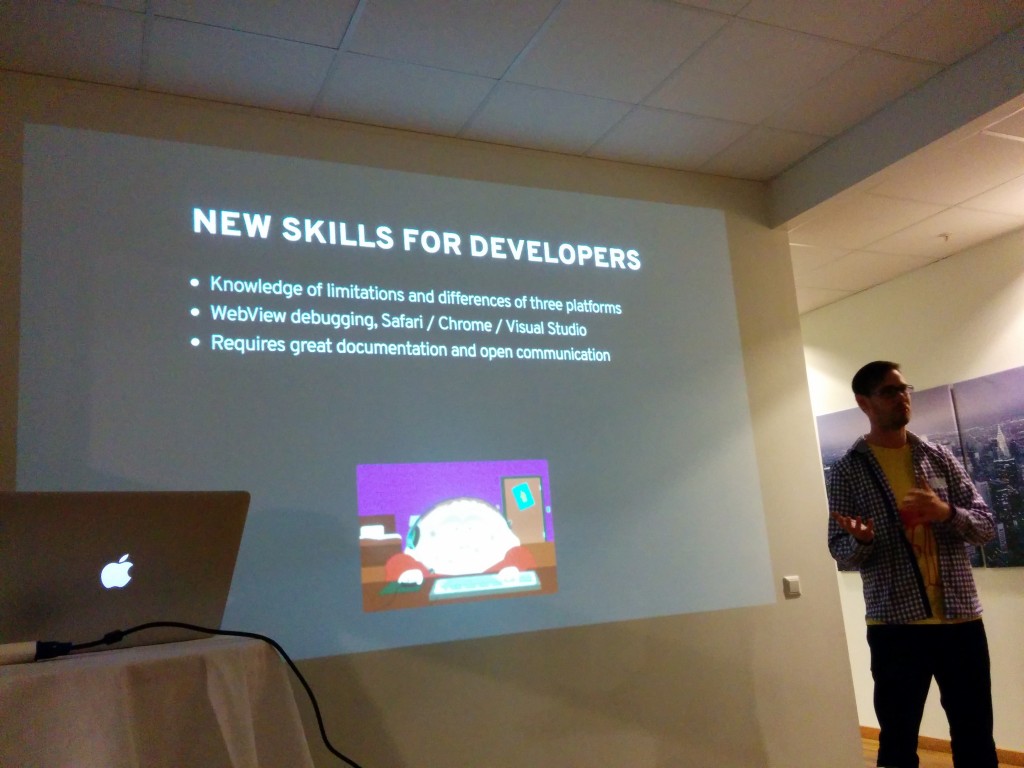

Christian Heilmann: [review] Hybrid and future web meetup at Jayway in Stockholm, Sweden |

Yesterday evening I went to Hybrid and Future Web Meetup at JayWay in Stockholm, Sweden.

The three hour meetup was an informal meeting with 3 speakers, great catering and drinks and some very interesting topics:

Andreas Hassellöf of Nordnet and Gustaf Nilsson Kotte of Jayway showed how they built the Nordnet banking app in a hybrid way. The interesting parts to me were that they used Angular for the main app but native controls for the navigation elements of the UI to get the highest possible fidelity. They also created a messaging bus between the different parts using a pub/sub model for local storage. They are right now trying to find ways to open source their findings and I would love to see that get out. The other very interesting part of this talk was how they used Crosswalk to inject a more modern Chromium into legacy Android devices to get much better performance.

Anders Janmyr was next with a solid overview and live demo of Firefox OS app creation, distribution and debugging. Anders works for Jayway on Sony projects and it was interesting to see someone not from Mozilla or mobile partners talk about the topic I normally cover.

I closed the evening with a kind of preview of one of my sessions at Oredev tomorrow. In it, I talk about “modern” browser features that to me should be a “given” but got forgotten as we promised them too early to developers and legacy browsers did not support them. The slides are on Slideshare.

The unedited, raw screencast of my presentation is on YouTube (I say that because I made some mistakes in it – for example I bollocksed up the child selector explanation and one code example was missing a property).

This was the first time Jayway ran an event like this and I thoroughly enjoyed it. A good “after work” experience. Moar!

|

|

Mozilla Reps Community: Reps of the Month: October 2014 |

October has been an amazing month full of a lot of activities from our fellow Reps. For that reason this month we have two Reps of the month, congratulations!

Robert Sayles

Robbie has been leading volunteers at MozFest for the second year. He is the personification of what Reps can do, be and influence. Robbie was one of the hardest working Reps at MozFest, and his help went above and beyond: there were about 50% fewer volunteers than expected and he was on top of that, with a smile, always.

Jefferson Duran

While we had a lot of Reps attending MozFest in London this year, there were others doing amazing work in their home countries as well. During September and October, Jeff traveled around Colombia hosting Webmaker parties non-stop. The amount of work he has put into teaching students and people around his country how to be a Maker is just amazing.

Don’t forget to congratulate them on Discourse!

https://blog.mozilla.org/mozillareps/2014/11/04/reps-of-the-month-october-2014/

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1090175] The size check for input

- [1088253] GET REST calls should allow arbitrary URL parameters to be passed in addition to the values in the path

- [1092037] add the ability for administrators to limit the number of emails sent to a user per minute and hour

- [1092949] bugmail failing with “utf8 "\x82" does not map to Unicode at /usr/lib64/perl5/Encode.pm line 174”

- [1090427] Login form lacks CSRF protection

- [1091149] Use of uninitialized value in string ne warnings from BugmailFilter extension

- [1083876] create a bugzilla template for whitelist applications

- [1093450] Sort by vote isn’t sorting correctly

note: there was an issue with sending of encrypted email to recipients with s/mime keys. this resulted in a large backlog of emails which was cleared following today’s push.

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/11/04/happy-bmo-push-day-117/

|

|

Eric Shepherd: The fast and the frustrating |

I love working at Mozilla.

I love the rapid progress we’re making in building a better Web for the future. I love that the documentation I help to produce makes it possible not only for current experts to use technology to create new things, but for kids to turn into the experts of the future. I love that, as I describe what I do to children, I teach programmers how to program new things.

I love the amazing amount of stuff there is to work on. I love the variety and the fun assortment of things to choose from as I look for the next thing to write about.

I love working in an organization where individual achievement can be had while at the same time being a team player, striving not to rake in dollars for the company, but to make the world a better place through technology and knowledge exchange.

It’s frustrating how fast things are changing. It’s frustrating to finish documentation for a technology only to almost immediately discover that this technology is about to be deprecated. It’s frustrating to know that the software and APIs we create can be used by bad people to do bad things if we make mistakes. It’s frustrating that my job keeps me from having as much free time as I’d like to have to work on my own projects.

It’s frustrating that I’m constantly on the brink of being totally overwhelmed by the frighteningly long list of things that need to be done. It’s frustrating knowing that my priorities will change before the end of each day. It’s frustrating how often something newly urgent comes up that needs to be dealt with right away.

It’s frustrating that no matter how hard I try, there are always people I’m never quite able to reach, or whom I simply can’t interface with for some reason.

There’s a lot to be frustrated about as a Mozillian. But being part of this wild, crazy adventure in software engineering (or, in my case, developer documentation) is (so far, at least!) worth the frustration.

I love being a Mozillian.

https://www.bitstampede.com/2014/11/04/the-fast-and-the-frustrating/

|

|

Nigel Babu: Weird IE8 error. Nginx to the rescue! |

As a server side developer, I don’t run into IE-specific errors very often. Last month, I ran into a very specific error, which is spectacular by itself. IE8 does not like downloads served over HTTPS with cache control headers. The client has plenty of IE8 users and preferred we serve over HTTP for IE8 so that the site worked for sure.

Nginx has a very handy module called ngx_http_browser_module to help! All I needed was fewer than 10 lines of Nginx config.

    location / {
        # every browser is to be considered modern
        modern_browser unlisted;

        # these particular browsers are ancient
        ancient_browser "MSIE 6.0" "MSIE 7.0" "MSIE 8.0";

        # redirect to HTTP if ancient
        if ($ancient_browser) {
            return 301 http://$server_name$request_uri;
        }

        # handle requests that are not redirected
        proxy_pass http://127.0.0.1:8080;
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-Proto $scheme;
    }

Yet another day I’m surprised by Nginx :)

http://nigelb.me/2014-11-04-ie8-ssl-woes-and-nginx-superhero.html

|

|

Rizky Ariestiyansyah: November Task Application Curation Board is Ready |

Woohoo, November is here and the tasks for the application curation board at Mozilla Marketplace are ready. Last month (October) we worked on featured apps for specific themes such as Action Games, Relaxing Apps and Seasonal Shopping applications, and all the nominated apps were featured in October.

Have you visited Marketplace lately to see the app nominations in the spotlight? We just refreshed the ever-present “Great Games” collection, and a lot of apps populate the recent “Productivity” and “Creativity” collections. Now we move, move and move to prepare next month's featured applications on Firefox Marketplace, aaaaandddd….

The November tasks are:

- Joshua Smith recommended we do a “Winter Games” collection. This is a good idea!

- “Holiday Apps” collection, this can be any kind of app related to December holidays (aka Christmas, Hannukah). This could be games, religious apps, or anything else related to the festive season.

- “Strategy Games” should comprise games that focus specifically on the strategy genre, such as turn-based strategy games, war games, board games, etc. I realize all games require a certain level of strategy, but in this case we’re talking about specific games where the primary emphasis is strategy, as opposed to action or puzzle games which may require elements of strategy.

OK, those are all the tasks for this month. We are trying to serve better featured apps on Firefox Marketplace. Cheers, and let’s do something wonderful for the Open Web.

The post November Task Application Curation Board is Ready appeared first on oonlab.

|

|

Karl Dubost: The Message, The Trigger And The Ugly: Web Agencies Priorities And Skills |

The Message

In my ephemeral inconsequent blurbs of expressions, I recently tweeted:

There is a little bit inside me which is dying when I notice a Web agency releasing a new site without a proper Web optimization.

Action. Reaction. Alexa took me by surprise.

@karlpro sounds like the beginning of a blog post

And there is this deep feeling that I should have just shut up and probably not write this. But oh well. So let's see if I can make my life more miserable.

The Trigger

The tweet was triggered by a Web agency releasing their new Web site. Beautiful design, typography, responsive and, for bonus points of geekiness, baked and not fried, which basically means that it is managed in a system which creates static pages. Cool thing. I navigated the site and it all seemed cool. Curious as I am, I started to look under the hood. I opened the browser developer tools and wandered to the Network panel. Oops. A homepage with plenty of images and assets which reaches 2 MB and has no caching at all. Zero. Here you can insert a deep feeling of sadness sinking in. Yeah I know Halloween was so last week, but you know.

The Ugly: Web Agencies Priorities And Skills

Alexa's message helped me to go a bit further and go over my sadness.

What I think was important to me was not necessarily understood by the people releasing this Web site. I know the people behind this Web site. They are nice persons with excellent skills in design and typography applied to the Web. They have developed an excellence, an expertise in it. And what I could see was not necessarily visible to them. The same way you can be listening to a piece of music and not grasp certain subtleties because you have not been educated to do so (my case). I would probably miss an incongruity in typography or an imbalance in the layout.

It's one of the realities of our business. We are not omniscient, specifically in the context of Web agencies. The issue is even more acute for small Web agencies. Put together a group of friends with a shared core of interests and sensibility, decide to start a business around your skills, et voilà, you get a Web agency which starts delivering "interactive paper online" or a "performing hypermedia engine" or an "accessible transcript of a story".

Some Web agencies will focus on layout, some on Web mechanics, some on accessibility, and all of them will further entrench their competencies into the core set they have developed. It takes a lot of effort, translation and education to understand the other aspects of a project. And that costs money, which is sometimes not good for the business, specifically for small Web agencies.

The Web ecosystem being very tolerant of mistakes (and it's partly why it is so resilient), sometimes a Web site is just really beautiful paper pushed from one place to another in the form of bits using HTTP (the transport) without really using HTTP (the protocol).

I'm not necessarily sure how to deal with this issue, or even have the start of an idea for solving it. But there is a little voice inside me saying that people should sometimes reduce their expectations of excellence on some parts of the project to leave room (time and money) to address the other parts.

There are things in our daily life which seem obvious and become less so on the Web. Do I want to make the perfect layout for my shop window with beautiful lettering and colors so my customers are attracted tenfold? Or do I want to spend all my time on studying the entrance so it is large enough for wheelchairs, that the opening time is written in braille on the door and so on? Or do I want to spend all my time on using the best materials for a solid and perfectly built architecture?

Obviously, none of each, but some of all these. We understand these choices better. We grasp them because they are physical. We need to have a better understanding of integrated design. One where we understand that a Web project is made of a lot of small things.

Leave room for other things than your core competencies.

Postface

Now, do I contact my friend in this Web agency to explain how to fix it? Choose your own pain. Choose your own battle. We will see.

Otsukare.

http://www.otsukare.info/2014/11/04/web-agencies-priorities-skills

|

|

Nicholas Nethercote: Please grow your buffers exponentially |

If you record every heap allocation and re-allocation done by Firefox you find some interesting things. In particular, you find some sub-optimal buffer growth strategies that cause a lot of heap churn.

Think about a data structure that involves a contiguous, growable buffer, such as a string or a vector. If you append to it and it doesn’t have enough space for the appended elements, you need to allocate a new buffer, copy the old contents to the new buffer, and then free the old buffer. realloc() is usually used for this, because it does these three steps for you.

The crucial question: when you have to grow a buffer, how much do you grow it? One obvious answer is “just enough for the new elements”. That might seem space-efficient at first glance, but if you have to repeatedly grow the buffer it can quickly turn bad.

Consider a simple but not outrageous example. Imagine you have a buffer that starts out 1 byte long and you add single bytes to it until it is 1 MiB long. If you use the “just-enough” strategy you’ll cumulatively allocate this much memory:

1 + 2 + 3 + … + 1,048,575 + 1,048,576 = 549,756,338,176 bytes

Ouch. O(n²) behaviour really hurts when n gets big enough. Of course the peak memory usage won’t be nearly this high, but all those reallocations and copying will be slow.

In practice it won’t be this bad because heap allocators round up requests, e.g. if you ask for 123 bytes you’ll likely get something larger like 128 bytes. The allocator used by Firefox (an old, extensively-modified version of jemalloc) rounds up all requests between 4 KiB and 1 MiB to the nearest multiple of 4 KiB. So you’ll actually allocate approximately this much memory:

4,096 + 8,192 + 12,288 + … + 1,044,480 + 1,048,576 = 134,742,016 bytes

(This ignores the sub-4 KiB allocations, which in total are negligible.) Much better. And if you’re lucky the OS’s virtual memory system will do some magic with page tables to make the copying cheap. But still, it’s a lot of churn.
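The two sums above can be checked with a few lines of C++. This is only an illustrative sketch: the function name `cumulative_linear` and its parameters are made up for this example and do not come from Firefox or jemalloc.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: total bytes requested when a buffer grows from
// `start` bytes to `final_size` bytes, adding `step` bytes on each growth.
// Every intermediate size counts once, because each growth requests a
// whole new buffer of that size.
std::uint64_t cumulative_linear(std::uint64_t start, std::uint64_t step,
                                std::uint64_t final_size) {
    assert(start > 0 && step > 0);
    std::uint64_t total = 0;
    for (std::uint64_t size = start; size <= final_size; size += step) {
        total += size;
    }
    return total;
}
```

Calling `cumulative_linear(1, 1, 1048576)` reproduces the byte-at-a-time total, and `cumulative_linear(4096, 4096, 1048576)` reproduces the total with 4 KiB rounding (again ignoring the sub-4 KiB requests).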

A strategy that is usually better is exponential growth. Doubling the buffer each time is the simplest strategy:

4,096 + 8,192 + 16,384 + 32,768 + 65,536 + 131,072 + 262,144 + 524,288 + 1,048,576 = 2,093,056 bytes

That’s more like it; the cumulative size is just under twice the final size, and the series is short enough now to write it out in full, which is nice — calls to malloc() and realloc() aren’t that cheap because they typically require acquiring a lock. I particularly like the doubling strategy because it’s simple and it also avoids wasting usable space due to slop.
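As a rough illustration of the doubling strategy, here is a minimal growable byte buffer built on `realloc()`. The `ByteBuffer` type and the `append_byte` and `release` functions are hypothetical names invented for this sketch; they are not how nsTArray, XDRBuffer or any other Firefox class is actually written.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Hypothetical growable byte buffer; all names are illustrative only.
struct ByteBuffer {
    unsigned char* data = nullptr;
    std::size_t length = 0;         // bytes in use
    std::size_t capacity = 0;       // bytes allocated
    std::size_t reallocations = 0;  // number of times the buffer grew
};

// Appends one byte, doubling the capacity whenever the buffer is full.
// Returns false if allocation fails, leaving the buffer unchanged.
bool append_byte(ByteBuffer& buf, unsigned char byte) {
    assert(buf.length <= buf.capacity);
    if (buf.length == buf.capacity) {
        std::size_t new_capacity = buf.capacity == 0 ? 1 : buf.capacity * 2;
        // realloc() allocates the new block, copies the old contents,
        // and frees the old block in one call.
        void* grown = std::realloc(buf.data, new_capacity);
        if (grown == nullptr) {
            return false;
        }
        buf.data = static_cast<unsigned char*>(grown);
        buf.capacity = new_capacity;
        ++buf.reallocations;
    }
    buf.data[buf.length++] = byte;
    return true;
}

// Frees the storage and resets the buffer to its empty state.
void release(ByteBuffer& buf) {
    std::free(buf.data);
    buf = ByteBuffer{};
}
```

With this policy, appending 1 MiB one byte at a time grows the buffer only 21 times (capacities 1, 2, 4, …, 1,048,576), instead of once per append.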

Recently I’ve converted “just enough” growth strategies to exponential growth strategies in XDRBuffer and nsTArray, and I also found a case in SQLite that Richard Hipp has fixed. These pieces of code now match numerous places that already used exponential growth: pldhash, JS::HashTable, mozilla::Vector, JSString, nsString, and pdf.js.

Pleasingly, the nsTArray conversion had a clear positive effect. Not only did the exponential growth strategy reduce the amount of heap churn and the number of realloc() calls, it also reduced heap fragmentation: the “heap-overhead” part of the purple measurement on AWSY (a.k.a. “RSS: After TP5, tabs closed [+30s, forced GC]“) dropped by 4.3 MiB! This makes sense if you think about it: an allocator can fulfil power-of-two requests like 64 KiB, 128 KiB, and 256 KiB with less waste than it can awkward requests like 244 KiB, 248 KiB, 252 KiB, etc.

So, if you know of some more code in Firefox that uses a non-exponential growth strategy for a buffer, please fix it, or let me know so I can look at it. Thank you.

https://blog.mozilla.org/nnethercote/2014/11/04/please-grow-your-buffers-exponentially/

|

|

Robert O'Callahan: HTML5 Video Correctness Across Browsers |

A paper presents some results of cross-browser testing of HTML5 video. This sort of work is very helpful since it draws attention away from browser vendor PR efforts and towards the sort of issues that really impact Web developers, with actionable data.

Having said that, it's also very interesting to compare how browsers fare on correctness benchmarks designed without a browser-vendor axe to grind. Gecko usually does very well on such benchmarks and this one is no exception. If you read through the paper, we get almost everything right except a few event-firing issues (that I don't understand yet), more so than the other browsers.

Of course no test suite is perfect, and this one misses the massive Chrome canplaythrough bug: Chrome doesn't really implement canplaythrough at all. It simply fires canplaythrough immediately after canplay in all circumstances. That's still causing problems for other browsers which implement canplaythrough properly, since some Web sites have been written to depend on canplaythrough always firing immediately.

Still, this is good work and I'd like to see more like it.

http://robert.ocallahan.org/2014/11/html5-video-correctness-across-browsers.html

|

|

Gervase Markham: And It’s Also Less Tedious… |

Try not to let humans do what machines could do instead. As a rule of thumb, automating a common task is worth at least ten times the effort a developer would spend doing that task manually one time. For very frequent or very complex tasks, that ratio could easily go up to twenty or even higher.

— Karl Fogel, Producing Open Source Software

http://feedproxy.google.com/~r/HackingForChrist/~3/VZsm_4zGIf0/

|

|

Nathan Froyd: table-driven register reading in rr |

Many of the changes done for porting rr to x86-64 were straightforward, mechanical changes: change this type or format directive, add a template parameter to this function, search-and-replace all SYS_* occurrences and so forth. But the way register reading for rr’s GDB stub fell out was actually a marked improvement on what went before and is worth describing in a little more detail.

At first, if rr’s GDB stub wanted to know register values, it pulled them directly out of the Registers class:

    enum DbgRegisterName {
      DREG_EAX,
      DREG_ECX,
      // ...and so on.
    };

    // A description of a register for the GDB stub.
    struct DbgRegister {
      DbgRegisterName name;
      long value;
      bool defined;
    };

    static DbgRegister get_reg(const Registers* regs, DbgRegisterName which) {
      DbgRegister reg;
      reg.name = which;
      reg.defined = true;
      switch (which) {
      case DREG_EAX:
        reg.value = regs->eax;
        return reg;
      case DREG_ECX:
        reg.value = regs->ecx;
        return reg;
      // ...and so on.
      default:
        reg.defined = false;
        return reg;
      }
    }

That wasn’t going to work well if Registers supported several different architectures at once, so we needed to push that reading into the Registers class itself. Not all registers on a target fit into a long, either, so we needed to indicate how many bytes wide each register was. Finally, we also needed to indicate whether the target knew about the register or not: GDB is perfectly capable of asking for SSE (or even AVX) registers, but the chip running the debuggee might not support those registers, for instance. So we wound up with this:

    struct DbgRegister {
      unsigned int name;
      uint8_t value[DBG_MAX_REG_SIZE];
      size_t size;
      bool defined;
    };

    static DbgRegister get_reg(const Registers* regs, unsigned int which) {
      DbgRegister reg;
      memset(&reg, 0, sizeof(reg));
      reg.size = regs->read_register(&reg.value[0], which, &reg.defined);
      return reg;
    }

    ...

    template<typename T>
    static size_t copy_register_value(uint8_t* buf, T src) {
      memcpy(buf, &src, sizeof(src));
      return sizeof(src);
    }

    // GDBRegister is an enum defined in Registers.
    size_t Registers::read_register(uint8_t* buf, GDBRegister regno, bool* defined) const {
      *defined = true;
      switch (regno) {
      case DREG_EAX:
        return copy_register_value(buf, eax);
      case DREG_ECX:
        return copy_register_value(buf, ecx);
      // ...many more integer and segment registers...
      case DREG_XMM0:
        // XMM registers aren't supplied by Registers, but they are supplied someplace else.
        *defined = false;
        return 16;
      // ...and so on
      }
    }

The above changes were serviceable, if a little repetitive. When it came time to add x86-64 support to Registers, however, writing out that same basic switch statement for x86-64 registers sounded unappealing.

And there were other things that would require the same duplicated code treatment, too: when replaying traces, rr compares the replayed registers to the recorded registers at syscalls and halts the replay if the registers don’t match (rr calls this “divergence” and it indicates some kind of bug in rr). The x86-only comparison routine looked like:

    // |name1| and |name2| are textual descriptions of |reg1| and |reg2|, respectively,
    // for error messages.
    bool Registers::compare_register_files(const char* name1, const Registers* reg1,
                                           const char* name2, const Registers* reg2,
                                           int mismatch_behavior) {
      bool match = true;

    #define REGCMP(_reg) \
      do { \
        if (reg1-> _reg != reg2-> _reg) { \
          maybe_print_reg_mismatch(mismatch_behavior, #_reg, \
                                   name1, reg1-> _reg, \
                                   name2, reg2-> _reg); \
          match = false; \
        } \
      } while (0)

      REGCMP(eax);
      REGCMP(ebx);
      // ...and so forth.

      return match;
    }

Duplicating the comparison logic for twice as many registers on x86-64 didn’t sound exciting, either.

I didn’t have to solve both problems at once, so I focused solely on getting the registers for GDB. A switch statement with almost-identical cases indicates that you’re doing too much in code, when you should be doing things with data instead. In this case, what we really want is this: given a GDB register number, we want to know whether we know about this register, and if so, how many bytes to copy into the outparam buffer and where to copy those bytes from. A lookup table is really the data structure we should be using here:

    // A data structure describing a register.
    struct RegisterValue {
      // The offsetof the register in user_regs_struct.
      size_t offset;
      // The size of the register. 0 means we cannot read it.
      size_t nbytes;

      // Returns a pointer to the register in |regs| represented by |offset|.
      // |regs| is assumed to be a pointer to |struct user_regs_struct| of the appropriate
      // architecture.
      void* pointer_into(void* regs) {
        return static_cast<char*>(regs) + offset;
      }

      const void* pointer_into(const char* regs) const {
        return static_cast<const char*>(regs) + offset;
      }
    };

    template<typename Arch>
    struct RegisterInfo;

    template<>
    struct RegisterInfo<rr::X86Arch> {
      static struct RegisterValue registers[DREG_NUM_LINUX_I386];
    };

    struct RegisterValue RegisterInfo<rr::X86Arch>::registers[DREG_NUM_LINUX_I386];

    static void initialize_register_tables() {
      static bool initialized = false;
      if (initialized) {
        return;
      }
      initialized = true;

    #define RV_X86(gdb_suffix, name) \
      RegisterInfo<rr::X86Arch>::registers[DREG_##gdb_suffix] = { \
        offsetof(rr::X86Arch::user_regs_struct, name), \
        sizeof(((rr::X86Arch::user_regs_struct*)0)->name) \
      }

      RV_X86(EAX, eax);
      RV_X86(ECX, ecx);
      // ...and so forth. Registers not explicitly initialized here, e.g. DREG_XMM0, will
      // be zero-initialized and therefore have a size of 0.
    }

    template<typename Arch>
    size_t Registers::read_register_arch(uint8_t* buf, GDBRegister regno, bool* defined) const {
      initialize_register_tables();
      RegisterValue& rv = RegisterInfo<Arch>::registers[regno];
      if (rv.nbytes == 0) {
        *defined = false;
      } else {
        *defined = true;
        memcpy(buf, rv.pointer_into(ptrace_registers()), rv.nbytes);
      }
      return rv.nbytes;
    }

    size_t Registers::read_register(uint8_t* buf, GDBRegister regno, bool* defined) const {
      // RR_ARCH_FUNCTION performs the return for us.
      RR_ARCH_FUNCTION(read_register_arch, arch(), buf, regno, defined);
    }

Much better, and templating enables us to (re)use the RR_ARCH_FUNCTION macro we use elsewhere in the rr codebase.

Having to initialize the tables before using them is annoying, though. And after debugging a failure or two because I didn’t ensure the tables were initialized prior to using them, I wanted something more foolproof. Carefully writing out the array definition with zero-initialized members in the appropriate places sounded long-winded, difficult to read, and prone to failures that were tedious to debug. Ideally, I’d just specify the array indices to initialize along with the corresponding values, and let zero-initialization take care of the rest.

Initially, I wanted to use designated initializers, which are a great C99 feature that provided exactly what I was looking for, readable initialization of specific array indices:

    #define RV_X86(gdb_suffix, name) \
      [DREG_##gdb_suffix] = { \
        offsetof(rr::X86Arch::user_regs_struct, name), \
        sizeof(((rr::X86Arch::user_regs_struct*)0)->name) \
      }

    struct RegisterValue RegisterInfo<rr::X86Arch>::registers[DREG_NUM_LINUX_I386] = {
      RV_X86(EAX, eax),
      RV_X86(ECX, ecx),
    };

I assumed that C++11 (which is the dialect of C++ used in rr) would support something like this, and indeed, my first attempts worked just fine. Except when I added more initializers:

    struct RegisterValue RegisterInfo<rr::X86Arch>::registers[DREG_NUM_LINUX_I386] = {
      RV_X86(EAX, eax),
      RV_X86(ECX, ecx),
      // ...other integer registers, eflags, segment registers...
      RV_X86(ORIG_EAX, orig_eax),
    };

I got this curious error message from g++: “Sorry, designated initializers not supported.”

But that’s a lie! You clearly do support designated initializers, because I’ve been able to compile code with them!

Well, yes, g++ supports designated initializers for arrays. Except that the indices have to be in consecutive order from the beginning of the array. And all the integer registers, EIP, and the segment registers appeared in consecutive order in the enumeration, starting with DREG_EAX = 0. But DREG_ORIG_EAX came some 20 members after DREG_GS (the last segment register), and so there we had initialization of non-consecutive array indices, and g++ complained. In other words, designated initializers in g++ are useful for documentation and/or readability purposes, but they’re not useful for sparse arrays. Hence the explicit initialization approach described above.

Turns out there’s a nicer, C++11-ier way to handle this kind of sparse array, at the cost of some extra infrastructure. Instead of an explicit [] variable, you can have a class with an operator[] overload and a constructor that takes a std::initializer_list of std::pair values. The first member of the pair is the index into your sparse array, and the second member is the value you want placed there. The constructor takes care of putting everything in its place; the actual code looks like this:

    typedef std::pair<size_t, RegisterValue> RegisterInit;

    // Templated over N because different architectures have differently-sized register files.
    template<size_t N>
    struct RegisterTable : std::array<RegisterValue, N> {
      RegisterTable(std::initializer_list<RegisterInit> list) {
        for (auto& ri : list) {
          (*this)[ri.first] = ri.second;
        }
      }
    };

    template<>
    struct RegisterInfo<rr::X86Arch> {
      static const size_t num_registers = DREG_NUM_LINUX_I386;
      typedef RegisterTable<num_registers> Table;
      static Table registers;
    };

    RegisterInfo<rr::X86Arch>::Table RegisterInfo<rr::X86Arch>::registers = {
      // RV_X86 macro defined similarly to the previous version.
      RV_X86(EAX, eax),
      RV_X86(ECX, ecx),
      // ...many more register definitions
    };

    // x86-64 versions defined similarly...

There might be a better way to do this that’s even more C++11-ier. Comments welcome!
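For readers who want to try the idea outside rr, here is a stripped-down, self-contained sketch of the same pattern. Everything in it (`Entry`, `SparseTable`, the `REG_*` enumerators, `kTable`, `register_size`, and the offsets) is hypothetical and chosen only for illustration; it is not rr's code. One deliberate difference: the constructor value-initializes the `std::array` base, so unlisted slots are zeroed even when the table is not a static object.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <initializer_list>
#include <utility>

// Hypothetical table entry: where a value lives and how wide it is.
// A size of 0 means "not available".
struct Entry {
    std::size_t offset;
    std::size_t nbytes;
};

typedef std::pair<std::size_t, Entry> EntryInit;

// Sparse table: only the listed indices are filled in; every other
// slot stays zeroed because the base array is value-initialized first.
template <std::size_t N>
struct SparseTable : std::array<Entry, N> {
    SparseTable(std::initializer_list<EntryInit> list)
        : std::array<Entry, N>() {
        for (const auto& init : list) {
            assert(init.first < N);
            (*this)[init.first] = init.second;
        }
    }
};

// Hypothetical register numbering with a gap between REG_B and REG_FAR.
enum Reg { REG_A = 0, REG_B = 1, REG_FAR = 6, REG_COUNT = 8 };

static const SparseTable<REG_COUNT> kTable = {
    {REG_A, {0, 4}},
    {REG_B, {4, 4}},
    {REG_FAR, {44, 4}},  // non-consecutive index, no designated initializer needed
};

// Returns the size of the register, or 0 if the table has no entry for it.
std::size_t register_size(std::size_t index) {
    return index < kTable.size() ? kTable[index].nbytes : 0;
}
```

Here `register_size(REG_FAR)` yields 4, while an index that was never listed, such as 2, yields 0.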

Once this table-driven scheme was in place, using it for comparing registers was straightforward: each RegisterValue structure adds a name field, for error messages, and a comparison_mask field, for applying to register values prior to comparison. Registers that we don’t care about comparing can have a register mask of zero, just like registers that we don’t want to expose to GDB can have a “size” of zero. A mask is more flexible than a bool compare/don’t compare field, because eflags in particular is not always deterministic between record and replay, so we mask off particular bits of eflags prior to comparison. The core comparison routine then looks like:

    template<typename Arch>
    static bool compare_registers_core(const char* name1, const Registers* reg1,
                                       const char* name2, const Registers* reg2,
                                       int mismatch_behavior) {
      bool match = true;

      for (auto& rv : RegisterInfo<Arch>::registers) {
        if (rv.nbytes == 0) {
          continue;
        }

        // Disregard registers that will trivially compare equal.
        if (rv.comparison_mask == 0) {
          continue;
        }

        // XXX correct but oddly displayed for big-endian processors.
        uint64_t val1 = 0, val2 = 0;
        memcpy(&val1, rv.pointer_into(reg1->ptrace_registers()), rv.nbytes);
        memcpy(&val2, rv.pointer_into(reg2->ptrace_registers()), rv.nbytes);
        val1 &= rv.comparison_mask;
        val2 &= rv.comparison_mask;

        if (val1 != val2) {
          maybe_print_reg_mismatch(mismatch_behavior, rv.name,
                                   name1, val1, name2, val2);
          match = false;
        }
      }

      return match;
    }

which I thought was a definite improvement on the status quo. Additional, architecture-specific comparisons can be layered on top of this (see, for instance, the x86-specialized versions in rr’s Registers.cc).
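The masking idea can be tried in isolation with a toy register file. The sketch below is an assumption-laden illustration, not rr's implementation: `FakeRegs`, `FieldInfo`, `kFields`, `registers_match` and the mask values (in particular the `0xff` mask for `eflags`) are all invented, and the code assumes a little-endian machine so that the low bits of each field line up with the low bits of the mask.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical register file, standing in for a real user_regs_struct.
struct FakeRegs {
    std::uint32_t eax;
    std::uint32_t eflags;
    std::uint32_t scratch;
};

// Hypothetical table entry: location, width, and which bits to compare.
struct FieldInfo {
    const char* name;
    std::size_t offset;
    std::size_t nbytes;
    std::uint64_t comparison_mask;  // 0 means "never compare this field"
};

static const FieldInfo kFields[] = {
    {"eax", offsetof(FakeRegs, eax), sizeof(std::uint32_t), 0xffffffffu},
    // Made-up mask: only the low 8 bits are treated as deterministic.
    {"eflags", offsetof(FakeRegs, eflags), sizeof(std::uint32_t), 0x000000ffu},
    {"scratch", offsetof(FakeRegs, scratch), sizeof(std::uint32_t), 0},
};

// Returns true if every compared field matches after masking.
// Assumes little-endian byte order (see the note above).
bool registers_match(const FakeRegs& a, const FakeRegs& b) {
    for (const FieldInfo& field : kFields) {
        if (field.nbytes == 0 || field.comparison_mask == 0) {
            continue;
        }
        assert(field.nbytes <= sizeof(std::uint64_t));
        std::uint64_t va = 0, vb = 0;
        std::memcpy(&va, reinterpret_cast<const char*>(&a) + field.offset,
                    field.nbytes);
        std::memcpy(&vb, reinterpret_cast<const char*>(&b) + field.offset,
                    field.nbytes);
        if ((va & field.comparison_mask) != (vb & field.comparison_mask)) {
            return false;
        }
    }
    return true;
}
```

Two register files that differ only in `scratch`, or only in the masked-off upper bits of `eflags`, compare as equal; a difference in `eax` or in the low bits of `eflags` is reported as a mismatch.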

Eliminating or reducing code by writing data structures instead is one of those coding tasks that gives me a warm, fuzzy feeling after I’ve done it. In addition to the above, I was also able to use the RegisterValue structures to provide architecture-independent dumping of register files, which was another big win in making Registers multi-arch aware. I was quite pleased that everything worked out so well.

https://blog.mozilla.org/nfroyd/2014/11/03/table-driven-register-reading-in-rr-2/

|

|

Mozilla WebDev Community: Beer and Tell – October 2014 |

Once a month, web developers from across the Mozilla Project get together to debate which episode is the best episode of Star Trek: The Next Generation. Between screaming matches, we generally find time to talk about our side projects and drink, an occurrence we like to call “Beer and Tell”.

There’s a wiki page available with a list of the presenters, as well as links to their presentation materials. There’s also a recording available courtesy of Air Mozilla.

Peter Bengtsson: django-html-validator

Our first presenter, peterbe, has created a small library called django-html-validator that helps perform HTML validation via either a middleware that runs on every request, or a test request client that you can replace the default TestCase client with. It validates by sending the HTML to validator.nu, but can also be set up to use vnu.jar locally to avoid spamming the service with validation requests.

Peter Bengtsson: Pure JS Autocomplete

Previously, peterbe showed an autocomplete engine based on JavaScript that called out to a search index stored in Redis. This time he shared some new features:

- No dependencies on jQuery or Bootstrap.

- Tab-triggered autocomplete.

- Preview of the autocompleted word.

There’s no library for the engine yet, but a demo is up on peterbe’s webpage and the code is located in the Github repository for his personal site.

Matthew Claypotch: flight-status

Uncle potch had a very simple command-line program to show us called flight-status. It pulls the current status of a flight by scraping the FlightAware webpage for a flight using request and cheerio.

Matthew Claypotch: stylecop

stylecop was born from potch’s need to enforce some interesting style guidelines against the CSS used in Brick. stylecop parses CSS and allows you to specify interesting rules via JavaScript code that normal CSS linters can’t find, such as ensuring all classes start with a specific namespace, or that any tag selectors are used as direct descendants.

Pomax: Bezier.js

In a continuing trend of useful and math-y libraries, Pomax showed off Bezier.js, a library for computing Bézier curves, their inflection points, intersections, etc. The library is based on his work for “A Primer on Bézier Curves”, and a demo and documentation for the library are available.

Robert Helmer: freebsdxr

DXR is a code search and navigation tool that Mozilla develops to help navigate the Firefox source code. rhelmer shared his work-in-progress of adapting DXR to index the FreeBSD source code. It brings the goodness of DXR, such as full-text searching and structural queries (e.g. “Find all the callers of this function”) to FreeBSD. rhelmer’s work is available as a branch on his Github repo.

Chris Lonnen: leeroybot

Finally, lonnen stopped by to share some info about leeroybot, a customized version of Hubot used by the Release Engineering team. Along with alterations to the plugins that normally come with Hubot, leeroybot is updated and deployed by TravisCI automatically whenever a change is pushed to the master branch.

If we’ve learned anything this week, it’s that you should never allow someone to bring a Bat’leth to a Star Trek debate.

If you’re interested in attending the next Beer and Tell, sign up for the dev-webdev@lists.mozilla.org mailing list. An email is sent out a week beforehand with connection details. You could even add yourself to the wiki and show off your side-project!

See you next month!

https://blog.mozilla.org/webdev/2014/11/03/beer-and-tell-october-2014/

|

|

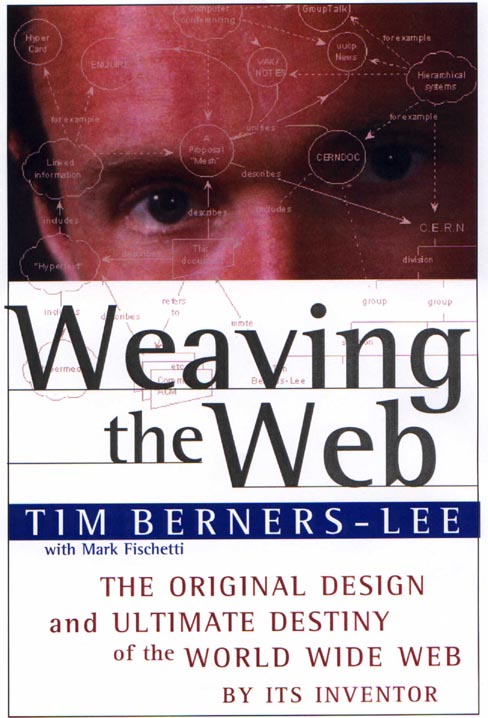

Tim Guan-tin Chien: Tim’s Dream for the Web |

Even before I took this username (timdream) and polluted the search results, a search for that term would give you a link to a page on LogicError, appropriately titled “Tim’s Dream for the Web”. That “Tim” was Sir Tim Berners-Lee, and he imagined the Web as a powerful means of collaboration between not only people, but machines.

Weaving the Web, credit: W3C.

Collaboration between people is closer than ever because of the Web. It has already grown closer thanks to the set of technologies branded as “HTML5”, and those technologies will only grow stronger, hopefully beyond the catchphrase. As for the machines, a form of the Semantic Web is indeed slowly emerging, though not in the ideal open, collaborative way. Instead it follows a more top-down, proprietary approach, limited to a centralized design in which many are asked to interface with a few proprietary platforms that encapsulate all the data.

Invasion of the Data Snatchers by ACLU

It’s a good thing for people to start enjoying what is possible when data is linked; yet linking it in a more open way while safeguarding our privacy remains work to be done. This week we saw the 20th anniversary of the W3C, and the HTML5 spec reached Recommendation status; the events themselves are a testimony to the rough history of the Web. I remain cautiously optimistic on this one: the organizations rallying on this front are largely intact, and people will eventually become more aware of the dangers as they enjoy the convenience of the technologies.

The LogicError page had been in my bookmarks for a long time, since Firefox 0.8 or so, but not until now did I realize that LogicError is in fact an Aaron Swartz project. The footnote itself, with the name, kind of says something.

|

|

Robert Nyman: Trying out Google Inbox |

About a week and a half ago I was happy to get an invite to Google’s new take on e-mail: Google Inbox. The idea is to make it much simpler for users to deal with and bundle the e-mails they get.

I’ve written about my approach before in How to become efficient at e-mail, something I was happy to see Googler Rob Dodson address:

This article by @robertnyman has totally helped me (sorta) stop sucking at email: http://t.co/aAs5rtTD1H

"Show if unread" FTW!

— Rob Dodson (@rob_dodson) October 23, 2014

So, I feel happy and effective in my approach, but at the same time, always willing to test something new.

Trying Google Inbox

Google Inbox is built on top of your Gmail account, meaning that all existing e-mails, filters, labels etc. are still present. This makes it very easy to try out with an existing account and structure, and simple to opt out of if you want to. It is available on mobile as apps for iOS and Android, through desktop web browsers at https://inbox.google.com (right now this is Chrome-only, probably for the initial testing phase, but the developers have said that they naturally aim at supporting more browsers), and as a Chrome App.

Features

The basic features of Google Inbox are:

Bundling

E-mails are automatically bundled together by category. In your inbox you can see the different bundles and get a quick view of their contents, which makes it easy to read or mark all of them as seen.

The bundling is done automatically, and out-of-the-box, Google offers a number of bundles such as Travel, Purchases, Social, Updates, Forums and Promos. Going through my e-mail, I’ve been very happy with Google’s automatic filtering of my e-mail into corresponding bundles.

Highlights

Extracting key data from e-mails, like images, attachments etc., to show in the Inbox list without you having to open the actual e-mail.

Reminders

Want a quick to-do list? Google Inbox offers that functionality right in your inbox now.

Snooze

If you see an e-mail that you need to reply to/act on but just haven’t got the time at the moment, you can snooze it till later (something that users of Mailbox, acquired by Dropbox, will recognize).

Sweep

There’s one quick action, sweep, for either all e-mails in the inbox or all e-mails in a bundle: it marks them all as seen and removes them from the inbox.

Pinning e-mail

You can also pin e-mails that are important to you directly in the inbox so no matter if you mark everything read, the pinned ones will still be there. You also have an option at the top to switch between seeing all e-mails in the inbox or only the pinned ones.

Pinned view switch

Pinned view filtered

Some power features and settings from Gmail are definitely missing, but I think this is a very interesting step in the right direction.

Screenshots

Bundling options

Social bundle

Bundling on mobile

Quick compose on mobile

Navigation bar

Invite-only

At the moment Google Inbox is invite-only, but they seem to be releasing more and more invites (no, I don’t have any invites to share at this time).

When you get the chance to try it, you should, since it could be a rewarding perspective and service. And all we aim for at the end of the day is Inbox Zero, right?

|

|

Christian Heilmann: Reward my actions, please? |

(this was first published on Medium, but kept here for future reference. Also, I know how to use bullet points here)

I remember the first time I used the web. I found these weird things that were words that were underlined. When I activated them, I went to a place that has content related to that word. I liked that. It meant that I didn’t have to disconnect, re-dial another number and connect there to get other content. It was interlinked content using a very simple tool.

My actions were rewarded. I clicked a link about kittens and I got information about kittens. I entered “what is the difference between an Indian and African elephant” in a search box, hit enter and got the information I needed after following another one of those underlined things.

Then the bad guys came. They wanted you to go where you didn’t plan to go and show you things you weren’t interested in but they got paid for showing you. This is how we got pop-ups, pop-unders, interstitials and in the worst case phishing sites.

Bad guys make links and submit buttons do things they were not meant to do. They give you something else than the thing you came for. They don’t reward you for your actions. They distract, redirect, misdirect and monitor you.

Fast forward to now. Browsers have popup blockers and malware filters. They tell you when a link looks dodgy. This is great.

However, the behaviour of the bad guys seems to become a modus operandi of good guys, too. Those who don’t think about me, but about their own needs and wants first.

More and more I find myself activating a link, submitting a form or even typing in a URL and I don’t get what I want. Instead I get all kinds of nonsense I didn’t come for:

- Please download our app

- Please do other actions on our site

- Please do these tasks that are super important to us but not related to what you are doing right now

- Please use a different browser/resolution/device/religion/creed/gender

Granted, the latter is a bit over the top, but they all boil down to one thing:

You care more about yourself and bolstering some numbers you measure than about me, your user.

And this annoys me. Don’t do that. Be the good person that shows behind links and actions the stuff people came for. Show that first and foremost and in a very simple fashion. Then show some other things. I am much more likely to interact more with your product when I had a good experience than when I feel badgered by it to do more without ever reaching what I came for.

You want an example? OK. Here’s looking at you LinkedIn. I get an email from someone interesting who wants to connect with me. The job title is truncated, the company not named. Alright then — I touch the button in the email, the LinkedIn app pops up and I get the same shortened title of that person and still no company name. It needs another tap, a long spinner and loading to get that. I connect. Wahey.

Five minutes later, I think that I might as well say hi to that person. I go to the LinkedIn app, to “connections” and I’d expect new connections there, right? Wrong. I get a “People you may know” and a list of connections that has nothing to do with my last actions or new contacts. Frankly I don’t know why I see these people.

I understand you want me to interact with your product. Then let me interact. Don’t send me down a rabbit hole. Make my clicks count. Then I send you all my love and I am very happy to pay for your products.

You don’t lose users because you don’t tell them enough about other cool features you have. You lose them because you confuse them. Reward my actions and we can work together!

http://christianheilmann.com/2014/11/03/reward-my-actions-please/

|

|

Rizky Ariestiyansyah: A Special Browser for Developers, Coming Soon! |

At Mozilla we know that developers are the cornerstone of the web, which is why we actively push and continue to build applications that will make it easier for all of us to create web content and applications that...

The post Browser Khusus untuk Developer, Segera! appeared first on oonlab.

|

|

Mozilla Release Management Team: Firefox 34 beta4 to beta5 |

- 37 changesets

- 78 files changed

- 976 insertions

- 295 deletions

| Extension | Occurrences |
| --- | --- |
| cpp | 27 |
| h | 18 |
| js | 11 |
| xml | 4 |
| jsm | 4 |
| java | 4 |
| html | 3 |
| ini | 2 |
| xul | 1 |
| sh | 1 |
| list | 1 |
| in | 1 |

| Module | Occurrences |
| --- | --- |
| js | 19 |
| mobile | 10 |
| netwerk | 8 |
| layout | 7 |
| content | 5 |
| browser | 5 |
| mozglue | 4 |
| toolkit | 3 |
| intl | 3 |
| gfx | 3 |
| dom | 3 |
| security | 2 |
| widget | 1 |
| testing | 1 |
| modules | 1 |
| media | 1 |
| build | 1 |

List of changesets:

| Author | Changeset |
| --- | --- |
| Ryan VanderMeulen | Bug 922976 - Disable 394751.xhtml on B2G for frequent failures. a=test-only - 87081b44ad8a |
| Benoit Jacob | Bug 1089413 - Only test resource sharing on d3d feature level >= 10. r=jmuizelaar, a=sledru - 75329ab4323a |
| Justin Dolske | Bug 1089421 - Forget button should call more attention to it closing all tabs/windows. r=gijs, ui-r=phlsa, a=dolske - a42b0af72449 |
| Justin Dolske | Bug 1088137 - Forget button can fail to clear cookies by running sanitizer too early. r=MattN, a=dolske - 4c8d686c690b |
| Hiroyuki Ikezoe | Bug 1084997 - Replace '\' in MOZ_BUILD_APP with '/' to eliminate the difference between windows and others. r=glandium, a=NPOTB - 4197f5318fd8 |
| Cosmin Malutan | Bug 1086527 - Make sure PluralForm.get can be called from strict mode. r=Mossop, a=lsblakk - 363ef26f9bee |
| Gijs Kruitbosch | Bug 1079222 - deny fullscreen from the forget button, r=dolske, a=dolske - 776f967418e1 |
| Justin Dolske | Bug 1085330 - UITour: Highlight positioning breaks when icon target moves into "more tools" overflow panel. r=Unfocused, a=dolske - 74d96225a2a8 |
| Randell Jesup | Bug 1079729: Fix handling of increasing number of SCTP channels used by DataChannels r=tuexen a=lsblakk - cc85ed51d280 |
| Ryan VanderMeulen | Bug 1078237 - Disable test_switch_frame.py on Windows for frequent failures. a=test-only - 76dcced7d838 |
| Jeff Gilbert | Bug 1089022 - Give WebGL conf. tests a longer timeout. r=kamidphish, a=test-only - 9a6a63827c10 |
| Patrick McManus | Bug 1088850 - Disable http/1 framing enforcement from Bug 237623. r=bagder, a=lsblakk - d9496ec99e83 |
| Richard Newman | Bug 1084521 - Use +id not +android:id. r=lucasr, a=lsblakk - 81b50459db25 |
| William Chen | Bug 1064211 - Keep CustomElementData alive while on processing stack. r=mrbkap, a=lsblakk - f7483e854a43 |
| Terrence Cole | Bug 1013001 - Make it simpler to deal with nursery pointers in the compiler. r=jandem, a=lsblakk - c94fc6b83daa |
| Mark Goodwin | Bug 1081711 - Ensure 'remember this decision' works for client certificates. r=wjohnston, a=lsblakk - f953384743a4 |
| William Chen | Bug 1033464 - Do not set nsXBLPrototypeBinding binding element for ShadowRoot. r=smaug, a=lsblakk - c88c66aa42d2 |
| Andrea Marchesini | Bug 1082178 - JS initialization must happen using the correct preferences in workers. r=khuey, a=lsblakk - 301822ecb5fa |
| Jeff Muizelaar | Bug 1072847 - Initialize mSurface. r=BenWa, a=abillings - d5a855ee2081 |
| Jan de Mooij | Bug 1086842 - Fix an Ion type barrier issue. r=bhackett, a=dveditz - b58f505f18df |
| Jason Orendorff | Bug 1065604 - Assert that JSPROP_SHARED is set on all properties defined with JSPROP_GETTER or JSPROP_SETTER. r=Waldo, a=lmandel - ecaedd858fd0 |
| Jason Orendorff | Bug 1042567 - Reflect JSPropertyOp properties more consistently as data properties. r=efaust, a=lmandel - d38dd7de64b3 |
| Ian Stakenvicius | Bug 1090405 - Ensure that 'samples' is not negative in WebMReader. r=rillian, a=lmandel - ea93efd4cf0a |
| Mark Banner | Bug 1086434 - Having multiple outgoing Loop windows in an end call state could result in being unable to received another call. r=dmose, a=lmandel - a7a6e6465c30 |
| Chenxia Liu | Bug 1072831 - Use DialogFragment for Onboarding v1 start pane. r=lucasr, a=lsblakk - 79567465c505 |
| Kai Engert | Bug 1042889 - Cannot override sec_error_ca_cert_invalid. r=dkeeler, a=lmandel - a03fc45643ef |
| Brad Lassey | Bug 1090650 - Change chromecast app id to point to official chromecast app. r=mfinkle, a=lmandel - ddfa86051d29 |
| Ryan VanderMeulen | Bug 1090650 - s/MIRROR_RECIEVER_APP_ID/MIRROR_RECEIVER_APP_ID to fix bustage. a=bustage - b0a9f5a02950 |
| Lawrence Mandel | Disable early beta testing. a=lmandel - 50642467b0f7 |
| David Rajchenbach-Teller | Bug 1087674 - Handle XHR abort()/timeout and certificate errors more gracefully in GMPInstallmanager. r=gfritzsche, a=sledru - 60b312ec8c87 |
| Xidorn Quan | Bug 1077718 - Switch dynamic CounterStyle objects to use arena allocation. r=mats, a=lmandel - f681d39b83ca |
| Robert O'Callahan | Bug 1081185 - Traverse rect edges when searching for w=0 crossings instead of taking diagonals. r=mattwoodrow, a=lmandel - acd99fc02446 |
| Terrence Cole | Bug 1081769 - Assert that we never have a null cross-compartment key. r=billm, a=lmandel - 30ebfff46e63 |
| Mike Hommey | Bug 1059797 - Pre-allocate zlib inflate buffers in faulty.lib. r=froydnj, a=lmandel - 3554e60ef779 |
| Mike Hommey | Bug 1091118 - Part 1: Remove $topsrcdir/gcc/bin from PATH on android builds. r=gps, a=lmandel - 49069150dab1 |
| Mike Hommey | Bug 1091118 - Part 2: Do not use the top-level cache file for freetype2 subconfigure. r=gps, a=lmandel - 16df73a8ddc1 |
| Dão Gottwald | Bug 1077740 - Reset legacy homepages to about:home. r=gavin, a=lmandel - 8f974876367e |

http://release.mozilla.org/statistics/34/2014/11/03/fx-34-b4-to-b5.html

|

|

Peter Bengtsson: uwsgi and uid |

So recently, I moved home for this blog. It used to be on AWS EC2 and is now on Digital Ocean. I wanted to start from scratch so I started on a blank new Ubuntu 14.04 and later rsync'ed over all the data bit by bit (no pun intended).

When I moved this site I copied the /etc/uwsgi/apps-enabled/peterbecom.ini file and started it with /etc/init.d/uwsgi start peterbecom. The settings were the same as before:

    # this is /etc/uwsgi/apps-enabled/peterbecom.ini
    [uwsgi]
    virtualenv = /var/lib/django/django-peterbecom/venv
    pythonpath = /var/lib/django/django-peterbecom
    user = django
    master = true
    processes = 3
    env = DJANGO_SETTINGS_MODULE=peterbecom.settings
    module = django_wsgi2:application

But I kept getting this error:

    Traceback (most recent call last):
    ...
      File "/var/lib/django/django-peterbecom/venv/local/lib/python2.7/site-packages/django/db/backends/postgresql_psycopg2/base.py", line 182, in _cursor
        self.connection = Database.connect(**conn_params)
      File "/var/lib/django/django-peterbecom/venv/local/lib/python2.7/site-packages/psycopg2/__init__.py", line 164, in connect
        conn = _connect(dsn, connection_factory=connection_factory, async=async)
    psycopg2.OperationalError: FATAL: Peer authentication failed for user "django"

What the heck! I thought. I was able to connect perfectly fine with the same config on the old server and here on the new server I was able to do this:

    django@peterbecom:~/django-peterbecom$ source venv/bin/activate
    (venv)django@peterbecom:~/django-peterbecom$ ./manage.py shell
    Python 2.7.6 (default, Mar 22 2014, 22:59:56)
    [GCC 4.8.2] on linux2
    Type "help", "copyright", "credits" or "license" for more information.
    (InteractiveConsole)
    >>> from peterbecom.apps.plog.models import *
    >>> BlogItem.objects.all().count()
    1040

Clearly I've set the right password in the settings/local.py file. In fact, I haven't changed anything and I pg_dump'ed the data over from the old server as is.

I edited the file psycopg2/__init__.py and added a print "DSN=", dsn and those details were indeed correct.

I'm running the uwsgi app as user django and I'm connecting to Postgres as user django.

Anyway, what I needed to do to make it work was the following change:

    # this is /etc/uwsgi/apps-enabled/peterbecom.ini
    [uwsgi]
    virtualenv = /var/lib/django/django-peterbecom/venv
    pythonpath = /var/lib/django/django-peterbecom
    user = django
    uid = django # THIS IS ADDED
    master = true
    processes = 3
    env = DJANGO_SETTINGS_MODULE=peterbecom.settings
    module = django_wsgi2:application

The difference here is the added uid = django.

I guess by moving across (I'm currently on uwsgi 1.9.17.1-debian) I get a newer version of uwsgi or something that simply can't just take the user directive but needs the uid directive too. That or something else complicated to do with the users and permissions that I don't understand.
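One plausible explanation, offered as an assumption rather than something established above: PostgreSQL's peer authentication compares the operating system user that owns the connecting process with the requested database user, so a worker that is not actually running as django would be rejected even with correct settings. A small diagnostic sketch for checking that from inside the process follows; the helper names are hypothetical and any user-name mapping configured in Postgres is ignored.

```python
import os
import pwd


def effective_user():
    """Name of the OS user this process is really running as."""
    uid = os.geteuid()
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:
        # No passwd entry for this uid; fall back to the number itself.
        return str(uid)


def peer_auth_matches(db_user, os_user=None):
    """Rough check: peer auth expects the OS user to equal the DB user."""
    if os_user is None:
        os_user = effective_user()
    return os_user == db_user
```

Logging effective_user() from the WSGI module, next to the DSN print mentioned above, would show whether the worker runs as django or as some other account.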

Hopefully, by having blogged about this other people might find it and get themselves a little productivity boost.

|

|

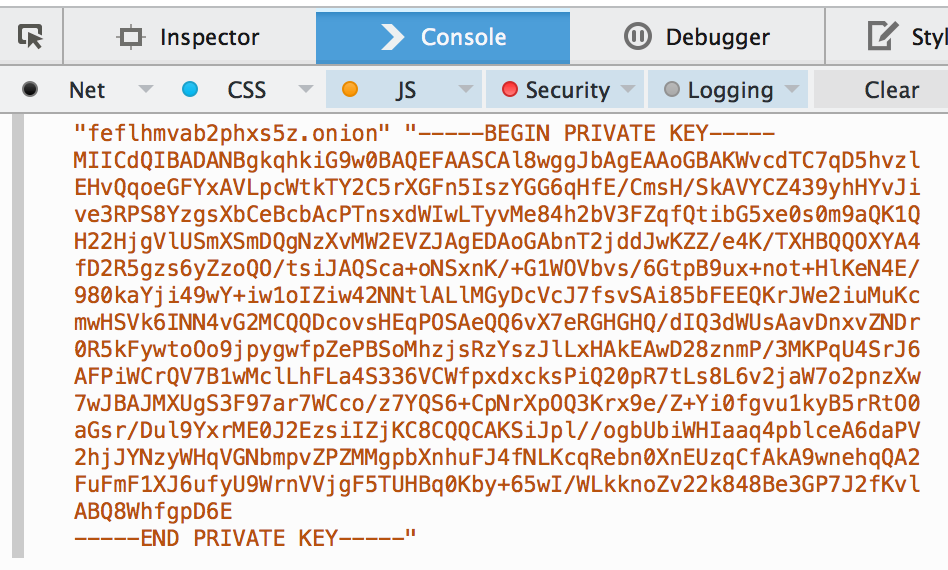

Tim Taubert: Using the WebCrypto API to generate .onion names for Tor hidden services |

You have probably read that Facebook unveiled its hidden service that lets users access their website more safely via Tor. While there are lots of opinions about whether this is good or bad I think that the Tor project described best why that is not as crazy as it seems.

The most interesting part to me however is that Facebook brute-forced a custom hidden service address as it never occurred to me that this is something you might want to do. Again ignoring the pros and cons of doing that, investigating the how seems like a fun exercise to get more familiar with the WebCrypto API if that is still unknown territory to you.

How are .onion names created?

Names for Tor hidden services are meant to be self-authenticating. When creating a hidden service Tor generates a new 1024 bit RSA key pair and then computes the SHA-1 digest of the public key. The .onion name will be the Base32-encoded first half of that digest.

By using a hash of the public key as the URL to contact a hidden service you can easily authenticate it and bypass the existing CA structure. This 80 bit URL is sufficient to prevent collisions: even with a birthday attack (and thus an entropy of 40 bit) you can only find a random collision, not the key pair matching a specific .onion name.
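The derivation just described (SHA-1 digest, first half, Base32) fits in a few lines of Python. This is only a sketch of the hashing step: the function name is made up, and it assumes you already hold exactly the DER-encoded public key bytes that Tor hashes.

```python
import base64
import hashlib


def onion_name(public_key_der):
    """Base32-encode the first 10 bytes (80 bits) of the SHA-1 digest."""
    digest = hashlib.sha1(public_key_der).digest()
    # 80 bits encode to exactly 16 Base32 characters, so there is no padding.
    return base64.b32encode(digest[:10]).decode("ascii").lower()
```

Because ten bytes are kept, every name is 16 characters long and drawn from the 32-symbol alphabet a-z and 2-7.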

Creating custom .onion names

So how did Facebook manage to come up with a public key resulting in facebookcorewwwi.onion? The answer is that they were incredibly lucky.

You can brute-force .onion names matching a specific pattern using tools like Shallot or Scallion. Those will generate key pairs until they find one resulting in a matching URL. That is usably fast for 1-5 characters. Finding a 6-character pattern takes on average 30 minutes and for just 7 characters you might need to let it run for a full day.

Coming up with an .onion name starting with an 8-character pattern like facebook would thus take even longer or need a lot more resources. As a Facebook engineer confirmed they indeed got extremely lucky: they generated a few keys matching the pattern, picked the best and then just needed to come up with an explanation for the corewwwi part to let users memorize it better.
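A rough way to see why each extra character hurts: if every Base32 character of the name is treated as uniformly random, a fixed prefix of n characters matches with probability 1/32^n, so the expected number of keys to try is 32^n. The sketch below scales the 30-minute figure quoted above for 6 characters; the function names are illustrative and the model ignores hardware and tool differences.

```python
BASE32_ALPHABET_SIZE = 32


def expected_attempts(prefix_len):
    """Mean number of random keys needed to hit a fixed prefix."""
    return BASE32_ALPHABET_SIZE ** prefix_len


def scaled_minutes(known_len, known_minutes, target_len):
    """Extrapolate search time from one measured prefix length to another."""
    ratio = expected_attempts(target_len) / expected_attempts(known_len)
    return known_minutes * ratio
```

Under this model scaled_minutes(6, 30, 7) gives 960 minutes (16 hours), in line with "a full day" for 7 characters, and 8 characters come out at roughly three weeks on the same setup.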

Without taking a closer look at “Shallot” or “Scallion” let us go with a naive approach. We do not need to create another tool to find .onion names in the browser (the existing ones work great) but it is a good opportunity to again show what you can do with the WebCrypto API in the browser.

Generating a random .onion name

To generate a random name for a Tor hidden service we first need to generate a new 1024 bit RSA key just as Tor would do:

    function generateRSAKey() {
      var alg = {
        // This could be any supported RSA* algorithm.
        name: "RSASSA-PKCS1-v1_5",
        // We won't actually use the hash function.
        hash: {name: "SHA-1"},
        // Tor hidden services use 1024 bit keys.
        modulusLength: 1024,
        // We will use a fixed public exponent for now.
        publicExponent: new Uint8Array([0x03])
      };

      return crypto.subtle.generateKey(alg, true, ["sign", "verify"]);
    }

generateKey() returns a Promise that resolves to the new key pair. The second argument specifies that we want the key to be exportable as we need to do that in order to check for pattern matches. We will not actually use the key to sign or verify data but we need to specify valid usages for the public and private keys.

To check whether a generated public key matches a specific pattern we of course have to compute the hash for the .onion URL:

    function computeOnionHash(publicKey) {
      // Export the DER encoding of the SubjectPublicKeyInfo structure.
      var promise = crypto.subtle.exportKey("spki", publicKey);

      promise = promise.then(function (spki) {
        // Compute the SHA-1 digest of the SPKI.
        // Skip 22 bytes (the SPKI header) that are ignored by Tor.
        return crypto.subtle.digest({name: "SHA-1"}, spki.slice(22));
      });

      return promise.then(function (digest) {
        // Base32-encode the first half of the digest.
        return base32(digest.slice(0, 10));
      });
    }

We first use exportKey() to get an SPKI representation of the public key, use digest() to compute the SHA-1 digest of that, and finally pass it to base32() to Base32-encode the first half of that digest.

Note: base32() is an RFC 3548 compliant Base32 implementation. chrisumbel/thirty-two is a good one that unfortunately does not support ArrayBuffers, so I will use a slightly adapted version of it in the example code.

Finding a specific .onion name

The only thing missing now is a function that checks for pattern matches and loops until we find one:

    function findOnionName(pattern) {
      var key;

      // Start by generating a random key pair.
      var promise = generateRSAKey().then(function (pair) {
        key = pair.privateKey;

        // Generate the .onion hash of the public key.
        return computeOnionHash(pair.publicKey);
      });

      return promise.then(function (hash) {
        // Try again if the pattern doesn't match.
        if (!pattern.test(hash)) {
          return findOnionName(pattern);
        }

        // Key matches! Export and format it.
        return formatKey(key).then(function (formatted) {
          return {key: formatted, hash: hash};
        });
      });
    }

We simply use generateRSAKey() and computeOnionHash() as defined before. In case of a pattern match we export the PKCS8 private key information, encode it as Base64 and format it nicely:

    function formatKey(key) {
      // Export the DER-encoded ASN.1 private key information.
      var promise = crypto.subtle.exportKey("pkcs8", key);

      return promise.then(function (pkcs8) {
        var encoded = base64(pkcs8);

        // Wrap lines after 64 characters.
        var formatted = encoded.match(/.{1,64}/g).join("\n");

        // Wrap the formatted key in a header and footer.
        return "-----BEGIN PRIVATE KEY-----\n" + formatted +
               "\n-----END PRIVATE KEY-----";
      });
    }

Note: base64() refers to an existing Base64 implementation that can deal with ArrayBuffers. niklasvh/base64-arraybuffer is a good one that I will use in the example code.

What is logged to the console can be directly used to replace any random key that Tor has assigned before. Here is how you would use the code we just wrote:

    findOnionName(/ab/).then(function (result) {
      console.log(result.hash + ".onion", result.key);
    }, function (err) {
      console.log("An error occurred, please reload the page.");
    });

The Promise returned by findOnionName() will not resolve until a match is found. When generating lots of keys Firefox currently sometimes fails with a “transient error” that needs to be investigated. If you want a loop that runs despite that error you could simply restart the search in the error handler.

The code

https://gist.github.com/ttaubert/389255d724f219f76900

Include it in a minimal web site and have the Web Console open. It will run in Firefox 33+ and Chrome 37+ with the WebCrypto API explicitly enabled (if necessary).

The pitfalls

As said before, the approach shown above is quite naive and thus very slow. The easiest optimization to implement might be to spawn multiple web workers and let them search in parallel.

We could also speed up finding keys by not regenerating the whole RSA key every loop iteration but instead increasing the public exponent by 2 (starting from 3) until we find a match and then check whether that produces a valid key pair. If it does not we can just continue.
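The validity check mentioned here can be sketched with textbook RSA: for fixed primes p and q, an odd exponent e is usable only if it is coprime to (p - 1)(q - 1), in which case the private exponent is its modular inverse. The generator below walks odd exponents from 3 and yields the usable ones. It uses toy primes in the example and is not what Tor, Shallot or Scallion actually run; it needs Python 3.8 or later for the three-argument pow with a negative exponent.

```python
from math import gcd


def valid_exponents(p, q, start=3):
    """Yield (e, d) pairs for odd e >= start that form a valid RSA key."""
    phi = (p - 1) * (q - 1)
    e = start
    while e < phi:
        # e must be invertible modulo phi, otherwise no private key exists.
        if gcd(e, phi) == 1:
            yield e, pow(e, -1, phi)
        e += 2
```

With the toy primes 61 and 53, the exponents 3 and 5 are rejected because they share a factor with 3120, and the first usable pair is e = 7, d = 1783. In a real search the hash would be checked first and this validity test run only on a match, as described above.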

Lastly, the current implementation does not perform any safety checks that Tor might run on the generated key. All of these points would be great reasons for a follow-up post.

Important: You should use the keys generated with this code to run a hidden service only if you trust the host that serves it. Getting your keys off of someone else’s web server is a terrible idea. Do not be that guy or gal.

|

|

Jennifer Boriss: Five Things I’ve Learned About redditors (so far) |

|

|