Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Doug Belshaw: POSTPONED: Mozilla Maker Party Newcastle 2014 |

We’ve made the decision today to postpone the Maker Party planned for Saturday 13th September at Campus North, Newcastle-upon-Tyne.

Although there were lots of people interested in the event, the timing proved problematic for potential mentors and attendees alike. We’re going to huddle next week and think about a more suitable time – perhaps in November.

Thanks to those who got in touch about the event and offered their support.

http://dougbelshaw.com/blog/2014/09/02/postponed-mozilla-maker-party-newcastle-2014/

|

|

Jared Wein: We knew unicorns could bounce, but now they spin?! |

One of the hidden features of Firefox 29 was a unicorn that bounced around the Firefox menu when it was emptied. The LA Times covered it in their list of five great features of Firefox 29.

Building on the fun, Firefox 32 (released today) will now spin the unicorn when you press the mouse down in the area where the unicorn is bouncing.

The really cool thing about the unicorn’s movement (both bouncing and spinning) and coloring is that it is all done in pure CSS. There is no JavaScript triggering the animation, direction, or events.

The unicorn is shown when the menu’s :empty pseudo-class is true. The direction and speed of the movement are controlled via a CSS animation that moves the unicorn in the X- and Y-direction, with both moving at different speeds. On :hover, the image of the unicorn gets swapped from grayscale to colorful. Finally, :active triggers the spinning.

Tagged: australis, CSS, firefox, planet-mozilla

http://msujaws.wordpress.com/2014/09/02/we-knew-unicorns-could-bounce-but-now-they-spin/

|

|

Daniel Stenberg: HTTP/2 interop pains |

At around 06:49 CEST on the morning of August 27 2014, Google deployed an HTTP/2 draft-14 implementation on their front-end servers that handle logins to Google accounts (and possibly others). Those at least take care of all the various login stuff you do with Google, G+, gmail, etc.

The little problem with that was just that their implementation of HTTP2 is in disagreement with all existing client implementations of that same protocol at that draft level. Someone immediately noticed this problem and filed a bug against Firefox.

The Firefox Nightly and beta versions have HTTP2 enabled by default, so users quickly started to notice this and a range of duplicate bug reports have been filed, with more arriving as more users run into the problem. As far as I know, Chrome does not have this enabled by default, so far fewer Chrome users get this ugly surprise.

The Google implementation has broken cookie handling (remnants from draft-13, judging by how they do it). As I write this, we’re on the 7th day with this brokenness. We advise bleeding-edge users of Firefox to switch off HTTP/2 support in the meantime, until Google wakes up and acts.

You can actually switch http2 support back on once you’ve logged in and it then continues to work fine. Below you can see what a lovely (wildly misleading) error message you get if you try http2 against Google right now with Firefox:

This post is being debated on hacker news.

Updated: 20:14 CEST: There’s a fix coming, that supposedly will fix this problem on Thursday September 4th.

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1059627] changes made at the same time as a comment are no longer grouped with the comment

- [880097] Only retrieve database fetched values if requested

- [1056162] add bit.ly support to bmo

- [1059307] Remove reporting of the firefox release channel from the guided bug entry (hasn’t been accurate since firefox 25)

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/09/02/happy-bmo-push-day-111/

|

|

John O'Duinn: “Lost Cat” by Caroline Paul |

After all the fun reading “Meanwhile in San Francisco”, I looked to see if this duo had co-written any other books. Sure enough, they had.

“Lost Cat” tells the true story of how an urban cat owner (one of the authors) loses her cat, then has the cat casually walk back in the door weeks later healthy and well. The book details various experiments the authors did using GPS trackers and tiny “CatCam” cameras to figure out where her cat actually went. Overlaying that data onto Google Maps surprised them both – they never knew their cats roamed so far and wide across the city. The detective work they did to track down and then meet “Cat Stealer A” and “Cat Stealer B” made for a fun read… Just like “Meanwhile in San Francisco”, the illustrations are all paintings. Literally. My all-time favorite painting of any cat ever is on page 7.

A fun read… and a great gift to any urban cat owners you know.

http://oduinn.com/blog/2014/09/01/lost-cat-by-caroline-paul/

|

|

Nick Thomas: Deprecating our old rsync modules |

We’ve removed the rsync modules mozilla-current and mozilla-releases today, after calling for comment a few months ago and hearing no objections. Those modules were previously used to deliver Firefox and other Mozilla products to end users via a network of volunteer mirrors, but we now use content delivery networks (CDNs). If there’s a use case we haven’t considered, please get in touch in the comments or on the bug.

http://ftangftang.wordpress.com/2014/09/02/deprecating-our-old-rsync-modules/

|

|

Josh Aas: Simple Code Review Checklists |

What if, when giving a patch r+ on Mozilla’s bugzilla, you were presented with the following checklist:

- I have considered:

  - Memory leaks

  - Null checks

  - Security implications

  - Mozilla Code Style

You could not actually submit an r+ unless you had checked an HTML check box next to each item. For patches where any of this is irrelevant, just check the box(es) – you considered it.
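The gating rule described here can be sketched in a few lines of JavaScript. This is only an illustration of the idea, not Bugzilla code: the names `REVIEW_CHECKLIST`, `uncheckedItems` and `canSubmitReviewPlus` are hypothetical, and the reviewer's ticked boxes are assumed to arrive as a `Set` of item names.

```javascript
// The four items from the proposed checklist.
const REVIEW_CHECKLIST = [
  'Memory leaks',
  'Null checks',
  'Security implications',
  'Mozilla Code Style',
];

// Returns the checklist items the reviewer has not ticked yet.
// `checked` is a Set of item names (a hypothetical input format).
function uncheckedItems(checked) {
  return REVIEW_CHECKLIST.filter((item) => !checked.has(item));
}

// An r+ may be submitted only once every item has been ticked.
function canSubmitReviewPlus(checked) {
  return uncheckedItems(checked).length === 0;
}

const partlyDone = new Set(['Memory leaks', 'Null checks']);
console.log(canSubmitReviewPlus(partlyDone)); // false
console.log(uncheckedItems(partlyDone)); // the two items still to consider
console.log(canSubmitReviewPlus(new Set(REVIEW_CHECKLIST))); // true
```

Returning the unticked items, rather than just a yes or no, would let a form tell the reviewer which boxes are still open.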

Checklists like this are commonly used in industries that value safety, quality, and consistency (e.g. medicine, construction, aviation). I don’t see them as often as I’d expect in software development, despite our commitments to these values.

The idea here is to get people to think about the most common and/or serious classes of errors that can be introduced with nearly all patches. Reviewers tend to focus on whatever issue a patch addresses and pay less attention to the other myriad issues any patch might introduce. Example: a patch adds a null check, the reviewer focuses on pointer validity, and misses a leak being introduced.

Catching mistakes in code review is much, much more efficient than dealing with them after they make it into our code base. Once they’re in, fixing them requires a report, a regression range, debugging, a patch, another patch review, and another opportunity for further regressions. If a checklist like this spurred people to do some extra thinking and eliminated even one in twenty (5%) of preventable regressions in code review, we’d become a significantly more efficient organization.

For this to work, the checklist must be kept short. In fact, there is an art to creating effective checklists, involving more than just brevity, but I won’t get into anything else here. My list here has only four items. Are there items you would add or remove?

General thoughts on this or variations as a way to reduce regressions?

http://boomswaggerboom.wordpress.com/2014/09/01/simple-code-review-checklists/

|

|

Pascal Finette: I've Seen the Future - and It's Virtual |

More than 20 years ago I first experienced virtual reality in one of those large-scale 3D rigs which was traveling the country, setting up shop in the local multiplex cinema and charging you a small fortune to step into a 4-by-4 foot contraption, strap on a pair of 3D goggles, grab a plastic gun and hunt down some aliens in an immersive 3D environment.

It’s funny - as unimpressive as the graphics were, as much as the delay between movement and visual update was puke inducing – I still have vivid memories of the game and the incredible experience of literally stepping into a new world.

Fast forward to last year: Oculus revived the whole Virtual Reality (VR) scene with their Rift headset – cobbled together with cheap off-the-shelf components and some clever hardware and software hacking. The first time I tried the Rift I was hooked. It was the exact same crazy experience I had some 20 years ago – I found myself in a new world, just this time with much better graphics, none of the nasty visual delays (which make most people motion sick) and all delivered in a much more palatable device. And again, I can’t get that initial experience out of my head (in my case a rather boring walking experience of a Tuscan villa).

Since that experience, I joined Singularity University where we have a Rift in our Innovation Lab. Over the course of the last 8 weeks I must have demoed the Rift to at least 30 people – and they all react in the exact same way:

People giggle, laugh, scream, start moving with the motion they see in the headset… They are lost within the experience in less than 30 seconds of putting the goggles on. The last time I saw people react emotionally in a similar way to a new piece of technology was when Apple introduced the iPhone.

It’s rare to see a piece of technology create such a strong emotional reaction (delight!). And that’s precisely the reason why I believe VR will be huge. A game changer. The entry vector will be gaming – with serious applications following suit (think about use cases in the construction industry, engineering, visualization of complex information) and immersive storytelling being probably the biggest game changer. In the future you will not watch a movie or the news – you will be right in it. You will shop in these environments. You will not Skype but literally be with the other person.

And by being instead of just watching we will be able to tap much deeper into human empathy than ever before. To get a glimpse of this future, check out these panoramic pictures of the destruction in Gaza.

With prices for VR technology rapidly approaching zero (the developer version of Oculus Rift’s DK2 headset is a mere $350 and Google already introduced a cardboard (!) kit which turns your Android phone into a VR headset) and software development tools becoming much more accessible, we are rapidly approaching the point where the tools of production become so accessible that we will see an incredible variety of content being produced. And as VR is not bound to a specific hardware platform, I believe we will see a market more akin to the Internet than the closed ecosystems of traditional game consoles or mobile phone app stores.

The future of virtual reality is nigh. And it’s looking damn real.

|

|

Gervase Markham: Kingdom Code UK |

Kingdom Code is a new initiative to gather together Christians who program, to direct their efforts towards hastening the eventual total triumph of God’s kingdom on earth. There’s a preparatory meet-up on Monday 15th September (tickets) and then a full get-together on Monday 13th October. Check out the website and sign up if you are interested.

(There’s also Code for the Kingdom in various cities in the US and India, if you live nearer those places than here.)

http://feedproxy.google.com/~r/HackingForChrist/~3/5qK7pLgMj8k/

|

|

Adam Lofting: One month of Webmaker Growth Hacking |

This post is an attempt to capture some of the things we’ve learned from a few busy and exciting weeks working on the Webmaker new user funnel.

I will forget some things, there will be other stories to tell, and this will be biased towards my recurring message of “yay metrics”.

How did this happen?

As Dave pointed out in a recent email to Webmaker Dev list, “That’s a comma, not a decimal.”

What happened to increase new user sign-ups by 1,024% compared to the previous month?

Is there one weird trick to…?

No.

Sorry, I know you’d like an easy answer…

This growth is the result of a month of focused work and many many incremental improvements to the first-run experience for visitors arriving on webmaker.org from the promotion we’ve seen on the Firefox snippet. I’ll try to recount some of it here.

While the answer here isn’t easy, the good news is it’s repeatable.

Props

While I get the fun job of talking about data and optimization (at least it’s fun when it’s good news), the work behind these numbers was a cross-team effort.

Aki, Andrea, Hannah and I formed the working group. Brett and Geoffrey oversaw the group, sanity checked our decisions and enabled us to act quickly. And others got roped in along the way.

I think this model worked really well.

Where are these new Webmaker users coming from?

We can attribute ~60k of those new users directly to:

- Traffic coming from the snippet

- Who converted into users via our new Webmaker Landing pages

Data-driven iterations

I’ve tried to go back over our meeting notes for the month and capture the variations on the funnel as we’ve iterated through them. This was tricky as things changed so fast.

This image below gives you an idea, but also hides many more detailed experiments within each of these pages.

With 8 snippets tested so far, 5 funnel variations and at least 5 content variables within each funnel we’ve iterated through over 200 variations of this new user flow in a month.
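As a rough check on that figure, the three counts multiply out to 200. The sketch below assumes each content variable contributes one variation per funnel, which the post does not spell out, and the variable names are illustrative only. Because the post says "at least 5" content variables, the product is a lower bound.

```javascript
// Counts quoted in the post.
const snippetsTested = 8;
const funnelVariations = 5;
const contentVariablesPerFunnel = 5; // "at least 5", so this is a minimum

// Every snippet can feed every funnel, and every funnel has its own
// content variables, so the combinations multiply.
const minimumVariations =
  snippetsTested * funnelVariations * contentVariablesPerFunnel;

console.log(minimumVariations); // 200
```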

We’ve been able to do this and get results quickly because of the volume of traffic coming from the snippet, which is fantastic. And in some cases this volume of traffic meant we were learning new things quicker than we were able to ship our next iteration.

What’s the impact?

If we’d run with our first snippet design and our first call to action, we would have had about 1,000 new Webmaker users from the snippet instead of 60,000 (the remainder are from other channels and activities). Total new user accounts are up by ~1,000%, but new users from the snippet specifically increased by around 6 times that.
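The "around 6 times that" comparison can be checked with a little arithmetic. This sketch assumes the comparison is between the roughly 1,000 sign-ups the first design would have produced and the roughly 60,000 actually seen. `percentIncrease` is a helper written for this illustration, not something from the Webmaker tooling.

```javascript
// Percentage increase when going from `before` to `after`.
function percentIncrease(before, after) {
  return ((after - before) / before) * 100;
}

// Approximate figures quoted in the post.
const usersWithFirstDesign = 1000;
const usersWithFinalDesign = 60000;

const snippetIncrease = percentIncrease(usersWithFirstDesign, usersWithFinalDesign);

console.log(snippetIncrease); // 5900
// Compared with the ~1,000% rise in total accounts:
console.log(snippetIncrease / 1000); // 5.9
```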

One not-very-weird trick to growth hacking:

I said there wasn’t one weird trick, but I think the success of this work boils down to one piece of advice:

- Prioritize time and permission for testing, with a clear shared objective, and get just enough people together who can make the work happen.

It’s not weird, and it sounds obvious, but it’s a story that often gets overlooked because it doesn’t have the simple causation-based hook we humans look for in our answers.

It’s much more appealing when someone tells you something like “Orange buttons increase conversion rate”. We love the stories of simple tweaks that have remarkable impact, but really it’s always about process.

More Growth hacking tips:

- Learn to kill your darlings, and stay happy while doing it

  - We worked overtime to ship things that got replaced within a week

  - It can be hard to see that happen to your work when you’re invested in the product

  - My personal approach is to invest my emotion in the impact of the thing being made rather than the thing itself

  - But I had to lose a lot of A/B tests to realize that

- Your current page is your control

  - Test ideas you think will beat it

  - If you beat it, that new page is your new control

  - Rinse and repeat

- Optimize with small changes (content polishing)

- Challenge with big changes (disruptive ideas)

- Focus on areas with the most scope for impact

  - Use data to choose where to use data to make choices

  - Don’t stretch yourself too thin

What happens next?

- We have some further snippet coverage for the next couple of weeks, but not at the same level we’ve had recently, so we’ll see this growth rate drop off

- We can start testing the funnel we’ve built for other sources of traffic to see how it performs

- We have infrastructure for spinning up and testing landing pages for many future asks

- This work is never done, but with any optimization you see declining returns on investment

- We need to keep reassessing the most effective place to spend our time

- We have a solid account sign-up flow now, but there’s a whole user journey to think about after that

- We need to gather up and share the results of the tests we ran within this process

Testing doesn’t have to be scary, but sometimes you want it to be.

http://feedproxy.google.com/~r/adamlofting/blog/~3/5gy86MXb7iw/

|

|

Gervase Markham: Google Safe Browsing Now Blocks “Deceptive Software” |

From the Google Online Security blog:

Starting next week, we’ll be expanding Safe Browsing protection against additional kinds of deceptive software: programs disguised as a helpful download that actually make unexpected changes to your computer—for instance, switching your homepage or other browser settings to ones you don’t want.

I posted a comment asking:

How is it determined, and who determines, what software falls into this category and is therefore blocked?

However, this question has not been approved for publication, let alone answered :-( At Mozilla, we recognise exactly the behaviour this initiative is trying to stop, but without written criteria, transparency and accountability, this could easily devolve into “Chrome now blocks software Google doesn’t like.” Which would be concerning.

Firefox uses the Google Safe Browsing service but enhancements to it are not necessarily automatically reflected in the APIs we use, so I’m not certain whether or not Firefox would also be blocking software Google doesn’t like, and if it did, whether we would get some input into the list.

Someone else asked:

So this will block flash player downloads from https://get.adobe.com/de/flashplayer/ because it unexpectedly changed my default browser to Google Chrome?!

Kudos to Google for at least publishing that comment, but it also hasn’t been answered. Perhaps this change might signal a move by Google away from deals which sideload Chrome? That would be most welcome.

http://feedproxy.google.com/~r/HackingForChrist/~3/HbSZy8FImAo/

|

|

Pascal Finette: Robots are eating our jobs |

Shahin Farshchi wrote a piece for IEEE Spectrum, the flagship magazine of the Institute of Electrical and Electronics Engineers, on “Five Myths and Facts About Robotics Technology Today”.

In the article he states:

“Robots are intended to eliminate jobs: MYTH – Almost every major manufacturing and logistics company I’ve spoken to looks to robotics as a means to improve the efficiency of its operations and the quality of life of its existing workers. So human workers continue to be a key part of the business when it comes to robotics. In fact, workers should view robots as how skilled craftsmen view their precision tools: enhancing output while creating greater job satisfaction. Tesla Motors is just one example of using robots [pictured above] to do all the limb-threatening and back-breaking tasks while workers oversee their operation and ensure the quality of their output. At Tesla’s assembly lines, robots glue, rivet, and weld parts together, under the watchful eye of humans. These workers can pride themselves with being part of a new era in manufacturing where robots help to reinvent and reinvigorate existing industries like the automotive sector.”

I disagree.

It is well documented that robots eliminate jobs (heck - that’s what they are for amongst other things). Shahin even shows a picture from Tesla’s highly automated factory depicting a fully automated production line without a single human around. Stating that robots are not replacing jobs but that the few remaining workers “can pride themselves with being part of a new era in manufacturing where robots help to reinvent and reinvigorate existing industries like the automotive sector” really doesn’t cut it.

Robots and automation are destroying jobs, especially at the lower end of the spectrum. At the same time we are not creating enough new jobs - which already leads to massive challenges to our established systems and will only get worse over time.

I suggest you watch this:

In my opinion, what’s needed is for us to collectively acknowledge the issues at hand and start a productive dialog. The 2013 World Development Report states that we need to create 600 million new jobs globally in the next 15 years to sustain current employment rates – and this doesn’t take into account potential massive job losses due to automation and robots.

We need to start working on this. Now.

|

|

Kevin Ngo: Testing Project Browserify Modules in Karma Test Runner with Gulp |

If you want to test local Browserify modules in your project with Karma, you'll have to take an extra step. One solution is karma-browserify, which bundles your modules with your tests, but it has trouble requiring files that themselves require other files. That really hurts, since we'll often be unit testing local modules that depend on at least one other module, so it would only be useful for requiring simple NPM modules.

Another solution uses Gulp to manually build a test bundle and put it on the project JS root path such that local modules can be resolved.

Here is the Gulp task in our gulpfile.js:

    var gulp = require('gulp');
    var browserify = require('browserify');
    var glob = require('glob');  // You'll have to install this too.
    // Assumed: source() comes from vinyl-source-stream (not shown in the original snippet).
    var source = require('vinyl-source-stream');

    gulp.task('tests', function() {
        // Bundle a test JS bundle and put it on our project JS root path.
        var testFiles = glob.sync('./tests/**/*.js');  // Bundle all our tests.
        return browserify(testFiles).bundle({debug: true})
            .pipe(source('tests.js'))  // The bundle name.
            .pipe(gulp.dest('./www/js'));  // The JS root path.
    });

A test bundle, containing all our test files, will be spit out on our JS root path. Now when we do require('myAppFolder/someJSFile'), Browserify will easily be able to find the module.

But we also have to tell Karma where our new test bundle is. Do so in our karma.config.js file:

    files: [
        {pattern: 'www/js/tests.js', included: true}
    ]

We'll also want to tell Gulp to re-bundle our tests every time the tests are touched. This can be annoying if you have Gulp set up to watch your JS path, since the tests task will spit out a bundle on the JS path:

    gulp.watch('./tests/**/*.js', ['tests']);

Run your tests and try requiring one of your project files. It should work!

|

|

Patrick Cloke: Adding an Auxiliary Audio Input to a 2005 Subaru Outback |

I own a 2005 (fourth generation) Subaru Outback. I’ve had it since the fall of 2006 and it has been great. I have a little over 100,000 miles on it and do not plan to sell it anytime soon.

There is one thing that just kills me though. You cannot (easily [1]) change the radio in it…and it is just old enough [2] to have neither Bluetooth nor an auxiliary audio input. I’ve been carrying around a book of CDs with me for the past 8 years. I decided it was time to change that.

I knew that it was possible to "modify" the radio to accept an auxiliary input, but it involved always playing a silent CD, which I did not find adequate. I recently came across a post of how to do this in such a way that the radio functions as normal, but when you plug in a device to the auxiliary port it cuts out the radio and plays from the device. Someone else had also confirmed that it worked for them. Cool!

I vaguely followed the directions, but made a few changes here and there. Also, everyone online seems to make it seem like the radio is super easy to get out…I seriously think I spent at least two hours on it. There were two videos and a PDF I found useful for this task.

A few images of the uninstalled stereo before any disassembly. (So I could remember how to reassemble it!)

I wouldn’t say that this modification was extremely difficult, but it does involve:

- Soldering to surface mount components (I’m not awesome at soldering, but I have had a good amount of experience).

- The willingness to potentially trash a radio.

- Basic understanding of electrical diagrams and how switches work.

- A lot of time! I spent ~13 hours total working on this.

Total cost of components, however, was < $5.00 (and that’s probably overestimating.) Really the only component I didn’t have was the switching audio jack, which I got at my local RadioShack for $2.99. (I also picked up wire, heatshrink, etc. so…$5.00 sounded reasonable.) The actual list of parts and tools I used was:

- 1/8" Stereo Panel-Mount Phone Jack [$2.99, RadioShack #274-246]

- ~2 feet of each of green and red 22 gauge wire, ~1 foot of black 22 gauge wire.

- Soldering iron / Solder

- 3 x Alligator clip testing wires (1 black, 1 red, 1 green)

- Multimeter

- Hot glue gun / Hot glue

- Various sizes of flat/slotted and Phillips head screw drivers

- Wire strippers

- Wire cutter

- Needle nosed pliers

- Flashlight

- Drill with 1/4" drill bit and a 1/2" spade bit (plus some smaller sized drill bits for pilot holes)

Anyway, once you have the radio out you can disassemble it down to its bare components. (It is held together with a bunch of screws and tabs. I took pictures along each step of the way to ensure I could put it back together.)

The initial steps of disassembly: the front after removing the controls, the reverse of the control unit, a top-down view after removing the top of the unit [3].

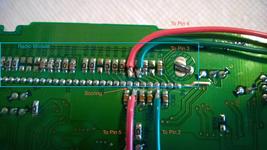

The actual circuit board of the stereo unit. You can see the radio module on the left.

The radio module connects to the motherboard with a 36-pin connector. Pin 31 is the right audio channel and pin 32 is the left audio channel. I verified this by connecting the disassembled radio to the car and testing with alligator clips hooked up to my phone’s audio output [4]. I already knew these were the pins from the directions, but I verified by completing the circuit to these pins and ensuring I heard mixed audio from my phone and the radio.

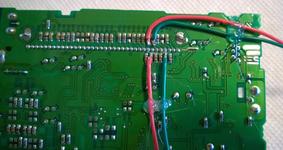

The directions suggested cutting the pin and bending it up to solder to it. I didn’t have any cutting tool small enough to get in between the pins…so I flipped the board over and did sketchier things. I scored the board to remove the traces [5] that connected the radio module to the rest of the board. I then soldered on either side of this connection to route it through the audio connector.

Five soldered connections are required, four to the bottom of the board [6] and one to the ground at the top of the unit.

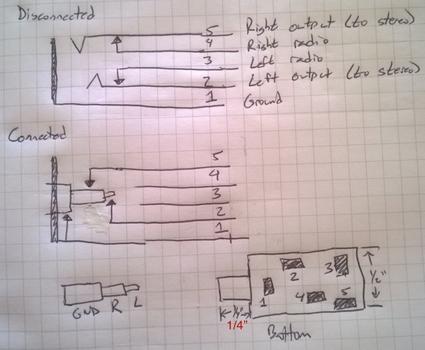

Now, the way that this works is that the audio connector output (pins 2 and 5) is always connected. If nothing is in the jack, it is connected as a passthrough to the inputs (pins 3 and 4, respectively). If an audio connector is plugged in, input redirects to the jack. (Pin 1 is ground.) For reference, red is right audio and green is left audio (black is ground).

A few of the diagrams necessary to do this. The top two diagrams are simply the connector’s two states: no plug and plug. The bottom two diagrams are a normal 1/8" audio plug and the physical pin-out and measurements of the jack.

To reiterate, pins 2 and 5 connect to the "stereo side" of the scored pins 31 and 32 of the radio module. (I.e. They are the output from the connector back to what will be played by the stereo.) Pins 3 and 4 are the inputs from the radio module side of pins 31 and 32 to the connector.
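The switching behaviour of the jack can be modelled in a few lines of JavaScript. This is only a sketch of the logic described above, not anything from the original directions: `PASSTHROUGH` and `jackOutputs` are made-up names, and signals are represented as plain strings for illustration.

```javascript
// Output pin -> the input pin it passes through to when no plug is inserted.
const PASSTHROUGH = { 2: 3, 5: 4 };

// inputs: signals arriving from the radio module side, keyed by input pin (3, 4).
// plug:   null when the jack is empty, otherwise the signals an inserted plug
//         places on the output pins, keyed by output pin (2, 5).
// Returns the signals leaving the jack toward the stereo, keyed by output pin.
function jackOutputs(inputs, plug) {
  const outputs = {};
  for (const [outPin, inPin] of Object.entries(PASSTHROUGH)) {
    outputs[outPin] = plug === null ? inputs[inPin] : plug[outPin];
  }
  return outputs;
}

const radio = { 3: 'radio A', 4: 'radio B' };

// Nothing plugged in: the radio passes straight through.
console.log(jackOutputs(radio, null)); // { '2': 'radio A', '5': 'radio B' }

// Plug inserted: the radio inputs are disconnected and the device is heard.
console.log(jackOutputs(radio, { 2: 'phone A', 5: 'phone B' })); // { '2': 'phone A', '5': 'phone B' }
```

The model leaves out ground (pin 1) and does not say which pin pair carries the left or right channel, since the text does not pin that down.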

So after soldering four connections (and some hot glue), we have the ability to intercept the signal. At this point I took the bare motherboard and tested this in my car with alligator clips to ensure the radio still worked. I then connected the alligator clips to a cut audio plug to ensure everything worked.

The wires were also hot glued to the circuit board as strain relief. After reassembly I tested again with alligator clips.

At this point, I reassembled the radio case and ran the wires out through holes in the side / bottom toward the front of the unit. I noticed there was an empty spot in the top left of the unit which looked like it would fit the panel mount audio jack. After doing some measurements I deemed my chances good enough to drill a hole here for the connector. Some tips on drilling plastic, if you haven’t done it much: use the lowest speed you can; start with very small bits and work your way up (I used 4 stages of bits); and cover both sides in masking tape to avoid scratches.

Another benefit of tape is you can write anywhere you want. These measurements were taken initially on the back and transcribed to the front (where I drilled from).

The plastic was actually too thick for the panel-mount connector to reach through, which is where the 1/2" spade bit came in handy. I used it to drill through roughly half the thickness of the plastic (a little at a time with lots of testing). The connector was then able to nestle inside the thinner plastic and reach all the way through.

After the initial hole was drilled, the tape on the back was removed to thin the plastic.

The last bit was soldering the five connections onto the audio connector and applying a coating of hot glue (for strain relief and to avoid shorts). Once the connector was soldered, the front panel was carefully reassembled. Finally, the completed unit was reinstalled in the car and voila, I now have an auxiliary audio input! Can’t wait to test it out on a long car trip.

The soldered and hot-glued audio jack.

The final installed stereo unit.

One caveat of doing this (and I’m unsure whether this is because I didn’t cut the pins as suggested or whether it is just a fact of doing it this way): if you have an auxiliary input device playing AND play a CD, the audio mixes instead of being replaced by the auxiliary device. It works fine on radio though, so just remember to set the stereo to FM.

| [1] | The head unit of the stereo is directly built into the dashboard and includes the heat / air conditioning controls. People do sell kits to convert the dash into one that can accept an aftermarket radio…but where’s the fun in that? |

| [2] | The 2007 edition had an option for a stereo with satellite radio and an AUX input. I probably could have bought this stereo and installed it, but I was quoted $285 last time I asked about changing my radio. |

| [3] | You can see I actually had a CD in the CD player when I removed the radio. Oops! Luckily it was just a copy of one of my CDs (I never take originals in my car). I didn’t end up scratching it or anything either! |

| [4] | Playing one of my favorite albums: No Control by Bad Religion |

| [5] | This might seem insane, but I was fairly certain I’d be able to solder a jumper back into place if everything didn’t work, so I actually felt more comfortable doing this than cutting the pin. |

| [6] | Please don’t judge my soldering! Two of the four connections were a little sloppy (I had to add solder to those instead of just tinning the wires). I did ensure there were no shorts with a multimeter (and had to resolder one connection). |

http://patrick.cloke.us/posts/2014/08/30/adding-an-auxiliary-audio-input-to-a-2005-subaru-outback/

|

|

Chris McAvoy: Open Badges and JSON-LD |

The BA standard working group has had adding extensions to the OB assertion specification high on its roadmap this summer. We agreed that before we could add an extension to an assertion or Badge Class, we needed to add machine readable schema definitions for the 1.0 standard.

We experimented with JSON-Schema, then JSON-LD. JSON-LD isn’t a schema validator; it’s much more. It builds linked data semantics on the JSON specification. JSON-LD adds several key features to JSON, most of which you can play around with in the JSON-LD node module.

- Add semantic data to a JSON structure, link the serialized object to an object type definition.

- Extend the object by linking to multiple object type definitions.

- A standard way to flatten and compress the data.

- Express the object in RDF.

- Treat the objects like a weighted graph.

All of which are features that support the concept behind the Open Badges standard very well. At its core, the OB standard is a way for one party (the issuer) to assert facts about another party (the earner). The assertion (the badge) becomes portable and displayable at the discretion of the owner of the badge.
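To make the first feature in that list concrete, here is a minimal sketch of what linking a badge assertion to type definitions could look like. The `example.org` IRIs, the field values, and the `expand_terms` helper are all hypothetical, made up for illustration; they are not the vocabulary the working group is considering, and the helper is only a toy stand-in for the idea behind JSON-LD expansion, not the real algorithm.

```python
import json

# Hypothetical context: these IRIs are placeholders for illustration,
# not the vocabulary adopted by the Open Badges working group.
CONTEXT = {
    "recipient": "https://example.org/badges#recipient",
    "badge": "https://example.org/badges#badge",
    "issuedOn": "https://example.org/badges#issuedOn",
}

# A made-up assertion: the issuer asserts a fact about the earner.
assertion = {
    "@context": CONTEXT,
    "@type": "https://example.org/badges#Assertion",
    "recipient": "earner@example.org",
    "badge": "https://example.org/badges/maker-party",
    "issuedOn": "2014-09-02",
}


def expand_terms(doc):
    """Replace short keys with the IRIs from the inline @context.

    Toy version of the idea only: real JSON-LD expansion also handles
    remote contexts, value objects, nesting and much more.
    """
    context = doc.get("@context", {})
    expanded = {}
    for key, value in doc.items():
        if key == "@context":
            continue  # the context is consumed, not copied
        expanded[context.get(key, key)] = value
    return expanded


expanded = expand_terms(assertion)
print(json.dumps(expanded, indent=2))
```

After expansion every key is a full IRI, so two issuers that use different short names can still be understood as talking about the same property.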

JSON-LD is also quickly becoming the standard method of including semantic markup on HTML pages for large indexers like Google. Schema.org now lists JSON-LD examples alongside RDFa and Microdata. Google recommends using JSON-LD for inclusion in their rich snippet listings.

We’ve been talking about JSON-LD on the OB standard working group calls for a while now. It’s starting to feel like consensus is forming around inclusion of JSON-LD markup in the standard. This Tuesday, September 2nd 2014, we’ll meet again to collectively build a list of arguments for and against the move. We’ll also discuss a conditional rollout plan (conditional in that it will only be executed if we get the thumbs up from the community) and identify any gaps we need to cover with commitments from the community.

It’s going to be a great meeting. If you’re at all interested in JSON-LD and Open Badges, please join us!

|

|

Doug Belshaw: Weeknote 35/2014 |

This week I’ve been:

- Surviving while being home alone. The rest of my family flew down to Devon (it’s quicker/easier than driving) to visit the in-laws.

- Working on lots of stuff around the house. There are no plants left in my garden, for example. We decided that we want to start from ‘ground zero’ so I went on a bit of a mission.

- Suffering after launching myself into the week too hard. I’d done half of the things I wanted to do all week by Tuesday morning. By Wednesday I was a bit burnt out.

- Writing blog posts:

- Accepting an invitation to join Code Club’s education advisory committee.

- Finding out about an opportunity to work with a well-known university in the US to design a module for their Ed.D. programme. More on that soon, hopefully!

- Clearing out the Webmaker badges queue (with some assistance from my amazing colleagues)

- Inviting some people to talk to me about the current Web Literacy Map and how we can go about updating it to a version 2.0.

- Finishing and sending the rough draft of a video for a badge which will be available on the iDEA award website when it launches properly.

- Starting to lift weights at the gym. I actually started last week, but I’ve already noticed it helping my swimming this week. Improved stamina, and the bottom of my right hamstring doesn’t hurt when I get out of the pool!

Next week I’m at home with a fuller calendar than usual. That’s because I’m talking to lots of people about future directions for the Web Literacy Map. If you’ve started using it, then I’d love to interview you. Sign up for that here.

|

|

Eric Shepherd: The Sheppy Report: August 29, 2014 |

This week, my WebRTC research continued; I spent a lot of time watching videos of presentations, pausing every few seconds to take notes, and rewinding often to be sure I got things right. It was interesting but very, very time-consuming!

I got a lot accomplished this week, although not any actual code on samples like I’d planned to. However, the pages on which the smaller samples will go are starting to come together, between bits of actual content on MDN and my extensive notes and outline. So that’s good.

I’m looking forward to this three-day Labor Day holiday here in the States. I’ll be back at it on Tuesday!

What I did this week

- Copy-edited the Validator glossary entry.

- Copy-edited and cleaned up the Learning area page Write a simple page in HTML.

- Created an initial stub documentation project plan page for updating the HTML element interface reference docs.

- Turned https://developer.mozilla.org/en-US/docs/Project:About into a redirect to the right place.

- Read a great deal about WebRTC.

- Watched many videos about WebRTC, pausing a lot to take copious notes.

- Built an outline of all the topics I want to be sure to cover. I’m sure this will continue to grow for a while yet.

- Gathered notes and built agendas for the MDN community meeting and the Web APIs documentation meeting.

- Updated the WebRTC doc plan with new information based on my initial notes.

- Offered more input on a bug recommending that we try to add code to prevent people from using the style attribute or any undefined classes.

- Filed bug 1060395 asking for a way to find the pages describing the individual methods and properties of an interface in the Web API reference.

- Fixed bug 1058814 about hard-to-read buttons by correcting the styles used by a macro.

- Dealt with expense reports.

- Started very initial work on WebRTC doc tree construction, preparing to reshuffle and clean up the existing, somewhat old, pages, and to add lots of new stuff.

- Started work on trying to figure out how to make the SubpageMenuByCategories macro not lose headers; it’s calling through to MakeColumnsForDL, which specifically only works for a straight-up

Meetings attended this week

Monday

- MDN bug triage meeting

- #mdndev planning meeting

Tuesday

- Developer Relations weekly meeting.

- 1:1 with Teoli. This went on for an hour instead of the usual 30 minutes, due to the enormous amount of Big Stuff we discussed.

Wednesday

- MDN community meeting

Friday

- #mdn review meeting

- MDN bug swat

- Web APIs documentation meeting.

A pretty good week all in all!

http://www.bitstampede.com/2014/08/29/the-sheppy-report-august-29-2014/

|

|

Benoit Girard: Visual warning for slow B2G transaction landed |

With the landing of bug 1055050, if you turn on the FPS counter on mobile you will now notice a rectangle around the screen edge to warn you that a transaction was out of budget.

- The visual warning will appear if a transaction took over 200ms from start to finish.

- Yellow indicates the transaction took over 200ms.

- Orange will indicate the transaction took about 500ms.

- Red will indicate the transaction is about 1000ms or over.
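As a rough illustration of the color scale above, the mapping could be sketched like this. The post only says "about" 500ms and "about" 1000ms, so the exact cutoffs below are assumptions, and `warning_color` is a hypothetical helper, not the code that landed in Gecko.

```python
def warning_color(duration_ms):
    """Return the warning color for a transaction, or None if no warning.

    Cutoffs for orange and red are assumed; the post gives them only
    approximately.
    """
    if duration_ms >= 1000:
        return "red"
    if duration_ms >= 500:
        return "orange"
    if duration_ms > 200:
        return "yellow"
    return None  # within the 200ms budget, nothing is drawn


for sample in (150, 250, 600, 1200):
    print(sample, warning_color(sample))
```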

What’s a transaction?

It’s running the rendering pipeline and includes (1) Running request animation frame and other refresh observers, (2) Flushing pending style changes, (3) Flushing and reflowing any pending layout changes, (4) Building a display list, (5) Culling, (6) Updating the layer tree, (7) Sending the final to the compositor, (8) Syncing resources with the GPU. It does NOT include compositing, which isn’t part of the main thread transaction. It does not warn for other events like slow running JS events.

Why is this important?

A transaction, just like any other gecko event, blocks the main thread. This means that anything else queued and waiting to be serviced will be delayed, so many things on the page/app will be held up: animations, typing, canvas, js callbacks, timers, the next frame.

Why 200ms?

200ms is already very high. If we want anything in the app to run at 60 FPS that doesn’t use a magical async path, then any event taking 16ms or more will cause noticeable stutter. However, we’re starting with a 200ms threshold to focus on the bigger items first.
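The arithmetic behind those numbers can be checked with a short sketch. The helper name `frames_spanned` is made up for this example; it simply counts how many whole 60 FPS frame intervals fit inside an event of a given length.

```python
TARGET_FPS = 60

# Time available per frame at 60 FPS, in milliseconds (roughly 16.67).
frame_budget_ms = 1000 / TARGET_FPS


def frames_spanned(duration_ms, fps=TARGET_FPS):
    """Whole frame intervals covered by an event lasting duration_ms.

    Integer arithmetic is used to avoid floating point rounding.
    """
    return duration_ms * fps // 1000


print(round(frame_budget_ms, 2))
print(frames_spanned(200))  # a transaction right at the 200ms threshold
```

A transaction that only just triggers the warning therefore already covers 12 frame intervals at 60 FPS, which is why 200ms counts as "very high".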

How do I fix a visual warning?

The warning is just provided as a visual tool.

http://benoitgirard.wordpress.com/2014/08/29/visual-warning-for-slow-b2g-transaction-landed/

|

|

Priyanka Nag: Maker Party Bhubaneshwar |

On Saturday (23rd August 2014), we were at the Center of IT & Management Education (CIME), where we were asked to address a crowd of 100 participants whom we were supposed to teach webmaking. Trust me, very rarely do we get such a crowd at events, where we get the opportunity to be less of a teacher and more of a learner. We taught them webmaking, true, but in return we learnt a lot from them.

|

| Maker Party at Center of IT & Management Education (CIME) |

On Sunday, things were even more fabulous at the Institute of Technical Education & Research (ITER), Siksha 'O' Anusandhan University college, where we were welcomed by around 400 participants, all filled with energy, enthusiasm and the willingness to learn.

|

| Maker Party at Institute of Technical Education & Research(ITER) |

Our agenda for both days was simple: to have loads and loads of fun! We kept the tracks interactive and very open-ended. On both days, we covered the following topics:

- Introduction to Mozilla

- Mozilla Products and projects

- Ways of contributing to Mozilla

- Intro to Webmaker tools

- Hands-on session on Thimble, Popcorn, X-Ray Goggles and Appmaker

|

| Cake..... |

- Sayak Sarkar, the co-organizer for this event.

- Sumantro, Umesh and Sukanta for travelling all the way from Kolkata and helping us out with the sessions.

- Rish and Prasanna for organizing these events.

- Most importantly, the entire team of volunteers from both colleges, without whom we wouldn't have been able to even move a desk.

|

| The article in the newspaper next morning |

http://priyankaivy.blogspot.com/2014/08/maker-party-bhubaneshwar.html

|

|

Pascal Finette: Follow Your Fears |

Building trophies in my soul…

|

|