You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the contents of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.


The Talospace Project: Firefox 92 on POWER |

Meanwhile, in OpenPOWER JIT progress, I'm about halfway through getting the Wasm tests to pass, though I'm currently hung up on a memory corruption bug while testing Wasm garbage collection. It's our bug; it doesn't happen with the C++ interpreter, but unfortunately like most GC bugs it requires hitting it "just right" to find the faulty code. When it all passes, we'll pull everything up to 91ESR for the MVP, and you can try building it. If you want this to happen faster, please pitch in and help.

|

|

The Mozilla Blog: Matrix 4, Blue’s Clues, #StarTrekDay and More — Everything That’s Old is New Again in This Week’s Top Shelf |

|

|

Spidermonkey Development Blog: SpiderMonkey Newsletter (Firefox 92-93) |

|

|

The Mozilla Blog: Feel good when you get online with Firefox |

The post Feel good when you get online with Firefox appeared first on The Mozilla Blog.

https://blog.mozilla.org/en/videos/feel-good-when-you-get-online-with-firefox/

|

|

The Mozilla Blog: Did internet friends fill the gaps left by social distance? |

March 2020 brought to the world a scenario we only imagined possible in dystopian novels. Once bustling cities and towns were desolate. In contrast, the highways and byways of the internet were completely congested with people grasping for human connection, and internet friends became more important than ever.

Since then, there have been countless discussions about how people have fared with keeping in touch with others during the COVID-19 pandemic — like how families have endured while being separated by continents without the option to travel, and how once solid friendships have waxed and waned without brunches and cocktail hours.

However, the internet has served as a proverbial town square more than ever before, with many people using online spaces to make and cultivate internet friends over the last year and a half. As the country hesitantly starts reopening, the looming question is: what will these online relationships look like when COVID-19 is no more?

For Will F. Coakley, a deputy constable from Austin, Texas, the highs of her online friend groups she made on Zoom and Marco Polo have already dissipated.

“My COVID circle is no more,” she said. “I’m 38, so people my age often have spouses and children.”

Coakley found online platforms to be a refreshing reprieve from her demanding profession on the front lines of the pandemic. Just as she was getting accustomed to ‘the new normal,’ her routines changed once again, with many online friends falling out of touch as cities and towns began to experiment with reopening.

Coakley has not met anyone from her COVID circle in person, and any further communication is uncertain, boding even worse than the potential dissolutions of real-life friendships reported on throughout the year.

“In a perfect world, we would hope that things opening up would mean that you could start to meet up with your online friends in person. However, many people will experience a transition in their social circle as they start allocating more of their emotional resources to in-person interactions,” said Kyler Shumway, PsyD, a clinical psychologist and author of The Friendship Formula: How to Say Goodbye to Loneliness and Discover Deeper Connection. “You may spend less time with your online people and more time with coworkers, friends, and family that are in your immediate area.”

Looking back, many social apps similarly spiked during the lockdown and are now seeing use fall. The invite-only social networking app Clubhouse launched in March 2020. It quickly gained popularity at the height of the pandemic, amassing 600,000 registered users by December 2020 and 8.1 million downloads by mid-February 2021.

The original fervor over Clubhouse has waned as fewer people are cooped up indoors. While many people still use the platform for various professional purposes or niche hobbies, its day-to-day usership has dropped significantly.

Olivia B. Othman, 38, a Project Assistant in Wuppertal, Germany, developed friendships online during the pandemic through Clubhouse as well as through a local app called Spontacts. She has been able to meet people in person as her area has begun to open, and she said she found the experience liberating.

While Othman already had experience with developing close personal relationships online, the pandemic prompted a unique perspective for her, encouraging her to invest in new devices for better communication.

Overall, she has fared well with sustaining her online friendships.

“I have dropped some [people] but also found good people among them,” Othman said.

Othman wasn’t alone in turning to apps for friendship and human connection. Facebook and Instagram were among the most downloaded apps in 2020, according to Business of Apps. Facebook had 2.85 billion monthly active users as of the first quarter of 2021, compared to 2.60 billion during the first quarter of 2020. Instagram went from 1 billion monthly active users in the first quarter of 2020 to 1.07 billion as of the first quarter of 2021. And Twitter has grown from 186 million users to 199 million users since the pandemic started, according to Twitter’s first quarter earnings report.

Apps such as TikTok and YouTube were popular outlets for creating online friendship in 2020; however, their potential for replicating the emotional fulfillment that comes from interpersonal relationships is limited, as Shumway said, “the unmet need remains unmet.”

“Many online spaces offer synthetic connections. Instead of spending time with a friend playing a game online or going out to grab coffee, a person might be tempted to watch one of their favorite YouTubers or scroll through TikTok for hours on end,” Shumway said. “These kinds of resources provide a felt sense of relationship – you feel like you’re part of something. But then when you turn off your screen, those feelings of loneliness will come right back.”

“Relationships that may have formed over the past year through online interactions, my sense is that those will continue to last even as people start to reconnect in person. Online friendships have their limits, but they are friendships nonetheless,” Shumway said.

Zach Fox, 29, a software engineer, has maintained long-distance friendships thanks in large part to online gaming, an important social connection that carried on from before the pandemic.

“We would text chat with each other most of the time, and use voice chat when playing video games together,” he said.

While he is excited about seeing friends and family again as restrictions lift, Fox feels his online friendships are just as strong.

“I feel closer to some of my online friends than I do to some of my ‘offline’ friends,” he said. “With several exceptions, such as my relationship with my fiancée, I tend to favor online friendships because I have the opportunity to be present with and spend quality time with my online friends more often than I can spend time with IRL friends.”

Ultimately, many people are finding less anxiety and more excitement in the prospect of returning to the real world, even if some interactions may be awkward. Both Coakley and Othman said they favored in-person meetings over online interactions, despite having enjoyed the time they spent with many online friends.

“As you make these choices, make sure to communicate openly and honestly. Rather than letting a friendship slowly decay through ghosting, consider being real and explaining to them what is happening for you. You might share that you are spending more time with people in person and that you have less energy for online time with them,” Shumway said.

As things change, Coakley has found other connections to keep herself grounded. Her mental health team includes a counselor she has sessions with online but has met in person only once; she admitted to being more enthusiastic about her counselor whom she meets with in person regularly.

“I hate having a screen between them and me. It’s more intimate and a shared experience in person,” she said.

Despite not keeping up with her pandemic friends, Coakley does have other online friends that she knew before the pandemic with whom she has close connections.

“At the very least, my [online] connections with [folks and with] Black women have developed into actual friendships, and we have plans on meeting in person,” she said. “We’ve become an integral part of each other’s lives. Like family, almost.”

The post Did internet friends fill the gaps left by social distance? appeared first on The Mozilla Blog.

https://blog.mozilla.org/en/internet-culture/internet-friends-during-pandemic-online/

|

|

The Rust Programming Language Blog: Announcing Rust 1.55.0 |

The Rust team is happy to announce a new version of Rust, 1.55.0. Rust is a programming language empowering everyone to build reliable and efficient software.

If you have a previous version of Rust installed via rustup, getting Rust 1.55.0 is as easy as:

rustup update stable

If you don't have it already, you can get rustup from the appropriate page on our website, and check out the detailed release notes for 1.55.0 on GitHub.

What's in 1.55.0 stable

Cargo deduplicates compiler errors

In past releases, when running cargo test, cargo check --all-targets, or similar commands which built the same Rust crate in multiple configurations, errors and warnings could show up duplicated as the rustc's were run in parallel and both showed the same warning.

For example, in 1.54.0, output like this was common:

$ cargo +1.54.0 check --all-targets
    Checking foo v0.1.0
warning: function is never used: `foo`
 --> src/lib.rs:9:4
  |
9 | fn foo() {}
  |    ^^^
  |
  = note: `#[warn(dead_code)]` on by default

warning: 1 warning emitted

warning: function is never used: `foo`
 --> src/lib.rs:9:4
  |
9 | fn foo() {}
  |    ^^^
  |
  = note: `#[warn(dead_code)]` on by default

warning: 1 warning emitted

    Finished dev [unoptimized + debuginfo] target(s) in 0.10s

In 1.55, this behavior has been adjusted to deduplicate and print a report at the end of compilation:

$ cargo +1.55.0 check --all-targets
    Checking foo v0.1.0
warning: function is never used: `foo`
 --> src/lib.rs:9:4
  |
9 | fn foo() {}
  |    ^^^
  |
  = note: `#[warn(dead_code)]` on by default

warning: `foo` (lib) generated 1 warning
warning: `foo` (lib test) generated 1 warning (1 duplicate)
    Finished dev [unoptimized + debuginfo] target(s) in 0.84s

Faster, more correct float parsing

The standard library's implementation of float parsing has been updated to use the Eisel-Lemire algorithm, which brings both speed improvements and improved correctness. In the past, certain edge cases failed to parse, and this has now been fixed.

You can read more details on the new implementation in the pull request description.
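The new parser is internal to the standard library, so the sketch below does not reproduce Eisel-Lemire. It only exercises `str::parse::<f64>`. It checks that the decimal text `Display` produces for a few long or extreme values parses back to a bit-identical `f64`, which is one property a correct parser must satisfy. The helper name `round_trips` and the sample values are illustrative choices. They are not taken from the release notes.

```rust
/// Formats `x` with `Display`, parses the text back with `str::parse`, and
/// reports whether the result has exactly the same bit pattern as `x`.
fn round_trips(x: f64) -> bool {
    let text = x.to_string();
    match text.parse::<f64>() {
        Ok(parsed) => parsed.to_bits() == x.to_bits(),
        Err(_) => false,
    }
}

fn main() {
    // Illustrative inputs: an inexact fraction, a tiny value whose Display text
    // has about 300 digits, the smallest normal and subnormal values, and f64::MAX.
    let samples = [0.1, 1.0 / 3.0, 1e-300, f64::MIN_POSITIVE, 5e-324, f64::MAX];
    for &x in &samples {
        println!("{:e} round-trips: {}", x, round_trips(x));
    }
}
```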

std::io::ErrorKind variants updated

std::io::ErrorKind is a #[non_exhaustive] enum that classifies errors into portable categories, such as NotFound or WouldBlock. Rust code that has a std::io::Error can call the kind method to obtain a std::io::ErrorKind and match on that to handle a specific error.

Not all errors are categorized into ErrorKind values; some are left uncategorized and placed in a catch-all variant. In previous versions of Rust, uncategorized errors used ErrorKind::Other; however, user-created std::io::Error values also commonly used ErrorKind::Other. In 1.55, uncategorized errors now use the internal variant ErrorKind::Uncategorized, which we intend to leave hidden and never available for stable Rust code to name explicitly; this leaves ErrorKind::Other exclusively for constructing std::io::Error values that don't come from the standard library. This enforces the #[non_exhaustive] nature of ErrorKind.

Rust code should never match ErrorKind::Other and expect any particular underlying error code; only match ErrorKind::Other if you're catching a constructed std::io::Error that uses that error kind. Rust code matching on std::io::Error should always use _ for any error kinds it doesn't know about, in which case it can match the underlying error code, or report the error, or bubble it up to calling code.

We're making this change to smooth the way for introducing new ErrorKind variants in the future; those new variants will start out nightly-only, and only become stable later. This change ensures that code matching variants it doesn't know about must use a catch-all _ pattern, which will work both with ErrorKind::Uncategorized and with future nightly-only variants.
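The matching guidance above can be illustrated with a small sketch. The helper `describe` and its message strings are hypothetical. The pattern is the one the text recommends:

- match the specific kinds you care about;
- treat `ErrorKind::Other` as "an error we constructed ourselves";
- end with a `_` arm so that `ErrorKind::Uncategorized` and any future variants are still handled.

```rust
use std::io::{self, ErrorKind};

/// Hypothetical helper: maps an io::Error to a short description.
fn describe(err: &io::Error) -> &'static str {
    match err.kind() {
        ErrorKind::NotFound => "not found",
        ErrorKind::PermissionDenied => "permission denied",
        // Reserved for errors constructed by application code; since 1.55 the
        // standard library reports uncategorized errors under a hidden variant.
        ErrorKind::Other => "application-defined error",
        // Catch-all arm: covers ErrorKind::Uncategorized and future variants.
        _ => "some other I/O error",
    }
}

fn main() {
    let missing = io::Error::new(ErrorKind::NotFound, "config file missing");
    let custom = io::Error::new(ErrorKind::Other, "checksum mismatch");
    let timeout = io::Error::new(ErrorKind::TimedOut, "no response");
    println!("{}", describe(&missing));
    println!("{}", describe(&custom));
    println!("{}", describe(&timeout));
}
```

Because `ErrorKind` is `#[non_exhaustive]`, the compiler would reject this `match` outside the standard library without the final `_` arm.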

Open range patterns added

Rust 1.55 stabilized using open ranges in patterns:

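fn main() {
    let x: i32 = 42; // an arbitrary example value
    // `1..` matches every u32 from 1 upward, so the match is exhaustive.
    match x as u32 {
        0 => println!("zero!"),
        1.. => println!("positive number!"),
    }
}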

Read more details here.

Stabilized APIs

The following methods and trait implementations were stabilized.

- Bound::cloned
- Drain::as_str
- IntoInnerError::into_error
- IntoInnerError::into_parts
- MaybeUninit::assume_init_mut
- MaybeUninit::assume_init_ref
- MaybeUninit::write
- array::map
- ops::ControlFlow
- x86::_bittest
- x86::_bittestandcomplement
- x86::_bittestandreset
- x86::_bittestandset
- x86_64::_bittest64
- x86_64::_bittestandcomplement64
- x86_64::_bittestandreset64
- x86_64::_bittestandset64
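Two of the newly stabilized APIs, `array::map` and `ops::ControlFlow`, can be exercised in a short program. It assumes Rust 1.55 or later. The helper names `double_all` and `smallest_divisor` are hypothetical. The `ControlFlow` usage with `Iterator::try_for_each` follows the pattern shown in the standard library documentation.

```rust
use std::ops::ControlFlow;

/// `array::map` returns a new fixed-size array rather than an iterator.
fn double_all(values: [i32; 4]) -> [i32; 4] {
    values.map(|v| v * 2)
}

/// Finds the smallest divisor of `n` in 2..n. Returning ControlFlow::Break
/// from the closure stops try_for_each early; Continue(()) means none found.
fn smallest_divisor(n: u32) -> ControlFlow<u32> {
    (2..n).try_for_each(|d| {
        if n % d == 0 {
            ControlFlow::Break(d)
        } else {
            ControlFlow::Continue(())
        }
    })
}

fn main() {
    println!("{:?}", double_all([1, 2, 3, 4]));
    println!("{:?}", smallest_divisor(403)); // 403 = 13 * 31
    println!("{:?}", smallest_divisor(13)); // prime, so no divisor in 2..13
}
```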

The following previously stable functions are now const.

Other changes

There are other changes in the Rust 1.55.0 release: check out what changed in Rust, Cargo, and Clippy.

Contributors to 1.55.0

Many people came together to create Rust 1.55.0. We couldn't have done it without all of you. Thanks!

Dedication

Anna Harren was a member of the community and contributor to Rust known for coining the term "Turbofish" to describe ::<> syntax. Anna recently passed away after living with cancer. Her contribution will forever be remembered and be part of the language, and we dedicate this release to her memory.

|

|

Hacks.Mozilla.Org: Time for a review of Firefox 92 |

Release time comes around so quickly! This month we have quite a few CSS updates, along with the new Object.hasOwn() static method for JavaScript.

This blog post provides merely a set of highlights; for all the details, check out the following:

CSS Updates

A couple of CSS features have moved from behind a preference and are now available by default: accent-color and size-adjust.

accent-color

The accent-color CSS property sets the color of an element’s accent. Accents appear in elements such as a checkbox or radio input. Its default value is auto, which represents a UA-chosen color that should match the accent color of the platform. You can also specify a color value. Read more about the accent-color property here.

size-adjust

The size-adjust descriptor for @font-face takes a percentage value which acts as a multiplier for glyph outlines and metrics. Another tool in the CSS box for controlling fonts, it can help to harmonize the designs of various fonts when rendered at the same font size. Check out some examples on the size-adjust descriptor page on MDN.

And more…

Along with both of those, the break-inside property now supports the values avoid-page and avoid-column, the font-size-adjust property accepts two values, and, if that wasn’t enough, system-ui is now supported as a generic font family name for the font-family property.

font-size-adjust property on MDN

Object.hasOwn arrives

A nice addition to JavaScript is the Object.hasOwn() static method. This returns true if the specified property is a direct property of the object (even if that property’s value is null or undefined). false is returned if the specified property is inherited or not declared. Unlike the in operator, this method does not check for the specified property in the object’s prototype chain.

Object.hasOwn() is recommended over Object.prototype.hasOwnProperty() because it works for objects created using Object.create(null) and with objects that have overridden the inherited hasOwnProperty() method.

Read more about Object.hasOwn() on MDN

The post Time for a review of Firefox 92 appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2021/09/time-for-a-review-of-firefox-92/

|

|

Will Kahn-Greene: Mozilla: 10 years |

It's been a long while since I wrote Mozilla: 1 year review. I hit my 10-year "Moziversary" as an employee on September 6th. I was hired in a "doubling" period of Mozilla, so there are a fair number of people who are hitting 10 year anniversaries right now. It's interesting to see that even though we're all at the same company, we had different journeys here.

I started out as a Software Engineer or something like that. Then I was promoted to Senior Software Engineer and then Staff Software Engineer. Then last week, I was promoted to Senior Staff Software Engineer. My role at work over time has changed significantly. It was a weird path to get to where I am now, but that's probably a topic for another post.

I've worked on dozens of projects in a variety of capacities. Here's a handful of the ones that were interesting experiences in one way or another:

SUMO (support.mozilla.org): Mozilla's support site

Input: Mozilla's feedback site, user sentiment analysis, and Mozilla's initial experiments with Heartbeat and experiments platforms

MDN Web Docs: documentation, tutorials, and such for web standards

Mozilla Location Service: Mozilla's device location query system

Buildhub and Buildhub2: index for build information

Socorro: Mozilla's crash ingestion pipeline for collecting, processing, and analyzing crash reports for Mozilla products

Tecken: Mozilla's symbols server for uploading and downloading symbols and also symbolicating stacks

Standup: system for reporting and viewing status

FirefoxOS: Mozilla's mobile operating system

I also worked on a bunch of libraries and tools:

siggen: library for generating crash signatures using the same algorithm that Socorro uses (Python)

Everett: configuration library (Python)

Markus: metrics client library (Python)

Bleach: sanitizer for user-provided text for use in an HTML context (Python)

ElasticUtils: Elasticsearch query DSL library (Python)

mozilla-django-oidc: OIDC authentication for Django (Python)

Puente: convenience library for using gettext strings in Django (Python)

crashstats-tools: command line tools for accessing Socorro APIs (Python)

rob-bugson: Firefox addon that adds Bugzilla links to GitHub PR pages (JS)

paul-mclendahand: tool for combining GitHub PRs into a single branch (Python)

Dennis: gettext translated strings linter (Python)

I was a part of things:

Soloists

Data steward for crash data

Mozilla Open Source Support: I served on the Foundational Technology grant committee

I've given a few presentations [1]:

Dennis Dubstep translation (2013)

Tecken Overview (2020)

Socorro Overview (2021)

[1] I thought there were more, but I can't recall what they might have been.

I've left lots of FIXME notes everywhere.

I made some stickers:

I've worked with a lot of people and created some really warm, wonderful friendships. Some have left Mozilla, but we keep in touch.

I've been to many work weeks, conferences, summits, and all hands trips.

I've gone through a few profile pictures:

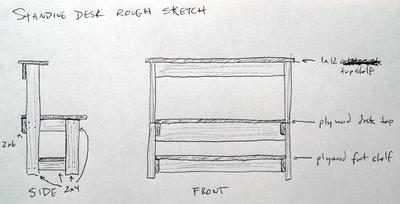

I've built a few desks, though my pictures are pretty meagre:

I've written lots of blog posts on status, project retrospectives, releases, initiatives, and such. Some of them are fun reads still.

It's been a long 10 years. I wonder if I'll be here for 10 more. It's possible!

https://bluesock.org/~willkg/blog/mozilla/mozilla_10_years.html

|

|

This Week In Rust: This Week in Rust 407 |

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

- [Inside] Splitting the const generics features

- [Inside] 1.55.0 pre-release testing

Newsletters

Project/Tooling Updates

- rust-analyzer Changelog #93

- This week in Fluvio #5: the programmable streaming platform

- rustc_codegen_gcc: Progress Report #3

- This week in Datafuse #6

- Announcing Relm4 v0.1

- SixtyFPS (GUI crate) weekly report for 6th of September 2021

Observations/Thoughts

- Why Rust for offensive security

- Had a blast porting one of my serverless applications from Go to Rust - some things I learned

- Broken Encapsulation

- Faster Top Level Domain Name Extraction with Rust

- Rust programs written entirely in Rust

- Fast Rust Builds

- Virtual Machine Dispatch Experiments in Rust

- Rust Verification Tools - Retrospective

- How to avoid lifetime annotations in Rust (and write clean code)

- Using SIMD acceleration in Rust to create the world's fastest tac

- Overview of the Rust cryptography ecosystem

- Rustacean Principles

- Writing software that's reliable enough for production

- Plugins in Rust: Getting Started

- A Gopher's Foray into Rust

- Building a reliable and tRUSTworthy web service

- [audio] The Rustacean Station Podcast - Rust in cURL

Rust Walkthroughs

- The Why and How of Rust Declarative Macros

- Build a secure access tunnel to a service inside of a Remote Private Network, using Rust

- Rust on RISC-V BL602: Rhai Scripting

- Rudroid - Writing the World's worst Android Emulator in Rust

- Hexagonal architecture in Rust #3

- Hexagonal architecture in Rust #4

- Explaining How Memory Management in Rust Works by Comparing with JavaScript

- Postgres Extensions in Rust

- Let's overtake go/fasthttp with rust/warp

- How we built our Python Client that's mostly Rust

- Combining Rust and C++ code in your Bela project

- Data-oriented, clean&hexagonal architecture software in Rust - through an example project

- Let's build an LC-3 Virtual Machine

- How to think of unwrap

- Learning Rust: Interfacing with C

- How to build a job queue with Rust and PostgreSQL

- [ID] Belajar Rust - 02: Instalasi Rust

- [video] Crust of Rust: async/await

- [video] Concurrency in Rust - Sharing State

- [video] Setting up an Arduino Project using Rust

Miscellaneous

- Unity files patent for ECS in game engines that would probably affect many Rust ECS crates, including Bevy's

- Rust 2021 celebration and thanks


- Wanted: Rust sync web framework

- [audio] Rust 2021 Edition

Crate of the Week

Sadly, we had no nominations this week. Still, in the spirit of not leaving you without some neat Rust code, I give you gradient, a command-line tool to extract gradients from SVG, display them, and manipulate them.

Please submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

- Rust for the Polyglot Programmer - a guide in need of review by and feedback from the Rust Community

- Survey - How People Use Rust

Some of these tasks may also have mentors available, visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

300 pull requests were merged in the last week

- introduce let...else
- update const generics feature gates
- allow ~const bounds on trait assoc functions
- emit specific warning to clarify that #[no_mangle] should not be applied on foreign statics or functions
- fix 2021 dyn suggestion that used code as label
- warn when [T; N].into_iter() is ambiguous in the new edition
- detect bare blocks with type ascription that were meant to be a struct literal
- use right span in prelude collision suggestions with macros
- improve structured tuple struct suggestion
- move global analyses from lowering to resolution
- fmt::Formatter::pad: don't call chars().count() more than one time
- add carrying_add, borrowing_sub, widening_mul, carrying_mul methods to integers
- stabilize UnsafeCell::raw_get
- stabilize Iterator::intersperse
- stabilize std::os::unix::fs::chroot
- compiler-builtins: optimize memcpy, memmove and memset
- futures: add TryStreamExt::try_forward, remove TryStream bound from StreamExt::forward
- futures: correcting overly restrictive lifetimes in vectored IO
- cargo: stabilize 2021 edition
- cargo: improve error message when unable to initialize git index repo
- clippy: add the derivable_impls lint
- rustdoc: clean up handling of lifetime bounds
- rustdoc: don't panic on ambiguous inherent associated types
- rustdoc: box GenericArg::Const to reduce enum size
- rustdoc: display associated types of implementors

Rust Compiler Performance Triage

A busy week, with lots of mixed changes, though in the end only a few were deemed significant enough to report here.

Triage done by @pnkfelix. Revision range: fe379..69c4a

3 Regressions, 1 Improvement, 3 Mixed; 0 of them in rollups. 57 comparisons made in total.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

- [disposition: close] Proposal: Else clauses for for and while loops

- [disposition: merge] Scrape code examples from examples/ directory for Rustdoc

- [disposition: merge] Rust-lang crate ownership policy

Tracking Issues & PRs

- [disposition: merge] Deprecate array::IntoIter::new

- [disposition: merge] Partially stabilize array_methods

- [disposition: merge] Tracking issue Iterator map_while

New RFCs

Upcoming Events

Online

- September 8, 2021, Denver, CO, US - Rust Q&A - Rust Denver

- September 14, 2021, Seattle, WA, US - Monthly Meetup - Seattle Rust Meetup

- September 15, 2021, Vancouver, BC, CA - Considering Rust - Vancouver Rust

- September 16, 2021, Berlin, DE - Rust Hack and Learn - Berline.rs

- September 18, 2021, Tokyo, JP - Rust.Tokyo 2021

North America

- September 8, 2021, Atlanta, GA, US - Grab a beer with fellow Rustaceans - Rust Atlanta

- September 9, 2021, Pleasant Grove, UT, US - Rusty Engine: A 2D game engine for learning Rust with Nathan Stocks (and Pizza)

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

Formlogic

OCR Labs

ChainSafe

Subspace

dcSpark

Kraken

Kollider

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

In Rust, soundness is never just a convention.

Thanks to Riccardo D'Ambrosio for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, and cdmistman.

https://this-week-in-rust.org/blog/2021/09/08/this-week-in-rust-407/

|

|

Data@Mozilla: This Week in Glean: Data Reviews are Important, Glean Parser makes them Easy |

(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean.) All “This Week in Glean” blog posts are listed in the TWiG index.

At Mozilla we put a lot of stock in Openness. Source? Open. Bug tracker? Open. Discussion Forums (Fora?)? Open (synchronous and asynchronous).

We also have an open process for determining if a new or expanded data collection in a Mozilla project is in line with our Privacy Principles and Policies: Data Review.

Basically, when a new piece of instrumentation is put up for code review (or before, or after), the instrumentor fills out a form and asks a volunteer Data Steward to review it. If the instrumentation (as explained in the filled-in form) is obviously in line with our privacy commitments to our users, the Data Steward gives it the go-ahead to ship.

(If it isn’t _obviously_ okay then we kick it up to our Trust Team to make the decision. They sit next to Legal, in case you need to find them.)

The Data Review Process and its forms are very generic. They’re designed to work for any instrumentation (tab count, bytes transferred, theme colour) being added to any project (Firefox Desktop, mozilla.org, Focus) and being collected by any data collection system (Firefox Telemetry, Crash Reporter, Glean). This is great for the process as it means we can use it and rely on it anywhere.

It isn’t so great for users _of_ the process. If you only ever write Data Reviews for one system, you’ll find yourself answering the same questions with the same answers every time.

And Glean makes this worse (better?) by including in its metrics definitions almost every piece of information you need in order to answer the review. So now you get to write the answers first in YAML and then in English during Data Review.

But no more! Introducing glean_parser data-review and mach data-review: command-line tools that will generate for you a Data Review Request skeleton with all the easy parts filled in. It works like this:

- Write your instrumentation, providing full information in the metrics definition.

- Call python -m glean_parser data-review (or mach data-review if you’re adding the instrumentation to Firefox Desktop), passing the bug number.

- glean_parser will parse the metrics definitions files, pull out only the definitions that were added or changed in that bug, and then output a partially-filled-out form for you.
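The filtering step in the list above can be sketched in a few lines. This is a hypothetical illustration and not glean_parser’s actual code; glean_parser is a Python tool. The sketch rests on these assumptions:

- The `Metric` struct is a simplified stand-in for a metrics.yaml entry.
- `bugs` holds full Bugzilla URLs ending in `id=<number>`.
- The category’s dots become underscores in the output. This mimics the `fog_ipc.replay_failures` row in the sample skeleton in this post.

```rust
/// Hypothetical, simplified metric record; real definitions carry more fields.
struct Metric {
    category: &'static str,
    name: &'static str,
    description: &'static str,
    bugs: Vec<&'static str>,
    data_sensitivity: Vec<&'static str>,
}

/// Keeps only metrics whose `bugs` list points at `bug` (assumed URL shape:
/// "...show_bug.cgi?id=<bug>") and renders one table row per metric.
fn data_review_rows(metrics: &[Metric], bug: &str) -> Vec<String> {
    let suffix = format!("id={}", bug);
    metrics
        .iter()
        .filter(|m| m.bugs.iter().any(|url| url.ends_with(&suffix)))
        .map(|m| {
            format!(
                "{}.{} | {} | {} | {}",
                m.category.replace('.', "_"), // assumed naming, per the sample output
                m.name,
                m.description,
                m.data_sensitivity.join(", "),
                m.bugs.join(", ")
            )
        })
        .collect()
}

fn main() {
    let metrics = vec![Metric {
        category: "fog.ipc",
        name: "replay_failures",
        description: "The number of times the ipc buffer failed to be replayed in the parent process.",
        bugs: vec!["https://bugzilla.mozilla.org/show_bug.cgi?id=1664461"],
        data_sensitivity: vec!["technical"],
    }];
    for row in data_review_rows(&metrics, "1664461") {
        println!("{}", row);
    }
}
```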

Here’s an example. Say I’m working on bug 1664461 and add a new piece of instrumentation to Firefox Desktop:

fog.ipc:
  replay_failures:
    type: counter
    description: |
      The number of times the ipc buffer failed to be replayed in the
      parent process.
    bugs:
      - https://bugzilla.mozilla.org/show_bug.cgi?id=1664461
    data_reviews:
      - https://bugzilla.mozilla.org/show_bug.cgi?id=1664461
    data_sensitivity:
      - technical
    notification_emails:
      - chutten@mozilla.com
      - glean-team@mozilla.com
    expires: never

I’m sure to fill in the `bugs` field correctly (because that’s important on its own _and_ it’s what glean_parser data-review uses to find which data I added), and have categorized the data_sensitivity. I also included a helpful description. (The data_reviews field currently points at the bug I’ll attach the Data Review Request for. I’d better remember to come back before I land this code and update it to point at the specific comment…)

Then I can simply use mach data-review 1664461 and it spits out:

    !! Reminder: it is your responsibility to complete and check the correctness of
    !! this automatically-generated request skeleton before requesting Data
    !! Collection Review. See https://wiki.mozilla.org/Data_Collection for details.

    DATA REVIEW REQUEST

    1. What questions will you answer with this data?

    TODO: Fill this in.

    2. Why does Mozilla need to answer these questions? Are there benefits for users?
    Do we need this information to address product or business requirements?

    TODO: Fill this in.

    3. What alternative methods did you consider to answer these questions?
    Why were they not sufficient?

    TODO: Fill this in.

    4. Can current instrumentation answer these questions?

    TODO: Fill this in.

    5. List all proposed measurements and indicate the category of data collection for each
    measurement, using the Firefox data collection categories found on the Mozilla wiki.

    Measurement Name | Measurement Description | Data Collection Category | Tracking Bug
    ---------------- | ----------------------- | ------------------------ | ------------
    fog_ipc.replay_failures | The number of times the ipc buffer failed to be replayed in the parent process. | technical | https://bugzilla.mozilla.org/show_bug.cgi?id=1664461

    6. Please provide a link to the documentation for this data collection which
    describes the ultimate data set in a public, complete, and accurate way.

    This collection is Glean so is documented
    [in the Glean Dictionary](https://dictionary.telemetry.mozilla.org).

    7. How long will this data be collected?

    This collection will be collected permanently.
    **TODO: identify at least one individual here** will be responsible for the permanent collections.

    8. What populations will you measure?

    All channels, countries, and locales. No filters.

    9. If this data collection is default on, what is the opt-out mechanism for users?

    These collections are Glean. The opt-out can be found in the product's preferences.

    10. Please provide a general description of how you will analyze this data.

    TODO: Fill this in.

    11. Where do you intend to share the results of your analysis?

    TODO: Fill this in.

    12. Is there a third-party tool (i.e. not Telemetry) that you
    are proposing to use for this data collection?

    No.

As you can see, this Data Review Request skeleton comes partially filled out. Everything you previously had to mechanically fill out has been done for you, leaving you more time to focus on only the interesting questions like “Why do we need this?” and “How are you going to use it?”.
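The "mechanical" answers in that output, the question-5 table row and the question-7 duration, can be read straight off the metric definition. Below is a minimal sketch of that derivation using hypothetical helpers, not glean_parser's code. The dot-to-underscore renaming of the category is inferred from the sample output (`fog.ipc` listed as `fog_ipc`), and the wording for metrics that do expire is made up.

```python
# Sketch only (hypothetical helpers, not glean_parser's code): derive two of
# the "mechanical" answers from a single metric definition.
from typing import Any, Dict


def table_row(category: str, name: str, definition: Dict[str, Any]) -> str:
    """One row of the question-5 measurement table."""
    # The sample output lists category "fog.ipc" as "fog_ipc"; assumed here
    # to be a simple dot-to-underscore replacement.
    identifier = f"{category.replace('.', '_')}.{name}"
    # Fold the multi-line YAML description onto a single line.
    description = " ".join(definition["description"].split())
    sensitivity = ", ".join(definition["data_sensitivity"])
    bugs = ", ".join(str(bug) for bug in definition["bugs"])
    return f"{identifier} | {description} | {sensitivity} | {bugs}"


def duration_answer(definition: Dict[str, Any]) -> str:
    """Question 7: how long will this data be collected?"""
    if definition["expires"] == "never":
        return "This collection will be collected permanently."
    # Hypothetical wording for expiring metrics; not taken from the post.
    return f"This collection will expire: {definition['expires']}."


if __name__ == "__main__":
    replay_failures = {
        "description": "The number of times the ipc buffer failed to be replayed in the\nparent process.\n",
        "data_sensitivity": ["technical"],
        "bugs": ["https://bugzilla.mozilla.org/show_bug.cgi?id=1664461"],
        "expires": "never",
    }
    print(table_row("fog.ipc", "replay_failures", replay_failures))
    print(duration_answer(replay_failures))
```

Because every field comes from the YAML, an incomplete definition (for example, a missing `data_sensitivity`) would fail here with a `KeyError`. That behaviour is a reminder of why the post stresses filling in the metrics definition fully.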

Also, this saves you from having to remember the URL to the Data Review Request Form Template each time you need it. We’ve got you covered.

And since this is part of Glean, it is already available to every project you can see here. This isn’t just a Firefox Desktop thing.

Hope this saves you some time! If you can think of other time-saving improvements we could add to Glean once so that every Mozilla project can take advantage of them, please tell us on Matrix.

If you’re interested in how this is implemented, glean_parser’s part of this is over here, while the mach command part is here.

:chutten

(( This is a syndicated copy of the original post. ))

|

|

Chris H-C: This Week in Glean: Data Reviews are Important, Glean Parser makes them Easy |

(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean.) All “This Week in Glean” blog posts are listed in the TWiG index.

At Mozilla we put a lot of stock in Openness. Source? Open. Bug tracker? Open. Discussion Forums (Fora?)? Open (synchronous and asynchronous).

We also have an open process for determining if a new or expanded data collection in a Mozilla project is in line with our Privacy Principles and Policies: Data Review.

Basically, when a new piece of instrumentation is put up for code review (or before, or after), the instrumentor fills out a form and asks a volunteer Data Steward to review it. If the instrumentation (as explained in the filled-in form) is obviously in line with our privacy commitments to our users, the Data Steward gives it the go-ahead to ship.

(If it isn’t _obviously_ okay then we kick it up to our Trust Team to make the decision. They sit next to Legal, in case you need to find them.)

The Data Review Process and its forms are very generic. They’re designed to work for any instrumentation (tab count, bytes transferred, theme colour) being added to any project (Firefox Desktop, mozilla.org, Focus) and being collected by any data collection system (Firefox Telemetry, Crash Reporter, Glean). This is great for the process as it means we can use it and rely on it anywhere.

It isn’t so great for users _of_ the process. If you only ever write Data Reviews for one system, you’ll find yourself answering the same questions with the same answers every time.

And Glean makes this worse (better?) by including in its metrics definitions almost every piece of information you need in order to answer the review. So now you get to write the answers first in YAML and then in English during Data Review.

|

|

Cameron Kaiser: TenFourFox FPR32 SPR4 available |

http://tenfourfox.blogspot.com/2021/09/tenfourfox-fpr32-spr4-available.html

|

|

The Mozilla Blog: Doomscrolling, ads in texting, Theranos NOT Thanos, and more are the tweets on this week’s #TopShelf |

|

|

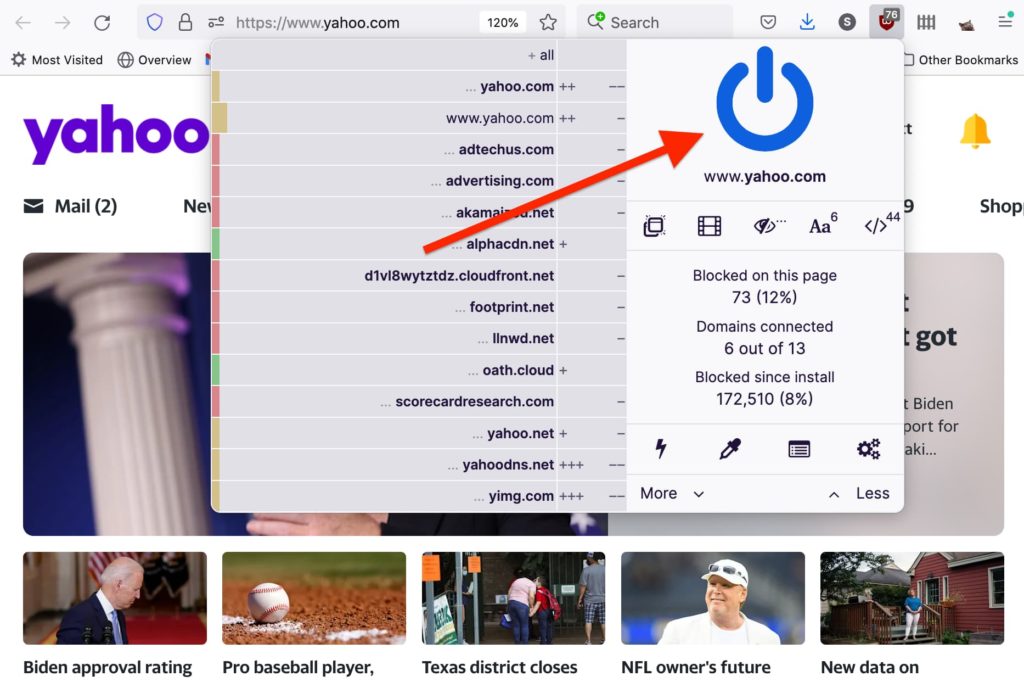

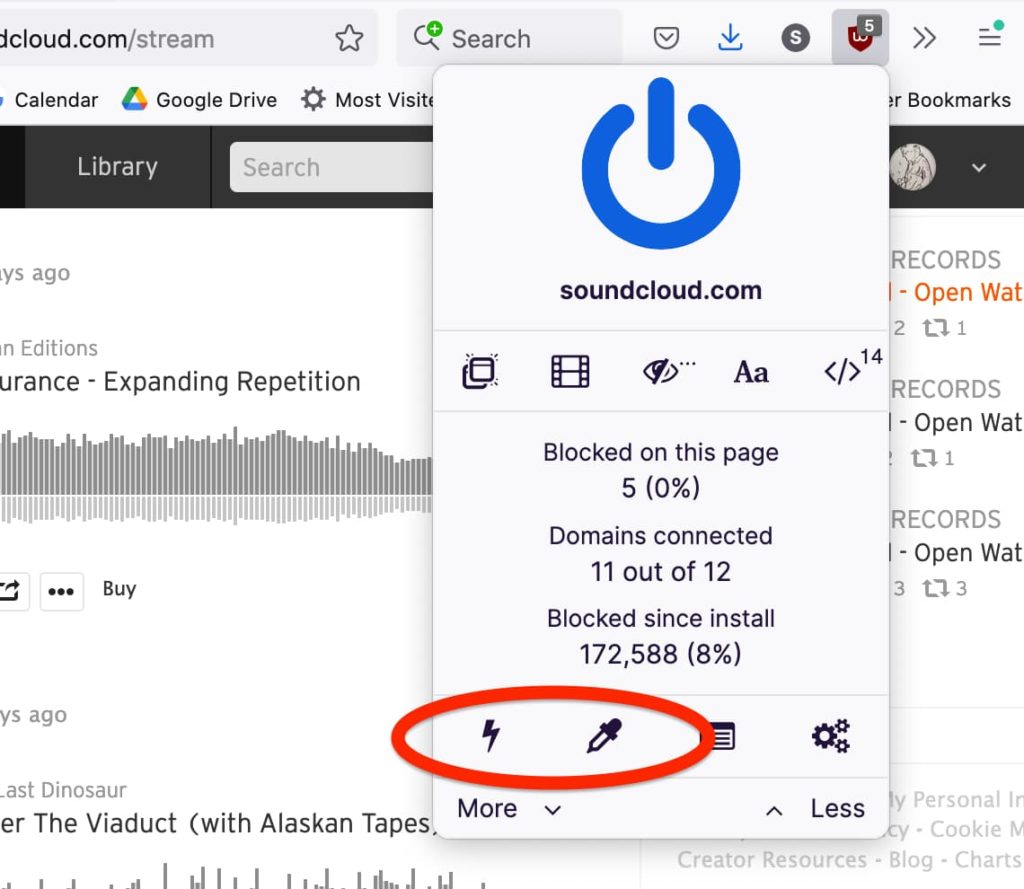

Firefox Add-on Reviews: uBlock Origin—everything you need to know about the ad blocker |

Rare is the browser extension that can satisfy both passive and power users. But that’s an essential part of uBlock Origin’s brilliance: it is an ad blocker you could recommend to your most tech-forward friend as easily as to someone who has just emerged from 20 years lost in the jungle.

If you install uBlock Origin and do nothing else, right out of the box it will block nearly all types of internet advertising, from big blinking banners to search ads, video pre-rolls and all the rest. However, if you want extremely granular content control, uBlock Origin can accommodate that via advanced settings.

We’ll try to split the difference here and walk through a few of the extension’s most intriguing features and options…

Does using uBlock Origin actually speed up my web experience?

Yes. Not only do web pages load faster because the extension blocks unwanted ads from loading, but uBlock Origin also uses a particularly lightweight approach to content filtering, so it has minimal impact on memory consumption. uBlock Origin is widely regarded as offering the biggest speed boost among the top ad blockers.

But don’t ad blockers also break pages?

Occasionally, yes. A page may break if certain content is blocked, and some websites will even detect the presence of an ad blocker and refuse access.

Fortunately, this doesn’t happen as frequently with uBlock Origin as it might with other ad blockers, and the extension is also extremely effective at bypassing anti-ad blockers (yes, an ongoing battle rages between ad tech and content blocking software). But if uBlock Origin does happen to break a page you want to access, it’s easy to turn off content blocking for specific sites you trust, or whose ads you actually want to see.

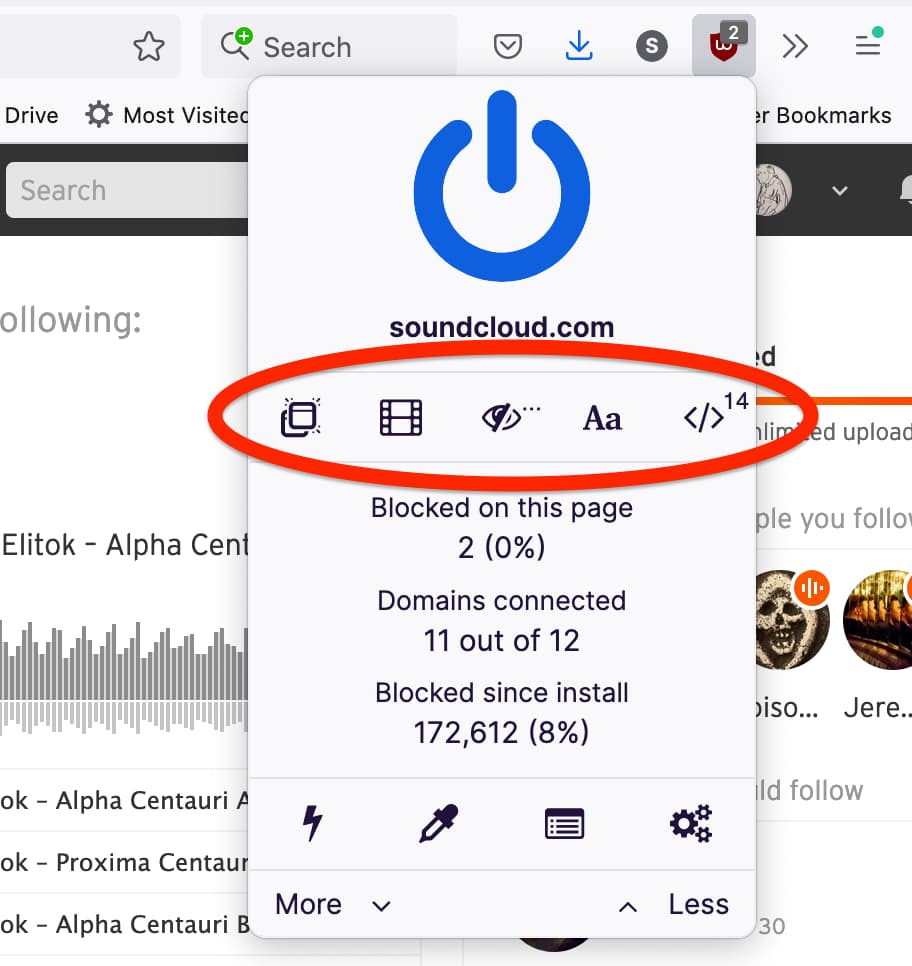

Show us a few tips & tricks

Let’s take a look at some high level settings and what you can do with them.

- Lightning bolt button enables Element Zapper, which lets you temporarily remove page elements by simply mousing over them and clicking. For example, this is convenient for removing embedded gifs or for hiding disturbing images you may encounter in some news articles.

- Eye dropper button enables Element Picker, which lets you permanently remove page elements. For example, if you find Facebook Stories a complete waste of time, just activate Element Picker, mouse over/click the Stories section of the page, select “Create” and presto—The End of Facebook Stories.

The five buttons on this row will only affect the page you’re on.

- Pop-up button blocks—you guessed it—pop-ups

- Film button blocks large media elements like embedded video, audio, or images

- Eye slash button disables cosmetic filtering, which is on by default and elegantly reformats your pages when ads are removed, but if you’d prefer to see pages laid out as they were intended (with just empty spaces instead of ads) then you have that option

- “Aa” button blocks remote fonts from loading on the page

- “>” button disables JavaScript on the page

Does uBlock Origin protect against malware?

In addition to using various advertising block lists, uBlock Origin also leverages potent lists of known malware sources, so it automatically blocks those for you as well. To be clear, there is no software that can offer 100% malware protection, but it doesn’t hurt to give yourself enhanced protections like this.

All of the content block lists are actively maintained by volunteers who believe in the mission of providing users with more choice and control over the content they see online. “uBlock Origin stands uncompromisingly for all users’ best interests, it’s not monetized, and its development and maintenance is driven only by volunteers who share the same view,” says uBlock Origin founder and developer Raymond Hill. “As long as I am the maintainer of [uBlock Origin], this will not change.”

We could go into a lot more detail about uBlock Origin—how you can create your own custom filter lists, how you can set it to block only media of a certain size, cloud storage sync, and so on—but power users will discover these delights on their own. Hopefully we’ve provided enough insight here to help you make an informed choice about exploring uBlock Origin, whether it be your first ad blocker or just the latest.

If you’d like to check out other amazing ad blocker options, please see What’s the best ad blocker for you?

https://addons.mozilla.org/blog/ublock-origin-everything-you-need-to-know-about-the-ad-blocker/

|

|

Mark Mayo: Celebrating 10k KryptoSign users with an on-chain lottery feature! |

TL;DR: we’re adding 3 new features to KryptoSign today!

- CSV downloads of a document’s signers

- Document Locking (prevent further signing)

- Document Lotteries (pick a winner from the list of signers)

Why? Well, you folks keep abusing this simple Ethereum-native document signing tool to run contests for airdrops and pre-sales, so we thought we’d make your lives a bit easier! :)

We launched KryptoSign in May this year as a tool for Kai, Bart, and me to do the lightest possible “contract signing” using our MetaMask wallets. Write down a simple scope of work with someone, and both parties sign with their wallets to signal that they agree. When the job is complete, their Ethereum address is right there to copy-n-paste into a wallet to send payment. Quick, easy, delightful. :)

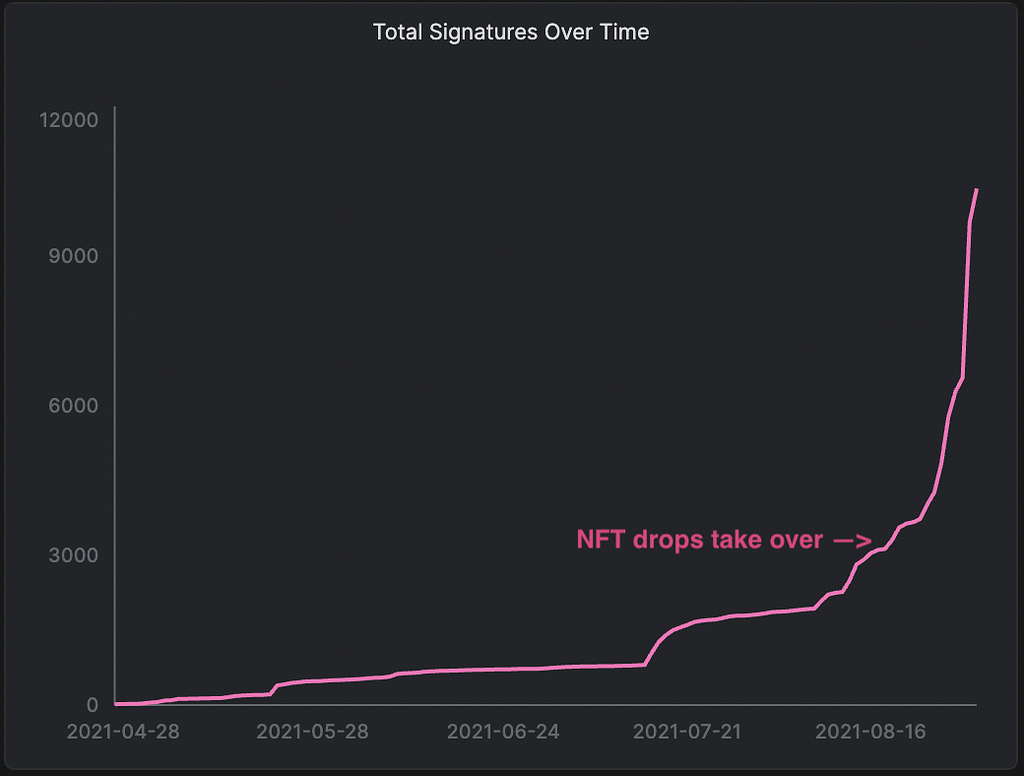

But as often happens, users started showing up and using it for other things. Like guestbooks. And then guestbooks became a way to sign up users for NFT drops as part of contests and pre-sales, and so on. The organizer has everyone sign a KS doc, maybe link their Discord or Twitter, and then picks a winner and sends an NFT/token/etc. to their address in the signature block. Cool.

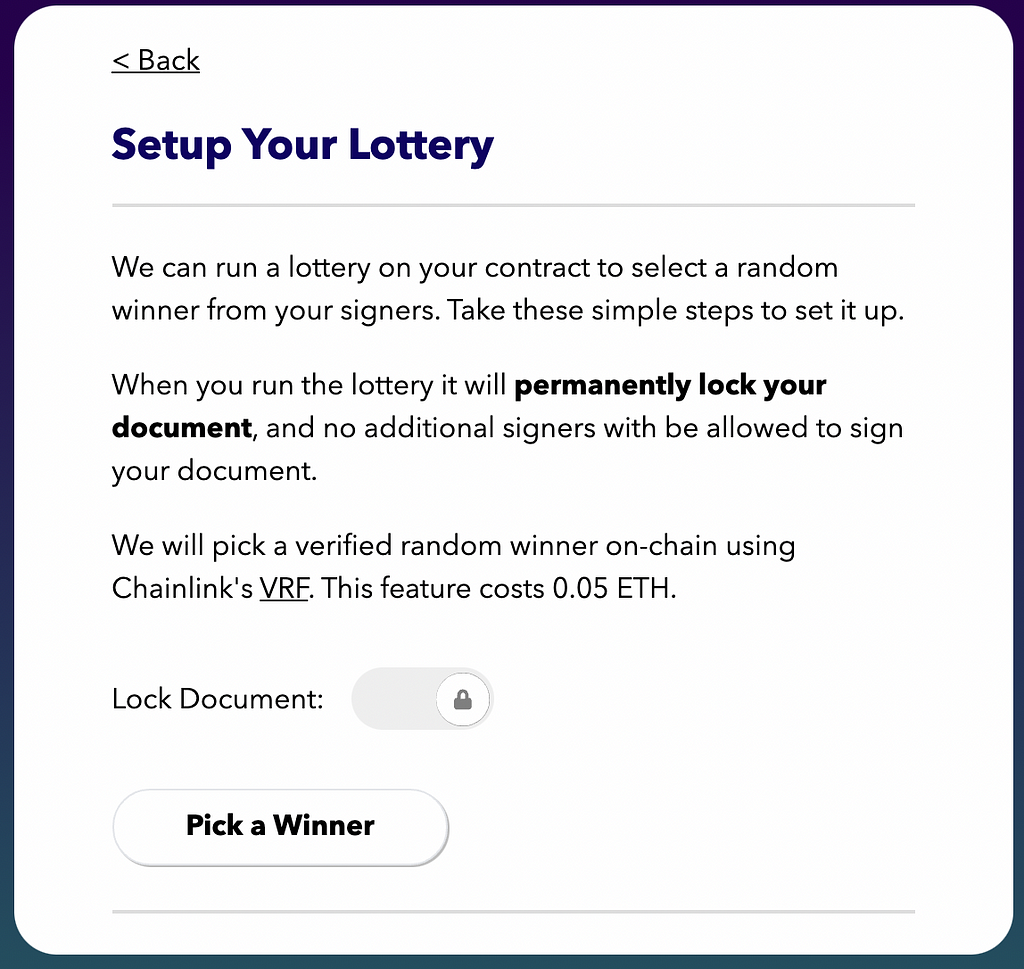

As these NFT drops started getting really hot the feature you all wanted was pretty obvious: have folks sign a KS document as part of a pre-sales window, and have KS pick the winner automatically. Because the stakes on things like hot NFT pre-sales are high, we decided to implement the random winner using Chainlink’s VRF — verifiable random functions — which means everyone involved in a KryptoSign lottery can independently confirm how the random winner was picked. Transparency is nice!

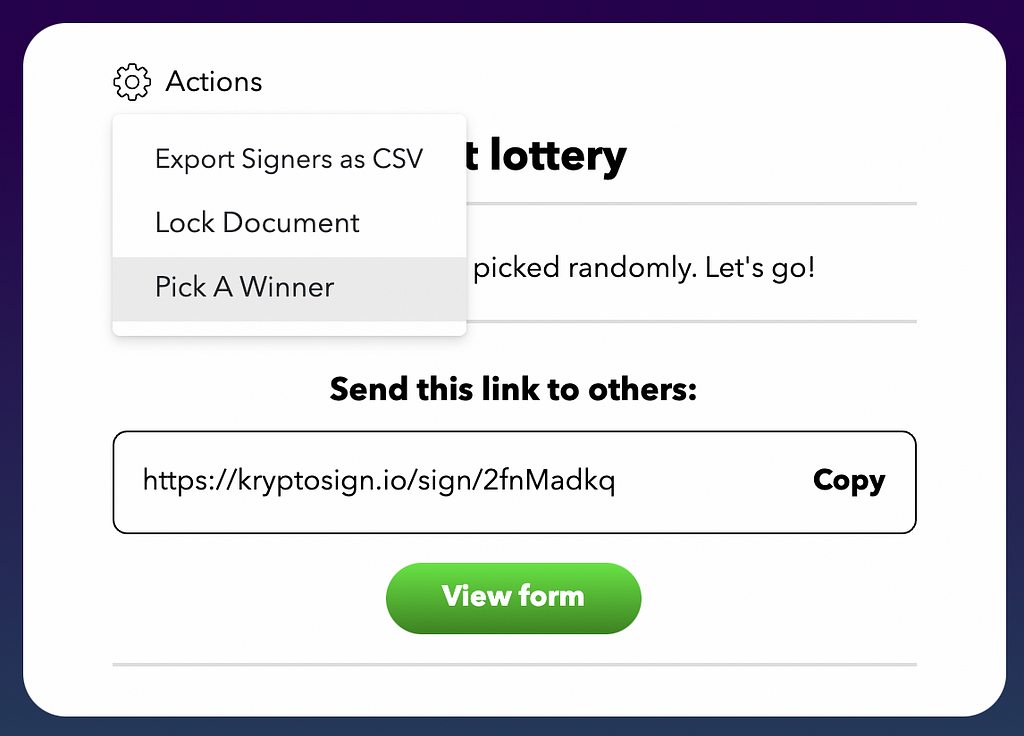

The UI for doing this is quite simple, as you’d hope and expect from KryptoSign. There’s an action icon on the document now:

When you’re ready to pick a winner, it’s pretty easy. Lock the document, and hit the button:

Of note, to pick a winner we’re collecting 0.05 ETH from you to cover the cost of the 2 LINK required to invoke the VRF on mainnet. You don’t need your own LINK and all the gas-incurring swapping that would imply. Phew! The user approves a single transaction with their wallet (including gas to interact with the smart contract) and they’re done.
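The post doesn't say how KryptoSign turns the VRF output into a winner. A common pattern with Chainlink VRF is to reduce the random number modulo the number of entrants. The sketch below assumes that pattern, with made-up addresses and a made-up random value. It shows why the document is locked before the draw and how anyone could recheck the result.

```python
# Sketch only: the post does not say how KryptoSign maps the VRF output to a
# winner. This assumes the common "random number modulo entrant count"
# pattern; the addresses and random value below are made up.
from typing import Sequence


def pick_winner(signers: Sequence[str], randomness: int) -> str:
    """Map one random number to one signer of a locked document."""
    if not signers:
        raise ValueError("no signers to draw from")
    if randomness < 0:
        raise ValueError("VRF output is an unsigned integer")
    return signers[randomness % len(signers)]


def verify_draw(signers: Sequence[str], randomness: int, claimed_winner: str) -> bool:
    """Anyone with the locked signer list and the published random value can re-run the draw."""
    return pick_winner(signers, randomness) == claimed_winner


if __name__ == "__main__":
    locked_signers = ["0xAAA1", "0xBBB2", "0xCCC3"]  # hypothetical, in signing order
    vrf_output = 7  # hypothetical; a real VRF output is a 256-bit integer
    winner = pick_winner(locked_signers, vrf_output)
    print(winner)  # 7 % 3 == 1, so this prints 0xBBB2
    print(verify_draw(locked_signers, vrf_output, winner))  # True
```

Locking first matters because the signer list, including its order, must be fixed before the random value exists. Otherwise someone could add signatures after seeing it. With a 256-bit random value, the bias introduced by `%` is on the order of n/2^256 for n signers, which is negligible.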

Our initial users really wanted the on-chain trust of a VRF, and are willing to pay for it so their communities can trust the draw, but for other use cases you have in mind, maybe it’s overkill? Let us know! We’ll continue to build upon KryptoSign as long as people find useful things to do with it.

Finally, big props to our team who worked through some rough patches with calling the Chainlink VRF contract. Blockchain is weird, yo! This release saw engineering contributions from Neo Cho, Ryan Ouyang, and Josh Peters. Thanks!

— Mark

Celebrating 10k KryptoSign users with an on-chain lottery feature! was originally published in Block::Block on Medium, where people are continuing the conversation by highlighting and responding to this story.

|

|

The Mozilla Blog: Mozilla VPN Completes Independent Security Audit by Cure53 |

Today, Mozilla published an independent security audit of Mozilla VPN, which encrypts your connection and provides device-level protection of your information when you are on the Web. The audit was conducted by Cure53, an unbiased cybersecurity firm based in Berlin with more than 15 years of experience in software testing and code auditing. Mozilla periodically works with third-party organizations to complement our internal security programs and help improve the overall security of our products. The independent audit discovered one high-severity and two medium-severity issues. We address these in this blog post, and we have published the security audit report.

Since our launch last year, Mozilla VPN, our fast and easy-to-use Virtual Private Network service, has expanded to seven more countries (Austria, Belgium, France, Germany, Italy, Spain and Switzerland), bringing the total to 13 countries where Mozilla VPN is available. We also expanded our VPN service offerings, and it’s now available on Windows, Mac, Linux, Android and iOS. Lastly, our list of supported languages continues to grow; to date we support 28 languages.

Mozilla is a mission-driven company with a 20-year track record of fighting for online privacy and a healthier internet, and we are committed to innovating and bringing new features to Mozilla VPN based on feedback from our community. This year, the team has been working on additional security and customization features that will soon be available to our users.

We know that it’s more important than ever for you to feel safe, and for you to know that what you do online is your own business. Check out the Mozilla VPN and subscribe today from our website.

For more on Mozilla VPN:

Celebrating Mozilla VPN: How we’re keeping your data safe for you

Latest Mozilla VPN features keep your data safe

Mozilla Puts Its Trusted Stamp on VPN

The post Mozilla VPN Completes Independent Security Audit by Cure53 appeared first on The Mozilla Blog.

https://blog.mozilla.org/en/mozilla/news/mozilla-vpn-completes-independent-security-audit-by-cure53/

|

|

Mozilla Security Blog: Mozilla VPN Security Audit |

To provide transparency into our ongoing efforts to protect your privacy and security on the Internet, we are releasing a security audit of Mozilla VPN that Cure53 conducted earlier this year.

The scope of this security audit included the following products:

- Mozilla VPN Qt5 App for macOS

- Mozilla VPN Qt5 App for Linux

- Mozilla VPN Qt5 App for Windows

- Mozilla VPN Qt5 App for iOS

- Mozilla VPN Qt5 App for Android

Here’s a summary of the items discovered within this security audit that were medium or higher severity:

- FVP-02-014: Cross-site WebSocket hijacking (High)

- Mozilla VPN client, when put in debug mode, exposes a WebSocket interface to localhost to trigger events and retrieve logs (most of the functional tests are written on top of this interface). As the WebSocket interface was used only in pre-release test builds, no customers were affected. Cure53 has verified that this item has been properly fixed and the security risk no longer exists.

- FVP-02-001: VPN leak via captive portal detection (Medium)

- Mozilla VPN client allows sending unencrypted HTTP requests outside of the tunnel to specific IP addresses, if the captive portal detection mechanism has been activated through settings. However, the captive portal detection algorithm requires a plain-text HTTP trusted endpoint to operate. Firefox, Chrome, the network manager of macOS and many applications have a similar solution enabled by default. Mozilla VPN uses the Firefox endpoint. Ultimately, we have accepted this finding as the user benefits of captive portal detection outweigh the security risk.

- FVP-02-016: Auth code could be leaked by injecting port (Medium)

- When a user wants to log into Mozilla VPN, the VPN client will make a request to https://vpn.mozilla.org/api/v2/vpn/login/windows to obtain an authorization URL. The endpoint takes a port parameter that will be reflected in an HTML element on the page after the user signs in. It was found that the port parameter could be of an arbitrary value. Further, it was possible to inject the @ sign, so that the request would go to an arbitrary host instead of localhost (the site’s strict Content Security Policy prevented such requests from being sent). We fixed this issue by improving the port number parsing in the REST API component. The fix includes several tests to prevent similar errors in the future.
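The port-injection finding (FVP-02-016) comes down to URL parsing. If an unvalidated `port` string is spliced into `http://localhost:<port>/`, everything before an injected `@` becomes userinfo, and the text after it becomes the host. Below is a minimal Python sketch of the problem and of strict port parsing. It is not Mozilla's actual fix, and the callback path is hypothetical.

```python
# Sketch in Python, not Mozilla's actual fix: shows why an unvalidated port
# string is dangerous inside a localhost URL, and what strict parsing looks
# like. The callback path "/" is hypothetical.
from urllib.parse import urlsplit


def parse_port(raw: str) -> int:
    """Accept only an ASCII decimal TCP port in the range 1..65535."""
    # isascii() rules out characters like "²" that str.isdigit() also accepts.
    if not (raw.isascii() and raw.isdigit()):
        raise ValueError(f"invalid port: {raw!r}")
    port = int(raw)
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port


def callback_url(raw_port: str) -> str:
    """Build the local redirect target only after the port has been validated."""
    return f"http://localhost:{parse_port(raw_port)}/"


if __name__ == "__main__":
    injected = "1234@attacker.example"
    # Naive concatenation: "localhost:1234" becomes userinfo, so the request
    # would go to attacker.example instead of localhost.
    naive = "http://localhost:" + injected + "/"
    print(urlsplit(naive).hostname)  # attacker.example
    print(callback_url("1234"))      # http://localhost:1234/
    try:
        callback_url(injected)
    except ValueError as err:
        print(err)                   # invalid port: '1234@attacker.example'
```

Rejecting anything that is not a plain number in range is simpler and safer than trying to strip dangerous characters such as `@`, `/` or `#` one by one.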

If you’d like to read the detailed report from Cure53, including all low and informational items, you can find it here.

More information on the issues identified in this report can be found in our MFSA2021-31 Security Advisory published on July 14th, 2021.
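The captive-portal finding (FVP-02-001) follows from how such detection generally works. The client requests a known page over plain HTTP and compares the answer with the expected content. A portal that intercepts the request substitutes its own login page or a redirect. Over HTTPS, the same interception would surface as a certificate error instead. Presumably the probe also has to bypass the tunnel, because the portal blocks traffic, including the VPN connection, until the user logs in. The sketch below shows only the decision logic. `EXPECTED_BODY` is a placeholder assumption, not the body served by the real Firefox endpoint, and the network fetch is left out.

```python
# Sketch only: decision logic behind a plain-HTTP captive-portal probe.
# EXPECTED_BODY is a placeholder assumption; fetching the page is omitted so
# the logic stays self-contained.
EXPECTED_BODY = "success\n"


def classify_probe(status: int, body: str) -> str:
    """Classify the response to an HTTP request for a known, fixed page."""
    if status == 200 and body == EXPECTED_BODY:
        # The request reached the trusted endpoint untouched: open internet.
        return "online"
    if status == 200 or 300 <= status < 400:
        # Something on the path replaced the page or redirected the request,
        # which is what a captive portal's login page does.
        return "captive-portal"
    # Server errors and other statuses don't tell us either way.
    return "unknown"


if __name__ == "__main__":
    print(classify_probe(200, "success\n"))            # online
    print(classify_probe(200, "<html>Log in</html>"))  # captive-portal
    print(classify_probe(302, ""))                     # captive-portal
    print(classify_probe(503, ""))                     # unknown
```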

The post Mozilla VPN Security Audit appeared first on Mozilla Security Blog.

https://blog.mozilla.org/security/2021/08/31/mozilla-vpn-security-audit/

|

|

Mozilla Open Policy & Advocacy Blog: Mozilla Mornings on the Digital Markets Act: Key questions for Parliament |

On 13 September, Mozilla will host the next installment of Mozilla Mornings – our regular event series that brings together policy experts, policymakers and practitioners for insight and discussion on the latest EU digital policy developments.

For this installment, we’re checking in on the Digital Markets Act. Our panel of experts will discuss the key outstanding questions as the debate in Parliament reaches its fever pitch.

Speakers

Andreas Schwab MEP

IMCO Rapporteur on the Digital Markets Act

Group of the European People’s Party

Mika Shah

Co-Acting General Counsel

Mozilla

Vanessa Turner

Senior Advisor

BEUC

With opening remarks by Raegan MacDonald, Director of Global Public Policy, Mozilla

Moderated by Jennifer Baker

EU technology journalist

Logistical details

Monday 13 September, 17:00 – 18:00 CEST

Zoom Webinar

Register *here*

Webinar login details to be shared on day of event

The post Mozilla Mornings on the Digital Markets Act: Key questions for Parliament appeared first on Open Policy & Advocacy.

|

|