Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, see the contact information page.

Mark Mayo: How we airdropped 4700 MeebitsDAO “Red Ticket” NFTs |

[I’m Mark from block::block; we help build DAO backends and various NFT bits and bobs]

So what happened was that the sixth-rarest Meebit was fractionalized into 1M pieces, and 30,000 (3%) of those fragments were graciously donated to MeebitsDAO by Divergence.VC. Kai proposed that a fun way to redistribute those fractions would be a giveaway contest: earn tickets for a raffle, have a shot at a chunk of a famous Meebit. Cool! There are three different kinds of tickets, but for the first lottery Kai wanted to airdrop a raffle ticket in the form of an NFT (aka the “Red Ticket”) to every current Meebit holder so they could have a chance to win. Hype up the MeebitsDAO and have some fun!

The first question was “cool idea, but how do we not lose our shirts on gas fees minting 4700 NFTs!?”. There’s a bunch of low-gas alternative chains out there now, which, fortunately, we’d played around with quite a bit when we started doing community “Achievement” NFTs (see some here on OpenSea) for MeebitsDAO. Polygon, for the moment at least, had some really compelling advantages for this kind of “badge” NFT where there’s no/limited monetary value in the token:

- Polygon is 100% Ethereum-compatible: same Solidity smart contracts, same MetaMask, even the same explorer (the Etherscan team built Polygonscan). Easy!

- For end users Polygon addresses are the same as their Ethereum addresses. 1:1. Nice!

- OpenSea natively/automatically displays NFTs from a user’s matching Polygon address in their collection! This was huge, because we knew 99% of the wallets we wanted to drop a ticket on wouldn’t otherwise notice activity on a side-chain.

- Polygon assets can be moved back to Ethereum mainnet by folks if they so desire, which has a nice feeling.

Getting up and running on Polygon is covered elsewhere, and is pretty simple:

- Add a “custom RPC” network to MetaMask.

- Get some fake test MATIC (the native token on the Polygon chain) on the “Mumbai” testnet from a faucet and play around.

- Get some “real” MATIC on mainnet. Fees are super low on Polygon, so you don’t need much for minting; 5 MATIC ($5!) is plenty to mint thousands of NFTs. I swapped ETH for MATIC on 1inch and then bridged that MATIC to Polygon. There are many other ways of doing it.
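A transaction's fee is the gas it consumes multiplied by the gas price, so the cost of a drop grows linearly with the number of mints. That makes the fee claim above easy to sanity-check. The sketch below does the arithmetic in wei using BigInt. The figures of 150,000 gas per `safeMint()` call and a 5 gwei gas price are assumptions chosen for illustration, not measurements from this drop. Plug in current values before funding a minting wallet.

```javascript
// Fee for one transaction = gas used × gas price.
// MATIC, like ETH, has 18 decimals: 1 MATIC = 10^18 wei, and 1 gwei = 10^9 wei.
const WEI_PER_GWEI = 10n ** 9n;
const WEI_PER_MATIC = 10n ** 18n;

// Total fee, in wei, for `count` identical mint transactions.
// gasPerMint and gasPriceGwei must be integers (BigInt() rejects fractions).
function estimateMintCostWei(gasPerMint, gasPriceGwei, count) {
  return BigInt(gasPerMint) * BigInt(gasPriceGwei) * WEI_PER_GWEI * BigInt(count);
}

// Format a wei amount as a decimal MATIC string without floating-point rounding.
function formatMatic(wei) {
  const whole = wei / WEI_PER_MATIC;
  const frac = (wei % WEI_PER_MATIC).toString().padStart(18, "0").replace(/0+$/, "");
  return frac ? `${whole}.${frac}` : `${whole}`;
}

// ASSUMED inputs: ~150,000 gas per safeMint() at 5 gwei, for 4,700 mints.
const totalWei = estimateMintCostWei(150000, 5, 4700);
console.log(`${formatMatic(totalWei)} MATIC`); // 3.525 MATIC
```

Under these assumptions the drop costs a few MATIC. Doubling the gas price doubles the total, which is why it is worth checking the price on a busy day.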

For MeebitsDAO, we create our own ERC721 smart contracts and mint from them instead of using a minting service. It gives us more control, and over time we’re building up a repo of code and scripts that keeps getting better and is purpose-built for the needs of the MeebitsDAO community. This may sound like a lot of work compared with using a site like Cargo, but if you have some Node.js experience, tools like Hardhat make deploying contracts and minting from them approachable.

If you’re new to Ethereum and NFTs, the first thing to know is that you first deploy your smart contract to the blockchain, at which point it gets an address, and then you call the contract at that address to mint NFT tokens. As you mint each token, you supply a URI that points to the metadata for that particular token. Almost everything we think of as “the NFT” (the description, image, etc.) actually lives in the metadata file off-chain. We generate a JSON file for each ticket, upload it to IPFS via a Pinata gateway, and then pin the file with the Pinata SDK. (Pinning is the mechanism by which you entice IPFS nodes not to discard your files.. ah, IPFS..)
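To give a sense of what one of those per-ticket JSON files contains, here is a sketch that builds metadata in the shape OpenSea reads: `name`, `description`, `image`, and optional `attributes`. The `buildTicketMetadata` helper, the field values, and the `PLACEHOLDER_CID` image reference are all hypothetical. The real Red Ticket files may differ, and the Pinata upload/pin step is left out.

```javascript
// Hypothetical helper: build the off-chain metadata object for ticket number `n`.
// `imageCid` stands in for the IPFS CID of the ticket artwork.
function buildTicketMetadata(n, imageCid) {
  if (!Number.isInteger(n) || n < 1) {
    throw new RangeError("ticket number must be a positive integer");
  }
  return {
    name: `MeebitsDAO Red Ticket #${n}`,
    description: "Raffle ticket for the fractionalized Meebit giveaway.",
    image: `ipfs://${imageCid}`,
    attributes: [
      { trait_type: "Ticket color", value: "Red" },
      { trait_type: "Ticket number", value: n },
    ],
  };
}

// One JSON document per ticket; these strings are what would be uploaded to IPFS.
const files = [1, 2, 3].map((n) => ({
  filename: `${n}.json`,
  body: JSON.stringify(buildTicketMetadata(n, "PLACEHOLDER_CID"), null, 2),
}));

console.log(files[0].filename, JSON.parse(files[0].body).name);
```

The token URI passed at mint time would then be the IPFS address of each uploaded file, one per token.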

Like many projects, we lean on the heavily tested de facto ERC721 contracts published by the OpenZeppelin team:

https://medium.com/media/8151e4e4313636843195e0442a8246f3/href

The contract may look a little daunting, but all it really says is:

- Be an ERC721 that has metadata in an external URI, and be burnable by an approved address on an access list.

- The Counters stuff lets the token be capped — i.e. a fixed supply, supplied at contract creation.

- safeMint/burn/tokenURI/supportsInterface are just boilerplate to support the above.

- totalSupply() lets block explorers like etherscan/polygonscan/etc. know what the cap on the token is so they can display it in their UIs.
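Here is a toy JavaScript model of the capped-supply pattern described in the list, written without Solidity. It has a cap fixed at construction and token IDs handed out by an incrementing counter, and it refuses to mint once the cap is hit. It is not the MeebitsDAO contract: the class and method names are illustrative, and starting IDs at 0 is an assumption. Burning and the access list are left out.

```javascript
// Toy model of the pattern above; NOT the Solidity contract.
class CappedTicketModel {
  #cap;                  // fixed supply chosen at "deployment"
  #nextTokenId = 0;      // stand-in for the Counters.Counter
  #owners = new Map();   // tokenId -> owner address

  constructor(cap) {
    if (!Number.isInteger(cap) || cap <= 0) {
      throw new RangeError("cap must be a positive integer");
    }
    this.#cap = cap;
  }

  // Refuse to mint once the counter has reached the cap.
  safeMint(to) {
    if (this.#nextTokenId >= this.#cap) {
      throw new Error("cap reached");
    }
    const tokenId = this.#nextTokenId;
    this.#nextTokenId += 1;
    this.#owners.set(tokenId, to);
    return tokenId;
  }

  ownerOf(tokenId) {
    if (!this.#owners.has(tokenId)) throw new Error("nonexistent token");
    return this.#owners.get(tokenId);
  }

  // How many tokens have been minted so far.
  minted() {
    return this.#nextTokenId;
  }

  // Mirrors the description above: totalSupply() reports the cap.
  totalSupply() {
    return this.#cap;
  }
}
```

For example, `new CappedTicketModel(2)` accepts two `safeMint()` calls and throws on the third.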

Once the contract is published, we have a helper script in the Hardhat repo that simply reads a CSV file of addresses we want to airdrop the ticket to and calls safeMint() on the contract. Here’s the core loop:

https://medium.com/media/43a212e771f50e7a23f29033ee8125c7/href

Because blockchain calls don’t always finish quickly, or at all, we use a try{} block to wait for each transaction to succeed before moving on to the next ticket, logging success or failure. When we minted the 4,689 NFTs, it happened to be on a morning when Polygon was quite busy, so it took a few hours to complete. Our logs showed 2 mints that failed, so we just re-ran those to complete the drop.
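This is a minimal sketch of the loop described above, assuming one address per CSV line. It mints sequentially, waits for each mint before starting the next, and collects failures so they can be re-run. `mintOne` is a hypothetical callback standing in for the real contract call, and the actual script in the repo may be structured differently.

```javascript
// Keep the first CSV column of each line if it looks like a 20-byte hex address.
// Header rows, blank lines and malformed entries are skipped (no EIP-55 checksum check).
function parseAddressCsv(text) {
  return text
    .split(/\r?\n/)
    .map((line) => line.split(",")[0].trim())
    .filter((field) => /^0x[0-9a-fA-F]{40}$/.test(field));
}

// Mint to each address in turn, waiting for each one before starting the next.
// `mintOne(address)` is a hypothetical async callback; in a Hardhat/ethers script it
// might send `contract.safeMint(address, uri)` and then `await tx.wait()`.
// Returns the addresses whose mint threw, so they can be re-run.
async function airdrop(addresses, mintOne, log = console.log) {
  const failed = [];
  for (const address of addresses) {
    try {
      await mintOne(address);
      log(`ok   ${address}`);
    } catch (err) {
      failed.push(address);
      log(`FAIL ${address}: ${err && err.message}`);
    }
  }
  return failed;
}

// Demo: a fake mint that fails once for one address, then succeeds on the retry pass.
async function demo() {
  const a = "0x" + "a".repeat(40);
  const b = "0x" + "b".repeat(40);
  const addresses = parseAddressCsv(`address\n${a}\n${b}\n`);
  let failNextB = true;
  const fakeMint = async (addr) => {
    if (addr === b && failNextB) {
      failNextB = false;
      throw new Error("transaction timed out");
    }
  };
  const failed = await airdrop(addresses, fakeMint);
  const stillFailed = await airdrop(failed, fakeMint);
  console.log(`failed first pass: ${failed.length}, after retry: ${stillFailed.length}`);
}

demo();
```

Minting strictly one at a time is slower than firing transactions in parallel. However, it keeps nonces simple and makes the failure log map cleanly onto a re-run list.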

You can check out the full repo on GitHub, but hopefully this gives you a quick view at the two “bespoke” pieces: the contract and the minting logic.

Our goal is to share as much as we can about what goes on behind the scenes at block::block as we help the MeebitsDAO team launch fun ideas! Let us know if it’s helpful, what’s missing, what’s cool, and what else we could write about that would help shine a light on “DAO Ops”. :)

How we airdropped 4700 MeebitsDAO “Red Ticket” NFTs was originally published in Block::Block on Medium, where people are continuing the conversation by highlighting and responding to this story.

|

|

Hacks.Mozilla.Org: Spring Cleaning MDN: Part 1 |

As we’re all aware by now, we made some big platform changes at the end of 2020. Whilst the big move has happened, it’s given us a great opportunity to clear out the cupboards and closets.

Illustration by Daryl Alexsy

Most notably MDN now manages its content from a repository on GitHub. Prior to this, the content was stored in a database and edited by logging in to the site and modifying content via an in-page (WYSIWYG) editor, aka ‘The Wiki’. Since the big move, we have determined that MDN accounts are no longer functional for our users. If you want to edit or contribute content, you need to sign in to GitHub, not MDN.

Due to this, we’ll be removing the account functionality and removing all of the account data from our database. This is consistent with our Lean Data Practices principles and our commitment to user privacy. We also have the perfect opportunity to be doing this now, as we’re moving our database from MySQL to PostgreSQL this week.

Accounts will be disabled on MDN on Thursday, 22nd July.

Don’t worry though – you can still contribute to MDN! That hasn’t changed. All the information on how to help is here in this guide.

The post Spring Cleaning MDN: Part 1 appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2021/07/spring-cleaning-mdn-part-1/

|

|

Mozilla Security Blog: Stopping FTP support in Firefox 90 |

The File Transfer Protocol (FTP) has long been a convenient file exchange mechanism between computers on a network. While this standard protocol has been supported in all major browsers almost since its inception, it’s by now one of the oldest protocols still in use and suffers from a number of serious security issues.

The biggest security risk is that FTP transfers data in cleartext, allowing attackers to steal, spoof and even modify the data transmitted. To date, many malware distribution campaigns launch their attacks by compromising FTP servers and downloading malware onto an end user’s device using the FTP protocol.

Aligning with our intent to deprecate non-secure HTTP and increase the percentage of secure connections, we, as well as other major web browsers, decided to discontinue support of the FTP protocol.

Removing FTP brings us closer to a fully secure web, which is on a path to becoming HTTPS-only. Moreover, modern automated upgrade mechanisms such as HSTS, or Firefox’s HTTPS-Only Mode, which automatically upgrade any connection to become secure and encrypted, do not apply to FTP.

The FTP protocol itself has been disabled by default since version 88 and now the time has come to end an era and discontinue the support for this outdated and insecure protocol — Firefox 90 will no longer support the FTP protocol.

If you are a Firefox user, you don’t have to do anything to benefit from this security advancement. As soon as your Firefox auto-updates to version 90, any attempt to launch an attack relying on the insecure FTP protocol will be rendered useless, because Firefox does not support FTP anymore. If you aren’t a Firefox user yet, you can download the latest version here to start benefiting from all the ways that Firefox works to protect you when browsing the web.

The post Stopping FTP support in Firefox 90 appeared first on Mozilla Security Blog.

https://blog.mozilla.org/security/2021/07/20/stopping-ftp-support-in-firefox-90/

|

|

Spidermonkey Development Blog: SpiderMonkey Newsletter (Firefox 90-91) |

|

|

The Talospace Project: Firefox 90 on POWER (and a JIT progress report) |

Unfortunately, a promising OpenPOWER-specific update for Fx90 bombed. Ordinarily I would have noticed this with my periodic smoke-test builds, but I've been trying to continue work on the JavaScript JIT in my not-so-copious spare time (more on that in a moment), so I didn't notice this until I built Fx90 and no TLS connection would work (they all abort with SSL_ERROR_BAD_SERVER). I discussed this with Dan Horák, and the official Fedora build of Firefox seemed to work just fine, including when I did a local fedpkg build. After a few test builds over the last several days, I determined the difference was that the Fedora Firefox package is built with --with-system-nss to use the NSS included with Fedora, so it wasn't using whatever was included with Firefox.

Going to the NSS tree I found bug 1566124, an implementation of AES-GCM acceleration for Power ISA. (Interestingly, I tried to write an implementation of it last year for TenFourFox FPR22 but abandoned it since it would be riskier and not much faster with the more limited facilities on 32-bit PowerPC.) This was, to be blunt, poorly tested and Fedora's NSS maintainer indicated he would disable it in the shipping library. Thus, if you use Fedora's included NSS, it works, and if you use the included version in the Firefox tree (based on NSS 3.66), it won't. The fixes are in NSS 3.67, which is part of Firefox 91; they never landed on Fx90.

The two fixes are small (to security/nss/lib/freebl/ppc-gcm-wrap.c and security/nss/lib/freebl/ppc-gcm.s), so if you're building from source anyway the simplest and highest-performance option is just to include them. (And now that it's working, I do have to tip my hat to the author: the implementation is about 20 times faster.) Alternatively, Fedora 34 builders can still just add --with-system-nss to their .mozconfig as long as you have nspr-devel installed, or a third workaround is to set NSS_DISABLE_PPC_GHASH=1 before starting Firefox, which disables the faulty code at runtime. In Firefox 91 this whole issue should be fixed. I'm glad the patch is done and working, but it never should have been committed in its original state without passing the test suite.

Another issue we have a better workaround for is bug 1713968, which causes errors building JavaScript with gcc. The reason that Fedora wasn't having any problem doing so is its rather voluminous generated .mozconfig that, amongst other things, uses -fpermissive. This is a better workaround than minor hacks to the source, so that is now in the .mozconfigs I'm using. I also did a minor tweak to the PGO-LTO patch so that it applies cleanly. With that, here are my current configurations:

Debug

```
export CC=/usr/bin/gcc
export CXX=/usr/bin/g++
mk_add_options MOZ_MAKE_FLAGS="-j24" # as you like
ac_add_options --enable-application=browser
ac_add_options --enable-optimize="-Og -mcpu=power9 -fpermissive"
ac_add_options --enable-debug
ac_add_options --enable-linker=bfd
export GN=/home/censored/bin/gn # if you have it
```

PGO-LTO Optimized

```
export CC=/usr/bin/gcc
export CXX=/usr/bin/g++
mk_add_options MOZ_MAKE_FLAGS="-j24" # as you like
ac_add_options --enable-application=browser
ac_add_options --enable-optimize="-O3 -mcpu=power9 -fpermissive"
ac_add_options --enable-release
ac_add_options --enable-linker=bfd
ac_add_options --enable-lto=full
ac_add_options MOZ_PGO=1
export GN=/home/censored/bin/gn # if you have it
export RUSTC_OPT_LEVEL=2
```

So, JavaScript. Since our last progress report, our current implementation of the Firefox JavaScript JIT (the minimum viable product of which will be Baseline Interpreter + Wasm) is now able to run scripts of significant complexity. However, it's still mostly a one-man show, and I'm currently struggling with an issue fixing certain optimized calls to self-hosted scripts (notably anything that calls RegExp.prototype.* functions: it goes into an infinite loop and hits the recursion limit). There hasn't been any activity in the last week because I've preferred not to commit speculative work yet, plus the time I wasted tracking down the TLS problem above. The MVP will be considered "V" when it can pass the JavaScript JIT and conformance test suites, and it's probably a third of the way there. You can help. Ask in the comments if you're interested in contributing. We'll get this done sooner or later because it's something I'm motivated to finish, but it will go a lot faster if folks pitch in.
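For the RegExp problem above, a hypothetical smoke test like the one below exercises calls that, in SpiderMonkey, typically route through self-hosted `RegExp.prototype` code. These are `String.prototype.replace`, `split` and `match` with a regular expression, plus a direct `RegExp.prototype[Symbol.replace]` call. The iteration count is an arbitrary assumption meant to make it likely the JIT tiers kick in. None of this comes from the actual JIT or conformance test suites.

```javascript
// Hypothetical smoke test for regexp-heavy call paths.
const out = typeof console !== "undefined" ? console.log : print; // Node/browser vs. js shell

function check(label, actual, expected) {
  if (actual !== expected) {
    throw new Error(`${label}: got ${actual}, expected ${expected}`);
  }
}

function regexpSmokeTest(iterations = 1000) {
  for (let i = 0; i < iterations; i++) {
    check("replace", "a-b-c".replace(/-/g, "+"), "a+b+c");
    check("split", "a,b,,c".split(/,/).length, 4);
    check("match", "x1y22z333".match(/\d+/g).join("|"), "1|22|333");
    check("test", /^po(wer)?$/.test("power"), true);
    check("Symbol.replace", RegExp.prototype[Symbol.replace].call(/o/, "foo", "0"), "f0o");
  }
  return "ok";
}

out(regexpSmokeTest());
```

A hang or a "too much recursion" error from this script under the new backend, but not under the interpreter, would point at the same call path.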

https://www.talospace.com/2021/07/firefox-90-on-power-and-jit-progress.html

|

|

The Mozilla Blog: Olivia Rodrigo, the cast of “The French Dispatch,” “Loki” and more are on this week’s Top Shelf |

|

|

Mozilla Reps Community: New Council Members – 2021 H1 Election |

We are happy to welcome two new fully onboarded members to the Reps Council!

Hossain Al Ikram and Luis Sanchez join the other continuing members in leading the Reps Program. Tim Maks van den Broek was also re-elected and continues to contribute to the council.

Both Ikram and Luis are starting their activity as council members by contributing to the Mentorship project. They are focusing on supporting communication between the Mentors and the Council (such as preparing Mentors Calls) and on renewing and carrying out the onboarding of new Mentors.

As the new members become active in the Council, we want to thank outgoing members for their contributions. Thank you very much Shina and Faisal!

The Mozilla Reps Council is the governing body of the Mozilla Reps Program. It provides the general vision of the program and oversees day-to-day operations globally. Currently, 7 volunteers and 2 paid staff sit on the council. Find out more in the Reps wiki, and look up current members in the Community Portal.

https://blog.mozilla.org/mozillareps/2021/07/16/new-council-members-2021-h1-election/

|

|

Mozilla Performance Blog: What’s new in Perfherder? |

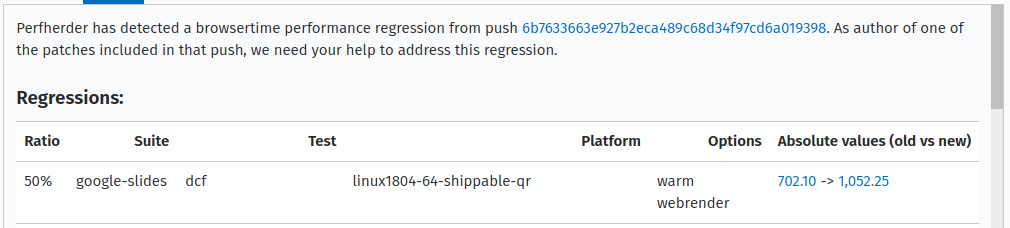

Since the last “What’s new in Perfherder” article, a lot has changed. Our development team is making progress towards automating the regression detection process. This post covers the various improvements made to Perfherder since July 2020.

Alerts view

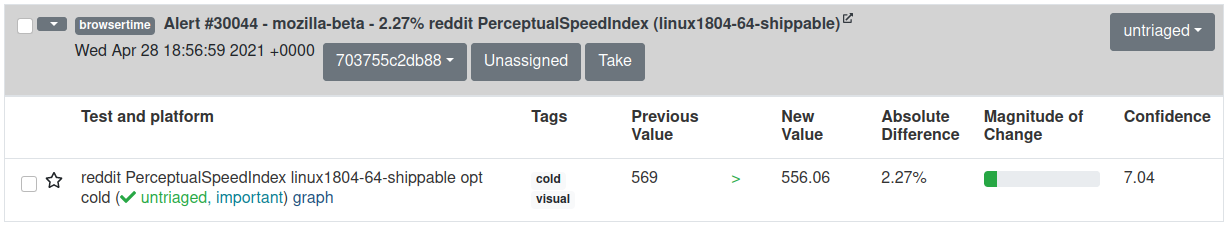

We added tags for tests. They are meant to describe what type of test it is. For example, the alert below is the PerceptualSpeedIndex visual metric for the cold variant of reddit.

The “Tags” column is next to “Test and platform”

We improved the checkbox in the alert summaries so that all alert items with a specific status can be selected at once.

Check alerts menu

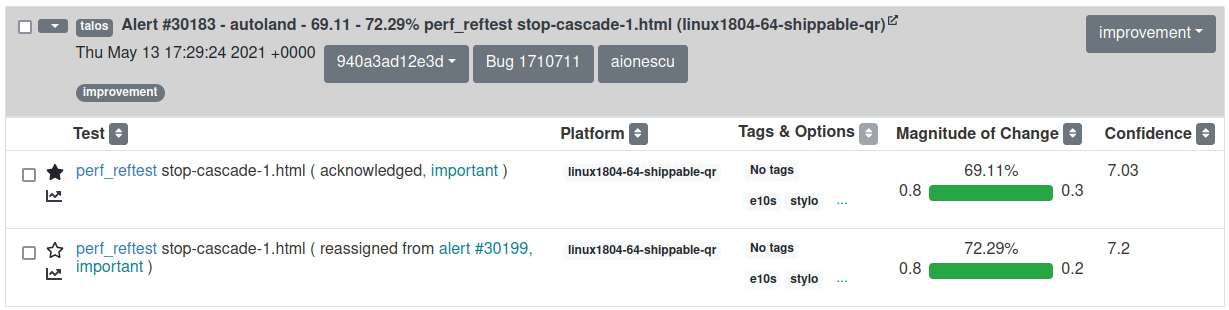

Talos tests now have links to documentation for every alert item, so if you aren’t very familiar with the regressed or improved test, the documentation can help you understand it. The alert items can be sorted by the various columns present in the alerts view. We split the Test and platform column into Test and Platform, so we can now sort by platform as well.

The Previous Value, New Value, Absolute Difference, and Magnitude of Change were joined together into a single Magnitude of Change column as they were showing basically the same information. Last but not least, the graph link at the end of each test name was moved under the star as a graph icon.

The documentation link is present at the end of every test name and platform

The sorting buttons are available next to each column

Regression template

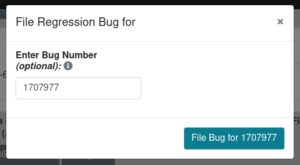

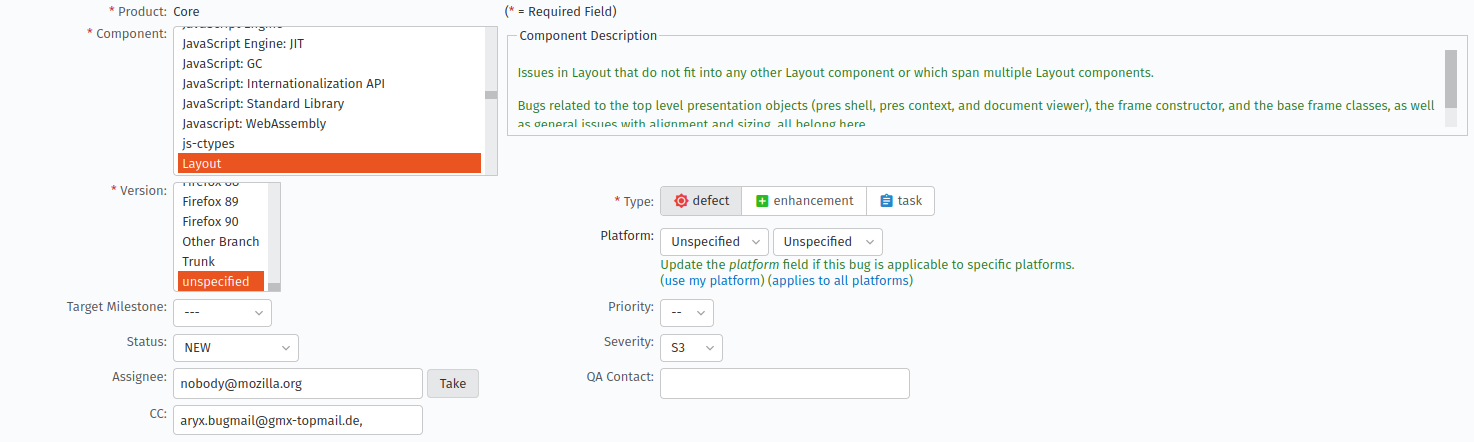

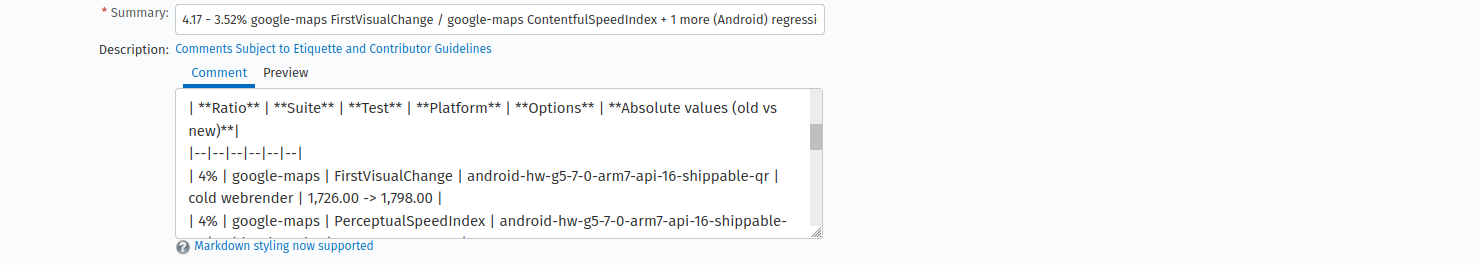

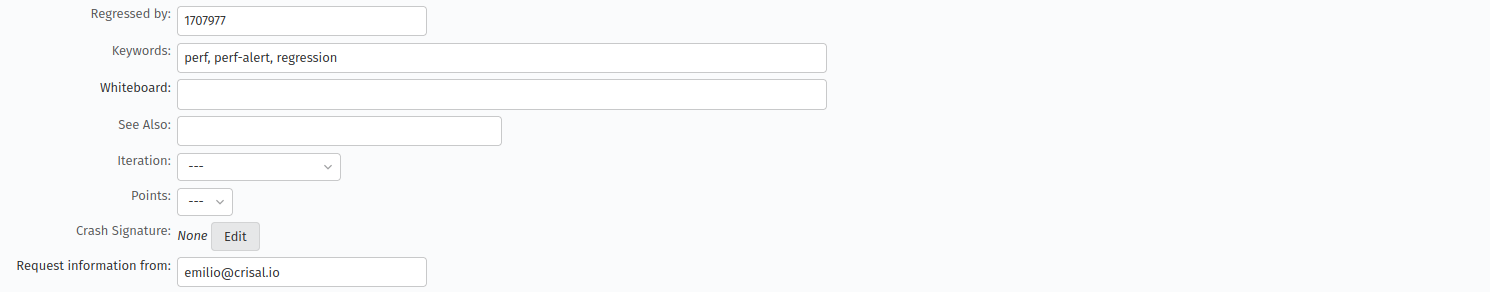

We’ve almost fully automated the filing of regression bugs. We no longer have to copy and paste the details from the regressor bug: we just enter its number in the dialog below, and the fields on the new-bug screen auto-populate. The only thing left to automate is setting the bug’s Version, which should be the latest release of Firefox; it is currently set to unspecified.

File Regression Bug Modal

The autofilled fields, Screen 1

The autofilled fields, Screen 2

The autofilled fields, Screen 3

Another cool thing that we improved is a link to the visual recordings of a browsertime pageload test. In the comment 0/description of the bug, the old and new (regressed) values are linked to a tgz archive that contains the video recording of the pageload test for each page cycle.

The before and after links are under “Absolute values” column

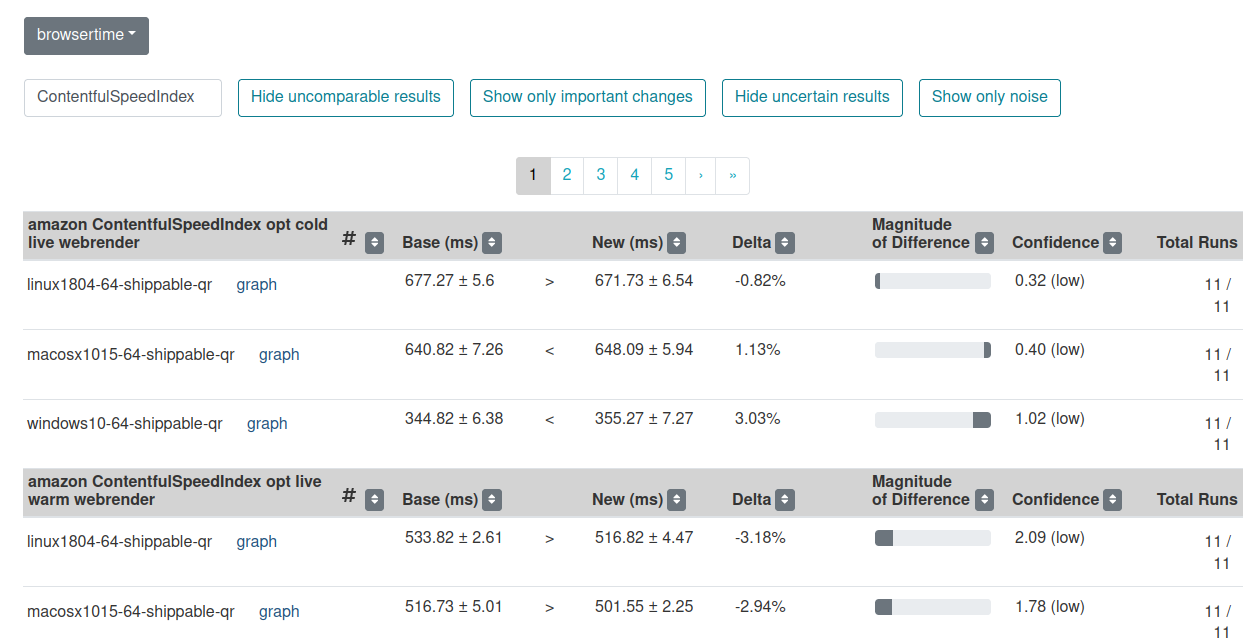

Compare view

We added pagination to the compare view for when there are more than 10 results, so we no longer load too many results on one page.

Compare view with pagination

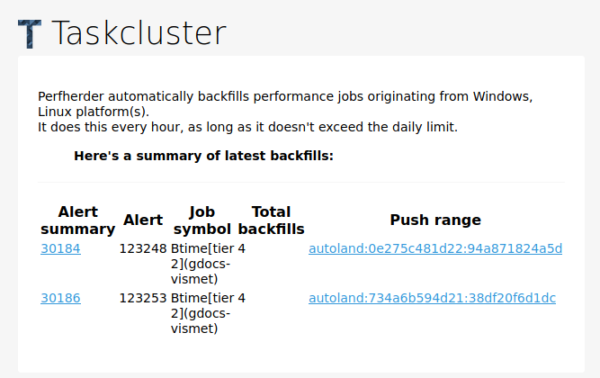
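Here is the idea behind the compare-view change, sketched as a hypothetical helper that splits results into pages of at most 10. It illustrates the concept only and is not Perfherder's implementation, which may well paginate on the server instead.

```javascript
// Hypothetical helper: split a list of compare results into pages of at most `pageSize`.
function paginate(results, pageSize = 10) {
  if (!Number.isInteger(pageSize) || pageSize < 1) {
    throw new RangeError("pageSize must be a positive integer");
  }
  const pages = [];
  for (let start = 0; start < results.length; start += pageSize) {
    pages.push(results.slice(start, start + pageSize));
  }
  return pages;
}

// 23 made-up result rows -> pages of 10, 10 and 3.
const rows = Array.from({ length: 23 }, (_, i) => `test-${i + 1}`);
const pages = paginate(rows);
console.log(pages.length, pages[2].length); // 3 3
```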

Backfill tool

The backfill tool is probably the biggest surprise. We call it Sherlock. It runs every hour and checks that there are data points for the revision the alert was created on and for the previous one. Essentially, it makes sure there’s no gap in the data points, so the culprit is identified precisely.

Backfill report email
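The check Sherlock performs can be sketched as follows. This assumes revisions are available in push order and that the set of revisions with data points is known. The function name and inputs are hypothetical, and this is not Sherlock's code. The hourly scheduling and the triggering of backfill jobs are left out.

```javascript
// Hypothetical sketch: which of {previous revision, alert revision} lack data points?
function revisionsNeedingBackfill(pushOrder, revisionsWithData, alertRevision) {
  const index = pushOrder.indexOf(alertRevision);
  if (index === -1) {
    throw new Error(`unknown revision: ${alertRevision}`);
  }
  // The alert's revision and the one pushed just before it (if there is one).
  const toCheck = index > 0 ? [pushOrder[index - 1], alertRevision] : [alertRevision];
  // Any revision without data is a gap that would blur which push is the culprit.
  return toCheck.filter((revision) => !revisionsWithData.has(revision));
}

// Example: data exists for "a" and "d" only, and the alert was raised on "d".
const pushes = ["a", "b", "c", "d"];
const withData = new Set(["a", "d"]);
console.log(revisionsNeedingBackfill(pushes, withData, "d")); // [ 'c' ]
```

An empty result means both revisions have data, so no backfill is needed for that alert.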

UI/UX improvements

Of course, the improvements are not limited to those; we’ve made various backend and front-end cleanups and optimizations that aren’t really visible in the UI:

- The graph view tooltip was adjusted to avoid it obscuring the target text

- The empty alert summaries were removed from the database

- The Perfherder UI was improved to better indicate mouse-overs and actionable elements

https://blog.mozilla.org/performance/2021/07/15/whats-new-in-perfherder-2/

|

|

This Week In Rust: This Week in Rust 399 |

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

Newsletters

Project/Tooling Updates

- Announcing Arti, a pure-Rust Tor implementation

- Programmatic stream filtering using WebAssembly

- Filecoin Rust implementation "Forest" project update

- Mina Rust implementation update: Web 3.0 with Rust x Wasm

- This Week In TensorBase 11

- Rust Analyzer Changelog #85

Observations/Thoughts

- (Risp (in (Rust) (Lisp)))

- [series] Learning Rust #6: Understanding ownership in Rust

- [series] Why and how we wrote a compiler in Rust: Part 2

- [audio] What's New in Rust 1.52 and 1.53

Rust Walkthroughs

- Inline In Rust

- Using WebAssembly threads from C, C++ and Rust

- Host a wasm module on Raspberry Pi easily Part 1

- Hello, Video Codec! - Demystify video codecs by writing one in ~100 lines of Rust

- Learning Idiomatic Rust with FizzBuzz

- Rust + Tauri + Svelte Tutorial

- Rust Nibbles : Gazebo - An introduction to the Gazebo library

- A Rust controller for Kubernetes

- First steps with Docker + Rust

- [series] Writing an RPG using rg3d - #1 - Character Controller

- [series] Rust #4: Options and Results (Part 2)

- [series] Basic CRUD with rust using tide - refactoring

- [video] End-to-end Encrypted Messaging in Rust, with Ockam by Mrinal Wadhwa

- [video] [series] Building a Web Application with Rust - Part IX - Deploying on Kubernetes

- [video] [series] ULTIMATE Rust Lang Tutorial! - Smart Pointers Part 1

- [video] [series] Implementing Hazard Pointers in Rust (part 2)

Papers

Miscellaneous

Crate of the Week

This week's crate is endbasic, an emulator friendly DOS / BASIC environment running on small hardware and the web.

Thanks to Julio Merino for the suggestion.

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

Synth

- Specify collections on import

- Add tests for examples (i.e. bank_db)

- Implemented a converter for timestamptz

- Feature: Doc template generator

Forest

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

254 pull requests were merged in the last week

- improve opaque pointers support

- recover from `&dyn mut ...` parse errors

- improve error reporting for modifications behind `&` references

- do not suggest adding a semicolon after `?`

- use `#[track_caller]` in const panic diagnostics

- query-ify global limit attribute handling

- support forwarding caller location through trait object method call

- shrink the deprecated span

- report an error if resolution of closure call functions failed

- stabilize `RangeFrom` patterns in 1.55

- account for capture kind in auto traits migration

- stop generating `alloca`s & `memcmp` for simple short array equality

- inline `Iterator as IntoIterator`

- optimize unchecked indexing into `chunks` and `chunks_mut`

- add `Integer::log` variants

- special case for integer log10

- cargo: unify cargo and rustc's error reporting

- rustdoc: fix rendering of reexported macros 2.0 and fix visibility of reexported items

Rust Compiler Performance Triage

Mostly quiet week; improvements outweighed regressions.

Triage done by @simulacrum. Revision range: 9a27044f4..5aff6dd

1 Regression, 4 Improvements, 0 Mixed; 0 of them in rollups

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs are currently in the final comment period.

Tracking Issues & PRs

- [disposition: merge] Move assert_matches to an inner module

- [disposition: merge] Stabilize arbitrary_enum_discriminant

- [disposition: close] regression: infallible residual could not convert error

- [disposition: merge] Document iteration order of retain functions

- [disposition: merge] Partially stabilize const_slice_first_last

- [disposition: merge] Stabilize const_fn_transmute, const_fn_union

- [disposition: merge] Allow leading pipe in matches!() patterns.

- [disposition: close] Add expr202x macro pattern

- [disposition: merge] Remove P: Unpin bound on impl Future for Pin

- [disposition: merge] Stabilize core::task::if_ready!

- [disposition: merge] Tracking Issue for IntoInnerError::into_parts etc. (io_into_inner_error_parts)

- [disposition: close] Implement RFC 2500 Needle API (Part 1)

New RFCs

Upcoming Events

Online

- July 14, 2021, Malaysia - Rust Meetup July 2021 - Golang Malaysia, feat Rustlang, Erlang, Haskelllang and `.*-?(lang|script)`

- July 14, 2021, Dublin, IE - Rust Dublin July Remote Meetup - Rust Dublin

- July 20, 2021, Washington, DC, US - Mid-month Rustful - Rust DC

- July 21, 2021, Vancouver, BC, CA - Rust Adoption at Huawei - Vancouver Rust

- July 22, 2021, Tokyo, JP - Rust LT Online#4 - Rust JP

- July 22, 2021, Berlin, DE - Rust Hack and Learn - Berline.rs

- July 27, 2021, Dallas, TX, US - Last Tuesday - Dallas Rust

North America

- July 14, 2021, Atlanta, GA, US - Grab a beer with fellow Rustaceans - Rust Atlanta

- July 27, 2021, Chicago, IL, US - Rust in production at Tempus - Chicago Rust Meetup

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

GraphCDN

Netlify

ChainSafe Systems

NZXT

Kollider

Tempus Ex

Estuary

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

Beginning Rust: Uh why does the compiler stop me from doing things this is horrible

Advanced Rust: Ugh why doesn't the compiler stop me from doing things this is horrible

Thanks to Nixon Enraght-Moony for the self-suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, and cdmistman.

https://this-week-in-rust.org/blog/2021/07/14/this-week-in-rust-399/

|

|

Hacks.Mozilla.Org: Getting lively with Firefox 90 |

As the summer rolls around for those of us in the northern hemisphere, temperatures are high and unwinding with a cool iced tea is high on the agenda. Isn’t it lucky, then, that Background Update is here for Windows, which means Firefox can update even when it’s not running? We can just sit back and relax!

This release also brings a few nice JavaScript additions, including private fields and methods for classes, and the at() method for the Array, String and TypedArray global objects.

This blog post just provides a set of highlights; for all the details, check out the following:

Classes go private

Private fields and methods, a feature JavaScript has lacked since its inception, are now enabled by default in Firefox 90. This allows you to declare private properties within a class. You cannot reference these private properties from outside the class; they can only be read or written within the class body.

Private names must be prefixed with a ‘hash mark’ (#) to distinguish them from any public properties a class might hold.

This shows how to declare private fields as opposed to public ones within a class:

```javascript
class ClassWithPrivateProperties {
  #privateField;
  publicField;

  constructor() {
    // can be referenced within the class, but not accessed outside
    this.#privateField = 42;
    // can be referenced within the class as well as outside
    this.publicField = 52;
  }

  // again, can only be used within the class
  #privateMethod() {
    return 'hello world';
  }

  // can be called when using the class
  getPrivateMessage() {
    return this.#privateMethod();
  }
}
```

Static fields and methods can also be private. For a more detailed overview and explanation, check out the great guide: Working with private class features. You can also read what it takes to implement such a feature in our previous blog post Implementing Private Fields for JavaScript.
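To illustrate private static members, here is a small sketch using a hypothetical IdGenerator class (our own example, not taken from the release notes). A private static field holds a shared counter, a private static method hands out ids, and a public getter exposes each object's private id. It also shows that private fields are not listed among an object's ordinary own properties.

```javascript
// Hypothetical example class (not from the release notes) showing private
// static members alongside private instance members.
class IdGenerator {
  // Private static field: a single counter shared by the whole class.
  static #nextId = 1;

  // Private instance field: each object's own id.
  #id;

  constructor() {
    this.#id = IdGenerator.#allocate();
  }

  // Private static method: callable only from inside the class body.
  static #allocate() {
    return IdGenerator.#nextId++;
  }

  // Public getter exposing the private field read-only.
  get id() {
    return this.#id;
  }
}

const first = new IdGenerator();
const second = new IdGenerator();
console.log(first.id, second.id); // 1 2

// Private fields are not ordinary own properties, so they are not listed here.
console.log(Object.keys(first)); // []
```

Writing `first.#id` anywhere outside the class body does not quietly return undefined: the engine rejects it with a SyntaxError when the code is parsed.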

JavaScript at() method

The relative indexing method at() has been added to the Array, String and TypedArray global objects.

Passing a positive integer to the method returns the item or character at that position. The highlight of this method, however, is that it also accepts negative integers, which count back from the end of the array or string. For example, 1 would return the second item or character and -1 would return the last item or character.

This example declares an array of values and uses the at() method to select an item in that array from the end.

```javascript
const myArray = [5, 12, 8, 130, 44];
let arrItem = myArray.at(-2);
// arrItem = 130
```

It’s worth mentioning that there are other common ways of doing this, but this one looks quite neat.
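To make the negative-index rule concrete, here is a hypothetical helper, relativeAt(), that mimics the indexing behaviour described above for arrays and strings, shown next to two of the more traditional idioms. It is an illustrative sketch rather than a spec-exact polyfill: it assumes the input has an ordinary numeric length.

```javascript
// Hypothetical helper mirroring at()'s relative indexing, for illustration only.
function relativeAt(indexable, index) {
  const length = indexable.length;
  // Convert the argument to an integer, truncating toward zero (NaN becomes 0).
  let k = Math.trunc(Number(index)) || 0;
  // Negative indices count back from the end.
  if (k < 0) {
    k += length;
  }
  // Out-of-range indices yield undefined instead of wrapping around again.
  if (k < 0 || k >= length) {
    return undefined;
  }
  return indexable[k];
}

const myArray = [5, 12, 8, 130, 44];
console.log(relativeAt(myArray, -2));     // 130
console.log(myArray[myArray.length - 2]); // 130, the traditional approach
console.log(myArray.slice(-2)[0]);        // 130, another common idiom
console.log(relativeAt("Firefox", -1));   // x
console.log(relativeAt(myArray, 10));     // undefined
```

The length-based and slice()-based idioms work in older browsers, but both repeat the array expression or allocate a temporary array, which is exactly the noise at() removes.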

Conic gradients for Canvas

The 2D Canvas API has a new createConicGradient() method, which creates a gradient around a point (rather than from it, like createRadialGradient()). This feature allows you to specify where you want the center to be and in which direction the gradient should start. You then add the colours you want and where they should begin (and end).
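The difference between "around" and "from" a point can be expressed as arithmetic. The sketch below uses two hypothetical helpers of our own (not part of the Canvas API): one computes which colour-stop offset a pixel lands on for a conic gradient, assuming the angle is measured clockwise on screen from startAngle, starting at the horizontal line pointing right from the center; the other does the same for the simple case of a radial gradient whose inner circle is a single point at the center. A conic offset depends only on the pixel's angle; a radial one depends only on its distance.

```javascript
// Hypothetical helper: the gradient offset (0..1) a point falls on for a conic
// gradient centred at (cx, cy). Canvas y grows downward, so increasing atan2
// values run clockwise on screen.
function conicOffset(startAngle, cx, cy, px, py) {
  const fullTurn = 2 * Math.PI;
  const angle = Math.atan2(py - cy, px - cx);
  // Measure the angle relative to startAngle and wrap it into [0, 2π).
  let relative = (angle - startAngle) % fullTurn;
  if (relative < 0) {
    relative += fullTurn;
  }
  return relative / fullTurn;
}

// Hypothetical helper: offset for a radial gradient from a point at (cx, cy)
// out to a circle of the given radius; beyond the circle the last stop applies.
function radialOffset(cx, cy, radius, px, py) {
  return Math.min(Math.hypot(px - cx, py - cy) / radius, 1);
}

// Same center (100, 100) and start angle 0 as the Canvas example in this section.
console.log(conicOffset(0, 100, 100, 200, 100)); // 0   (right of center)
console.log(conicOffset(0, 100, 100, 100, 200)); // 0.25 (below center)
console.log(conicOffset(0, 100, 100, 0, 100));   // 0.5 (left of center)
```

With the five stops used in the Canvas example, a pixel directly below the center therefore picks up the "orange" stop at offset 0.25, however far from the center it is.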

This example creates a conic gradient with 5 colour stops, which we use to fill a rectangle.

```javascript
var canvas = document.getElementById('canvas');
var ctx = canvas.getContext('2d');

// Create a conic gradient
// The start angle is 0
// The centre position is 100, 100
var gradient = ctx.createConicGradient(0, 100, 100);

// Add five color stops
gradient.addColorStop(0, "red");
gradient.addColorStop(0.25, "orange");
gradient.addColorStop(0.5, "yellow");
gradient.addColorStop(0.75, "green");
gradient.addColorStop(1, "blue");

// Set the fill style and draw a rectangle
ctx.fillStyle = gradient;
ctx.fillRect(20, 20, 200, 200);
```

The result looks like this:

New Request Headers

Fetch metadata request headers provide information about the context from which a request originated. This allows the server to make decisions about whether a request should be allowed based on where the request came from and how the resource will be used. Firefox 90 enables the following by default:

The post Getting lively with Firefox 90 appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2021/07/getting-lively-with-firefox-90/


Mozilla Performance Blog: Bringing you a snappier Firefox |

In this blog post we’ll talk about what the Firefox Performance team set out to achieve for 2021 and the Firefox 89 release last month. With the help of many people from across the Firefox organization, we delivered a 10-30% snappier, more instantaneous Firefox experience. That’s right, it isn’t just you! Firefox is faster, and we have the numbers to prove it.

Some of the things you might find giving you a snappier response are:

- Typing in the URL bar or a document editor (like Google Docs or Office 365)

- Opening a site menu like the file menu on Google Docs

- Playing a browser-based video game and using your keyboard to control your movements within the game

Our Goals

We have made many page-load and startup performance improvements over the last couple of years which have made Firefox measurably faster. But we hadn’t spent much time looking at how quickly the browser responds to little user interactions like typing in a search bar or changing tabs. Little things can add up, and we want to deliver the best performance for every user experience. So, we decided it was time to focus our efforts on responsiveness performance starting with the Firefox June release.

Responsiveness

The word responsiveness, as used for computer applications, can be rather broad, so for the purpose of this blog post we will define three types of experiences that can affect how responsive a browser feels.

- Instantaneous responsiveness: These are simple actions taken by a user where the browser ought to respond instantly. An example is pressing a key on your keyboard in a document or an input field. You want these to be displayed as swiftly as possible, giving the user a sense of instantaneous feedback. In general this means we want the results for these interactions to be displayed within 50ms[1] of the user taking an action.

- Small but perceptible lag: This is an interaction where the response is not instantaneous and there is enough work involved that it is not expected to be. The lag is sufficient for the user to perceive it, even if they are not distracted from the task at hand. This is something like switching between channels on Slack or selecting an email in Gmail. A typical threshold for this would be that these interactions occur in under a second.

- Jank: This is when a site, or in the worst case the browser UI itself, actually becomes unresponsive to user input for a noticeable amount of time. These are disruptive and perceptible pauses in interaction with the browser.

Instantaneous Responsiveness

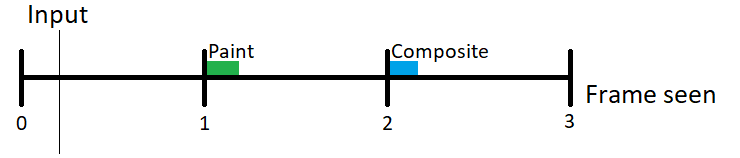

We’ve had some pretty solid indications (from tests and proofs of concepts) that we could make our already fast interactions feel even more instantaneous. One area in particular that stood out to us was the ‘depth’ of our painting pipeline.

About our painting pipeline

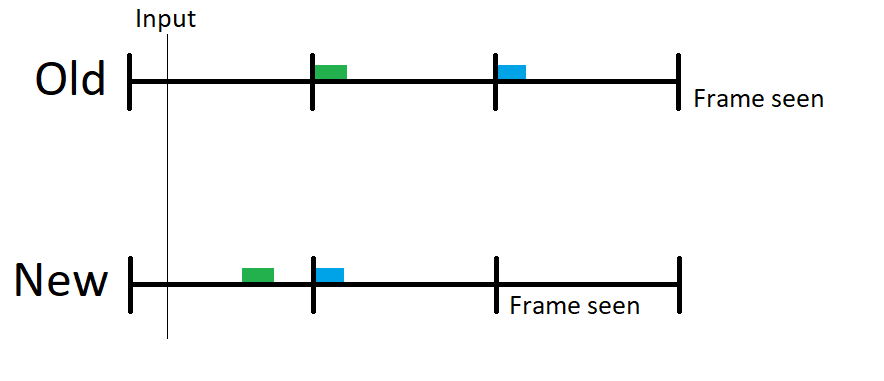

Let’s talk about that a little more. Here you can see a graphical representation of our old painting pipeline:

Most users currently use 60Hz monitors, which means their monitor displays 60 frames per second. Each segment of the timeline above represents a single frame on the screen and is approximately 16.67ms.

In most cases, when input is received from the operating system, it would take anywhere from 0 to 16.67ms for the next frame to occur (1), and at the start of that new frame, we would paint the resulting changes to the UI (the green rectangle).

Then another 16.67ms later (2), we would composite the results of that drawing onto the browser window, which would then be handed off to the OS (the blue rectangle).

At the earliest, it would be another 16.67ms later (3) when the operating system would actually present the result of the user interaction (input) to the user. This means even in the ideal case it would take at least 34-50ms for the result of an interaction to show up on the screen. But often there is other work the browser might be doing, or additional latency introduced by the input devices, the operating system, or the display hardware that would make this response even slower.
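The 34-50ms figure follows from simple frame arithmetic. The sketch below is our own back-of-the-envelope model, not Firefox code: the function name is hypothetical, and it assumes a fixed refresh rate, no extra device, OS or display latency, and that each stage after the initial wait costs exactly one frame. Input waits between 0 and one frame for the next frame to begin; in the old pipeline two further frame boundaries (composite, then present) follow. Assuming the shortened pipeline described in the next section saves exactly one of those frames, the same model gives its range too.

```javascript
// Latency range from input to on-screen result, in milliseconds, under the
// simplified assumptions stated above.
function inputToScreenLatencyMs(refreshHz, framesAfterWait) {
  const frameMs = 1000 / refreshHz;
  return {
    frameMs,
    // Input arrives just before a frame starts: no waiting.
    bestMs: framesAfterWait * frameMs,
    // Input arrives just after a frame started: wait one full frame.
    worstMs: (framesAfterWait + 1) * frameMs,
  };
}

const oldPipeline = inputToScreenLatencyMs(60, 2);
const shortenedPipeline = inputToScreenLatencyMs(60, 1);
console.log(oldPipeline.frameMs.toFixed(2)); // 16.67
console.log(oldPipeline.bestMs.toFixed(1), oldPipeline.worstMs.toFixed(1)); // 33.3 50.0
console.log(shortenedPipeline.bestMs.toFixed(1), shortenedPipeline.worstMs.toFixed(1)); // 16.7 33.3
```

Real-world latency is higher than this model because of the additional device, OS and display delays mentioned above.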

Shortening the Painting Pipeline

We set out to improve that situation by shortening the painting pipeline and by better scheduling when we handle input events. Some of the first results of this work were landed by Matt Woodrow, where he implemented a suggestion by Markus Stange in bug 1675614. This essentially changed the painting pipeline like this:

We now paint as soon as we finish processing user input if we believe we have enough time to finish before the next frame comes in. This means we can then composite the results one frame earlier. So in most cases, this will result in the frame being seen a whole 16.67ms earlier by the users. We’ll talk some more about the results of this work later.
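The decision in the paragraph above can be sketched as a single check. This is a hypothetical illustration of the idea, not Firefox’s scheduler: planPaint() and its parameters are our own names, and it assumes we have an estimate of how long painting will take and know when the next frame (vsync) begins.

```javascript
// Hypothetical sketch: paint immediately after handling input only if the
// estimated paint time fits before the next vsync.
function planPaint(nowMs, nextVsyncMs, estimatedPaintMs, frameMs = 1000 / 60) {
  const timeLeftMs = nextVsyncMs - nowMs;
  if (estimatedPaintMs <= timeLeftMs) {
    // Painting finishes in time, so the result is composited at the very next
    // vsync: one frame earlier than the old pipeline.
    return { paintAtMs: nowMs, compositeAtMs: nextVsyncMs };
  }
  // Not enough time: fall back to the old behaviour of painting at the next
  // vsync and compositing one frame after that.
  return { paintAtMs: nextVsyncMs, compositeAtMs: nextVsyncMs + frameMs };
}

// A rounded 16ms frame keeps the numbers simple.
console.log(planPaint(4, 16, 5, 16));  // { paintAtMs: 4, compositeAtMs: 16 }
console.log(planPaint(14, 16, 5, 16)); // { paintAtMs: 16, compositeAtMs: 32 }
```

In the first call the input is handled early in the frame, so the result reaches the compositor a full frame sooner; in the second there is too little time left and the old timing applies.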

Currently this solution doesn’t always work, for example if there are other animations happening. In Firefox 91, we’re extending it to more situations and improving the scheduling of our input event handling. We’ll be sure to talk more about those in future blog posts!

Small but perceptible lag

When it comes to small but perceptible lags in interaction, we found that the majority of lags are caused by time spent in JavaScript code. Much has been written about why JavaScript engines in their current form are often optimized for the wrong thing. TL;DR – Years of optimizing for benchmarks have driven a lot of decisions inside the engine that do not match well with real world web applications and frameworks like React.

Real World JavaScript Performance

In order to address this, we’ve begun to closely investigate commonly used websites in an attempt to understand where our browser may be under-performing on these workloads. Many different experiments fell out of this, and several of these have turned into promising improvements to SpiderMonkey, Firefox’s JavaScript engine. (The SpiderMonkey team has their own blog, where you can follow along with some of the work they’re doing.) One result of these experiments was an improvement to array iterators (bug 1699851), which gave us a surprise improvement in the Firefox June Release.

Along with this, many other ideas were prototyped and implemented for future versions. From improvements to the architecture of object structures to faster for-of loops, the JavaScript team has contributed much of their time to offering significant improvements to real world JS performance that are setting us up for plenty of further improvements to come throughout the rest of 2021. We’d especially like to thank Ted Campbell, Iain Ireland, Steve Fink, Jan de Mooij and Denis Palmeiro for their many contributions!

Jank

Through hard work from Florian Quèze and Doug Thayer, we now have a Background Hang Reporter tool that helps us detect (and ultimately fix) browser jank.

Background Hang Reporter

While still in the early stages of development, this tool has already proven extremely useful. The resulting data can be found here. This essentially makes it possible for us to see the stacktraces of frequently seen main thread hangs inside the Firefox parent process. We can also attach bugs to these hangs in the tool, and this has already helped us address some important issues.

For example, we discovered that accessibility was being enabled unnecessarily for most Windows users with a touchscreen. In order to facilitate accessibility features, the browser does considerable extra work. While this extra work is critical to our many users that require these accessibility features, it caused considerable jank for many users that did not need them. James Teh’s assistance was invaluable in resolving this, and with the landing of bug 1687535, the number of users with accessibility code unnecessarily enabled, as well as the number of associated hang reports, has gone down considerably.

Measuring performance

Along with all this work, we’ve also been working to better measure performance for our users ‘in the wild’, as we like to say. This means adding more telemetry probes that collect data about how your browser is performing in an anonymous way, without compromising your privacy. This allows us to detect improvements with an accuracy that no form of internal testing can really provide.

Improved “instantaneous responsiveness”

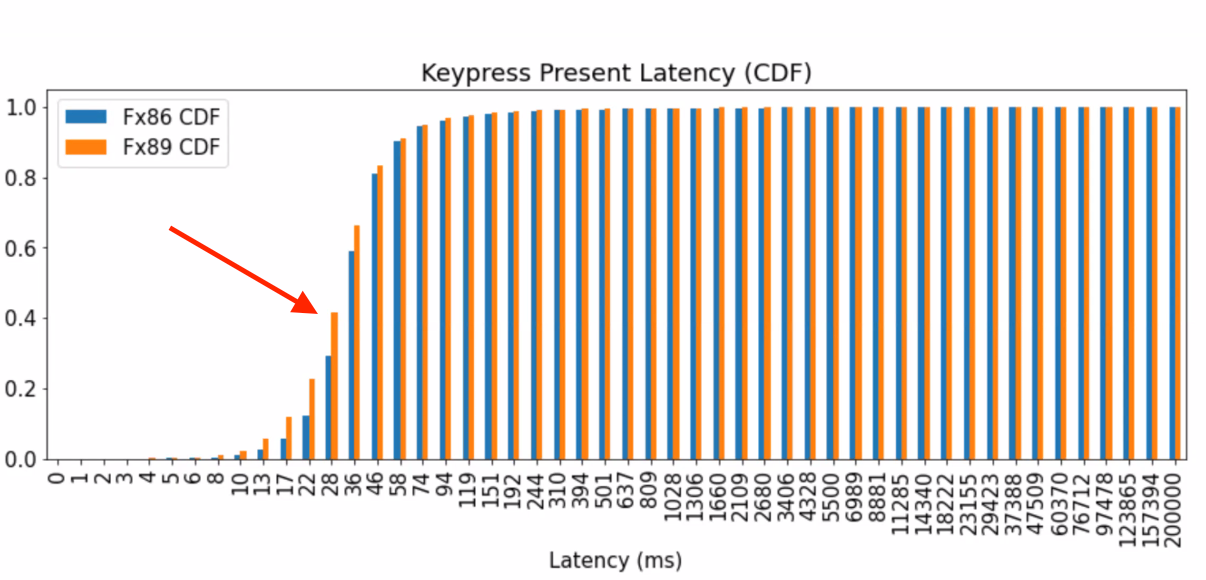

As an example, we can look at the latency for our keyboard interactions. This describes the time from when the operating system delivers a keyboard event to us to when we hand the frame off to the window manager:

If we take into account the additional frame the OS requires to display our change, 34ms is approximately the latency we need to stay under to hit the 50ms “instantaneous” threshold. Looking at the 28-35ms bucket, we see that we now hit that target more than 40% of the time, vs less than 30% in Firefox 86.

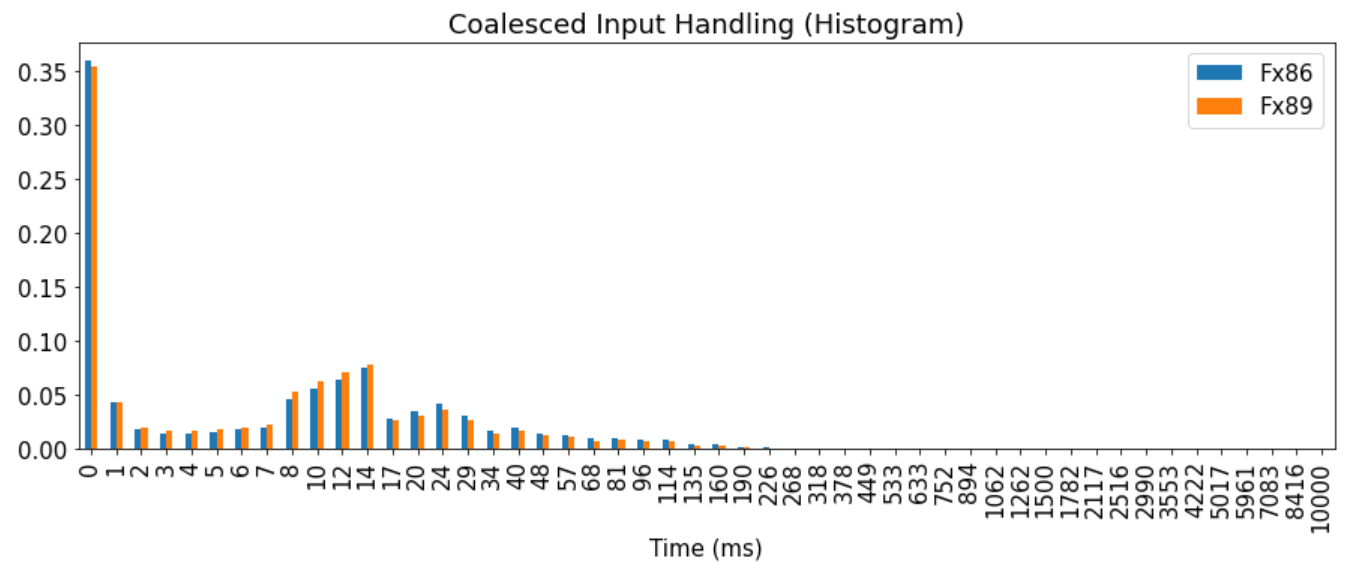

Improved “Small but perceptible lag”

Another datapoint we can look at actually tells us more about the speed of our JS processing. It describes the time from when we receive an input event from the operating system to when we’ve processed the JavaScript handler associated with that input event.

If we look carefully, we can see a small but consistent shift from the higher buckets to the lower buckets. We’ve actually been able to track this improvement down, and it appears to have occurred right around the landing of bug 1699851. Since we had not been able to detect this improvement internally outside of microbenchmarks, it reaffirms the value of improving our telemetry further to better determine how our work is impacting real users.

What’s Next?

We’d like to again thank all the people (including those we might have forgotten!) that contributed to these efforts. All the work described above is still in its early days, and all the improvements we’ve shown here are the result of first steps taken as a part of more extensive plans to improve Firefox responsiveness.

So if you feel Firefox is more responsive, it isn’t just your imagination, and more importantly, we’ve got more speedups coming!

[1] Research varies widely on this, so we chose 50ms as a latency threshold that is imperceptible to most users.

https://blog.mozilla.org/performance/2021/07/13/bringing-you-a-snappier-firefox/


Mozilla Security Blog: Firefox 90 introduces SmartBlock 2.0 for Private Browsing |

Today, with the launch of Firefox 90, we are excited to announce a new version of SmartBlock, our advanced tracker blocking mechanism built into Firefox Private Browsing and Strict Mode. SmartBlock 2.0 combines a great web browsing experience with robust privacy protection, by ensuring that you can still use third-party Facebook login buttons to sign in to websites, while providing strong defenses against cross-site tracking.

At Mozilla, we believe that privacy is a fundamental right. As part of the effort to provide a strong privacy option, Firefox includes the built-in Tracking Protection feature that operates in Private Browsing windows and Strict Mode to automatically block scripts, images, and other content from being loaded from known cross-site trackers. Unfortunately, blocking such cross-site tracking content can break website functionality.

Ensuring smooth logins with Facebook

Logging into websites is, of course, a critical piece of functionality. For example: many people value the convenience of being able to use Facebook to sign up for, and log into, a website. However, Firefox Private Browsing blocks Facebook scripts by default: that’s because our partner Disconnect includes Facebook domains on their list of known trackers. Historically, when Facebook scripts were blocked, those logins would no longer work.

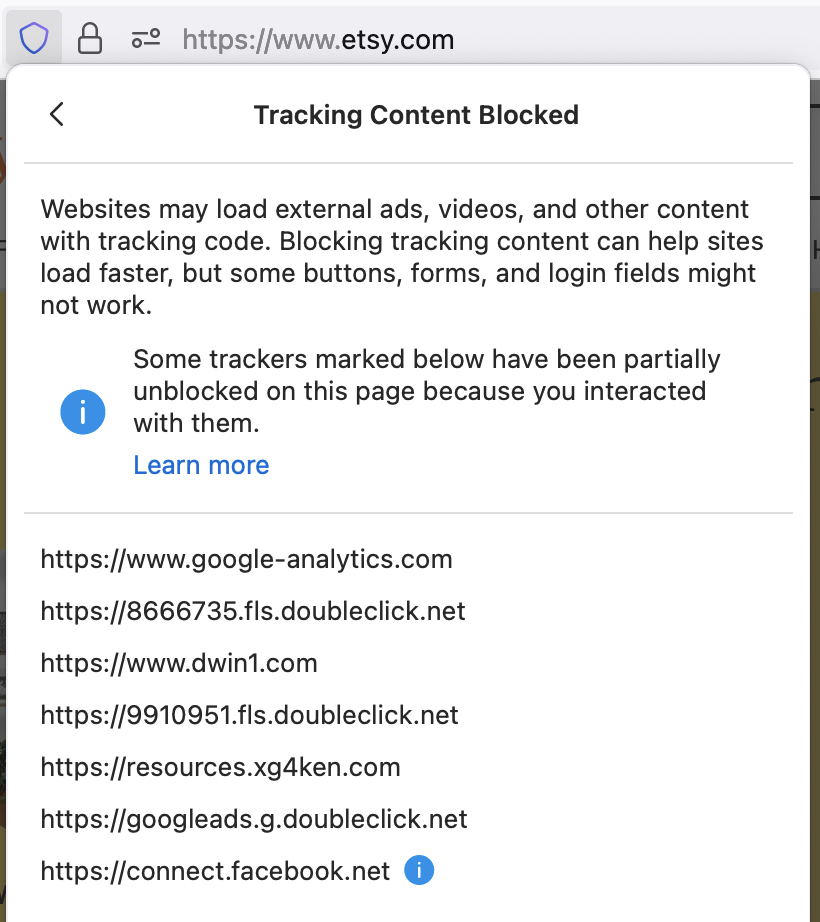

For instance, if you visit etsy.com, the front page gives the following options to sign in, including a button to sign in using Facebook’s login service. If you click on the Enhanced Tracking Protection shield in the address bar and then on Tracking Content, however, you will see that Firefox has automatically blocked third-party tracking content from Facebook to prevent any possible tracking of you by Facebook on that page:


Prior to Firefox 90, if you were using a Private Browsing window, when you clicked on the “Continue with Facebook” button to sign in, the “sign in” would fail to proceed because the third-party Facebook script required had been blocked by Firefox.


Now, SmartBlock 2.0 in Firefox 90 eliminates this login problem. Initially, Facebook scripts are all blocked, just as before, ensuring your privacy is preserved. But when you click on the “Continue with Facebook” button to sign in, SmartBlock reacts by quickly unblocking the Facebook login script just in time for the sign-in to proceed smoothly. When this script gets loaded, you can see that unblocking indicated in the list of blocked tracking content:

SmartBlock 2.0 provides this new capability on numerous websites. On all websites where you haven’t signed in, Firefox continues to block scripts from Facebook that would be able to track you. That’s right — you don’t have to choose between being protected from tracking or using Facebook to sign in. Thanks to Firefox SmartBlock, you can have your cake and eat it too!


And we’re baking more cakes! We are continuously working to expand SmartBlock’s capabilities in Firefox Private Browsing and Strict Mode to give you an even better experience on the web while continuing to provide strong protection against trackers.

Thank you

Our privacy protections are a labor of love. We want to acknowledge the work and support of many people at Mozilla that helped to make SmartBlock possible, including Paul Zühlcke, Johann Hofmann, Steven Englehardt, Tanvi Vyas, Wennie Leung, Mikal Lewis, Tim Huang, Dimi Lee, Ethan Tseng, Prangya Basu, and Selena Deckelmann.

The post Firefox 90 introduces SmartBlock 2.0 for Private Browsing appeared first on Mozilla Security Blog.


Support.Mozilla.Org: What’s up with SUMO – July 2021 |

Hey SUMO folks,

Welcome to a new quarter. Lots of projects and planning are underway. But first, let’s take a step back and see what we’ve been doing for the past month.

Welcome on board!

- Hello to strafy, Naheed, Taimur Ahmad, and Felipe. Thanks for contributing to the forum and welcome to SUMO!

Community news

- The advanced search syntax is available on our platform now (read more about it here).

- Our wiki has a new face now. Please take a look and let us know if you have any feedback.

- Another reminder to check out Firefox Daily Digest to get daily updates about Firefox. Go check it out and subscribe if you haven’t already.

- Check out the following release notes from Kitsune this month:

Community call

- Watch the monthly community call if you haven’t. Learn more about what’s new in June!

- Reminder: Don’t hesitate to join the call in person if you can. We try our best to provide a safe space for everyone to contribute. You’re more than welcome to lurk in the call if you don’t feel comfortable turning on your video or speaking up. If you feel shy about asking questions during the meeting, feel free to add your questions to the contributor forum in advance, or put them in our Matrix channel, so we can address them during the meeting.

Community stats

KB

KB Page views

| Month | Page views | Vs previous month |
|---|---|---|
| June 2021 | 9,125,327 | +20.04% |

Top 5 KB contributors in the last 90 days:

KB Localization

Top 10 locales (besides en) by total page views

| Locale | Apr 2021 page views | Localization progress (as of Jul 8) |
|---|---|---|
| de | 10.21% | 100% |
| fr | 7.51% | 89% |
| es | 6.58% | 46% |
| pt-BR | 5.43% | 65% |
| ru | 4.62% | 99% |
| zh-CN | 4.23% | 99% |
| ja | 3.98% | 54% |
| pl | 2.49% | 84% |
| it | 2.42% | 100% |
| id | 1.61% | 2% |

Top 5 localization contributors in the last 90 days:

Forum Support

Forum stats

| Month | Total questions | Answer rate within 72 hrs | Solved rate within 72 hrs | Forum helpfulness |
|---|---|---|---|---|
| Jun 2021 | 4676 | 63.58% | 15.93% | 78.33% |

Top 5 forum contributors in the last 90 days:

Social Support

| Channel | Total conv (Jun 2021) | Conv handled (Jun 2021) |
|---|---|---|
| @firefox | 7082 | 160 |
| @FirefoxSupport | 1274 | 448 |

Top 5 contributors in Q1 2021

- Christophe Villeneuve

- Pravin

- Emin Mastizada

- Md Monirul Alom

- Andrew Truong

Play Store Support

We don’t have enough data for the Play Store Support yet. However, you can check out the overall Respond Tool metrics here.

Product updates

Firefox desktop

- FX Desktop V90 (07/13)

  - Shimming Exceptions UI (SmartBlock)

  - DNS over HTTPS – remote settings config

  - Background Update Agent (BAU)

  - about:third-party

Firefox mobile

- FX Android V90 (07/13)

  - Credit Card Auto-Complete

- FX iOS V35 (07/13)

  - Folders for your Bookmarks

  - Opt-in or out of Experiments

Other products / Experiments

- Mozilla VPN V2.4 (07/13)

  - Split Tunneling (Windows and Linux)

  - Support for Local DNS

  - Addition of In-app Feedback submission

  - Variable Pricing addition (EU and US)

  - Expansion Phase 2 to EU (Spain, Italy, Belgium, Austria, Switzerland)

Shout-outs!

- Kudos to everyone who’s been helping with the Firefox 89 release.

- Franz for helping with the forum and for the search handover insight.

If you know anyone that we should feature here, please contact Kiki and we’ll make sure to add them in our next edition.

Useful links:

- #SUMO Matrix group

- SUMO Discourse

- Contributor forums

- Twitter @SUMO_mozilla and @FirefoxSupport

- SUMO Blog

https://blog.mozilla.org/sumo/2021/07/13/whats-up-with-sumo-july-2021/


The Mozilla Blog: Break free from the doomscroll with Pocket |

Last year a new phrase crept into the zeitgeist: doomscrolling, the tendency to get stuck in a bad news content cycle even when consuming it makes us feel worse. That’s no surprise given that 2020 was one for the books with an unrelenting flow of calamitous topics, from the pandemic to murder hornets to wildfires. Even before we had a name for it and real life became a Nostradamus prediction, it was all too easy to fall into the doomscroll trap. Many content recommendation algorithms are designed to keep our eyeballs glued to a screen, potentially leading us into more questionable, extreme or ominous territory.

Pocket, the content recommendation and saving service from Mozilla, offers a brighter view, inviting readers to take a different direction with high-quality content and an interface that isn’t designed to trap you or bring you down. You can get great recommendations and also save content to your Pocket, both in the app and through Firefox, every time you open a new tab in the browser. Pocket doesn’t send you down questionable rabbit holes or bombard you with a deluge of depressing or anxiety-producing content. Its recommendations are vetted by thoughtful, dedicated human editors who do a lot of reading and watching so you don’t have to dig through the muck.

“I’ve always loved reading, and it is definitely a thrill to read all day at my desk and not feel like I’m procrastinating. I’m actually doing my job,” said Amy Maoz, Pocket recommendations editor.

Amy and colleague Alex Dalenberg are two members of Pocket’s human curator team, and they are some of the people who look after the stories that appear on the Firefox new tab page.

Every day, Pocket users save millions of articles, videos, links and more from across the web, forming the foundation of Pocket’s recommendations. From this activity, Pocket’s algorithms surface the most-saved and most-read content from the Pocket community. Pocket’s human curators then sift through this material and elevate great reads for the recommendation mix: in-depth features, clever explainers, curiosity chasers, timely reads and evergreen pieces. The curator team makes sure that a wide assortment of publishers are represented, as well as a large variety of topics, including what’s happening in the world right now. And it’s done in a way that respects and preserves the privacy of Pocket readers.

“I’m consistently impressed and delighted by what great content Pocket users find all across the web,” said Maoz. “Our users do an incredible job pointing us to fascinating, entertaining and informative articles and videos and more.”

“Saving something in your Pocket is different from, say, pressing the ‘like’ button on it,” Alex Dalenberg, Pocket recommendations editor, added. “It’s more personal. You are saving it for later, so it’s less performative. And that often points us to real gems.”

It makes sense that a lot of big, juicy stories end up in Pocket; articles from The New York Times, The Guardian, Wired and The Ringer are regularly among the top-saved by readers. Pocket’s algorithms also flag stories from smaller publications that receive a notable number of saves and highlight them to the curators for consideration. That allows smaller publications and diverse voices to get wider exposure for content that might have otherwise flown under the radar.

“The power of the web is that everybody owns a printing press now, but I feel like we’ve lost a bit of that web 1.0 or 1.5 feeling,” Dalenberg said. “It’s always really exciting when we can surface exceptional content from smaller players and indie web publications, not just the usual suspects. It’s also great to hear people say how much they like discovering new publications because they saw them in Pocket’s recommendations.”

The power of a Pocket recommendation

Scalawag magazine is a small nonprofit publication dedicated to U.S. Southern culture and issues, with a belief that great storytelling and reporting can lead to policy changes. Last June, Scalawag published a round-up piece entitled Reckoning with white supremacy: Five fundamentals for white folks to share how they had been covering issues of systemic racism in the South and police systems since it launched in 2015.

“I wrote it mostly for other folks on the team to use as a guide to send to well-meaning friends who found themselves suddenly interested in these issues in the summer of protests, almost as a reference guide for people unfamiliar with our work but who wanted to learn more,” said Lovey Cooper, Scalawag’s Managing Editor and author of the piece.

Cooper published it on a Wednesday evening and sent it to a few friends on Thursday. By Friday morning, traffic was suddenly overwhelming their site, and Pocket was the driver. The Pocket team had recommended Cooper’s story on the Firefox new tab, and people were reading it. Lots of them.

“I watched the metrics as I sat on the phone with various tech gurus to get the site back up and running, and within two hours — even with the site not working anywhere except in-app viewers like Pocket — the piece became our most viewed story of the year,” she said.

By Sunday, Scalawag had seen more than five times its usual average monthly visitors since Friday alone. They gained hundreds of new email subscribers, and thousands in expected lifetime membership and one-time donation revenue from readers who had not previously registered on the site. It became the most viewed story Scalawag had ever published, beating out by a huge margin the couple of times The New York Times featured them.

“The rest of June was a whirlwind too,” Cooper said. “We were being asked to speak on radio programs and at events like never before, due to our unique positioning as lifelong champions of racial and social justice. Just as those topics came into the mainstream zeitgeist, we were perfectly poised to showcase to the world that, yes, Scalawag has indeed always been fighting this fight with our stories — and here are the articles to prove it.”

Cooper’s piece was also included in a Pocket collection, What We’re Reading: The Fight for Racial Equity, Justice and Black Lives. Pocket has continued to publish Racial Justice collections, a set of in-depth collections curated by Black scholars, journalists and writers.

“We saw this as an opportunity to use our platform to amplify and champion Black voices and diverse perspectives,” said Carolyn O’Hara, Director of Editorial at Pocket. “We have always felt that it’s our responsibility at Pocket to highlight pieces that can inform and inspire from all across the web, and we’re more committed to that than ever.”

Scalawag’s story shows how Pocket’s curated recommendations can provide hungry readers with context and information while elevating smaller publishers whose thoughtful content deserves more attention and readership.

Quality content over dubious information

The idea that everyone has a printing press thanks to the internet is a double-edged sword. Anyone can publish anything, which has also opened the door to misinformation as a cottage industry. Then it shows up on social media. And with more people turning to social media as their news and information sources, even when it isn’t vetted, misinformation quickly takes off and does damage. But you won’t find it in Pocket.

The Pocket editorial team works hard to maintain one bias: quality content. Along with misinformation, you won’t find clickbait on Pocket, nor are you likely to find breaking news. Those are in-the-moment reads rather than save-it-for-later reads. Maoz asserts that no one really saves articles like Here’s what 10 celebs look like in bikinis to read tomorrow. They might click it, but they don’t hold onto it with Pocket.


And when it comes to current events and breaking news, you’ll find that Pocket recommendations often have a wider or higher altitude view. “We’re not necessarily recommending the first or second day story but the Sunday magazine story,” Dalenberg adds, since it’s often the longer, more in-depth reads that users are saving. That would be the history of the bathing suit, for example, rather than a clickbait celeb paparazzi story, whose goal might solely be to deploy online tracking and serve ads more so than to provide quality content.

“People are opening a new tab in Firefox to do something, and we aren’t trying to shock or surprise them into clicking on our recommendations, to bait them into engaging, in other words,” said Maoz. “We’re offering up content we believe is worthy of their time and attention.”

Curators won’t recommend content to Pocket that they believe is misleading or sensational, or from a source without a strong history of integrity. They also avoid articles based on studies with just a single source, choosing instead to wait until there is more information to confirm or debunk the story. They also review the meta-image – the preview image that appears when an article is shared. Since they don’t have control over what image a publisher selects, they take care to avoid surprising people with inappropriate visuals on the Firefox new tab.

As part of the Mozilla family, Pocket, like Firefox, looks out for your privacy.

“Pocket doesn’t mine everyone’s data to show them creepily targeted stories and things they don’t actually want to read,” Maoz said. “When I tell people about what I do at Pocket, I always tie it back to privacy, which I think is really cool. That’s basically why we have jobs — because Mozilla cares about privacy.”

The post Break free from the doomscroll with Pocket appeared first on The Mozilla Blog.

https://blog.mozilla.org/en/internet-culture/deep-dives/break-free-from-the-doomscroll-with-pocket/


Mozilla Security Blog: Firefox 90 supports Fetch Metadata Request Headers |

We are pleased to announce that Firefox 90 will support Fetch Metadata Request Headers, which allow web applications to protect themselves and their users against various cross-origin threats like (a) cross-site request forgery (CSRF), (b) cross-site leaks (XS-Leaks), and (c) speculative cross-site execution side channel (Spectre) attacks.

Cross-site attacks on Web Applications

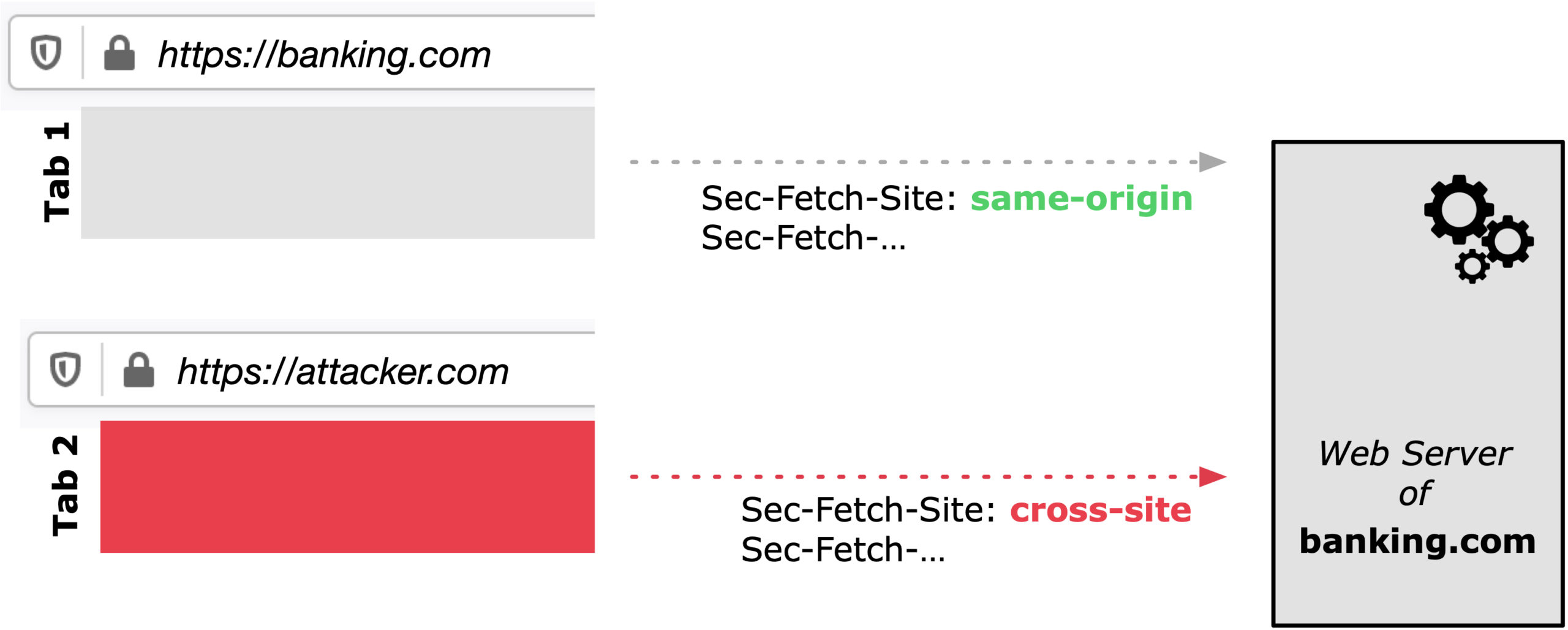

The fundamental security problem underlying cross-site attacks is that the open nature of the web does not allow web application servers to easily distinguish between requests originating from their own application and requests originating from a malicious (cross-site) application, potentially opened in a different browser tab.

Firefox 90 sends Fetch Metadata (Sec-Fetch-*) request headers, which allow web application servers to protect themselves against all sorts of cross-site attacks.

For example, as illustrated in the Figure above, let’s assume you log into your banking site hosted at https://banking.com and conduct some online banking activities. Simultaneously, an attacker-controlled website, illustrated as https://attacker.com and opened in a different browser tab, performs some malicious actions.

Innocently, you continue to interact with your banking site, which ultimately causes the banking web server to receive some actions. Unfortunately, the banking web server has little to no way of telling who initiated an action: you, or the attacker’s malicious website in the other tab. Hence the banking server, and web application servers in general, will most likely simply execute any action received and allow the attack to proceed.

Introducing Fetch Metadata

As illustrated in the attack scenario above, the HTTP request header Sec-Fetch-Site allows the web application server to distinguish between a same-origin request from the corresponding web application and a cross-origin request from an attacker-controlled website.
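To make the banking example concrete, here is a minimal Python sketch of the server-side check on Sec-Fetch-Site. It assumes the web framework hands us request headers as a dict with lowercased names; the function name `is_cross_site` and the sample header dicts are hypothetical, and treating `same-site` (for example a sibling subdomain of banking.com) as trusted is also an assumption.

```python
# Sec-Fetch-Site values defined by the Fetch Metadata spec:
# "same-origin", "same-site", "cross-site", and "none" (user-initiated,
# e.g. a typed URL or a bookmark).
TRUSTED_SITES = {"same-origin", "same-site", "none"}


def is_cross_site(headers: dict[str, str]) -> bool:
    """Return True if the browser reports the request came from another site.

    Assumes header names have already been lowercased by the framework.
    """
    site = headers.get("sec-fetch-site")
    if site is None:
        # Browsers without Fetch Metadata support send no header. We cannot
        # tell, so defer to other defenses (e.g. CSRF tokens) instead.
        return False
    # Any value outside the trusted set, including "cross-site", is suspect.
    return site not in TRUSTED_SITES


# Hypothetical requests in the banking.com scenario.
own_transfer = {"sec-fetch-site": "same-origin", "sec-fetch-mode": "cors"}
attacker_transfer = {"sec-fetch-site": "cross-site", "sec-fetch-mode": "navigate"}

print(is_cross_site(own_transfer))       # False
print(is_cross_site(attacker_transfer))  # True
```

A request coming from the bank’s own pages is reported as `same-origin`, while the same action triggered from https://attacker.com arrives as `cross-site`, which is exactly the distinction the server previously had no way to make.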

Inspecting Sec-Fetch-* headers ultimately allows the web application server to reject or ignore malicious requests, thanks to the additional context the Sec-Fetch-* header family provides. In total there are four Sec-Fetch-* headers: Sec-Fetch-Dest, Sec-Fetch-Mode, Sec-Fetch-Site and Sec-Fetch-User, which together allow web applications to protect themselves and their end users against the previously mentioned cross-site attacks.
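The headers become most useful when combined into a single server-side policy. The sketch below is a WSGI middleware modeled on the commonly cited "resource isolation policy" pattern: allow requests without Fetch Metadata or that are not cross-site, allow plain top-level GET navigations from other sites, and reject everything else. The names `allow_request` and `fetch_metadata_middleware` are hypothetical, and the exact rules are an illustrative assumption rather than Mozilla’s recommendation.

```python
from typing import Callable, Iterable

# WSGI exposes request headers in environ as HTTP_<NAME>, upper-cased,
# with dashes turned into underscores.
SAFE_SITES = {"same-origin", "same-site", "none"}


def allow_request(environ: dict) -> bool:
    """Policy combining Sec-Fetch-Site, Sec-Fetch-Mode and Sec-Fetch-Dest."""
    site = environ.get("HTTP_SEC_FETCH_SITE")
    if site is None or site in SAFE_SITES:
        # No Fetch Metadata (older browser) or not cross-site: allow.
        return True
    mode = environ.get("HTTP_SEC_FETCH_MODE")
    dest = environ.get("HTTP_SEC_FETCH_DEST")
    method = environ.get("REQUEST_METHOD", "GET")
    # Let plain top-level links from other sites through, but not
    # <object>/<embed> loads, which also report mode "navigate".
    if method == "GET" and mode == "navigate" and dest not in ("object", "embed"):
        return True
    # Sec-Fetch-User ("?1" for user-activated navigations) is not needed
    # by this particular policy.
    return False


def fetch_metadata_middleware(app: Callable) -> Callable:
    """Wrap a WSGI app and answer 403 for requests the policy rejects."""
    def wrapper(environ: dict, start_response: Callable) -> Iterable[bytes]:
        if allow_request(environ):
            return app(environ, start_response)
        start_response("403 Forbidden", [("Content-Type", "text/plain")])
        return [b"Cross-site request rejected\n"]
    return wrapper
```

Wrapping an existing application (`application = fetch_metadata_middleware(application)`) means a cross-site form POST from https://attacker.com is refused with 403, while someone following an ordinary link to the bank from another site still reaches the page. Because requests without the headers are let through, this works as defense in depth alongside existing protections such as CSRF tokens.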

Going Forward

While Firefox will soon ship with its new Site Isolation Security Architecture, which will combat a few of the above issues, we recommend that web applications make use of the newly supported Fetch Metadata headers, which provide a defense-in-depth mechanism for applications of all sorts.

As a Firefox user, you can benefit from the additionally provided headers as soon as your Firefox auto-updates to version 90. If you aren’t a Firefox user yet, you can download the latest version here to start benefiting from all the ways that Firefox works to protect you when browsing the internet.

The post Firefox 90 supports Fetch Metadata Request Headers appeared first on Mozilla Security Blog.

https://blog.mozilla.org/security/2021/07/12/firefox-90-supports-fetch-metadata-request-headers/

Cameron Kaiser: TenFourFox FPR32 SPR2 available |

http://tenfourfox.blogspot.com/2021/07/tenfourfox-fpr32-spr2-available.html

The Mozilla Blog: Net neutrality: reacting on the Executive Order on Promoting Competition in the American Economy |

Today the Biden Administration issued an Executive Order on Promoting Competition in the American Economy.

“Reinstating net neutrality is a crucial down payment on the much broader internet reform that we need and we’re glad to see the Biden Administration make this a priority in its new Executive Order today. Net neutrality preserves the environment that creates room for new businesses and new ideas to emerge and flourish, and where internet users can freely choose the companies, products, and services that they want to interact with and use. In a marketplace where consumers frequently do not have access to more than one internet service provider (ISP), these rules ensure that data is treated equally across the network by gatekeepers.” — Ashley Boyd, VP of Advocacy at Mozilla

In March 2021, we sent a joint letter to the FCC asking the Commission to reinstate net neutrality as soon as it is in working order. Together with other advocacy groups, Mozilla has been one of the leading voices in the fight for net neutrality for almost a decade. Mozilla has defended user access to the internet, in the US and around the world. Our work to preserve net neutrality has been a critical part of that effort, including our lawsuit against the FCC to keep these protections in place for users in the US.

The post Net neutrality: reacting on the Executive Order on Promoting Competition in the American Economy appeared first on The Mozilla Blog.

The Mozilla Blog: Mozilla responds to the UK CMA consultation on Google’s commitments on the Chrome Privacy Sandbox |

Regulators and technology companies together have a unique opportunity to improve the privacy properties of online advertising. Improving privacy for everyone must remain the north star of efforts surrounding privacy-preserving advertising, and we welcome the recent moves by the UK’s Competition and Markets Authority to invite public comments on the recent voluntary commitments proposed by Google for its Chrome Privacy Sandbox initiative.

Google’s commitments are a positive step forward and a sign of tangible progress in creating a higher baseline for privacy protections on the open web. Yet, there remain ways in which the commitments can be made even stronger to promote competition and protect user privacy. In our submission, we focus on three specific points of feedback.

First, the CMA should work towards creating a high baseline of privacy protections and an even playing field for the open web. We strongly support binding commitments that would prohibit Google from self-preferencing when using the Chrome Privacy Sandbox technologies and from combining user data from certain sources for targeting or measuring digital ads on first- and third-party inventory. This approach provides a model for how regulators might protect both competition and privacy while allowing for innovation in the technology sector, and we hope to see other dominant technology platforms follow it as well.

Second, Google should not be restricted from deploying limitations on the use of third-party cookies for pervasive web tracking, and such limitations should be made independent of the development of its Privacy Sandbox proposals. We encourage the CMA to reconsider requirements that will hinder efforts to build a more privacy-respecting internet. Given the widespread harms resulting from web tracking, we believe restrictions on the use of third-party cookies should be decoupled from the development of other Chrome Privacy Sandbox proposals, and that Google should have the flexibility to protect its users from cross-site tracking on an unconditional timeframe. By doing so, agencies such as the CMA and ICO would publicly acknowledge the importance of expeditiously limiting the role of third-party cookies in pervasive web tracking.

And third, relevant Chrome Privacy Sandbox proposals should be developed and deployed via formal processes at open standards bodies. It is critical for new functionality introduced by the Chrome Privacy Sandbox proposals to be thoroughly vetted by all relevant stakeholders, in a public and transparent manner, to understand its implications for privacy and competition. For this reason, we encourage the CMA to require an explicit commitment that relevant proposals are developed via formal processes and oversight at open standards development organizations (SDOs) and deployed pursuant to the final specifications.

We look forward to engaging with the CMA and other stakeholders in the coming months with our work on privacy preserving advertising, including but not limited to proposals within the Chrome Privacy Sandbox.

For more on this:

Building a more privacy-preserving ads-based ecosystem

The post Mozilla responds to the UK CMA consultation on Google’s commitments on the Chrome Privacy Sandbox appeared first on The Mozilla Blog.

https://blog.mozilla.org/en/mozilla/uk-cma-google-commitments-chrome-privacy-sandbox/
