You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about this service, use the contact information page.


Mozilla Addons Blog: Extension Signing: Availability of Unbranded Builds |

With the release of Firefox 48, extension signing can no longer be disabled in the release and beta channel builds by using a preference. As outlined when extension signing was announced, we are publishing specialized builds that support this preference so developers can continue to test against the code that beta and release builds are generated from. These builds do not use Firefox branding, do not update automatically, and are available for the en-US locale only.

You can find links to the latest unbranded beta and release builds on the Extension Signing page as they come out. Additional information on Extension Signing, including a Frequently Asked Questions section, is also available on this page.

Update: We’re aware of a problem with the builds where they’re getting updated to branded releases/betas. We’re looking into it, and a workaround while we correct the issue is outlined in the Extension Signing page.

https://blog.mozilla.org/addons/2016/07/29/extension-signing-availability-of-unbranded-builds/

|

|

Nathan Froyd: a git pre-commit hook for tooltool manifest checking |

I’ve recently been uploading packages to tooltool for my work on Rust-in-Gecko and Android toolchains. The steps I usually follow are:

1. Put together tarball of files.

2. Call tooltool.py from build-tooltool to create a tooltool manifest.

3. Upload files to tooltool with said manifest.

4. Copy bits from said manifest into one of the manifest files automation uses.

5. Do try push with new manifest.

6. Admire my completely green try push.

That would be the ideal, anyway. What usually happens at step 4 is that I forget a comma, or I forget a field in the manifest, and so step 5 winds up going awry, and I end up taking several times as long as I would have liked.

After running into this again today, I decided to implement some minimal validation for automation manifests. I use a fork of gecko-dev for development, as I prefer Git to Mercurial. Git supports running programs when certain things occur; these programs are known as hooks and are usually implemented as shell scripts. The hook I’m interested in is the pre-commit hook, which is looked for at .git/hooks/pre-commit in any git repository. Repositories come with a sample hook for every hook supported by Git, so I started with:

cp .git/hooks/pre-commit.sample .git/hooks/pre-commit

The sample pre-commit hook checks trailing whitespace in files, which I sometimes leave around, especially when I’m editing Python, and can check for non-ASCII filenames being added. I then added the following lines to that file:

if git diff --cached --name-only | grep -q releng.manifest; then
    for f in $(git diff --cached --name-only | grep releng.manifest); do
        if ! python -< […]

In prose, we’re checking to see if the current commit has any releng.manifest files being changed in any way. If so, then we’ll try parsing each of those files as JSON, and throwing an error if one doesn’t parse.
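The check described in prose can also be sketched as a standalone Python script. This is a minimal sketch, not the hook from the post: the function names are hypothetical, the script reads the working-tree copy of each file rather than the staged blob, and the `--diff-filter=ACM` option (added, copied, modified) is an addition so that deleted manifests are skipped.

```python
import json
import subprocess
import sys


def is_valid_json(text):
    """Return True if text parses as JSON, False otherwise."""
    try:
        json.loads(text)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return False
    return True


def staged_manifests():
    """List staged (added/copied/modified) paths containing 'releng.manifest'."""
    result = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    )
    return [p for p in result.stdout.splitlines() if "releng.manifest" in p]


def main():
    """Return 1 if any staged manifest fails to parse as JSON, else 0."""
    status = 0
    for path in staged_manifests():
        with open(path) as fh:
            if not is_valid_json(fh.read()):
                print(f"{path} is not valid JSON", file=sys.stderr)
                status = 1
    return status

# Used as .git/hooks/pre-commit, the script would end with: sys.exit(main())
```

A non-zero exit status from a pre-commit hook aborts the commit, which is what makes the missing comma visible before the try push.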

There are several ways this check could be more robust:

- The check will error if a commit is removing a releng.manifest, because that file won’t exist for the script to check;

- The check could ensure that the unpack field is set for all files, as the manifest file used for the upload in step 3, above, doesn’t include that field: it needs to be added manually.

- The check could ensure that all of the digest fields are the correct length for the specified digest in use.

- …and so on.

So far, though, simple syntax errors are the greatest source of pain for me, so that’s what’s getting checked for. (Mismatched sizes have also been an issue, but I’m unsure of how to check that…)
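Two of the stricter checks listed above, the unpack field and the digest length, could look roughly like the following. This is only a sketch under assumptions: it presumes a manifest is a JSON list of objects, that the digest type is stored under a key named `algorithm`, and that digests are hex strings; the function name and the table of lengths are made up for illustration.

```python
# Hex-encoded digest lengths: SHA-256 is 32 bytes, SHA-512 is 64 bytes.
EXPECTED_HEX_LENGTH = {"sha256": 64, "sha512": 128}


def manifest_problems(entries):
    """Return a list of human-readable problems found in manifest entries."""
    problems = []
    for i, entry in enumerate(entries):
        # The upload manifest lacks "unpack", so it is easy to forget.
        if "unpack" not in entry:
            problems.append(f"entry {i}: missing 'unpack' field")
        # Only check digest length for algorithms we know the size of.
        expected = EXPECTED_HEX_LENGTH.get(entry.get("algorithm"))
        digest = entry.get("digest", "")
        if expected is not None and len(digest) != expected:
            problems.append(
                f"entry {i}: digest has {len(digest)} characters, "
                f"expected {expected}"
            )
    return problems
```

Returning a list of messages rather than failing on the first problem lets a hook report everything wrong with a manifest in one pass.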

What pre-commit hooks have you found useful in your own projects?

https://blog.mozilla.org/nfroyd/2016/07/29/a-git-pre-commit-hook-for-tooltool-manifest-checking/

|

|

Roberto A. Vitillo: Differential Privacy for Dummies |

Technology allows companies to collect more data and with more detail about their users than ever before. Sometimes that data is sold to third parties, other times it’s used to improve products and services.

In order to protect users’ privacy, anonymization techniques can be used to strip away any piece of personally identifiable data and let analysts access only what’s strictly necessary. As the Netflix competition in 2007 has shown though, that can go awry. The richness of the data makes it possible to identify users through a sometimes surprising combination of variables, like the dates on which an individual watched certain movies. A simple join between an anonymized dataset and a non-anonymized one can re-identify anonymized data.

Aggregated data is not much safer either! Given two sets of aggregated data $S_1$ and $S_2$ that count how many individuals have property $P$, and knowing that individual $u$ is missing from $S_2$, one can infer whether individual $u$ has property $P$ by subtracting $S_2$ from $S_1$.
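The subtraction attack on aggregates is easy to see with a toy example. The names and values below are invented purely for illustration; the only point is that two exact counts that differ by one person leak that person's value.

```python
def count_with_property(records):
    """Count how many individuals in the mapping have the property."""
    return sum(1 for has_property in records.values() if has_property)


# Toy data: name -> whether the individual has the property.
everyone = {"alice": True, "bob": False, "carol": True}
without_carol = {k: v for k, v in everyone.items() if k != "carol"}

first_count = count_with_property(everyone)
second_count = count_with_property(without_carol)

# The difference of the two aggregates is exactly carol's value.
carol_has_property = (first_count - second_count) == 1
```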

Differential Privacy to the rescue

Differential privacy formalizes the idea that a query should not reveal whether any one person is present in a dataset, much less what their data are. Imagine two otherwise identical datasets, one with your information in it, and one without it. Differential Privacy ensures that the probability that a query will produce a given result is nearly the same whether it’s conducted on the first or second dataset. The idea is that if an individual’s data doesn’t significantly affect the outcome of a query, then he might be OK in giving his information up as likely no harm will come from it. The result of the query can damage an individual regardless of his presence in a dataset though. For example, if an analysis on a medical dataset finds a correlation between lung cancer and smoking, then the health insurance cost for a particular smoker might increase regardless of his presence in the study.

More formally, differential privacy requires that the probability of a query producing any given output changes by at most a multiplicative factor when a record (e.g. an individual) is added or removed from the input. The largest multiplicative factor quantifies the amount of privacy difference. This sounds harder than it actually is and the next sections will iterate on the concept with various examples, but first we need to define a few terms.

Dataset

We will think of a dataset $d$ as being a collection of records from a universe $U$. One way to represent a dataset $d$ is with a histogram in which each entry $d_i$ represents the number of elements in the dataset equal to $u_i \in U$. For example, say we collected data about coin flips of three individuals, then given the universe $U = \{head, tail\}$, our dataset $d$ would have two entries: $d_{head} = i$ and $d_{tail} = j$, where $i + j = 3$. Note that in reality a dataset is likely to be an ordered list of rows (i.e. a table) but the former representation makes the math a tad easier.

Distance

Given the previous definition of dataset, we can define the distance between two datasets $x, y$ with the $l_1$ norm as:

$$||x - y||_1 = \sum_{i=1}^{|U|} |x_i - y_i|$$
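With the histogram representation, the distance between two datasets is just the sum of the absolute differences of their counts. A minimal sketch, using `collections.Counter` as the histogram and a hypothetical helper name:

```python
from collections import Counter


def l1_distance(x, y):
    """Sum of absolute count differences over every element seen in x or y."""
    return sum(abs(x.get(k, 0) - y.get(k, 0)) for k in set(x) | set(y))


# Three coin flips versus the same dataset with one "head" record removed.
x = Counter(["head", "head", "tail"])
y = Counter(["head", "tail"])
distance = l1_distance(x, y)
```

Two datasets at distance 1 differ in exactly one record, which is the "neighbouring datasets" notion the definition of differential privacy relies on.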

Mechanism

A mechanism is an algorithm that takes as input a dataset and returns an output, so it can really be anything, like a number or a statistical model. Using the previous coin-flipping example, if mechanism $C$ counts the number of individuals in the dataset, then $C(d) = 3$.

Differential Privacy

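A mechanism $M$ satisfies $\epsilon$ differential privacy if for every pair of datasets $x, y$ such that $||x - y||_1 \leq 1$, and for every subset $S \subseteq Range(M)$:

$$\frac{Pr[M(x) \in S]}{Pr[M(y) \in S]} \leq e^{\epsilon}$$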

What’s important to understand is that the previous statement is just a definition. The definition is not an algorithm, but merely a condition that must be satisfied by a mechanism to claim that it satisfies differential privacy. Differential privacy allows researchers to use a common framework to study algorithms and compare their privacy guarantees.

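Let’s check if our mechanism $C$ satisfies $1$ differential privacy. Can we find a counter-example for which:

$$\frac{Pr[C(x) \in S]}{Pr[C(y) \in S]} \leq e$$

is false? Given $x, y$ such that $||x - y||_1 = 1$ and $||x||_1 = k$, then:

$$\frac{Pr[C(x) = k]}{Pr[C(y) = k]} \leq e$$

i.e. $\frac{1}{0} \leq e$, which is clearly false, hence this proves that mechanism $C$ doesn’t satisfy $1$ differential privacy.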

Composition theorems

A powerful property of differential privacy is that mechanisms can easily be composed.

Let $d$ be a dataset and $g$ an arbitrary function. Then, the sequential composition theorem asserts that if $M_i(d)$ is $\epsilon_i$ differentially private, then $M(d) = g(M_1(d), M_2(d), \ldots, M_N(d))$ is $\sum_{i=1}^{N} \epsilon_i$ differentially private. Intuitively this means that given an overall fixed privacy budget, the more mechanisms are applied to the same dataset, the more the available privacy budget for each individual mechanism will decrease.

The parallel composition theorem asserts that given $N$ partitions of a dataset $d$, if for an arbitrary partition $d_i$, $M_i(d_i)$ is $\epsilon$ differentially private, then $M(d) = g(M_1(d_1), M_2(d_2), \ldots, M_N(d_N))$ is $\epsilon$ differentially private. In other words, if a set of $\epsilon$ differentially private mechanisms is applied to a set of disjoint subsets of a dataset, then the combined mechanism is still $\epsilon$ differentially private.

The randomized response mechanism

The first mechanism we will look into is “randomized response”, a technique developed in the sixties by social scientists to collect data about embarrassing or illegal behavior. The study participants have to answer a yes-no question in secret using the following mechanism $M_R(d, \alpha, \beta)$:

- Flip a biased coin with probability of heads $\alpha$;

- If heads, then answer truthfully with $d$;

- If tails, flip a coin with probability of heads $\beta$ and answer “yes” for heads and “no” for tails.

In code:

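from random import random

def randomized_response_mechanism(d, alpha, beta):
    # First coin: with probability alpha, report the true answer d.
    if random() < alpha:
        return d
    # Second coin: otherwise report "yes" (1) with probability beta.
    elif random() < beta:
        return 1
    else:
        return 0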

Privacy is guaranteed by the noise added to the answers. For example, when the question refers to some illegal activity, answering “yes” is not incriminating as the answer occurs with a non-negligible probability whether or not it reflects reality, assuming $\alpha$ and $\beta$ are tuned properly.

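Let’s try to estimate the proportion $\pi$ of participants whose truthful answer is “yes”. Each participant can be modeled with a Bernoulli variable $X_i$ which takes a value of 0 for “no” and a value of 1 for “yes”. We know that:

$$P(X_i = 1) = \alpha \pi + (1 - \alpha) \beta$$

Solving for $\pi$ yields:

$$\pi = \frac{P(X_i = 1) - (1 - \alpha) \beta}{\alpha}$$

Given a sample of size $n$, we can estimate $P(X_i = 1)$ with $\frac{\sum_{i=1}^{n} X_i}{n}$. Then, the estimate $\hat{\pi}$ of $\pi$ is:

$$\hat{\pi} = \frac{\frac{\sum_{i=1}^{n} X_i}{n} - (1 - \alpha) \beta}{\alpha}$$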

To determine how accurate our estimate is we will need to compute its standard deviation. Assuming the individual responses $X_i$ are independent, and using basic properties of the variance,

$$Var(\hat{\pi}) = Var\left(\frac{\sum_{i=1}^{n} X_i}{n \alpha}\right) = \frac{Var(X_i)}{n \alpha^2}$$

By taking the square root of the variance we can determine the standard deviation of $\hat{\pi}$. It follows that the standard deviation $s$ is proportional to $\frac{1}{\sqrt{n}}$, since the other factors are not dependent on the number of participants. Multiplying both $\hat{\pi}$ and $s$ by $n$ yields the estimate of the number of participants that answered “yes” and its relative accuracy expressed in number of participants, which is proportional to $\sqrt{n}$.
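The estimator and its standard deviation can be written down directly. The sketch below uses hypothetical function names and makes one extra assumption: the variance of a single response is estimated by plugging the observed “yes” rate $q$ into the Bernoulli variance $q(1-q)$.

```python
from math import sqrt


def estimate_proportion(observed_yes_rate, alpha, beta):
    """Invert the expected bias added by the randomized response scheme."""
    return (observed_yes_rate - (1 - alpha) * beta) / alpha


def estimate_std(observed_yes_rate, n, alpha):
    """Standard deviation of the estimate, using q(1-q) for Var(X_i)."""
    q = observed_yes_rate
    return sqrt(q * (1 - q) / n) / alpha


# With alpha = beta = 0.5, an observed "yes" rate of 0.4 maps back to ~0.3.
pi_hat = estimate_proportion(0.4, 0.5, 0.5)

# Quadrupling the sample size halves the standard deviation (1/sqrt(n)).
s_100 = estimate_std(0.5, 100, 0.5)
s_400 = estimate_std(0.5, 400, 0.5)
```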

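The next step is to determine the level of privacy that the randomized response method guarantees. Let’s pick an arbitrary participant. The dataset is represented with either 0 or 1 depending on whether the participant answered truthfully with a “no” or “yes”. Let’s call the two possible configurations of the dataset respectively $d_{no}$ and $d_{yes}$. We also know that $||d_i - d_j||_1 \leq 1$ for any $i, j$. All that’s left to do is to apply the definition of differential privacy to our randomized response mechanism $M_R(d, \alpha, \beta)$:

$$\frac{Pr[M_R(d_i, \alpha, \beta) = k]}{Pr[M_R(d_j, \alpha, \beta) = k]} \leq e^{\epsilon}$$

The definition of differential privacy applies to all possible configurations of $i, j$ and $k$, e.g.:

$$\frac{Pr[M_R(d_{yes}, \alpha, \beta) = 1]}{Pr[M_R(d_{no}, \alpha, \beta) = 1]} \leq e^{\epsilon}$$

$$\ln\left(\frac{\alpha + (1 - \alpha) \beta}{(1 - \alpha) \beta}\right) \leq \epsilon$$

$$\ln\left(\frac{\alpha}{(1 - \alpha) \beta} + 1\right) \leq \epsilon$$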

The privacy parameter $\epsilon$ can be tuned by varying $\alpha$ and $\beta$. For example, it can be shown that the randomized response mechanism with $\alpha = \frac{1}{2}$ and $\beta = \frac{1}{2}$ satisfies $\ln 3$ differential privacy.
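To see how the two coin biases translate into a privacy level, the ratio of output probabilities can be computed for both possible answers. This is a sketch with a hypothetical function name; it takes the larger of the “yes” and “no” cases as the privacy parameter and assumes both biases lie strictly between 0 and 1.

```python
from math import log


def randomized_response_epsilon(alpha, beta):
    """Smallest epsilon satisfied by randomized response with these biases."""
    # Output "yes": true answer "yes" versus true answer "no".
    yes_ratio = (alpha + (1 - alpha) * beta) / ((1 - alpha) * beta)
    # Output "no": true answer "no" versus true answer "yes".
    no_ratio = (alpha + (1 - alpha) * (1 - beta)) / ((1 - alpha) * (1 - beta))
    return log(max(yes_ratio, no_ratio))


# Two fair coins give a ratio of 3 for either output, i.e. epsilon = ln 3.
eps_fair = randomized_response_epsilon(0.5, 0.5)
```

Raising the probability of a truthful answer increases the ratio and therefore weakens the guarantee, which is the accuracy versus privacy trade-off in one number.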

The proof applies to a dataset that contains only the data of a single participant, so how does this mechanism scale with multiple participants? It follows from the parallel composition theorem that the combination of $\epsilon$ differentially private mechanisms applied to the datasets of the individual participants is $\epsilon$ differentially private as well.

The Laplace mechanism

The Laplace mechanism is used to privatize a numeric query. For simplicity we are going to assume that we are only interested in counting queries $f$, i.e. queries that count individuals, hence we can make the assumption that adding or removing an individual will affect the result of the query by at most 1.

The way the Laplace mechanism works is by perturbing a counting query $f$ with noise distributed according to a Laplace distribution centered at 0 with scale $b = \frac{1}{\epsilon}$,

$$Lap(x | b) = \frac{1}{2b} e^{-\frac{|x|}{b}}$$

Then, the Laplace mechanism is defined as:

$$M_L(x, f, \epsilon) = f(x) + Z$$

where $Z$ is a random variable drawn from $Lap(\frac{1}{\epsilon})$.

In code:

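from numpy.random import laplace

def laplace_mechanism(data, f, eps):
    # Add Laplace noise centered at 0 with scale 1/eps to the query result.
    return f(data) + laplace(0, 1.0/eps)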

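It can be shown that the mechanism preserves $\epsilon$ differential privacy. Given two datasets $x, y$ such that $||x - y||_1 \leq 1$ and a function $f$ which returns a real number from a dataset, let $p_x$ denote the probability density function of $M_L(x, f, \epsilon)$ and $p_y$ the probability density function of $M_L(y, f, \epsilon)$. Given an arbitrary real point $z$,

$$\frac{p_x(z)}{p_y(z)} = \frac{e^{-\epsilon |f(x) - z|}}{e^{-\epsilon |f(y) - z|}} = e^{\epsilon (|f(y) - z| - |f(x) - z|)} \leq e^{\epsilon |f(x) - f(y)|}$$

by the triangle inequality. Then,

$$e^{\epsilon |f(x) - f(y)|} \leq e^{\epsilon}$$

since a counting query changes by at most 1 between neighbouring datasets.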

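What about the accuracy of the Laplace mechanism? From the cumulative distribution function of the Laplace distribution it follows that if $Z \sim Lap(b)$, then $Pr[|Z| \geq t \times b] = e^{-t}$. Hence, let $k = M_L(x, f, \epsilon)$ and $\forall \delta \in (0, 1]$:

$$Pr\left[|f(x) - k| \geq \ln\left(\frac{1}{\delta}\right) \times \frac{1}{\epsilon}\right] = Pr\left[|Z| \geq \ln\left(\frac{1}{\delta}\right) \times \frac{1}{\epsilon}\right] = \delta$$

where $Z \sim Lap(\frac{1}{\epsilon})$. The previous equation sets a probabilistic bound to the accuracy of the Laplace mechanism that, unlike the randomized response, does not depend on the number of participants $n$.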
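The tail bound for Laplace noise can be checked numerically. The sketch below uses only the standard library and hypothetical helper names; it draws Laplace noise as the difference of two exponential draws with rate $\epsilon$, which has a Laplace distribution with scale $\frac{1}{\epsilon}$, and compares the observed tail mass with the chosen $\delta$.

```python
import random
from math import log


def laplace_error_bound(eps, delta):
    """Return t such that Pr[|Z| >= t] = delta when Z ~ Lap(1/eps)."""
    return log(1.0 / delta) / eps


def sample_laplace(rng, eps):
    """Draw Lap(1/eps) noise as the difference of two Exp(eps) draws."""
    return rng.expovariate(eps) - rng.expovariate(eps)


eps, delta = log(3), 0.05
bound = laplace_error_bound(eps, delta)  # roughly 2.73 for these values

rng = random.Random(0)  # fixed seed so the check is repeatable
n_draws = 100_000
exceed = sum(abs(sample_laplace(rng, eps)) >= bound for _ in range(n_draws))
empirical_delta = exceed / n_draws  # should land close to delta
```

Note that the bound involves only the privacy parameter and the tail probability; the size of the dataset does not appear anywhere in it.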

Counting queries

The same query can be answered by different mechanisms with the same level of differential privacy. Not all mechanisms are born equally though; performance and accuracy have to be taken into account when deciding which mechanism to pick.

As a concrete example, let’s say there are $n$ individuals and we want to implement a query that counts how many possess a certain property $p$. Each individual can be represented with a Bernoulli random variable:

from numpy.random import binomial
participants = binomial(1, p, n)

We will implement the query using both the randomized response mechanism $M_R(d, \frac{1}{2}, \frac{1}{2})$, which we know by now to satisfy $\ln 3$ differential privacy, and the Laplace mechanism $M_L(d, f, \ln 3)$ which satisfies $\ln 3$ differential privacy as well.

import numpy as np
from numpy import log

def randomized_response_count(data, alpha, beta):
    # Perturb each response individually, then invert the expected bias.
    randomized_data = np.array(
        [randomized_response_mechanism(d, alpha, beta) for d in data])
    return len(data) * (randomized_data.mean() - (1 - alpha)*beta)/alpha

def laplace_count(data, eps):
    # Perturb the aggregated count once.
    return laplace_mechanism(data, np.sum, eps)

r = randomized_response_count(participants, 0.5, 0.5)
l = laplace_count(participants, log(3))

Note that while $M_R$ is applied to each individual response and later combined in a single result, i.e. the estimated count, $M_L$ is applied directly to the count, which is intuitively why $M_R$ is noisier than $M_L$. How much noisier? We can easily simulate the distribution of the accuracy for both mechanisms with:

def randomized_response_accuracy_simulation(data, alpha, beta, n_samples=1000):
    return [randomized_response_count(data, alpha, beta) - data.sum()
            for _ in range(n_samples)]

def laplace_accuracy_simulation(data, eps, n_samples=1000):
    return [laplace_count(data, eps) - data.sum()
            for _ in range(n_samples)]

r_d = randomized_response_accuracy_simulation(participants, 0.5, 0.5)
l_d = laplace_accuracy_simulation(participants, log(3))

As mentioned earlier, the accuracy of $M_R$ grows with the square root of the number of participants:

[Figure: Randomized Response Mechanism Accuracy]

while the accuracy of $M_L$ is a constant:

[Figure: Laplace Mechanism Accuracy]

You might wonder why one would use the randomized response mechanism if it’s worse in terms of accuracy compared to the Laplace one. The thing about the Laplace mechanism is that the private data about the users has to be collected and stored, as the noise is applied to the aggregated data. So even with the best of intentions there is the remote possibility that an attacker might get access to it. The randomized response mechanism though applies the noise directly to the individual responses of the users and so only the perturbed responses are collected! With the latter mechanism any individual’s information cannot be learned, but an aggregator can still infer population statistics.

Real world use-cases

The algorithms presented in this post can be used to answer simple counting queries. There are many more mechanisms out there used to implement complex statistical procedures like machine learning models. The concept behind them is the same though: there is a certain function that needs to be computed over a dataset in a privacy preserving manner and noise is used to mask how much information about an arbitrary individual is leaked.

One such mechanism is RAPPOR, an approach pioneered by Google to collect frequencies of an arbitrary set of strings. The idea behind it is to collect vectors of bits from users where each bit is perturbed with the randomized response mechanism. The bit-vector might represent a set of binary answers to a group of questions, a value from a known dictionary or, more interestingly, a generic string encoded through a Bloom filter. The bit-vectors are aggregated and the expected count for each bit is computed in a similar way as shown previously in this post. Then, a statistical model is fit to estimate the frequency of a candidate set of known strings. The main drawback with this approach is that it requires a known dictionary.

The approach was later improved to infer the collected strings without the need for a known dictionary, at the cost of accuracy and performance. To give you an idea, to estimate a distribution over an unknown dictionary of 6-letter strings, in the worst case, a sample size in the order of 300 million is required; the sample size grows quickly as the length of the strings increases. That said, the mechanism consistently finds the most frequent strings, which makes it possible to learn the dominant trends of a population.

Even though the theoretical frontier of differential privacy is expanding quickly, there are only a handful of implementations out there that, like RAPPOR, ensure privacy without the need for a trusted third party and so suit well the kind of data collection schemes commonly used in the software industry.

References

- The Algorithmic Foundations of Differential Privacy

- Differential Privacy and Machine Learning: a Survey and Review

- Differential Privacy – A Primer for the Perplexed

- Building a RAPPOR with the Unknown: Privacy-Preserving Learning of Associations and Data Dictionaries

- Jupyter notebook used to generate plots

https://robertovitillo.com/2016/07/29/differential-privacy-for-dummies/

|

|

Nicholas Nethercote: How to switch to a 64-bit Firefox on Windows |

I recently wrote about 64-bit Firefox builds on Windows, explaining why you might want to switch — it can reduce the likelihood of out-of-memory crashes — and also some caveats.

However, I didn’t explain how to switch, so I will do that now.

First, if you want to make sure that you aren’t already running a 64-bit Firefox, type “about:support” in the address bar and then look at the User Agent field in the Application Basics table near the top of the page.

- If it contains the string “Win64”, you are already running a 64-bit Firefox.

- If it contains the string “WOW64“, you are running a 32-bit Firefox on a 64-bit Windows installation, which means you can switch to a 64-bit build.

- Otherwise, you are running a 32-bit Firefox on a 32-bit Windows installation, and cannot switch to a 64-bit Firefox.
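The three cases above amount to two substring checks on the User Agent value. A minimal sketch, with a hypothetical function name and sample strings that are only illustrative of the Windows Firefox format:

```python
def firefox_windows_bitness(user_agent):
    """Classify a Windows Firefox User Agent string using the rules above."""
    if "Win64" in user_agent:
        return "64-bit Firefox"
    if "WOW64" in user_agent:
        return "32-bit Firefox on 64-bit Windows"
    return "32-bit Firefox on 32-bit Windows"


# Illustrative User Agent values, as they might appear in about:support.
ua_64 = "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:48.0) Gecko/20100101 Firefox/48.0"
ua_wow = "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:48.0) Gecko/20100101 Firefox/48.0"
ua_32 = "Mozilla/5.0 (Windows NT 6.1; rv:48.0) Gecko/20100101 Firefox/48.0"
```

Only the middle case, a 32-bit Firefox on 64-bit Windows, is the one where switching is both possible and still to be done.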

Here are links to pages that contain 64-bit builds for all the different release channels.

- Release

- Beta

- Developer Edition

- Nightly: This is a user-friendly page, but it only has the en-US locale.

- Nightly: This is a more intimidating page, but it has all locales. Look for a file with a name of the form firefox-<VERSION>.<LOCALE>.win64.installer.exe, e.g. firefox-50.0a1.de.win64.installer.exe for Nightly 50 in German.

By default, 32-bit Firefox and 64-bit Firefox are installed to different locations:

- C:\Program Files (x86)\Mozilla Firefox\

- C:\Program Files\Mozilla Firefox\

If you are using a 32-bit Firefox and then you download and install a 64-bit Firefox, by default you will end up with two versions of Firefox installed. (But note that if you let the 64-bit Firefox installer add shortcuts to the desktop and/or taskbar, these shortcuts will replace any existing shortcuts to 32-bit Firefox.)

Both the 32-bit Firefox and the 64-bit Firefox will use the same profile, which means all your history, bookmarks, extensions, etc., will be available in either version. You’ll be able to run both versions, though not at the same time with the same profile. If you decide you don’t need both versions you can simply remove the unneeded version through the Windows system settings, as normal; your profile will not be touched when you do this.

Finally, there is a plan to gradually roll out 64-bit Firefox to Windows users in increasing numbers.

https://blog.mozilla.org/nnethercote/2016/07/29/how-to-switch-to-a-64-bit-firefox-on-windows/

|

|

Air Mozilla: Bay Area Rust Meetup July 2016 |

Bay Area Rust Meetup for July 2016. Topics TBD.

|

|

Jeff Muizelaar: Counting function calls per second |

#pragma D option quiet

dtrace:::BEGIN
{
    rate = 0;
}

profile:::tick-1sec
{
    printf("%d/sec\n", rate);
    rate = 0;
}

pid$target:XUL:*SharedMemoryBasic*Create*:entry
{
    rate++;
}

You can run this script with the following command:

$ dtrace -s $SCRIPT_NAME -p $PID

I'd be interested in knowing if anyone else has a similar technique for OSs that don't have dtrace.
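The counting logic of the script (bump a counter on every call, report and reset it once per second) can be mirrored in ordinary code. The Python sketch below is only an in-process analogue with made-up names, not a replacement for dtrace, which attaches to an already running process; the clock is injectable so the behaviour can be checked without waiting.

```python
import time


class RateCounter:
    """Count calls to hit() and record the total for each elapsed second."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._window_start = clock()
        self._count = 0
        self.rates = []  # completed per-second counts, oldest first

    def hit(self):
        now = self._clock()
        # Close every full one-second window that has elapsed.
        while now - self._window_start >= 1.0:
            self.rates.append(self._count)
            self._count = 0
            self._window_start += 1.0
        self._count += 1


# Drive the counter with a fake clock instead of real time.
fake_now = [0.0]
counter = RateCounter(clock=lambda: fake_now[0])
for t in (0.0, 0.0, 0.5, 1.2, 3.1):
    fake_now[0] = t
    counter.hit()
```

Unlike the `tick-1sec` probe, this version only notices that a second has passed when the next call arrives, so idle seconds are recorded late rather than in real time.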

http://muizelaar.blogspot.com/2016/07/counting-function-calls-per-second.html

|

|

Support.Mozilla.Org: What’s Up with SUMO – 28th July |

Hello, SUMO Nation!

July’s almost over… but our updates are not, obviously :-) How have you been? Are you melting in the shade or freezing in the sun? Maybe both? ;-) Here are the hotte… no, wait, the coolest news on the web, for your eyes only!

Welcome, new contributors!

If you just joined us, don’t hesitate – come over and say “hi” in the forums!

Contributors of the week

- Priyansu, Nayan Banik, Moin Shaikh, and DeadDrive who have rocked Twitter for SUMO – as nominated by Noah – THANK YOU!

- Cynthia, Jhonatas, and the whole Brazilian community for being super organized and mega helpful!

- All the “secret helpers” who responded to a quick l10n intervention call – you rock!

- wxie – for an unstoppable l10n spree for Chinese!

- Facyber & civas – for their Firefox for Android KB madness in Serbian – thank you!

- The forum supporters who helped users out for the last week.

- The writers of all languages who worked on the KB for the last week.

We salute you!

Most recent SUMO Community meeting

- You can read the notes here and see the video at AirMozilla.

The next SUMO Community meeting…

- …is happening on the 3rd of August!

- If you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Wednesday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Community

- PLATFORM REMINDER! The Platform Meetings are BACK! If you missed the previous ones, here is the etherpad, with links to the recordings on AirMo.

- UPDATE! We’re in the final stages of negotiations and contracting details. More details as we have them (most likely in a special blog post dedicated to just the platform).

- On a slightly less serious front… E10s is coming! (thank you, Jobava!)

- Ongoing reminder #1: if you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

- Ongoing reminder #2: we are looking for more contributors to our blog. Do you write on the web about open source, communities, SUMO, Mozilla… and more? Do let us know!

- Ongoing reminder #3: want to know what’s going on with the Admins? Check this thread in the forum.

Social

- REMINDER: A social sprint for Firefox 48 is taking place soon, stay tuned for more news soon! Repeat after me – AUGUST THE SECOND! (almost a Polish king)

- Ongoing reminder: We have a training out there for all those interested in Social Support – talk to Madalina or Costenslayer on #AoA (IRC) for more information.

Support Forum

- IMPORTANT REMINDER! Please report any fake firefox-update malware in this thread. The more evidence is found, the closer we get to solving this issue. What can you do?

- Provide URLs and copies of the fake file to antivirus partners to add it to their blocks.

- Report the web forgeries through the Google URL forgery reporting tool

- Looking for an ad server source is the key to success here (if there is one core source of this issue)

- For those who are getting ready for the new update coming up on August 2nd, see what’s new in beta and what made the cut for Version 48!

- Now, some E10s for realsies – learn more straight from Asa!

- Remember, if you’re new to the support forum, come over and say hi! We’re looking forward to seeing you in the forums for SUMO days!

Knowledge Base & L10n

- The list of high priority articles is here. Please make sure that they are localized as soon as possible – our users rely on your awesomeness!

- Firefox for iOS 5 is LIVE! Make sure you localize all the missing pieces of the KB if it’s present in your locale.

- Reminder: L10n hackathons everywhere! Find your people and get organized! If you have questions about joining, contact your global locale team.

Firefox

- for Android

- Version 48 coming August 2nd.

- for Desktop

- Version 48 coming August 2nd.

- for iOS

- 5.0 is here! You can talk about it on our forums!

Thus, between a Firefox for iOS and a Firefox for Desktop and Android released into the wild wide web – that’s a lot of heat! Now I know why it’s so easy to sweat nowadays. Well, maybe there will be some relief in the weeks between releases ;-) Winter is coming! Slowly, but surely…

Keep rocking the helpful web, SUMO Nation!

https://blog.mozilla.org/sumo/2016/07/28/whats-up-with-sumo-28th-july/

|

|

Air Mozilla: Reps weekly, 28 Jul 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Air Mozilla: Web QA Team Meeting, 28 Jul 2016 |

They say a Mozilla Web QA team member is the most fearless creature in the world. They say their jaws are powerful enough to crush...

|

|

Air Mozilla: Privacy Lab - July 2016 - Student Privacy |

Join us for presentations and a lively discussion around Student Privacy. Guest speakers include: *Alex Smolen, head of security/privacy for Clever *Andrew Rock, online privacy...

https://air.mozilla.org/privacy-lab-july-2016-student-privacy/

|

|

Christian Heilmann: Why ChakraCore matters |

People who have been in the web development world for a long time might remember A List Apart’s posts from 16 years ago talking about “Why $browser matters”. In these posts, Zeldman explained how compliance with web standards and support for new features made some browsers stand out, even if they didn’t have massive market share yet.

These browsers became the test bed for future-proof solutions. Solutions that later on already were ready for a larger group of new browsers. Functionality that made the web much more exciting than the one of old. The web we built for one browser and relied on tried-and-true, yet hacky solutions like table layouts. These articles didn’t praise a new browser with flashy new functionality. Browsers featured in this series were the ones compliant with upcoming and agreed standards. That’s what made them important.

The web thrives on diversity. Not only in people, but also in engines. We would not be where we are today if we had stuck with one browser engine. We would not enjoy the openness and free availability of our technologies if Mozilla hadn’t shown that you can be open and a success. The web thrives on user choice and on tool choice for developers.

Competition makes us better and our solutions more creative. Standardisation makes it possible for users of our solutions to maintain them. To upgrade them without having to re-write them from scratch. Monoculture brings quick success but in the long run always ends in dead code on the web that has nowhere to execute. As the controlled, solution-to-end-all-solutions changed from underneath the developers without backwards compatibility.

Today my colleague Arunesh Chandra announced at the NodeSummit in San Francisco that ChakraCore, the open source JavaScript engine of Microsoft, in part powering the Microsoft Edge browser, is available now for Linux and OS X.

This is a huge step for Microsoft, who – like any other company – is a strong believer in its own products. It also does well keeping their developers happy by sticking to what they are used to. It is nothing they needed to do to stay relevant. But it is something that is the right thing to do to ensure that the world of Node also has more choice and is not dependent on one predominant VM. Many players in the market see the benefits of Node and want to support it, but are not sold on a dependency on one JavaScript VM. A few are ready to roll out their own VMs, which cater to special needs, for example in the IoT space.

This angers a few people in the Node world. They worry that with several VMs, the “browser hell” of “supporting all kind of environment” will come to Node. Yes, it will mean having to support more engines. But it is also an opportunity to understand that by using standardised code, ratified by the TC39, your solutions will be much sturdier. Relying on specialist functionality of one engine always means that you are dependent on it not changing. And we are already seeing far too many Node based solutions that can’t upgrade to the latest version as breaking changes would mean a complete re-write.

ChakraCore matters the same way browsers that dared to support web standards mattered. It is a choice showing that to be future proof, Node developers need to be ready to allow their solutions to run on various VMs. I’m looking forward to seeing how this plays out. It took the web a few years to understand the value of standards and choice. Much rhetoric was thrown around on either side. I hope that with the great opportunity that Node is to innovate and use ECMAScript for everything we will get there faster and with less dogmatic messaging.

Photo by Ian D. Keating

https://www.christianheilmann.com/2016/07/27/why-chakracore-matters/

|

|

Daniel Stenberg: A third day of deep HTTP inspection |

This fine morning started off with some news: Patrick is now our brand new official co-chair of the IETF HTTPbis working group!

Subodh then sat down and took us off on a presentation that really triggered a long and lively discussion. “Retry safety extensions” was his name of it but it involved everything from what browsers and HTTP clients do for retrying with no response and went on to also include replaying problems for 0-RTT protocols such as TLS 1.3.

Julian did a short presentation on HTTP headers and his draft for JSON in new headers, and we quickly fell down a deep hole of discussions around various formats with ups and downs on them all. The general feeling seems to be that JSON will not be a good idea for headers in spite of a couple of good characteristics, partly because of its handling of duplicate field entries and how it handles or doesn’t handle numerical precision (i.e. you can send “100” as a monstrously large floating point number).
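The two JSON concerns mentioned above can be sketched with Python's standard `json` module. This is only an illustration of the general problem, not anything from Julian's draft; the `max-age` member name is made up for the example, and other parsers may behave differently, which is the point.

```python
import json

# The same value can be spelled several ways in JSON, and a parser may
# hand back different types for them.
spellings = ["100", "1E2", "100.0"]
parsed = [json.loads(s) for s in spellings]  # int, float, float in Python

# Integers above 2**53 cannot all be represented exactly as IEEE 754 doubles.
big = "9007199254740993"  # 2**53 + 1
as_python_int = json.loads(big)               # Python keeps this exact
as_double = json.loads(big, parse_int=float)  # emulates a double-only parser

# Duplicate member names: Python's parser silently keeps the last one.
# (The member name here is hypothetical.)
dupes = json.loads('{"max-age": 60, "max-age": 3600}')

print([type(v).__name__ for v in parsed])
print(as_python_int, int(as_double))
print(dupes)
```

A receiver that stores numbers as doubles and a receiver that keeps exact integers would disagree about the second value, and two receivers could pick different winners for the duplicated member.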

Mike did a presentation he called “H2 Regrets” in which he covered his work on a draft for support of client certs, which was basically forbidden due to h2’s ban of TLS renegotiation. He brought up the idea of extended settings and discussed the lack of special handling of dates in HTTP headers (why we send 29 bytes instead of 4). Shows there are improvements to be had in the future too!
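The “29 bytes instead of 4” remark can be made concrete with a small sketch. It assumes that the 4-byte form would be an unsigned 32-bit count of seconds since the Unix epoch; the post does not say which binary encoding was discussed, so that part is a guess.

```python
import struct
from email.utils import formatdate

timestamp = 1469620800  # an example moment on 2016-07-27, in UTC

# The textual date format used in HTTP headers today.
text_form = formatdate(timestamp, usegmt=True).encode("ascii")

# Assumed compact alternative: seconds since the epoch as a big-endian
# unsigned 32-bit integer.
binary_form = struct.pack("!I", timestamp)

print(text_form, len(text_form))
print(binary_form.hex(), len(binary_form))
```

Both forms carry the same one-second resolution; the difference is purely in how many bytes go on the wire.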

Martin talked to us about Blind caching and how the concept works. Put very simply: it is a way to make it possible to offer cached content for clients using HTTPS, by storing the data on a third host and pointing the client to that data. There was a lengthy discussion around this and I think one of the outstanding questions is whether this feature really gives enough value to motivate the rather high cost in complexity…

The list of remaining Lightning Talks had grown to 10 talks and we fired them all off at a pace of five minutes per topic. I brought up my intention and hope that we’ll do a QUIC library soon to experiment with. I personally particularly enjoyed EKR’s TLS 1.3 status summary. I heard appreciation from others, and I agree that the idea to feature lightning talks was really good.

With this, the HTTP Workshop 2016 was officially ended. There will be a survey sent out about this edition and what people want to do for the next/future ones, and there will be some sort of report posted about this event from the organizers, summarizing things.

Attendees numbers

The companies with most attendees present here were: Mozilla 5, Google 4, Facebook, Akamai and Apple 3.

The attendees were from the following regions of the world: North America 19, Europe 15, Asia/Pacific 6.

38 participants were male and 2 female.

23 of us were also at the 2015 workshop, 17 were newcomers.

15 people did lightning talks.

I believe 40 is about as many as you can put in a single room and still have discussions. Going larger would make it harder to make yourself heard and would probably force us to switch to smaller groups more often, and thus not get this sort of great dynamic flow. I’m not saying that we can’t do this smaller or larger, just that it would make the event different.

Some final words

I had an awesome few days and I loved all of it. It was a pleasure organizing this and I’m happy that Stockholm showed its best face weather wise during these days. I was also happy to hear that so many people enjoyed their time here in Sweden. The hotel and its facilities, including food and coffee etc worked out smoothly I think with no complaints at all.

Hope to see you again at the next HTTP Workshop!

https://daniel.haxx.se/blog/2016/07/27/a-third-day-of-deep-http-inspection/

|

|

Air Mozilla: The Joy of Coding - Episode 65 |

mconley livehacks on real Firefox bugs while thinking aloud.

|

|

Mozilla Reps Community: Rep of the Month – July 2016 |

Please join us in congratulating Christophe Villeneuve as Rep of the Month for July 2016!

Christophe Villeneuve has been a Rep for more than 9 months now and has reported more than 100 activities in the program. From talks on security to writing articles and organizing events, Christophe is active in many different areas. Did we already mention that he also bakes Firefox cookies?

His energy and drive to promote the Open Web and web security are astonishing. Even when external factors intervene and some of his activities get blocked, he neither gets disappointed nor quits; he looks for the next possibility out there. He truly is an open source believer, contributing to other open source communities (like Drupal, PHP, MariaDB) as well, and he tries to combine those activities for bigger audiences.

Please don’t forget to congratulate him on Discourse!

https://blog.mozilla.org/mozillareps/2016/07/27/rep-of-the-month-july-2016/

|

|

Mozilla Addons Blog: Linting and Automatically Reloading WebExtensions |

We recently announced web-ext 1.0, a command line tool that makes developing WebExtensions more of a breeze. Since then we’ve fixed numerous bugs and added two new features: automatic extension reloading and a way to check for “lint” in your source code.

You can read more about getting started with web-ext or jump in and install it with npm like this:

npm install --global web-ext

Automatic Reloading

Once you’ve built an extension, you can try it out with the run command:

web-ext run

This launches Firefox with your extension pre-installed. Previously, you would have had to manually re-install your extension any time you changed the source. Now, web-ext will automatically reload the extension in Firefox when it detects a source file change, making it quick and easy to try out a new icon or fiddle with the CSS in your popup until it looks right.

Automatic reloading is only supported in Firefox 49 or higher but you can still run your extension in Firefox 48 without it.

Checking For Code Lint

If you make a mistake in your manifest or any other source file, you may not hear about it until a user encounters the error or you try submitting the extension to addons.mozilla.org. The new lint command will tell you about these mistakes so you can fix them before they bite you. Run it like this:

web-ext lint

For example, let’s say you are porting an extension from Chrome that used the history API, which hasn’t fully landed in Firefox at the time of this writing. Your manifest might declare the history permission like this:

{
  "manifest_version": 2,
  "name": "My Extension",
  "version": "1.0",
  "permissions": [
    "history"
  ]
}

When running web-ext lint from the directory containing this manifest, you’ll see an error explaining that history is an unknown permission.
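To give a feel for what such a permission check involves, here is a minimal sketch in Python. It is not how web-ext implements linting; the `KNOWN_PERMISSIONS` set and the `find_unknown_permissions` helper are hypothetical and exist only for this illustration.

```python
import json

# Hypothetical allow-list for illustration only. The real set of supported
# permissions is defined by Firefox and web-ext, not by this sketch.
KNOWN_PERMISSIONS = {"tabs", "storage", "cookies"}


def find_unknown_permissions(manifest_text, known=KNOWN_PERMISSIONS):
    """Return the declared permissions that are not in the known set."""
    manifest = json.loads(manifest_text)
    declared = manifest.get("permissions", [])
    return [name for name in declared if name not in known]


manifest_text = """
{
  "manifest_version": 2,
  "name": "My Extension",
  "version": "1.0",
  "permissions": ["history"]
}
"""

unknown = find_unknown_permissions(manifest_text)
for name in unknown:
    print(f"unknown permission: {name}")
```

The real lint command checks far more than permissions, so treat this only as a picture of the kind of mistake it catches early.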

Try it out and let us know what you think. As always, you can submit an issue if you have an idea for a new feature or if you run into a bug.

https://blog.mozilla.org/addons/2016/07/27/linting-and-automatically-reloading-webextensions/

|

|

Air Mozilla: Weekly SUMO Community Meeting July 27, 2016 |

This is the sumo weekly call

https://air.mozilla.org/weekly-sumo-community-meeting-july-27-2016/

|

|

Dave Hunt: A Summer to Mentor |

This summer I am mentoring Justin Potts – a university intern working on improving Mozilla’s add-ons related test automation, and Ana Ribeiro – an Outreachy participant working on enhancing the pytest-html plugin.

It’s not my first time working with Justin, who has been a regular team contributor for a few years now, and last summer helped me to get the pytest-selenium plugin released. It certainly helped to have previous experience working with Justin when deciding to take on the official role as mentor for his first internship. Unfortunately, his project is rather difficult to define, as he’s been working on a number of things, though mostly they are related to Firefox add-ons and running automated tests. There’s no shortage of challenging tasks for Justin to work on, and he’s taking them on with the enthusiasm that I expected he would. You can read more about Justin’s internship on his blog.

Ana’s project grew out of a security exploit discovered in Jenkins, which led to the introduction of the Content-Security-Policy header for static files being served. This meant that the fancy HTML reports generated by pytest-html were broken due to the use of JavaScript, inline CSS, and inline images. Along with a few other enhancements, providing a CSP-friendly report from the plugin became a perfect candidate project for Outreachy. As part of her application, Ana contributed a patch for pytest-variables, and I was impressed with her level of communication over the patch. To get Ana familiar with the plugin, her initial contributions were not related to the CSP issue, but she’s now making good progress on this. You can read more about Ana’s Outreachy project on her dedicated blog.
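As a rough illustration of why a self-contained HTML report runs into trouble under a restrictive policy, the sketch below scans a page for the kinds of inline content mentioned above. It is a heuristic written for this note, not code from pytest-html or Jenkins; the `InlineContentFinder` class and the sample markup are hypothetical, and whether each item is actually blocked depends on the policy being served.

```python
from html.parser import HTMLParser


class InlineContentFinder(HTMLParser):
    """Collect inline scripts, styles and data: images found in a page."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "script" and "src" not in attrs:
            self.findings.append("inline script")
        if tag == "style":
            self.findings.append("inline style element")
        if "style" in attrs:
            self.findings.append(f"style attribute on <{tag}>")
        if tag == "img" and (attrs.get("src") or "").startswith("data:"):
            self.findings.append("data: URI image")


def find_inline_content(html):
    finder = InlineContentFinder()
    finder.feed(html)
    return finder.findings


# Made-up report markup, for illustration only.
report = (
    "<html><head><style>td{color:red}</style></head><body>"
    "<script>toggle()</script>"
    '<img src="data:image/png;base64,AAAA">'
    '<p style="margin:0">ok</p></body></html>'
)

findings = find_inline_content(report)
for item in findings:
    print(item)
```

A CSP-friendly report would instead reference its scripts, stylesheets and images as separate files that the policy allows.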

So far I have mostly enjoyed the experience of being a mentor – it especially feels great to see the results that Justin and Ana are producing. Probably the most challenging aspect for me is being remote – Justin is based in Mountain View, California, and Ana is based in Brazil. It’s hard to feel a connection when you’re dependent on instant messages and video conferencing, though I suspect it’s probably harder for them than it is for me. Fortunately, I did get to work with them a little in London during the all hands, and then some more with Ana in Freiburg during the pytest sprint.

There are still a few weeks left for their projects, and I’m hoping they’ll both be able to conclude them to their satisfaction!

|

|

Myk Melez: a basic browser app in Positron |

Over in Positron, we’ve implemented enough of Electron’s

git clone https://github.com/hokein/electron-sample-apps

git clone https://github.com/mozilla/positron

cd positron

./mach build

./mach run ../electron-sample-apps/webview/browser/

https://mykzilla.org/2016/07/27/a-basic-browser-app-in-positron/

|

|

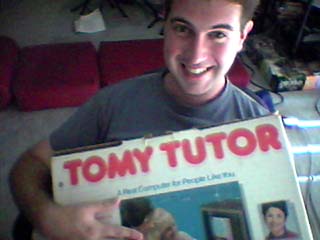

Cameron Kaiser: And now for something completely different: Join me at Vintage Computer Festival XI |

A programming note: My wife and I will be at the revised, resurrected Vintage Computer Festival XI August 6 and 7 in beautiful Mountain View, CA at the Computer History Museum (just down the street from the ominous godless Googleplex). I'll be demonstrating my very first home computer, the Tomy Tutor (a weird partial clone of the Texas Instruments 99/4A), and its Japanese relatives. Come by, enjoy the other less interesting exhibits, and bask in the nostalgic glow when 64K RAM was enough and cassette tape was king.

I'm typing this in a G5-optimized build of 45 and it seems to perform pretty well. JavaScript benches over 20% faster than 38 due to improvements in the JIT (and possibly some marginal improvement from gcc 4.8), and this is before I start doing further work on PowerPC-specific improvements which will be rolled out during 45's lifetime. Plus, the AltiVec code survived without bustage in our custom VP8, UTF-8 and JPEG backends, and I backported some graphics performance patches from Firefox 48 that improve throughput further. There's still a few glitches to be investigated; I spent most of tonight figuring out why I got a big black window when going to fullscreen mode (it turned out to be several code regressions introduced by Mozilla removing old APIs), and Amazon Music still has some weirdness moving from track to track. It's very likely there will be other such issues lurking next week when you get to play with it, but that's what a beta cycle is for.

38.10 will be built over the weekend after I'm done doing the backports from 45.3. Stay tuned for that.

http://tenfourfox.blogspot.com/2016/07/and-now-for-something-completely.html

|

|

Eric Shepherd: MDN pro tip: Watch for changes |

The Web moves pretty fast. Things are constantly changing, and the documentation content on the Mozilla Developer Network (MDN) is constantly changing, too. The pace of change ebbs and flows, and often it can be helpful to know when changes occur. I hear this most from a few categories of people:

- Firefox developers who work on the code which implements a particular technology. These folks need to know when we’ve made changes to the documentation so they can review our work and be sure we didn’t make any mistakes or leave anything out. They often also like to update the material and keep up on what’s been revised recently.

- MDN writers and other contributors who want to ensure that content remains correct as changes are made. With so many people making changes to some of our content, it is important to keep up and be sure that mistakes aren’t made and that style guides are followed.

- Contributors to specifications and members of technology working groups. These are people who have a keen interest in knowing how their specifications are being interpreted and implemented, and in the response to what they’ve designed. The text of our documentation and any code samples, and changes made to them, may be highly informative for them to that end.

- Spies. Ha! Just kidding. We’re all about being open in the Mozilla community, so spies would be pretty bored watching our content.

There are a few ways to watch content for changes, from the manual to the automated. Let’s take a look at the most basic and immediately useful tool: MDN page and subpage subscriptions.

Subscribing to a page

After logging into your MDN account (creating one if you don’t already have one), make your way to the page you want to subscribe to. Let’s say you want to be sure nobody messes around with the documentation about

https://www.bitstampede.com/2016/07/26/mdn-pro-tip-watch-for-changes/

|

|