Добавить любой RSS - источник (включая журнал LiveJournal) в свою ленту друзей вы можете на странице синдикации.

Исходная информация - http://planet.mozilla.org/.

Данный дневник сформирован из открытого RSS-источника по адресу http://planet.mozilla.org/rss20.xml, и дополняется в соответствии с дополнением данного источника. Он может не соответствовать содержимому оригинальной страницы. Трансляция создана автоматически по запросу читателей этой RSS ленты.

По всем вопросам о работе данного сервиса обращаться со страницы контактной информации.

[Обновить трансляцию]

Roberto A. Vitillo: Longitudinal studies with Telemetry |

Unified Telemetry (UT) replaced both Telemetry and FHR at the end of last year. FHR has been historically used to answer longitudinal questions, such as churn, while Telemetry has mainly been used for performance studies.

In UT-land, multiple self-contained submissions are generated for a profile over time in contrast to FHR for which submissions contained all historical records. With the new format, a typical longitudinal query on the raw data requires conceptually a big group-by on the profile ID over all submissions to recreate the history for each profile. To avoid the expensive grouping operation we are providing a longitudinal batch view of our Telemetry dataset.

The longitudinal view is logically organized as a table where rows represent profiles and columns the various metrics (e.g. startup time). Each field of the table contains a list of chronologically sorted values, one per Telemetry submission received for that profile. Even though each profile could have been represented as an array of structures with Parquet, ultimately we decided to represent it as a structure of arrays to deal with some inefficiencies in reading nested data from Spark.

The dataset is periodically regenerated from scratch, which allows us to apply non backward compatible changes to the schema and not worry about merging procedures.

The dataset can be accessed from Mozilla’s Spark clusters as a DataFrame:

frame = sqlContext.sql(SELECT * FROM longitudinal)

The view contains several thousand measures, which include all histograms and a large part of our scalar metrics stored in the various sections of Telemetry submissions.

frame.printSchema()

root |-- profile_id: string |-- normalized_channel: string |-- submission_date: array | |-- element: string |-- build: array | |-- element: struct | | |-- application_id: string | | |-- application_name: string | | |-- architecture: string | | |-- architectures_in_binary: string | | |-- build_id: string | | |-- version: string | | |-- vendor: string | | |-- platform_version: string | | |-- xpcom_abi: string | | |-- hotfix_version: string ...

A Jupyter notebook with example queries that use the longitudinal dataset is available on Spark clusters launched from a.t.m.o.

http://robertovitillo.com/2016/03/21/longitudinal-studies-with-telemetry/

|

|

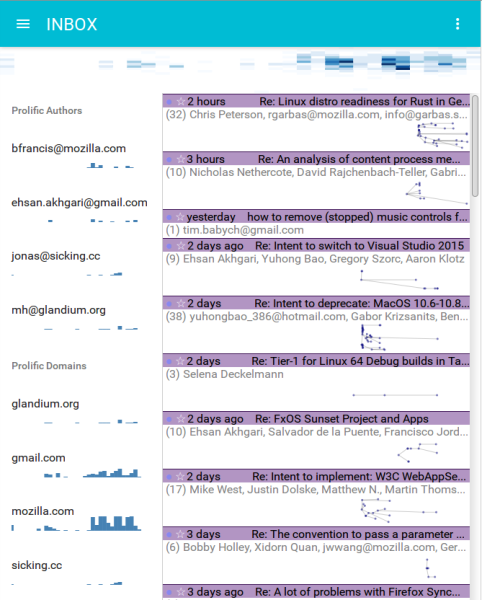

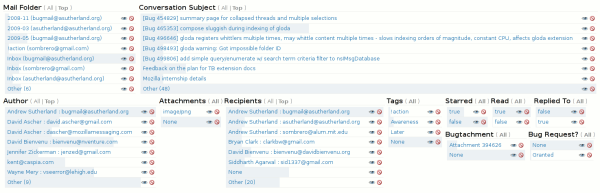

Andrew Sutherland: Web Worker-assisted Email Visualizations using Vega |

tl;dr: glodastrophe, the experimental entirely-client-side JS desktop-ish email app now supports Vega-based visualizations in addition to new support infrastructure for extension-y things and creating derived views based on the search/filter infrastructure.

Two of the dreams of Mozilla Messaging were:

- Shareable email workflows (credit to :davida). If you could figure out how to set up your email client in a way that worked for you, you should be able to share that with others in a way that doesn’t require them to manually duplicate your efforts and ideally without you having to write code. (And ideally without anyone having to review code/anything in order to ensure there are no privacy or security problems in the workflow.)

- Useful email visualizations. While in the end, the only visualization ever shipped with Thunderbird was the simple timeline view of the faceted global search, various experiments happened along the way, some abandoned. For example, the following screenshot shows one of the earlier stages of faceted search development where each facet attempted to visualize the relative proportion of messages sharing that facet.

At the time, the protovis JS visualization library was the state of the art. Its successor the amazing, continually evolving d3 has eclipsed it. d3, being a JS library, requires someone to write JS code. A visualization written directly in JS runs into the whole code review issue. What would be ideal is a means of specifying visualizations that is substantially more inert and easy to sandbox.

Enter, Vega, a visualization grammar that can be expressed in JSON that can not only define “simple” static visualizations, but also mind-blowing gapminder-style interactive visualizations. Also, it has some very clever dataflow stuff under the hood and builds on d3 and its well-proven magic. I performed a fairly extensive survey of the current visualization, faceting, and data processing options to help bring visualizations and faceted filtered search to glodastrophe and other potential gaia mail consumers like the Firefox OS Gaia Mail App.

Digression: Two relevant significant changes in how the gaia mail backend was designed compared to its predecessor Thunderbird (and its global database) are:

- As much as can possibly be done in a DOM/Web Worker(s) is done so. This greatly assists in UI responsiveness. Thunderbird has to do most things on the main thread because of hard-to-unwind implementation choices that permeate the codebase.

- It’s assumed that the local mail client may only have a subset of the messages known to the server, that the server may be smart, and that it’s possible to convince servers to support new functionality. In many ways, this is still aspirational (the backend has not yet implemented search on server), but the architecture has always kept this in mind.

In terms of visualizations, what this means is that we pre-chew as much of the data in the worker as we can, drastically reducing both the amount of computation that needs to happen on the main (page) thread and the amount of data we have to send to it. It also means that we could potentially farm all of this out to the server if its search capabilities are sufficiently advanced. And/or the backend could cache previous results.

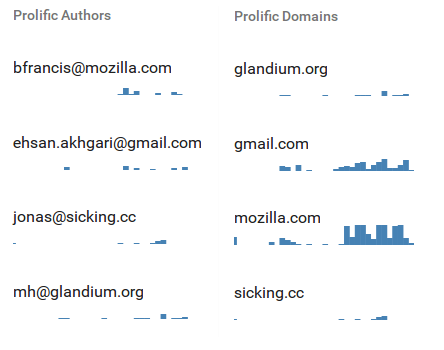

For example, in the faceted visualizations on the sidebar (placed side-by-side here):

In the “Prolific Authors” visualization definition, the backend in the worker constructs a Vega dataflow (only!). The search/filter mechanism is spun up and the visualization’s data gathering needs specify that we will load the messages that belong to each conversation in consideration. Then for each message we extract the author and age of the message and feed that to the dataflow graph. The data transforms bin the messages by date, facet the messages by author, and aggregate the message bins within each author. We then sort the authors by the number of messages they authored, and limit it to the top 5 authors which we then alphabetically sort. If we were doing this on the front-end, we’d have to send all N messages from the back-end. Instead, we send over just 5 histograms with a maximum of 60 data-points in each histogram, one per bin.

Same deal with “Prolific domains”, but we extract the author’s mail domain and aggregate based on that.

Similarly, the overview Authored content size over time heatmap visualization sends only the aggregated heatmap bins over the wire, not all the messages. Elaborating, for each message body part, we (now) compute an estimate of the number of actual “fresh” content bytes in the message. Anything we can detect as a quote or a mailing list footer or multiple paragraphs of legal disclaimers doesn’t count. The x-axis bins by time; now is on the right, the oldest considered message is on the left. The y-axis bins by the log of the authored content size. Messages with zero new bytes are at the bottom, massive essays are at the top. The current visualization is useless, but I think the ingredients can and will be used to create something more informative.

Other notable glodastrophe changes since the last blog post:

- Front-end state management is now done using redux

- The Material UI React library has been adopted for UI widget purposes, though the conversation and message summaries still need to be overhauled.

- React was upgraded

- A war was fought with flexbox and flexbox won. Hard-coding and calc() are the only reason the visualizations look reasonably sized.

- Webpack is now used for bundling in order to facilitate all of these upgrades and reduce potential contributor friction.

More to come!

https://www.visophyte.org/blog/2016/03/21/web-worker-assisted-email-visualizations-using-vega/

|

|

This Week In Rust: This Week in Rust 123 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us an email! Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

This week's edition was edited by: Vikrant and llogiq.

Updates from Rust Community

News & Blog Posts

- [discussion] What, in your opinion is the worst thing about Rust?.

- The epic story of Dropbox’s exodus from the amazon cloud empire (feat. Rust).

- Servo is going to have an alpha release in June: Servo + browser.html.

- Learn you a Rust II - references and borrowing.

- Mozilla looks towards the IoT for Rust.

- Working with C unions in Rust FFI.

- [pdf] Fuzzing the Rust typechecker using CLP.

- This week in Servo 55.

Notable New Crates & Project Updates

- Gtk-rs released 0.0.7 with major changes.

- RustType 0.2 - Now with dynamic GPU font caching.

- libs.rs. A catalogue of Rust community's awesomeness.

- dryad.so.1 - an experimental x86-64 ELF dynamic linker, written entirely in Rust (and some asm)

- Termion (previously called libterm) now supports 256 colour mode, asynchronous key events, special key events, and password input.

Summer of Code Projects

Hi students! Looking for an awesome summer project in Rust? Look no further! Chris Holcombe from Canonical is an experienced Google Summer of Code mentor and has a project to implement CephX protocol decoding. Check it out here.

Servo is also accepting GoSC project submissions under the Mozilla banner. See if any of the project ideas appeal to you and read the advice for applications.

Servo also has a project in Rails Girls Summer of Code. nom is also participating.

Crate of the Week

This week's Crate of the Week is tempfile, a crate that does exactly what it says on the tin. Thanks to Steven Allen for the suggestion!

Submit your suggestions for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

- [easy] Rust: OsString could implement the Default trait.

- [easy] Rust: Backtrace contains function names with MIR, but not on MSVC.

- [less easy] Servo: Write a tool that reports unnecessary crate dependencies.

- [easy] Servo/Saltfs: Trim down the symlinks to ARM cross-compilation binaries.

- [easy]

cargo add: Target specifications. - [easy]

cargo list: More tests. - [easy/mentored]

multipart: create sample projects

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

103 pull requests were merged in the last week.

Notable changes

- RFC 1210 impl specialization has landed! Yay!

- MIR is now able to bootstrap itself...into orbit!

- typestrong const integers (breaking change)

- Fast floating point algebra

- #derive now uses intrinsics::unreachable

- *Map-Entries now have a

.key()method - Rustc platform intrinsics went on a slimming diet

rustc --test -q: Shorter test output- LLVM assertions disabled on ARM to workaround codegen bug

- Another LLVM update

- Fix an ICE in region inference

- No *into_ascii methods after all (destabilized and removed because of inference regression)

- Warn, not err on inherent overlaps (for now, this will become an error in later versions)

- coercions don't kill rustc anymore

- const_eval now correctly handles right shifts

- Fix overflow when subtracting Instant

New Contributors

- Daniel J Rollins

- pravic

- Stu Black

- tiehuis

- Todd Lucas

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

- RFC 1479: Unix socket support in the standard library.

- RFC 1498: Add octet-oriented interface to

std::net::Ipv6Addr. - RFC 1434: Implement a method,

contains(), forRange,RangeFrom, andRangeTo, checking if a number is in the range.. - RFC 1432: Add a

replace_slicemethod toVecandStringwhich removes a range of elements, and replaces it in place with a given sequence of values.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

- Unsafe expressions.

- Prevent unstable items from causing name resolution conflicts with downstream code.

- Add support for naked functions.

- Expand the current pub/non-pub categorization of items with the ability to say "make this item visible solely to a (named) module tree".

New RFCs

- Allow fields in traits that map to lvalues in

impl'ing type. as_millisfunction onstd::time::Duration.- Add

global_asm!for module-level inline assembly.

Upcoming Events

- 3/22. Bay Area Rust: Rust, Data, and Science.

- 3/23. OpenTechSchool Berlin: Rust Hack and Learn.

- 3/31. Tokyo Rust Meetup #4.

- 4/15. Frankfurt/Main Rust Lint Workshop

If you are running a Rust event please add it to the calendar to get it mentioned here. Email Erick Tryzelaar or Brian Anderson for access.

fn work(on: RustProject) -> Money

- PhD and postdoc positions at MPI-SWS.

- Rust developer at The Blackbird.

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

ok and what trait is from from? From :D ok :D so from is from From nice!

Thanks to Thomas Winwood for the suggestion.

Submit your quotes for next week!

https://this-week-in-rust.org/blog/2016/03/21/this-week-in-rust-123/

|

|

Cameron Kaiser: The practicality of the case for TenSixFox |

TenFourFox gets cited a lot as a successful example of a community-driven Firefox fork (to Tiger PowerPC, for those on our syndicated sites who are unfamiliar), and we're usually what gets pointed to when some open-source project (including Firefox) stops supporting a platform. However, even though we have six years so far of successful ports in the can, we're probably not a great example anymore. While we've had major contributors in the past -- Tobias and Ben in particular, but others as well -- and Chris T still volunteers his time in user support and herds the localizer cats, pretty much all the code and porting work is done entirely by yours truly these days. It's just not the community it was, and it's a lot of work for me personally. Nevertheless, our longevity means the concept of a "TenSixFox" gets brought up periodically with expectations of the same success, as Dan did in his first piece, and as a result a lot of people assume that our kind of lightning will simply strike twice.

Unfortunately, even if you found someone as certifiably insane highly motivated as your humble narrator willing to do it, the tl;dr is that a TenSixFox can't be accomplished in the same way TenFourFox was. Restoring 10.4 compatibility to Firefox is, at the 50,000' level, a matter of "merely" adding back the code Mozilla removed to the widget and graphics modules (primarily between Firefox 3.6 and 4, though older code has been gradually purged since then). The one thing that could have been problematic in the beginning was font support, since Tiger's CoreText is inadequate for handling text runs of the complexity required and the ATSUI support had too many bugs to keep it working, but Mozilla solved that problem for us by embedding a font shaper for its own use (Harfbuzz) which we adopted exclusively. After that, as versions ticked along, whatever new features they introduced that we couldn't support we just ruthlessly disabled (WebGL, WebRTC, asm.js, etc.), but the "core" of the browser, the ability to interpret JavaScript, display HTML and handle basic chrome, continued to function. Electrolysis fundamentally changes all of that by introducing a major, incompatible and (soon to be) mandatory change to the browser's basic architecture. It already has serious issues on 10.6 and there is nothing that says those issues are fixable or avoidable; it may well be impossible to get multi-process operational on Snow Leopard without significant showstoppers. That's not a recipe for continued functionality, and there is no practical way of maintaining single-process support once it disappears because of how pervasive the changes required will eventually be.

Plus, there's another technical problem as well. Currently Firefox can't even be built on 10.6; as of this writing the minimum supported compiler is clang 4.2, which means Xcode 4.6 at minimum, which requires 10.8. This isn't a showstopper yet because cross-building is a viable if inconvenient option, but it makes debugging harder, and since 10.8 is being dropped too it is probable that the Xcode minimum requirement will jump as well.

So if you're that nuts enthusiastic, and you have the chops, what are your options?

Option 1 is to keep using TenFourFox on Rosetta, with all the performance and functionality limitations that would imply. Let me reiterate that I don't support Intel Macs and I still have no plans to; this project is first and foremost for Power Macs. I know some of you do run it on 10.6 for yuks and some of you even do so preferentially, but there's not going to be another Intel build of TenFourFox unless someone wants to do it themselves. That brings us to ...

Option 2 is to build TenFourFox natively for 10.6. I currently only support linking against the 10.4 SDK because of all the problems we had trying to maintain a codebase linkable against 10.4 and 10.5 (Tobias used to do this for AuroraFox but that support is long gone). Fortunately, Xcode 3 will still allow this and you can build and debug directly on a Snow Leopard system; plus, since the system minimum will be 10.6 anyway, you'll dodge issue 209 and you have the option of 10.5 compatibility too. You'll have to get all the MacPorts prerequisites including gcc 4.8, enable the x86 JIT again in JavaScript and deal with some of our big-endian code for fast font table enumeration and a couple other things (the compiler will throw an error so you'll know where to make the changes), but the rest of it should "just work." The downside is that, since you are also linking against the 10.4 SDK (regardless of your declared minimum OS support), you'll also have all the limitations of TenFourFox because you're actually porting it, not Firefox (no WebGL, no plugins, no WebRTC, no CoreText shaper; and, because we use a hybrid of Carbon and Cocoa code, you're limited to a 32-bit build). On the other hand, you'll get access to our security updates and core improvements, which I plan to keep making for as long as I personally use TenFourFox, so certain things will be more advanced than the next option(s), which are ...

Option 3 is to keep building 45ESR for 10.6 after 45ESR support terminates by continuing to port security updates. You won't need to make any changes to the code to get the build off the ground, but you'll need both 10.6 and 10.8 systems to debug and build respectively, and every six weeks you'll have to raid the current ESR branch and backport the changes. You won't get any of the TenFourFox patches and core updates unless you manually add them yourself (and debug them, since we won't support you directly). However, you'll have the option of a 64-bit build and you'll have all the supported 10.6 features as well as support for 10.7 and 10.8.

Finally, option 4 is to keep building firefox-release after 45ESR for 10.6 until it doesn't work anymore. Again, you'll need both 10.6 and 10.8 systems to be effective. This option would get you the most current browser in terms of technology, and even if Electrolysis is currently the default, as long as single-process mode is still available (such as in Safe Mode or for accessibility) you should be able to hack the core to always fall back to it. However, now that 10.6-10.8 support is being dropped, you'll have to undo any checks to prevent building and running on those versions and you might not get many more release cycles before other things break or fail to build. Worst of all, once you get marooned on a particular version between ESRs you'll find backporting security patches harder since you're on a branch that's neither fish nor fowl (from personal experience).

That's about the size of it, and I hope it makes it clear TenFourFox and any putative TenSixFox will exist in very different technological contexts. By the time 45ESR support ends, TenFourFox will have maintained source parity with Firefox for almost seven years and kept up with most of Gecko's major technology advancements, and even the very last G5 to roll off the assembly line will have had 11 years of support. I'm pretty proud of that, and I feel justifiably so. Plus, my commitment to the platform won't end there when we go to feature parity; we'll be keeping a core that can still handle most current sites for at least a couple years to come, because I'll be still using it.

TenSixFox, on the other hand, even if it ends up existing, will lack source parity with Firefox just about from its very beginning. It's not going to be the technological victory that we were at our own inception in 2010, and it's not likely to be the success story we've been for that and other important reasons. That doesn't mean it's not worth it, just that there will be some significant compromises and potential problems, and you'd have to do a substantial amount of work now and in the future to keep it viable. For many of you, that may not be worth it, but for a couple of you, it just might be.

http://tenfourfox.blogspot.com/2016/03/the-practicality-of-case-for-tensixfox.html

|

|

Daniel Stenberg: curl is 18 years old tomorrow |

Another notch on the wall as we’ve reached the esteemed age of 18 years in the cURL project. 9 releases were shipped since our last birthday and we managed to fix no less than a total of 457 bugs in that time.

On this single day in history…

20,000 persons will be visiting the web site, transferring over 4GB of data.

1.3 bug fixes will get pushed to the git repository (out of the 3 commits made)

300 git clones are made of the curl source tree, by 100 unique users.

4000 curl source archives will be downloaded from the curl web site

8 mails get posted on the curl mailing lists (at least one of them will be posted by me).

I will spend roughly 2 hours on curl related work. Mostly answering mail, bug reports and debugging, but also maintaining infrastructure, poke on the web site and if lucky, actually spending a few minutes writing new code.

Every human in the connected world will use at least one service, tool or application that runs curl.

Happy birthday to us all!

https://daniel.haxx.se/blog/2016/03/19/curl-is-18-years-old-tomorrow/

|

|

Benjamin Kerensa: Glucosio Named Top Open Source Project |

Last year, I was diagnosed with Type 2 diabetes and my life was pretty much flipped upside down for awhile. One thing I immediately set out to do was to find software, preferably open source, that would help me get on track and have an improved health outcome.

There was a lot of software out there but I did not find any that aligned with my needs as a person with diabetes. Instead, I found a lot of mobile apps and software built by companies that put profit first and were not driven by the needs of people with diabetes.

Right then I came up with the idea to start an open source project that made cross-platform apps (iOS, Android, Desktop, Web etc) with the focus of improving the health outcomes of people with diabetes and supporting research. But there were already two great open source projects out there like Nightscout and Tidepool, so why start our own?

Simply put, I wanted to do something different as I’ve seen this great divide in the diabetes community where not only are things like communities, podcasts and advocates divided around what type of diabetes a person has, but also the two open source projects out there were focused only on people with Type 1 diabetes. This is problematic because Type 2 diabetes is left outside of these intentional Type 1 diabetes circles when we should be working together to solve both types of diabetes and pooling our resources together to advocate for an end to both types of diabetes.

Glucosio for Android

Glucosio for AndroidAnd so Glucosio was born with the vision that an app was needed that benefits both people with Type 1 and Type 2 diabetes. Not focusing on one or the other, but instead giving equal energy to features that will benefit both types. Our vision was that this new open source project will unite people who have Type 1 or Type 2 diabetes or know someone who does to contribute to the project and in turn help accelerate research for both types while at the same time helping those with diabetes keep track of things that affect their health outcomes.

Last year was a lot of work for the entire Glucosio team. We worked hard to build awesome open source software for people with Type 1 and Type 2 diabetes and we pulled it off. Glucosio for Android is the first of our diabetes apps you can download today but we also have Glucosio for iOS and Glucosio for Web and an API for researchers that are all being actively worked on.

It is our hope to also have a cross-platform desktop app (OSX, Windows and Linux) in the future as more contributors join to contribute.

And this week, we were excited to announce that Glucosio following in the footsteps of some pretty stellar projects like Docker, Ghost and others has been named one of Black Duck Software’s Top Open Source Projects of 2015.

If you are interested contributing some code, documentation, design, UX or even money (to the Glucosio Foundation) to the effort of helping millions who struggle with diabetes worldwide, we’d love to have your support but you can also spread the word about our project by tweeting or sharing our project site or connecting with us over social media.

|

|

Chris Cooper: RelEng & RelOps Weekly highlights - March 18, 2016 |

After the huge push last week to realize our first beta release using build promotion, there’s not a whole lot of new work to report on this week. We continue to polish the build promotion process in the face of a busier-than-normal week in terms of operational work.

Release:

Firefox 46.0 beta 1 was finally released to world last week, and the ninth rebuild was, in fact, the charm. As the first release attempted using the new build promotion process, this is a huge milestone for Mozilla releases.

As proof we’re getting better, Firefox 46.0 beta 2 was released this week using the same build promotion process and only required three build iterations. Progress!

This was also a week for dot releases, with security releases in the pipe for Firefox 45 and our extended support release (ESR) versions.

Kim just stepped into the releaseduty rotation for the Firefox 46 cycle. Kudos to Mihai for fixing up the releaseduty docs during his rotation so the process is easy to step into! We released Firefox 45.0esr, Firefox 46.0b1, Thunderbird 38.7.0 and Firefox 38.7.0esr with several other releases in the pipeline. See the notes for details:

- https://wiki.mozilla.org/Releases:Release_Post_Mortem:2016-03-16

- https://wiki.mozilla.org/Releases:Release_Post_Mortem:2016-03-23

Operational:

There is new capacity in AWS for our Linux64 and Emulator 64 jobs thanks to Vlad and Kim’s work in bug 1252248.

Alin and Amy moved 10 WinXP machines to the Windows 8 pool to reduce pending counts on that platform. (bug 1255812)

Kim removed all the code used to run our panda infrastructure from our repos in bug 1186617. Amy is in the process of decommissioning the associated hardware in bug 1189476.

Speaking of Amy, she received a much-deserved promotion this week. To quote from Lawrence’s announcement email:

“I’m excited to promote Amy Rich into the newly created position of Head of Operations [for Platform Operations]. This new role, which reports directly to me, expands the purview of her existing systems ops team, and includes assisting me with more management leadership responsibility.

“Amy’s unique mix of skills make her a great fit for this role. She has a considerable systems engineering background, and she and her team have been responsible for greatly improving our release infrastructure over the past five years. As a people manager, her commitment to both individuals and the big picture engenders loyalty, respect, and admiration. She is inquisitive and reflective, bringing strategic perspective to decision-making processes such as setting the relative priority between TaskCluster migration and Windows 7 in AWS. As a leader, she has recently stepped up to shepherd projects aimed at creating a more cohesive Platform Operations team, and she is also assisting with Mozilla’s company-wide Diversity & Inclusion strategy.

“Amy’s team will focus on systems automation, lifecycle, and operations support. This involves taking on systems ops responsibilities for Engineering Productivity (VCS, MozReview, MXR/DXR, Bugzilla, and Treeherder) in addition to those of Release Engineering. The long-term vision for this team is that they will support the systems ops needs for all of Platform Operations.

Please join me in congratulating Amy on her new role!”

Indeed! Congratulations, Amy!

See you next week!

|

|

Karl Dubost: [worklog] Python code review, silence for some bugs. |

Seen a lot of discussions about Ads blockers. It seems it's like pollen fever, these discussions are seasonal and recurrent. I have a proposal for the Web and its publishing industry. Each time, you are using the term in an article Ad Blocker, replace it by Performance Enhancer or Privacy Safeguard and see if your article still makes sense. Tune of the week: Feeling Good — Nina Simone.

Webcompat Life

Progress this week:

Today: 2016-03-18T18:14:59.820644 450 open issues ---------------------- needsinfo 9 needsdiagnosis 130 needscontact 95 contactready 84 sitewait 104 ----------------------

You are welcome to participate

- Some thoughts about Mozilla Outreachy program.

- HTTP HEAD and its support

Webcompat issues

(a selection of some of the bugs worked on this week).

- Sometimes trying to get Apple attention is hard. If you know someone working there on the front-end of iTunes, you might be able to help us.

document.write()+ user agent sniffing + bad css selectors = image too big. Another one difficult to contact- Google Calendar old bug is… old. or Search Log-in for different locales or sometimes not even there at all. Like not receiving the tier1 version.

- We are very close to get a fix for Gmail on Firefox.

Gecko Bugs

- Debugger and User-Agent switcher interactions.

Webcompat.com development

- Explaining how to work with pull requests and branches

- Helping contributors on the project. 741 on

form.py, 865 ondatabase, 969 - We need a migration script for the DB.

- We need to tight up our management scripts.

Reading List

- Instagram-style filters in HTML5 Canvas. Clever technique for applying filters to photo when browsers are lacking support for CSS filters. Two thoughts: 1. What is the performance analysis? 2. This should be tied to a class name with a proper fallback for seeing the image when JS is not here.

- 6 reasons to start using flexbox. "But in actual fact, flexbox is very well supported, at 95.89% global support. (…) The solution to this I have settled on is to just use autoprefixer. Keeping track of which vendor prefixes we need to use for what isn’t necessarily the best use of our time and effort, so we can and should automate it."

- Seen that introductory blog post about CSS. I liked it very much, because it's very basic. It's written for beginners but with a nice turn into it. It also reminds me that writing about the simplest thing can be very helpful to others.

- Selenium and JavaScript events. In that case, used with python for functional testing.

Follow Your Nose

TODO

- Document how to write tests on webcompat.com using test fixtures.

- ToWrite: rounding numbers in CSS for

width - ToWrite: Amazon prefetching resources with

for Firefox only.

Otsukare!

|

|

Daniel Pocock: FOSSASIA 2016 at the Science Centre Singapore |

FOSSASIA 2016 is now under way. The Debian, Red Hat and Ring (Savoir-Faire Linux) teams are situated beside each other in the exhibit area.

Thanks to Hong Phuc from the FOSSASIA team for helping us produce these new Debian banners

Google Summer of Code 2016

If you are keen to participate in GSoC 2016, please feel free to come and discuss it with me in person or attend any of my sessions at FOSSASIA this weekend. Please also see my blog about getting selected for GSoC, attending a community event like FOSSASIA is a great way to distinguish yourself from the many applications we receive each year. (Debian Outreach / GSoC information).

Real-time communications, WebRTC and mobile VoIP at FOSSASIA

There are a number of events involving real-time communications technology throughout FOSSASIA 2016, please come and join us at these:

| Event | Time |

|---|---|

| Hands-on workshop with WebRTC and mobile VoIP (1 hr) | Saturday, 14:30 |

| Talk: Free Communications with Free Software (20 min) | Saturday, 17:40 |

| Ring: a decentralized and secure communication platform | Sunday, 13:00 |

and the full program details, including locations, are in the schedule.

|

|

Jennie Rose Halperin: Media for Everyone? |

Media for Everyone?

User empowerment and community in the age of subscription streaming media

The Netflix app is displayed alongside other streaming media services. (Photo credit: Matthew Keys / Flickr Creative Commons)

In 2002, Tim O’Reilly wrote the essay “Piracy is Progressive Taxation and other thoughts on the evolution of online distribution,” which makes several salient points that remain relevant as unlimited, native, streaming media continues to take the place of the containerized media product. In the essay, he predicts the rise of streaming media as well as the intermediary publisher on the Web that serves its purpose as content exploder. In an attempt to advocate for flexible licensing in the age of subscription streaming media, I’d like to begin by discussing two points in particular from that essay: “Obscurity is a far greater threat to authors and creative artists than piracy” and “’Free’ is eventually replaced by a higher-quality paid service.”

As content becomes more fragmented and decontainerized across devices and platforms (the “Internet of Things”), I have faith that expert domain knowledge will prevail in the form of vetted, quality materials, and streaming services provide that curation layer for many users. Subscription services could provide greater visibility to artists by providing unlimited access and new audiences. However, the current licensing regulations surrounding content on streaming subscription services privilege the corporation rather than the creator, further exercising the hegemony of the media market. The first part of this essay will discuss the role of serendipity and discovery in streaming services and how they contribute to user engagement. Next, I will explore how Creative Commons and flexible licensing in the age of unlimited subscription media can return power to the creator by supporting communities of practice around content creation in subscription streaming services.

Tim O’Reilly’s second assertion that “’Free’ is eventually replaced by a higher-quality paid service” is best understood through the lens of information architecture. In their seminal work Information Architecture for the World Wide Web, Morville, Arango, and Rosenfeld write about how most software solutions are designed to solve specific problems, and as they outgrow their shells they become ecosystems, thereby losing clarity and simplicity. While the physical object’s data is constrained within its shell, the digital object provides a new set of metadata based on the user’s usage patterns and interests. Media is spread out among a variety of types, devices, and spaces, platforms cease to define the types of content that people consume, with native apps replacing exportable, translatable solutions like the MP3 or PDF. Paid services utilize the data from these ecosystems and create more meaningful consumption patterns within a diverse media landscape.

What content needs is coherency, that ineffable quality that helps us create taxonomy and meaning across platforms. Streaming services provide a comfortable architecture so users don’t have to rely on the shattered, seemingly limitless, advertising-laden media ecosystem of the Internet. Unlimited streaming services provide the coherency that users seek in content, and their focus should be on discoverability and engagement.

If you try sometimes, you might get what you need: serendipity and discoverability in streaming media

Not all streaming services operate within the same content model, which provides an interesting lens to explore the roles of a variety of products. Delivering the “sweet spot” of content to users is an unfulfillable ideal for most providers, and slogging through a massive catalog of materials can quickly cause information overload.

When most content is licensed and available outside of the service, discoverability and user empowerment should be the primary aim of the streaming media provider.

While Spotify charges $9.99 per month for more music than a person can consume in their entire lifetime, the quality of the music is not often listed as a primary reason why users engage with the product. In fact, most of the music on Spotify I can describe as “not to my taste,” and yet I pay every month for premium access to the entire library. At Safari Books Online, we focused on content quality in addition to scope, with “connection to expert knowledge” and subject matter coherency being a primary reason why subscribers paid premium prices rather than relying on StackOverflow or other free services.

Spotify’s marketing slogan, “Music for everyone” focuses on its content abundance, freemium model, and ease of use rather than its quality. The marketing site does not mention the size of Spotify’s library, but the implications are clear: it’s huge.

These observations beg a few questions:

- Would I still pay $9.99 per month for a similar streaming service that only provided music in the genres I enjoy like jazz, minimal techno, or folk by women in the 1970s with long hair and a bone to pick with Jackson Browne?

- What would I pay to discover more music in these genres? What about new music created by lesser-known artists?

- What is it about Spotify that brought me back to the service after trying Apple Music and Rdio? What would bring me back to Safari if I tried another streaming media service like Lynda or Pluralsight?

- How much will users pay for what is, essentially, an inflexible native discoverability platform that exists to allow them access to other materials that are often freely available on the Web in other, more exportable formats?

Serendipity and discoverability were the two driving factors in my decision to stay with Spotify as a streaming music service. Spotify allows for almost infinite taste flexibility and makes discoverability easy through playlists and simple search. In addition, a social feed allows me to follow my friends and discover new music. Spotify bases its experience on my taste preferences and social network, and I can easily skip content that is irrelevant or not to my liking.

To contrast, at Safari, while almost every user lauded the diversity of content, most found the amount of information overwhelming and discoverability problematic. As a counter-example, the O’Reilly Learning Paths product have been immensely popular on Safari, even though the “paths” consist of recycled content from O’Reilly Media repackaged to improve discoverability. While the self-service discovery model worked for many users, for most of our users, guidance through the library in the form of “paths” provides a serendipitous adventure through content that keeps them wanting more.

Music providers like Tidal have experimented with exclusive content, but content wants to be free on the Internet, and streaming services should focus on user need and findability, not exclusivity. Just because a Beyonce single drops first on Tidal, it doesn’t mean I can’t torrent it soon after. In Spotify, the “Discover Weekly” playlists as well as the ease of use of my own user-generated playlists serve the purpose of “exclusive content.” By providing me the correct dose of relevant content through playlists and social connection, Spotify delivers a service that I cannot find anywhere else, and these discoverability features are my primary product incentive. Spotify’s curated playlists, even algorithmically calculated ones, feel home-spun, personal, and unique, which is close to product magic.

There seems to be an exception to this rule in the world of streaming television, where users generally want to be taken directly to the most popular exclusive content. I would argue that the Netflix ecosystem is much smaller than in a streaming business or technical library or music service. This is why Netflix can provide a relatively limited list of rotating movies while focusing on its exclusive content while services like Spotify and Safari consistently grow their libraries to delight their users with the extensive amount of content available.

In fact, most people subscribe to Netflix for its exclusive content, and streaming television providers that lag behind (like Hulu), often provide access to content that is otherwise easily discoverable other places on the Web. Why would I watch Broad City with commercials on Hulu one week after it airs when I can just go to the Free TV Project and watch it an hour later for free? There is no higher quality paid service than free streaming in this case, and until Hulu strikes the balance between payment, advertising, licensed content, and exclusive content, they will continue to lag behind Netflix.

As access to licensed content becomes centralized and ubiquitous among a handful of streaming providers, it should be the role of the streaming service to provide a new paradigm that supports the role of artists in the 21st Century that challenges the dominant power structures within the licensed media market.

Shake it off, Taylor: the dream of Creative Commons and the power of creators

As a constantly evolving set of standards, Creative Commons is one way that streaming services can focus on a discoverability and curation layer that provides the maximum benefit to both users and creators. If we allow subscription media to work with artists rather than industry, we can increase the power of the content creator and loosen stringent, outdated copyright regulations. I recognize that much of this section is a simplification of the complex issue of copyright, but I wish to create a strawman that brings to light what Lawrence Lessig calls “a culture in which creators get to create only with the permission of the powerful, or of creators from the past.” The unique positioning of streaming, licensed content is no longer an issue that free culture can ignore, and creating communities of practice around licensing could ease some of the friction between artists and subscription services.

When Taylor Swift withheld her album from Apple Music because the company would not pay artists for its temporary three-month trial period, it sent a message to streaming services that withholding pay from artists is not acceptable. I believe that Swift made the correct choice to take a stand against Apple for not paying artists, but I want to throw a wrench into her logic.

Copies of 1989 have probably been freely available on the Internet since before its “official” release. (The New Yorker ran an excellent piece on leaked albums last year.) By not providing her album to Apple Music but also not freely licensing it, Swift chose to operate under the old rules that govern content, where free is the exception, not the norm.

Creative Commons provides the framework and socialization that could provide streaming services relevancy and artists the new audiences they seek. The product that users buy via streaming services is not necessarily music or books (they can buy those anywhere), it is the ability to consume it in a manner that is organized, easy, and coherent across platforms: an increased Information Architecture. The flexible licensing of Creative Commons could at least begin the discussion to cut out the middle man between streaming services, licensing, and artists, allowing these services to act more like Soundcloud, Wattpad, or Bandcamp, which provide audience and voice to lesser-known artists. These services do what streaming services have so far failed to do because of their licensing rules: they create social communities around media based on user voice and community connection.

The outlook for both the traditional publishing and music industries are similarly grim and to ignore the power of the content creator is to lapse into obscurity. While many self-publishing platforms present Creative Commons licensing as a matter of course and pride, subscription streaming services usually present all content as equally, stringently licensed. Spotify’s largest operating costs are licensing costs and most of the revenue in these transactions goes to the licensor, not the artist. To rethink a model that puts trust and power in the creator could provide a new paradigm under which creators and streaming services thrive. This could take shape in a few ways:

- Content could be licensed directly from the creator and promoted by the streaming service.

- Content could be exported outside of the native app, allowing users to distribute and share content freely according to the wishes of its creator.

- Content could be directly uploaded to the streaming service, vetted or edited by the service, and signal boosted according to the editorial vision of the streaming content provider.

When Safari moved from books as exportable PDFs to a native environment, some users threatened to leave the service, viewing the native app as a loss of functionality. This exodus reminds me that while books break free of their containers, the coherence of the ecosystem maintains that users want their content in a variety of contexts, usable in a way that suits them. Proprietary native apps do not provide that kind of flexibility. By relying on native apps as a sole online/offline delivery mechanism, streaming services ultimately disenfranchise users who rely on a variety of IoT devices to consume media. Creative Commons could provide a more ethical licensing layer to rebalance the power differential that streaming services continue to uphold.

The right to read, listen, and watch: streaming, freedom, and pragmatism

Several years ago, I would probably have scoffed at this essay, wondering why I even considered streaming services as a viable alternative to going to the library or searching for content through torrents or music blogs, but I am fundamentally a pragmatist and seek to work on systems that present the most exciting vision for creators. 40 million Americans have a Netflix account and Spotify has over 10 million daily active users. The data they collect from users is crucial to the media industry’s future.

To ignore or deny the rise of streaming subscription services as a content delivery mechanism has already damaged the free culture movement. While working with subscription services feels antithetical to its goals, content has moved closer and closer toward Stallman’s dystopian vision from 1997 and we need to continue to create viable alternatives or else continue to put the power in the hands of the few rather than the many.

Licensed streaming services follow the through line of unlimited content on the Web, and yet most users want even more content, and more targeted content for their specific needs. The archetype of the streaming library is currently consumption, with social sharing as a limited exception. Services like Twitter’s Vine and Google’s YouTube successfully create communities based on creation rather than consumption and yet they are constantly under threat, with large advertisers still taking the lion’s share of profits.

I envision an ecosystem of community-centered content creation services that are consistently in service to their users, and I think that streaming services can take the lead by considering licensing options that benefit artists rather than corporations.

The Internet turns us all into content creators, and rather than expanding ecosystems into exclusivity, it would be heartening to see a streaming app that is based on community discoverability and the “intertwingling” of different kinds of content, including user-generated content. The subscription streaming service can be considered as industry pushback in the age of user-generated content, yet it’s proven to be immensely popular. For this reason, conversations about licensing, user data, and artistic community should be a primary focus within free culture and media.

The final lesson of Tim O’Reilly’s essay is: “There’s more than one way to do it,” and I will echo this sentiment as the crux of my argument. As he writes, “’Give the wookie what he wants!’… Give it to [her] in as many ways as you can find, at a fair price, and let [her] choose which works best for [her].” By amplifying user voice in curation and discoverability as well as providing a more fair, free, and open ecosystem for artists, subscription services will more successfully serve their users and creators in ways that make the artistic landscape more humane, more diverse, and yes, more remixable.

|

|

Eitan Isaacson: Benchmarking Speech Rate |

In my last post, I covered the speech rate problem as I perceived it. Understanding the theory of what is going on is half the work. I decided to make a cross-platform speech rate benchmark that would allow me to identify how well each platform conforms to rate changes.

So how do you measure speech rate? I guess you can count words per minute. But in reality, all we want is relative speech rate. The duration of each utterance at given rates should give us a good reference for the relative rate. Is it perfect? Probably not. Each speech engine may treat the time between words, and punctuation pauses differently at different rates, but it will give us a good picture.

For example. If it takes a speech service

http://blog.monotonous.org/2016/03/17/benchmarking-speech-rate/

|

|

Support.Mozilla.Org: What’s up with SUMO – 17th March |

Hello, SUMO Nation!

Time for a slightly personal introduction… Are you a fan of the whole time change, with Daylight Saving Time and changing times, just because we can’t figure time out, apparently? Yeah, me neither. So, if you missed an important meeting with someone in a different time zone this week because of the change… don’t worry, keep rocking! C’est la vie :-) Let’s get on with the show!

Welcome, new contributors!

Contributors of the week

- The forum supporters who helped users out for the last 7 days.

- The writers of all languages who worked on the KB for the last 7 days.

- The Slovenian localizers who hit 100% for the SUMO UI!

- The Telugu localizers who hit the 50 KB articles milestone for their locale!

- The Portuguese localizers who hit yet another milestone for their locale!

- Teo for updating the Polish Thunderbird KB!

We salute you!

Most recent SUMO Community meeting

- Reminder: WE HAVE MOVED TO WEDNESDAYS!

- You can read the notes here and see the video on our YouTube channel and at AirMozilla.

The next SUMO Community meeting…

- …is happening on WEDNESDAY the 23rd of March – join us!

- Reminder: if you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Monday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Developers

- Important announcement: Kitsune development is currently entering maintenance mode due to changes in the developer group – we’ll keep you posted as the situation develops. If you have questions or concerns about this, please contact us via the forum.

- The latest SUMO Platform meeting notes can be found here.

- Do you want to help us build Kitsune (the engine behind SUMO)? Read more about it here and fork it on GitHub!

- We are discussing adding notifications to the contributor profiles – take a look at the thread here.

Community

- We will let you know about nominations for the London Work Week soon – in the meantime, take a look at this wiki page for more details.

- If you ever wondered how to get your name onto the about:credits page in Firefox, take a look here.

- Final reminder: want to help us figure out how to deal with IRC, Telegram, and all that jazz? Jump into this thread!

-

Ongoing reminder: if you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

Social

- Madalina is waiting for your feedback regarding Sprinklr. Send it directly to her, using her email address.

- Final reminder: The Social Wiki is here – participate as you see fit!

- An ongoing and gentle technical reminder for those already supporting on Social – please don’t forget to sign your initials at the end of each reply and always use the @ at the beginning of it.

- Ongoing reminder: We have a training out there for all those interested in Social Support – talk to Madalina or Costenslayer on #AoA (IRC) for more information.

Support Forum

Knowledge Base

- Firefox for iOS version 3.0 documentation is here! So far, there’s only one article available, but the second one is coming soon (it needs approval from the Firefox for iOS team).

- You can see all the articles that have been updated for version 45 in this document.

- You can check the KB training article feedback and progress in this document.

Localization

- Reminder: version 45 articles are ready for localization… Get to it, l10ns!

- Safwan, our resident codemaster, is working on something really, really cool for localizers (and KB writers in general), again :-)

- Pontoon tutorial videos are in production – so far, the following topics are being covered (if you think we’re missing something, let me know):

- How to log into Pontoon and navigate to SUMO

- How to localize strings in Pontoon

- How to search for broken strings and repair them

- Useful tips and tricks in Pontoon

- Are you aware of the l10n hackathons happening in your locale? Take a look at this blog post and contact your l10n team to get involved. I would love for all localizers in each locale to come together and have a great time – let’s make that happen!

- Reminder: Click here to see the date of the latest UI string update (from your Pontoon contributions)

- Reminder: we are deprioritizing Firefox OS content localization for now (more context here). If you have questions about it, let me know.

Firefox

- for Android

- Version 44 support discussion thread.

- Version 45 support discussion thread.

- Version 46 thread coming up soon.

- for iOS

- No new news, apart from the documentation for version 3.0 mentioned before.

- No new news, apart from the documentation for version 3.0 mentioned before.

That’s it for this week, but there should be a post or two coming your way before Monday… I hope! If there’s nothing but crickets, worry not – there will be more news next week. In the meantime, stay safe, stay dry, stay warm, and don’t forget to smile – I hear it’s good for your face muscles :-)

https://blog.mozilla.org/sumo/2016/03/17/whats-up-with-sumo-17th-march/

|

|

Mozilla Addons Blog: Add-on Signing Enforcement In Firefox 46 for Android |

As part of the Extension Signing initiative, signing enforcement will be enabled by default on Firefox 46 for Android. All Firefox for Android extensions on addons.mozilla.org have been signed, and developers who self-host add-ons for Android should ensure those add-ons are signed prior to April 12th, 2016.

Extension signing enforcement can be disabled using the preference outlined in the FAQ, and this preference will be removed in a future release.

More information on Extension Signing is available on the Mozilla Wiki, and information on the change to Firefox for Android can be found on Bugzilla under Bug 1244329.

https://blog.mozilla.org/addons/2016/03/17/add-on-signing-enforcement-in-firefox-46-for-android/

|

|

Air Mozilla: What Works: Gender Equality by Design with Iris Bohnet |

Gender equality is a moral and a business imperative. But unconscious bias holds us back, and de-biasing people's minds has proven to be difficult and...

Gender equality is a moral and a business imperative. But unconscious bias holds us back, and de-biasing people's minds has proven to be difficult and...

https://air.mozilla.org/what-works-gender-equality-by-design-with-iris-bohnet/

|

|

Air Mozilla: Web QA Weekly Meeting, 17 Mar 2016 |

This is our weekly gathering of Mozilla'a Web QA team filled with discussion on our current and future projects, ideas, demos, and fun facts.

This is our weekly gathering of Mozilla'a Web QA team filled with discussion on our current and future projects, ideas, demos, and fun facts.

|

|

Air Mozilla: Reps weekly, 17 Mar 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Robert O'Callahan: Running Firefox For Windows With rr |

Behold!

OK OK, this is cheating a bit. Wine can run Firefox for Windows on Linux (if you disable multiprocess in Firefox; if you don't, Firefox starts up but nothing will load). rr can run Wine. Transitivity holds.

Caveats: it's slow (rr's syscall buffering optimization is mostly disabled by Wine), and the debugging experience is pretty much nonexistent since gdb doesn't know what's going on. But hey.

http://robert.ocallahan.org/2016/03/running-firefox-for-windows-with-rr.html

|

|

Bogomil Shopov: How to engage us, developers to use your API. |

There are tens of thousands of API’s available. More to come. Most of the companies though, have troubles engaging developers to use them. So I have decided to share a few thoughts and ideas on how you can do that, based on my experience.

Design your API well

Nobody likes powerful, but not developer-friendly APIs. Follow the “standards” in the area, but innovate a bit to make us (developers) happy and eager to learn more. I will not spend more time here, because I guess you are already building your API if you need the information below. If you are looking for more info on that subject, click here to read an excellent article.

Document your API

If you want other people to use it – document it well. Add examples for the most popular programming languages. Copy/ Paste/ Run is the first step to a great journey.

Do not forget the not-so-trending programming languages at the moment. Target people who explore them – they are the right group to start with.

Eat your own dog-food

Ask your internal developers to use the API. Get the feedback from them and make it better. I am not talking about the developers who wrote the API, they must use it of course. Try to engage other teams within the company (if you have any) to use the API.

Organize an internal Friday APIJam. Sit together in a room for a few hours and do something useful using the technologies you work with – don’t push them to learn new language or technique – just use the API focusing on the value.

Come up with nice awards for the most active participants, get some sweets and drinks (even beer) as well. Then ask the participants to present their work at the end and listen to their feedback.

Hack your API

Organize hackatons with external groups or jump into such organized by someone else – ask developers to hack the API and to create a small app that will serve theirs needs – then promote the effort and make those developers rockstars by using your PR channels.

The goal is not to test your API (as you do during the internal APIJam event), but to show the value that your API brings to the world. The Call for Action should be something like “use our API to build your own App”.

Create more initiatives like that. Repeat();

Connect

Get in touch with the local developer groups and go the their next meeting with some pizza and beer. Show them your data, ask for their feedback, show them your API, don’t be afraid to ask for help.

Then create a fair process to work with communities around you – what you want from them and what’s in for them.

Discuss

Push the discussion around your API and manage it. Respond to comments immediately, ask for feedback and show how it is implemented. Post your API to reddit, Dzone and other similar sites and get real, honest feedback (together with some trolls, that’s inevitable)

Equilibrium

Treat your community members equally. Sometimes a new member can have a kick-butt idea and if you ignore him/her this can have negative impact overall. Focus on the value!

Partner up

Find partners to help you to get traction. Why don’t you contact your local startup accelerators and do something together to include your awesome API as an requirement for the next call? Does it work? Oh yeah!

Explore

Constantly explore new ways and hacks on how to engage the community, but remember – this must be a fair deal – every part should be happy and equally satisfied. This is your way towards an engaged community.

The best API ever?

No, it’s not yours. Is this one :)

What do you think?

Do you have a different experience? Please share!

More resources?

P.S The head image is under CC license by giorgiop5

|

|

Karl Dubost: HEAD and its support |

What is HTTP HEAD?

HTTP HEAD is defined in RFC7231:

The HEAD method is identical to GET except that the server MUST NOT send a message body in the response (i.e., the response terminates at the end of the header section). The server SHOULD send the same header fields in response to a HEAD request as it would have sent if the request had been a GET, except that the payload header fields (Section 3.3) MAY be omitted. This method can be used for obtaining metadata about the selected representation without transferring the representation data and is often used for testing hypertext links for validity, accessibility, and recent modification.

A payload within a HEAD request message has no defined semantics; sending a payload body on a HEAD request might cause some existing implementations to reject the request.

The response to a HEAD request is cacheable; a cache MAY use it to satisfy subsequent HEAD requests unless otherwise indicated by the Cache-Control header field (Section 5.2 of [RFC7234]). A HEAD response might also have an effect on previously cached responses to GET; see Section 4.3.5 of [RFC7234].

The first sentence says it all. Ok let's test it on a server we recently received a bug report for an issue not related to this post:

http HEAD http://www.webmotors.com.br/comprar/busca-avancada-carros-motos 'User-Agent:Mozilla/5.0 (Android 4.4.4; Mobile; rv:48.0) Gecko/48.0 Firefox/48.0'

and we discover an interesting result.

HTTP/1.1 302 Found Cache-Control: private Cache-control: no-cache="set-cookie" Connection: keep-alive Content-Length: 219 Content-Type: text/html; charset=utf-8 Date: Thu, 17 Mar 2016 05:05:16 GMT Location: http://www.webmotors.com.br/erro/paginaindisponivel?aspxerrorpath=/comprar/busca-avancada-carros-motos Set-Cookie: AWSELB=FBEB4F8D0A4EA85DD70F4AB212E2DE8B1D243194C8211DB47745035BB893091C3757B63537A8283279E292F270164C17215D106B7608F46725A149C18EC4961E97F3828361;PATH=/;MAX-AGE=3600 Vary: User-Agent,Accept-Encoding X-AspNetMvc-Version: 5.2 X-Powered-By: ASP.NET X-UA-Compatible: IE=Edge

The server is probably Microsoft IIS with an ASP layer. Not blaming IIS here, I have seen that pattern on more than one server.

The server response is 302, aka a redirection given in the Location: field.

The 302 (Found) status code indicates that the target resource resides temporarily under a different URI.

But if we follow that redirection to that Location:, we get the message:

Ops! A p'agina que voc^e procura n~ao foi encontrada.

which is basically, they can't find the page. So basically, if the page doesn't exist, they should send back either

404 Not Found, aka they can't find the resource.405 Method Not Allowed, aka theHEADmethod is not allowed.

Let's verify this and request the same URI with a GET and printing only the HTTP response headers.

http --print h GET http://www.webmotors.com.br/comprar/busca-avancada-carros-motos 'User-Agent:Mozilla/5.0 (Android 4.4.4; Mobile; rv:48.0) Gecko/48.0 Firefox/48.0'

The response is this time 200 OK, which means it worked and we received the right answer.

HTTP/1.1 200 OK Cache-Control: private Cache-control: no-cache="set-cookie" Connection: keep-alive Content-Encoding: gzip Content-Length: 30458 Content-Type: text/html; charset=utf-8 Date: Thu, 17 Mar 2016 05:38:03 GMT Set-Cookie: gBT_comprar_vender=X; path=/ Set-Cookie: Segmentacao=controller=Comprar&action=buscaavancada&gBT_comprar_vender=X&gBT_financiamento=&gBT_revista=&gBT_seguros=&gBT_servicos=&marca=X&modelo=X&blindado=&ano_modelo=X&preco=X&uf=X&Cod_clientePJ=&posicao=&BT_comprar_vender=X&BT_financiamento=X&BT_revista=X&BT_seguros=X&BT_servicos=X&carroceria=&anuncioDfp=Comprar/Buscas/Busca_Avan&midiasDfp=101,81; expires=Sat, 17-Sep-2016 05:38:03 GMT; path=/ Set-Cookie: AWSELB=FBEB4F8D0A4EA85DD70F4AB212E2DE8B1D243194C8CA5862EA033196053DA70C8A276707F8A7ABFB1FA324CD7934D5EC56F696CA5DFAECA620F087B46CAE01F4173A09BBD5;PATH=/;MAX-AGE=3600 Vary: Accept-Encoding X-AspNetMvc-Version: 5.2 X-Powered-By: ASP.NET X-UA-Compatible: IE=Edge

So if they unfortunately forbid HEAD which is handy for caching check instead of having to download again the full resource. But I guess in their case, it doesn't matter very much because there is no real caching information.

I wonder how Firefox and other browsers are handling HEAD, or if they use it at all.

Though the real issue so far for this server is a server side user agent sniffing sending the desktop version to Firefox Android and the mobile version to Chrome on Android.

Digression

One more thing.

Redbot is quite a nice tool for linting HTTP headers. We can test on this Web site. Specifically for the caching information. This is what redbot is telling us about this resource:

The Cache-Control: no-cache directive means that while caches can store this response, they cannot use it to satisfy a request unless it has been validated (either with an If-None-Match or If-Modified-Since conditional) for that request.

This response doesn't have a Last-Modified or ETag header, so it effectively can't be used by a cache.

No caching. Fresh page all the time. Sad panda for the Web.

|

|

Karl Dubost: Fixing Gmail on Firefox Android |

Do you remember, a couple of weeks ago, I explained on how Gmail was broken on Firefox for Android. I was finishing my post with:

Basically it seems that in Blink world the border-width is taking over the border-image-width. This should be verified. Definitely a difference of implementation. I need to check with David Baron.

I was wrong, it's a lot simpler and indeed David helped me find the culprit.

|

|