You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about this service, use the contact information page.

QMO: Seeking participants interested in a FX OS testing event |

On October 24-25 we are planning a joint l10n/QA hackathon-style meetup in Paris, France. This will be similar in format to the first event we held in July in Lima, Peru.

If you are interested in participating, please visit this site to learn more about the event. We will select 5 contributors who reside in the EU to participate in this exciting event. This is a great opportunity to learn more about Firefox OS QA and directly contribute to work on one of the Mozilla QA functional teams.

The deadline for application submission is Wednesday, August 26, 2015.

Please contact marcia@mozilla.com if you have any questions.

https://quality.mozilla.org/2015/08/seeking-participants-interested-in-a-fx-os-testing-event/

|

|

Nick Desaulniers: My SIGGRAPH 2015 Experience |

I was recently lucky enough to get to attend my first SIGGRAPH conference this year. While I didn’t attend any talks, I did spend some time in the expo. Here is a collection of some of the neat things I saw at SIGGRAPH 2015. Sorry it’s not more collected; I didn’t have the intention of writing a blog post until after folks kept asking me “how was it?”

VR

Most booths had demos on VR headsets. Many were DK2s and GearVRs. AMD and NVIDIA had Crescent Bays (the next-gen VR headset). It was noticeably lighter than the DK2, and I thought it rendered at better quality. It had nicer cable bundling and built-in headphones that could fold up and lock out of the way, which made it nice to put on and take off. I also tried a Sony Morpheus. They had a very engaging demo that was a tie-in to the upcoming movie about tightrope walking, “The Walk”. They had a thin PVC pipe taped to the floor that you had to balance on, and a fan, and you were tightrope walking between the Twin Towers. Looking down and trying to balance was terrifying. There were some demos with a strange mobile VR setup where folks wore a backpack with an open laptop hanging off the back and could walk around. Toyota and Ford had demos where you could inspect their vehicles in virtual space. I did not see a single HTC/Valve Vive at SIGGRAPH.

AR

Epson had some AR glasses. They were very glasses friendly, unlike most VR headsets. The nose piece was flexible, and if you flattened it out, the headset could rest on top of your glasses and worked well. The headset had some very thick compound lenses. There was a front facing camera and they had a simple demo using image recognition of simple logos (like QR codes) that helped provide position data. There were other demos with orientation tracking that worked well. They didn’t have positional sensor info, but had some hack that tried to estimate positional velocity off the angular momentum (I spoke with the programmer who implemented it). https://moverio.epson.biz/

Holograms

There was a demo of holograms using tilted pieces of plastic arranged in a box. Also, there was a multiple (200+) projector array that projected a scene onto a special screen. When walking around the screen, the viewing angle always seemed correct. It was very convincing, except for the jarring restart of the animated loop which could be smoothed out (think looping/seamless gifs).

VR/3D video

Google Cardboard had a booth showing off 3D videos from YouTube. I had a hard time telling whether the videos were stereoscopic or monoscopic, since the demo videos only had things in the distance, so it was hard to tell if parallax was implemented correctly. A bunch of booths were showing off 3D video, but as far as I could tell, all of the correctly rendered stereoscopic shots were computer rendered. I could not find a single instance with footage shot from a stereoscopic rig, though I tried.

Booths/Expo

NVIDIA and Intel had the largest booths, followed by Pixar’s Renderman. It felt like a GDC event: smaller than GDC itself, but definitely larger than GDC Next. There was more focus on shiny photorealism demos and artistic tools, and less on game engines themselves.

Vulkan/OpenGL ES 3.2

Intel had demos of Vulkan and OpenGL ES 3.2. For 3.2 they were showing off tessellation shaders, I think. For Vulkan, they had a cool demo of a particle scene: rendered with OpenGL 4, a single CPU was pegged, power draw was high, and the framerate was pretty abysmal. Rendering the same scene with Vulkan, they were able to distribute the workload more evenly across CPUs and achieve a higher framerate while using less power. The Vulkan API is still not published, so no source code is available. It was explained to me that Vulkan itself is not thread safe; instead, the application, rather than the driver, gets the freedom to implement synchronization.

Planetarium

There was a neat demo of a planetarium projector being repurposed to display an “on rails” demo of a virtual scene. You didn’t get parallax since it was being projected on a hemisphere, but it was neat in that like IMAX your entire FOV was encompassed, but you could move your head, not see any pixels, and didn’t experience any motion sickness or disorientation.

X3D/X3DOM

I spoke with some folks at the X3D booth about X3DOM. To me, it seems like a bunch of previous attempts have kind of added on too much complexity in an effort to support every use case under the sun, rather than just accept limitations, so much so that getting started writing hello world became difficult. Some of the folks I spoke to at the booth echoed this sentiment, but also noted the lack of authoring tools as things that hurt adoption. I have some neat things I’m working on in this space, based on this and other prior works, that I plan on showing off at the upcoming BrazilJS.

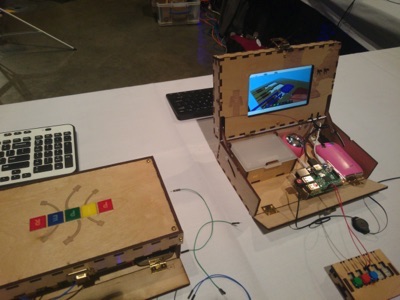

Maker Faire

There was a cool maker faire. Some things I’ll have to order for family members (young hackers in training) were Canny bots, eBee and Piper.

Experimental tech

There were a bunch of neat input devices; one I liked used directional sound as tactile feedback. One demo was rearranging icons on a home screen. Rather than touching the screen, you moved your finger into a field of tiny speakers that would blast it with sound to simulate the feeling of vibration. It would vibrate to let you know you had “grabbed” an icon, and then you could drag it.

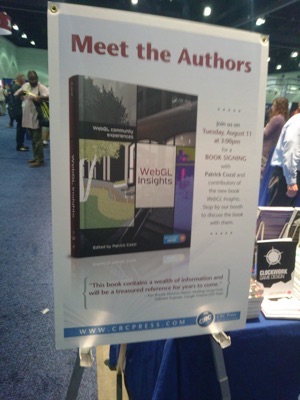

Book Signing

This was the first time I got to see my book printed in physical form! It looked gorgeous, hardcover printed in color. I met about half of the fellow authors who were also at SIGGRAPH, and our editor. I even got to meet Eric Haines, who reviewed my chapter before publication!

http://nickdesaulniers.github.io/blog/2015/08/14/my-siggraph-2015-experience/

|

|

Laura de Reynal: The remix definition |

“What does remixing mean? To take something that’s pretty good, and add your touch to properly make it better with no disrespect to the creator.”

15-year-old teenager, Chicago

Filed under: Mozilla

|

|

The Rust Programming Language Blog: Rust in 2016 |

This week marks three months since Rust 1.0 was released. As we’re starting to hit our post-1.0 stride, we’d like to talk about what 1.0 meant in hindsight, and where we see Rust going in the next year.

What 1.0 was about

Rust 1.0 focused on stability, community, and clarity.

Stability, we’ve discussed quite a bit in previous posts introducing our release channels and stabilization process.

Community has always been one of Rust’s greatest strengths. But in the year leading up to 1.0, we introduced and refined the RFC process, culminating with subteams to manage RFCs in each particular area. Community-wide debate on RFCs was indispensable for delivering a quality 1.0 release.

All of this refinement prior to 1.0 was in service of reaching clarity on what Rust represents:

Altogether, Rust is exciting because it is empowering: you can hack without fear. And you can do so in contexts you might not have before, dropping down from languages like Ruby or Python, making your first foray into systems programming.

That’s Rust 1.0; but what comes next?

Where we go from here

After much discussion within the core team, early production users, and the broader community, we’ve identified a number of improvements we’d like to make over the course of the next year or so, falling into three categories:

- Doubling down on infrastructure;

- Zeroing in on gaps in key features;

- Branching out into new places to use Rust.

Let’s look at some of the biggest plans in each of these categories.

Doubling down: infrastructure investments

Crater

Our basic stability promise for Rust is that upgrades between versions are “hassle-free”. To deliver on this promise, we need to detect compiler bugs that cause code to stop working. Naturally, the compiler has its own large test suite, but that is only a small fraction of the code that’s out there “in the wild”. Crater is a tool that aims to close that gap by testing the compiler against all the packages found in crates.io, giving us a much better idea whether any code has stopped compiling on the latest nightly.

Crater has quickly become an indispensable tool. We regularly compare the nightly release against the latest stable build, and we use crater to check in-progress branches and estimate the impact of a change.
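The comparison described above can be illustrated with a minimal sketch. This is not Crater's actual code: the `BuildStatus` type and the `find_regressions` function are hypothetical names invented for illustration, and the sketch assumes that the build results for each toolchain have already been collected into a map from crate name to outcome.

```rust
use std::collections::BTreeMap;

/// Outcome of building one crate with one toolchain (hypothetical type).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum BuildStatus {
    Pass,
    Fail,
}

/// Returns the names of crates that built on the baseline toolchain
/// but fail on the candidate toolchain, sorted by crate name.
pub fn find_regressions(
    baseline: &BTreeMap<String, BuildStatus>,
    candidate: &BTreeMap<String, BuildStatus>,
) -> Vec<String> {
    baseline
        .iter()
        .filter(|(name, status)| {
            // A regression is "passed before, fails now". Crates that were
            // already failing, or that are missing from the candidate run,
            // are not reported.
            **status == BuildStatus::Pass
                && candidate.get(*name) == Some(&BuildStatus::Fail)
        })
        .map(|(name, _)| name.clone())
        .collect()
}
```

Calling `find_regressions(&stable_results, &nightly_results)` would then yield the list of crates worth investigating; whether each one reflects a compiler bug or code relying on previously buggy behavior still has to be decided by a person.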

Interestingly, we have often found that when code stops compiling, it’s not because of a bug in the compiler. Rather, it’s because we fixed a bug, and that code happened to be relying on the older behavior. Even in those cases, using crater helps us improve the experience, by suggesting that we should phase fixes in slowly with warnings.

Over the next year or so, we plan to improve crater in numerous ways:

- Extend the coverage to other platforms beyond Linux, and run test suites on covered libraries as well.

- Make it easier to use: leave an @crater: test comment to try out a PR.

- Produce a version of the tool that library authors can use to see effects of their changes on downstream code.

- Include code from other sources beyond crates.io.

Incremental compilation

Rust has always had a “crate-wide” compilation model. This means that the Rust compiler reads in all of the source files in your crate at once. These are type-checked and then given to LLVM for optimization. This approach is great for doing deep optimization, because it gives LLVM full access to the entire set of code, allowing for better inlining, more precise analysis, and so forth. However, it can mean that turnaround is slow: even if you only edit one function, we will recompile everything. When projects get large, this can be a burden.

The incremental compilation project aims to change this by having the Rust compiler save intermediate by-products and re-use them. This way, when you’re debugging a problem, or tweaking a code path, you only have to recompile those things that you have changed, which should make the “edit-compile-test” cycle much faster.
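The general idea of saving by-products and re-using them can be sketched as a cache keyed by a hash of each item's source. This is only an illustration of the caching principle, not a description of how the Rust compiler does or will do it: `ArtifactCache`, `source_hash`, and the string "artifacts" are all hypothetical stand-ins.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Hypothetical cache of per-item build artifacts, keyed by item name.
#[derive(Default)]
pub struct ArtifactCache {
    // name -> (hash of the source that produced the artifact, artifact)
    entries: HashMap<String, (u64, String)>,
}

/// Hashes the source text of one item.
fn source_hash(source: &str) -> u64 {
    let mut hasher = DefaultHasher::new();
    source.hash(&mut hasher);
    hasher.finish()
}

impl ArtifactCache {
    /// Rebuilds only the items whose source changed since the last call.
    /// Returns the names that were actually recompiled, in input order.
    pub fn build(&mut self, items: &[(&str, &str)]) -> Vec<String> {
        let mut recompiled = Vec::new();
        for (name, source) in items {
            let hash = source_hash(source);
            let up_to_date =
                matches!(self.entries.get(*name), Some((old, _)) if *old == hash);
            if !up_to_date {
                // Stand-in for real code generation.
                let artifact = format!("compiled({})", source);
                self.entries.insert(name.to_string(), (hash, artifact));
                recompiled.push(name.to_string());
            }
        }
        recompiled
    }
}
```

In this toy model, editing one function and calling `build` again recompiles only that function. A real compiler also has to track dependencies between items, which the sketch deliberately ignores.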

Part of this project is restructuring the compiler to introduce a new intermediate representation, which we call MIR. MIR is a simpler, lower-level form of Rust code that boils down the more complex features, making the rest of the compiler simpler. This is a crucial enabler for language changes like non-lexical lifetimes (discussed in the next section).

IDE integration

Top-notch IDE support can help to make Rust even more productive. Up until now, pioneering projects like Racer, Visual Rust, and Rust DT have been working largely without compiler support. We plan to extend the compiler to permit deeper integration with IDEs and other tools; the plan is to focus initially on two IDEs, and then grow from there.

Zeroing in: closing gaps in our key features

Specialization

The idea of zero-cost abstractions breaks down into two separate goals, as identified by Stroustrup:

- What you don’t use, you don’t pay for.

- What you do use, you couldn’t hand code any better.

Rust 1.0 has essentially achieved the first goal, both in terms of language features and the standard library. But it doesn’t quite manage to achieve the second goal. Take the following trait, for example:

    pub trait Extend<A> {
        fn extend<T>(&mut self, iterable: T) where T: IntoIterator<Item=A>;
    }

The Extend trait provides a nice abstraction for inserting data from any kind of iterator into a collection. But with traits today, that also means that each collection can provide only one implementation that works for all iterator types, which requires actually calling .next() repeatedly. In some cases, you could hand code it better, e.g. by just calling memcpy.

To close this gap, we’ve proposed specialization, allowing you to provide multiple, overlapping trait implementations as long as one is clearly more specific than the other. Aside from giving Rust a more complete toolkit for zero-cost abstraction, specialization also improves its story for code reuse. See the RFC for more details.
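To make the gap concrete without relying on the proposed feature, here is a minimal sketch on stable Rust. The `ByteBuf` type and its method names are hypothetical; the sketch shows the two code paths as separate methods, which is what a caller has to choose between by hand when a single generic implementation cannot pick the faster path for them.

```rust
/// A toy growable byte buffer (hypothetical; stands in for a real collection).
pub struct ByteBuf {
    data: Vec<u8>,
}

impl ByteBuf {
    pub fn new() -> Self {
        ByteBuf { data: Vec::new() }
    }

    /// Generic path: accepts any iterator of bytes, but has to pull
    /// items out one at a time by calling `next()`.
    pub fn extend_generic<T>(&mut self, iterable: T)
    where
        T: IntoIterator<Item = u8>,
    {
        let mut iter = iterable.into_iter();
        while let Some(byte) = iter.next() {
            self.data.push(byte);
        }
    }

    /// Hand-coded path for slices: delegates to `Vec::extend_from_slice`,
    /// which copies the whole slice in one bulk operation.
    pub fn extend_from_slice(&mut self, bytes: &[u8]) {
        self.data.extend_from_slice(bytes);
    }

    /// Read-only view of the stored bytes.
    pub fn as_slice(&self) -> &[u8] {
        &self.data
    }
}
```

Both methods produce the same contents. The point of the sketch is only that the fast path has to be exposed under a different name, where overlapping implementations would let one `extend` pick it automatically for slice inputs.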

Borrow checker improvements

The borrow checker is, in a way, the beating heart of Rust; it’s the part of the compiler that lets us achieve memory safety without garbage collection, by catching use-after-free bugs and the like. But occasionally, the borrow checker also “catches” non-bugs, like the following pattern:

    match map.find(&key) {
        Some(...) => { ... }
        None => {
            map.insert(key, new_value);
        }
    }

Code like the above snippet is perfectly fine, but the borrow checker struggles with it today because the map variable is borrowed for the entire body of the match, preventing it from being mutated by insert. We plan to address this shortcoming soon by refactoring the borrow checker to view code in terms of finer-grained (“non-lexical”) regions – a step made possible by the move to the MIR mentioned above.
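For reference, here is a runnable version of the pattern as a minimal sketch. It assumes a `HashMap<String, u32>` and uses `HashMap::get` in place of the `find` call in the snippet; the function names are made up for illustration. Non-lexical lifetimes have since shipped in stable Rust, so the first function is expected to compile on a current compiler. The second function shows the entry API, which expresses the same "look up, else insert" logic without depending on how long the lookup borrow lasts.

```rust
use std::collections::HashMap;

/// The pattern from the post: look the key up, and insert on a miss.
/// The `None` arm mutates the map while the lookup is still lexically
/// in scope, which is what a lexical borrow checker rejects.
pub fn get_or_insert_match(
    map: &mut HashMap<String, u32>,
    key: String,
    new_value: u32,
) -> u32 {
    match map.get(&key) {
        Some(existing) => *existing,
        None => {
            map.insert(key, new_value);
            new_value
        }
    }
}

/// The same logic through the entry API: a single lookup that either
/// returns the existing value or inserts the new one.
pub fn get_or_insert_entry(
    map: &mut HashMap<String, u32>,
    key: String,
    new_value: u32,
) -> u32 {
    *map.entry(key).or_insert(new_value)
}
```

Both functions leave an existing value untouched and only insert when the key is absent.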

Plugins

There are some really neat things you can do in Rust today – if you’re willing to use the Nightly channel. For example, the regex crate comes with macros that, at compile time, turn regular expressions directly into machine code to match them. Or take the rust-postgres-macros crate, which checks strings for SQL syntax validity at compile time. Crates like these make use of a highly-unstable compiler plugin system that currently exposes far too many compiler internals. We plan to propose a new plugin design that is more robust and provides built-in support for hygienic macro expansion as well.

Branching out: taking Rust to new places

Cross-compilation

While cross-compiling with Rust is possible today, it involves a lot of manual configuration. We’re shooting for push-button cross-compiles. The idea is that compiling Rust code for another target should be easy:

- Download a precompiled version of libstd for the target in question, if you don’t already have it.

- Execute cargo build --target=foo.

- There is no step 3.

Cargo install

Cargo and crates.io are really great tools for distributing libraries, but they lack any means to install executables. RFC 1200 describes a simple addition to cargo, the cargo install command. Much like the conventional make install, cargo install will place an executable in your path so that you can run it. This can serve as a simple distribution channel, and is particularly useful for people writing tools that target Rust developers (who are likely to be familiar with running cargo).

Tracing hooks

One of the most promising ways of using Rust is by “embedding” Rust code into systems written in higher-level languages like Ruby or Python. This embedding is usually done by giving the Rust code a C API, and works reasonably well when the target sports a “C friendly” memory management scheme like reference counting or conservative GC.

Integrating with an environment that uses a more advanced GC can be quite challenging. Perhaps the most prominent examples are JavaScript engines like V8 (used by node.js) and SpiderMonkey (used by Firefox and Servo). Integrating with those engines requires very careful coding to ensure that all objects are properly rooted; small mistakes can easily lead to crashes. These are precisely the kind of memory management problems that Rust is intended to eliminate.

To bring Rust to environments with advanced GCs, we plan to extend the compiler with the ability to generate “trace hooks”. These hooks can be used by a GC to sweep the stack and identify roots, making it possible to write code that integrates with advanced VMs smoothly and easily. Naturally, the design will respect Rust’s “pay for what you use” policy, so that code which does not integrate with a GC is unaffected.

Epilogue: RustCamp 2015, and Rust’s community in 2016

We recently held the first-ever Rust conference, RustCamp 2015, which sold out with 160 attendees. It was amazing to see so much of the Rust community in person, and to see the vibe of our online spaces translate into a friendly and approachable in-person event. The day opened with a keynote from Nicholas Matsakis and Aaron Turon laying out the core team’s view of where we are and where we’re headed. The slides are available online (along with several other talks), and the above serves as the missing soundtrack. Update: now you can see the talks as well!

There was a definite theme of the day: Rust’s greatest potential is to unlock a new generation of systems programmers. And that’s not just because of the language; it’s just as much because of a community culture that says “Don’t know the difference between the stack and the heap? Don’t worry, Rust is a great way to learn about it, and I’d love to show you how.”

The technical work we outlined above is important for our vision in 2016, but so is the work of those on our moderation and community teams, and all of those who tirelessly – enthusiastically – welcome people coming from all kinds of backgrounds into the Rust community. So our greatest wish for the next year of Rust is that, as its community grows, it continues to retain the welcoming spirit that it has today.

|

|

Jonathan Griffin: Engineering Productivity Update, August 13, 2015 |

From Automation and Tools to Engineering Productivity

“Automation and Tools” has been our name for a long time, but it is a catch-all name which can mean anything, everything, or nothing, depending on the context. Furthermore, it’s often unclear to others which “Automation” we should own or help with.

For these reasons, we are adopting the name “Engineering Productivity”. This name embodies the diverse range of work we do, reinforces our mission (https://wiki.mozilla.org/Auto-tools#Our_Mission), promotes immediate recognition of the value we provide to the organization, and encourages a re-commitment to the reason this team was originally created—to help developers move faster and be more effective through automation.

The “A-Team” nickname will very much still live on, even though our official name no longer begins with an “A”; the “get it done” spirit associated with that nickname remains a core part of our identity and culture, so you’ll still find us in #ateam, brainstorming and implementing ways to make the lives of Mozilla’s developers better.

Highlights

Treeherder: Most of the backend work to support automatic starring of intermittent failures has been done. On the front end, several features were added to make it easier for sheriffs and others to retrigger jobs to assist with bisection: the ability to fill in all missing jobs for a particular push, the ability to trigger Talos jobs N times, the ability to backfill all the coalesced jobs of a specific type, and the ability to retrigger all pinned jobs. These changes should make bug hunting much easier. Several improvements were made to the Logviewer as well, which should increase its usefulness.
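The backfill feature is described in the details of this update as triggering a job on all skipped commits between a given commit and the commit that previously ran the job. A minimal sketch of that selection step follows. It is not Treeherder's code: the `Push` struct and `backfill_targets` function are hypothetical, and the sketch assumes the pushes are available as a slice ordered oldest first.

```rust
/// One push on a branch (hypothetical model). `ran_job` records whether
/// the job of interest actually ran on this push or was skipped/coalesced.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Push {
    pub revision: String,
    pub ran_job: bool,
}

/// Given pushes ordered oldest first and the index of the push being
/// investigated, returns the revisions on which the job should be
/// triggered: every skipped push between the previous push that ran the
/// job and the push at `index`, oldest first.
pub fn backfill_targets(pushes: &[Push], index: usize) -> Vec<String> {
    if index >= pushes.len() {
        return Vec::new();
    }
    // Walk backwards from just before `index`, collecting pushes until
    // one is found that already ran the job.
    let mut targets: Vec<String> = pushes[..index]
        .iter()
        .rev()
        .take_while(|push| !push.ran_job)
        .map(|push| push.revision.clone())
        .collect();
    // Restore oldest-first order.
    targets.reverse();
    targets
}
```

If no earlier push ran the job at all, this sketch returns every earlier push; a real tool would presumably cap how far back it goes.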

Perfherder and performance testing: Lots of Perfherder improvements have landed in the last couple of weeks. See details at wlach’s blog post. Meanwhile, lots of Talos cleanup is underway in preparation for moving it into the tree.

MozReview: Some upcoming auth changes are explained in mcote’s blog post.

Mobile automation: gbrown has converted a set of robocop tests to the newly enabled mochitest-chrome on Android. This is a much more efficient harness and converting just 20 tests has resulted in a reduction of 30 minutes of machine time per push.

Developer workflow: chmanchester is working on building annotations into moz.build files that will automatically select or prioritize tests based on files changed in a commit. See his blog post for more details. Meanwhile, armenzg and adusca have implemented an initial version of a Try Extender app, which allows people to add more jobs on an existing try push. Additional improvements for this are planned.

Firefox automation: whimboo has written a Q2 Firefox Automation Report detailing recent work on Firefox Update and UI tests. Maja has improved the integration of Firefox media tests with Treeherder so that they now officially support all the Tier 2 job requirements.

WebDriver and Marionette: WebDriver is now officially a living standard. Congratulations to David Burns, Andreas Tolfsen, and James Graham who have contributed to this standard. dburns has created some documentation which describes which WebDriver endpoints are implemented in Marionette.

Version control: The ability to read and extract metadata from moz.build files has been added to hg.mozilla.org. This opens the door to cool future features, like the ability to auto-file bugs in the proper component and to automatically select appropriate reviewers when pushing to MozReview. gps has also blogged about some operational changes to hg.mozilla.org which enable easier end-to-end testing of new features, among other things.

The Details

- [dylan] bug 1188339 – Increase length of all tokens value for greater security

- [glob] bug 1146761 – In the new modal UI, clicking on date, flag, etc., scrolls to the corresponding change

- for more details, see https://wiki.mozilla.org/BMO/Recent_Changes

- almost finished the required changes to the backend (both db schema and data ingestion)

- Several retrigger features were added to Treeherder to make merging and bisections easier: auto fill all missing/coalesced jobs in a push; trigger all Talos jobs N times; backfill a specific job by triggering it on all skipped commits between this commit and the commit that previously ran the job, retrigger all pinned jobs in treeherder. This should improve bug hunting for sheriffs and developers alike.

- [jfrench] Logviewer ‘action buttons’ are now centralized in a Treeherder style navbar https://bugzil.la/1183872

- [jfrench] Logviewer skipped steps are now recognized as non-failures and presented as blue info steps https://bugzil.la/1192195, https://bugzil.la/1192198

- [jfrench] Middle-mouse-clicking on a job in treeherder now launches the Logviewer https://bugzil.la/1077338

- [vaibhav] Added the ability to retrigger all pinned jobs (bug https://bugzil.la/1121998)

- Camd’s job chunking management will likely land next week https://bugzil.la/1163064

- [wlach] / [jmaher] Lots of perfherder updates, details here: http://wrla.ch/blog/2015/08/more-perfherder-updates/ Highlights below

- [wlach] The compare pushes view in Perfherder has been improved to highlight the most important information.

- [wlach] If your try push contains Talos jobs, you’ll get a url for the Perfherder comparison view when pushing (https://bugzil.la/1185676).

- [jmaher/wlach] Talos generates suite and test level metrics and perfherder now ingests those data points. This fixes results from internal benchmarks which do their own summarization to report proper numbers.

- [jmaher/parkouss] Big talos updates (thanks to :parkouss), major refactoring, cleanup, and preparation to move talos in tree.

- [mcote] The work is not quite done, but I blogged about upcoming MozReview auth changes, which people might find interesting: https://mrcote.info/blog/2015/08/04/mozreview-auth-changes/

- [gps] Ordering of review comments in Bugzilla now matches that in Review Board https://bugzil.la/1183935

- [gbrown] Demonstrated that some all-javascript robocop tests can run more efficiently as mochitest-chrome; about 20 such tests converted to mochitest-chrome, saving about 30 minutes per push.

- [gbrown] Working on “mach emulator” support: wip can download and run 2.3, 4.3, or x86 emulator images. Sorting out cache management and cross-platform issues.

- [jmaher/bc] Landed code for tp4m/tsvgx on autophone; getting closer to running on autophone soon.

- [ahal] Created patch to clobber compiled python files in srcdir

- [ahal] More progress on mach/mozlog patch (https://bugzil.la/1027665)

- [chmanchester] Fix to allow ‘mach try’ to work without test arguments (bug 1192484)

- [maja_zf] firefox-media-tests ‘log steps’ and ‘failure summaries’ are now compatible with Treeherder’s log viewer, making them much easier to browse. This means the jobs now satisfy all Tier-2 Treeherder requirements.

- [sydpolk] Refactoring of tests after fixing stall detection is complete. I can now take my network bandwidth prototype and merge it in.

- [whimboo] Firefox Automation report for Q2, covering all the work we did in Q2: http://www.hskupin.info/2015/08/11/firefox-automation-report-q2-2015/

- Finished adapting mozregression (https://bugzil.la/1132151) and mozdownload (https://bugzil.la/1136822) to S3.

- (Henrik) Isn’t archive.mozilla.org only a temporary solution, before we move to TC?

- (armenzg) I believe so but for the time being I believe we’re out of the woods

- The manifestparser dependency was removed from mozprofile (bug 1189858)

- [ahal] Fix for https://bugzil.la/1185761

- [sydpolk] Platform Jenkins migration to the SCL data center has not yet begun in earnest due to PTO. Hope to start making that transition this week.

- [chmanchester] work in progress to build annotations in to moz.build files to automatically select or prioritize tests based on what changed in a commit. Strawman implementation posted in https://bugzil.la/1184405 , blog post about this work at http://chmanchester.github.io/blog/2015/08/06/defining-semi-automatic-test-prioritization/

- [adusca/armenzg] Try Extender (http://try-extender.herokuapp.com) is open for business; however, a new plan will soon be released to make a better version that integrates well with Treeherder and solves some technical difficulties we’re facing

- [armenzg] Code has landed on mozci to allow re-triggering tasks on TaskCluster. This allows re-triggering TaskCluster tasks on the try server when they fail.

- [armenzg] Work to move Firefox UI tests to the test machines instead of build machines is solving some of the crash issues we were facing

- [ahal] re-implemented test-informant to use ActiveData: http://people.mozilla.org/~ahalberstadt/test-informant/

- [ekyle] Work on stability: Monitoring added to the rest of the ActiveData machines.

- [ekyle] Problem: ES was not balancing the import workload on the cluster, probably because ES assumes symmetric nodes, and we do not have that. The architecture was changed to prefer a better distribution of work (and query load). There now appear to be fewer OutOfMemoryExceptions, despite test-informant’s queries.

- [ekyle] More Problems: Two servers in the ActiveData complex failed: The first was the ActiveData web server; which became unresponsive, even to SSH. The machine was terminated. The second server was the ‘master’ node of the ES cluster: This resulted in total data loss, but it was expected to happen eventually given the cheap configuration we have. Contingency was in place: The master was rebooted, the configuration was verified, and data re-indexed from S3. More nodes would help with this, but given the rarity of the event, the contingency plan in place, and the low number of users, it is not yet worth paying for.

- [ato] WebDriver is now officially a living standard (https://sny.no/2015/08/living)

- [ato] Rewrote chapter on extending the WebDriver protocol with vendor-specific commands

- [ato] Defined the Get Element CSS Value command in specification

- [ato] Get Element Attribute no longer conflates DOM attributes and properties; introduces new command Get Element Property

- [ato] Several significant infrastructural issues with the specification were fixed

- [AutomatedTester] Created Status page to show how many of the WebDriver endpoints are implemented. See https://developer.mozilla.org/en-US/docs/Mozilla/QA/Marionette/WebDriver/status

- moz.build reading API deployed and enabled on mozilla-central and version-control-tools

- https://mozilla-version-control-tools.readthedocs.org/en/latest/hgmo/mozbuildinfo.html

- This will allow us to do more magic, such as auto filing bugs in the proper component and automatically selecting appropriate reviewers when pushing to MozReview

- http://gregoryszorc.com/blog/2015/08/04/hg.mozilla.org-operational-workings-now-open-sourced/

- Project managers for FxOS have a renewed interest in the project tracking, and overall status dashboards. Talk only, no coding yet.

https://jagriffin.wordpress.com/2015/08/13/engineering-productivity-update-august-13-2015/

|

|

Jonas Finnemann Jensen: Getting Started with TaskCluster APIs (Interactive Tutorials) |

When we started building TaskCluster about a year and a half ago one of the primary goals was to provide a self-serve experience, so people could experiment and automate things without waiting for someone else to deploy new configuration. Greg Arndt (:garndt) recently wrote a blog post demystifying in-tree TaskCluster scheduling. The in-tree configuration allows developers to write new CI tasks to run on TaskCluster, and test these new tasks on try before landing them like any other patch.

This way of developing test and build tasks by adding in-tree configuration in a patch is very powerful, and it allows anyone with try access to experiment with configuration for much of our CI pipeline in a self-serve manner. However, not all tools are best triggered from a post-commit hook; instead, it might be preferable to have direct API access when:

- Locating existing builds in our task index,

- Debugging for intermittent issues by running a specific task repeatedly, and

- Running tools for bisecting commits.

To facilitate tools like this, TaskCluster offers a series of well-documented REST APIs that can be accessed with either permanent or temporary TaskCluster credentials. We also provide client libraries for JavaScript (node/browser), Python, Go and Java. However, because TaskCluster is a loosely coupled set of distributed components, it is not always trivial to figure out how to piece together the different APIs and features. To make these things more approachable, I’ve started a series of interactive tutorials:

- Tutorial 1: Modern asynchronous Javascript,

- Tutorial 2: Authentication against TaskCluster, and,

- Tutorial 3: Creating Your First Task

All these tutorials are interactive, featuring a runtime that will transpile your code with babel.js before running it in the browser. The runtime environment also exposes the require function from a browserify bundle containing some of my favorite npm modules, making the example editors a great place to test code snippets using taskcluster or related services.

Happy hacking, and feel free to submit PRs for all my spelling errors at github.com/taskcluster/taskcluster-docs.

http://jonasfj.dk/2015/08/getting-started-with-taskcluster-apis-interactive-tutorials/

|

|

Air Mozilla: Intern Presentations |

7 interns will be presenting what they worked on over the summer. 1. Nate Hughes - HTTP/2 on the Wire Adaptations of the Mozilla platform...

|

|

Air Mozilla: Intern Presentation |

Alice Scarpa will kick off today's Intern Presentations by presenting remotely from Brazil on "Building Tools with Mozci" from 1:40 - 2:00pm PDT: Mozilla Ci...

|

|

Air Mozilla: German speaking community bi-weekly meeting |

https://wiki.mozilla.org/De/Meetings

https://air.mozilla.org/german-speaking-community-bi-weekly-meeting-20150813/

|

|

Michael Kaply: Firefox 40 Breaking Changes for Enterprise |

There are a couple of changes in Firefox 40 that folks need to be aware of. One I've mentioned before, and one I just found out about.

- The distribution/bundles directory is no longer used. As I said in this post, you must get the latest CCK2 and rebuild your AutoConfig distribution for use on Firefox 40. The new distribution you build will work as far back as Firefox 31. Unfortunately, the ability to disable safe mode won't work on Firefox 40 and beyond.

- The searchplugins directory is no longer in browser/searchplugins; it's integrated into the product. If you were using this directory to add or remove engines, it won't work. You can either use the CCK2 or put the engine XML files in distribution/searchplugins/common. See this post.

If you have additional problems, please let me know.

https://mike.kaply.com/2015/08/13/firefox-40-breaking-changes-for-enterprise/

|

|

Air Mozilla: Web QA Weekly Meeting |

This is our weekly gathering of Mozilla's Web QA team filled with discussion on our current and future projects, ideas, demos, and fun facts.

|

|

Air Mozilla: August Brantina with Hiten Shah, Founder, KISSMetrics + Crazy Egg |

August Brantina (breakfast+cantina) featuring Hiten Shah, founder of KISSMetrics & Crazy Egg

https://air.mozilla.org/august-brantina-with-hiten-shah-founder-kissmetrics-crazy-egg/

|

|

QMO: Firefox 41 Beta 3 Testday, August 21st |

I’m writing to let you know that on Friday, August 21st, we’ll be hosting the Firefox 41.0 Beta 3 Testday. The main focus of this event will be Firefox Hello Text Chat and Add-ons Signing. Detailed participation instructions are available in this etherpad.

No previous testing experience is required so feel free to join us on the #qa IRC channel and our moderators will make sure you’ve got everything you need to get started.

Hope to see you all on Friday! Let’s make Firefox better together!

https://quality.mozilla.org/2015/08/firefox-41-beta-3-testday-august-21st/

|

|

Gary Kwong: Multilingual slides in HTML5 Mozilla Sandstone slidedeck |

Edit: You can now preview the changes live. Also, the pull request got accepted!

I just submitted a GitHub PR for adding multilingual support to Mozilla’s HTML5 Sandstone slidedeck. The selection is persistent across slide changes, and in Firefox, the URL bar will update the lang attribute as well.

Pictures are worth thousands of words, so here you go:

To add languages:

- Add the style tags to the stylesheet

- Modify the language menu

- Place your translation within tags with language code class names

Example code:

https://garykwong.wordpress.com/2015/08/12/multilingual-slides-in-html5-mozilla-sandstone-slidedeck/

|

|

Tantek Celik: An Alphabet of IndieWeb Building Blocks: Article to Z |

Rather than stake any claim to being the Alphabet, I decided it would be more useful to document an alphabet of the IndieWeb, choosing a primary IndieWeb building block for each letter A-Z, with perhaps a few secondary and additional useful terms.

- article posts are what built the blogosphere and a classic indieweb post type. audio posts like podcasts are another post type. Most sites have an archive UI. The authorship algorithm is used by consuming sites and readers.

- backfeed is how you reverse syndicate responses on your posts' silo copies back to originals, perhaps using Bridgy, the awesome backfeed-as-a-service proxy. Many indieweb sites publish bookmark posts.

- checkin, collection, and comics are indieweb post types. You should have a create UI and a contact page for personal communication. This post uses a custom post style. IndieWebCamp is a commons with a code-of-conduct.

- design in the IndieWeb is more important than the plumbing of protocols & formats. The delete protocol uses webmentions and HTTP 410 Gone for decentralized deletions. Consider the disclosure UI pattern.

- event, exercise, and edit are all very useful types of IndieWeb posts for decentralized calendaring, personal quantified self (QS) tracking, and peer-to-peer collaboration.

- food posts are also useful for QS. Follow posts can notify an IndieWeb site of a new reader. Use fragmentions to link to particular text in a post or page. Both the facepile UI pattern and file-storage are popular on the IndieWeb.

- generations are a model of different successive populations of indieweb users, each helping the next with ever more user-friendly IndieWeb tools & services to broaden adoption, activism, and advocacy.

- homepage — everyone should have one on their personal domain, that serves valid HTML, preferably using HTTPS. Come to a Homebrew Website Club meetup (every other Wednesday) and get help setting up your own!

- IndieAuth is a key IndieWeb building block, for signing-into the IndieWebCamp wiki & micropub clients. IndieMark & indiewebify.me measure indieweb support. Use invitation posts to invite people to events, and indie-config for webactions.

- jam posts are for posting a specific song that you’re currently into, jamming to, listening to repeatedly, etc. The term “jam” was inspired by thisismyjam.com, a site for posting jams, and listening to jams of those you follow.

- Known is perhaps the most popular IndieWeb-supporting-by-default content posting/management system (CMS) available both as open source to self-host, and as the Withknown.com hosted service.

- like posts can be used to “like” anything on the web, not just posts in silos. Longevity is a key IndieWeb motivator and principle, since silos shut down for many reasons, breaking permalinks and often deleting your content.

- microformats are a simple standard for representing posts, authors, responses etc. to federate content across sites. Micropub is a standard API for posting to the IndieWeb. Marginalia are peer-to-peer responses to parts of posts.

- note posts are the simplest and most fundamental IndieWeb post type; you should implement them on your site and own your tweets. Notifications are a stream of mentions of and responses to you and your posts.

- ownyourdata is a key indieweb incentive, motivation, rallying cry, and hashtag. The excellent OwnYourGram service can automatically PESOS your Instagram photos to photo posts on your own site via micropub.

- posts have permalinks. Use person-tags to tag people in photos. Use POSSE to reach your friends on silos. PESOS only when you must. Use PubSubHubbub for realtime publishing. IndieWeb principles encourage a plurality of projects.

- quotation posts are excerpts of the contents of other posts. The open source Quill posting client guides you through adding micropub support to your site, step-by-step.

- reply is perhaps the second most common type of indieweb post, often with the reply-context UI pattern. Use a repost to share the entirety of another post. An integrated reader feature on your site can replace your silo stream reading.

- stream posts on your homepage, like notes, photos, and articles, perhaps even your scrobble & sleep posts. Selfdogfood your ideas, design, UX, code before asking others to do so. Support salmentions to help pass SWAT0.

- text-first design is a great way to start working on any post type. Use tags to categorize posts, and a tag-reply to tag others’ posts. Use travel posts to record your travel. The timeline page records the evolution of key IndieWeb ideas.

- UX is short for user experience, a primary focus of the IndieWeb, and part of our principle of user-centric design before protocols & formats. Good UI (short for user interface) patterns and URL design are part of UX.

- video posts are often a challenge to support due to different devices & browsers requiring different video formats. The Vouch extension to Webmention can be used to reduce or even prevent spam.

- Webmentions notify sites of responses from other sites. Webactions allow users to respond to posts using their own site. If you use WordPress, install IndieWeb plugins. Woodwind is a nice reader. We encourage wikifying.

- XFN is the (increasingly ironically named) XHTML Friends Network, a spec in wide use for identity and authentication with rel=me, and less frequently for other values like contact, acquaintance, friend, etc.

- YAGNI is short for "You Aren't Gonna Need It" and one of the best ways the IndieWeb community has found to both simplify, and/or debunk & fight off more complex proposals (often from academics or enterprise architects).

- Z time (or Zulu time) is at a zero offset from UTC. Using and storing times in “Z” is a common technique used by indieweb servers and projects to minimize and avoid problems with timezones.
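The Z time entry above can be illustrated with a minimal sketch, assuming a server written in Python that stores timestamps as ISO 8601 strings with a trailing "Z". The helper names `to_z_time` and `from_z_time` are hypothetical and chosen only for this example.

```python
from datetime import datetime, timedelta, timezone

Z_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def to_z_time(moment: datetime) -> str:
    """Convert a timezone-aware datetime to a UTC string ending in 'Z'."""
    if moment.tzinfo is None:
        # A naive datetime has no offset, so converting it would be a guess.
        raise ValueError("expected a timezone-aware datetime")
    return moment.astimezone(timezone.utc).strftime(Z_FORMAT)


def from_z_time(text: str) -> datetime:
    """Parse a string produced by to_z_time back into an aware UTC datetime."""
    return datetime.strptime(text, Z_FORMAT).replace(tzinfo=timezone.utc)


if __name__ == "__main__":
    pdt = timezone(timedelta(hours=-7))  # fixed UTC-7 offset for the example
    local = datetime(2015, 8, 13, 13, 40, tzinfo=pdt)
    print(to_z_time(local))  # 2015-08-13T20:40:00Z
```

Storing only the "Z" form means the local offset is applied once, at display time, instead of being carried through every comparison and sort.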

Background

The idea for an A-Z or alphabet of indieweb stuff came to me when I curated the alphabetically ordered lists of IndieWeb in review 2014: Technologies, etc. from the raw list of new non-redirect pages created in 2014.

I realized I was getting pretty close to a whole alphabet of building blocks. In particular the list of Community Resources has 16 of 26 letters all by itself!

Rather than try to figure out how to expand any of those into the whole alphabet, I decided to keep them focused on key things in 2014, and promptly forgot any thoughts of an alphabet.

Monday, since the topic of conversation in nearly every channel was Google renaming itself to “Alphabet”, I figured why not make an actually useful “alphabet” instead, and braindumped the best IndieWeb term or terms that came to mind for each letter A-Z on the IndieWebCamp wiki.

Subsequently I decided the list would look better if the first letters of each of the words served stylistically as list item indicators, wrote a custom post stylesheet to do so, and rewrote the content accordingly. The colors are from my CSS3 trick for a pride rainbow background, with yellow changed to #ffba03 for readability.

Incidentally, this is the first time I've combined the :nth-child(an+b) and ::first-letter selectors to achieve a particular effect: nth-child with a=6 b=1…6 for the colors, and first-letter for the drop-cap, highlighted in that color. View source to see the scoped style element and CSS rules.
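To make the :nth-child(an+b) arithmetic concrete, here is a small Python sketch of which list items each rule selects when a=6 and b runs from 1 to 6. It models the selector matching only, not the actual stylesheet, and the function names are made up for this illustration.

```python
import string


def matches_nth_child(index: int, a: int, b: int) -> bool:
    """Return True if the 1-based child `index` equals a*n + b for some n >= 0.

    Only the a > 0 case is handled, which is all this example needs.
    """
    if a <= 0:
        raise ValueError("this sketch only handles a > 0")
    return index >= b and (index - b) % a == 0


def color_slot(index: int, cycle: int = 6) -> int:
    """Return the b in 1..cycle whose :nth-child(cycle*n + b) rule matches."""
    return (index - 1) % cycle + 1


if __name__ == "__main__":
    # Letters A-Z stand in for the 26 list items of the alphabet.
    for position, letter in enumerate(string.ascii_uppercase, start=1):
        print(letter, "-> rule b =", color_slot(position))
```

With 26 items and a cycle of six, items A and G share the first color rule, and Z ends up on the second.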

Since I wrote up the initial IndieWeb alphabet on the IndieWebCamp wiki which is CC0, this post (and its custom post styling) is also licensed CC0 to allow the same re-usability.

Thanks in particular to Kartik Prabhu for reviewing, providing some better suggestions, and noting a few absences, as well as feedback from Aaron Parecki, Ben Roberts, and Kevin Marks.

|

|

Andreas Tolfsen: The sorry state of women in tech |

I read the anonymous blog post Your Pipeline Argument is Bullshit with great interest. While it’s not very diplomatic and contains a great deal of ranting, it speaks about something very important: Women in tech. I read it more as an expression of frustration than as the mindless, inflammatory rant others have made it out to be.

On the disproportion of women granted CS degrees in the US:

I use this data because I graduated with a CS degree in 2000. Convenient. The 2000s is also when the proportion of women getting CS degrees started falling even faster. My first job out of school had an older woman working there who let me know back in the 80s there were a lot more women. Turns out, in the early 80s, almost 40% of CS degrees in the US were granted to women.

In 2009, only about 18% of CS graduates were women.

Although purely anecdotal evidence, my experience after working seven years as a professional programmer is that more women than men leave the industry to pursue other careers. In fact the numbers show that the cumulative pool of talent (meaning all who hold CS degrees) is close to 25% women.

We have to recognise what a sorry state our industry is in. Callous and utilitarian focus on technology alone, as opposed to building a healthy discourse and community around computing that is inclusive to women, does more harm than we can imagine.

Because men hire men, and especially those men similar to ourselves, I’m in favour of positive action to balance the numbers.

To whoever says that we should hire based on talent alone: there’s little evidence to suggest that the 25% of available CS talent who are women is any worse than the remaining three quarters.

|

|

Mozilla Addons Blog: Add-ons Update – Week of 2015/08/12 |

I post these updates every 3 weeks to inform add-on developers about the status of the review queues, add-on compatibility, and other happenings in the add-ons world.

Add-ons Forum

As we announced before, there’s a new add-ons community forum for all topics related to AMO or add-ons in general. The old forum is still available in read-only mode, but will be permanently taken down by the end of the month.

The Review Queues

- Most nominations for full review are taking less than 10 weeks to review.

- 330 nominations in the queue awaiting review.

- Most updates are being reviewed within 10 weeks.

- 128 updates in the queue awaiting review.

- Most preliminary reviews are being reviewed within 12 weeks.

- 360 preliminary review submissions in the queue awaiting review.

The unlisted queues aren’t mentioned here, but they are empty for the most part. We’re in the process of getting more help to reduce queue length and waiting times for the listed queues. There are many new volunteer reviewers joining us now, and a part-time contractor who should help us with the longest-waiting add-ons in the queues.

If you’re an add-on developer and would like to see add-ons reviewed faster, please consider joining us. Add-on reviewers get invited to Mozilla events and earn cool gear with their work. Visit our wiki page for more information.

Firefox 40 Compatibility

The Firefox 40 compatibility blog post is up. The automatic compatibility validation was run last weekend. I apologize for the delay; we’re making some changes to ensure that we run them further in advance of the release.

Firefox 41 Compatibility

The compatibility blog post should come up soon.

As always, we recommend that you test your add-ons on Beta and Firefox Developer Edition (formerly known as Aurora) to make sure that they continue to work correctly. End users can install the Add-on Compatibility Reporter to identify and report any add-ons that aren’t working anymore.

Extension Signing

We announced that we will require extensions to be signed in order for them to continue to work in release and beta versions of Firefox. The wiki page on Extension Signing has information about the timeline, as well as responses to some frequently asked questions.

The new add-on installation UI and signature warnings are now enabled in release versions of Firefox. Signature enforcement will be turned on in 41 (with a pref to disable it), and the pref will be removed in 42. The wiki page has all the details.
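The timeline above can be summarized as a small decision function. This is only a sketch of the schedule as stated in this update for release and beta versions, not how Firefox implements it; the function and parameter names are hypothetical, and the wiki page remains the authoritative source.

```python
def signature_enforced(firefox_version: int, pref_disables_enforcement: bool = False) -> bool:
    """Return True if unsigned extensions would be blocked under the stated plan.

    - before 41: warnings only, no enforcement
    - 41: enforcement on, but a pref can turn it off
    - 42 and later: enforcement on, pref removed
    """
    if firefox_version >= 42:
        return True  # the pref no longer exists, so it cannot disable anything
    if firefox_version == 41:
        return not pref_disables_enforcement
    return False


if __name__ == "__main__":
    for version in (40, 41, 42):
        for pref_off in (False, True):
            print(version, pref_off, signature_enforced(version, pref_off))
```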

Electrolysis

Electrolysis, also known as e10s, is the next major compatibility change coming to Firefox. In a nutshell, Firefox will now run in multiple processes, running content code in a different process than browser code. This should improve responsiveness and overall stability, but it also means many add-ons will need to be updated to support this.

We will be talking more about these changes in this blog in the future. We recommend you look at the available documentation and adapt your add-on code as soon as possible.

https://blog.mozilla.org/addons/2015/08/12/add-ons-update-69/

|

|

Air Mozilla: Product Coordination Meeting |

Duration: 10 minutes This is a weekly status meeting, every Wednesday, that helps coordinate the shipping of our products (across 4 release channels) in order...

https://air.mozilla.org/product-coordination-meeting-20150812/

|

|

Weekly Mozilla Reps call
