Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Air Mozilla: Mozilla Weekly Project Meeting |

The Monday Project Meeting

https://air.mozilla.org:443/mozilla-weekly-project-meeting-20150504/

|

|

Mozilla Science Lab: Mozilla Science Lab Week in Review, April 27 – May 3 |

The Week in Review is our weekly roundup of what’s new in open science from the past week. If you have news or announcements you’d like passed on to the community, be sure to share on Twitter with @mozillascience and @billdoesphysics, or join our mailing list and get in touch there.

Awards & Grants

- Applications for the PLOS Early Career Travel Award Program are now open; ten $500 awards are available to help early career researchers publishing in PLOS attend meetings and conferences to present their work.

Tools & Resources

- EIFL has released the FOSTER (Facilitate Open Science Training for European Research) Toolkit for training sessions, a comprehensive review, analysis and strategy document for conducting open science training for students, policy makers, librarians, project managers and instructor-trainers.

Blogs & Papers

- A study led by the Center for Open Science that attempted to replicate the findings of 100 journal articles in psychology has concluded, with data posted online; 39 of the articles investigated were reproduced, with substantial similarities found in several dozen more.

- The Joint Research Centre of the European Commission has released an interim report on its ongoing work in ‘Analysis of emerging reputation mechanisms for scholars’; the report maps an ontology of research-related activities onto the reputation-building activities that attempt to capture them, and reviews the social networks that attempt to facilitate this construction of reputation on the web.

- Alyssa Goodman et al. published Ten Simple Rules for the Care and Feeding of Scientific Data in PLOS Computational Biology. In it, the authors touch not only on raw data, but also on the importance of permanent identifiers for it, and on the context provided by publishing workflows in addition to code.

- David Takeuchi wrote about his concerns that the American federal government’s proposed FIRST act will curtail funding for the social sciences, and place too much emphasis on perceived relevance at the expense of reproducibility.

- The Georgia Tech Computational Linguistics Lab blogged about the results of a recent graduate seminar where students were set to reproducing the results of several papers in computational social science. The author makes several observations on the challenges faced, including the difficulties in reproducing results based on social network or other proprietary information, and on the surprising robustness of machine-learning driven analyses.

- Cobi Smith examined both the current state and future importance of open government data in Australia.

Meetings & Conferences

- Submissions for the Workshop on Digital Scientific Communication (Poznań, Poland, 18 September) are open until 5 July. The workshop hopes to explore revising scientific communication to better facilitate the discovery of existing research results.

- The Advancing Research Communication & Scholarship (ARCS) Conference was this week; the Science Lab’s own director Kaitlin Thaney spoke on contributorship badges for science.

http://mozillascience.org/mozilla-science-lab-week-in-review-april-27-may-3/

|

|

Mike Conley: Electrolysis and the Big Tab Spinner of Doom |

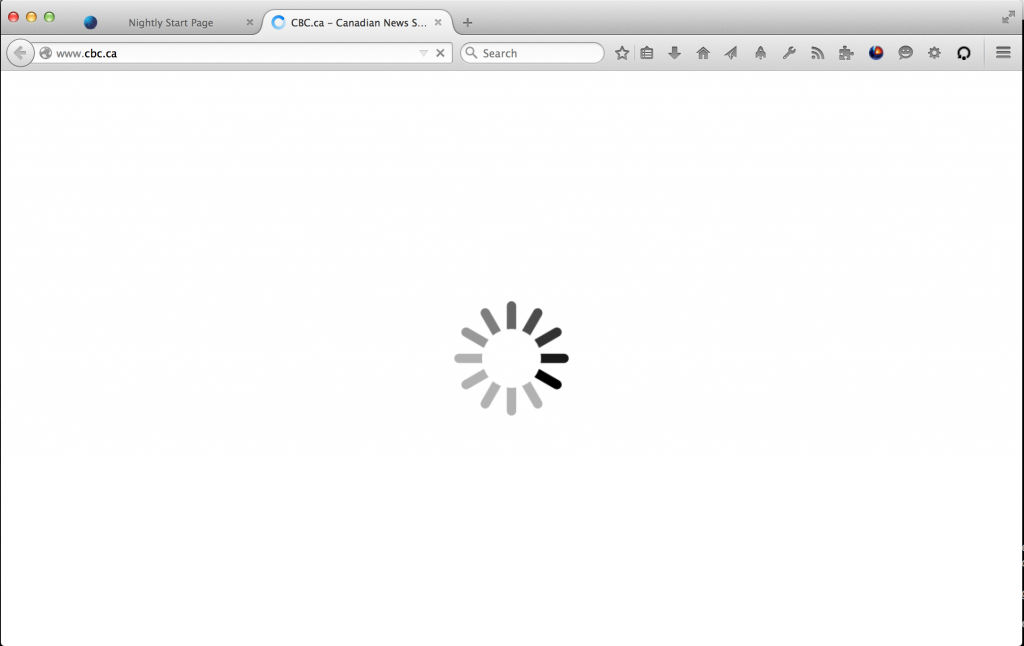

Have you been using Firefox Nightly and seen this big annoying spinner?

I hate that thing. I hate it.

And while we’re working on making the spinner itself less ugly, I’d like to eliminate, or at least reduce its presence to the absolute minimum.

How do I do that? Well, first, know your enemy.

What does it even mean?

That big spinner means that the graphics part of Gecko hasn’t given us a frame yet to paint for this browser tab. That means we have nothing yet to show for the tab you’ve selected.

In the single-process Firefox that we ship today, this graphics operation of preparing a frame is something that Firefox will block on, so the tab will just not switch until the frame is ready. In fact, I’m pretty sure the whole browser will become unresponsive until the frame is ready.

With Electrolysis / multi-process Firefox, things are a bit different. The main browser process tells the content process, “Hey, I want to show the content associated with the tab that the user just selected”, and the content process computes what should be shown, and when the frame is ready, the parent process hears about it and the switch is complete. During that waiting time, the rest of the browser is still responsive – we do not block on it.

So there’s this window of time between when the tab switch is requested and when the frame is ready.

During that window of time, we keep showing the currently selected tab. If, however, 300ms passes, and we still haven’t gotten a frame to paint, that’s when we show the big spinner.

So that’s what the big spinner means – we waited 300ms, and we still have no frame to draw to the screen.
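The rule described above can be written down as a tiny Python sketch. This is not Firefox code: the function name `should_show_spinner` and its arguments are hypothetical, and only the 300ms threshold comes from the post. Treating exactly 300ms as "passed" is also an assumption.

```python
SPINNER_DELAY_MS = 300  # threshold quoted in the post


def should_show_spinner(elapsed_ms: int, frame_ready: bool) -> bool:
    """Return True once the wait has reached the threshold with no frame.

    elapsed_ms is the (hypothetical) time since the tab switch was
    requested; frame_ready says whether the content process has
    delivered a frame to paint yet.
    """
    return not frame_ready and elapsed_ms >= SPINNER_DELAY_MS


print(should_show_spinner(100, False))  # still inside the waiting window
print(should_show_spinner(300, False))  # threshold reached, no frame yet
print(should_show_spinner(500, True))   # frame arrived, nothing to wait for
```

While the function returns False and no frame has arrived, the previously selected tab simply stays on screen.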

How bad is it?

I suspect it varies. I see the spinner a lot less on my Windows machine than on my MacBook, so I suspect that performance is somehow worse on OS X than on Windows. But that’s purely subjective. We’ve recently landed some Telemetry probes to try to get a better sense of how often the spinner is showing up, and how laggy our tab switching really is. Hopefully we’ll get some useful data out of that, and as we work to improve tab switch times, we’ll see improvement in our Telemetry numbers as well.

Where is the badness coming from?

This is still unclear. And I don’t think it’s a single thing – many things might be causing this problem. Anything that blocks up the main thread of the content process, like slow JavaScript running on a web-site, can cause the spinner.

I also seem to see the spinner when I have “many” tabs open (~30), and have a build going on in the background (so my machine is under heavy load).

Maybe we’re just doing things inefficiently in the multi-process case. I recently landed profile markers for the Gecko Profiler for async tab switching, to help figure out what’s going on when I experience slow tab switch. Maybe there are optimizations we can make there.

One thing I’ve noticed is that there’s this function in the graphics layer, “ClientTiledLayerBuffer::ValidateTile”, that takes much, much longer in the content process than in the single-process case. I’ve filed a bug on that, and I’ll ask folks from the Graphics Team this week.

How you can help

If you’d like to help me find more potential causes, Profiles are very useful! Grab the Gecko Profiler add-on, make sure it’s enabled, and then dump a profile when you see the big spinner of doom. The interesting part will be between two markers, “AsyncTabSwitch:Start” and “AsyncTabSwitch:Finish”. There are also markers for when the parent process displays the spinner – “AsyncTabSwitch:SpinnerShown” and “AsyncTabSwitch:SpinnerHidden”. The interesting stuff, I believe, will be in the “Content” section of the profile between those markers. Here are more comprehensive instructions on using the Gecko Profiler add-on.

And here’s a video of me demonstrating how to use the profiler, and how to attach a profile to the bug where we’re working on improving tab switch times:

And here’s the link I refer you to in the video for getting the add-on.

So hopefully we’ll get some useful data, and we can drive instances of this spinner into the ground.

I’d really like that.

http://mikeconley.ca/blog/2015/05/04/electrolysis-and-the-big-tab-spinner-of-doom/

|

|

Laura Hilliger: Open Web Leadership |

Over the last couple of weeks, we’ve been talking about an organizing structure for future (and current) Teach Like Mozilla content and curriculum. This stream of curriculum is aimed at helping leaders gain the competencies and skills needed for teaching, organizing and sustaining learning for the web. We’ve been short-handing this work “Open Fluency” after I wrote a post about the initial thinking.

Last week, in our biweekly community call, we talked about the vision for our call. In brief, we want to:

“Work together to define leadership competencies and skills, as well as provide ideas and support to our various research initiatives.”

We decided to change the naming of this work to “Open Web Leadership”, with a caveat that we might find a better name sometime in the future. We discussed leadership in the Mozilla context and took some notes on what we view as “leadership” in our community. We talked about the types of leadership we’ve seen within the community, noted that we’ve seen all sorts, and, in particular, had a lengthy conversation about people confusing management with leadership.

We decided that as leaders in the Mozilla Community, we want to be collaborative, effective, supported, and compassionate about people’s real-life situations. We want to inspire inquiry and exploration and ensure that our community can make independent decisions and take ownership. We want to be welcoming and encouraging, and we are especially interested in making sure that as leaders, we encourage new leaders to come forward, grow and participate.

I believe it was Greg who wrote in the call etherpad:

“Open Web Leaders engage in collaborative design while serving as a resource to others as we create supportive learning spaces that merge multiple networks, communities, and goals.”

Next, we discussed what people need to feel ownership and agency here in the Mozilla community. People expressed some love for the type of group work we’re doing with Open Web Leadership, pointing out that working groups that make decisions together fuel their own participation. It was pointed out that the chaos of the Mozilla universe should be a forcing function for creating on-boarding materials for getting involved, and that a good leader:

“Makes sure everyone “owns” the project”

There’s a lot in that statement. Giving ownership and agency to your fellow community members requires open and honest communication, not one time but constantly. No matter how much we SAY it, our actions (or lack of action) color how people view the work (as well as each other).

After talking about leadership, we added the progressive “ing” form to the verbs we’re using to designate each Open Web Leadership strand. I think this was a good approach as to me it signifies that understanding, modeling and uniting to TeachTheWeb are ongoing and participatory practices. Or, said another way, lifelong learning FTW! Our current strands are:

- Understanding Participatory Learning (what you need to know)

- Modeling Processes and Content (how you wield what you know)

- Uniting Locally and Globally (why you wield what you know)

We established a need for short, one line descriptors on each strand, and decided that the competency “Open Thinking” is actually a part of “Open Practices”. We’ll refine and further develop this in future calls!

As always, you’re invited to participate. There are tons of thought provoking Github issues you can dive into (coding skills NOT required), and your feedback, advice, ideas and criticisms are all welcome.

|

|

Daniel Stenberg: HTTP/2 in curl, status update |

I’m right now working on adding proper multiplexing to libcurl’s HTTP/2 code. So far we’ve only done a single stream per connection and while that works fine and is HTTP/2, applications will still want more when switching to HTTP/2 as the multiplexing part is one of the key components and selling features of the new protocol version.

Pipelining means multiplexed

As a starting point, I’m using the “enable HTTP pipelining” switch to tell libcurl it should consider multiplexing. It makes libcurl work as before by default. If you use the multi interface and enable pipelining, libcurl will try to re-use established connections and just add streams over them rather than creating new connections. Yes, this means that A) you need to use the multi interface to get the full HTTP/2 stuff and B) the curl tool won’t be able to take advantage of it since it doesn’t use the multi interface! (An old outstanding idea is to move the tool to use the multi interface, and this would be yet another reason to do so.)

We still have some decisions to make about how we want libcurl to act by default, especially when we can expect applications to use both HTTP/1.1 and HTTP/2 at the same time. Since we don’t know if the server supports HTTP/2 until after a certain point in the negotiation, we need to decide what to do when we issue N transfers at once to the same server that might speak HTTP/2… Right now, we get the best HTTP/2 behavior by telling libcurl we only want one connection per host, but that is probably not ideal for an application that might use a mix of HTTP/1.1 and HTTP/2 servers.

Downsides with abusing pipelining

There are some drawbacks with using that pipelining switch to allow multiplexing since users may very well want HTTP/2 multiplexing but not HTTP/1.1 pipelining since the latter is just riddled with interop problems.

Also, re-using the same options for limited connections to host names etc for both HTTP/1.1 and HTTP/2 may not at all be what real-world applications want or need.

One easy handle, one stream

API-wise, each HTTP/2 stream is its own easy handle in libcurl. That keeps things simple and keeps the API paradigm very much the same as for all the other protocols, which should come very naturally to the libcurl application author. If you set up three easy handles, all identifying a resource on the same server, and you tell libcurl to use HTTP/2, it makes perfect sense that all three transfers are made using a single connection.

Multiplexing means that when reading from the socket, the data arriving may belong to streams other than the one being read. So we need to feed the received data into the different “data buckets” for the involved streams. It gives us a little internal challenge: we get easy handles with no socket activity to trigger a read, but there is data to take care of in the incoming buffer. I’ve solved this so far with a special trigger that says there is data to take care of, so the handle should do a read anyway, which then gets the data from the buffer.
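To illustrate the “data buckets” idea, here is a small Python sketch. It is not libcurl code: the `(stream_id, payload)` tuples and the `demultiplex` function are made up for illustration, and real HTTP/2 framing carries a good deal more than this.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def demultiplex(frames: Iterable[Tuple[int, bytes]]) -> Dict[int, bytes]:
    """Append each frame's payload to the bucket of the stream it belongs to."""
    buckets: Dict[int, bytearray] = defaultdict(bytearray)
    for stream_id, payload in frames:
        buckets[stream_id] += payload
    return {stream_id: bytes(data) for stream_id, data in buckets.items()}


# One read from the socket may carry data for several streams, interleaved.
incoming = [(1, b"<html>"), (3, b"body{"), (1, b"</html>"), (3, b"}")]
per_stream = demultiplex(incoming)
print(per_stream)
```

A stream whose bucket was filled this way saw no socket activity of its own, which is presumably why an extra trigger is needed to make its handle read from the buffer.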

Server push

HTTP/2 supports server push. That’s a stream that gets initiated from the server side without the client specifically asking for it. A resource the server deems likely that the client wants since it asked for a related resource, or similar. My idea is to support server push with the application setting up a transfer with an easy handle and associated options, but the URL would only identify the server so that it knows on which connection it would accept a push, and we will introduce a new option to libcurl that would tell it that this is an easy handle that should be used for the next server pushed stream on this connection.

Of course there are a few outstanding issues with this idea. Possibly we should allow an easy handle to get created when a new stream shows up so that we can better deal with a dynamic number of new streams being pushed.

It’d be great to hear from users who have ideas on how to use server push in a real-world application and how you’d imagine it could be used with libcurl.

Work in progress code

My work in progress code for this drive can be found in two places.

First, I do the libcurl multiplexing development in the separate http2-multiplex branch in the regular curl repo:

https://github.com/bagder/curl/tree/http2-multiplex.

Then, I put all my test setup and test client work in a separate repository just in case you want to keep up and reproduce my testing and experiments:

https://github.com/bagder/curl-http2-dev

Feedback?

All comments, questions, praise or complaints you may have on this are best sent to the curl-library mailing list. If you are planning on writing an HTTP/2 capable application or otherwise have thoughts or ideas about the API for this, please join in and tell me what you think. It is much better to get the discussions going early and work on different design ideas now, before anything is set in stone, rather than waiting for us to ship something semi-stable: the closer to an actual release we get, the harder it’ll be to change the API.

Not quite working yet

As I write this, I’m repeatedly doing 99 parallel HTTP/2 streams with no data corruption… But there’s a lot more to be done before I’ll call it a victory.

http://daniel.haxx.se/blog/2015/05/04/http2-in-curl-status-update/

|

|

Andy McKay: TFSA |

In the budget the Conservatives increased the TFSA allowance from $5,500 to $10,000. This was claimed to be:

11 million Cdns use Tax-Free Savings Accounts. #Budget2015 will increase limit from $5500 to $10000. Help for low and middle income Cdns.

"Low income", really? According to Revenue Canada, most people are not maxing out their TFSA room. In fact, since 2009 the amount of unused contribution has been growing each year.

| Year | Average unused contribution | Change |

|---|---|---|

| 2009 | $1,156.29 | |

| 2010 | $3,817.25 | $2,660.96 |

| 2011 | $6,692.37 | $2,875.12 |

| 2012 | $9,969.19 | $3,276.83 |

People are having trouble keeping up with TFSA contributions as it is. But what's low income? It depends on how you define it; there are a few ways.

LICO is an income threshold below which a family will likely devote a larger share of its income to the necessities of food, shelter and clothing than an average family would

And "Thus for 2011, the 1992 based after-tax LICO for a family of four living in an community with a population between 30,000 and 99,999 is $30,487, expressed in current dollars.". That is after tax.

By definition, over 50% of such a family's income goes to food, shelter and clothing, meaning that there's $15,243 left. Maybe: these are all averages, and many people will be way, way worse off.

Is $10,000 in TFSA reasonable for families who have less than $15,243 a year? No. It benefits people with more money and the ability to save. Further we can see that the actual amount of money going into TFSA has been dropping every year since its creation and the unused contribution has been growing.
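The arithmetic behind these figures can be re-checked with a short Python sketch. The numbers are copied from the table and the LICO quote above; the variable names are only for illustration.

```python
from decimal import Decimal

# Average unused contribution per year, copied from the table above.
unused = {
    2009: Decimal("1156.29"),
    2010: Decimal("3817.25"),
    2011: Decimal("6692.37"),
    2012: Decimal("9969.19"),
}

# Year-over-year change in the average unused contribution.
years = sorted(unused)
changes = {year: unused[year] - unused[prev] for prev, year in zip(years, years[1:])}

# After-tax LICO quoted in the post, and the half left after necessities.
lico = Decimal("30487")
left_over = lico / 2

for year, change in changes.items():
    print(year, change)
print("left over:", left_over)
```

Computed from the rounded averages, the 2012 change comes out one cent below the table's $3,276.83, presumably because the table was derived from unrounded figures. Half of $30,487 is $15,243.50, which matches the post's $15,243.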

There isn't really a good justification for increasing the TFSA except as a way of helping the rich just before the election.

|

|

Mike Conley: Things I’ve Learned This Week (April 27 – May 1, 2015) |

Another short one this week.

You can pass DOM Promises back through XPIDL

XPIDL is what we use to define XPCOM interfaces in Gecko. I think we’re trying to avoid XPCOM where we can, but sometimes you have to work with pre-existing XPCOM interfaces, and, well, you’re just stuck using it unless you want to rewrite what you’re working on.

What I’m working on lately is nsIProfiler, which is the interface to “SPS”, AKA the Gecko Profiler. nsIProfiler allows me to turn profiling on and off with various features, and then retrieve those profiles to send to a file, or to Cleopatra.

What I’ve been working on recently is Bug 1116188 – [e10s] Stop using sync messages for Gecko profiler, which will probably have me adding new methods to nsIProfiler for async retrieval of profiles.

In the past, doing async stuff through XPCOM / XPIDL has meant using (or defining a new) callback interface which can be passed as an argument to the async method.

I was just about to go down that road, when ehsan (or was it jrmuizel? One of them, anyhow) suggested that I just pass a DOM Promise back.

I find that Promises are excellent. I really like them, and if I could pass a Promise back, that’d be incredible. But I had no idea how to do it.

It turns out that if I can ensure that the async methods are called such that there is a JS context on the stack, I can generate a DOM Promise, and pass it back to the caller as an “nsISupports”. According to ehsan, XPConnect will do the necessary magic so that the caller, upon receiving the return value, doesn’t just get this opaque nsISupports thing, but an actual DOM Promise. This is, I believe, because DOM Promise is defined via WebIDL. I think. I can’t say I fully understand the mechanics of XPConnect, but this all sounded wonderful.

I even found an example in our new Service Worker code:

From dom/workers/ServiceWorkerManager.cpp (I’ve edited the method to highlight the Promise stuff):

    // If we return an error code here, the ServiceWorkerContainer will
    // automatically reject the Promise.
    NS_IMETHODIMP
    ServiceWorkerManager::Register(nsIDOMWindow* aWindow,
                                   nsIURI* aScopeURI,
                                   nsIURI* aScriptURI,
                                   nsISupports** aPromise)
    {
      AssertIsOnMainThread();

      // XXXnsm Don't allow chrome callers for now, we don't support chrome
      // ServiceWorkers.
      MOZ_ASSERT(!nsContentUtils::IsCallerChrome());

      nsCOMPtr<nsPIDOMWindow> window = do_QueryInterface(aWindow);

      // ...

      nsCOMPtr<nsIGlobalObject> sgo = do_QueryInterface(window);
      ErrorResult result;
      nsRefPtr<Promise> promise = Promise::Create(sgo, result);
      if (result.Failed()) {
        return result.StealNSResult();
      }

      // ...

      nsRefPtr<ServiceWorkerResolveWindowPromiseOnUpdateCallback> cb =
        new ServiceWorkerResolveWindowPromiseOnUpdateCallback(window, promise);

      nsRefPtr<ServiceWorkerRegisterJob> job =
        new ServiceWorkerRegisterJob(queue, cleanedScope, spec, cb, documentPrincipal);
      queue->Append(job);

      promise.forget(aPromise);
      return NS_OK;
    }

Notice that the outparam aPromise is an nsISupports**, and yet, I do believe the caller will end up handling a DOM Promise. Wicked!
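For readers less familiar with the two styles being contrasted, here is a loose analogy in Python rather than Gecko code. The function names are hypothetical, and `asyncio.Future` merely stands in for a DOM Promise; nothing here reflects how XPConnect or nsIProfiler actually work.

```python
import asyncio
from typing import Callable, List


def get_profile_with_callback(on_done: Callable[[str], None]) -> None:
    """Callback style: the caller passes in something to be notified."""
    on_done("profile-data")


def get_profile_as_future(loop: asyncio.AbstractEventLoop) -> "asyncio.Future[str]":
    """Promise style: the method hands back an object that resolves later."""
    future = loop.create_future()
    # Resolve on a later turn of the event loop, as an async operation would.
    loop.call_soon(future.set_result, "profile-data")
    return future


async def main() -> str:
    loop = asyncio.get_running_loop()
    return await get_profile_as_future(loop)


collected: List[str] = []
get_profile_with_callback(collected.append)
result = asyncio.run(main())
print(collected, result)
```

The second form needs no dedicated callback interface, which seems to be the appeal described in the post.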

http://mikeconley.ca/blog/2015/05/02/things-ive-learned-this-week-april-27-may-1-2015/

|

|

Mike Conley: The Joy of Coding (Ep. 12): Making “Save Page As” Work |

After giving some updates on the last bug we were working on together, I started a new bug: Bug 1128050 – [e10s] Save page as… doesn’t always load from cache. The problem here is that if the user were to reach a page via a POST request, attempting to save that page from the Save Page item in the menu would result in silent failure[1].

Luckily, the last bug we were working on was related to this – we had a lot of context about cache keys swapped in already.

The other important thing to realize is that fixing this bug is a bandage fix, or a wallpaper fix. I don’t think those are official terms, but it’s what I use. Basically, we’re fixing a thing with the minimum required effort because something else is going to fix it properly down the line. So we just need to do what we can to get the feature to limp along until such time as the proper fix lands.

My proposed solution was to serialize an nsISHEntry on the content process side, deserialize it on the parent side, and pass it off to nsIWebBrowserPersist.

So did it work? Watch the episode and find out!

I also want to briefly apologize for some construction noise during the video – I think it occurs somewhere halfway through minute 20 of the video. It doesn’t last long, I promise!

References

Bug 1128050 – [e10s] Save page as… doesn’t always load from cache – Notes

[1] Well, it’d show something in the Browser Console, but for a typical user, I think that’s still a silent failure.

http://mikeconley.ca/blog/2015/05/02/the-joy-of-coding-ep-12-making-save-page-as-work/

|

|

Mozilla Release Management Team: Firefox 38 beta8 to beta9 |

In this beta, 16 changesets are test-only or NPOTB changes. Besides those patches, we took graphics fixes, stability improvements and polish fixes.

- 38 changesets

- 87 files changed

- 713 insertions

- 287 deletions

| Extension | Occurrences |

|---|---|

| h | 22 |

| cpp | 14 |

| js | 12 |

| cc | 6 |

| html | 4 |

| txt | 2 |

| py | 2 |

| jsm | 2 |

| ini | 2 |

| xml | 1 |

| sh | 1 |

| list | 1 |

| java | 1 |

| css | 1 |

| c | 1 |

| build | 1 |

| Module | Occurrences |

|---|---|

| ipc | 13 |

| dom | 11 |

| js | 10 |

| browser | 8 |

| media | 5 |

| image | 5 |

| gfx | 5 |

| mobile | 3 |

| layout | 3 |

| toolkit | 2 |

| mfbt | 2 |

| xpcom | 1 |

| uriloader | 1 |

| tools | 1 |

| testing | 1 |

| services | 1 |

| python | 1 |

| parser | 1 |

| netwerk | 1 |

| mozglue | 1 |

| docshell | 1 |

| config | 1 |

List of changesets:

| Ryan VanderMeulen | Bug 1062496 - Disable browser_aboutHome.js on OSX 10.6 debug. a=test-only - 657cfe2d4078 |

| Ryan VanderMeulen | Bug 1148224 - Skip timeout-prone subtests in mediasource-duration.html on Windows. a=test-only - 82de02ddde1b |

| Ehsan Akhgari | Bug 1095517 - Increase the timeout of browser_identity_UI.js. a=test-only - 611ca5bd91d4 |

| Ehsan Akhgari | Bug 1079617 - Increase the timeout of browser_test_new_window_from_content.js. a=test-only - 1783df5849c7 |

| Eric Rahm | Bug 1140537 - Sanity check size calculations. r=peterv, a=abillings - a7d6b32a504c |

| Hiroyuki Ikezoe | Bug 1157985 - Use getEntriesByName to search by name attribute. r=qdot, a=test-only - 55b58d5184ce |

| Morris Tseng | Bug 1120592 - Create iframe directly instead of using setTimeout. r=kanru, a=test-only - a4f506639153 |

| Gregory Szorc | Bug 1128586 - Properly look for Mercurial version. r=RyanVM, a=NPOTB - 49abfe1a8ef8 |

| Gregory Szorc | Bug 1128586 - Prefer hg.exe over hg. r=RyanVM, a=NPOTB - a0b48af4bb54 |

| Shane Tomlinson | Bug 1146724 - Use a SendingContext for WebChannels. r=MattN, r=markh, a=abillings - 56d740d0769f |

| Brian Hackett | Bug 1138740 - Notify Ion when changing a typed array's data pointer due to making a lazy buffer for it. r=sfink, a=sledru - e1fb2a5ab48d |

| Seth Fowler | Bug 1151309 - Part 1: Block until the previous multipart frame is decoded before processing another. r=tn, a=sledru - 046c97d2eb23 |

| Seth Fowler | Bug 1151309 - Part 2: Hide errors in multipart image parts both visually and internally. r=tn, a=sledru - 0fcbbecc843d |

| Alessio Placitelli | Bug 1154518 - Make sure extended data gathering (Telemetry) is disabled when FHR is disabled. r=Gijs, a=sledru - cb2725c612b2 |

| Bas Schouten | Bug 1151821 - Make globalCompositeOperator work correctly when a complex clip is pushed. r=jrmuizel, a=sledru - 987c18b686eb |

| Bas Schouten | Bug 1151821 - Test whether simple canvas globalCompositeOperators work when a clip is set. r=jrmuizel, a=sledru - 1bbb50c6a494 |

| Bob Owen | Bug 1087565 - Verify the child process with a secret hello on Windows. r=dvander, a=sledru - c1f04200ed98 |

| Randell Jesup | Bug 1157766 - Mismatched DataChannel initial channel size in JSEP database breaks adding channels. r=bwc, a=sledru - a8fb9422ff13 |

| David Major | Bug 1130061 - Block version 1.5 of vwcsource.ax. r=bsmedberg, a=sledru - 053da808c6d9 |

| Martin Thomson | Bug 1158343 - Temporarily enable TLS_RSA_WITH_AES_128_CBC_SHA for WebRTC. r=ekr, a=sledru - d10817faa571 |

| Margaret Leibovic | Bug 1155083 - Properly hide reader view tablet on landscape tablets. r=bnicholson, a=sledru - f7170ad49667 |

| Steve Fink | Bug 1136309 - Rename the spidermonkey build variants. r=terrence, a=test-only - 604326355be0 |

| Mike Hommey | Bug 1142908 - Avoid arm simulator builds being considered cross-compiled. r=sfink, a=test-only - 517741a918b0 |

| Jan de Mooij | Bug 1146520 - Fix some minor autospider issues on OS X. r=sfink, a=test-only - 620cae899342 |

| Steve Fink | Bug 1146520 - Do not treat osx arm-sim as a cross-compile. a=test-only - a5013ed3d1f0 |

| Steve Fink | Bug 1135399 - Timeout shell builds. r=catlee, a=test-only - b6bf89c748b7 |

| Steve Fink | Bug 1150347 - Fix autospider.sh --dep flag name. r=philor, a=test-only - b8f7eabd31b9 |

| Steve Fink | Bug 1149476 - Lengthen timeout because we are hitting it with SM(cgc). r=me (also jonco for a more complex version), a=test-only - 16c98999de0b |

| Chris Pearce | Bug 1136360 - Backout 3920b67e97a3 to fix A/V sync regressions (Bug 1148299 & Bug 1157886). r=backout a=sledru - 4ea8cdc621e8 |

| Patrick Brosset | Bug 1153463 - Intermittent browser_animation_setting_currentTime_works_and_pauses.js. r=miker, a=test-only - c31c2a198a71 |

| Andrew McCreight | Bug 1062479 - Use static strings for WeakReference type names. r=ehsan, a=sledru - 5d903629f9bd |

| Michael Comella | Bug 1152314 - Duplicate action bar configuration in code. r=liuche, a=sledru - cdfd06d73d17 |

| Ethan Hugg | Bug 1158627 - WebRTC return error if GetEmptyFrame returns null. r=jesup, a=sledru - f1cd36f7e0e1 |

| Jeff Muizelaar | Bug 1154703 - Avoid using WARP if nvdxgiwrapper.dll is around. a=sledru - 348c2ae68d50 |

| Shu-yu Guo | Bug 1155474 - Consider the input to MThrowUninitializedLexical implicitly used. r=Waldo, a=sledru - daaa2c27b89f |

| Jean-Yves Avenard | Bug 1149605 - Avoid potential integers overflow. r=kentuckyfriedtakahe, a=abillings - fcfec0caa7be |

| Ryan VanderMeulen | Backed out changeset daaa2c27b89f (Bug 1155474) for bustage. - 0a1accb16d39 |

| Shu-yu Guo | Bug 1155474 - Consider the input to MThrowUninitializedLexical implicitly used. r=Waldo, a=sledru - ff65ba4cd38a |

http://release.mozilla.org/statistics/38/2015/05/02/fx-38-b8-to-b9.html

|

|

Christian Heilmann: Start of my very busy May speaking tour and lots of //build videos to watch |

I am currently in the Heathrow airport lounge on the first leg of my May presenting tour. Here is what lies ahead for me (with various interchanges in other countries in between to get from one to the other):

- 02-07/05/2015 – Mountain View, California for Spartan Summit (Microsoft Edge now)

- 09/05/2015 – Tirana, Albania for Oscal (opening keynote)

- 11/05/2015 – Düsseldorf, Germany for Beyond Tellerand

- 13-14/05/2015 – Verona, Italy – JSDay (opening keynote)

- 15/05/2015 – Thessaloniki, Greece – DevIt (opening keynote)

- 18/05/2015 – Amsterdam, The Netherlands – PhoneGap Day (MC)

- 27/05/2015 – Copenhagen, Denmark – At The Frontend

- 29/05/2015 – Prague, Czech Republic – J and Beyond

I will very likely be too busy to answer a lot of requests this month, and if you meet me, I might be disheveled and unkempt – I never have more than a day in a hotel. The good news is that I have written 3 of these talks so far.

To while away the time on planes with my laptop being flat, I just downloaded lots of videos from build to watch (you can do that on each of these pages, just do the save-as), so I am up to speed with that. Here’s my list, in case you want to do the same:

- What’s New in JavaScript for Fast and Scalable Apps

- Getting Great Performance Out of Cordova Apps

- Building Consumer and Enterprise Device Solutions with Windows 10 IoT

- Developing Audio and Video Apps

- Deploying Complex Open Source Workloads on Azure

- Vision APIs: Understanding Images in Your App

- Case Studies of HoloLens App Development

- Building a Single-Page App Using Angular and TypeScript Using Office 365 APIs

- Windows for Makers: Raspberry Pi 2, Arduino and More

- JavaScript Frameworks in Your Apps and Sites from WinJS and Beyond

- Cortana and Speech Platform In Depth

- Building Office Add-ins using Node.JS

- Getting Started with Cross-Platform Mobile Development with Apache Cordova

- Microsoft Edge (discussion round video)

- iOS and Android Apps with Office 365

- “PROJECT ASTORIA“: Build Great Windows Apps with Your Android Code

- Visual Studio Code: A Deep Dive on the Redefined Code Editor for OS X, Linux and Windows

- Using Git in Visual Studio

- Hosted Web Apps and Web Platform Innovations

- Python and Node.js: Microsoft’s Best Kept Secrets

- “Microsoft Edge”: Introducing the New Browser and Web App Platform for Windows 10

- What’s New in F12 for Microsoft Edge

- Building Accessible Universal Windows Apps

|

|

Morgan Phillips: To Serve Developers |

Lately, I've become very interested in improving the developer experience by bringing our CI infrastructure closer to contributors. In short, I would like developers to have access to the same environments that we use to test/build their code. This will make it:

- easier to run tests locally

- easier to set up a dev environment

- easier to reproduce bugs (especially environment dependent bugs)

[The release pipeline from 50,000ft]

The first part of my plan revolves around integrating release engineering's CI system with a tool that developers are already using: mach. I'm starting with a utility called mozbootstrap, a system that detects its host operating system and invokes a package manager to install all of the libraries needed to build Firefox for desktop or Firefox for Android.

The first step here was to make it possible to automate the bootstrapping process (see bug: 1151834 "allow users to bootstrap without any interactive prompts"), and then integrate it into the standing up of our own systems. Luckily, at the moment I'm also porting some of our Linux builds from buildbot to TaskCluster (see bug: 1135206), which necessitates scrapping our old chroot based build environments in favor of docker containers. This fresh start has given me the opportunity to begin this transition painlessly.

This simple change alone strengthens the interface between RelEng and developers, because now we'll be using the same packages (on a given platform). It also means that our team will be actively maintaining a tool used by contributors. I think it's a huge step in the right direction!

Right now, I'm only focusing on Linux, though in the future I expect to support OSX as well. The bootstrap utility supports several distributions (Debian/Ubuntu/CentOS/Arch), though, I've been trying to base all of release engineering's new docker containers on Ubuntu 14.04 -- as such, I'd consider this our canonical distribution. Our old builders were based on CentOS, so it would have been slightly easier to go with that platform, but I'd rather support the platform that the majority of our contributors are using.

My new dream in life: integrate @docker into Mozilla's build system / developer toolchain https://t.co/b09ckKZf0k -- no more fighting w/deps

-- Morgan Phillips (@mrrrgn), March 2, 2015

One fabulous side effect of using TaskCluster is that we're forced to create docker containers for running our jobs; in fact, they even live in mozilla-central. That being the case, I've started a conversation around integrating our docker containers into mozbootstrap, giving it the option to pull down a releng docker container in lieu of bootstrapping a host system.

On my own machine, I've been mounting my src directory inside of a builder and running ./mach build, then ./mach run within it. All of the source, object files, and executables live on my host machine, but the actual building takes place in a black box. This is a very tidy development workflow that's easy to replicate and automate with a few bash functions [which releng should also write/support].

[A simulation of how I'd like to see developers interacting with our docker containers.]

Lastly, as the final nail in the coffin of hard to reproduce CI bugs, I'd like to make it possible for developers to run our TaskCluster based test/build jobs on their local machines. Either from mach, or a new utility that lives in /testing.

If you'd like to follow my progress toward creating this brave new world -- or heckle me in bugzilla comments -- check out these tickets:

|

|

Kim Moir: Mozilla pushes - April 2015 |

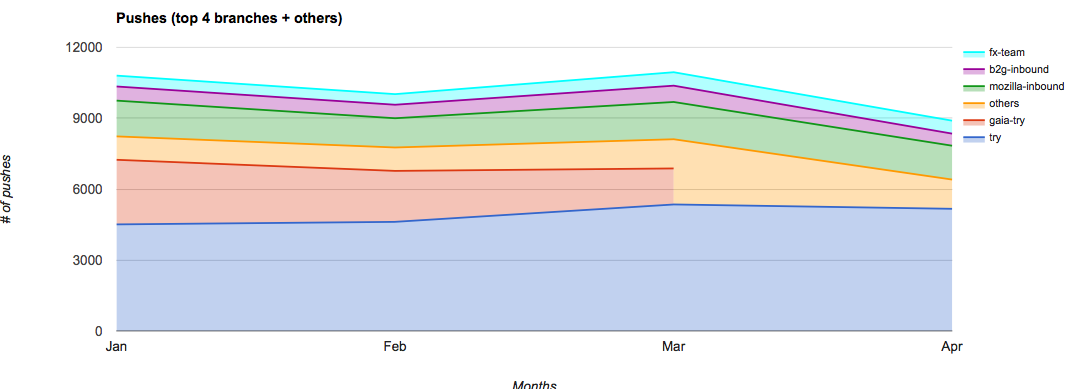

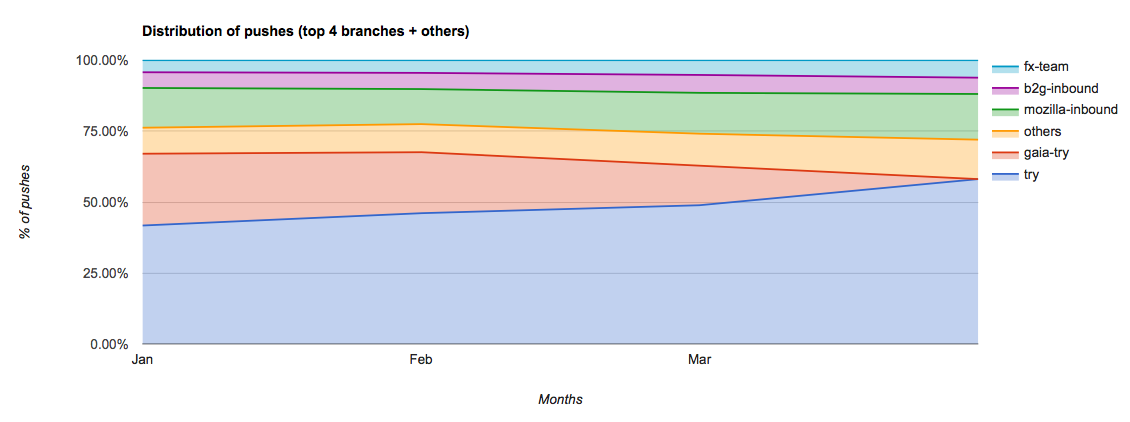

Trends

The number of pushes decreased from the previous month, to a total of 8894. This is because gaia-try is now managed by taskcluster, so its jobs no longer appear in the buildbot scheduling databases that this report tracks.

Highlights

- 8894 pushes

- 296 pushes/day (average)

- Highest number of pushes/day: 528 pushes on Apr 1, 2015

- 17.87 pushes/hour (highest average)

General Remarks

- Try has around 58% of all the pushes now that we no longer track gaia-try

- The three integration repositories (fx-team, mozilla-inbound and b2g-inbound) account for around 28% of all the pushes.
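As a quick sanity check on the figures above, the daily average follows directly from the monthly total. The sketch below is only illustrative arithmetic: the 30-day length of April is the one outside fact, and the per-repository counts are rough estimates derived from the "around 58%" and "around 28%" shares, not numbers taken from the report.

```python
# Figures quoted in the report above.
total_pushes = 8894
days_in_april = 30  # assumption: the average is taken over the calendar month

pushes_per_day = total_pushes / days_in_april
print(round(pushes_per_day))  # matches the 296 pushes/day quoted above

# Rough estimates only: the report gives these shares as "around" values.
estimated_try_pushes = round(total_pushes * 0.58)
estimated_integration_pushes = round(total_pushes * 0.28)
print(estimated_try_pushes, estimated_integration_pushes)
```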

Records

- August 2014 was the month with most pushes (13090 pushes)

- August 2014 had the highest pushes/day average with 422 pushes/day

- July 2014 had the highest average of "pushes-per-hour" with 23.51 pushes/hour

- October 8, 2014 had the highest number of pushes in one day with 715 pushes

Note

I've changed the graphs to only track 2015 data. Last month they were tracking 2014 data as well but it looked crowded so I updated them. Here's a graph showing the number of pushes over the last few years for comparison.

http://relengofthenerds.blogspot.com/2015/05/mozilla-pushes-april-2015.html

|

|

Daniel Stenberg: talking curl on the changelog |

The changelog is the name of a weekly podcast on which the hosts discuss open source and stuff.

Last Friday I was invited to participate and I joined hosts Adam and Jerod for an hour long episode about curl. It all started as a response to my post on curl 17 years, so we really got into how things started out, how curl has developed through the years, how much time I’ve spent on it, and whether I could name a really great moment that stood out over the years.

The day before, they released the little separate teaser we made about the little-known --remote-name-all command line option, which basically makes curl default to doing -O on all given URLs.

The full length episode can be experienced in all its glory here: https://changelog.com/153/

http://daniel.haxx.se/blog/2015/05/01/talking-curl-on-the-changelog/

|

|

Brian Birtles: What do we do with SMIL? |

Earlier this week, Blink announced their intention to deprecate SMIL. I thought they were going to replace their native implementation with a Javascript one so this was a surprise to me.

Prompted by this, the SVG WG decided it would be better to split the animation features in SVG2 out into a separate spec. (This was something I started doing a while ago, calling it Animation Elements, but I haven’t had time to follow up on it recently.)

I’ve spent quite a lot of time working on SMIL in Gecko (Firefox) so I’m probably more attached to it than most. I also started work on Web Animations specifically to address Microsoft’s concern that we needed a unified model for animations on the Web and I was under the impression they were finally open to the idea of a Javascript implementation of SMIL in Edge.

I’m not sure what will happen next, but it’s interesting to think about what we would lose without SMIL and what we could do to fix that. Back in 2011 I wrote up a gap analysis of features missing in CSS that exist in SVG animation. One example is that even with CSS Animations, CSS Transitions, Web Animations and the Motion Path module, we still couldn’t create a font using SVG-in-OpenType where the outlines of the glyphs wiggle. That’s because even though Web Animations lets you animate attributes (and not just CSS properties), that feature is only available via the script API and you can’t run script in some contexts like font glyph documents.

So what would we need? I think some of the following might be interesting specs:

- Path animation module – We need some means of animating path data such as the ‘d’ attribute on an SVG path element. With SMIL this is actually really hard: you need to have exactly the same number and type of segments in order to interpolate between two paths. Tools could help with this but there aren’t any yet.

It would be neat to be able to interpolate between, say, two different kinds of shape. Once you allow different numbers of segments you probably need a means of annotating anchor points so you can describe how the different paths are supposed to line up.

(If, while we’re at it, we could define a way of warping paths that would be great for doing cartoons!)

- Animation group module – SMIL lets you sequence and group animations so they play perfectly together. That’s not easy with CSS at the moment. Web Animations level 2 actually defines grouping and synchronization primitives for this but there’s no proposed CSS syntax for it.

I think it would be useful if CSS Animations Level 2 added a single level of grouping, something like an animation-group property where all animations with a matching group name were kept in lock-step (with animation-group-reset to create new groups of the same name). A subsequent level could extend that to the more advanced hierarchies of groups described in Web Animations level 2.

- Property addition – SMIL lets you have independent animations target the same element and add together. For example, you can have a ‘spin’ animation and a ‘swell’ animation defined completely independently and then applied to the same element, and they combine together without conflict. Allowing CSS properties to add together sounds like a big change but you can actually narrow down the problem space in three ways:

- Most commonly you’re adding together lists: e.g. transform lists or filter lists. A solution that only lets you add lists together would probably solve a lot of use cases.

- Amongst lists, transform lists are the most common. For this the FXTF already resolved to add translate, rotate and scale properties in CSS transforms level 2 so we should be able to address some of those use cases in the near future.

- While it would be nice to add properties together in static contexts like below, if it simplifies the solution, we could just limit the issue to animations at first.

.blur { filter: blur(10px); }

.sepia { filter: sepia(50%); }

There are other things that SMIL lets you do such as change the source URL of an image in response to an arbitrary event like a click without writing any programming code but I think the above cover some of the bigger gaps? What else would we miss?
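To make the path-interpolation constraint from the first item concrete, here is a minimal sketch of the "same number and type of segments" rule. It is a deliberately simplified model written for illustration, not what any browser implements: the `Segment` representation and the `interpolate_path` name are hypothetical, and details such as arc flags or relative commands are ignored.

```python
from typing import List, Tuple

# A path segment is modelled as a command letter plus its numeric arguments,
# e.g. ("L", (10.0, 20.0)). This representation is an assumption of the sketch.
Segment = Tuple[str, Tuple[float, ...]]


def interpolate_path(a: List[Segment], b: List[Segment], t: float) -> List[Segment]:
    """Linearly interpolate between two paths at progress t (0 = a, 1 = b)."""
    if len(a) != len(b):
        raise ValueError("paths have different numbers of segments")
    result = []
    for (cmd_a, args_a), (cmd_b, args_b) in zip(a, b):
        if cmd_a != cmd_b or len(args_a) != len(args_b):
            raise ValueError("segment types do not match: %s vs %s" % (cmd_a, cmd_b))
        # Each coordinate moves from its start value toward its end value.
        mixed = tuple(x + (y - x) * t for x, y in zip(args_a, args_b))
        result.append((cmd_a, mixed))
    return result


small = [("M", (0, 0)), ("L", (10, 0)), ("L", (10, 10)), ("Z", ())]
large = [("M", (0, 0)), ("L", (20, 0)), ("L", (20, 20)), ("Z", ())]
halfway = interpolate_path(small, large, 0.5)
print(halfway)
```

Two paths with matching segments interpolate coordinate by coordinate; dropping a segment from either one raises an error, which is the situation where some way of annotating anchor points would be needed.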

https://birtles.wordpress.com/2015/05/01/what-do-we-do-with-smil/

|

|

Mike Hommey: Using a git clone of gecko-dev to push to mercurial |

The next branch of git-cinnabar now has minimal support for grafting, enough to allow grafting a clone of gecko-dev to mozilla-central and other Mozilla branches. This will be available in version 0.3 (which I don’t expect to release before June), but if you are interested, you can already try it.

There are two ways you can work with gecko-dev and git-cinnabar.

Switching to git-cinnabar

This is the recommended setup.

There are several reasons one would want to start from gecko-dev instead of a fresh clone of mozilla-central. One is to get the full history from before Mozilla switched to Mercurial, which gecko-dev contains. Another is if you already have a gecko-dev clone with local branches, where rebasing against a fresh clone would not be very convenient (but not impossible).

The idea here is to use gecko-dev as a start point, and from there on, use cinnabar to pull and push from/to Mercurial. The main caveat is that new commits pulled from Mercurial after this will not have the same SHA-1s as those on gecko-dev. Only the commits until the switch will. This also means different people grafting gecko-dev at different moments will have different SHA-1s for new commits. Eventually, I hope we can switch gecko-dev itself to use git-cinnabar instead of hg-git, which would solve this issue.

Assuming you already have a gecko-dev clone, and git-cinnabar next installed:

- Change the remote URL:

$ git remote set-url origin hg::https://hg.mozilla.org/mozilla-central

(replace origin with the remote name for gecko-dev if it’s not origin)

- Add other mercurial repositories you want to track as well:

$ git remote add inbound hg::https://hg.mozilla.org/integration/mozilla-inbound

$ git remote add aurora hg::https://hg.mozilla.org/releases/mozilla-aurora

(...)

- Pull with grafting enabled:

$ git -c cinnabar.graft=true -c cinnabar.graft-refs=refs/remotes/origin/* remote update

(replace origin with the remote name for gecko-dev if it’s not origin)

- Finish the setup by setting push urls for all those remotes:

$ git remote set-url --push origin hg::ssh://hg.mozilla.org/mozilla-central

$ git remote set-url --push inbound hg::ssh://hg.mozilla.org/integration/mozilla-inbound

$ git remote set-url --push aurora hg::ssh://hg.mozilla.org/releases/mozilla-aurora

(...)

Now, you’re mostly done setting things up. Check out my git workflow for Gecko development for how to work from there.

Following gecko-dev

This setup allows you to keep pulling from gecko-dev and thus keep the same SHA-1s. It relies on everything pulled from Mercurial existing in gecko-dev, which makes it cumbersome, which is why I don’t recommend using it. For instance, you may end up pulling from Mercurial before the server-side mirroring made things available in gecko-dev, and that will fail. Some commands such as git pull --rebase will require you to ensure gecko-dev is up-to-date first (that makes winning push races essentially impossible). And more importantly, in some cases, what you push to Mercurial won’t have the same commit SHA-1 in gecko-dev, so you’ll have to manually deal with that (fortunately, in most cases, this shouldn’t happen).

Assuming you already have a gecko-dev clone, and git-cinnabar next installed:

- Add a remote for all mercurial repositories you want to track:

$ git remote add central hg::https://hg.mozilla.org/mozilla-central

$ git remote add inbound hg::https://hg.mozilla.org/integration/mozilla-inbound

$ git remote add aurora hg::https://hg.mozilla.org/releases/mozilla-aurora

(...)

- For each of those remotes, set a push url:

$ git remote set-url --push central hg::ssh://hg.mozilla.org/mozilla-central

$ git remote set-url --push inbound hg::ssh://hg.mozilla.org/integration/mozilla-inbound

$ git remote set-url --push aurora hg::ssh://hg.mozilla.org/releases/mozilla-aurora

(...)

- For each of those remotes, set a refspec that limits to pulling the tip of the default branch:

$ git config remote.central.fetch +refs/heads/branches/default/tip:refs/remotes/central/default

$ git config remote.inbound.fetch +refs/heads/branches/default/tip:refs/remotes/inbound/default

$ git config remote.aurora.fetch +refs/heads/branches/default/tip:refs/remotes/aurora/default

(...)

Other branches can be added, but that must be done with care, because not all branches are exposed on gecko-dev.

- Set git-cinnabar grafting mode permanently:

$ git config cinnabar.graft only

- Make git-cinnabar never store metadata when pushing:

$ git config cinnabar.data never

- Make git-cinnabar only graft with gecko-dev commits:

$ git config cinnabar.graft-refs refs/remotes/origin/*

(replace origin with the remote name for gecko-dev if it’s not origin)

- Then pull from all remotes:

$ git remote update

Retry as long as you see errors about “Not allowing non-graft import”. This will keep happening until all Mercurial changesets you’re trying to pull make it to gecko-dev.

With this setup, you can now happily push to Mercurial and see your commits appear in gecko-dev after a while. As long as you don’t copy or move files, their SHA-1 should be the same as what you pushed.

|

|

Mike Hommey: Announcing git-cinnabar 0.2.2 |

Git-cinnabar is a git remote helper to interact with mercurial repositories. It lets you clone, pull and push from/to mercurial remote repositories, using git.

What’s new since 0.2.1?

- Don’t require core.ignorecase to be set to false on the repository when using a case-insensitive file system. If you did set core.ignorecase to false because git-cinnabar told you to, you can now set it back to true.

- Raise an exception when git update-ref or git fast-import return an error. Silently ignoring those errors could lead to bad repositories after an upgrade from pre-0.1.0 versions on OS X, where the default maximum number of open files is low (256), and where git update-ref uses a lot of lock files for large transactions.

- Updated git to 2.4.0, when building with the native helper.

- When doing git cinnabar reclone, skip remotes with remote.$remote.skipDefaultUpdate set to true.

|

|

Christopher Arnold: Calling Android users: Help Mozilla Map the World! |

The reason is fairly simple. There are of course thousands of radio frequencies traveling through the walls of buildings all around us. What makes Wi-Fi frequency (or even bluetooth) particularly useful for location mapping is that the frequency travels a relatively short distance before it decays, due to how low energy the Wi-Fi wavelengths are. A combination of three or more Wi-Fi signals can be used in a very small area by a phone to triangulate locations on a map in the same manner that earthquake shockwave strengths can be used to triangulate epicenters. Wi-Fi hubs don't need to transmit their locations to be useful. Most are oblivious of their location. It is the phone's interpretations of their signal strength and inferred location that creates the value to the phone's internal mapping capabilities. No data that goes over the Wi-Fi frequency is relevant to using radio for triangulation. It is merely the signal strength/weakness that makes it useful for triangulation. (Most Wi-Fi hubs are password protected and the data sent over them is encrypted.)
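The geometric idea can be sketched in a few lines. Strictly speaking, locating a point from distances to known positions is usually called trilateration. The sketch below assumes the signal strengths have already been converted into distance estimates and that the hub positions are known in a flat 2D coordinate system. It is not the algorithm used by Mozilla Location Services, and the `trilaterate` function and its inputs are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def trilaterate(p1: Point, r1: float, p2: Point, r2: float,
                p3: Point, r3: float) -> Point:
    """Estimate a 2D position from three known points and distances to them."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    # Subtracting the first circle equation from the other two leaves
    # two linear equations in x and y.
    a, b = 2 * (x2 - x1), 2 * (y2 - y1)
    c = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    d, e = 2 * (x3 - x1), 2 * (y3 - y1)
    f = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a * e - b * d
    if abs(det) < 1e-12:
        raise ValueError("the three points are collinear; position is ambiguous")
    # Solve the 2x2 system with Cramer's rule.
    return ((c * e - b * f) / det, (a * f - c * d) / det)


# Made-up example: three hubs at known positions and a phone at (1, 1).
hubs = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
phone = (1.0, 1.0)
distances = [math.dist(phone, hub) for hub in hubs]
estimate = trilaterate(hubs[0], distances[0], hubs[1], distances[1],
                       hubs[2], distances[2])
print(estimate)  # close to (1.0, 1.0), up to floating-point error
```

With real measurements the distances are noisy, so a practical system would combine more than three signals and fit the best position rather than solve the equations exactly.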

Being able to let phone users determine their own location is of keen interest to developers who can’t make location-based-services work without fairly precise location determinations. The developers don't want to track the users per se. They want the users to be able to self-determine location when they request a service at a precise location in space. (Say requesting a Lyft ride or checking in at a local eatery.) There is a broad range of businesses that try to help phones accurately orient themselves on maps. The data that each application developer uses may be different across a range of phones. Android, Windows and iPhones all have different data sources for this, which can make it frustrating to have consistency of app experience for many users, even when they’re all using the same basic application.

At Mozilla, we think the best way to solve this problem is to create an open source solution. We are app developers ourselves and we want our users to have consistent quality of experience, along with all the websites that our users access using our browsers and phones. If we make location data accessible to developers, we should be able to help Internet users navigate their world more consistently. By doing it in an open source way, dozens of phone vendors and app developers can utilize this open data source without cumbersome and expensive contracts that are sometimes imposed by location service vendors. And as Mozilla we do this in a way that empowers users to make personal choice as to whether they wish to participate in data contribution or not.

How can I help? There are two ways Firefox users can get involved. (Several ways that developers can help.) We have two applications for Android that have the capability to “stumble” Wi-Fi locations.

The first app is called “Mozilla Stumbler” and is available for free download in the Google Play store. (https://play.google.com/store/apps/details?id=org.mozilla.mozstumbler) By opening MozStumbler and letting it collect radio frequencies around you, you are able to help the location database register those frequencies so that future users can determine their location. None of the data your Android phone contributes can be specifically tied to you. It’s collecting the ambient radio signals just for the purpose of determining map accuracy. To make it fun to use MozStumbler, we have also created a leaderboard for users to keep track of their contributions to the database.

The second app is our Firefox mobile browser that runs on the Android operating system. (If it becomes possible to stumble on other operating systems, I’ll post an update to this blog.) You need to take a couple of steps to enable background stumbling on your Firefox browser. Specifically, you have to opt in to share location data with Mozilla. To do this, first download Firefox on your Android device. On the first run you should get a prompt on what data you want to share with Mozilla. If you bypassed that step, or installed Firefox a long time ago, here’s how to find the setting:

1) Click on the three dots at the right side of the Firefox browser chrome, then select "Settings"

2) Select Mozilla

3) Check the box that says “Help Mozilla map the world! Share approximate Wi-Fi and cellular location of your device to improve our geolocation services.”

If you ever want to change your settings, you can return to the settings of Firefox, or you can view your Android device's main settings menu on this path: Settings>Personal>Location which is the same place where you can see all the applications you've previously granted access to look up your physical location.

The benefit of the data contributed is manifold:

1) Firefox users on PCs (which do not have GPS sensors) will be able to determine their positions based on the frequency of the WiFi hotspots they use rather than having to continually require users to type in specific location requests.

2) Apps on Firefox Operating System and websites that load in Firefox that use location services will perform more accurately and rapidly over time.

3) Other developers who want to build mobile applications and browsers will be able to have affordable access to location service tools. So your contribution will foster the open source developer community.

And in addition to the benefits above, my colleague Robert Kaiser points out that even devices with GPS chips can benefit from getting Wi-Fi validation in the following way:

2) The location from this wifi triangulation can be fed into the GPS system, which enables it to know which satellites it roughly should expect to see and therefore get a fix on those sooner (Firefox OS at least is doing that).

3) In cities or buildings, signals from GPS satellites get reflected or absorbed by walls, often making the GPS position inaccurate or not being able to get a fix at all - while you might still see enough wi-fi signals to determine a position."

Thank you for helping improve Mozilla Location Services.

If you'd like to read more about Mozilla Location Services please visit:

https://location.services.mozilla.com/

To see how well our map currently covers your region, visit:

https://location.services.mozilla.com/map#2/15.0/10.0

If you are a developer, you can also integrate our open source code directly into your own app to enable your users to stumble for fun as well. Code is available here: https://github.com/mozilla/DemoStumbler

For an in-depth write-up on the launch of the Mozilla Location Service please read Hanno's blog here: http://blog.hannosch.eu/2013/12/mozilla-location-service-what-why-and.html

For a discussion of the issues on privacy management view Gervase's blog:

http://blog.gerv.net/2013/10/location-services-and-privacy/

http://ncubeeight.blogspot.com/2015/04/calling-android-users-help-mozilla-map.html

|

|

Niko Matsakis: A few more remarks on reference-counting and leaks |

So there has been a lot of really interesting discussion in response to my blog post. I wanted to highlight some of the comments I’ve seen, because I think they raise good points that I failed to address in the blog post itself. My comments here are lightly edited versions of what I wrote elsewhere.

Isn’t the problem with objects and leak-safe types more general?

I posit that this is in fact a problem with trait objects, not a problem with Leak; the exact same flaw pointed out in the blog post already applies to the existing OIBITs, Send, Sync, and Reflect. The decision of which OIBITs to include on any trait object is already a difficult one, and is a large reason why std strives to avoid trait objects as part of public types.

I agree with him that the problems I described around Leak and objects apply equally to Send (and, in fact, I said so in my post), but I don’t think this is something we’ll be able to solve later on, as he suggests. I think we are working with something of a fundamental tension. Specifically, objects are all about encapsulation. That is, they completely hide the type you are working with, even from the compiler. This is what makes them useful: without them, Rust just plain wouldn’t work, since you couldn’t (e.g.) have a vector of closures. But, in order to gain that flexibility, you have to state your requirements up front. The compiler can’t figure them out automatically, because it doesn’t (and shouldn’t) know the types involved.

So, given that objects are here to stay, the question is whether adding a marker trait like Leak is a problem, given that we already have Send. I think the answer is yes; basically, because we can’t expect object types to be analyzed statically, we should do our best to minimize the number of fundamental splits people have to work with. Thread safety is pretty fundamental. I don’t think Leak makes the cut. (I gave some of the reasons in the conclusion of my previous blog post, but I have a few more in the questions below.)

Could we just remove Rc and only have RcScoped? Would that solve the problem?

Certainly you could remove Rc in favor of RcScoped. Similarly, you could have only Arc and not Rc. But you don’t want to because you are basically failing to take advantage of extra constraints. If we only had RcScoped, for example, then creating an Rc always requires taking some scope as an argument – you can have a global constant for 'static data, but it’s still the case that generic abstractions have to take in this scope as an argument. Moreover, there is a runtime cost to maintaining the extra linked list that will thread through all Rc abstractions (and the Rc structs get bigger, as well). So, yes, this avoids the “split” I talked about, but it does it by pushing the worst case on all users.

Still, I admit to feeling torn on this point. What pushes me over the edge, I think, is that simple reference counting of the kind we are doing now is a pretty fundamental thing. You find it in all kinds of systems (Objective C, COM, etc). This means that if we require that safe Rust cannot leak, then you cannot safely integrate borrowed data with those systems. I think it’s better to just use closures in Rust code – particularly since, as annodomini points out on Reddit, there are other kinds of cases where RAII is a poor fit for cleanup.

Could a proper GC solve this? Is reference counting really worth it?

It’ll depend on the precise design, but tracing GC most definitely is not a magic bullet. If anything, the problem around leaks is somewhat worse: GCs don’t give any kind of guarantee about when the destructor runs. So we either have to ban GC’d data from having destructors or ban it from having borrowed pointers; either of those implies a bound very similar to Leak or 'static. Hence I think that GC will never be a “fundamental building block” for abstractions in the way that Rc/Arc can be. This is sad, but perhaps inevitable: GC inherently requires a runtime as well, which already limits its reusability.

|

|

Andrew Sutherland: Talk Script: Firefox OS Email Performance Strategies |

Last week I gave a talk at the Philly Tech Week 2015 Dev Day organized by the delightful people at technical.ly on some of the tricks/strategies we use in the Firefox OS Gaia Email app. Note that the credit for implementing most of these techniques goes to the owner of the Email app’s front-end, James Burke. Also, a special shout-out to Vivien for the initial DOM Worker patches for the email app.

I tried to avoid having slides that both I would be reading aloud as the audience read silently, so instead of slides to share, I have the talk script. Well, I also have the slides here, but there’s not much to them. The headings below are the content of the slides, except for the one time I inline some code. Note that the live presentation must have differed slightly, because I’m sure I’m much more witty and clever in person than this script would make it seem…

Cover Slide: Who!

Hi, my name is Andrew Sutherland. I work at Mozilla on the Firefox OS Email Application. I’m here to share some strategies we used to make our HTML5 app Seem faster and sometimes actually Be faster.

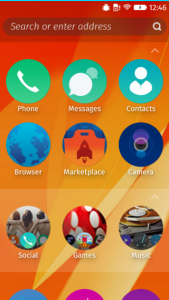

What’s A Firefox OS (Screenshot Slide)

But first: What is a Firefox OS? It’s a multiprocess Firefox gecko engine on an android linux kernel where all the apps including the system UI are implemented using HTML5, CSS, and JavaScript. All the apps use some combination of standard web APIs and APIs that we hope to standardize in some form.

Here are some screenshots. We’ve got the default home screen app, the clock app, and of course, the email app.

It’s an entirely client-side offline email application, supporting IMAP4, POP3, and ActiveSync. The goal, like all Firefox OS apps shipped with the phone, is to give native apps on other platforms a run for their money.

And that begins with starting up fast.

Fast Startup: The Problems

But that’s frequently easier said than done. Slow-loading websites are still very much a thing.

The good news for the email application is that a slow network isn’t one of its problems. It’s pre-loaded on the phone. And even if it wasn’t, because of the security implications of the TCP Web API and the difficulty of explaining this risk to users in a way they won’t just click through, any TCP-using app needs to be a cryptographically signed zip file approved by a marketplace. So we do load directly from flash.

However, it’s not like flash on cellphones is equivalent to an infinitely fast, zero-latency network connection. And even if it was, in a naive app you’d still try and load all of your HTML, CSS, and JavaScript at the same time because the HTML file would reference them all. And that adds up.

It adds up in the form of event loop activity and competition with other threads and processes. With the exception of Promises which get their own micro-task queue fast-lane, the web execution model is the same as all other UI event loops; events get scheduled and then executed in the same order they are scheduled. Loading data from an asynchronous API like IndexedDB means that your read result gets in line behind everything else that’s scheduled. And in the case of the bulk of shipped Firefox OS devices, we only have a single processor core so the thread and process contention do come into play.

So we try not to be naive.
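The scheduling behaviour described above can be modelled with a toy event loop. This is a simplified sketch for illustration only, not how Gecko is implemented: the `EventLoop` class and the task names are hypothetical. Tasks run in the order they were scheduled, while microtasks (the Promise fast lane) are drained right after the task that queued them.

```python
from collections import deque


class EventLoop:
    """Toy model: a FIFO task queue plus a microtask queue drained after each task."""

    def __init__(self):
        self.tasks = deque()
        self.microtasks = deque()
        self.log = []

    def schedule_task(self, name, callback=None):
        self.tasks.append((name, callback))

    def schedule_microtask(self, name, callback=None):
        self.microtasks.append((name, callback))

    def run(self):
        while self.tasks:
            self._execute(self.tasks.popleft())
            # Microtasks jump the queue: they run before the next task.
            while self.microtasks:
                self._execute(self.microtasks.popleft())

    def _execute(self, entry):
        name, callback = entry
        self.log.append(name)
        if callback is not None:
            callback(self)


def startup_script(loop):
    # An asynchronous read result goes to the back of the task queue...
    loop.schedule_task("IndexedDB read result")
    # ...while a promise callback runs as soon as the current task ends.
    loop.schedule_microtask("promise callback")


loop = EventLoop()
loop.schedule_task("run startup script", startup_script)
loop.schedule_task("parse CSS")
loop.schedule_task("layout")
loop.run()
print(loop.log)
```

In this model the read result only runs after the CSS and layout work that was already queued, which is the "gets in line behind everything else" effect described in the talk.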

Seeming Fast at Startup: The HTML Cache

If we’re going to optimize startup, it’s good to start with what the user sees. Once an account exists for the email app, at startup we display the default account’s inbox folder.

What is the least amount of work that we can do to show that? Cache a screenshot of the Inbox. The problem with that, of course, is that a static screenshot is indistinguishable from an unresponsive application.

So we did the next best thing, (which is) we cache the actual HTML we display. At startup we load a minimal HTML file, our concatenated CSS, and just enough Javascript to figure out if we should use the HTML cache and then actually use it if appropriate. It’s not always appropriate, like if our application is being triggered to display a compose UI or from a new mail notification that wants to show a specific message or a different folder. But this is a decision we can make synchronously so it doesn’t slow us down.

Local Storage: Okay in small doses

We implement this by storing the HTML in localStorage.
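As a rough illustration of that startup path, here is a minimal sketch. The function names, the 'html_cache' key and the launch-reason strings are all hypothetical and chosen for this example; this is not the email app's actual code. The storage object is passed in so the same logic runs against localStorage in a browser or against a stand-in elsewhere.

```javascript
// Hypothetical launch reasons for which the cached inbox would be the wrong UI.
const NON_INBOX_LAUNCHES = new Set(['compose', 'notification']);

// Decide synchronously whether the cached inbox HTML applies to this launch.
function shouldUseHtmlCache(launchReason) {
  return !NON_INBOX_LAUNCHES.has(launchReason);
}

// Read the cached markup (a synchronous call) and insert it into the container.
// `storage` needs getItem(); `container` needs an innerHTML property.
// Returns true if cached HTML was applied.
function restoreCachedHtml(storage, container, launchReason) {
  if (!shouldUseHtmlCache(launchReason)) {
    return false;
  }
  const html = storage.getItem('html_cache'); // hypothetical key name
  if (!html) {
    return false;
  }
  container.innerHTML = html;
  return true;
}

// Save the current markup so the next startup can reuse it.
function saveHtmlCache(storage, container) {
  storage.setItem('html_cache', container.innerHTML);
}
```

In a browser the startup script would call something like restoreCachedHtml(localStorage, document.body, 'default'), and the app would call saveHtmlCache once the real inbox has rendered.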

Important Disclaimer! LocalStorage is a bad API. It’s a bad API because it’s synchronous. You can read any value stored in it at any time, without waiting for a callback. Which means if the data is not in memory the browser needs to block its event loop or spin a nested event loop until the data has been read from disk. Browsers avoid this now by trying to preload the entire contents of local storage for your origin into memory as soon as they know your page is being loaded. And then they keep that information, ALL of it, in memory until your page is gone.

So if you store a megabyte of data in local storage, that’s a megabyte of data that needs to be loaded in its entirety before you can use any of it, and that hangs around in scarce phone memory.

To really make the point: do not use local storage, at least not directly. Use a library like localForage that will use IndexedDB when available, and then fall back to WebSQLDatabase and local storage in that order.

Now, having sufficiently warned you of the terrible evils of local storage, I can say with a sorta-clear conscience… there are upsides in this very specific case.

The synchronous nature of the API means that once we get our turn in the event loop we can act immediately. There’s no waiting around for an IndexedDB read result to get its turn on the event loop.

This matters because although the concept of loading is simple from a User Experience perspective, there’s no standard to back it up right now. Firefox OS’s UX desires are very straightforward. When you tap on an app, we zoom it in. Until the app is loaded we display the app’s icon in the center of the screen. Unfortunately the standards are still assuming that the content is right there in the HTML. This works well for document-based web pages or server-powered web apps where the contents of the page are baked in. It works less well for client-only web apps where the content lives in a database and has to be dynamically retrieved.

The two events that exist are:

“DOMContentLoaded” fires when the document has been fully parsed and all scripts not tagged as “async” have run. If there were stylesheets referenced prior to the script tags, the script tags will wait for the stylesheet loads.

“load” fires when the document has been fully loaded; stylesheets, images, everything.

But neither of these has anything to do with the content in the page saying it’s actually done. This matters because these standards also say nothing about IndexedDB reads or the like. We tried to create a standards consensus around this, but it’s not there yet. So Firefox OS just uses the “load” event to decide an app or page has finished loading and it can stop showing your app icon. This largely avoids the dreaded “flash of unstyled content” problem, but it also means that your webpage or app needs to deal with this period of time by displaying a loading UI or just accepting a potentially awkward transient UI state.

(Trivial HTML slide)

DOMContentLoaded!

This is the important summary of our index.html.

We reference our stylesheet first. It includes all of our styles. We never dynamically load stylesheets because that compels a style recalculation for all nodes and potentially a reflow. We would have to have an awful lot of style declarations before considering that.

Then we have our single script file. Because the stylesheet precedes the script, our script will not execute until the stylesheet has been loaded. Then our script runs and we synchronously insert our HTML from local storage. Then DOMContentLoaded can fire. At this point the layout engine has enough information to perform a style recalculation and determine what CSS-referenced image resources need to be loaded for buttons and icons, then those load, and then we’re good to be displayed as the “load” event can fire.

After that, we’re displaying an interactive-ish HTML document. You can scroll, you can press on buttons and the :active state will apply. So things seem real.

Being Fast: Lazy Loading and Optimized Layers

But now we need to try and get some logic in place as quickly as possible that will actually cash the checks that real-looking HTML UI is writing. And the key to that is only loading what you need when you need it, and trying to get it to load as quickly as possible.

There are many module loading and build optimizing tools out there, and most frameworks have a preferred or required way of handling this. We used the RequireJS family of Asynchronous Module Definition loaders, specifically the alameda loader and the r-dot-js optimizer.

One of the niceties of the loader plugin model is that we are able to express resource dependencies as well as code dependencies.

RequireJS Loader Plugins

var fooModule = require('./foo');
var htmlString = require('text!./foo.html');
var localizedDomNode = require('tmpl!./foo.html');

The first line you see here is the standard CommonJS loader semantics used by node.js and io.js. Load the module, return its exports.

But RequireJS loader plugins also allow us to do things like the second line where the exclamation point indicates that the load should occur using a loader plugin, which is itself a module that conforms to the loader plugin contract. In this case it’s saying load the file foo.html as raw text and return it as a string.

But, wait, there’s more! Loader plugins can do more than that. The third example uses a loader that loads the HTML file using the ‘text’ plugin under the hood, creates an HTML document fragment, and pre-localizes it using our localization library. And this works un-optimized in a browser, no compilation step needed, but it can also be optimized.
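To give a feel for the loader plugin contract, here is a simplified sketch of a 'tmpl'-style plugin. It follows the RequireJS plugin shape, where a plugin module exposes load(name, parentRequire, onload, config), but everything else is an assumption made for illustration: the makeTmplPlugin name, the translate function and the {{key}} placeholder syntax are hypothetical, and it returns a localized string where the real plugin described above builds a document fragment.

```javascript
// A simplified, hypothetical 'tmpl'-style loader plugin.
// `translate` stands in for a real localization library.
function makeTmplPlugin(translate) {
  return {
    // RequireJS calls load() with the resource name that followed the "!".
    load: function (name, parentRequire, onload, config) {
      // Delegate the actual fetch to the 'text' plugin, then post-process.
      parentRequire(['text!' + name], function (rawHtml) {
        // Replace {{key}} placeholders with localized strings
        // (an invented syntax, used here only to keep the sketch short).
        const localized = rawHtml.replace(/\{\{(\w+)\}\}/g, function (match, key) {
          return translate(key);
        });
        onload(localized);
      });
    }
  };
}
```

In an AMD setup this object would be what the 'tmpl' module returns from its define() call, so that require('tmpl!./foo.html') routes through load().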

So when our optimizer runs, it bundles up the core modules we use, plus the modules for our “message list” card that displays the inbox. And the message list card loads its HTML snippets using the template loader plugin. The r-dot-js optimizer then locates these dependencies and the loader plugins also have optimizer logic that results in the HTML strings being inlined in the resulting optimized file. So there’s just one single JavaScript file to load with no extra HTML file dependencies or other loads.

We then also run the optimizer against our other important cards like the “compose” card and the “message reader” card. We don’t do this for all cards because it can be hard to carve up the module dependency graph for optimization without starting to run into cases of overlap where many optimized files redundantly include files loaded by other optimized files.

Plus, we have another trick up our sleeve:

Seeming Fast: Preloading

Preloading. Our cards optionally know the other cards they can load. So once we display a card, we can kick off a preload of the cards that might potentially be displayed. For example, the message list card can trigger the compose card and the message reader card, so we can trigger a preload of both of those.
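A minimal sketch of that idea might look like the following. The card names, the CARD_LINKS table and the loadCard callback are hypothetical; only the message list entry mirrors the example above, and the other entries are invented to fill out the table.

```javascript
// Hypothetical map from each card to the cards it can open.
const CARD_LINKS = {
  message_list: ['compose', 'message_reader'],
  message_reader: ['compose'], // assumed for illustration
  compose: []
};

// Return the cards worth preloading after `cardName` is shown,
// skipping any that are already loaded or already requested.
function cardsToPreload(cardName, loadedCards) {
  const candidates = CARD_LINKS[cardName] || [];
  return candidates.filter(function (name) {
    return !loadedCards.has(name);
  });
}

// Kick off a preload for each candidate. `loadCard` is whatever async
// loader the app uses, for example a wrapper around require([...]).
function preloadFrom(cardName, loadedCards, loadCard) {
  cardsToPreload(cardName, loadedCards).forEach(function (name) {
    loadedCards.add(name);
    loadCard(name);
  });
}
```

Tracking requested cards in a Set keeps repeated displays of the same card from re-triggering loads that are already in flight.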

But we don’t go overboard with preloading in the frontend because we still haven’t actually loaded the back-end that actually does all the emaily email stuff. The back-end is also chopped up into optimized layers along account type lines and online/offline needs, but the main optimized JS file still weighs in at something like 17 thousand lines of code with newlines retained.

So once our UI logic is loaded, it’s time to kick off loading the back-end. And in order to avoid impacting the responsiveness of the UI both while it loads and when we’re doing steady-state processing, we run it in a DOM Worker.

Being Responsive: Workers and SharedWorkers

DOM Workers are background JS threads that lack access to the page’s DOM, communicating with their owning page via message passing with postMessage. Normal workers are owned by a single page. SharedWorkers can be accessed by multiple pages from the same document origin.
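The page side of that message passing can be wrapped in a small request/reply helper. The sketch below is an assumption about how one might structure it, not the email app's actual bridge: the createBridge name and the { id, type, args } and { id, result } message shapes are hypothetical. It accepts any object with postMessage and an onmessage slot, which a Worker provides.

```javascript
// Wrap a worker-like port so callers can send a request and receive the
// matching reply through a callback.
function createBridge(port) {
  let nextId = 1;
  const pending = new Map();

  // Replies carry the id of the request they answer.
  port.onmessage = function (event) {
    const msg = event.data;
    const callback = pending.get(msg.id);
    if (callback) {
      pending.delete(msg.id);
      callback(msg.result);
    }
  };

  return {
    send: function (type, args, callback) {
      const id = nextId++;
      pending.set(id, callback);
      port.postMessage({ id: id, type: type, args: args });
    }
  };
}
```

In a page this would be used as createBridge(new Worker('backend.js')), where the file name is a placeholder and the worker script is expected to post back { id, result } for each request it handles.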

By doing this, we stay out of the way of the main thread. This is getting less important as browser engines support Asynchronous Panning & Zooming or “APZ” with hardware-accelerated composition, tile-based rendering, and all that good stuff. (Some might even call it magic.)

When Firefox OS started, we didn’t have APZ, so any main-thread logic had the serious potential to result in janky scrolling and the impossibility of rendering at 60 frames per second. It’s a lot easier to get 60 frames-per-second now, but even asynchronous pan and zoom potentially has to wait on dispatching an event to the main thread to figure out if the user’s tap is going to be consumed by app logic and preventDefault called on it. APZ does this because it needs to know whether it should start scrolling or not.

And speaking of 60 frames-per-second…

Being Fast: Virtual List Widgets

…the heart of a mail application is the message list. The expected UX is to be able to fling your way through the entire list of what the email app knows about and see the messages there, just like you would on a native app.

This is admittedly one of the areas where native apps have it easier. There are usually list widgets that explicitly have a contract that says they request data on an as-needed basis. They potentially even include data bindings so you can just point them at a data-store.

But HTML doesn’t yet have a concept of instantiate-on-demand for the DOM, although it’s being discussed by Firefox layout engine developers. For app purposes, the DOM is a scene graph. An extremely capable scene graph that can handle huge documents, but there are footguns and it’s arguably better to err on the side of fewer DOM nodes.

So what the email app does is we create a scroll-region div and explicitly size it based on the number of messages in the mail folder we’re displaying. We create and render enough message summary nodes to cover the current screen, 3 screens worth of messages in the direction we’re scrolling, and then we also retain up to 3 screens worth in the direction we scrolled from. We also pre-fetch 2 more screens worth of messages from the database. These constants were arrived at experimentally on prototype devices.
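The arithmetic behind that windowing can be sketched as a pure function. This assumes fixed-height message rows, which the text does not state, and the function and option names are hypothetical; the 3-screen and 2-screen constants are the ones quoted above.

```javascript
const RENDER_SCREENS = 3;   // screens of nodes kept on each side of the viewport
const PREFETCH_SCREENS = 2; // extra screens requested from the database

function clamp(value, min, max) {
  return Math.min(Math.max(value, min), max);
}

// opts: { scrollTop, viewportHeight, itemHeight, count, direction }
// direction >= 0 means scrolling down. Assumes count > 0.
// All returned index ranges are inclusive and clamped to the list bounds,
// so at the very top or bottom the prefetch range can overlap the rendered one.
function computeListWindow(opts) {
  const perScreen = Math.ceil(opts.viewportHeight / opts.itemHeight);
  const lastIndex = opts.count - 1;
  const firstVisible = Math.floor(opts.scrollTop / opts.itemHeight);
  const lastVisible = firstVisible + perScreen - 1;

  const renderStart = clamp(firstVisible - RENDER_SCREENS * perScreen, 0, lastIndex);
  const renderEnd = clamp(lastVisible + RENDER_SCREENS * perScreen, 0, lastIndex);

  const prefetchCount = PREFETCH_SCREENS * perScreen;
  const scrollingDown = opts.direction >= 0;
  const prefetchStart = scrollingDown ? renderEnd + 1 : renderStart - prefetchCount;
  const prefetchEnd = scrollingDown ? renderEnd + prefetchCount : renderStart - 1;

  return {
    totalHeight: opts.count * opts.itemHeight, // explicit size of the scroll region
    renderStart: renderStart,
    renderEnd: renderEnd,
    prefetchStart: clamp(prefetchStart, 0, lastIndex),
    prefetchEnd: clamp(prefetchEnd, 0, lastIndex)
  };
}
```

A scroll handler would call this with the current scrollTop, then create, recycle or drop nodes so that exactly the indices from renderStart to renderEnd are in the DOM.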

We listen to “scroll” events and issue database requests and move DOM nodes around and update them as the user scrolls. For any potentially jarring or expensive transitions such as coordinate space changes from new messages being added above the current scroll position, we wait for scrolling to stop.

Nodes are absolutely positioned within the scroll area using their ‘top’ style but translation transforms also work. We remove nodes from the DOM, then update their position and their state before re-appending them. We do this because the browser APZ logic tries to be clever and figure out how to create an efficient series of layers so that it can pre-paint as much of the DOM as possible in graphic buffers, AKA layers, that can be efficiently composited by the GPU. Its goal is that when the user is scrolling, or something is being animated, it can just move the layers around the screen or adjust their opacity or other transforms without having to ask the layout engine to re-render portions of the DOM.

When our message elements are added to the DOM with an already-initialized absolute position, the APZ logic lumps them together as something it can paint in a single layer along with the other elements in the scrolling region. But if we start moving them around while they’re still in the DOM, the layerization logic decides that they might want to independently move around more in the future and so each message item ends up in its own layer. This slows things down. But by removing them and re-adding them it sees them as new with static positions and decides that it can lump them all together in a single layer. Really, we could just create new DOM nodes, but we produce slightly less garbage this way and in the event there’s a bug, it’s nicer to mess up with 30 DOM nodes displayed incorrectly rather than 3 million.
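The remove, update, re-append sequence is short enough to show in full. This is a sketch with hypothetical names (repositionNode, updateState), not the app's actual code; it only relies on the standard removeChild and appendChild DOM methods and the node's style.top property.

```javascript
// Move a message node by detaching it first, so that it re-enters the DOM
// with its final position and content already set.
function repositionNode(container, node, top, updateState) {
  container.removeChild(node);
  node.style.top = top + 'px';
  if (updateState) {
    updateState(node); // e.g. fill in the new message's subject and sender
  }
  container.appendChild(node);
}
```

The ordering is the whole point: nothing about the node changes while it is attached, so from the layerization logic's perspective it is a new node with a static position.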

But as neat as the layerization stuff is to know about on its own, I really mention it to underscore 2 suggestions:

1, Use a library when possible. Getting on and staying on APZ fast-paths is not trivial, especially across browser engines. So it’s a very good idea to use a library rather than rolling your own.

2, Use developer tools. APZ is tricky to reason about and even the developers who write the Async pan & zoom logic can be surprised by what happens in complex real-world situations. And there ARE developer tools available that help you avoid needing to reason about this. Firefox OS has easy on-device developer tools that can help diagnose what’s going on or at least help tell you whether you’re making things faster or slower:

– it’s got a frames-per-second overlay; you do need to scroll like mad to get the system to want to render 60 frames-per-second, but it makes it clear what the net result is