You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS source at http://planet.mozilla.org/rss20.xml and is updated as that source is updated. It may not match the content of the original page. The feed was created automatically at the request of readers of this RSS feed.

For any questions about this service, use the contact information page.


Daniel Stenberg: The state and rate of HTTP/2 adoption

The HTTP/2 protocol as defined in draft-17 was approved by the IESG and is being implemented and deployed widely on the Internet today, even before it has turned up as an actual RFC. Back in February, already upwards of 5% of the web traffic, or maybe even more, was using HTTP/2.


My prediction: We’ll see >10% usage by the end of the year, possibly as much as 20-30%, depending a little on how fast some of the major and most popular platforms switch (Facebook, Instagram, Tumblr, Yahoo and others). In 2016 we might see HTTP/2 serve a majority of all HTTP requests, at least those made by browsers.

Counted how? Yeah, the second I mention a rate I know you guys will start throwing hard questions at me, like what exactly I mean. What is the Internet and how would I count this? Let me express it loosely: the share of HTTP requests (by volume of requests, not by bandwidth of data and not just counting browsers). I don’t know how to measure it, so we can debate the numbers in December and I guess we can all end up being right depending on what we think is the right way to count!

Who am I to tell? I’m just a person deeply interested in protocols and HTTP/2, so I’ve been involved in the HTTP work group for years and I also work on several HTTP/2 implementations. You can guess as well as I, but this just happens to be my blog!

The HTTP/2 Implementations wiki page currently lists 36 different implementations. Let’s take a closer look at the current situation and prospects in some areas.

Browsers

Firefox and Chrome have had solid support for a while. Just use a recent version and you’re good.

Internet Explorer has been shown in a tech preview that spoke HTTP/2 fine. So, run that or wait for it to ship in a public version soon.

There is no news from Apple regarding support in Safari. Give up on them and switch over to a browser that keeps up!

Other browsers? Ask them what they do, or replace them with a browser that supports HTTP/2 already.

My estimate: By the end of 2015 the leading browsers with a market share way over 50% combined will support HTTP/2.

Server software

Apache HTTPd is still the most popular web server software on the planet. mod_h2 is a recent module for it that can speak HTTP/2 – still in “alpha” state. Give it time and help out in other ways and it will pay off.

Nginx has told the world they’ll ship HTTP/2 support by the end of 2015.

IIS was showing off HTTP/2 in the Windows 10 tech preview.

H2O is a newcomer on the market with a focus on performance, and it has shipped with HTTP/2 support for a while already.

nghttp2 offers an HTTP/2 => HTTP/1.1 proxy (and lots more) to front your old server with, which can help you deploy HTTP/2 at once.

Apache Traffic Server supports HTTP/2 fine. Will show up in a release soon.

Also, netty, jetty and others are already on board.

HTTPS initiatives like Let’s Encrypt help make it even easier to deploy and run HTTPS on your own sites, which will smooth the way for HTTP/2 deployments on smaller sites as well. Getting sites onto the TLS train will remain a hurdle and will perhaps be the single biggest obstacle to even wider adoption.

My estimate: By the end of 2015 the leading HTTP server products with a market share of more than 80% of the server market will support HTTP/2.

Proxies

Squid is working on HTTP/2 support.

HAproxy? I haven’t gotten a straight answer from that team, but Willy Tarreau has been actively participating in the HTTP/2 work all the time so I expect them to have work in progress.

While very critical to the protocol, PHK of the Varnish project has said that Varnish will support it if it gets traction.

My estimate: By the end of 2015, the leading proxy software projects will start to have or are already shipping HTTP/2 support.

Services

Google (including YouTube and other sites in the Google family) and Twitter have run with HTTP/2 enabled for months already.

Lots of existing services offer SPDY today and I would imagine most of them are pondering how to switch to HTTP/2, as Chrome has already announced that it will drop SPDY during 2016 and Firefox will also abandon SPDY at some point.

My estimate: By the end of 2015 lots of the top sites of the world will be serving HTTP/2 or will be working on doing it.

Content Delivery Networks

Akamai plans to ship HTTP/2 by the end of the year. Cloudflare have stated that they “will support HTTP/2 once NGINX with it becomes available”.

Amazon has not given any response publicly that I can find for when they will support HTTP/2 on their services.

Not a totally bright situation but I also believe (or hope) that as soon as one or two of the bigger CDN players start to offer HTTP/2 the others might feel a bigger pressure to follow suit.

Non-browser clients

curl and libcurl have supported HTTP/2 for months, and the HTTP/2 implementations page lists available implementations for just about all major languages now, like node-http2 for JavaScript, http2-perl, http2 for Go, Hyper for Python, OkHttp for Java, http-2 for Ruby and more. If you do HTTP today, you should be able to switch over to HTTP/2 relatively easily.
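Before switching a client over, it can help to check whether a given server is willing to speak HTTP/2 at all. The following is a minimal sketch, not taken from the original post, that uses only the Python standard library. It assumes the server negotiates HTTP/2 over TLS via ALPN with the `h2` protocol identifier; the function names are made up for illustration.

```python
import socket
import ssl


def make_h2_context():
    """Build a TLS client context that offers HTTP/2 first, then HTTP/1.1."""
    context = ssl.create_default_context()
    # "h2" is the ALPN identifier for HTTP/2 over TLS (assumed here).
    context.set_alpn_protocols(["h2", "http/1.1"])
    return context


def negotiated_protocol(host, port=443, timeout=5.0):
    """Connect to host and return the ALPN protocol the server selected.

    Returns None when the server did not take part in ALPN at all.
    """
    context = make_h2_context()
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with context.wrap_socket(raw, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

Calling `negotiated_protocol("example.org")` (a placeholder host name) needs network access; a result of `"h2"` would mean the server picked HTTP/2 during the TLS handshake.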

More?

I’m sure I’ve forgotten a few obvious points but I might update this as we go as soon as my dear readers point out my faults and mistakes!

How long is HTTP/1.1 going to be around?

My estimate: HTTP/1.1 will be around for many years to come. There is going to be a double-digit percentage share of the existing sites on the Internet (and who knows how many that aren’t even accessible from the Internet) for the foreseeable future. For technical reasons, for philosophical reasons and for good old we’ll-never-touch-it-again reasons.

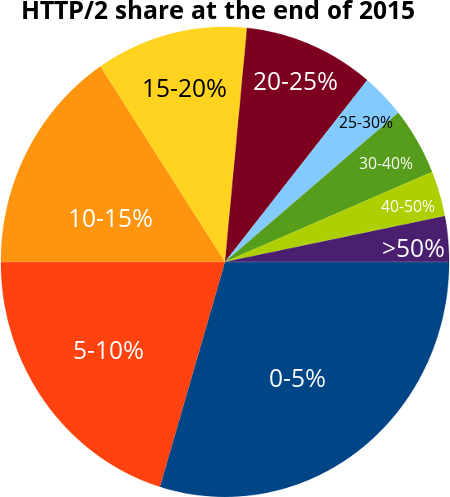

The survey

Finally, I asked friends on twitter, G+ and Facebook what they think the HTTP/2 share would be by the end of 2015 with the help of a little poll. This does of course not make it into any sound or statistically safe number but is still just a collection of what a set of random people guessed. A quick poll to get a rough feel. This is how the 64 responses I received were distributed:

Evidently, if you take a median out of these results you can see that the middle point is between 5-10 and 10-15. I’ll make it easy and say that the poll showed a group estimate of 10%: ten percent of the total HTTP traffic to be HTTP/2 at the end of 2015.

I didn’t vote here but I would’ve checked the 15-20 choice, thus a fair bit over the median but only slightly into the top quarter.

In plain numbers this was the distribution of the guesses:

| Guess | Share of responses (count) |
| --- | --- |
| 0-5% | 29.1% (19) |
| 5-10% | 21.8% (13) |
| 10-15% | 14.5% (10) |
| 15-20% | 10.9% (7) |
| 20-25% | 9.1% (6) |
| 25-30% | 3.6% (2) |
| 30-40% | 3.6% (3) |
| 40-50% | 3.6% (2) |
| more than 50% | 3.6% (2) |
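The median claim can be checked from the counts in parentheses. This is a small sketch in Python, assuming the counts (rather than the percentages) are the authoritative numbers; the helper name `bucket_of` is made up for illustration.

```python
from itertools import accumulate

# Counts per bucket, copied from the table above (they add up to 64).
buckets = ["0-5%", "5-10%", "10-15%", "15-20%", "20-25%",
           "25-30%", "30-40%", "40-50%", "more than 50%"]
counts = [19, 13, 10, 7, 6, 2, 3, 2, 2]


def bucket_of(rank, counts):
    """Return the index of the bucket holding the rank-th response (1-based)."""
    for index, running_total in enumerate(accumulate(counts)):
        if rank <= running_total:
            return index
    raise ValueError("rank exceeds the number of responses")


total = sum(counts)
# With an even number of responses the median sits between the two middle ones.
lower_middle, upper_middle = total // 2, total // 2 + 1
median_buckets = (buckets[bucket_of(lower_middle, counts)],
                  buckets[bucket_of(upper_middle, counts)])
print(total, median_buckets)
```

With 64 responses the two middle guesses are the 32nd and 33rd, which fall in the 5-10% and 10-15% buckets respectively, in line with the middle point described in the text.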

http://daniel.haxx.se/blog/2015/03/31/the-state-and-rate-of-http2-adoption/

Gervase Markham: Happy Birthday, Mozilla!

17 years ago today, the code shipped, and the Mozilla project was born. I’ve been involved for over 15 of those years, and it’s been a fantastic ride. With Firefox OS taking off, and freedom coming to the mobile space (compare: when the original code shipped, the hottest new thing you could download to your phone was a ringtone), I can’t wait to see where we go next.

http://feedproxy.google.com/~r/HackingForChrist/~3/oUaOZ-1ybFA/

Tim Guan-tin Chien: Service Worker and the grand re-architecture proposal of Firefox OS Gaia

TL;DR: Service Worker, a new Web API, can be used as a means to re-engineer client-side web applications, and as a departure from the single-page web application paradigm. The details of realizing that are being experimented with on Gaia and proposed. In Gaia, particularly, “hosted packaged apps” serve as a new iteration of the security model work, to make sure Service Workers work with Gaia.

Last week, I spent an entire week in face-to-face meetings, going through the technical plans for re-architecting Gaia apps, the web applications that power the front-end of Firefox OS, and the management plan for resourcing and deployment. Given that there were only a few developers in the meeting, and given the public promise of “the new architecture”, I think it makes sense to do a recap of what’s being proposed and what challenges are already foreseen.

Using Service Worker

Before diving into the re-architecture plan, we need to explain what Service Worker is. From a broader perspective, Service Worker can be understood as simply a browser feature/Web API that allows web developers to insert a JavaScript-implemented proxy between the server content and the actual page shown. It is the latest piece of sexy Web technology that is heavily marketed by Google. The platform engineering team of Mozilla is devoted to shipping it as well.

Many things previously not possible can be done with the worker proxy. For starters, it could replace AppCache while keeping the flexibility of managing the cache in the hands of the app. The “flexibility” bit is the part where it gets interesting: theoretically everything not touching the DOM can be moved into the worker, effectively re-creating the server-client architecture without a real remote HTTP server.
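The proxy idea can be modeled outside the browser. Real Service Workers are written in JavaScript and react to fetch events; the Python sketch below is only a language-neutral toy model of an app-controlled, cache-first proxy. The class `WorkerProxy` and the function `fake_server` are hypothetical names, not part of any Web API.

```python
class WorkerProxy:
    """Toy stand-in for a Service Worker sitting between page and server."""

    def __init__(self, fetch_from_server):
        # fetch_from_server is a hypothetical callable standing in for the network.
        self._fetch_from_server = fetch_from_server
        self._cache = {}

    def handle_fetch(self, url):
        # Cache-first strategy: the app, not the browser, decides what to reuse.
        if url not in self._cache:
            self._cache[url] = self._fetch_from_server(url)
        return self._cache[url]


server_hits = []


def fake_server(url):
    """Pretend remote server that records every request it receives."""
    server_hits.append(url)
    return "<html>content of %s</html>" % url


proxy = WorkerProxy(fake_server)
first = proxy.handle_fetch("/index.html")
second = proxy.handle_fetch("/index.html")
print(first == second, len(server_hits))
```

The second request is answered from the proxy's own cache, so the pretend server is contacted only once. Any other policy (network-first, generated responses) could be swapped into `handle_fetch`, which is the kind of flexibility the paragraph above refers to.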

The Gaia Re-architecture Plan

Indeed, that’s what the proponents of the re-architecture are aiming for: my colleagues, most of whom are based in Paris, proposed such an architecture as the 2015 iteration of, or departure from, the “traditional” single-page web application. What’s more, the intention is to create a framework where the backend, or “server”, parts of the code are individually contained in their own worker threads, with strong interface definitions to achieve maximum reusability of these components (much like Web APIs themselves, if I understand it correctly).

It does not, however, tie itself to a specific front-end framework. Users of the proposed framework should be free to use any strategy they feel comfortable with: the UI can be as hardcore as being entirely rendered in WebGL, or simply plain HTML/CSS/jQuery.

The plan is made public on a Wiki page, where I expect there will be changes as progress is made. This post intentionally does not cover many of the features the architecture promises to unlock, in favor of fresh content (as opposed to a copy-edit), so I recommend that readers check out the page.

Technical Challenges around using Service Workers

There are two major technical challenges: one is the possible performance (memory and cold-launch time) impact of fitting this multi-thread framework and its binding middleware into a phone; the other is the security model changes needed to make the framework usable in Gaia.

To speak about the backend, or “server” side: the one key difference between real remote servers and workers is that one lives in data centers with an endless power supply, and the other depends on your phone battery. Remote servers can push constructed HTML as soon as possible, but a local web app backed by workers might need to wait for the worker to spin up. For that, the architecture might depend on yet another out-of-spec feature of Service Worker, a cache that the worker thread has control of. The browser should render this pre-constructed HTML without waiting for the worker to launch.

Without considering the cache feature, and considering the memory usage, we kind of get to a point where we can only say anything for sure about performance once there is an implementation to measure. The other solution the architecture proposes to work around that on low-end phones would be to “merge back” the back-end code into one single thread, although I personally suspect there is a risk of timing issues, as doing so would essentially require the implementation to simulate multi-threading in one single thread. We will just have to wait for the real implementation.

The security model part is really tricky. Gaia currently exists as packaged zips shipped in the phone and updated with OTA images, pinning the Gecko version it ships along with. Packaging has been a sad workaround since Firefox OS v1.0. The primary reasons for doing so are (1) we want to make sure proprietary APIs do not pollute the general Web and (2) we want a trusted 3rd party (Mozilla) to be involved in security decisions for users by checking and signing content.

The current Gecko implementation of Service Worker does not work with the classic packaged apps, which are served from an app: URL. Incidentally, the app: URL is something we feel is not webby enough, so we are trying to get rid of it. The proposal of the week is called “hosted packaged apps”, which serve packages from the real, remote Web and allow content in the package to be referenced directly with a special path syntax. We can’t get rid of packages yet because of the reasons stated above, but serving content over HTTP should allow us to use Service Worker from trusted content, i.e. Gaia.

One thing to note about this mix is that a signed package is offline by default in its own right, and its updates must be signed as well. The Service Worker spec will be violated a bit in order to make them work well together; that is a detail currently being worked out.

Technical Challenges on the proposed implementation

As already mentioned in the paragraph on Service Worker challenges, one worker might introduce performance issues, let alone many workers. Each worker thread implies memory usage as well. For that, the proposal is for the framework to control the start-up and shutdown of threads (i.e. parts of the app) as necessary. But again, we will have to wait for the implementation and evaluate it.

The proposed framework asks for Web API access to be restricted to the “back-end” only, to decouple it from the UI front-end as far as possible. However, having few Web APIs available in the worker threads will be a problem. The framework proposes to work around this with a message routing bus that sends the calls back to the UI thread, and by asking Gecko to implement APIs for workers from time to time.

As an abstraction over platform worker threads and an attempt to abstract away platform/component changes, the architecture deserves special attention regarding classic abstraction problems: abstractions eventually leak, and abstraction always comes with overhead, whether it is runtime performance overhead or the human cost of learning or debugging the abstraction. I am not the expert; Joel is.

Technical Challenges on enabling Gaia

Arguably, Gaia is one of the most complex web projects in the industry. We inherit a strong Mozilla tradition of continuous integration. The architecture proposal calls for a strong separation between the front-end application codebase and the back-end application codebase, including separate integration of the two when building for different form factors. The integration plan itself is something worth rethinking to meet such a requirement.

With hosted packaged apps, the architecture proposal unlocks the possibility of deploying Gaia from the Web, instead of always shipping it with the OTA image. How to match Gaia/Gecko versions all the way down to every Nightly build is something to figure out too.

Conclusion

Given that everything is in flux and given the immense amount of work (as outlined above), it’s hard to achieve any of the end goals without prioritizing the internals and landing/deploying them separately. From last week, it’s already concluded that parts of the security model changes will block Service Worker usage in signed packages; we would need to identify those parts and resolve them first. It’s also important to make sure the implementation does not suffer from any performance issues before deploying the code and starting the major work of revamping every app. We should be able to figure out a scaled-back version of the work and realize that first.

If we can plan and manage the work properly, I remain optimistic about the technical outcome of the architecture proposal. I trust my colleagues, particularly those who made the architecture proposal, to make reasonable technical judgements. It’s been years since the introduction of the single-page web application; it is indeed worth rethinking what’s possible if we depart from it.

The key here is trying not to do all the things at once: strengthen what’s working and amend what’s not, along the process of turning the proposal into a usable implementation.

Air Mozilla: Mozilla Winter of Security: Seasponge, a tool for easy Threat Modeling

Threat modeling is a crucial but often neglected part of developing, implementing and operating any system. If you have no mental model of a system...


https://air.mozilla.org/mozilla-winter-of-security-seasponge-a-tool-for-easy-threat-modeling/

Air Mozilla: Mozilla Weekly Project Meeting

The Monday Project Meeting


https://air.mozilla.org/mozilla-weekly-project-meeting-20150330/

QMO: Improving Recognition

Earlier this month I blogged about improving recognition of our Mozilla QA contributors. The goal was to get some constructive feedback this quarter and to take action on that feedback next quarter. As this quarter is coming to a close, we’ve received four responses. I’d like to think a lot more people out there have ideas and experiences from which we could learn. This is an opportunity to make your voice heard and make contributing at Mozilla better for everyone.

Please, take some time this week to send me your feedback.

Thank you

Zack Weinberg: Announcing readings.owlfolio.org

I’d like to announce my new project, readings.owlfolio.org, where I will be reading and reviewing papers from the academic literature mostly (but not exclusively) about information security. I made a false start at this near the end of 2013 (it is the same site that’s been linked under readings in the top bar since then) but now I have a posting queue and a rhythm going. Expect three to five reviews a week. It’s not going to be syndicated to Planet Mozilla, but I may mention it here when I post something I think is of particular interest to that audience.

Longtime readers of this blog will notice that it has been redesigned and matches readings. That process is not 100% complete, but it’s close enough that I feel comfortable inviting people to kick the tires. Feedback is welcome, particularly regarding readability and organization; but unfortunately you’re going to have to email it to me, because the new CMS has no comment system. (The old comments have been preserved.) I’d also welcome recommendations of comment systems which are self-hosted, open-source, database-free, and don’t involve me manually copying comments out of my email. There will probably be a technical postmortem on the new CMS eventually.

(I know about the pages that are still using the old style sheet.)

Michael Verdi: 5 Years at Mozilla

Today is my 5 year Mozilla anniversary. Back in 2010, I joined the support team to create awesome documentation for Firefox. That quickly evolved into looking for ways to help users before they ever reach the support site. And this year I joined the Firefox UX team to expand on that work. A lot of things have changed in those five years but Mozilla’s work is as relevant as ever. That’s why I’m even more excited about the work we’re doing today than I was back in 2010. These last 5 years have been amazing and I’m looking forward to many more to come.

For fun, here’s some video from my first week.

Planet Mozilla viewers – you can watch this video on YouTube.

Mozilla Science Lab: Mozilla Science Lab Week in Review March 23-29

The Week in Review is our weekly roundup of what’s new in open science from the past week. If you have news or announcements you’d like passed on to the community, be sure to share on Twitter with @mozillascience and @billdoesphysics, or join our mailing list and get in touch there.

Conferences & Events

- The International Science 2.0 Conference in Hamburg ran this past week; a couple of highlights from the conference:

  - The OKFN highlighted their proposed Open Definition, to help bring technical and legal clarity to what is meant by ‘openness’ in science.

  - GROBID (site, code), a tool for extracting bibliographic information from PDFs, was well-received by attendees.

- The first World Seabird Twitter Conference presentations have been compiled in a post over at Storify (what’s a ‘Twitter Conference’? Check out their event info here).

- Document Freedom Day was this past Wednesday; more than 50 events worldwide highlighted the value of open standards, and the key role of interoperability in functional openness.

- Right here at the Mozilla Science Lab, we ran our first Ask us Anything forum event on ‘local user groups for coding in research’, co-organized with Noam Ross – check out the thread and our reflections on the event.

Blogs & Papers

- The Electronic Frontier Foundation came out in support of the Fair Access to Science & Technology Research (FASTR) bill, recently reintroduced to the US Congress as discussed on last week’s Week in Review.

- Noam Ross posted a detailed and data-driven analysis of what common errors people learning R struggle with (spoiler: an enormous fraction of them boil down to missing information). Ross goes on to consider how these trends can inform an effective strategy for teaching new R users how to read and debug common errors.

- Elizabeth Gilbert wrote a thoughtful description of both the value and the strategy of open science for junior researchers, both in her field of Social Psychology, and beyond.

- Deep Ganguli published an illustrated journey of his first experiences using R for data manipulation and visualization. An experienced Pythonista, Ganguli reports on the advantages of each language, and the importance of using the right tool for the job.

- Titus Brown got down to brass tacks on exactly why open data is so important, particularly in the context of the biological sciences.

- Sabina Leonelli et al published a thoughtful discussion of practical and systemic ways to implement open science standards in real research.

- Kratz & Strasser released a paper on Researchers Perspectives on Publication & Peer Review of Data (presented as an IPython Notebook), that examines how the research community envisions open data, and discusses how those goals inform the implementation of successful open data strategy.

- Michael Strack began a blog series on his ongoing journey tackling a major data science challenge in his biological research. Strack describes the series as a case study in the importance of not working in isolation, and the value of a supportive and interactive research community.

- Ben Marwick reflected on his recent experiences teaching Software Carpentry to archaeology students in Myanmar.

Government & Policy

- The 5th Annual White House Science Fair ran this past week; in addition to a $240 million commitment to building STEM education in the US, the Office of Science & Technology Policy plans to convene a Citizen Science Forum before the end of this year to examine how to scale and support citizen science efforts.

Open Projects

- Work continues on ScienceToolbox (code), an index of scientific software packages – check out their issues for ways to get involved.

- Arfon Smith pushed a new release of the popular Journal of Brief Ideas.

http://mozillascience.org/mozilla-science-lab-week-in-review-march-23-29/

Andreas Gal: Data is at the heart of search. But who has access to it?

In my February 23 blog post, I gave a brief overview of how search engines have evolved over the years and how today’s search engines learn from past searches to anticipate which results will be most relevant to a given query. This means that who succeeds in the $50 billion search business and who doesn’t mostly depends on who has access to search data. In this blog post, I will explore how search engines have obtained queries in the past and how (and why) that’s changing.

For some 90% of searches, a modern search engine analyzes and learns from past queries, rather than searching the Web itself, to deliver the most relevant results. Most of the time, this approach yields better results than full text search. The Web has become so vast that searches often find millions or billions of result pages that are difficult to rank algorithmically.

One important way a search engine obtains data about past queries is by logging and retaining search results from its own users. For a search engine with many users, there’s enough data to learn from and make informed predictions. It’s a different story for a search engine that wants to enter a new market (and thus has no past search data!) or compete in a market where one search engine is very dominant.

In Germany, for example, where Google has over 95% market share, competing search engines don’t have access to adequate past search data to deliver search results that are as relevant as Google’s. And, because their search results aren’t as relevant as Google’s, it’s difficult for them to attract new users. You could call it a vicious circle.

Search engines with small user bases can acquire search traffic by working with large Internet service providers (ISPs; think Comcast, Verizon, etc.) to capture searches that go from users’ browsers to competing search engines. This is one option that was available in the past to Google’s competitors such as Yahoo and Bing as they attempted to become competitive with Google’s results.

In an effort to improve privacy, Google began using encrypted connections to make searches unintelligible to ISPs. One side effect was that an important avenue was blocked for competing search engines to obtain data that would improve their products.

An alternative to working with ISPs is to work with popular content sites to track where visitors are coming from. In Web lingo this is called a “referer header.” When a user clicks on a link, the browser tells the target site where the user was before (what site “referred” the user). If the user was referred by a search result page, that address contains the query string, making it possible to associate the original search with the result link. Because the vast majority of Web traffic goes to a few thousand top sites, it is possible to reconstruct a pretty good model of what people frequently search for and what results they follow.

Until late 2011, that is, when Google began encrypting the query in the referer header. Today, it’s no longer possible for the target site to reconstruct the user’s original query. This is of course good for user privacy—the target site knows only that a user was referred from Google after searching for something. At the same time, though, query encryption also locked out everyone (except Google) from accessing the underlying query data.
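The referer mechanism described above can be sketched in a few lines of Python. This is only an illustration: the host `search.example` and the `q` parameter name are assumptions, not details taken from any particular search engine.

```python
from urllib.parse import parse_qs, urlsplit


def search_query_from_referer(referer, param="q"):
    """Return the search terms embedded in a referer URL, or None if absent."""
    query_string = urlsplit(referer).query
    values = parse_qs(query_string).get(param)
    return values[0] if values else None


# Hypothetical referer values as a target site might log them.
with_query = search_query_from_referer(
    "http://search.example/results?q=open+web+standards")
without_query = search_query_from_referer("https://search.example/")
print(with_query)
print(without_query)
```

The first call recovers the search terms from the referring address. The second models the situation after the query was removed from the referer: the target site still learns which search engine sent the visitor, but there are no terms left to extract.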

This chain of events has led to a “winner take all” situation in search, as a commenter on my previous blog post noted: a successful search engine is likely to get more and more successful, leaving in the dust the competitors who lack access to vital data.

These days, the search box in the browser is essentially the last remaining place where Google’s competitors can access a large volume of search queries. In 2011, Google famously accused Microsoft’s Bing search engine of doing exactly that: logging Google search traffic in Microsoft’s own Internet Explorer browser in order to improve the quality of Bing results. Having almost tripled the market share of Chrome since then, Google will have to worry much less about this in the future. Its competitors will not be able to use Chrome’s search box to obtain data the way Microsoft did with Internet Explorer in the past.

So, if you have ever wondered why, in most markets, Google’s search results are so much better than their competitors’, don’t assume it’s because Google has a better search engine. The real reason is that Google has access to so much more search data. And, the company has worked diligently over the past few years to make sure it stays that way.


http://andreasgal.com/2015/03/30/data-is-at-the-heart-of-search-but-who-has-access-to-it/

Rob Hawkes: Join 250 others in the Open Data Community on Slack

This is a cross-post with the ViziCities blog.

This is a short and personal post written with the hope that it encourages you to join the new Open Data Community on Slack – a place for realtime communication and collaboration on the topic of open data.

It's important to foster open data, just as it is to provide a place for the discussion and sharing of ideas around the production and use of open data. It's for this reason that we've created the Open Data Community, in the hope of not only giving something back for the things that we have taken, but also providing a place for people to come together to help further this common goal.

The Open Data Community is not ViziCities; it's a group of like-minded individuals, non-profits and corporations alike. It's for anyone interested in open data, as well as for those who produce, use or are otherwise involved in its lifecycle.

In just 2 days the community has grown to 250 strong – I look forward to seeing you there and talking open data!

Robin - ViziCities Founder

http://feedproxy.google.com/~r/rawkes/~3/0k5SGj4rUBE/join-the-open-data-community-on-slack

Cameron Kaiser: 31.6.0 available

Geoff Lankow: 1 Million Add-Ons Downloaded

This is a celebratory post. Today I learned that the add-ons I've created for Firefox, Thunderbird, and SeaMonkey have been downloaded over 1,000,000 times in total. For some authors I'm sure that's not a major milestone – some add-ons have more than a million users – but for me it's something I think I can be proud of. (By comparison my add-ons have a collective 80,000 users.)

Here are some of them:

I started six years ago with Shrunked Image Resizer, which makes photos smaller for uploading them to websites. Later I modified it to also make photos smaller in Thunderbird email, and that's far more popular than the original purpose.

Around the same time I got frustrated when developing websites, having to open the page I was looking at in different browsers to test. The process involved far too many keystrokes and mouse clicks for my liking, so I created Open With, which turned that into a one-click job.

Later on I created Tab Badge, to provide a visual alert of stuff happening with a tab. This can be quite handy when watching trees, as well as with Twitter and Facebook.

Then there's New Tab Tools – currently my most popular add-on. It's the standard Firefox new tab page, plus a few things, and minus a few things. Kudos to those of you who wrote the built-in page, but I like mine better. :-)

Lastly I want to point out my newest add-on, which I think will do quite well once it gets some publicity. I call it Noise Control and it provides a visual indicator of tabs playing audio. (Yes, just like Chrome does.) I've seen lots of people asking for this sort of thing over the years, and the answer was always "it can't be done". Yes it can.

Big thanks to all of you reading this who've downloaded my add-ons, use them, helped me fix bugs, translated, sent me money, answered my inane questions or otherwise done something useful. Thank you. Really.

http://www.darktrojan.net/news/2015-03/1-million-add-ons-downloaded

|

|

Robert O'Callahan: Eclipse + Gecko = Win |

With Eclipse 4.4.1 CDT and the in-tree Eclipse project builder (./mach build-backend -b CppEclipse), the Eclipse C++ tools work really well on Gecko. Features I really enjoy:

- Ctrl-click to navigate to definitions/declarations

- Ctrl-T to pop up the superclasses/subclasses of a class, or the overridden/overriding implementations of a method

- Shift-ctrl-G to find all uses of a declaration (not 100% reliable, but almost always good enough)

- Instant coloring of syntax errors as you type (useless messages, but still worth having)

- Instant coloring of unknown identifier and type errors as you type; not 100% reliable, but good enough that most of my compiler errors are caught before doing a build.

- Really good autocomplete. E.g. given

nsTArray<nsRefPtr<Foo>> array;

for (auto& v : array) {

v->P

Eclipse will autocomplete methods of Foo starting with P ... i.e., it handles "auto", C++ for-range loops, nsTArray and nsRefPtr operator overloading.

- Shift-ctrl-R: automated renaming of identifiers. Again, not 100% reliable but a massive time saver nonetheless.

Thanks to Jonathan Watt and Benoit Girard for the mach support and other Eclipse work over the years!

I assume other IDEs can do these things too, but if you're not using a tool at least this powerful, you might be leaving some productivity on the table.

With Eclipse, rr, and a unified hg repo, hacking Gecko has never felt so good :-).

|

|

L. David Baron: The need for government |

I've become concerned about the attitudes towards government in the technology industry. It seems to me (perhaps exaggerating a little) that much of the effort in computer security these days considers the major adversary to be the government (whether acting legally or illegally), rather than those attempting to gain illegal access to systems or information (whether private or government actors).

Democracy requires that the government have power. Not absolute power, but some, limited, power. Widespread use of technology that makes it impossible for the government to exercise certain powers could be a threat to democracy.

Let's look at one recent example: a recent article in the Economist about ransomware: malicious software that encrypts files on a computer, whose authors then demand payment to decrypt the files. The payment demanded these days is typically in Bitcoin, a system designed to avoid the government's power. This means that Bitcoin avoids the mechanisms that the international system has to find and catch criminals by following the money they make, and thus makes a perfect system for authors of ransomware and other criminals. The losers are those who don't have the mix of computer expertise and luck needed to avoid the ransomware.

One of the things that democracies often try to do is to protect the less powerful. For example, laws to protect property (in well-functioning governments) protect everybody's property, not just the property of those who can defend their property by force. Having laws like these not only (often) provides a fairer chance for the weak, but it also lets people use their labor on things that can improve people's lives rather than on zero-sum fighting over existing resources. Technology that keeps government out risks making it impossible for government to do this.

I worry that things like ransomware payment in Bitcoin could be just the tip of the iceberg. Technology is changing society quickly, and I don't think this will be the only harmful result of technology designed to keep government out. I don't want the Internet to turn into a “wild west,” where only the deepest experts in technology can survive. Such a change to the Internet risks either giving up many of the potential benefits of the Internet for society by keeping important things off of it, or alternatively risks moving society towards anarchy, where there is no government power that can do what we have relied on governments to do for centuries.

Now I'm not saying today's government is perfect; far from it. Government has responsibility too, including to deserve the trust that we need to place in it. I hope to write about that more in the future.

|

|

Andrew Halberstadt: Making mercurial bookmarks more git-like |

I mentioned in my previous post a mercurial extension I wrote for making bookmarks easier to manipulate. Since then it has undergone a large overhaul, and I believe it is now stable and intuitive enough to advertise a bit more widely.

Introducing bookbinder

When working with bookmarks (or anonymous heads) I often wanted to operate on the entire series of commits within the feature I was working on. I often found myself digging out revision numbers to find the first commit in a bookmark to do things like rebasing, grafting or diffing. This was annoying. I wanted bookmarks to work more like a git-style branch, that has a definite start as well as an end. And I wanted to be able to easily refer to the set of commits contained within. Enter bookbinder.

First, you can install bookbinder by cloning:

```bash
$ hg clone https://bitbucket.org/halbersa/bookbinder
```

Then add the following to your hgrc:

```ini
[extensions]
bookbinder = path/to/bookbinder
```

Usage is simple. Any command that accepts a revset with --rev will be wrapped so that bookmark labels are replaced with the series of commits contained within the bookmark.

For example, let's say we create a bookmark to work on a feature called foo and make two commits:

```bash
$ hg log -f
changeset: 2:fcd3bdafbc88
bookmark: foo
summary: Modify foo

changeset: 1:8dec92fc1b1c
summary: Implement foo

changeset: 0:165467d1f143
summary: Initial commit
```

Without bookbinder, bookmarks are only labels to a commit:

```bash
$ hg log -r foo
changeset: 2:fcd3bdafbc88
bookmark: foo
summary: Modify foo
```

But with bookbinder, bookmarks become a logical series of related commits. They are more similar to git-style branches:

```bash
$ hg log -r foo
changeset: 2:fcd3bdafbc88
bookmark: foo
summary: Modify foo

changeset: 1:8dec92fc1b1c
summary: Implement foo
```

Remember hg log is just one example. Bookbinder automatically detects and wraps all commands that have a --rev option and that can receive a series of commits. It even finds commands from arbitrary extensions that may be installed!

Here are a few examples that I've found handy in addition to hg log:

```bash

$ hg rebase -r

etc.

```

They all replace the single commit pointed to by the bookmark with the series of commits within the bookmark. But what if you actually only want the single commit pointed to by the bookmark label? Bookbinder uses '.' as an escape character, so using the example above:

```bash
$ hg log -r .foo
changeset: 2:fcd3bdafbc88
bookmark: foo
summary: Modify foo
```

Bookbinder will also detect if bookmarks are based on top of one another:

```bash
$ hg rebase -r my_bookmark_2 -d my_bookmark_1
```

Running hg log -r my_bookmark_2 will not print any of the commits contained by my_bookmark_1.

The gory details

But how does bookbinder know where one feature ends, and another begins? Bookbinder implements a new revset called "feature". The feature revset is roughly equivalent to the following alias (kudos to smacleod for coming up with it):

```ini
[revsetalias]
feature($1) = ($1 or (ancestors($1) and not (excludemarks($1) or ancestors(excludemarks($1))))) and not public() and not merge()
excludemarks($1) = ancestors(parents($1)) and bookmark()
```

Here is a formal definition. A commit C is "within" a feature branch ending at revision R if all of the following statements are true:

- C is R or C is an ancestor of R

- C is not public

- C is not a merge commit

- no bookmarks exist in [C, R) for C != R

- all commits in (C, R) are also within R for C != R

In easier to understand terms, this means all ancestors of a revision that aren't public, a merge commit or part of a different bookmark, are within that revision's 'feature'. One thing to be aware of is that this definition allows empty bookmarks. For example, if you create a new bookmark on a public commit and haven't made any changes yet, that bookmark is "empty". Running hg log -r with an empty bookmark won't have any output.
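To make the definition concrete, here is a minimal Python sketch that applies it to a toy commit graph. It is not bookbinder's code and it does not use Mercurial's API: the `Commit` class, the `feature` function and the `history` data are all hypothetical, and it assumes (as in Mercurial) that a child always has a higher revision number than its parents.

```python
from dataclasses import dataclass, field


@dataclass
class Commit:
    """Toy stand-in for a changeset; not Mercurial's real data model."""
    rev: int
    parents: list = field(default_factory=list)
    public: bool = False
    bookmarks: set = field(default_factory=set)


def feature(commits, rev):
    """Return the revisions 'within' the feature ending at rev, newest first."""
    within = []
    seen = set()
    stack = [rev]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        commit = commits[current]
        # Public commits and merges are never part of a feature, and
        # nothing behind them is walked either.
        if commit.public or len(commit.parents) > 1:
            continue
        # A bookmark on an ancestor marks the end of a different feature.
        if current != rev and commit.bookmarks:
            continue
        within.append(current)
        stack.extend(commit.parents)
    return sorted(within, reverse=True)


# Hypothetical history: 0 is public, "foo" ends at 2, "bar" is stacked on it.
history = {
    0: Commit(0, public=True, bookmarks={"empty"}),
    1: Commit(1, parents=[0]),
    2: Commit(2, parents=[1], bookmarks={"foo"}),
    3: Commit(3, parents=[2]),
    4: Commit(4, parents=[3], bookmarks={"bar"}),
}

print(feature(history, 2))  # commits belonging to "foo"
print(feature(history, 4))  # commits belonging to "bar" only
print(feature(history, 0))  # an "empty" bookmark on a public commit
```

The walk stops at the first public, merge or bookmarked ancestor, which is one way to read the last two bullets of the definition; the real revset may differ in corner cases.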

The feature revset that bookbinder exposes works just as well on revisions that don't have any associated bookmark. For example, if you are working with an anonymous head, you could do:

bash

$ hg log -r 'feature(

In fact, when you pass in a bookmark label to a supported command, bookbinder is literally just substituting -r with -r feature(. All the hard work is happening in the feature revset.
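The substitution, together with the '.' escape described earlier, can be sketched in a few lines of Python. This is only an illustration under assumptions: `expand_rev_args` is a hypothetical helper working on a plain argument list, whereas the real extension wraps Mercurial commands and may be implemented quite differently.

```python
def expand_rev_args(args, bookmarks):
    """Rewrite -r/--rev values so bookmark labels become feature() revsets.

    args is a list of command-line words; bookmarks is the set of known
    bookmark names. Both are plain Python values in this sketch.
    """
    rewritten = []
    expect_rev = False
    for arg in args:
        if expect_rev:
            if arg.startswith(".") and arg[1:] in bookmarks:
                # Escaped label: keep only the single bookmarked commit.
                arg = arg[1:]
            elif arg in bookmarks:
                # Plain label: expand to the whole series of commits.
                arg = "feature({})".format(arg)
            expect_rev = False
        elif arg in ("-r", "--rev"):
            expect_rev = True
        rewritten.append(arg)
    return rewritten


known = {"foo"}
print(expand_rev_args(["log", "-r", "foo"], known))
print(expand_rev_args(["log", "-r", ".foo"], known))
print(expand_rev_args(["log", "-r", "tip"], known))
```

Anything that is not a known bookmark, including a bare "." for the working directory parent, passes through untouched.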

In closing, bookbinder has helped me make a lot more sense out of my bookmark-based workflow. It's solving a problem I think should be handled in mercurial core; maybe one day I'll attempt to submit a patch upstream. But until then, I hope it can be useful to others as well.

http://ahal.ca/blog/2015/making-mercurial-bookmarks-more-git-like/

|

|

Christian Heilmann: No more excuses – a “HTML5 now” talk at #codemotion Rome |

Yesterday I closed up the “inspiration” track of Codemotion Rome with a talk about the state of browsers and how we as developers make it much too hard for ourselves. You can see the slides on Slideshare and watch a screencast on YouTube.

http://christianheilmann.com/2015/03/29/no-more-excuses-an-html5-now-talk-at-codemotion-rome/

|

|

Mozilla Privacy Blog: Mozilla Privacy Teaching Task Force |

https://blog.mozilla.org/privacy/2015/03/29/mozilla-privacy-teaching-task-force/

|

|

Cameron Kaiser: IonPower: phase 5! |

http://tenfourfox.blogspot.com/2015/03/ionpower-phase-5.html

|

|

Jordan Lund: Mozharness is moving into the forest |

Since its beginnings, Mozharness has been living in its own world (repo). That's about to change. Next quarter we are going to be moving it in-tree.

what's Mozharness?

it's a configuration driven script harness

why in tree?

- First and foremost: transparency.

- There is an overarching goal to provide developers the keys to manage and stand up their own builds & tests (AKA self-serve). Having the automation step logic side by side to the compile and test step logic provides developers transparency and a sense of determinism. Which leads to reason number 2.

- deterministic builds & tests

- This is somewhat already in place thanks to Armen's work on pinning specific Mozharness revisions to in-tree revisions. However the pins can end up behind the latest Mozharness revisions, so we often end up landing multiple changes to Mozharness at once to one in-tree revision.

- Mozharness automated build & test jobs are not just managed by Buildbot anymore. Taskcluster is starting to take the weight off Buildbot's hands and, because of its own behaviour, Mozharness is better suited in-tree.

- ateam is going to put effort this quarter into unifying how we run tests locally vs automation. Having mozharness in-tree should make this easier.

this sounds great. why wouldn't we want to do this?

There are downsides. It arguably puts extra strain on Release Engineering for managing infra health. Though issues will be more isolated, it does become trickier to have a higher view of when and where Mozharness changes land.

In addition, there is going to be more friction for deployments. This is because a number of our Mozharness scripts are not directly related to continuous integration jobs: e.g. releases, vcs-sync, b2g bumper, and merge tasks.

why wasn't this done yester-year?

Mozharness now handles > 90% of our build and test jobs. Its internal components (config, script, and log logic) are starting to mature. However, this wasn't always the case.

When it was being developed and its uses were unknown, it made sense to develop it on the side and tie it closely to Buildbot deployments.

okay. I'm sold. can we just simply hg add mozharness?

Integrating Mozharness in-tree comes with a few challenges:

chicken and egg issue

- currently, for build jobs, Mozharness is in charge of managing version control of the tree itself. How can Mozharness check out a repo if it itself lives within that repo?

test jobs don't require the src tree

- test jobs only need a binary and a tests.zip. It doesn't make sense to keep a copy of our branches on each machine that runs tests. In line with that, putting mozharness inside tests.zip also leads us back to a similar 'chicken and egg' issue.

which branch and revisions do our release engineering scripts use?

how do we handle releases?

how do we not cause extra load on hg.m.o?

what about integrating into Buildbot without interruption?

it's easy!

This shouldn't be too hard to solve. Here is a basic outline of my plan of action and roadmap for this goal:

- land copy of mozharness on a project branch

- add an end point on relengapi with the following logic

- endpoint will contain 'mozharness' and a '$REVISION'

- look in s3 for equivalent mozharness archive

- if not present: download a sub repo dir archive from hg.m.o, run tests, and push that archive to s3

- finally, return the url to the s3 archive

- integrate the endpoint into buildbot

- call endpoint before scheduling jobs

- add builder step: download and unpack the archive on the slave

- for machines that run mozharness based releng scripts

- add manifest that points to 'known good s3 archive'

- fix deploy model to listen to manifest changes and download/unpack mozharness in a similar manner to builds+tests
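The endpoint logic in the outline above amounts to a cache lookup with a build-on-miss step. Here is a rough Python sketch of that flow. Everything in it is an assumption for illustration: a plain dict stands in for the S3 bucket, `fetch_archive` and `run_tests` are injected placeholders for the hg.m.o download and the test run, and the URL and key format are made up. None of it is relengapi's actual API.

```python
def mozharness_archive_url(revision, store, fetch_archive, run_tests,
                           base_url="https://s3.example.invalid/mozharness"):
    """Return the archive URL for a revision, building the archive on a miss."""
    key = "mozharness-{}.tar.gz".format(revision)
    if key not in store:
        # Not archived yet: fetch it, test it, and only then publish it.
        archive = fetch_archive(revision)
        if not run_tests(archive):
            raise RuntimeError("mozharness tests failed for " + revision)
        store[key] = archive
    return "{}/{}".format(base_url, key)


fetches = []


def fake_fetch(revision):
    """Hypothetical stand-in for downloading a sub repo dir archive."""
    fetches.append(revision)
    return b"archive-for-" + revision.encode("ascii")


bucket = {}  # stand-in for the S3 bucket
first = mozharness_archive_url("abc123", bucket, fake_fetch, lambda archive: True)
second = mozharness_archive_url("abc123", bucket, fake_fetch, lambda archive: True)
print(first)
print(len(fetches))  # the second call is served from the store
```

Keeping the storage and fetch steps as parameters means the same function can be exercised without network access, which is handy for the "run tests" part of the plan.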

This is a loose outline of the integration strategy. What I like about this:

- no code change required within Mozharness' code

- there is very little code change within Buildbot

- allows Taskcluster to use Mozharness in whatever way it likes

- no chicken and egg problem as (in Buildbot world), Mozharness will exist before the tree exists on the slave

- no need to manage multiple repos and keep them in sync

I'm sure I am not taking into account many edge cases and I look forward to hitting those edges head on as I start this in Q2. Stay tuned for further developments.

One day, I'd like to see Mozharness (at least its internal parts) be made into isolated python packages installable by pip. However, that's another problem for another day.

Questions? Concerns? Ideas? Please comment here or in the tracking bug.

http://jordan-lund.ghost.io/mozharness-steps-into-the-forest/

|

|