You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the contents of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.


Monica Chew: Tracking Protection talk on Air Mozilla

http://monica-at-mozilla.blogspot.com/2015/03/tracking-protection-talk-on-air-mozilla.html

James Long: Backend Apps with Webpack, Part II: Driving with Gulp

In Part I of this series, we configured webpack for building backend apps. With a few simple tweaks, like leaving all dependencies from node_modules alone, we can leverage webpack's powerful infrastructure for backend modules and reuse the same system for the frontend. It's a relief to not maintain two separate build systems.

This series is targeted towards people already using webpack for the frontend. You may find babel's require hook fine for the backend, which is great. You might want to run files through multiple loaders, however, or share code between frontend and backend. Most importantly, you want to use hot module replacement. This is an experiment to reuse webpack for all of that.

In this post we are going to look at more fine-grained control over webpack, and how to manage both frontend and backend code at the same time. We are going to use gulp to drive webpack. This should be a usable setup for a real app.

Some of the responses to Part I criticized webpack as too complicated and not standards-compliant, arguing that we should be moving to jspm and SystemJS. SystemJS is a runtime module loader based on the ES6 specification. The people behind jspm are doing fantastic work, but all I can say is that they don't have many of the features that webpack users love. A simple example is hot module replacement. I'm sure in the years to come something like webpack will emerge based on the loader specification, and I'll gladly switch to it.

The most important thing is that we start writing ES6 modules. This affects the community a whole lot more than loaders, and luckily it's very simple to do with webpack. You need to use a compiler like Babel that supports modules, which you really want to do anyway to get all the good ES6 features. These compilers will turn ES6 modules into require statements, which can be processed with webpack.

I converted the backend-with-webpack repo to use the Babel loader and ES6 modules in the part1-es6 branch, and I will continue to use ES6 modules from here on.

Gulp

Gulp is a nice task runner that makes it simple to automate anything. Even though we aren't using it to transform or bundle modules, it's still useful as a "master controller" to drive webpack, test runners, and anything else you might need to do.

If you are going to use webpack for both frontend and backend code, you will need two separate configuration files. You could manually specify the desired config with --config, and run two separate watchers, but that gets redundant quickly. It's annoying to have two separate processes in two different terminals.

Webpack actually supports multiple configurations. Instead of exporting a single one, you export an array of them and it will run multiple processes for you. I still prefer using gulp instead because you might not want to always run both at the same time.

We need to convert our webpack usage to use the API instead of the CLI, and make a gulp task for it. Let's start by converting our existing config file into a gulp task.

The only difference is that instead of exporting the config, you pass it to the webpack API. The gulpfile will look like this:

var gulp = require('gulp');
var webpack = require('webpack');

var config = {
  ...
};

gulp.task('build-backend', function(done) {
  webpack(config).run(function(err, stats) {
    if(err) {
      console.log('Error', err);
    }
    else {
      console.log(stats.toString());
    }
    done();
  });
});

You can pass a config to the webpack function and you get back a compiler. You can call run or watch on the compiler, so if you wanted to make a build-watch task which automatically recompiles modules on change, you would call watch instead of run.

Our gulpfile is getting too big to show all of it here, but you can check out the new gulpfile.js which is a straight conversion of our old webpack.config.js. Note that we added a babel loader so we can write ES6 module syntax.

Multiple Webpack Configs

Now we're ready to roll! We can create another task for building frontend code, and simply provide a different webpack configuration. But we don't want to manage two completely separate configurations, since there are common properties between them.

What I like to do is create a base config and have others extend from it. Let's start with this:

var DeepMerge = require('deep-merge');

var deepmerge = DeepMerge(function(target, source, key) {
  if(target instanceof Array) {
    return [].concat(target, source);
  }
  return source;
});

// generic
var defaultConfig = {
  module: {
    loaders: [
      {test: /\.js$/, exclude: /node_modules/, loaders: ['babel'] },
    ]
  }
};

if(process.env.NODE_ENV !== 'production') {
  defaultConfig.devtool = 'source-map';
  defaultConfig.debug = true;
}

function config(overrides) {
  return deepmerge(defaultConfig, overrides || {});
}

We create a deep merging function for recursively merging objects, which allows us to override the default config, and we provide a function config for generating configs based off of it.
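You may prefer not to depend on the deep-merge package. In that case, the same policy (concatenate arrays, otherwise let the override win) can be written by hand. This is a sketch under that assumption, not the package's implementation. In particular, it treats only plain objects as mergeable.

```javascript
// Dependency-free sketch of the merge policy: plain objects merge
// recursively, arrays concatenate, and any other value from `source`
// replaces the value in `target`. Neither argument is mutated, although
// values that are not merged are shared rather than copied.
function isPlainObject(value) {
  return Object.prototype.toString.call(value) === '[object Object]';
}

function deepmerge(target, source) {
  if (Array.isArray(target) && Array.isArray(source)) {
    return target.concat(source);
  }
  if (isPlainObject(target) && isPlainObject(source)) {
    var result = {};
    Object.keys(target).forEach(function(key) {
      result[key] = target[key];
    });
    Object.keys(source).forEach(function(key) {
      var inTarget = Object.prototype.hasOwnProperty.call(target, key);
      result[key] = inTarget ? deepmerge(target[key], source[key]) : source[key];
    });
    return result;
  }
  return source;
}

// Usage: extend a base config with an extra loader. The json loader is a
// hypothetical example.
var base = {
  devtool: 'source-map',
  module: { loaders: [{ test: /\.js$/, exclude: /node_modules/, loaders: ['babel'] }] }
};
var extended = deepmerge(base, {
  entry: './static/js/main.js',
  module: { loaders: [{ test: /\.json$/, loaders: ['json'] }] }
});
```

After the merge, `extended.module.loaders` holds both loaders, while `base` still has only the babel loader.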

Note that you can turn on production mode by running the gulp task with NODE_ENV=production prefixed to it. If so, sourcemaps are not generated and you could add plugins for minifying code.

Now we can create a frontend config:

var path = require('path');

var frontendConfig = config({
  entry: './static/js/main.js',
  output: {
    path: path.join(__dirname, 'static/build'),
    filename: 'frontend.js'
  }
});

This makes static/js/main.js the entry point and bundles everything together at static/build/frontend.js.

Our backend config uses the same technique: customizing the config to be backend-specific. I don't think it's worth pasting here, but you can look at it on GitHub. Now we have two tasks:
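As a rough idea of what "backend-specific" can mean, here is a sketch of backend overrides. It assumes that leaving node_modules dependencies alone (as described at the start of this post) is done through webpack's `externals` option. The helper names `externalsFor` and `backendOverrides` and the entry path are hypothetical.

```javascript
var fs = require('fs');
var path = require('path');

// Map every installed package to "commonjs <name>" so webpack leaves it as a
// runtime require() instead of bundling it. Scoped packages (@scope/name)
// would need extra handling, since readdir only returns the @scope folder.
function externalsFor(moduleNames) {
  var externals = {};
  moduleNames
    .filter(function(name) { return name !== '.bin'; }) // npm's executable shims
    .forEach(function(name) { externals[name] = 'commonjs ' + name; });
  return externals;
}

// Hypothetical backend overrides, meant to be passed to config().
function backendOverrides(rootDir) {
  var modulesDir = path.join(rootDir, 'node_modules');
  var names = fs.existsSync(modulesDir) ? fs.readdirSync(modulesDir) : [];
  return {
    entry: './src/main.js',      // placeholder entry point
    target: 'node',              // emit code for Node.js, not the browser
    output: { path: path.join(rootDir, 'build'), filename: 'backend.js' },
    externals: externalsFor(names),
    node: { __dirname: true, __filename: true } // see "Node Variables"
  };
}
```

In the gulpfile this could be used as `var backendConfig = config(backendOverrides(__dirname));`.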

function onBuild(done) {
  return function(err, stats) {
    if(err) {
      console.log('Error', err);
    }
    else {
      console.log(stats.toString());
    }

    if(done) {
      done();
    }
  };
}

gulp.task('frontend-build', function(done) {
  webpack(frontendConfig).run(onBuild(done));
});

gulp.task('backend-build', function(done) {
  webpack(backendConfig).run(onBuild(done));
});

In fact, you could go crazy and provide several different interactions:

gulp.task('frontend-build', function(done) {
  webpack(frontendConfig).run(onBuild(done));
});

gulp.task('frontend-watch', function() {
  webpack(frontendConfig).watch(100, onBuild());
});

gulp.task('backend-build', function(done) {
  webpack(backendConfig).run(onBuild(done));
});

gulp.task('backend-watch', function() {
  webpack(backendConfig).watch(100, onBuild());
});

gulp.task('build', ['frontend-build', 'backend-build']);
gulp.task('watch', ['frontend-watch', 'backend-watch']);

watch takes a delay in milliseconds as its first argument, so changes that arrive within 100ms of each other trigger only one rebuild.
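To make the effect of the delay concrete, here is a small model of a debounced watcher. Each change restarts a timer, and a rebuild fires only once `delay` ms pass with no further changes. This is a conceptual sketch with a made-up helper, `rebuildTimes`. It is not webpack's watcher code.

```javascript
// Given sorted change timestamps (ms), return the times at which a debounced
// watcher would start a rebuild. Each change (re)starts a `delay` ms timer;
// the rebuild fires when the timer expires without being restarted.
function rebuildTimes(changeTimes, delay) {
  var fires = [];
  var deadline = null; // when the pending timer would fire, if any
  changeTimes.forEach(function(t) {
    if (deadline !== null && t >= deadline) {
      fires.push(deadline); // the previous burst went quiet long enough
    }
    deadline = t + delay;   // restart the timer for this change
  });
  if (deadline !== null) {
    fires.push(deadline);
  }
  return fires;
}

// Three saves 30ms apart collapse into one rebuild; a save 500ms after the
// last of them triggers a second one.
var times = rebuildTimes([0, 30, 60, 560], 100);
```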

You would typically run gulp watch to watch the entire codebase for changes, but you could just build or watch a specific piece if you wanted.

Nodemon

Nodemon is a nice process management tool for development. It starts a process for you and provides APIs to restart it. The goal of nodemon is to watch file changes and restart automatically, but we are only interested in manual restarts.

After installing with npm install nodemon and adding var nodemon = require('nodemon') to the top of the gulpfile, we can create a run task which executes the compiled backend file:

gulp.task('run', ['backend-watch', 'frontend-watch'], function() {
  nodemon({
    execMap: {
      js: 'node'
    },
    script: path.join(__dirname, 'build/backend'),
    ignore: ['*'],
    watch: ['foo/'],
    ext: 'noop'
  }).on('restart', function() {
    console.log('Restarted!');
  });
});

This task also specifies dependencies on the backend-watch and frontend-watch tasks, so the watchers are automatically fired up and code will recompile on change.

The execMap and script options specify how to actually run the program. The rest of the options are for nodemon's watcher, and we actually don't want it to watch anything. That's why ignore is *, watch is a non-existent directory, and ext is a non-existent file extension. Initially I only used the ext option, but I ran into performance problems because nodemon was still watching everything in my project.

So how does our program actually restart on change? Calling nodemon.restart() does the trick, and we can do this within the backend-watch task:

gulp.task('backend-watch', function() {
  webpack(backendConfig).watch(100, function(err, stats) {
    onBuild()(err, stats);
    nodemon.restart();
  });
});

Now, when running backend-watch, if you change a file it will be rebuilt and the process will automatically restart.

Our gulpfile is complete. After all this work, you just need to run this to start everything:

gulp run

As you code, everything will automatically be rebuilt and the server will restart. Hooray!

A Few Tips

Better Performance

If you are using sourcemaps, you will notice compilation performance degrades the more files you have, even with incremental compilation (using watchers). This happens because webpack has to regenerate the entire sourcemap of the generated file even if a single module changes. This can be fixed by changing the devtool from source-map to #eval-source-map:

config.devtool = '#eval-source-map';

This tells webpack to generate source maps individually for each module, which it achieves by eval-ing each module at runtime with its own sourcemap. Prefixing it with # tells webpack to use the //# comment instead of the older //@ style.

Node Variables

I mentioned this in Part I, but some people missed it. Node defines variables like __dirname which are different for each module. This is a downside to using webpack, because we no longer have the node context for these variables, and webpack needs to fill them in.

Webpack has a workable solution, though. You can tell it how to treat these variables with the node configuration entry. You most likely want to set __dirname and __filename to true, so that they reflect each module's original source location (relative to webpack's context option) instead of dummy values. They default to "mock", which gives them dummy values (meant for browser environments).

Until Next Time

Our setup is now capable of building a large, complex app. If you want to share code between the frontend and backend, it's easy to do since both sides use the same infrastructure. We get the same incremental compilation on both sides, and with the #eval-source-map setting, modules are rebuilt in under 200ms even when there are a large number of files.

I encourage you to modify this gulpfile to your heart's content. The great thing about webpack and gulp is that it's easy to customize them to your needs, so go wild.

These posts have been building towards the final act. We are now ready to take advantage of the most significant gain of this infrastructure: hot module replacement. React users have enjoyed this via react-hot-loader, and now that we have access to it on the backend, we can live edit backend apps. Part III will show you how to do this.

Thanks to Dan Abramov for reviewing this post.

Gen Kanai: Analyse Asia – The Firefox Browser & Mobile OS with Gen Kanai

I had the pleasure to sit down with Bernard Leong, host of the Analyse Asia podcast, after my keynote presentation at FOSSASIA 2015. Please enjoy our discussion on Firefox, Firefox OS in Asia and other related topics.

Analyse Asia with Bernard Leong, Episode 22: The Firefox Browser & Mobile OS with Gen Kanai

https://blog.mozilla.org/gen/2015/03/19/analyse-asia-the-firefox-browser-mobile-os-with-gen-kanai/

Air Mozilla: Kids' Vision - Mentorship Series

Mozilla hosts Kids Vision Bay Area Mentor Series

Air Mozilla: Product Coordination Meeting

Weekly coordination meeting for Firefox Desktop & Android product planning between Marketing/PR, Engineering, Release Scheduling, and Support.

https://air.mozilla.org/product-coordination-meeting-20150318/

Air Mozilla: The Joy of Coding (mconley livehacks on Firefox) - Episode 6

Watch mconley livehack on Firefox Desktop bugs!

https://air.mozilla.org/the-joy-of-coding-mconley-livehacks-on-firefox-episode-6/

Air Mozilla: MWoS 2014: OpenVPN MFA

The OpenVPN MFA MWoS team presents their work to add native multi-factor authentication to OpenVPN. The goal of this project is to improve the ease...

Justin Crawford: MDN Product Talk: The Case for Experiments

This is the third post in a series that is leading up to a roadmap of sorts — a set of experiments I will recommend to help MDN both deepen and widen its service to web developers.

The first post in the series introduced MDN as a product ecosystem. The second post in the series explained the strategic context for MDN’s product work. In this post I will explain how an experimental approach can help us going forward, and I’ll talk about the two very different kinds of experiments we’re undertaking in 2015.

In the context of product development, “experiments” is shorthand for market-validated learning — a product discovery and optimization activity advocated by practitioners of lean product development and many others. Product development teams run experiments by testing hypotheses against reality. The primary benefit of this methodology is eliminating wasted effort by building things that people demonstrably want.

Now my method, though hard to practise, is easy to explain; and it is this. I propose to establish progressive stages of certainty.

-Francis Bacon (introducing the scientific method in Novum Organum)

Product experiments all…

- have a hypothesis

- test the hypothesis by exposing it to reality (in other words, introducing it to the market)

- deliver some insight to drive further product development

Here is a concrete example of how MDN will benefit from an experimental approach. We have an outstanding bug, “Kuma: Optional IRC Widget”, opened 3 years ago and discussed at great length on numerous occasions. This bug, like so many enhancement requests, is really a hypothesis in disguise: It asserts that MDN would attract and retain more contributors if they had an easier way to discuss contribution in realtime with other contributors.

That hypothesis is untested. We don’t have an easy way to discuss contribution in realtime now. In order to test the bug’s hypothesis we propose to integrate a 3rd-party IRC widget into a specific subset of MDN pages and measure the result. We will undoubtedly learn something from this experiment: We will learn something about the specific solution or something about the problem itself, or both.
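As one illustration of "measure the result", a common way to compare a pilot group of pages against the rest is a two-proportion z-test on the share of visitors who go on to contribute. The technique and the counts below are assumptions made for this example. They are not MDN's plan or data.

```javascript
// Two-proportion z-test: does the conversion rate on pages with the widget
// (group A) differ from the rate on pages without it (group B)?
function twoProportionZ(successA, totalA, successB, totalB) {
  var pA = successA / totalA;
  var pB = successB / totalB;
  var pooled = (successA + successB) / (totalA + totalB);
  var stdErr = Math.sqrt(pooled * (1 - pooled) * (1 / totalA + 1 / totalB));
  return (pA - pB) / stdErr;
}

// Hypothetical counts: 60 of 1000 visitors contributed with the widget,
// 40 of 1000 without it.
var z = twoProportionZ(60, 1000, 40, 1000);
// Under the normal approximation, |z| > 1.96 corresponds to p < 0.05 (two-sided).
```

Here z comes out at about 2.05. A real experiment would also fix the sample size before looking at the results.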

Understanding the actual problem to be solved (and for whom) is a critical element of product experimentation. In this case, we do not assert that anonymous MDN visitors need a realtime chat feature, and we do not assert that MDN contributors specifically want to use IRC. We assert that contributors need a way to discuss and ask questions about contribution, and by giving them such a facility we’ll increase the quality of contribution. Providing this facility via IRC widget is an implementation detail.

This experiment is an example of optimization. We already know that contribution is a critical factor in the quality of documentation in the documentation wiki. This is because we already understand the business model and key metrics of the documentation wiki. The MDN documentation wiki is a very successful product, and our focus going forward should be on improving and optimizing it. We can do that with experiments like the one above.

In order to optimize anything, though, we need better measurements than we have now. Here’s an illustration of the key components of MDN’s documentation wiki:

Visitors come to the wiki from search, by way of events, or through links in online social activity. If they create an account they become users and we notify them that they can contribute. If they follow up on that notification they become returners. If they contribute they become contributors. If they stop visiting they become disengaged users. Users can request content (in Bugzilla and elsewhere). Users can share content (manually).

All the red and orange shapes in the picture above represent things we’re measuring imperfectly or not at all. So we track the number of visitors and the number of users, but we don’t measure the rate by which visitors become users (or any other conversion rate). We measure the rates of content production and content consumption, but we don’t measure the helpfulness of content. And so forth.
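The conversion rates mentioned here are ratios between adjacent stages of the visitor-to-contributor funnel. Here is a minimal sketch with invented counts, since the post gives no numbers. The function name `conversionRates` is hypothetical.

```javascript
// Stage-to-stage conversion rates for a funnel such as
// visitors -> users -> returners -> contributors.
function conversionRates(stages) {
  var rates = [];
  for (var i = 1; i < stages.length; i++) {
    rates.push({
      from: stages[i - 1].name,
      to: stages[i].name,
      rate: stages[i].count / stages[i - 1].count
    });
  }
  return rates;
}

// Made-up counts, for illustration only.
var rates = conversionRates([
  { name: 'visitors', count: 100000 },
  { name: 'users', count: 2000 },
  { name: 'returners', count: 500 },
  { name: 'contributors', count: 100 }
]);
```

With these counts, 2% of visitors become users, 25% of users return, and 20% of returners contribute. Tracking each ratio over time would show which stage a feature actually moves.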

If we wanted to add a feature to the wiki that might impact one of these numbers, how would we measure the before and after states? We couldn’t. If we wanted to choose between features that might affect these numbers, how would we decide which metric needed the most attention? We couldn’t. So in 2015 we must prioritize building enough measurements into the MDN platform that we can see what needs optimization and which optimizations make a difference. In particular, considering the size of content’s role in our ecosystem, we must prioritize features that help us better understand the impact of our content.

Once we have proper measurements, we have a huge backlog of optimization opportunities to consider for the documentation wiki. Experiments will help us prioritize them and implement them.

As we do so, we are also simultaneously engaged in a completely different kind of experimentation. Steve Blank describes the difference in his recent post, “Fear of Failure and Lack of Speed In a Large Corporation”. To paraphrase him: A successful product organization that has already found market fit (i.e. MDN’s documentation wiki) properly seeks to maximize the value of its existing fit — it optimizes. But a fledgling product organization has no idea what the fit is, and so properly seeks to discover it.

This second kind of experimentation is not for optimization, it is for discovery. MDN’s documentation wiki clearly solves a problem and there is clearly value in solving that problem, but MDN’s audience has plenty of problems to solve (and more on the way), and new audiences similar to MDN’s current audience have similar problems to solve, too. We can see far enough ahead to advance some broad hypotheses about solving these problems, and we now need to learn how accurate those hypotheses are.

Here is an illustration of the different kinds of product experiments we’re running in the context of the overall MDN ecosystem:

The left bubble represents the existing documentation wiki: It’s about content and contribution; we have a lot of great optimizations to build there. The right bubble represents our new product/market areas: We’re exploring new products for web developers (so far, in services) and we’re serving a new audience of web learners (so far, by adding some new areas to our existing product).

The right bubble is far less knowable than the left. We need to conduct experiments as quickly as possible to learn whether any particular service or teaching material resonates with its audience. Our experiments with new products and audiences will be more wide-ranging than our experiments to improve the wiki; they will also be measured in smaller numbers. These new initiatives have the possibility to grow into products as successful as the documentation wiki, but our focus in 2015 is to validate that these experiments can solve real problems for any part of MDN’s audience.

As Marty Cagan says, “Good [product] teams are skilled in the many techniques to rapidly try out product ideas to determine which ones are truly worth building. Bad teams hold meetings to generate prioritized roadmaps.” On MDN we have an incredible opportunity to develop our product team by taking a more experimental approach to our work. Developing our product team will improve the quality of our products and help us serve more web developers better.

In an upcoming post I will talk about how our 2015 focus areas will help us meet the future. And of course I will talk about specific experiments soon, too.

http://hoosteeno.com/2015/03/18/mdn-product-talk-the-case-for-experiments/

Will Kahn-Greene: Input status: March 18th, 2015

Development

High-level summary:

- new Alerts API

- Heartbeat fixes

- bunch of other minor fixes and updates

Thank you to contributors!

- Guruprasad: 6

- Ricky Rosario: 2

Landed and deployed:

- 73eaaf2 bug 1103045 Add create survey form (L. Guruprasad)

- e712384 bug 1130765 Implement Alerts API

- 6bc619e bug 1130765 Docs fixes for the alerts api

- 1e1ca9a bug 1130765 Tweak error msg in case where auth header is missing

- 067d6e8 bug 1130765 Add support for Fjord-Authorization header

- 1f3bde0 bug 909011 Handle amqp-specific indexing errors

- 3da2b2d Fix alerts_api requests examples

- 601551d Cosmetic: Rename heartbeat/views.py to heartbeat/api_views.py

- 8f3b8e8 bug 1136810 Fix UnboundLocalError for "showdata"

- 1721758 Update help_text in api_auth initial migration

- 473e900 Fix migration for fixing AlertFlavor.allowed_tokens

- 2d3d05a bug 1136809 Fix (person, survey, flow) uniqueness issues

- 3ce45ec Update schema migration docs regarding module-level docstrings

- 2a91627 bug 1137430 Validate sortby values

- 6e3961c Update setup docs for django 1.7. (Ricky Rosario)

- 6739af7 bug 1136814 Update to Django 1.7.5

- 334eed7 Tweak commit msg linter

- ac35deb bug 1048462 Update some requirements to pinned versions. (Ricky Rosario)

- 8284cfa Clarify that one token can GET/POST to multiple alert flavors

- 7a60497 bug 1137839 Add start_time/end_time to alerts api

- 7a21735 Fix flavor.slug tests and eliminate needless comparisons

- 89dbb49 bug 1048462 Switch some github url reqs to pypi

- e1b62b5 bug 1137839 Add start_time/end_time to AlertAdmin

- 3668585 bug 1103045 Add update survey form (L. Guruprasad)

- ab706c6 bug 1139510 Update selenium to 2.45

- 6df753d Cosmetic: Minor cleanup of server error testing

- 1dcaf62 Make throw_error csrf exempt

- ceb53eb bug 1136840 Fix error handling for better debugging

- 92ce3b6 bug 1139545 Handle all exceptions

- e33cf9f bug 1048462 Upgrade gengo-python from 0.1.14 to 0.1.19

- 4a8de81 bug 1048462 Remove nuggets

- ff9f01c bug 1139713 Add received_ts field to hb Answer model

- d853fa9 bug 1139713 Fix received_ts migration

- ae5cb13 bug 1048462 Upgrade django-multidb-router to 0.6

- 649b136 bug 1048462 Nix django-compressor

- 1547073 Cosmetic: alphabetize requirements

- e165f49 Add note to compiled reqs about py-bcrypt

- ecdd00f bug 1136840 Back out new WSGIHandler

- cc75bef bug 1141153 Upgrade Django to 1.7.6

- d518731 bug 1136840 Back out rest of WSGIHandler mixin

- 12940b0 bug 1139545 Wrap hb integrity error with logging

- 8b61f14 bug 1139545 Fix "get or create" section of HB post view

- d44faf3 bug 1129102 ditch ditchchart flag (L. Guruprasad)

- 7fa256a bug 1141410 Fix unicode exception when feedback has invalid unicode URL (L. Guruprasad)

- c1fe25a bug 1134475 Cleanup all references to input-dev environment (L. Guruprasad)

Landed, but not deployed:

- 1cac166 bug 1081177 Rename feedback api and update docs

- 026d9ae bug 1144476 stop logging update_ts errors (L. Guruprasad)

Current head: 9b3e263

Rough plan for the next two weeks

- removing settings we don't need and implementing environment-based configuration for instance settings

- prepare for 2015q2

End of OPW and thank you to Adam!

March 9th was the last day of OPW. Adam did some really great work on Input which is greatly appreciated. We hope he sticks around with us. Thank you, Adam!

http://bluesock.org/~willkg/blog/mozilla/input_status_20150318

Gregory Szorc: Network Events

The Firefox source repositories and automation have been closed the past few days due to a couple of outages.

Yesterday, aggregate CPU usage on many of the machines in the hg.mozilla.org cluster hit 100%. Previously, whenever hg.mozilla.org was under high load, we'd run out of network bandwidth before we ran out of CPU on the machines. In other words, Mercurial was generating data faster than the network could accept it.

When this happened, the service started issuing HTTP 503 Service Unavailable responses. This is the universal server signal for "I'm down, go away." Unfortunately, not all clients went away.

Parts of Firefox's release automation retried failing requests immediately, or with insufficient jitter in their backoff interval. Actively retrying requests against a server that's experiencing load issues only makes the problem worse. This effectively prolonged the outage.
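For clients that do retry, the usual remedy is exponential backoff with randomized jitter. This sketch uses the "full jitter" variant. The base delay, cap, and retry count are illustrative assumptions, not values from Mozilla's automation.

```javascript
// Exponential backoff with "full jitter": before retry number `attempt`
// (0-based), wait a random time in [0, min(capMs, baseMs * 2^attempt)).
// Randomizing the whole interval spreads retries from many clients apart
// instead of letting them arrive in synchronized waves.
function backoffDelay(attempt, baseMs, capMs, random) {
  var ceiling = Math.min(capMs, baseMs * Math.pow(2, attempt));
  return Math.floor(random() * ceiling);
}

// Delays a client would sleep before each of `maxRetries` retries.
function retrySchedule(maxRetries, baseMs, capMs, random) {
  random = random || Math.random; // injectable so the schedule is testable
  var delays = [];
  for (var attempt = 0; attempt < maxRetries; attempt++) {
    delays.push(backoffDelay(attempt, baseMs, capMs, random));
  }
  return delays;
}

var schedule = retrySchedule(5, 1000, 30000);
```

A client would sleep for each delay before re-issuing the request, and give up once the schedule is exhausted.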

Today, we had a similar but different network issue. The load balancer fronting hg.mozilla.org can only handle so much bandwidth. Today, we hit that limit. The load balancer started throttling connections. Load on hg.mozilla.org skyrocketed and request latency increased. From the perspective of clients, the service ground to a halt.

hg.mozilla.org was partially sharing a load balancer with ftp.mozilla.org. That meant if one of the services experienced very high load, the other service could effectively be locked out of bandwidth. We saw this happening this morning. ftp.mozilla.org load was high (it looks like downloads of Firefox Developer Edition are a major contributor - these don't go through the CDN for reasons unknown to me) and there wasn't enough bandwidth to go around.

Separately today, hg.mozilla.org again hit 100% CPU. At that time, it also set a new record for network throughput: ~3 Gbps. It normally consumes between 200 and 500 Mbps, with periodic spikes to 750 Mbps. (Yesterday's event saw a spike to ~2 Gbps.)

Going back through the hg.mozilla.org server logs, an offender is quite obvious. Before March 9, total outbound transfer for the build/tools repo was around 1 tebibyte per day. Starting on March 9, it increased to 3 tebibytes per day! This is quite remarkable, as a clone of this repo is only about 20 MiB. This means the repo was getting cloned about 150,000 times per day! (Note: I think all these numbers may be low by ~20% - stay tuned for the final analysis.)

The extra 2 TiB/day is significant because we transfer less than 10 TiB/day across all of hg.mozilla.org. And 1 TiB/day is close to 100 Mbps, assuming requests are evenly spread out (which of course they aren't).
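The figures are consistent with each other. A 20 MiB clone repeated about 150,000 times comes to roughly 3 TiB, and 1 TiB spread evenly over 86,400 seconds is about 100 Mbps. Here is a quick sketch of that arithmetic; the helper names are illustrative.

```javascript
// Back-of-the-envelope check in binary units (1 TiB = 2^40 bytes,
// 1 MiB = 2^20 bytes) and decimal megabits (10^6 bits).
var TiB = Math.pow(2, 40);
var MiB = Math.pow(2, 20);
var SECONDS_PER_DAY = 24 * 60 * 60;

function clonesPerDay(bytesPerDay, cloneBytes) {
  return bytesPerDay / cloneBytes;
}

// Average rate if the daily volume were spread evenly over the day.
function averageMbps(bytesPerDay) {
  return (bytesPerDay * 8) / SECONDS_PER_DAY / 1e6;
}

console.log(Math.round(clonesPerDay(3 * TiB, 20 * MiB))); // 157286
console.log(Math.round(clonesPerDay(2 * TiB, 20 * MiB))); // 104858
console.log(averageMbps(TiB).toFixed(1));                  // 101.8
```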

Multiple things went wrong. If only one or two happened, we'd likely be fine. Maybe there would have been a short blip. But not the major event we've been firefighting the last ~24 hours.

This post is only a summary of what went wrong. I'm sure there will be a post-mortem and that it will contain lots of details for those who want to know more.

L. David Baron: Priority of constituencies

Since the HTML design principles (which are effectively design principles for modern Web technology) were published, I've thought that the priority of constituencies was among the most important. It's certainly among the most frequently cited in debates over Web technology. But I've also thought that it was wrong in a subtle way.

I'd rather it had been phrased in terms of utility. Instead of stating as a rule that value (benefit minus cost) to users is more important than value to authors, it would recognize that there are generally more users than authors. That means a smaller value per user, multiplied by the number of users, is generally more important than a somewhat larger value per author, because it provides more total value once each value is multiplied by the number of people it applies to. However, this doesn't hold when the difference in value is very large: that is, when multiplying the cost and benefit by the numbers of people they apply to yields results where the magnitudes of the cost and benefit, rather than the numbers of people, determine which side is larger. The same holds for implementors and specification authors; there are generally fewer people in each of those groups. Likewise, the principle should recognize that something that benefits a very small portion of users doesn't outweigh the interests of authors as much, because the number of users it benefits is no longer so much greater than the number of authors who have to work to make it happen.
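Written out as a formula, with notation introduced here rather than taken from the post: suppose one option gives net value $v_U > 0$ to each of a fraction $f$ of $n_U$ users. The alternative gives net value $v_A$ to each of $n_A$ authors. The utility reading prefers the users' option exactly when

```latex
f\,n_U\,v_U > n_A\,v_A
\quad\Longleftrightarrow\quad
\frac{v_A}{v_U} < f\,\frac{n_U}{n_A}
```

Take, say, a thousand users per author and $f = 1$. The authors' option then wins only if it is worth more than a thousand times as much per person. As $f$ shrinks, that margin shrinks with it.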

Also, the current wording of the principle doesn't consider the scarcity of the smaller groups (particularly implementors and specification authors), and thus the opportunity costs of choosing one behavior over another. In other words, there might be a behavior that we could implement that would be slightly better for authors, but would take more time for implementors to implement. But there aren't all that many implementors, and they can only work on so many things. (Their number isn't completely fixed, but it can't be changed quickly.) So given the scarcity of implementors, we shouldn't consider only whether the net benefit to users is greater than the net cost to implementors; we should also consider whether there are other things those implementors could work on in that time that would provide greater net benefit to users. The same holds for scarcity of specification authors. A good description of the principle in terms of utility would also correct this problem.
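The scarcity point can be added as a second condition, again with notation introduced here. Let $B_F$ be the total benefit of a feature $F$ and $C_F$ its total cost. Let $\mathcal{A}$ be the other work the same implementors could do in the same time. Then $F$ is worth doing only if

```latex
B_F - C_F > 0
\qquad\text{and}\qquad
B_F - C_F \;\ge\; \max_{G \in \mathcal{A}} \left( B_G - C_G \right)
```

The first condition is the comparison the current wording already makes. The second is the opportunity cost it leaves out.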

Jet Villegas: What can SVG learn from Flash?

Regular readers of my blog know that I also worked on the Macromedia Flash Professional authoring tool and the Adobe Flash Player for many years. I learned a great deal about the design of ubiquitous platforms, and the limitations of single-vendor implementations. At a recent meeting with the W3C SVG working group, I shared some of my thoughts on how Flash was able to reach critical mass across the Web, and how SVG can leverage those lessons for the future.

Basically, it boils down to 3 principles:

1. Flash offered expressive design-fidelity across all user agents.

2. Flash authoring was superior to SVG authoring tools for producing content that adheres to principle #1.

3. Most Flash content is self-contained and atomic in a packaged file format that helped preserve design-fidelity in #1.

I shared some feedback regarding what I hear from Firefox users about SVG. I also shared what I never hear from Firefox users: “We need more SVG features.”

As the working group ponders new SVG specifications for review, the main gripe I hear from users is the lack of interoperability for the current feature set. That is, I don’t get requests for a new DOM or fancy gradient meshes; I get bugs about basic rendering differences across browsers. As a result, I’ve directed our SVG investment towards these paper cuts that make authors distrust SVG for complex designs. I can see why it’s more tempting to focus on new feature specifications, but adoption is hampered by the legacy of interoperability (or lack thereof). I’d like to see the group organize around fixing these bugs across all browsers in a coordinated fashion, e.g., in a hackathon or bug bash at a future multi-browser face-to-face meeting.

I also talked about how SVG could be a very expressive authoring source format for a modern implementation that is more focused on pixel-fidelity. Unfortunately, I didn’t get a lot of support for that idea from other browser vendors, as the desire to compete for the best implementation seemed to outweigh the benefits of dependable runtime characteristics. I’m really surprised that SVG hasn’t stepped in to replace Flash for more use cases, and I’m quite certain that the 3 principles I mentioned above are the reason why. I do hope that authoring tool vendors step in and help drive the state of the art here. It’s one thing for browser vendors to offer competing implementations, but the lack of strong authoring systems makes it hard to define what it means to be correct.

I spoke with a few people about how the packaged SWF format was an advantage for Flash because it was easy for this content to move across the internet in a viral fashion without losing any of its assets. Flash games, for example, are commonly hosted on multiple servers (often unknown to the original publisher) and still retain all the graphics and logic within the SWF file. The W3C application package proposal is something we could implement as a format that lets HTML/SVG content traverse networks intact. Such HTML/SVG applications can easily be made up of hundreds of individual assets that are easy to lose track of. Having a packaged format with clear semantics and security rules (e.g., an iframe in a zip) could be a really good feature for the modern web.

What else are we missing for SVG to gain critical mass? Post a reply below or find me on twitter!

|

|

Mozilla Release Management Team: Firefox 37 beta5 to beta6 |

In this beta release, we continued the work on MSE. We also added new telemetry data.

- 33 changesets

- 45 files changed

- 471 insertions

- 73 deletions

| Extension | Occurrences |
|---|---|
| cpp | 13 |
| js | 11 |
| h | 6 |
| jsm | 2 |
| xul | 1 |
| xml | 1 |
| sh | 1 |
| rst | 1 |
| mk | 1 |
| manifest | 1 |
| json | 1 |
| java | 1 |
| ini | 1 |
| in | 1 |
| build | 1 |

| Module | Occurrences |
|---|---|
| dom | 8 |
| browser | 8 |
| js | 7 |
| toolkit | 5 |
| security | 5 |
| services | 3 |
| mobile | 2 |
| gfx | 2 |
| xulrunner | 1 |
| testing | 1 |
| modules | 1 |

List of changesets:

| Author | Changeset |
|---|---|
| Mark Goodwin | Bug 1120748 - Fix intermittent orange from message manager misuse. r=felipe, a=test-only - 6a2affbbe91d |
| Panos Astithas | Bug 1101196 - Use the correct l10n keys for the event listeners pane. r=vporof, a=lmandel - 5fae7363bcad |
| Brian Birtles | Bug 1134487 - Remove delegated constructors in GMP{Audio,Video}Decoder since they're not supported by GCC 4.6. r=cpearce, a=lmandel - 64c87e9b4fbe |
| Syd Polk | Bug 1134888 - Disable the mediasource-config-change-webm-a-bitrate.html test; r=cpearce a=testonly - 84cb53dff47c |
| Gijs Kruitbosch | Bug 1111967 - Honor browser.casting.enabled pref for casting on desktop. r=mconley, a=lsblakk - 4f9eeb2285b0 |
| Margaret Leibovic | Bug 1134553 - Disable downloads in guest session. r=rnewman, a=lsblakk - 6df5a4ea72f3 |
| Jeff Muizelaar | Bug 1132432 - Implement AsShadowableLayer() for ClientReadbackLayers. r=roc, a=lsblakk - ff5485e89210 |
| James Willcox | Bug 1142459 - Fix mixed content shield notification broke by Bug 1140830. r=rnewman, a=sledru - b31df8fa73a5 |
| Mike Taylor | Bug 1125340 - Gather telemetry on H.264 profile & level values from canPlayType. r=cpearce, a=lmandel - 7caca8a3e78f |
| Mike Taylor | Bug 1125340 - Collect h264 profile & level telemetry from decoded SPS. r=jya, a=lmandel - 247c345e5f23 |
| Jean-Yves Avenard | Bug 1139380 - Ensure all queued tasks are aborted when shutting down. r=cpearce a=lmandel - 1604edfb5a6c |
| Mike Taylor | Bug 1136877 - Collect telemetry on constraint_set flags from canPlayType. r=jya, a=lsblakk - 3aa618647813 |
| Mike Taylor | Bug 1136877 - Collect telemetry on constraint_set flags from decoded SPS. r=jya, a=lsblakk - 027a0ac06377 |
| Mike Taylor | Bug 1136877 - Collect telemetry on SPS.max_num_ref_frames. r=jya, a=lsblakk - 99fe61cb246f |
| Florian Quèze | Bug 1140440 - Mouse chooses options when search menu pops out under it. r=Gijs, a=lmandel - ec555c9715d2 |
| Matt Woodrow | Bug 1131638 - Discard video frames that fail to sync. r=cpearce, a=lmandel - 6d4536c5fa38 |
| Matt Woodrow | Bug 1131638 - Record invalid frames as dropped for video playback stats. r=ajones, a=lmandel - 772774b9be23 |
| Matt Woodrow | Bug 1131638 - Disable hardware decoding if too many frames are invalid. r=cpearce, a=lmandel - d35ca21dada8 |
| David Keeler | Bug 1138332 - Re-allow overrides for certificates signed by non-CA certificates. r=mmc, a=lmandel - 464844fd7f46 |
| Maire Reavy | Bug 1142140 - Citrix GoToMeeting Free added to screensharing whitelist. r=jesup, a=lmandel - 1c8c794f8c3d |
| Nicolas B. Pierron | Bug 1133389 - Fix FrameIter::matchCallee to consider all inner functions and not only lambdas. r=shu, a=lmandel - d1dc38edb7b1 |
| Nicolas B. Pierron | Bug 1138391 - LazyLinkStub stops making a call and reuses the parent frame. r=h4writer, a=lmandel - 44cc57c29710 |
| Perry Wagle | Bug 1122941 - Remove bookmark tags field max length. r=mak, a=lmandel - 3836553057c4 |
| Mark Hammond | Bug 1081158 - Ensure we report all login related errors. r=rnewman, a=lmandel - ad1f181d8593 |
| Ryan VanderMeulen | Backed out changesets d35ca21dada8, 772774b9be23, and 6d4536c5fa38 (Bug 1131638) for bustage. - 3a27c2da51d1 |
| Mike Shal | Bug 1137060 - Set _RESPATH for OSX xulrunner; r=glandium a=lmandel - becc3f84ea4e |
| Gijs Kruitbosch | Bug 1142521 - Disable casting on Firefox desktop by default. r=mconley, a=lmandel - ae0452603a3a |
| Bas Schouten | Bug 1131370 - Give content side more time to finish its copy. r=jrmuizel, a=lmandel - eaeb3aeb7a77 |
| Bas Schouten | Bug 1131370 - Try to ignore transient errors and increase D3D11 timeout as well. r=milan, a=lmandel - be0abc9b1af7 |
| Allison Naaktgeboren | Bug 1124895 - 1/2 Add password manager data to FHR. r=gps, a=lmandel - 5ec7f3ce97d2 |
| Justin Dolske | Backed out changeset 5ec7f3ce97d2 (landed the wrong/older version of the patch in Bug 1124895) - 42877284c697 |
| Allison Naaktgeboren | Bug 1124895 - 1/2 Add password manager data to FHR. r=gps, a=lmandel (relanding the correct patch) - fe5932c2b378 |
| Chris Double | Bug 1131884 - Video buffering calculation fails for some MP4 videos - r=jya a=lmandel - bb7b546e6188 |

http://release.mozilla.org/statistics/37/2015/03/17/fx-37-b5-to-b6.html

|

|

Soledad Penades: Superturbocharging Firefox OS app development with node-firefox |

Well, that’s funny–I finish writing a few modules for (potentially) node-firefox and then on the same day I discover the recording for my FOSDEM talk on node-firefox is online!

It’s probably not the best recording you’ve ever seen, as it doesn’t capture what was on my laptop’s screen, but here are the slides too if you want to see my fabulously curated GIFs (and you know you want to). Here’s also the source of the slides, and the article for Mozilla Hacks that presents node-firefox, which will probably help you more than watching the video with the slides.

If you’re interested in watching the other Mozilla talks at FOSDEM they’re here.

|

|

Mozilla Reps Community: Reps Weekly Call – March 12th 2015 |

Last Thursday we had our weekly call about the Reps program, where we talk about what’s going on in the program and what Reps have been doing during the last week.

Summary

- MWC Recap

- FOSSAsia

- ReMo Council meeting

- Community Design Team

- Community Education

AirMozilla video

Don’t forget to comment about this call on Discourse and we hope to see you next week!

https://blog.mozilla.org/mozillareps/2015/03/17/reps-weekly-call-march-12th-2015/

|

|

Kannan Vijayan: The Smartphone as Application Server |

I’ve been working on a small proof of concept over the last couple of months. It’s an architecture for building smartphone apps that seamlessly “serve themselves” to devices around you (such as a smart-TV), using entirely open protocols and technologies.

It can loosely be viewed as a variation on Chromecast, except instead of sending raw data over the wire to a smart-device, a smartphone app becomes a webapp-server that treats the smart-device as a web-client. In this prototype, the smart-device is a Raspberry Pi 2:

A Small Demo

The prototype is built entirely on open protocols and web technologies. The “app server” is just a standard smartphone app that runs its own webserver. The “app client” is a webpage, hosted by a browser running on the “smart endpoint” (in this demo a TV, or more specifically a Raspberry Pi 2 connected to a TV).

Here I’m demoing an Android app I wrote (called FlyPic) that displays a photo on the screen and then lets the user control that photo by swiping on the phone. The Android app implements a “webapp architecture” where the server component runs on the phone and the client component runs on the Pi.

https://www.youtube.com/watch?v=g-zHE33xKng

How it Works

The overall architecture is pretty simple:

Step 1 – The FlyPic smartphone app uses service discovery on the local network to find the Pi. The Pi is running Linux, and uses the Avahi mDNS responder to broadcast a service under the name “browsercast”. The service points to a “handshake server”, which is a small HTTP server that is used to initiate the app session. After this step, FlyPic knows about the Raspberry Pi and how to connect to the handshake server.
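FlyPic performs this discovery in Java on Android, and the Pi side is Avahi; the following is only a minimal Node.js sketch of the same mDNS/DNS-SD idea. It assumes the Pi advertises the service type `_browsercast._tcp.local` (the post says only that the service is named “browsercast”, so the exact type string is an assumption). It builds a one-question PTR query and sends it to the standard mDNS multicast group 224.0.0.251, port 5353; parsing the answers is left out.

```javascript
const dgram = require('dgram');

// Encode a DNS name such as "_browsercast._tcp.local" as length-prefixed labels plus a zero byte.
function encodeName(name) {
  const labels = name.split('.').filter(Boolean).map((label) => {
    const bytes = Buffer.from(label, 'utf8');
    return Buffer.concat([Buffer.from([bytes.length]), bytes]);
  });
  return Buffer.concat([...labels, Buffer.from([0])]);
}

// Build a one-question DNS query asking for PTR records of a service type.
function buildPtrQuery(serviceType) {
  const header = Buffer.alloc(12); // ID 0 and flags 0, as mDNS queries use
  header.writeUInt16BE(1, 4); // QDCOUNT: one question
  const tail = Buffer.alloc(4);
  tail.writeUInt16BE(12, 0); // QTYPE 12 = PTR
  tail.writeUInt16BE(1, 2); // QCLASS 1 = IN
  return Buffer.concat([header, encodeName(serviceType), tail]);
}

// Send the query to the mDNS group. Because it comes from an ephemeral port
// (not 5353), responders treat it as a legacy unicast query and reply to this
// socket directly; raw response packets are handed to onResponse unparsed.
function browse(serviceType, onResponse) {
  const socket = dgram.createSocket('udp4');
  socket.on('message', (msg, rinfo) => onResponse(msg, rinfo));
  socket.bind(0, () => {
    socket.send(buildPtrQuery(serviceType), 5353, '224.0.0.251');
  });
  return socket;
}
```

For example, `const s = browse('_browsercast._tcp.local', (msg, rinfo) => console.log('answer from', rinfo.address));` followed by `setTimeout(() => s.close(), 2000);` would list whichever hosts answer within two seconds.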

FlyPic displays the list of discovered endpoints to the user.

Step 2 – When an endpoint is selected, FlyPic starts up a small embedded HTTP server, and a websocket server to stream events. It then connects to the handshake server on the Pi and asks the handshake server to start a web session. It tells the handshake server (using GET parameters) the address of the app webserver it started.
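The handshake request in Step 2 is an ordinary HTTP GET whose query string carries the address of the phone’s web server. A minimal sketch, assuming a hypothetical `/start` path and a hypothetical `url` parameter (the post does not give the real names):

```javascript
// Build the handshake request the phone sends to the endpoint found in Step 1.
// The "/start" path and the "url" parameter name are hypothetical.
function buildHandshakeUrl(endpoint, appServer) {
  const handshake = new URL(`http://${endpoint.host}:${endpoint.port}/start`);
  // URLSearchParams percent-encodes the nested URL (":" and "/") for us.
  handshake.searchParams.set('url', `http://${appServer.host}:${appServer.port}/`);
  return handshake.href;
}
```

With the Pi at 192.168.1.20:8000 and the phone’s server at 192.168.1.42:8080, this yields `http://192.168.1.20:8000/start?url=http%3A%2F%2F192.168.1.42%3A8080%2F`, which the phone could then fetch with `require('http').get(...)` or any HTTP client.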

Step 3 – The handshake server on the Pi starts a browser (fullscreen, on the TV), giving it the address of the FlyPic HTTP server (on the phone) to connect to.
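The author’s handshake server is a Python script; here is a rough Node.js equivalent of Step 3, for illustration only. It assumes the same hypothetical `url` parameter and a hypothetical `chromium-browser` command launched with Chromium’s `--kiosk` (fullscreen) flag; adjust both for your own setup.

```javascript
const http = require('http');
const { spawn } = require('child_process');

// Hypothetical browser command on the Pi; --kiosk opens a fullscreen window.
const BROWSER = 'chromium-browser';

// Extract the app-server URL from a handshake request path and turn it into
// browser arguments. Returns null for a missing, malformed or non-HTTP(S) URL.
function browserArgs(requestPath) {
  let target;
  try {
    const requested = new URL(requestPath, 'http://localhost').searchParams.get('url');
    target = new URL(requested); // throws if the parameter is missing or malformed
  } catch (err) {
    return null;
  }
  if (target.protocol !== 'http:' && target.protocol !== 'https:') return null;
  return ['--kiosk', target.href];
}

function startHandshakeServer(port) {
  const server = http.createServer((req, res) => {
    const args = browserArgs(req.url);
    if (!args) {
      res.writeHead(400, { 'Content-Type': 'text/plain' });
      res.end('missing or invalid url parameter\n');
      return;
    }
    // Launch the browser pointed at the phone's web server, detached from us.
    const child = spawn(BROWSER, args, { detached: true, stdio: 'ignore' });
    child.on('error', (err) => console.error('could not start browser:', err.message));
    child.unref();
    res.writeHead(200, { 'Content-Type': 'text/plain' });
    res.end('session started\n');
  });
  return server.listen(port);
}
```

Restricting the parameter to `http:`/`https:` URLs is a deliberate choice: the handshake server opens whatever it is given, so it should not accept `file:` or other schemes from arbitrary devices on the network.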

Step 4 – The browser, after starting up, connects to the FlyPic http server URL it was given.

Step 5 – The browser gets the page and displays it. FlyPic serves up a page containing an image, and also some JavaScript code to establish a WebSocket connection back to FlyPic’s WebSocket server (so the page can receive pushed touch events without having to poll the phone).

Step 6 – Since FlyPic is otherwise a regular Android app (which just happens to be running an HTTP and WebSocket server), it can listen for touch events on the phone.

Step 7 – When FlyPic gets touch events, it sends them to the page via the WebSocket connection that was established earlier. On the Pi, the webpage receives the touch events and moves the displayed picture back and forth to match what is happening on the phone.
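On the Pi side, Steps 5 and 7 amount to a small script in the served page. A minimal sketch, assuming a hypothetical JSON message format (`{"type":"move","dx":…}` and `{"type":"reset"}`); the post does not describe FlyPic’s actual wire format. The state update is kept as a pure function so it can be checked outside a browser.

```javascript
// Hypothetical messages pushed by the phone over the WebSocket:
//   {"type": "move", "dx": <pixels>}  or  {"type": "reset"}
// Returns the picture's new horizontal offset.
function applyTouchMessage(offsetX, data) {
  let msg;
  try {
    msg = JSON.parse(data);
  } catch (err) {
    return offsetX; // ignore malformed messages
  }
  if (msg === null || typeof msg !== 'object') return offsetX;
  if (msg.type === 'move' && Number.isFinite(msg.dx)) return offsetX + msg.dx;
  if (msg.type === 'reset') return 0;
  return offsetX;
}

// Browser-side wiring: open the WebSocket back to the phone (Step 5) and
// move the image as touch events arrive (Step 7).
function connectToPhone(wsUrl, img) {
  let offsetX = 0;
  const ws = new WebSocket(wsUrl);
  ws.addEventListener('message', (event) => {
    offsetX = applyTouchMessage(offsetX, event.data);
    img.style.transform = `translateX(${offsetX}px)`;
  });
  return ws;
}
```

Because the page itself is served by the phone, the page can find the phone’s address from `location.hostname`, e.g. `connectToPhone('ws://' + location.hostname + ':8081/', document.querySelector('img'))`, where port 8081 is a placeholder for wherever the WebSocket server listens.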

And voila! I’m using my phone as an app server, my Raspberry Pi as an app client, and “controlling” my app on the TV using my phone. At this point, I can pretty much do anything I want. I can make my app take voice input, or gyro input, or whatever. I can put arbitrarily complex logic in my app, and have it execute on the remote endpoint instead of on my phone.

What can we do with this?

I’m using a smartphone to leverage a remote CPU connected to the TV, to build arbitrary apps that run partly on the “endpoint” (TV) and partly on the “controller” (smartphone).

By leveraging web technologies, and pre-existing standard protocols, we can build rich applications that “send themselves” to devices around them, and then establish a rich interaction between the phone as a controller device, and the endpoint as a client device.

Use Cases?

I have some fun follow-up ideas for this application architecture.

One idea is presentations. No more hooking your laptop to your projector and getting screen sharing working. If your projector box acted as an endpoint device, your presentation app could effectively be written in HTML and CSS and JS, and run on the projector itself – animating all slide transitions using the CPU on the projector – but still take input from your phone (for swiping to the next and previous slides).

Another use case is games. Imagine a game-console that wouldn’t need to have games directly installed on it. You carry your games around with you, on your phone. High-performance games can be written in C++ and compiled to asm.js, using WebGL for graphics. When you’re near a console and want to use it to play, your game effectively “beams itself” to your game console, and you play it with your phone as a controller. When finished, you disconnect and walk off. Every game console can now play the games that you own.

Games can be rich and high-resolution, because they run directly on the game console, which has direct, low-latency access to the display. All the phone needs to do is shuttle the input events to the endpoint.

The phone’s battery life is preserved, since all the game computation is offloaded onto the endpoint; network bandwidth is preserved, since we’re only sending events back and forth; and graphics can be amped up, since the game logic is running directly on the display device.

Games could also take advantage of the fact that the phone is a rich input device, and incorporate motion input, touch input, voice input, and camera input as desired. The game developer would be able to choose how much processing to do on the phone, and how much to do on the console, and tailor it appropriately to ensure that input from the phone to the game was appropriately low-latency.
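One common way to trade a little latency for less network traffic (a general technique, not something the post prescribes) is to coalesce touch-move deltas on the phone and send at most one message per short interval; the interval is then an upper bound on the added latency. A minimal sketch, reusing the same hypothetical `{"type":"move","dx":…}` message format:

```javascript
// Accumulates touch-move deltas and sends at most one message per interval.
class MoveCoalescer {
  constructor(send, intervalMs = 16) {
    this.send = send; // e.g. (text) => webSocket.send(text)
    this.intervalMs = intervalMs; // about one 60 Hz frame by default
    this.pendingDx = 0;
    this.timer = null;
  }

  // Record a delta; schedule a flush if one is not already pending.
  push(dx) {
    this.pendingDx += dx;
    if (this.timer === null) {
      this.timer = setTimeout(() => this.flush(), this.intervalMs);
    }
  }

  // Send the accumulated delta (if any) as a single message right away.
  flush() {
    if (this.timer !== null) {
      clearTimeout(this.timer);
      this.timer = null;
    }
    if (this.pendingDx !== 0) {
      this.send(JSON.stringify({ type: 'move', dx: this.pendingDx }));
      this.pendingDx = 0;
    }
  }
}
```

A fast-paced game would keep the interval near one frame; a slide-show controller could use a much longer one.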

The Code

The source code to get this going on your own Pi and Android device is available at: https://github.com/kannanvijayan/browsercast.

Please note, this is a VERY rough implementation. Error handling is barebones, almost nonexistent. The Android app will crash outside of the simplest usage behaviour (e.g. when the network connection times out), because I have very little experience with Android programming, and I learned just enough to implement the demo. The Python scripts for the service discovery and the handshake HTTP server are a bit better put together, but still pretty rough.

Lastly, the HTTP server implementation I’m using is called NanoHTTPD (slightly modified), the original source for which is available here: https://github.com/NanoHttpd/nanohttpd

I’ve embedded the source for NanoHTTPD in my sources because I had to make some changes to get it to work. NanoHTTPD is definitely not a production webserver, and its websocket implementation is a bit unpolished. I had to hack it a bit to get it working correctly for my purposes.

Final Thoughts

It really seems like this design has some fundamental advantages compared to the usual “control remote devices with your smartphone” approach. I was able to put together a prototype with little more than a Raspberry Pi and some time. Every single part of this design is open:

1. Any web browser can be used for display. I’ve tested this with both Chrome and Firefox. Any browser that is reasonably modern will serve fine as a client platform.

2. Any hardware platform can be used as the endpoint device. You could probably take the Python scripts above and run them on any modern Linux-based device (including laptops). Writing the equivalent for Windows or OS X wouldn’t be hard either.

3. Nearly any smartphone can act as a controller. If a phone supports service discovery and creating an HTTP server on the local network, that phone can work with this design.

4. You can develop your app in any “server-side” language you want. My test app is written in Java for Android. I see no reason one couldn’t write an iOS app that did the same thing, in Objective-C or Swift.

5. You can leverage the massive toolchest of web display technologies, and web-app frameworks, to build your app’s client-side experience.

I’d like to see what other people can build with this approach. My smartphone app skills are pretty limited. I wonder what a skilled smartphone developer could do.

http://vijayan.ca/blog/2015/03/17/the-smartphone-as-application-server/

|

|

Air Mozilla: Martes mozilleros |

Biweekly meeting to talk about the state of Mozilla, the community, and its projects.

|

|

Kim Moir: Mozilla pushes - February 2015 |

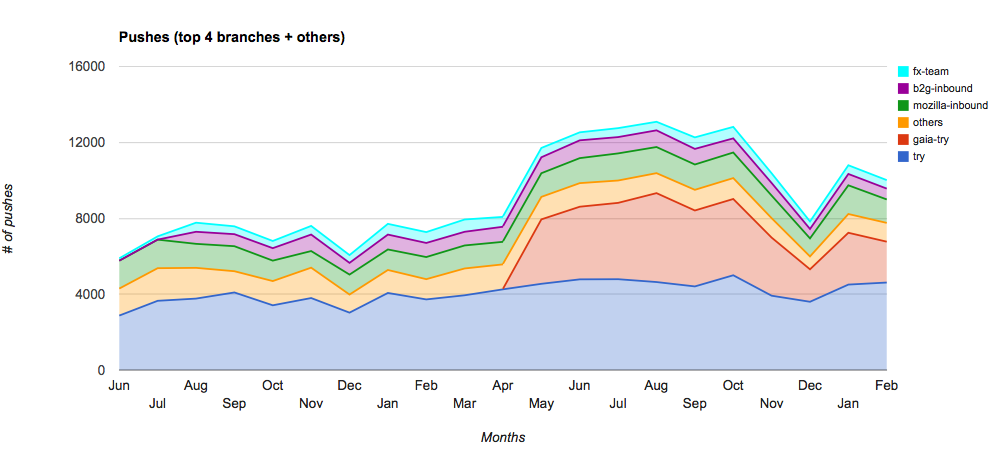

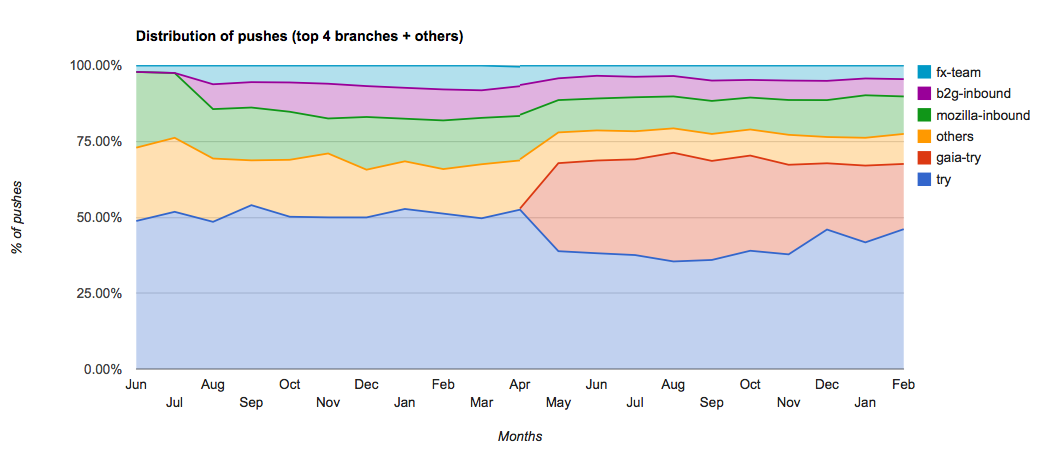

Trends

Although February is a shorter month, the number of pushes was close to that recorded in the previous month. We had a higher average number of daily pushes (358) than in January (348).

Highlights

10015 pushes

358 pushes/day (average)

Highest number of pushes/day: 574 pushes on Feb 25, 2015

23.18 pushes/hour (highest)

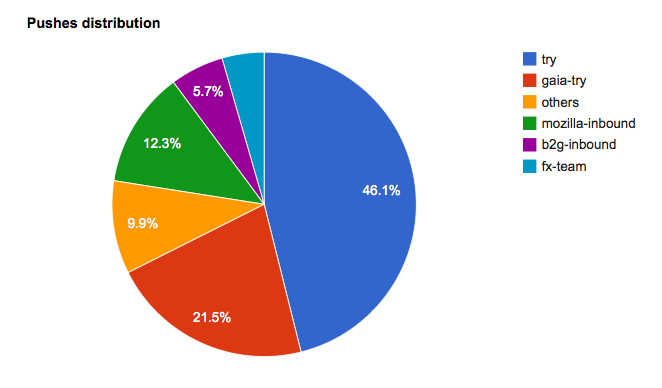

General Remarks

Try had around 46% of all the pushes

The three integration repositories (fx-team, mozilla-inbound and b2g-inbound) accounted for around 22% of all the pushes

Records

August 2014 was the month with most pushes (13090 pushes)

August 2014 has the highest pushes/day average with 422 pushes/day

July 2014 has the highest average of "pushes-per-hour" with 23.51 pushes/hour

October 8, 2014 had the highest number of pushes in one day with 715 pushes
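The daily averages are simply the monthly totals divided by the number of days in the month. A quick check of the figures above (rounding to whole pushes is an assumption about how the averages were reported):

```javascript
// Days in a month (month is 1-12): day 0 of the following month is the
// last day of this one.
function daysInMonth(year, month) {
  return new Date(year, month, 0).getDate();
}

// Average pushes per day, rounded to a whole number of pushes.
function averagePerDay(totalPushes, days) {
  return Math.round(totalPushes / days);
}

console.log(averagePerDay(10015, daysInMonth(2015, 2))); // February 2015: 358
console.log(averagePerDay(13090, daysInMonth(2014, 8))); // August 2014: 422
```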

http://relengofthenerds.blogspot.com/2015/03/mozilla-pushes-february-2015.html

|

|

Soledad Penades: Install to ADB: installing packaged Firefox OS apps to USB connected phones (using ADB) |

I abhor repetition, so I’m always looking for opportunities to improve my processes. Spending a bit of time early on can save you so much time in the long run!

If you’re trying to build something that can only run on devices (for example, apps that use WiFi Direct), pushing updates gets boring really quickly: with WebIDE you have to select each USB device manually and then initiate the push.

So I decided I would optimise this because I wanted to focus on writing software, not on clicking dropdowns and so on.

And thus after a bit of research I can finally show install-to-adb:

In the video you can see how I’m pushing the same app to two Flame phones, both of them connected with USB to my laptop. The whole process is a node.js script (and a bunch of modules!).

The module is not on npm yet, so to install it:

```shell
npm install git+https://github.com/sole/install-to-adb.git
```

And then to deploy an app to your devices, just pass in the app path, and it will do its magic:

```javascript
var installToADB = require('install-to-adb');

// Install the packaged app on every USB-connected device, then exit.
installToADB('/path/to/packaged/app').then(function(result) {
  console.log('result', result);
  process.exit(0);
});
```

Guillaume thinks this is WITCHCRAFT!

@supersole WITCHCRAFT!!

— Guillaume Marty (@g_marty) March 17, 2015

… and I wouldn’t blame him!

But it is actually just the beauty of port forwarding. What installToADB does is enumerate all connected ADB devices, then set up port forwarding so each device appears as a port on localhost, and from there it’s the same process as pushing to a simulator or any other Firefox client: we connect, get a client object, and install the app using said client. That’s it!
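The enumerate-then-forward step can be sketched in a few lines of Node.js. This is an illustration, not install-to-adb’s actual code: it assumes the Firefox OS debugger listens on the device’s `/data/local/debugger-socket` Unix socket (the path used for B2G remote debugging) and that localhost ports from 6000 upward are free.

```javascript
const { execFile } = require('child_process');

// Device-side socket of the Firefox OS (B2G) remote debugger (assumed path).
const DEBUGGER_SOCKET = 'localfilesystem:/data/local/debugger-socket';

// Parse `adb devices` output into serials of devices that are ready.
// Header, daemon-status and offline/unauthorized lines fail the state check.
function parseAdbDevices(output) {
  return output
    .split('\n')
    .map((line) => line.trim().split(/\s+/))
    .filter(([serial, state]) => serial && state === 'device')
    .map(([serial]) => serial);
}

// Give each device its own localhost port and build the matching
// `adb -s <serial> forward tcp:<port> <device socket>` arguments.
function planForwards(serials, basePort = 6000) {
  return serials.map((serial, i) => ({
    serial,
    port: basePort + i,
    args: ['-s', serial, 'forward', `tcp:${basePort + i}`, DEBUGGER_SOCKET],
  }));
}

// Run `adb devices`, then one `adb forward` per device.
// Resolves with [{ serial, port }] once every forward is in place.
function forwardAllDevices(basePort = 6000) {
  const adb = (args) =>
    new Promise((resolve, reject) => {
      execFile('adb', args, (err, stdout) => (err ? reject(err) : resolve(stdout)));
    });
  return adb(['devices'])
    .then((out) => planForwards(parseAdbDevices(out), basePort))
    .then((plan) =>
      Promise.all(plan.map((p) => adb(p.args).then(() => ({ serial: p.serial, port: p.port }))))
    );
}
```

Each resulting port can then be treated exactly like a simulator’s debugging port: connect a client to `localhost:<port>` and install the app through it.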

Other artifacts

One of my favourite parts of writing code is when you refactor parts out and end up producing a ton of interesting artifacts that can be used everywhere else! In this case I built two other modules that can come in handy if you’re doing app development.

push-app

This is a module that uses a bunch of other node-firefox modules. It’s essentially syntactic sugar (or modular sugar?) to push an app to a client. In the process it makes sure no other copies of the app are installed, and it returns the app once it’s installed, so you can do things such as launching it or anything else you feel like doing.
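The sequence described above (remove existing copies, install, return the app) can be sketched against a hypothetical client interface. `getInstalledApps`, `uninstall`, `install` and the `appId` field are illustrative names only, not the node-firefox API or push-app’s code.

```javascript
// Hypothetical client interface (illustrative, NOT the node-firefox API):
//   client.getInstalledApps() -> Promise<Array<{ id, appId }>>
//   client.uninstall(id)      -> Promise<void>
//   client.install(appPath)   -> Promise<{ id, appId }>
async function pushAppSketch(client, appPath, appId) {
  const installed = await client.getInstalledApps();
  // 1. Remove every copy of this app that is already on the device.
  const copies = installed.filter((app) => app.appId === appId);
  await Promise.all(copies.map((app) => client.uninstall(app.id)));
  // 2. Install the fresh build and hand the app back, so callers can chain
  //    further steps (such as launching it) on the returned promise.
  return client.install(appPath);
}
```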

Using it is similar to using other node-firefox modules:

```javascript
pushApp(client, appPath);
```

and it returns a promise, so you can chain things together!

It’s not on npm, so…

```shell
npm install --save git+https://github.com/sole/push-app
```

sample-packaged-app

I also noticed that I kept copying and pasting the same sample app for each demo and that is a big code smell. So I made another npm module that gives you a sample packaged app. Once you install it:

```shell
npm install --save git+https://github.com/sole/sample-packaged-app
```

you can then access its contents via node_modules/sample-packaged-app. In node code, you could build its full path with this:

```javascript
var path = require('path');
var appPath = path.join(__dirname, 'node_modules', 'sample-packaged-app');
```

and then use it, for example, with installToADB.

Not in node-firefox and not on npm?

You have probably noticed that these modules are not in node-firefox or on npm. That’s because they are still at a very early proof-of-concept stage, which is also why they are not even named with a node-firefox- prefix.

On the other hand, I’m pretty sure there are people who would appreciate being able to optimise their processes today, and nothing stops them from installing the modules from my repositories.

Once the modules become ‘official’, they’ll be published to npm, so updating your code should be fairly easy. I will also transfer the repositories to the mozilla organisation, and GitHub will take care of redirecting people to the right place.

Hope you enjoy your new streamlined process!

|

|

Carsten Book: Please take part in the Sheriff Survey |

Hi,

But of course there is always room for improvement and for ideas on how we can make things better. To get a picture from our community of how things went and how we can improve our day-to-day work, please take part in the Sheriff Survey.

– The Mozilla Tree Sheriffs!

https://blog.mozilla.org/tomcat/2015/03/17/please-take-part-in-the-sheriff-survey/

|

|