You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about this service, use the contact information page.


Benjamin Kerensa: Speaking at OSCON 2014 |

In July, I’m speaking at OSCON. But before that, I have some other events coming up including evangelizing Firefox OS at Open Source Bridge and co-organizing Community Leadership Summit. But back to OSCON; I’m really excited to speak at this event. This will be my second time speaking (I must not suck?) and this time I have a wonderful co-speaker Alex Lakatos who is coming in from Romania.

For me, OSCON is a really special event because it is perhaps the one place where you can find so many of the most brilliant minds in Open Source at a single event. I’m always ecstatic to listen to some of my favorite speakers, such as Paul Fenwick, who always seems to capture the audience with his talks.

This year, Alex and I are giving a talk on “Getting Started Contributing to Firefox OS,” a platform that we both wholeheartedly believe in and we think folks who attend OSCON will also be interested in.

#OSCON 2014 presents “Getting Started Contributing to Firefox OS” by @bkerensa of @mozilla http://t.co/f1iumzhg1q

— O’Reilly OSCON (@oscon) May 14, 2014

And last but not least, for the first time in some years Mozilla will have a booth at OSCON and we will be doing demos of the newest Firefox OS handsets and tablets and talking on some other topics. Be sure to stop by the booth and to fit our talk into your schedule. If you are arriving in Portland early, then be sure to attend the Community Leadership Summit which occurs the two days before OSCON, and heck, be sure to attend Open Source Bridge while you’re at it.

http://feedproxy.google.com/~r/BenjaminKerensaDotComMozilla/~3/_sSGCLYK0BM/speaking-oscon-2014

|

|

Mozilla Reps Community: Measuring our impact with better metrics |

Last year Reps organized several Apps Days events to have apps submitted to the Firefox Marketplace. Those Apps Days appeared as events in the Reps Portal, and they had metrics like other events. However, there was no easy way to see how many apps were submitted as a result of those events, because the metrics were stored as free text. While the events had significant impact, it was hard to measure because the Reps Portal did not structure or aggregate event metrics.

Today we are introducing a better, easier way to create and report metrics for your events. We have heard from many Reps that 1) it is hard to know what metrics are useful when planning an event and 2) it would be valuable to report the actual success of your events.

In the past, you entered metrics and success scenarios (predicted outcomes) in text boxes when creating your events. Now you can select from a list of common metrics that Rosana has curated. For each metric type, you will need to provide a numeric value for the expected outcome.

Action needed: If you already have a future event on the Reps Portal and it is starting after June 16th, you need to edit your event and select at least 2 new-style metrics from the dropdown menus. Be sure to do this before June 16th.

Also, starting today any new events created will use the new metrics types.

We have changed the attendance estimate to a numeric field, so you can be more specific about how many people you believe will attend your event.

As an event organizer you will receive an email notification after the event asking you to report your success. This form asks for a count of the number of attendees and the actual outcomes of the metrics you created. Completing this form counts as an activity, and that activity will appear on your profile. Soon we will have an aggregate display of all event metrics.

Why are we using a curated list of metrics? It helps us have standardized metrics for events and also measure the impact of our events in aggregate. For example, going forward we will know how many strings have been translated this year at all the Localization Sprint events and how many Firefox Marketplace apps have been submitted. Having a fixed list of metrics may feel limiting. If there is a metric you think would be valuable to have for Reps events, add it to this suggestions etherpad.
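To illustrate why standardized, numeric metrics make aggregation straightforward, here is a minimal sketch. The event records, metric names, and numbers are invented for illustration and are not the Reps Portal's actual data model.

```javascript
// Hypothetical event reports: each metric has a standardized name plus
// expected and actual numeric values (all names and numbers are made up).
const reports = [
  { event: "Localization Sprint A", metrics: [{ name: "strings_translated", expected: 500, actual: 420 }] },
  { event: "Apps Day B", metrics: [{ name: "apps_submitted", expected: 10, actual: 12 }] },
  { event: "Localization Sprint C", metrics: [{ name: "strings_translated", expected: 300, actual: 350 }] },
];

// Sum expected and actual values per metric name across all events.
function aggregateMetrics(eventReports) {
  const totals = {};
  for (const report of eventReports) {
    for (const { name, expected, actual } of report.metrics) {
      if (!totals[name]) totals[name] = { expected: 0, actual: 0 };
      totals[name].expected += expected;
      totals[name].actual += actual;
    }
  }
  return totals;
}

const totals = aggregateMetrics(reports);
console.log(totals);
```

With free-text metrics, the same summation would require parsing each description by hand, which is the problem described above.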

Having better metrics and data will significantly help the Reps program measure its impact. From knowing the number of attendees at events to having estimated and actual metrics data, we will be able to quantify our impact in a meaningful way, both for individual events and across the hundreds of events that Reps participate in each year.

https://blog.mozilla.org/mozillareps/2014/06/05/measuring-our-impact-with-better-metrics/

|

|

Jess Klein: Portfolio Design Workshop |

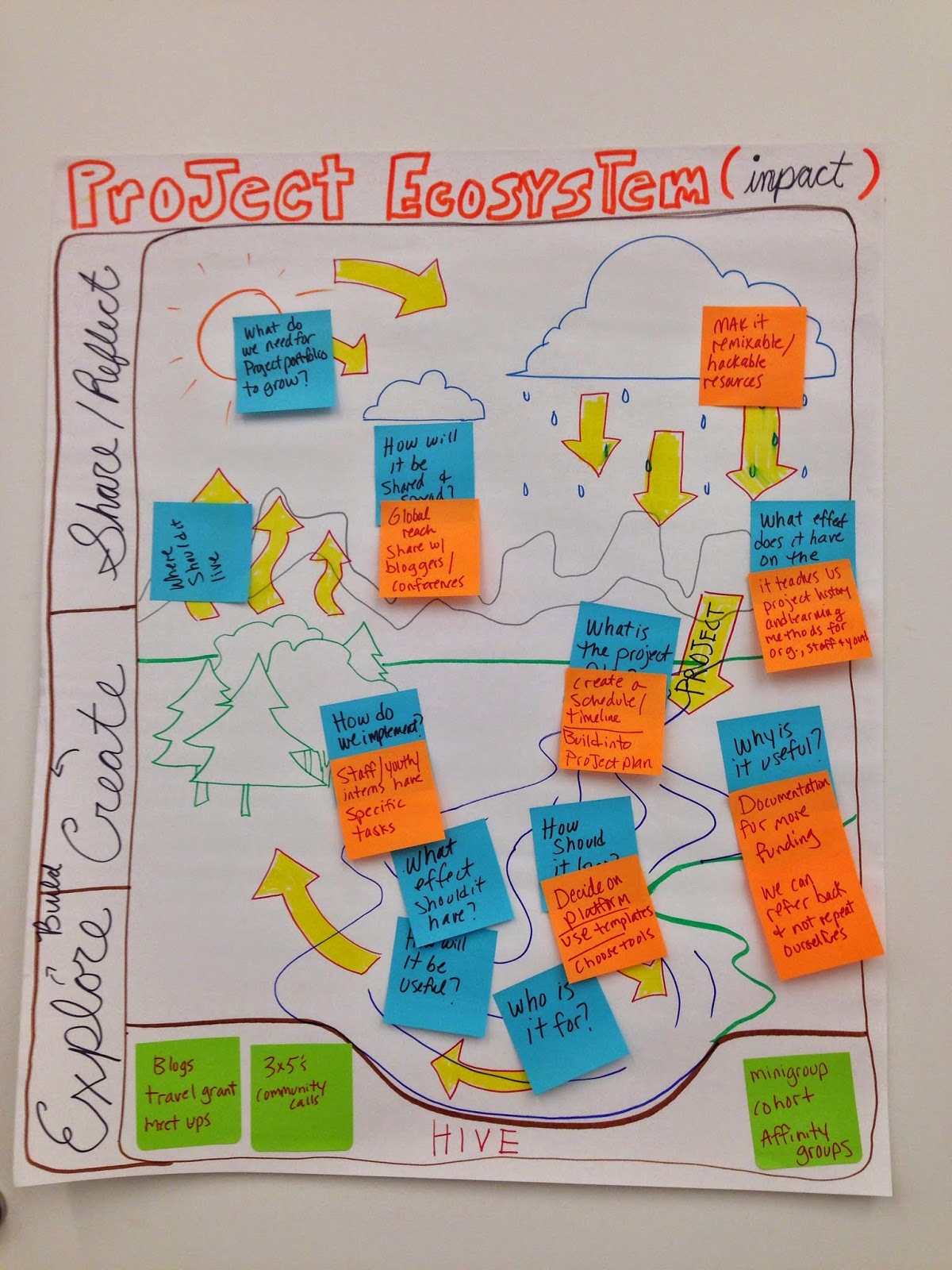

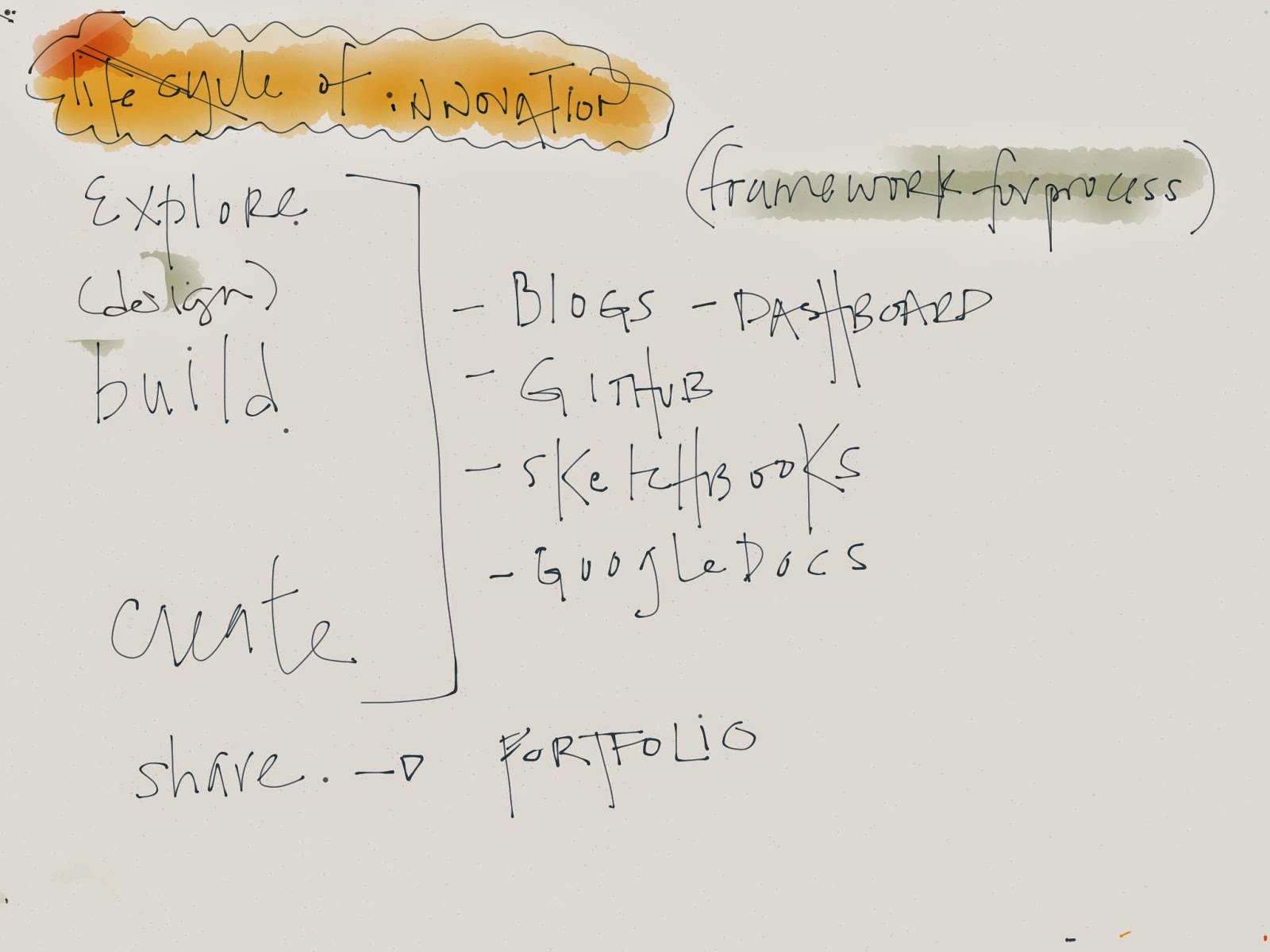

Lifecycle of a Project

Workshop participants reviewed the timeline of a project from exploration to the phase where you might be sharing out learnings (the portfolio touchpoint). Julia used this chart, which compares the creation cycle to the lifecycle of water (how amazing is that?!). Participants articulated that while there are lots of touchpoints and feedback loops after something has been created, there is a real desire for in-progress community support and reflection.

Portfolio template:

Participants used this template to start developing out their project portfolio piece. Some feedback that Hive members gave is that it would be awesome if they could be developing the content throughout their making process. Additionally, they explained that it was really important to be able to identify who the author of the content was and in turn, who that author was "speaking to" via the medium of the portfolio.

Opportunity!

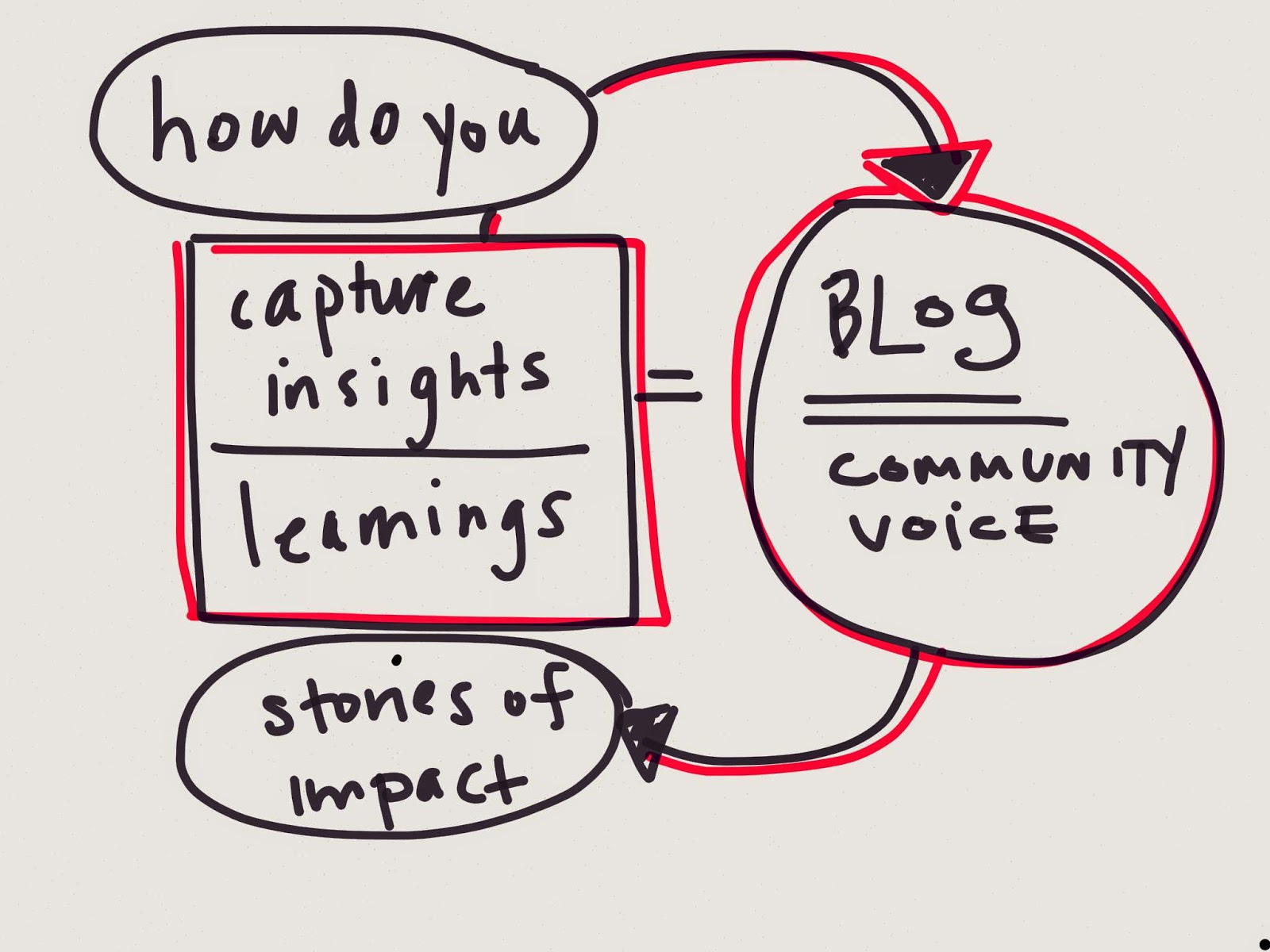

After listening to Hive members talk for a few hours and taking notes (below), I realized that there's a real opportunity to support what Brian from Beam Center referred to as a "framework for innovation" and what Leah from the Hive called a "living archive of process."

I am going to tinker with Atul, Kat, Chris and the rest of the Hive community to think through what this could be, but overall I found this workshop inspiring and am eager to start noodling in this opportunity space. One initial thought is that we should think through what a process dashboard and process project profile could look like. Here's what I sketched while at the workshop, but I am going to dive deeper this week.

http://jessicaklein.blogspot.com/2014/06/portfolio-design-workshop.html

|

|

Christian Heilmann: Be a great presenter: deliver on and off-stage |

As a presenter at a conference, your job is to educate, entertain and explain. This means that the few minutes on stage are the most stressful, but should also be only a small part of your overall work.

A great technical presentation needs a few things:

- Research – make sure your information is up-to-date and don’t sell things that don’t work as working

- Sensible demonstrations – by all means show what some code does before you talk about it. Make sure your demo is clean and sensible and easy to understand.

- Engagement materials – images, videos, animations, flowcharts, infographics. Make sure you have the right to use those and you don’t just use them for the sake of using them.

- Handover materials – where should people go after your talk to learn more and get their hands dirty?

- An appropriate slide deck – your slides are wall-paper for your presentation. Make them supportive of your talk and informative enough. Your slides don’t have to make sense without your presentation, but they should also not be distracting. Consider each slide an emphasis of what you are telling people.

- A good narration – it is not enough to show cool technical things. Tell a story, what are the main points you want to make, why should people remember your talk?

- An engaging presentation – own the stage, own the talk, focus the audience on you.

All of this needs a lot of work: collecting on the web, converting, coding, rehearsing and learning to become better at conveying information. All of it results in materials you use in your talk, but also in materials you may not get to use even though they are very valuable.

It is not about you, it is about what you deliver

A great presenter could carry a talking slot just with presence and the right stage manner. Most technical presentations should be more. They should leave the audience with a “oh wow, I want to try this and I feel confident that I can do this now” feeling. It is very easy to come across as “awesome” and show great things but leave the audience frustrated and confused just after they’ve been delighted by the cool things you are able to do.

Small audience, huge viewer numbers

Great stuff happens at conferences, great demos are being shown, great solutions explained and explanations given. The problem is that all of this only applies to a small audience, and those on the outside lack context.

This is why parts of your presentation often get quoted out of context, and demos you showed to make a point get presented as endorsed by you, missing the original point.

In essence: conferences are cliquey by design. That’s OK, after all people pay to go to be part of that select group and deserve to get the best out of it. You managed to score a ticket – you get to be the first to hear and the first to talk about it with the others there.

It gets frustrating when parts of the conference get disseminated over social media. Many tweets talking about the “most amazing talk ever” or “I can’t believe the cool thing $x just showed” are not only noise to the outside world, they also can make people feel bad about missing out.

This gets exacerbated when you release your slides and they don’t make any sense, as they lack notes. Why should I get excited about 50MB of animated GIFs, memes and hints of awesome stuff? Don’t make me feel bad – I already feel I am missing out as I got no ticket or couldn’t travel to the amazing conference.

If you release your talk materials, make them count. These are for people on the outside. Whilst everybody at an event will ask about the slides, the number of people really looking at them afterwards is much smaller than the ones who couldn’t go to see you live.

Waiting for recordings is frustrating

The boilerplate answer to people feeling bad about not getting what the whole Twitter hype is about is “Oh, the videos will be released, just wait till you see that”. The issue is that in many cases the video production takes time, and there is a delay of a few weeks up to months between the conference and the video being available. Which is OK, good video production is hard work. It does, however, water down the argument that the outside world will get the hot cool information. By the time the video of the amazing talk is out, we’re already talking about another unmissable talk happening at another conference.

Having a video recording of a talk is the best possible way to give an idea of how great the presentation was. It also expects a lot of dedication from the viewer. I watch presentation videos in my downtime – on trains, in the gym and so on. I’ve done this for a while, but right now I find so much being released that it becomes impossible to catch up. I just deleted 20 talks from my iPod unwatched as their due date has passed: the cool thing the presenter talked about is already outdated. This seems a waste, both for the presenter and the conference organiser who spent a lot of time and money on getting the video out.

Asynchronous presenting using multiple channels

Here’s something I try to do and I wish more presenters did: a great presenter should be aware that they might involuntarily cause discontent and frustration outside the conference. People talk about the cool stuff you did without knowing what you did.

Instead of only delivering the talk, publish a technical post covering the same topic you talked about. Prepare the post using the materials you collected in preparation of your talk. If you want to, add the slides of your talk to the post. Release this post on the day of your conference talk using the hashtag of the conference and explaining where and when the talk happens and everybody wins:

- People not at the conference get the gist of what you said instead of just soundbites they may quote out of context

- You validate the message of your talk – a few times I re-wrote my slides after really trying to use the technology I wanted to promote

- You get the engagement from people following the hashtag of the conference and give them something more than just a hint of what’s to come

- You support the conference organisers by drumming up interest with real technical information

- The up-to-date materials you prepared get heard web-wide when you talk about them, not later when the video is available

- You re-use all the materials that might not have made it into your talk

- Even when you fail to deliver an amazing talk, you managed to deliver a lot of value to people in and out of the conference

For extra bonus points, write a post right after the event explaining how it went and what other parts about the conference you liked. That way you give back to the organisers and you show people who went there that you were just another geek excited to be there. Who knows, maybe your materials and your enthusiasm might be the kick some people need to start proposing talks themselves.

http://christianheilmann.com/2014/06/05/be-a-great-presenter-deliver-on-and-off-stage/

|

|

Mozilla Open Policy & Advocacy Blog: Ensuring a More Secure Internet: Reset the Net and the Cyber Security Delphi |

It’s been a year since the first National Security Agency documents leaked by Edward Snowden fundamentally changed the way people view government’s role in balancing privacy and safety. The impact was felt across the world, with greater public awareness of surveillance and more energy in civil society to mobilize. And yet, the public policy landscape itself has changed very little and the threats to privacy and security are just as strong.

Today, we are announcing two efforts that will contribute to the global movement to strengthen Internet security and protect the open Web:

- Reset the Net — Mozilla’s participation in the ‘Reset the Net’ day of action to improve security against widespread surveillance.

- Mozilla’s Cyber Security Delphi — A new research project with security leaders to better understand threats to security on the Internet.

Reset the Net – A Day of Action to Improve Security and Protect Against Mass Surveillance

Mozilla is proud to join with Fight for the Future and many other organizations in Reset the Net, a day of action to stop mass surveillance by building proven security into the everyday Internet. We encourage all Mozillians to make our collective voices heard by signing the petition, sharing it with our networks, and adopting stronger privacy and security tools and practices.

Firefox is already made under the principle that security and privacy are fundamental and must not be treated as optional. On Firefox, we’ve included several security and privacy features:

- Website ID — A site identity button that allows for users to quickly find out if the website they are viewing is encrypted, if it is verified, who owns the website, and who verified it. This helps users avoid malicious websites that are trying to get you to provide personal information.

- Built-in phishing and malware protection.

- Private browsing.

- Lightbeam — A powerful add-on with interactive visualizations that enables users to see the first and third party sites you interact with on the Web.

The Cyber Security Delphi — A New Research Initiative to Develop the Security Agenda

By all accounts, threats to the free and open Web are intensifying. According to a survey released last week by Consumer Reports, one in seven U.S. consumers received a data breach notice in 2013, and yet 62% say they did nothing to address it. Policy reform, on its own, has proven insufficient to battle the barrage of data breaches that result in an insecure Web and, for too long, the discussion of security has been dominated by a one-sided perspective that pits risk management against improving security and privacy.

In response, Mozilla is creating a path forward through the Cyber Security Delphi. As part of the Delphi research and recommendation initiative, Mozilla will bring together the best minds in security to understand threat vectors to online security and develop a concrete agenda to address them. To guide the project, we’ve recruited an expert advisory board, including: Kelly Caine (Clemson), Matthew Green (Johns Hopkins), Ed Felten (Princeton), Chris Soghoian (ACLU), and Danny McPherson (Verisign).

We anticipate recruiting approximately 50 participants from across 10 professional disciplines for the study. Ideal composition for the study would include specialists in computer security, network security, cryptography, data security, application security, as well as professionals from industry and public sector organizations responsible for addressing threats and vulnerabilities associated with cyber security.

This Delphi process will result in substantive recommendations to improve the security of the Internet. Beyond this, it will level the playing field for all sectors, including the digital rights community, and enable us to create an affirmative agenda for reform. We expect to deliver the final report and recommendation in the Fall.

Thank you to The MacArthur Foundation for supporting this important effort.

Help us Advance and Protect the Free and Open Web.

The threats to the free and open Web are not academic. What’s at stake is an Internet that fuels economic growth, provides a level playing field and opportunity for all, and enables people to freely express themselves.

While the arc of the Web is pointing in the wrong direction, it doesn’t need to be. We believe that a global grassroots movement can hold our leaders accountable to the principles of protecting the open Web as a resource for all. We believe that this movement will rise from the bottom up, and will be led by a stronger set of civil society organizations all over the world. And we believe that the Mozilla community as makers of the Web will be on the leading edge of the movement.

Fighting for the open Web will be a marathon, not a sprint, and will require many voices. In this spirit, you’ll be hearing a lot from Mozilla and many other organizations as we work together to build this global movement.

We want to invite you to join the discussion and keep updated on progress as we fight for the free and open web by signing up at our Net Policy page at mzl.la/netpolicy.

Dave Steer is Director of Advocacy at Mozilla Foundation

|

|

Robert Nyman: Speaking in 30 countries |

I’ve been very fortunate to get the chance to travel around the world and speak at conferences, and now I’ve reached speaking in 30 countries!

All the slides, videos and more are available on Lanyrd.

For me it has been a fantastic learning experience, from getting to see all kinds of people, circumstances and contexts to getting to see many differing parts of the world.

Countries I’ve spoken in

The countries I’ve spoken in, as taken from Lanyrd:

- Argentina

- Belgium

- Brazil

- Bulgaria

- Canada

- Chile

- Colombia

- Croatia

- Czech Republic

- Denmark

- England

- Finland

- France

- Germany

- India

- Italy

- Mexico

- Netherlands

- Norway

- Poland

- Portugal

- Romania

- Russia

- Scotland

- South Africa

- Spain

- Sweden

- Switzerland

- United States

- Uruguay

I would like to add Australia or New Zealand to this list, though, to have all the major continents.

Let me know if you’re interested.

See you!

Thank you to all the conference organizers and attendees, and I hope to see you at another conference soon!

|

|

David Burns: My Ideal build, test and land world |

The other week I tweeted that, on that day, I was seeing 1 revert push to Mozilla Inbound in every 10 pushes. For those who don't know, Mozilla Inbound is the most active integration repository that Firefox code lands in. A push can contain a number of commits depending on the bug or if a sheriff is handling checkin-needed bugs.

This tweet got replies like, and I am paraphrasing, "That's not too bad" and "I expected it to be worse". Personally I think this is an awful rate. Why? On that day, only 80% of pushes were code changes to the tree: out of every 10 pushes, the bad push and its revert together lead to no change to the tree but still use our build and test infrastructure. This means that at best we could (on that day) only be 80% efficient. So how can we fix this?
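The 80% figure follows from simple arithmetic, sketched below. The function name is made up for illustration, and it assumes every revert push cancels exactly one earlier bad push.

```javascript
// Fraction of pushes that actually change the tree, assuming every revert
// push cancels exactly one earlier bad push (so each revert wastes two pushes).
function effectivePushRatio(totalPushes, revertPushes) {
  const wasted = 2 * revertPushes; // the bad push plus its revert
  return (totalPushes - wasted) / totalPushes;
}

console.log(effectivePushRatio(10, 1)); // 0.8
```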

Note: A lot of this work is already in hand but I want to document where I wish it to go. A lot of the issues are really paper cuts, but it can be death by 1000 paper cuts.

Building

Mach, the current CLI dispatch tool at Mozilla, passes the build detail to the build scripts. It is a great tool if you haven't been using it yet. The work the build peers have done with this is pretty amazing. However, I do wish the build targets passed to Mach and then executed were aligned with the way that Chromium, Facebook, Twitter and Google build targets work.

For example, working on Marionette, if I want to run Marionette tests I would do |./mach //testing/marionette:test| instead of the current |./mach marionette-test|. By passing in the directory we are declaring explicitly what we want to be built and tested. The moz.build file should have a dependency saying that we need a Firefox binary (or apk or b2g). The test task in the moz.build in the testing/marionette folder would pick runtests.py and then pass in the necessary arguments, ideally based on items in the MozConfig. Knowing the relevant arguments based on the build is currently hard work involving looking at your history or at a wiki.

Working on something that has unit tests and mochitests or xpcshell tests? It's simple to just change the task. E.g. ./mach //testing/marionette:test changes quickly to ./mach //testing/marionette:xpcshell. Again, there is no worrying about arguments when we can create sane defaults based on what we just built. I have used testing in my examples because it is simple to show different build targets on the same call.

The other reason for declaring the path (and mentioning the dependencies in the same manner) is that if you call |./mach //testing/marionette:test| after updating your repo it will do an incremental build (or a clobber if needed) without you having to know you needed it. Manually clearing things or running builds just to run tests is just busy work again.
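As a rough illustration of the target syntax wished for above, a label such as //testing/marionette:test can be split into a directory and a task. This is only a sketch of the proposed syntax; parseTarget is a hypothetical helper and not part of Mach.

```javascript
// Parse a hypothetical target label of the form "//path/to/dir:task".
// This is the syntax proposed in the post, not something Mach is known to accept.
function parseTarget(label) {
  const match = /^\/\/([^:]+):([^:]+)$/.exec(label);
  if (!match) {
    throw new Error(`Not a valid target label: ${label}`);
  }
  return { directory: match[1], task: match[2] };
}

const target = parseTarget("//testing/marionette:test");
console.log(target.directory, target.task);
```

Switching test suites then only changes the part after the colon, while the directory (and so the build dependencies it implies) stays the same.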

Reviews and Precommit builds/tests

Want a review? You currently either have to use bzexport or manually create a diff, upload it to the bug and set the reviewer. The Bugzilla team is working to stand up Review Board, which would allow us to upload patches and give us a better review tool.

The missing pieces for me are: 1) we have to manually pick a reviewer and 2) we don't have a pre commit build and test step.

1) I have been using Opera's Critic for reviewing Web Platform Tests. Having the ability to assign people to review changes for a directory means that reviewing is everyone's responsibility. Currently Bugzilla allows you to pick a reviewer based on the component that the bug is on. Sometimes a patch may span other areas and you then need to figure out who a reviewer should be. I think that we can do better here.
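One way to picture directory-based review assignment is a mapping from path prefixes to reviewers. The sketch below is hypothetical: the mapping, the reviewer names, and suggestReviewers are invented for illustration and do not reflect how Critic or Bugzilla are actually configured.

```javascript
// Hypothetical mapping from directory prefixes to reviewers; the names are
// placeholders, not real Mozilla reviewers or Critic configuration.
const reviewersByDirectory = {
  "testing/marionette/": ["reviewer-a"],
  "testing/": ["reviewer-b"],
  "build/": ["reviewer-c"],
};

// Collect every reviewer whose directory prefix covers a changed file.
function suggestReviewers(changedFiles, mapping) {
  const suggested = new Set();
  for (const file of changedFiles) {
    for (const [prefix, reviewers] of Object.entries(mapping)) {
      if (file.startsWith(prefix)) {
        reviewers.forEach((r) => suggested.add(r));
      }
    }
  }
  return [...suggested].sort();
}

const suggestion = suggestReviewers(
  ["testing/marionette/runtests.py", "build/moz.build"],
  reviewersByDirectory
);
console.log(suggestion);
```

A patch that spans several areas then gets reviewers from each area without the author having to work out who they are.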

As for 2), we don't necessarily need to do everything, but the equivalent of a T-Style run would suffice in my opinion. We could even work to pare this down to literally a handful of tests that regularly catch bugs, or make it run tests based on where the patch was landing.

Why does this matter, when we have try that people can use, and we hear "my code works" and "it was reviewed, it will compile"? Mozilla Inbound was closed for a total of 2 days (48+ hours) in April and 1 day (24 hours) in May. At the moment I only have the data on why the tree was closed, not the individual bugs that caused the failure, but a pre commit step would definitely limit the damage. The pre commit step might also catch some of the test failures (if we had test suites we could agree on as the smoke test suite), which had Mozilla Inbound closed for over 2 1/2 days (61+ hours) in April and over 3 days (72+ hours) in May.

Landing code

Once the review has passed we still have to manually push the code or set a keyword in the bug (checkin-needed) so that the sheriffs can land it. This manual step is just busy work that isn't really needed. If something has an r+ then ideally we should be queueing it up to be landed. This is minor compared to the manual step required to update the bug with the SHA when it has landed; when the code lands we should be updating the bug accordingly. It's not really that hard to do.

Unneeded manual steps have an impact on engineering productivity, which has a financial cost that could be avoided.

I think the main reason why a lot of these issues have never been surfaced is that there is not enough data to show them. I have created a dashboard; it only has the items I care about currently, but it could easily be expanded if people wanted to see other bits of information. The way we can solve the issues above is by being able to show them.

http://www.theautomatedtester.co.uk/blog/2014/my-ideal-build-test-and-land-world.html

|

|

Alon Zakai: Looking through Emscripten output |

Imagine we have a file code.c with contents

    #include <stdio.h>

    int double_it(int x) {
      return x+x;
    }

    int main() {
      printf("hello, world!\n");
    }

Compiling it with emcc code.c, we can run it using node a.out.js and we get the expected output of hello, world! So far so good, now let's look in the code.

The first thing you might notice is the size of the file: it's pretty big! Looking inside, the reasons become obvious:

- It contains comments. Those would be stripped out in an optimized build.

- It contains runtime support code, for example it manages function pointers between C and JS, can convert between JS and C strings, provides utilities like ccall to call from JS to C, etc. An optimized build can reduce those, especially if the closure compiler is used (--closure 1): when enabled, it will remove code not actually called, so if you didn't call some runtime support function, it'll be stripped.

- It contains large parts of libc! Unlike a "normal" environment, our compiler's output can't just expect to be linked to libc as it loads. We have to provide everything we need that is not an existing web API. That means we need to provide a basic filesystem, printf/scanf/etc. ourselves. That accounts for most of the size, in fact. Closure compiler helps with the part of this that is written in normal JS; the part that is compiled from C gets stripped by LLVM and is minified by our asm.js minifier in optimized builds.

(Side note: We could probably optimize this quite a bit more. It's been lower priority I guess because the big users of Emscripten have been things like game engines, where both the code and especially the art assets are far larger anyhow.)

Ok, getting back to the naive unoptimized build - let's look for our code, the functions double_it() and main(). Searching for main leads us to

    function _main() {
      var $vararg_buffer = 0, label = 0, sp = 0;
      sp = STACKTOP;
      STACKTOP = STACKTOP + 16|0;
      $vararg_buffer = sp;
      (_printf((8|0),($vararg_buffer|0))|0);
      STACKTOP = sp;return 0;
    }

This seems like quite a lot for just printing hello world! It's because this is unoptimized code. So let's look at an optimized build. We need to be careful, though - the optimizer will minify the code to compress it, and that makes it unreadable. So let's build with -O2 -profiling, which optimizes in all the ways that do not interfere with inspecting the code (to profile JS, it is very helpful to read it, hence that option keeps it readable but still otherwise optimized; see emcc --help for the -g1, -g2 etc. options which do related things at different levels). Looking at that code, we see

    function _main() {
      var i1 = 0;
      i1 = STACKTOP;
      _puts(8) | 0;
      STACKTOP = i1;
      return 0;
    }

There is some stack handling overhead, but now it's clear that all it's doing is calling puts(). Wait, why is it calling puts() and not printf() like we asked? The LLVM optimizer does that, as puts() is faster than printf() on the input we provide (there are no variadic arguments to printf here, so puts is sufficient).

Keeping Code Alive

What about the second function, double_it()? There seems to be no sign of it. The reason is that LLVM's dead code elimination got rid of it - it isn't being used by main(), which LLVM assumes is the only entry point to the entire program! Getting rid of unused code is very useful in general, but here we actually want to look at code that is dead. We can disable dead code elimination by building with -s LINKABLE=1 (a "linkable" program is one we might link with something else, so we assume we can't remove functions even if they aren't currently being used). We can then find

    function _double_it(i1) {
      i1 = i1 | 0;
      return i1 << 1 | 0;
    }

(Note btw the "_" that prefixes all compiled functions. This is a convention in Emscripten output.) Ok, this is our double_it() function from before, in asm.js notation: we coerce the input to an integer (using |0), then we multiply it by two and return it.
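To see what the |0 coercion and the shift actually do, the compiled function can be typed in by hand and run as ordinary JavaScript. This is a small experiment outside of any Emscripten build, not additional compiler output.

```javascript
// Hand-written copy of the compiled function, runnable as plain JS.
function _double_it(i1) {
  i1 = i1 | 0;          // coerce the argument to a 32-bit signed integer
  return i1 << 1 | 0;   // shift left by one bit, i.e. multiply by two
}

console.log(_double_it(21));          // 42
console.log(_double_it(3.7));         // 6, because 3.7 | 0 truncates to 3
console.log(_double_it(0x40000000));  // -2147483648, the result wraps around in 32 bits
```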

We can keep code alive by calling it, as well. But if we called it from main, it might get inlined. So disabling dead code elimination is simplest. You can also do this in the C/C++ code, using the C macro EMSCRIPTEN_KEEPALIVE on the function (so, something like int EMSCRIPTEN_KEEPALIVE double_it(int x) { ).

C++ Name Mangling

Note btw that if our file had the suffix cpp instead of c, things would have been less fun. In C++ files, names are mangled, which would cause us to see

    function __Z9double_iti(i1) {

You can still search for the function name and find it, but name mangling adds some prefixes and postfixes.
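The mangled name above can be read as the Emscripten "_" prefix, then "_Z", the length of the name (9), the name itself, and "i" for the int parameter. The sketch below reproduces that for the simplest case only: free functions with a few builtin parameter types, following the Itanium C++ ABI scheme. The helper names are made up, and namespaces, classes, templates, pointers and references are deliberately not handled.

```javascript
// Itanium C++ ABI codes for a few builtin parameter types.
const TYPE_CODES = { int: "i", double: "d", float: "f", char: "c", void: "v" };

// Mangle a free (non-namespaced, non-template) function name.
function mangleSimple(name, paramTypes) {
  // A function with no parameters is encoded as taking "void".
  const params = paramTypes.length === 0 ? ["void"] : paramTypes;
  const codes = params.map((t) => {
    if (!(t in TYPE_CODES)) throw new Error(`Unsupported type: ${t}`);
    return TYPE_CODES[t];
  });
  return "_Z" + name.length + name + codes.join("");
}

// Emscripten additionally prefixes compiled functions with "_".
function emscriptenName(name, paramTypes) {
  return "_" + mangleSimple(name, paramTypes);
}

console.log(emscriptenName("double_it", ["int"])); // __Z9double_iti
```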

asm.js Stuff

Once we can find our code, it's easy to keep poking around. For example, main() calls puts() - how is that implemented? Searching for _puts (again, remember the prefix _) shows that it is accessed from

    var asm = (function(global, env, buffer) {
      'use asm';
      // ..
      var _puts=env._puts;
      // ..
      // ..main(), which uses _puts..
      // ..
    })(.., { .. "_puts": _puts .. }, buffer);

All asm.js code is enclosed in a function (this makes it easier to optimize - it does not depend on variables from outside scopes, which could change). puts(), it turns out, is written not in asm.js, but in normal JS, and we pass it into the asm.js block so it is accessible - by simply storing it in a local variable also called _puts. Looking further up in the code, we can find where puts() is implemented in normal JS. As background, Emscripten allows you to implement C library APIs either in C code (which is compiled) or normal JS code, which is processed a little and then just included in the code. The latter are called "JS libraries" and puts() is an example of one.
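The pattern of passing a normal JS function into the enclosed block can be tried in isolation. The following is a simplified stand-in written for illustration, not Emscripten's real output: the _puts here just records its argument, and the buffer size is an arbitrary choice.

```javascript
// "Normal JS" implementation standing in for the JS-library puts();
// it records output instead of printing so the result is easy to inspect.
const printed = [];
function _puts(ptr) {
  printed.push("puts called with pointer " + ptr);
  return 0;
}

// The asm.js-style block: it only sees what is passed in via its parameters.
const asm = (function (global, env, buffer) {
  "use asm";
  var _puts = env._puts; // imported function stored in a local variable
  function _main() {
    _puts(8) | 0;
    return 0;
  }
  return { _main: _main };
})(globalThis, { _puts: _puts }, new ArrayBuffer(0x10000));

console.log(asm._main(), printed);
```

Because the block reaches _puts only through env, swapping in a different implementation needs no change inside the enclosed function.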

Conclusion

You don't need to read the code that is output by any of the compilers you use, including Emscripten - compilers emit code meant to be executed, not understood. But still, sometimes it can be interesting to read it. And it's easier to do with a compiler that emits JavaScript, because even if it isn't typical hand-written JavaScript, it is still in a fairly human-readable format.

http://mozakai.blogspot.com/2014/06/looking-through-emscripten-output.html

|

|

Hub Figuière: Hack the web: No Flash |

I am a hipster Flash hater. I hated Flash before Steve Jobs said it was bad. I hated Flash before Adobe said there would be no Flash 7 for Linux. I don't have Flash on my machine. I even coined "fc;dw".

I have been mulling over an idea for far too long, and an enlightening conversation with fellow Mozillians made me do it Tuesday night.

I therefore introduce a proof of concept Firefox add-on: No Flash.

The problem: there are a lot of pages around the web that embed video from big-name video websites, like YouTube and Vimeo. These pages might use, more often than not, the Flash embedding, either because they predate HTML5, or because they use a plugin for their CMS (WordPress) that only does that. Getting these fixed would be a pointless effort. On a positive note, it appears that Google's own Blogspot has already modified the embedding of the YouTube content it serves.

So let's fix this on the client side.

Currently the add-on will look for embedded Flash YouTube and Vimeo players and replace them in the document with the more modern iframe embedding. This has the good taste of using the vendor detection for the actual player.

Note that this is, in some way, similar to what Safari on iOS does, at least with YouTube, where they replace the Flash player with the system built-in one.

Before:

Notice the Flash placeholders - I don't have Flash.

After:

Note the poster images.

Feel free to check out the source code

Or file issues

http://www.figuiere.net/hub/blog/?2014/06/04/849-hack-the-web-no-flash

|

|

Sean McArthur: mocha-text-cov |

I’ve been looking for a super simple reporter for Mocha that shows a summary of code coverage in the console, instead of requiring me to pipe to a file and open a browser. I couldn’t find one. So I made mocha-text-cov.

Here’s a truncated example, if you don’t want to click through:

> mocha --ui exports --require blanket --reporter mocha-text-cov

Coverage Summary:

Name               Stmts  Miss  Cover  Missing
---------------------------------------------------------
lib\config.js         89     0   100%
lib\console.js       140     0   100%
lib\filter.js         23     0   100%
lib\filterer.js       22     0   100%
lib\formatter.js      57     4    93%  37,54,55,97
lib\index.js          44     0   100%
lib\levels.js         16     0   100%
lib\logger.js        155     0   100%
lib\record.js         67     5    93%  97,98,99,100,101
lib\utils\json.js     16     5    69%  10,11,12,13,14
=========================================================
TOTAL                629    14    98%
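If you want to sanity-check the numbers, the Cover column is consistent with "statements hit divided by statements, rounded to the nearest percent". That is an assumption based on the figures above, not something taken from the reporter's source; coverPercent is a made-up helper.

```javascript
// Hypothetical helper: percentage of statements that were executed,
// assuming plain rounding to the nearest whole percent.
function coverPercent(stmts, miss) {
  if (stmts === 0) return 100; // nothing to cover
  return Math.round(((stmts - miss) / stmts) * 100);
}

// [name, Stmts, Miss] rows copied from the summary above.
var rows = [
  ["lib\\config.js", 89, 0],
  ["lib\\console.js", 140, 0],
  ["lib\\filter.js", 23, 0],
  ["lib\\filterer.js", 22, 0],
  ["lib\\formatter.js", 57, 4],
  ["lib\\index.js", 44, 0],
  ["lib\\levels.js", 16, 0],
  ["lib\\logger.js", 155, 0],
  ["lib\\record.js", 67, 5],
  ["lib\\utils\\json.js", 16, 5]
];

var total = rows.reduce(function (acc, row) {
  return { stmts: acc.stmts + row[1], miss: acc.miss + row[2] };
}, { stmts: 0, miss: 0 });

console.log(total.stmts, total.miss, coverPercent(total.stmts, total.miss) + "%");
// prints: 629 14 98%
```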

|

|

Ben Hearsum: More on “How far we’ve come” |

After I posted “How far we’ve come” this morning a few people expressed interest in what our release process looked like before, and what it looks like now.

The earliest recorded release process I know of was called the “Unified Release Process”. (I presume “unified” comes from unifying the ways different release engineers did things.) As you can see, it’s a very lengthy document, with lots of shell commands to tweak/copy/paste. A lot of the things that get run are actually scripts that wrap some parts of the process – so it’s not as bad as it could’ve been.

I was around for many of the improvements to this process. A while back I wrote a series of blog posts detailing some of them. For those interested, you can find them here:

I haven’t gotten around to writing a new one for the most recent version of the release automation, but if you compare our current Checklist to the old Unified Release Process, I’m sure you can get a sense of how much more efficient it is. Basically, we have push-button releases now. Fill in some basic info, push a button, and a release pops out:

|

|

John O'Duinn: Farewell to Tinderbox, the world’s 1st? 2nd? Continuous Integration server |

In April 1997, Netscape Release Engineers wrote, and started running, the world’s first? second? continuous integration server. Now, just over 17 years later, in May 2014, the tinderbox server was finally turned off. Permanently.

This is a historic moment for Mozilla, and for the software industry in general, so I thought people might find it interesting to get some background, as well as outline the assumptions we changed when designing the replacement Continuous Integration and Release Engineering infrastructure now in use at Mozilla.

At Netscape, developers would check in a code change, and then go home at night, without knowing if their change broke anything. There were no builds during the day.

Instead, developers would have to wait until the next morning to find out if their change caused any problems. At 10am each morning, Netscape RelEng would gather all the checkins from the previous day, and manually start to build. Even if a given individual change was “good”, it was frequently possible for a combination of “good” changes to cause problems. In fact, as this was the first time that all the checkins from the previous day were compiled together, or “integrated” together, surprise build breakages were common.

This integration process was so fragile that all developers who did checkins in a day had to be in the office before 10am the next morning to immediately help debug any problems that arose with the build. Only after the 10am build completed successfully were Netscape developers allowed to start checking-in more code changes on top of what was now proven to be good code. If you were lucky, this 10am build worked first time, took “only” a couple of hours, and allowed new checkins to start lunchtime-ish. However, this 10am build was frequently broken, causing checkins to remain blocked until the gathered developers and release engineers figured out which change caused the problem and fixed it.

Fixing build bustages like this took time, and lots of people, to figure out which of all the checkins that day caused the problem. Worst case, some checkins were fine by themselves, but caused problems when combined with, or integrated with, other changes, so even the best-intentioned developer could still “break the build” in non-obvious ways. Sometimes, it could take all day to debug and fix the build problem – no new checkins happened on those days, halting all development for the entire day. More rare, but not unheard of, was that the build bustage halted development for multiple days in a row. Obviously, this was disruptive to the developers who had landed a change, to the other developers who were waiting to land a change, and to the Release Engineers in the middle of it all…. With so many people involved, this was expensive to the organization in terms of salary as well as opportunity cost.

If you could do builds twice a day, you only had half-as-many changes to sort through and detangle, so you could more quickly identify and fix build problems. But doing builds more frequently would also be disruptive because everyone had to stop and help manually debug-build-problems twice as often. How to get out of this vicious cycle?

In these desperate times, Netscape RelEng built a system that grabbed the latest source code, generated a build, displayed the results in a simple linear time-sorted format on a webpage where everyone could see status, and then started again… grab the latest source code, build, post status… again. And again. And again. Not just once a day. At first, this was triggered every hour, hence the phrase “hourly build”, but that was quickly changed to starting a new build immediately after finishing the previous build.

All with no human intervention.

By integrating all the checkins and building continuously like this throughout the day, it meant that each individual build contained fewer changes to detangle if problems arose. By sharing the results on a company-wide-visible webserver, it meant that any developer (not just the few Release Engineers) could now help detangle build problems.

What do you call a new system that continuously integrates code checkins? Hmmm… how about “a continuous integration server”?! Good builds were colored “green”. The vertical columns of green reminded people of trees, giving rise to the phrase “the tree is green” when all builds looked good and it was safe for developers to land checkins. Bad builds were colored “red”, and gave rise to “the tree is burning” or “the tree is closed”. As builds would break (or “burn” with flames) with seemingly little provocation, the web-based system for displaying all this was called “tinderbox”.

Pretty amazing stuff in 1997, and a pivotal moment for Netscape developers. When Netscape moved to open source Mozilla, all this infrastructure was exposed to the entire industry and the idea spread quickly. This remains a core underlying principle in all the various continuous integration products, and agile / scrum development methodologies in use today. Most people starting a software project in 2014 would first set up a continuous integration system. But in 1997, this was unheard of and simply brilliant.

(From talking to people who were there 17 years ago, there’s some debate about whether this was originally invented at Netscape or inspired by a similar system at SGI that was hardwired into the building’s public announcement system using a synthesized voice to declare: “THE BUILD IS BROKEN. BRENDAN BROKE THE BUILD.” If anyone reading this has additional info, please let me know and I’ll update this post.)

If tinderbox server is so awesome, and worked so well for 17 years, why turn it off? Why not just fix it up and keep it running?

In mid-2007, an important criterion for the reborn Mozilla RelEng group was to significantly scale up Mozilla’s developer infrastructure – not just incrementally, but by orders of magnitude. This was essential if Mozilla was to hire more developers, gather many more community members, tackle a bunch of major initiatives, ship releases more predictably, and have these additional Mozilla developers and community contributors be able to work effectively. When we analyzed how tinderbox worked, we discovered a few assumptions from 1997 no longer applied, and were causing bottlenecks we needed to solve.

1) Need to run multiple jobs-of-the-same-type at a time

2) Build-on-checkin, not build-continuously.

3) Display build results arranged by developer checkin not by time.

1) Need to run multiple jobs-of-the-same-type at a time

The design of this tinderbox waterfall assumed that you only had one job of a given type in progress at a time. For example, one linux32 opt build had to finish before the next linux32 opt build could start.

Mechanically, this was done by having only one machine dedicated to doing linux opt builds, and that one machine could only generate one build at a time. The results from one machine were displayed in one time-sorted column on the website page. If you wanted an additional different type of build, say linux32 debug builds, you needed another dedicated machine displaying results in another dedicated column.

For a small (~15?) number of checkins per day, and a small number of types of builds, this approach works fine. However, when you increase the checkins per day, many “hourly” builds have almost as many checkins as Netscape had each day in 1997. By 2007, Mozilla was routinely struggling with multi-hour blockages as developers debugged integration failures.

Instead of having only one machine do linux32 opt builds at a time, we set up a pool of identically configured machines, each able to do a build-per-checkin, even while the previous build was still in progress. In peak load situations, we might still get more-than-one-checkin-per-build, but now we could start the 2nd linux32 opt build, even while the 1st linux32 opt build was still in progress. This got us back to having a very small number of checkins, ideally only one checkin, per build… identifying which checkin broke the build, and hence fixing that build, was once again quick and easy.
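A toy simulation makes the effect of the pool easy to see. This is a simplified model written for this post, not how tinderbox or buildbot actually work: checkin times are in minutes and sorted, every build takes the same time, and a build that is still waiting for a machine absorbs any checkins that land in the meantime. All names here are made up.

```javascript
// Simplified model of "one machine" versus "a pool of identical machines".
function simulateBuilds(checkinTimes, buildMinutes, machineCount) {
  var freeAt = []; // freeAt[m]: minute at which machine m becomes idle
  for (var m = 0; m < machineCount; m++) freeAt.push(0);
  var builds = [];
  var waiting = null; // queued build that can still absorb checkins

  checkinTimes.forEach(function (time, index) {
    var checkin = "checkin-" + (index + 1);
    if (waiting !== null && waiting.start > time) {
      waiting.checkins.push(checkin); // no idle machine yet: share a build
      return;
    }
    // Pick the machine that becomes idle first.
    var machine = 0;
    for (var k = 1; k < machineCount; k++) {
      if (freeAt[k] < freeAt[machine]) machine = k;
    }
    var start = Math.max(time, freeAt[machine]);
    var build = { machine: machine, start: start, checkins: [checkin] };
    builds.push(build);
    freeAt[machine] = start + buildMinutes;
    waiting = start > time ? build : null;
  });
  return builds;
}

function largestBuild(builds) {
  return Math.max.apply(null, builds.map(function (b) {
    return b.checkins.length;
  }));
}

var checkins = [0, 10, 20, 30, 40, 50]; // six checkins in one hour
console.log(largestBuild(simulateBuilds(checkins, 60, 1))); // prints 5
console.log(largestBuild(simulateBuilds(checkins, 60, 6))); // prints 1
```

With one machine and hour-long builds, five of the six checkins pile into the second build; with six machines, every build contains exactly one checkin.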

Another related problem here was that there were ~86 different types of machines, each dedicated to running different types of jobs, on their own OS and each reporting to different dedicated columns on the tinderbox. There was a linux32 opt builder, a linux32 debug builder, a win32 opt builder, etc. This design had two important drawbacks.

Each different type of build took different times to complete. Even if all jobs started at the same time on day1, the continuous looping of jobs of different durations meant that after a while, all the jobs were starting/stopping at different times – which made it hard for a human to look across all the time-sorted waterfall columns to determine if a particular checkin had caused a given problem. Even getting all 86 columns to fit on a screen was a problem.

It also made each of these 86 machines a single point of failure to the entire system, a model which clearly would not scale. Building out pools of identical machines from 86 machines to ~5,500 machines allowed us to generate multiple jobs-of-the-same-type at the same time. It also meant that whenever one of these set-of-identical machines failed, it was not a single point of failure, and did not immediately close the tree, because another identically-configured machine was available to handle that type of work. This allowed people time to correctly diagnose and repair the machine properly before returning it to production, instead of being under time-pressure to find the quickest way to band-aid the machine back to life so the tree could reopen, only to have the machine fail again later when the band-aid repair failed.

All great, but fixing that uncovered the next hidden assumption.

2) Build-per-checkin, not build-continuously.

The “grab the latest source code, generate a build, display the results” loop of tinderbox never checked whether anything had actually changed. Tinderbox just started another build – even if nothing had changed.

Having only one machine available to do a given job meant that machine was constantly busy, so this assumption was not initially obvious. And given that the machine was on anyway, what harm in having it do an unnecessary build or two?

Generating extra builds, even when nothing had changed, complicated the manual what-change-broke-the-build debugging work. It also introduced delays when a human actually did a checkin, as a build containing that checkin could only start after the unnecessary-nothing-changed-build-in-progress completed.

Finally, when we changed to having multiple machines run jobs concurrently, having the machines build even when there was no checkin made no sense. We needed to make sure each machine only started building when a new checkin happened, and there was something new to build. This turned into a separate project to build out an enhanced job scheduler system and machine-tracking system which could span 4 physical colos and 3 Amazon regions, assign jobs to the appropriate machines, take sick/dead machines out of production, add new machines into rotation, etc.
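The core idea of build-on-checkin fits in a short sketch. To be clear, this is an illustration only and not the real scheduler: the function names, the machine names and the shape of the job object are all invented, and the real system had to deal with multiple sites, queues and much more.

```javascript
// Hypothetical scheduler sketch: names and structure are illustrative only.
function createScheduler(machineNames) {
  var machines = machineNames.map(function (name) {
    return { name: name, healthy: true, busy: false };
  });
  var lastScheduledRevision = null;

  function findMachine(name) {
    return machines.filter(function (m) { return m.name === name; })[0];
  }

  return {
    // Called whenever the repository is polled; returns a job or null.
    poll: function (latestRevision) {
      if (latestRevision === lastScheduledRevision) return null; // nothing new
      var idle = machines.filter(function (m) {
        return m.healthy && !m.busy;
      })[0];
      if (!idle) return null; // stays pending until a machine frees up
      idle.busy = true;
      lastScheduledRevision = latestRevision;
      return { revision: latestRevision, machine: idle.name };
    },
    finish: function (name) { findMachine(name).busy = false; },
    markSick: function (name) { findMachine(name).healthy = false; },
    markRepaired: function (name) { findMachine(name).healthy = true; }
  };
}

var scheduler = createScheduler(["builder-1", "builder-2"]);
console.log(scheduler.poll("rev-a")); // a job for rev-a on builder-1
console.log(scheduler.poll("rev-a")); // null: no new checkin, so no build
scheduler.markSick("builder-2");
console.log(scheduler.poll("rev-b")); // null: builder-1 busy, builder-2 out of rotation
scheduler.finish("builder-1");
console.log(scheduler.poll("rev-b")); // a job for rev-b on builder-1
```

The difference from the old loop is the first line of poll(): if nothing changed, nothing gets built.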

3) Display build results arranged by developer checkin not by time.

Tinderbox sorted results by time, specifically job-start-time and job-end-time. However, developers typically care about the results of their checkin, and sometimes the results of the checkin that landed just before them.

Further: Once we started generating multiple-jobs-of-the-same-type concurrently, it uncovered another hidden assumption. The design of this cascading waterfall assumed that you only had one build of a given type running at a time; the waterfall display was not designed to show the results of two linux32 opt builds that were run concurrently. As a transition, we hacked our new replacement systems to send tinderbox-server-compatible status for each concurrent build to the tinderbox server… more observant developers would occasionally see some race-condition bugs with how these concurrent builds were displayed on the one column of the waterfall. These intermittent display bugs were confusing, hard to debug, but usually self corrected.

As we supported more OSes, more build-types-per-OS and started to run unittests and perf-tests per platform, it quickly became more and more complex to figure out whether a given change had caused a problem across all the time-sorted-columns on the waterfall display. Complaints about the width of the waterfall not fitting on developers’ monitors were widespread. Running more and more of these jobs concurrently made deciphering the waterfall even more complex.

Finding a way to collect all the results related to a specific developer’s checkin, and display these results in a meaningful way was crucial. We tried a few ideas, but a community member (Markus Stange) surprised us all by building a prototype server that everyone instantly loved. This new server was called “tbpl”, because it scraped the TinderBox server Push Logs to gather its data.
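The change in viewpoint, from "sorted by time" to "grouped by checkin", can be sketched in a few lines. This is not tbpl's code; the job records, field names and status strings below are invented for the illustration.

```javascript
// Hypothetical job records; field names are assumptions for this sketch.
function groupByCheckin(jobs) {
  var groups = [];
  var byRevision = Object.create(null);
  jobs.forEach(function (job) {
    var group = byRevision[job.revision];
    if (!group) {
      group = { revision: job.revision, author: job.author, results: {} };
      byRevision[job.revision] = group;
      groups.push(group); // keep checkins in first-seen order
    }
    group.results[job.type] = job.status;
  });
  return groups;
}

// A checkin is "green" only if every job that ran for it succeeded.
function isGreen(group) {
  return Object.keys(group.results).every(function (type) {
    return group.results[type] === "success";
  });
}

// Jobs arrive interleaved, in the order they happened to finish.
var jobs = [
  { revision: "abc123", author: "alice", type: "linux32 opt", status: "success" },
  { revision: "def456", author: "bob", type: "linux32 opt", status: "failure" },
  { revision: "abc123", author: "alice", type: "win32 opt", status: "success" },
  { revision: "def456", author: "bob", type: "win32 opt", status: "success" }
];

groupByCheckin(jobs).forEach(function (group) {
  console.log(group.revision, group.author, isGreen(group) ? "green" : "red");
});
// prints:
// abc123 alice green
// def456 bob red
```

However many jobs run concurrently, each developer gets one row that answers the question they actually have: did my checkin break anything?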

Over time, there have been improvements to tbpl.mozilla.org to allow sheriffs to “star” known failures, link to self-service APIs, link to the commits in the repo, link to bugs and most importantly gather all the per-checkin information directly from the buildbot scheduling database we use to schedule and keep track of job status… eliminating the intermittent race-condition bugs when scraping HTML pages on the tinderbox server. All great, but the user interface has remained basically the same since the first prototype by Markus – developers can easily and quickly see if a developer checkin has caused any bustage.

Fixing these 3 root assumptions in tinderbox.m.o code would be “non-trivial” – basically a re-write – so we instead focused on gracefully transitioning off tinderbox. Since September 2012, all Mozilla RelEng systems have been off tinderbox.m.o and using tbpl.m.o plus buildbot instead.

Making the Continuous Integration process more efficient has allowed Mozilla to hire more developers who can do more checkins, transition developers from all-on-one-tip-development to multi-project-branch-development, and change the organization from traditional releases to rapid-release model. Game changing stuff. Since 2007, Mozilla has grown the number of employee engineers by a factor of 8, while the number of checkins that developers did has increased by a factor of 21. Infrastructure improvements have outpaced hiring!

On 16 May 2014, with the last Mozilla project finally migrated off tinderbox, the tinderbox server was powered off. Tinderbox was the first of its kind, and helped change how the software industry developed software. As much as we can gripe about tinderbox server’s various weaknesses, it has carried Mozilla from 1997 until 2012, and spawned an industry of products that help developers ship better software. Given its impact, it feels like we should look for a pedestal to put this on, with a small plaque that says “This changed how software companies develop software, thank you Tinderbox”… As it has been a VM for several years now, maybe this blog post counts as a virtual pedestal?! Regardless, if you are a software developer, and you ever meet any of the original team who built tinderbox, please do thank them.

I’d like to give thanks to some original Netscape folks (Tara Hernandez, Terry Weissman, Lloyd Tabb, Leaf, jwz) as well as aki, brendan, bmoss, chofmann, dmose, myk and rhelmer for their help researching the origins of Tinderbox. Also, thank you to lxt, catlee, bhearsum, rail and others for inviting me back to attend the ceremonial final-powering-off event… After the years of work leading up to this moment, it meant a lot to me to be there at the very end.

John.

ps: the curious can view the cvs commit history for tinderbox server here. My favorite is v1.10!

pps: When a server has been running for so long, figuring out what other undocumented systems might break when tinderbox is turned off is tricky. Surprise dependencies delayed this shutdown several times and frequently uncovered new non-trivial projects that had to be migrated. You can see the various loose ends that had to be tracked down in bug#843383, and all the many many linked bugs.

|

|

Pete Moore: Weekly review 2014-06-04 |

Have been working on:

Bug 1020343 – Jacuzzi emails should go to release team

Bug 1020294 – Dynamic Jacuzzi Allocator shouldn’t allow a job to have zero builders

Bug 1020131 – Request for a new hg repository: build/mapper

Bug 1019438 – end_to_end_reconfig.sh should store logs from manage_foopies.py

Bug 1019434 – update_maintenance_wiki.sh is truncating text content

Bug 1018975 – buildfarm/maintenance/manage_foopies.py not executable

Bug 1018248 – End-to-end reconfig should also update tools version on foopies

Bug 1018118 – Pending queue for tegras > 1000 and time between jobs per tegra is > 6 hours

Bug 976106 – tegra/panda health checks (verify.py) should not swallow exceptions

Bug 976100 – Slave Health should link to the watcher log files of the tegras and pandas

Bug 965691 – Create a Comprehensive Slave Loan tool

Bug 962853 – vcs-sync needs to populate mapper db once it’s live

Bug 913870 – Intermittent panda “Dying due to failing verification”

Bug 847640 – db-based mapper on web cluster

|

|

Ben Hearsum: How far we’ve come |

When I joined Mozilla’s Release Engineering team (Build & Release at the time) back in 2007, the mechanics of shipping a release were a daunting task with zero automation. My earliest memories of doing releases are ones where I get up early, stay late, and spend my entire day on the release. I logged onto at least 8 different machines to run countless commands, sometimes forgetting to start “screen” and losing work due to a dropped network connection.

Last night I had a chat with Nick. When we ended the call I realized that the Firefox 30.0 release builds had started mid-call – completely without us. When I checked my e-mail this morning I found that the rest of the release build process had completed without issue or human intervention.

It’s easy to get bogged down thinking about current problems. Times like this make me realize that sometimes you just need to sit down and recognize how far you’ve come.

|

|

Matt Thompson: Schoolhouse Rock: highlights from the latest release of Webmaker |

We’re shipping a new release of Webmaker every two weeks. Here are some highlights from the new “Schoolhouse Rocks” release we just completed. It’s currently on our staging server for your testing and feedback, and will be shipped to the live Webmaker.org by June 16.

“This release was all about building great content, and restructuring the site so that people can find that content more easily.”

–Cassie McDaniel, Webmaker UX Lead

Tell us what you think:

- Join this thread on the Webmaker newsgroup. Let us know what you think of these new pages and features.

- File bugs. All the work is on staging, so we can get your help with testing and fixing.

- See what’s coming in the next “Honeybadger” release on June 13.

What’s new?

New “explore” page

The new “Explore Webmaker” page provides a quick overview of what you can do at Webmaker.org. Why we did it: the consistent feedback we got from users was: “before I join or start making or teaching something, I need a little more information.” This page is designed to provide that.

New “resources” section

15 new pages with resources, activities and curriculum for teaching digital skills.

It’s like an open textbook for teaching web literacy and digital skills.

Each of the 15 Web Literacy competencies has its own page, with a mix of the best resources we could find across the web to discover, make and teach. Check out:

- Composing for the web

- Design and Accessibility

- Coding and scripting

- Infrastructure

- Sharing

- Collaborating

- Community Participation

- Open Practices

Badges are ready for your beta-testing

The new Webmaker Super Mentor and Hive Community Member badges are ready for testing on staging. Here’s how to help.

New web literacy and training badge designs

Using Sean Martell’s Mozilla-wide style guide, we created 20 new badge designs for Webmaker Training and for the web literacy competency pages featured above.

Simplified IA and navigation

We’re simplifying Webmaker.org navigation and information architecture. We’re replacing the old “teach” page with the resources page highlighted above. And the old “Starter Makes” page will be merged into the “Gallery” (coming soon).

|

|

Christian Heilmann: Write less, achieve meh? |

In my keynote at HTML5DevConf in San Francisco I talked about a pattern of repetition those of us who’ve been around for a while will have encountered, too: every few years development becomes “too hard” and “too fragmented” and we need “simpler solutions”.

In the past, these were software packages, WYSIWYG editors and CMS that promised us to deliver “to all platforms without any code overhead”. Nowadays we don’t even wait for snake-oil salesmen to promise us the blue sky. Instead we do this ourselves. Almost every week we release new, magical scripts and workflows that solve all the problems we have for all the new browsers and with great fall-backs for older environments.

Most of these solutions stem from fixing a certain problem and – especially in the mobile space – far too many stem from trying to simulate an interaction pattern of native applications. They do a great job, they are amazing feats of coding skills and on first glance, they are superbly useful.

It gets tricky when problems come up and don’t get fixed. This – sadly enough – is becoming a pattern. If you look around GitHub you find a lot of solutions that promise utterly frictionless development with many an unanswered issue or un-merged pull request. Even worse, instead of filing bugs there is a pattern of creating yet another solution that fixes all the issues of the original one. People simply should replace the old one with the new one.

Who replaces problematic code?

All of this should not be an issue: as a developer, I am happy to discard and move on when a certain solution doesn’t deliver. I’ve changed my editor of choice a lot of times in my career.

The problem is that completely replacing solutions expects a lot of commitment from the implementer. All they want is something that works and preferably something that fixes the current problem. Many requests on Stackoverflow and other help sites don’t ask for the why, but just want a how. What can I use to fix this right now, so that my boss shuts up? A terrible question that developers of every generation seem to repeat, and one that almost always results in unmaintainable code with lots of overhead.

That’s when “use this and it works” solutions become dangerous.

First of all, these tell those developers that there is no need to ever understand what you do. Your job seems to be to get your boss off your back or to make that one thing in the project plan – that you know doesn’t make sense – work.

Secondly, if we found out about issues of a certain solution and considered it dangerous to use (cue all those “XYZ considered dangerous” posts) we should remove it and redirect people to the better solutions.

This, however, doesn’t happen often. Instead we keep them around and just add a README that tells people they can use our old code and we are not responsible for results. Most likely the people who have gotten the answer they wanted on the Stackoverflows of this world will never hear how the solution they chose and implemented is broken.

The weakest link?

Another problem is that many solutions rely on yet more abstractions. This sounds like a good plan – after all we shouldn’t re-invent things.

However, it doesn’t really help an implementer on a very tight deadline if our CSS fix needs the person to learn all about Bower, node.js, npm, SASS, Ruby or whatever else first. We can not just assume that everybody who creates things on the web is as involved in its bleeding edge as we are. True, a lot of these tools make us much more efficient and are considered “professional development”, but they are also very much still in flux.

We can not assume that all of these dependencies work and make sense in the future. Neither can we expect implementers to remove parts of this magical chain and replace them with their newer versions – especially as many of them are not backwards compatible. A chain is as strong as its weakest link, remember? That also applies to tool chains.

If we promise magical solutions, they’d better be magical and get magically maintained. Otherwise, why do we create these solutions? Is it really about making things easier or is it about impressing one another? Much like entrepreneurs shouldn’t be in love with being an entrepreneur but instead love their product we should love both our code and the people who use it. This takes much more effort than just releasing code, but it means we will create a more robust web.

The old adage of “write less, achieve more” needs a re-vamp to “write less, achieve better”. Otherwise we’ll end up with a world where a few people write small, clever solutions for individual problems and others pack them all together just to make sure that really everything gets fixed.

The overweight web

This seems to be already the case. When you see that the average web site according to HTTParchive is 1.7MB in size (46% cacheable) with 93 resource requests on 16 hosts then something, somewhere is going terribly wrong. It is as if none of the performance practices we talked about in the last few years have ever reached those who really build things.

A lot of this is baggage of legacy browsers. Many times you see posts and solutions like “This new feature of $newestmobileOS is now possible in JavaScript and CSS - even on IE8!”. This scares me. We shouldn’t block out any user of the web. We also should not take bleeding edge, computationally heavy and form-factor dependent code and give it to outdated environments. The web is meant to work for all, not work the same for all and certainly not make it slow and heavy for older environments based on some misunderstanding of what “support” means.

Redundancy denied

If there is one thing that this discouraging statistic shows then it is that future redundancy of solutions is a myth. Anything we create that “fixes problems with current browsers” and “should be removed once browsers get better” is much more likely to clog up the pipes forever than to be deleted. Is it – for example – really still necessary to fix alpha transparency in PNGs for IE5.5 and 6? Maybe, but I am pretty sure that of all these web sites in these statistics only a very small percentage really still have users locked into these browsers.

The reason for denied redundancy is that we solved the immediate problem with a magical solution – we can not expect implementers to re-visit their solutions later to see if now they are not needed any longer. Many developers don’t even have the chance to do so – projects in agencies get handed over to the client when they are done and the next project with a different client starts.

Repeating XHTML mistakes

One of the main things that HTML5 was invented for was to create a more robust web by being more lenient with markup. If you remember, XHTML sent as XML (as it should, but never was as IE6 didn’t support that) had the problem that a single HTML syntax error or an un-encoded ampersand would result in an error message and nothing would get rendered.

This was deemed terrible as our end users get punished for something they can’t control or change. That’s why the HTML algorithm of newer browsers is much more lenient and does – for example – close tags for you.

Nowadays, the yellow screen of death showing an XML error message is hardly ever seen. Good, isn’t it? Well, yes, it would be – if we had learned from that mistake. Instead, we now make a lot of our work reliant on JavaScript, resource loaders and many libraries and frameworks.

This should not be an issue – the “JavaScript not available” use case is a very small one, mostly made up of users whose sysadmins turned JavaScript off and people who prefer the web without it.

The “JavaScript caused an error” use case, on the other hand, is very much alive and will probably never go away. So many things can go wrong, from resources not being available, to network timeouts, mobile providers and proxies messing with your JavaScript up to simple syntax errors because of wrong HTTP headers. In essence, we are relying on a technology that is much less reliable than XML was and we feel very clever doing so. The more dependencies we have, the more likely it is that something can go wrong.

None of this is an issue, if we write our code in a paranoid fashion. But we don’t. Instead we also seem to fall for the siren song of abstractions telling us everything will be more stable, much better performing and cleaner if we rely on a certain framework, build-script or packaging solution.
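As a rough illustration of what “paranoid” code could look like, the sketch below checks that a dependency is really there before touching it and falls back to a baseline when the enhanced path throws. The helper names hasFeature and withFallback, the render function and the fancyLib library are all made up for this example; env simply stands in for window.

```javascript
// Hypothetical helper: true only if every step of a dotted path exists on root.
function hasFeature(root, path) {
  let current = root;
  for (const key of path.split(".")) {
    if (current === null || current === undefined) return false;
    current = current[key];
  }
  return current !== undefined && current !== null;
}

// Hypothetical helper: run the enhanced code path, but never let it break the page.
function withFallback(enhanced, baseline) {
  try {
    return enhanced();
  } catch (error) {
    // Runtime errors thrown by the enhanced path end up here.
    return baseline(error);
  }
}

// `fancyLib` is an imaginary third-party library that may have failed to load.
function render(env) {
  if (!hasFeature(env, "fancyLib.draw")) {
    // The script never arrived (network, proxy, syntax error): keep the basics.
    return "plain HTML list";
  }
  return withFallback(
    () => env.fancyLib.draw(),
    () => "plain HTML list"
  );
}
```

The point is not these particular helpers but the order of thought: the baseline exists first, and the dependency only gets to improve on it when it is proven to be available.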

Best of breed with basic flaws

One eye-opener for me was judging the Static Showdown Hackathon. I was very excited about the amazing entries and what people managed to achieve solely with HTML, CSS and JavaScript. What annoyed me though was the lack of any code that deals with possible failures. Now, I understand that this is hackathon code and people wanted to roll things out quickly, but I see a lot of similar basic mistakes in many live products:

- Dependency on a certain environment – many examples only worked in Chrome, some only in Firefox. I didn’t even dare to test them on a Windows machine. These dependencies were in many cases not based on functional necessity – instead the code just assumed a certain browser specific feature to be available and tried to access it. This is especially painful when the solution additionally loads lots of libraries that promise cross-browser functionality. Why use those if you’re not planning to support more than one browser?

- Complete lack of error handling – many things can go wrong in our code. Simply not doing anything when for example loading some data failed and presenting the user with an infinite loading spinner is not a nice thing to do. Almost every technology we have has a success and an error return case. We seem to spend all the time in the success one, whilst it is much more likely that we’ll lose users and their faith in the error one. If an error case is not even reported or reported as the user’s fault we’re not writing intelligent code. Thinking paranoid is a good idea. Telling users that something went wrong, what went wrong and what they can do to re-try is not luxury – it means building a user interface. Any data loading that doesn’t refresh the view should have an error case and a timeout case – connections are the things most likely to fail.

- A lack of very basic accessibility – many solutions I encountered relied on touch alone, and doing so provided incredibly small touch targets. Others showed results far away from the original action without changing the original button or link. On a mobile device this was incredibly frustrating.
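The second point in the list above, giving every data load an error case and a timeout case, could be sketched roughly like this. The names loadWithTimeout and describe, the three status strings and the message wording are assumptions made for illustration, not an established API.

```javascript
// Hypothetical helper: wrap any promise-returning loader so it always settles
// into one of three states the UI can show: "ok", "error" or "timeout".
function loadWithTimeout(loader, timeoutMs) {
  let timer;
  const timeout = new Promise((resolve) => {
    timer = setTimeout(() => resolve({ status: "timeout" }), timeoutMs);
  });
  const attempt = Promise.resolve()
    .then(loader) // a loader that throws synchronously becomes a rejection
    .then(
      (data) => ({ status: "ok", data }),
      (error) => ({
        status: "error",
        message: String((error && error.message) || error),
      })
    );
  // Whichever settles first wins; the timer is cleared either way.
  return Promise.race([attempt, timeout]).then((result) => {
    clearTimeout(timer);
    return result;
  });
}

// Turn a result into something a person can act on (wording is illustrative).
function describe(result) {
  switch (result.status) {
    case "ok":
      return "Loaded.";
    case "timeout":
      return "This is taking too long. Check your connection and try again.";
    default:
      return "Loading failed (" + result.message + "). Please try again.";
  }
}
```

In a browser the loader might be something like () => fetch(url).then((r) => r.json()), with the result of describe replacing the loading spinner. Because the wrapper never rejects, the view code has exactly three cases to handle and cannot forget the unhappy ones.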

Massive web changes ahead

All of this worries me. Instead of following basic protective measures to make our code more flexible and deliver great results to all users (remember: not the same results to all users; this would limit the web) we became dependent on abstractions and we keep hiding more and more code in loaders and packaging formats. A lot of this code is redundant and fixes problems of the past.

The main reason for this is a lack of control on the web. And this is very much changing now. The flawed solutions we had for offline storage (AppCache) and widgets on the web (many, many libraries creating DOM elements) are getting new, exciting and above all control-driven replacements: ServiceWorker and WebComponents.

Both of these are the missing puzzle pieces to really go to town with creating applications on the web. With ServiceWorker we can not only create apps that work offline, but also deal with a lot of the issues we now solve with dependency loaders. WebComponents allow us to create reusable widgets that are either completely new or inherited from other components or existing HTML elements. These widgets run in the rendering flow of the browser instead of trying to make our JavaScript and DOM rendering perform in it.

The danger of WebComponents is that it allows us to hide a lot of functionality in a simple element. Instead of just shifting our DOM widget solutions to the new model this is a great time to clean up what we do and find the best-of-breed solutions and create components from them.

I am confident that good things are happening there. Discussions sparked by the Edge Conference’s WebComponents and Accessibility panels already resulted in some interesting guidelines for accessible WebComponents.

Welcome to the “Bring your own solution” platform

The web is and stays the “bring your own solution platform”. There are many solutions to the same problem, each with their own problems and benefits. We can work together to mix and match them and create a better, faster and more stable web. We can only do that, however, when we allow the bricks we build these solutions from to be detachable and reusable. Much like gluing Lego bricks together means using them wrong, we should stop creating “perfect solutions” and create sensible bricks instead.

Welcome to the future – it is in the browsers, not in abstractions. We don’t need to fix the problems for browser makers, but should lead them to give us the platform we deserve.

http://christianheilmann.com/2014/06/04/write-less-achieve-meh/

|

|

Yunier José Sosa Vázquez: Meet the winning add-ons of the Australis contest |

To celebrate the release of Firefox 29 and the debut of the Australis interface, Mozilla launched a contest to create add-ons that make the most of the new design. Australis opens up new customization options and streamlines the experience of using add-ons in the browser.

The task of reviewing all the submitted entries fell to a jury made up of Michael Balazs, rctgamer, Andreas Wagner, Gijs Kruitbosch and Jorge Villalobos, all Mozilla contributors and employees.

The contest was divided into 3 categories: Best Overall Add-on, Best Complete Theme and Best Bookmark Add-on, with 3 winners chosen in each. All winners received a Firefox OS phone, and the first-place winners also received a collection of Mozilla gear.

Without further ado, here are the winners by category:

Best Overall Add-on

1st place – The Fox, Only Better

Maximizes the space used to display web pages by hiding the Firefox toolbar.

2nd place – Classic Theme Restorer

Brings the Firefox button, square tabs, the add-on bar and small buttons back to Australis. Use “Customize” to move buttons onto the toolbar.

Adds a quick and simple profile manager to the new menu. Designed for the upgrade to Firefox Australis.

Best Bookmark Add-on

QuickMark provides a quick, lightweight way to create bookmarks and keep them organized. You can create a bookmark and place it in a folder with a single click or a keyboard shortcut.

Shows the items of your live bookmarks in a sidebar.

Type “What about:” in the awesome bar (address bar) and you will see the list of Firefox “about:” URLs.

Best Complete Theme

An old favorite, fully updated for Firefox 29.

A compact, elegant theme for Firefox 29 based on Maxthon.

3rd place – Walnut for Firefox

Walnut is perfect if you want a wooden feel in Firefox. It is a complete theme that restyles all windows, widgets, panels and many extensions.

I hope you install these add-ons; they are great and will help us have a better browser.

Source: Mozilla Add-ons Blog

http://firefoxmania.uci.cu/conoce-los-complementos-ganadores-del-concurso-para-australis/

|

|

Mike Hommey: FileVault 2 + Mavericks upgrade = massive FAIL |

Today, since I was using my Macbook Pro, I figured I’d upgrade OS X. Haha. What a mistake.

So. My Macbook Pro was running Mountain Lion with FileVault 2 enabled. Before that, it was running Lion, and if my recollection is correct, it was using FileVault 2 as well, so the upgrade to Mountain Lion preserved that properly.

The upgrade to Mavericks didn’t.

After the installation and the following reboot, and after a few seconds with the Apple logo and the throbber, I would be presented with the infamous slashed circle.

I tried various things, but the most important piece of information I got was from booting in verbose mode (hold Command+V when turning the Mac on; it took me a while to stumble on a page that mentions this one), which told me, repeatedly, “Still waiting for root device”.

What bugged me the most is that it did ask for the CoreStorage password before failing to boot, and it did complain when I purposefully typed the wrong password.

In Recovery mode (hold Command+R when turning the Mac on), the Disk Utility would show me the partition that was holding the data, but greyed out, and without a name. In the terminal, typing the diskutil list command displayed something like this:

    /dev/disk0
       #:                    TYPE NAME           SIZE        IDENTIFIER
       0:   GUID_partition_scheme                *240.1 GB   disk0
       1:                     EFI EFI            209.7 MB    disk0s1
       2:               Apple_HFS                59.8 GB     disk0s2
       3:              Apple_Boot Recovery HD    650.0 MB    disk0s3
       4:    Microsoft Basic Data Windows HD     59.8 GB     disk0s4
       5:               Apple_HFS Debian         9.5 MB      disk0s5
       6:               Linux LVM                119.6 GB    disk0s6

(Yes, I have a triple-boot setup)

I wasn’t convinced Apple_HFS was the right thing for disk0s2 (where the FileVault storage is supposed to be), so I took a USB disk and created an Encrypted HFS on it with the Disk Utility. And sure enough, the GPT type for that one was not Apple_HFS, but Apple_CoreStorage.

Having no idea how to change that under OS X, I booted under Debian, and ran gdisk:

    # gdisk /dev/sda
    GPT fdisk (gdisk) version 0.8.8
    Partition table scan:
      MBR: hybrid
      BSD: not present
      APM: not present
      GPT: present
    Found valid GPT with hybrid MBR; using GPT.
    Command (? for help): p
    Disk /dev/sda: 468862128 sectors, 223.6 GiB
    Logical sector size: 512 bytes
    Disk identifier (GUID): 3C08CA5E-92F3-4474-90F0-88EF0023E4FF
    Partition table holds up to 128 entries
    First usable sector is 34, last usable sector is 468862094
    Partitions will be aligned on 8-sector boundaries
    Total free space is 4054 sectors (2.0 MiB)
    Number  Start (sector)  End (sector)  Size       Code  Name
       1                40        409639  200.0 MiB  EF00  EFI System Partition
       2            409640     117219583  55.7 GiB   AF00  Macintosh HD
       3         117219584     118489119  619.9 MiB  AB00  Recovery HD
       4         118491136     235284479  55.7 GiB   0700  Microsoft basic data
       5         235284480     235302943  9.0 MiB    AF00  Apple HFS/HFS+
       6         235304960     468862078  111.4 GiB  8E00  Linux LVM

And changed the type:

    Command (? for help): t
    Partition number (1-6): 2
    Current type is 'Apple HFS/HFS+'
    Hex code or GUID (L to show codes, Enter = 8300): af05
    Changed type of partition to 'Apple Core Storage'
    Command (? for help): w
    Final checks complete. About to write GPT data. THIS WILL OVERWRITE EXISTING PARTITIONS!!
    Do you want to proceed? (Y/N): y
    OK; writing new GUID partition table (GPT) to /dev/sda.
    Warning: The kernel is still using the old partition table.
    The new table will be used at the next reboot.
    The operation has completed successfully.

After a reboot under OS X, it still was failing to boot, with more erratic behaviour. On the other hand, the firmware boot chooser wasn’t displaying “Macintosh HD” as a choice, but “Mac OS X Base System”. After rebooting under Recovery again, I opened the Startup Disk dialog and chose “Macintosh HD” there.

Rebooted again, and victory was mine, I finally got the Apple logo, and then the “Completing installation” dialog.

I hope this helps people hitting the same problem in the future. If you know how to change the GPT type from the command line in Recovery (that is, without booting Linux), that would be valuable information to add in a comment below.

|

|

Byron Jones: bugzilla can now show bugs that have been updated since you last visited them |

thanks to dylan’s work on bug 489028, bugzilla now tracks when you view a bug, allowing you to search for bugs which have been updated since you last visited them.

on bugzilla.mozilla.org this has been added to “my dashboard”:

bugzilla will only track a bug if you are involved in it (you have to be the reporter, assignee, qa-contact, or on the cc list), and if you have javascript enabled.